How to Minimize GC-MS Baseline Noise in Chromatograms

SEP 22, 2025 · 9 MIN READ

GC-MS Baseline Noise Background and Objectives

Gas Chromatography-Mass Spectrometry (GC-MS) has evolved significantly since its inception in the 1950s, becoming an indispensable analytical technique in various fields including environmental analysis, forensic science, pharmaceutical research, and food safety. The technology combines the separation capabilities of gas chromatography with the detection specificity of mass spectrometry, allowing for precise identification and quantification of complex chemical mixtures.

Baseline noise in GC-MS chromatograms represents one of the most persistent challenges in analytical chemistry, often limiting detection sensitivity and quantitative accuracy. This noise manifests as irregular fluctuations in the baseline signal, potentially masking low-concentration analytes and compromising data interpretation. The signal-to-noise ratio (S/N) has become a critical parameter in evaluating analytical method performance.

Historical approaches to noise reduction have progressed from simple hardware modifications to sophisticated digital signal processing algorithms. Early techniques focused primarily on improving instrument components, while modern approaches incorporate advanced mathematical models and machine learning algorithms for post-acquisition data processing.

The evolution of noise reduction techniques has paralleled advancements in computing power and algorithm development. Traditional methods such as Savitzky-Golay filtering and Fourier transformation have been supplemented by wavelet transformation, principal component analysis, and artificial neural networks, reflecting the increasing sophistication of analytical methodologies.

Current technological trends indicate a convergence of hardware improvements and software solutions. Manufacturers are developing more stable ion sources, improved vacuum systems, and enhanced detector technologies, while simultaneously refining data processing algorithms to extract meaningful signals from noisy backgrounds.

The primary objectives of baseline noise minimization research include: enhancing detection limits for trace analysis; improving quantitative accuracy, particularly for complex environmental and biological samples; increasing laboratory throughput by reducing the need for sample pre-concentration; and developing robust, automated data processing workflows that can be implemented across different instrument platforms.

Future developments are likely to focus on real-time noise filtering algorithms, adaptive baseline correction techniques, and the integration of artificial intelligence for automated method optimization. These advancements will be crucial for emerging applications in metabolomics, environmental monitoring, and clinical diagnostics, where the detection of increasingly lower analyte concentrations is required.

The ultimate goal remains the development of comprehensive strategies that address noise at its various sources—from sample preparation through instrumental analysis to data processing—creating integrated solutions that maximize analytical performance while minimizing operator intervention.

Market Demand for High-Precision Chromatographic Analysis

The global market for high-precision chromatographic analysis has experienced substantial growth over the past decade, driven primarily by increasing demands in pharmaceutical research, environmental monitoring, food safety testing, and forensic applications. The need for minimizing baseline noise in GC-MS chromatograms specifically represents a critical segment within this broader market, as noise reduction directly correlates with improved detection limits and more reliable quantitative analysis.

Recent market research indicates that the analytical instrumentation market, which includes GC-MS systems, is projected to grow at a compound annual growth rate of 6.7% through 2028. Within this sector, technologies focused on enhancing signal-to-noise ratios and reducing baseline interference are experiencing particularly strong demand, as laboratories face increasing pressure to detect ever-lower concentrations of analytes in complex matrices.

The pharmaceutical and biotechnology sectors remain the largest consumers of high-precision chromatographic technologies, accounting for approximately 40% of the market share. These industries require exceptionally clean baselines for accurate quantification of drug substances, impurities, and metabolites at trace levels. Regulatory requirements, such as those from FDA and EMA, continue to push detection limits lower, creating sustained demand for advanced noise reduction solutions.

Environmental testing laboratories represent another significant market segment, with growing requirements for detecting emerging contaminants at parts-per-trillion levels. The ability to distinguish true signals from baseline noise becomes paramount when monitoring persistent organic pollutants, pesticide residues, and other environmental contaminants that pose risks at extremely low concentrations.

Food safety testing has emerged as a rapidly expanding application area, particularly in developed economies with stringent regulatory frameworks. The need to detect adulterants, pesticides, and natural toxins in complex food matrices drives demand for improved baseline stability and noise reduction in chromatographic analyses.

Clinical diagnostics and forensic toxicology laboratories face similar challenges, requiring reliable detection of biomarkers and drugs at physiologically relevant concentrations. The market for specialized solutions addressing baseline noise in these applications has grown substantially, with particular emphasis on automated data processing algorithms that can distinguish true signals from instrumental noise.

Geographically, North America and Europe currently dominate the market for high-precision chromatographic technologies, though the Asia-Pacific region is experiencing the fastest growth rate, driven by expanding pharmaceutical manufacturing, contract research organizations, and increasing regulatory oversight in China, India, and South Korea.

Current Challenges in GC-MS Baseline Noise Reduction

Despite significant advancements in GC-MS technology, baseline noise remains a persistent challenge that affects analytical sensitivity, accuracy, and reproducibility. Current GC-MS systems face several interconnected noise sources that collectively degrade chromatogram quality. Electronic noise from detector components, amplifiers, and analog-to-digital converters introduces random fluctuations that become particularly problematic when analyzing trace compounds. This electronic interference varies between instrument models and can worsen as hardware ages.

Column bleed represents another major challenge, occurring when stationary phases thermally degrade at elevated temperatures, releasing compounds that contribute to baseline drift. Modern columns have improved thermal stability, but complete elimination of column bleed remains elusive, especially during temperature-programmed analyses where baseline drift typically increases with temperature.

Carrier gas impurities introduce significant complications, as even trace contaminants in helium, hydrogen, or nitrogen can produce background signals. The global helium shortage has forced many laboratories to switch to alternative carrier gases, creating new baseline noise profiles that analysts must learn to manage. Oxygen and water vapor contamination particularly contribute to increased baseline noise and column degradation.

Sample matrix effects constitute a substantial challenge in complex sample analysis. Non-target compounds co-extracted from matrices can create broad, elevated baselines that mask analytes of interest. This issue is especially pronounced in environmental, biological, and food samples where hundreds of matrix components may be present. Current sample preparation techniques cannot completely eliminate these interfering compounds.

Instrumental parameters significantly impact baseline noise levels. Suboptimal flow rates, temperature programs, split ratios, and detector settings can all exacerbate noise issues. Many laboratories struggle to establish optimal conditions that balance separation efficiency, analysis time, and baseline stability across diverse sample types.

Data processing limitations further complicate noise reduction efforts. While modern software offers various baseline correction algorithms, these mathematical approaches sometimes introduce artifacts or distort quantitative results. The lack of standardized approaches to baseline correction creates inconsistencies in data processing across laboratories and research groups.
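
To make the distortion risk concrete, here is a minimal sketch that smooths a synthetic, noise-free Gaussian peak with centered moving averages of increasing width. The peak shape, point spacing, and window widths are illustrative assumptions, not values from any instrument or vendor software. The moving average is used only because it is the simplest smoothing filter.

```python
import math


def moving_average(signal, width):
    """Centered moving average; the window shrinks at the edges."""
    half = width // 2
    out = []
    for i in range(len(signal)):
        lo = max(0, i - half)
        hi = min(len(signal), i + half + 1)
        out.append(sum(signal[lo:hi]) / (hi - lo))
    return out


def gaussian_peak(n_points, center, height, sigma):
    """Synthetic noise-free chromatographic peak on a zero baseline."""
    return [height * math.exp(-0.5 * ((i - center) / sigma) ** 2)
            for i in range(n_points)]


if __name__ == "__main__":
    # Hypothetical peak: apex of 100 counts, sigma of 2 data points.
    peak = gaussian_peak(n_points=101, center=50, height=100.0, sigma=2.0)
    for width in (3, 7, 15):
        smoothed = moving_average(peak, width)
        print(f"window={width:2d}  apex={max(smoothed):6.1f}  area={sum(smoothed):7.1f}")
```

In this sketch the apex height falls as the window widens while the summed area stays essentially unchanged, which suggests that height-based quantification is more exposed to smoothing choices than area-based quantification.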

Aging instrumentation presents additional challenges as electronic components deteriorate, vacuum systems develop leaks, and contamination accumulates in the system. Many laboratories operate equipment beyond its optimal lifespan due to budget constraints, resulting in progressively worsening baseline performance that becomes increasingly difficult to address through routine maintenance procedures.

Established Methods for Baseline Noise Minimization

01 Signal processing techniques for noise reduction

Various signal processing algorithms can be applied to reduce baseline noise in GC-MS data. These include digital filtering, wavelet transforms, and mathematical modeling approaches that can effectively separate the analytical signal from background noise. Advanced computational methods help in identifying and removing systematic noise patterns while preserving the integrity of chromatographic peaks, resulting in improved signal-to-noise ratios and more reliable analytical results.

- Signal processing techniques for baseline noise reduction: Various signal processing algorithms can be applied to reduce baseline noise in GC-MS data. These include digital filtering, wavelet transforms, and mathematical modeling approaches that can effectively separate the analytical signal from background noise. Advanced computational methods help in identifying and removing systematic noise patterns while preserving the integrity of chromatographic peaks, resulting in improved detection limits and more accurate quantification.

- Hardware modifications to minimize baseline noise: Physical modifications to GC-MS instrumentation can significantly reduce baseline noise. These include improved ion source designs, enhanced vacuum systems, temperature-controlled components, and vibration isolation mechanisms. Specialized detector configurations and optimized electronic components help minimize electronic noise and thermal fluctuations that contribute to baseline instability, resulting in higher signal-to-noise ratios and better analytical performance.

- Calibration and reference standards for noise compensation: Implementation of calibration procedures and reference standards can help compensate for baseline noise in GC-MS analysis. By incorporating internal standards and developing calibration models that account for background variations, analysts can normalize data and correct for systematic noise. Regular system suitability testing and the use of quality control samples enable consistent baseline correction across multiple analyses.

- Sample preparation techniques to reduce matrix interference: Advanced sample preparation methods can minimize matrix-related baseline noise in GC-MS analysis. Techniques such as solid-phase extraction, liquid-liquid extraction, and derivatization can remove interfering compounds that contribute to baseline instability. Clean-up procedures targeting specific contaminants help reduce chemical noise, while optimized extraction protocols improve sample homogeneity and reduce variability in baseline response.

- Automated baseline correction algorithms: Automated software solutions can effectively address baseline noise issues in GC-MS data. These include machine learning algorithms that can identify and subtract background noise patterns, adaptive baseline correction methods that adjust to changing chromatographic conditions, and intelligent peak detection systems that can distinguish between true analyte signals and noise artifacts. These automated approaches improve data processing efficiency and reduce subjective errors in manual baseline adjustments.
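
As one concrete instance of the digital filtering listed under signal processing techniques, the sketch below applies a 5-point Savitzky-Golay filter using the classic tabulated coefficients (-3, 12, 17, 12, -3)/35 for a quadratic or cubic fit. The function name `savgol5` and the sample trace are hypothetical, and the fixed 5-point window is an assumption chosen for brevity. Real data would usually need a window matched to the peak width.

```python
# 5-point quadratic/cubic Savitzky-Golay smoothing coefficients (tabulated values).
SG5_COEFFS = (-3, 12, 17, 12, -3)
SG5_NORM = 35


def savgol5(signal):
    """Smooth a 1-D sequence with a 5-point Savitzky-Golay filter.

    The first and last two points are copied unchanged because the
    symmetric window does not fit there.
    """
    values = [float(v) for v in signal]
    if len(values) < 5:
        return values
    smoothed = values[:]
    for i in range(2, len(values) - 2):
        window = values[i - 2:i + 3]
        smoothed[i] = sum(c * v for c, v in zip(SG5_COEFFS, window)) / SG5_NORM
    return smoothed


if __name__ == "__main__":
    # Hypothetical intensities: baseline of 10 with alternating +/-1 noise and one spike.
    trace = [10 + (1 if i % 2 else -1) for i in range(20)]
    trace[10] += 50
    print([round(v, 2) for v in savgol5(trace)])
```

Because the filter fits a local polynomial rather than taking a plain average, it tends to preserve peak height and shape better than a moving average of the same width, at the cost of removing less high-frequency noise.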

02 Hardware modifications and optimizations

Physical modifications to GC-MS instrumentation can significantly reduce baseline noise. These include improvements in ion source design, detector sensitivity enhancements, and thermal stability controls. Specialized components such as noise-reducing column connections, improved vacuum systems, and electronic noise suppression circuits help minimize baseline fluctuations. Hardware optimizations focus on reducing electronic interference, improving temperature control, and enhancing overall instrument stability.

03 Calibration and reference standards methods

Implementing proper calibration procedures and reference standards can compensate for baseline noise in GC-MS analysis. These methods involve the use of internal standards, blank subtraction techniques, and multi-point calibration curves to normalize data and account for background interference. Regular system suitability testing and the application of statistical tools help establish reliable baseline corrections and improve quantitative accuracy in the presence of noise.

04 Sample preparation techniques

Advanced sample preparation methods can minimize contaminants that contribute to baseline noise in GC-MS analysis. These include selective extraction procedures, sample clean-up protocols, and derivatization techniques that enhance analyte detection while reducing matrix effects. Proper sample handling, storage conditions, and the use of high-purity solvents and reagents help eliminate extraneous compounds that can cause baseline irregularities and interfere with target analyte identification.

05 Automated baseline correction algorithms

Specialized software solutions incorporate automated baseline correction algorithms specifically designed for GC-MS data. These intelligent systems can identify and adjust for baseline drift, random noise, and systematic interference patterns without manual intervention. Machine learning approaches and adaptive algorithms continuously improve noise recognition capabilities, allowing for real-time baseline corrections and enhanced peak detection even in complex sample matrices with significant background noise.
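
The idea of adjusting for baseline drift without manual intervention can be illustrated with a deliberately simple estimator: a rolling minimum followed by a rolling mean. This is a stand-in chosen for clarity, not the algorithm used by any particular vendor, and it is far less sophisticated than the adaptive or machine learning methods described above. The function names, the synthetic trace, and the `half_width` value are assumptions.

```python
import math


def rolling(signal, half_width, func):
    """Apply func to a centered window that is clipped at the ends."""
    n = len(signal)
    return [func(signal[max(0, i - half_width):min(n, i + half_width + 1)])
            for i in range(n)]


def estimate_baseline(signal, half_width):
    """Rolling minimum followed by a rolling mean of the same width.

    half_width should exceed the half-width of the widest peak,
    otherwise part of the peak is absorbed into the baseline.
    """
    minima = rolling(signal, half_width, min)
    return rolling(minima, half_width, lambda w: sum(w) / len(w))


def correct_baseline(signal, half_width):
    """Subtract the estimated baseline from the signal."""
    baseline = estimate_baseline(signal, half_width)
    return [s - b for s, b in zip(signal, baseline)]


if __name__ == "__main__":
    # Hypothetical trace: linear upward drift plus one Gaussian peak at point 100.
    trace = [0.01 * i + 10.0 * math.exp(-0.5 * ((i - 100) / 3.0) ** 2)
             for i in range(200)]
    corrected = correct_baseline(trace, half_width=15)
    print(f"raw apex={trace[100]:.2f}, corrected apex={corrected[100]:.2f}, "
          f"corrected baseline at point 50={corrected[50]:.2f}")
```

The window width is the main design choice here: too narrow and peaks are clipped, too wide and curved drift is tracked poorly, which is one reason production software tends to use adaptive methods instead.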

Leading Manufacturers and Research Groups in GC-MS Instrumentation

Baseline noise minimization for GC-MS is a segment in a mature growth phase within the global analytical instruments market, which is estimated at $25-30 billion overall. Technologically, the field is highly mature and advances through continuous incremental innovation. Leading players include Shimadzu Corporation and Agilent Technologies, which dominate with comprehensive GC-MS solutions featuring advanced noise reduction algorithms. Waters Corporation (through Micromass UK) offers specialized high-sensitivity MS systems, while Tosoh Corporation provides complementary chromatography technologies. Established players are focusing on integrating AI and machine learning for automated baseline correction, with increasing emphasis on software solutions rather than hardware-only approaches to noise minimization.

Shimadzu Corp.

Technical Solution: Shimadzu's approach to minimizing GC-MS baseline noise incorporates advanced digital filtering algorithms and hardware innovations. Their Advanced Flow Technology (AFT) optimizes carrier gas flow paths to reduce baseline fluctuations, while their proprietary noise-reduction algorithms apply sophisticated mathematical filters to chromatogram data in real-time. The company's latest GC-MS systems feature temperature-controlled ion sources that maintain consistent ionization conditions, significantly reducing thermal noise contributions. Additionally, Shimadzu implements active column bleed compensation technology that automatically subtracts column bleed profiles from analytical results, providing cleaner baselines even at high temperatures. Their systems also utilize high-precision electronic pressure control (EPC) that minimizes pressure fluctuations, a common source of baseline instability.

Strengths: Superior hardware integration with software filtering creates comprehensive noise reduction across the entire analytical system. Their temperature-controlled components provide exceptional stability in varying laboratory conditions.

Weaknesses: Premium hardware solutions increase initial investment costs, and some advanced filtering algorithms may require specialized training for optimal configuration.

Tosoh Corp.

Technical Solution: Tosoh Corporation tackles GC-MS baseline noise through their proprietary CleanColumn technology, which features specialized stationary phases designed to minimize column bleed even at elevated temperatures. Their approach focuses on fundamental improvements to column chemistry and manufacturing processes, resulting in significantly reduced chemical background noise. Tosoh's GC-MS systems incorporate advanced electronic noise suppression circuits that filter power supply fluctuations before they affect sensitive detector components. Their data systems feature adaptive baseline correction algorithms that can distinguish between true analytical signals and background variations. Additionally, Tosoh implements specialized inlet designs that minimize sample degradation during injection, reducing ghost peaks and other artifacts that complicate baseline interpretation. Their systems also utilize high-purity carrier gas management systems with integrated moisture and oxygen traps to eliminate contaminant-induced baseline disturbances.

Strengths: Exceptional column technology provides remarkably stable baselines even during high-temperature programming. Their integrated approach to contamination prevention reduces maintenance requirements.

Weaknesses: Some specialized column technologies may have more limited application ranges compared to general-purpose columns, and their systems may require specific consumables for optimal performance.

Critical Patents and Algorithms for Signal-to-Noise Enhancement

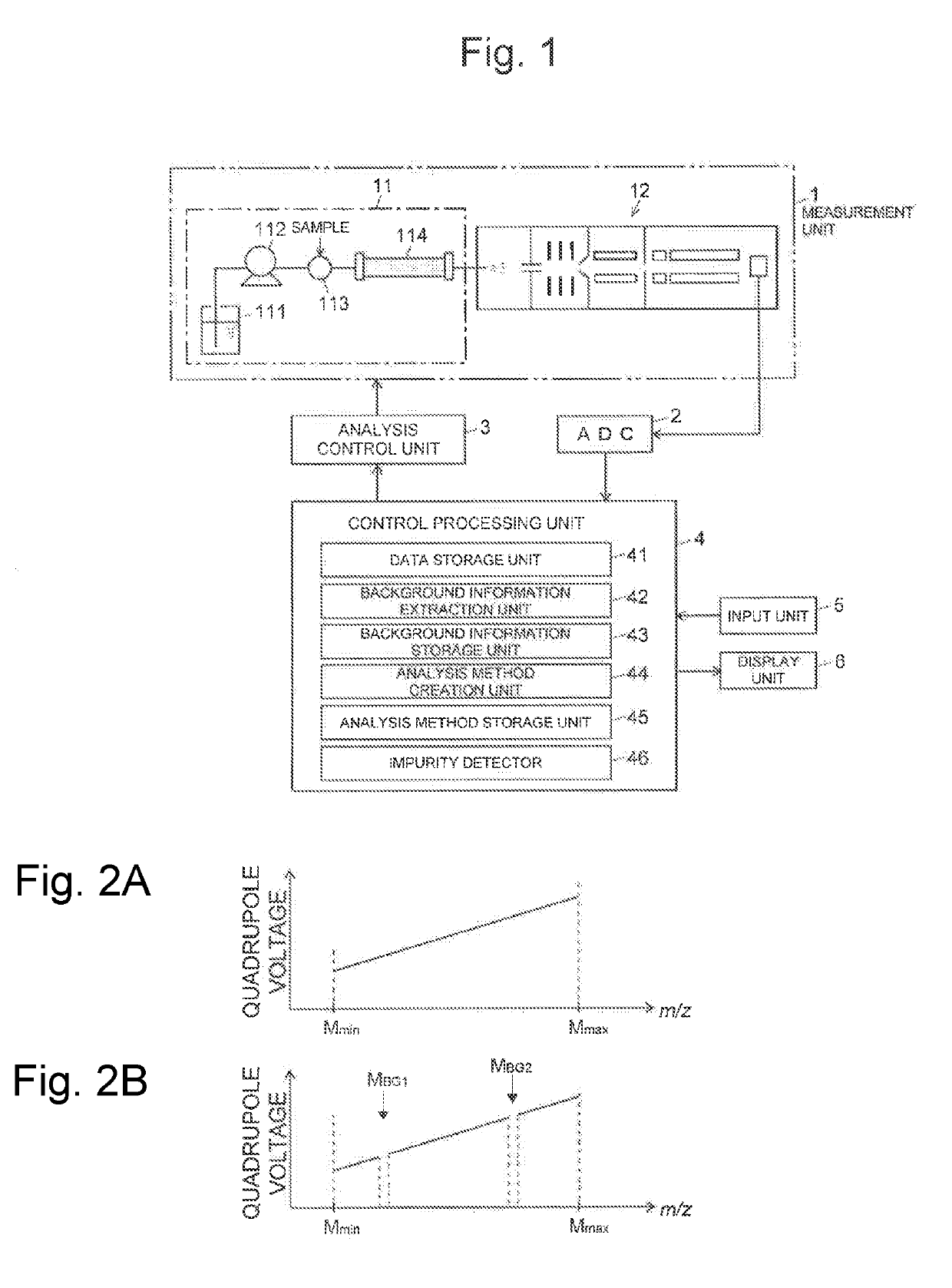

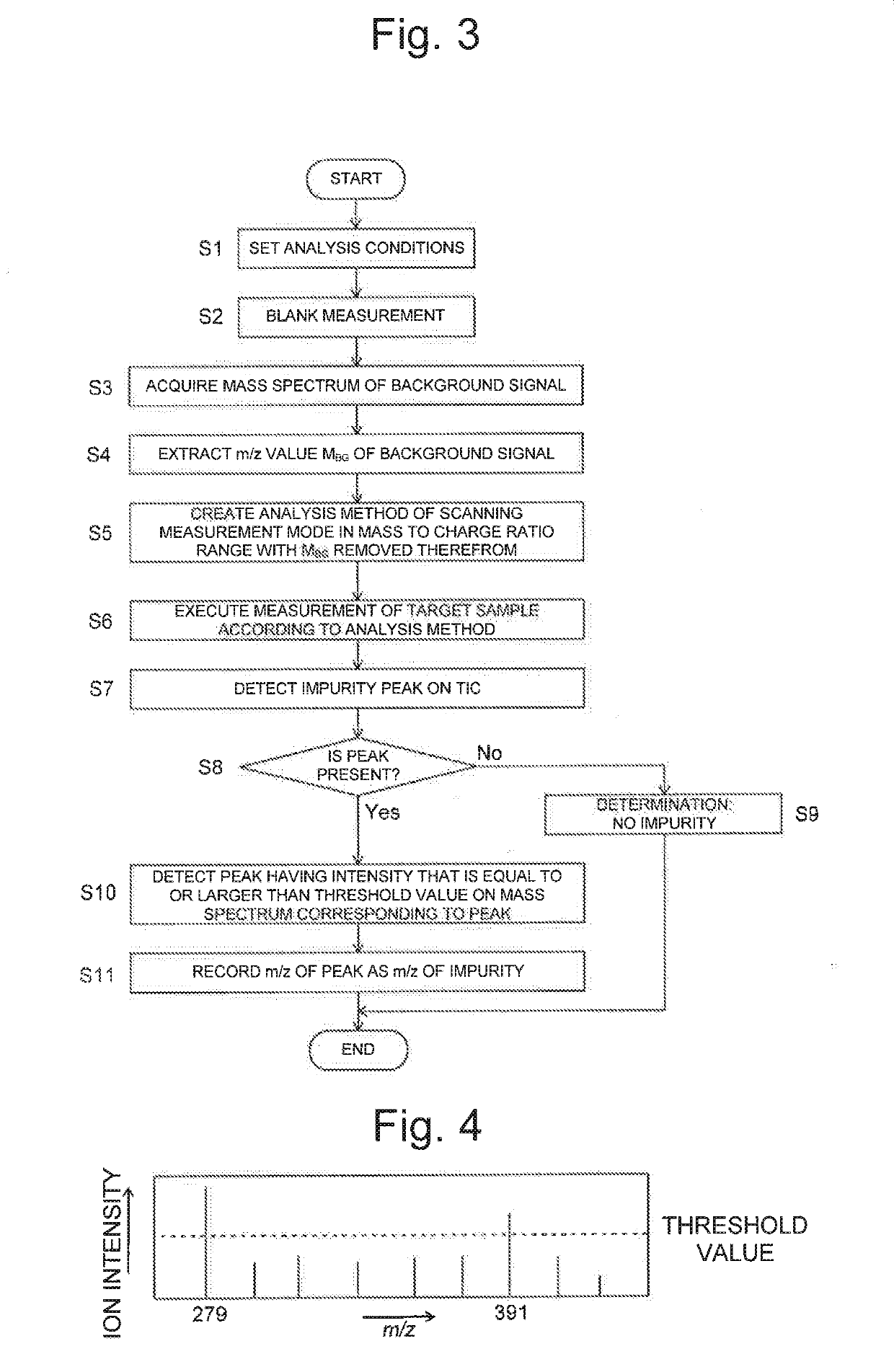

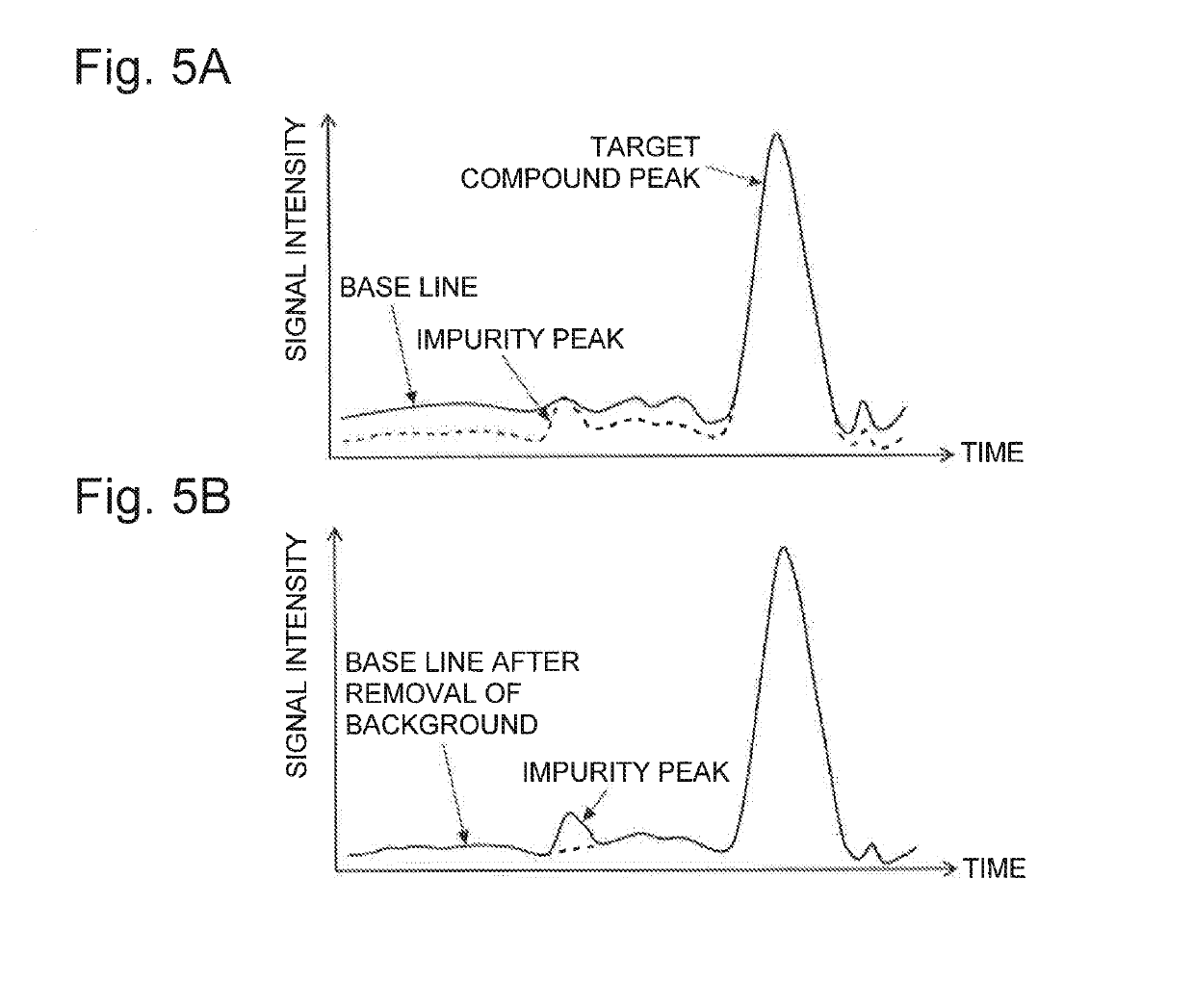

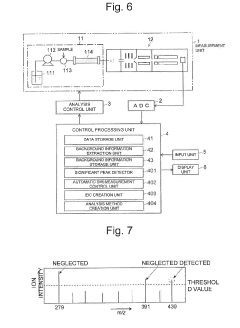

Analysis device

Patent (Active): US20190170712A1

Innovation

- An analysis device that includes a background information storage unit to store parameter values of background signals and an analysis method creation unit to exclude these signals from the analysis, allowing for the detection of minute impurities by setting specific analysis conditions, such as mass-to-charge ratios or wavelength ranges, thereby isolating and identifying impurity peaks.
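
The general idea of storing known background signals and excluding them from the analysis can be sketched as below. This is a loose illustration of the concept only, not the patented method. The background list is an assumption: m/z 18, 28, 32 and 44 are commonly associated with water and air, and m/z 207 and 281 are often attributed to polysiloxane column bleed, but a real list would come from blank runs on the instrument in question.

```python
# Illustrative background ions (assumed, see lead-in); replace with values
# measured from blank runs on the actual system.
BACKGROUND_MZ = {18, 28, 32, 44, 207, 281}


def filtered_tic(scans, background_mz=BACKGROUND_MZ, tolerance=0.5):
    """Sum each scan's intensities, skipping ions within `tolerance`
    of any stored background m/z.

    `scans` is a list of scans; each scan is a list of (mz, intensity) pairs.
    """
    def is_background(mz):
        return any(abs(mz - bg) <= tolerance for bg in background_mz)

    return [sum(intensity for mz, intensity in scan if not is_background(mz))
            for scan in scans]


if __name__ == "__main__":
    # Hypothetical scans with nominal-mass ions.
    scans = [
        [(28.0, 1000.0), (91.1, 50.0)],   # air ion plus one analyte ion
        [(207.0, 300.0), (281.1, 200.0)], # column bleed ions only
        [(57.1, 10.0), (43.1, 5.0)],      # no stored background ions
    ]
    print(filtered_tic(scans))
```

Excluding ions this way lowers the apparent baseline of the summed trace, but it also hides any analyte fragment that happens to share a background m/z, so the exclusion list needs to be reviewed per method.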

Gas chromatograph mass spectrometer

Patent: WO2021224973A1

Innovation

- Incorporating a sixth opening in the ionization chamber with a specific area ratio to the internal volume, allowing for the efficient discharge of carrier gases like nitrogen, thereby reducing their residence time and minimizing sensitivity loss.

Validation Protocols for GC-MS Method Development

Validation protocols for GC-MS method development require systematic approaches to ensure reliable, reproducible, and accurate analytical results. These protocols must address baseline noise issues which can significantly impact detection limits and quantification accuracy. The validation process begins with establishing system suitability parameters that specifically evaluate baseline stability and noise levels before any analytical run.

Method validation should include specificity testing to ensure that baseline disturbances are not misinterpreted as analyte signals. This involves analyzing blank samples under identical conditions to those used for actual samples, allowing for clear differentiation between baseline noise and genuine analyte response. Linearity assessment must account for signal-to-noise ratios at lower concentration levels, ensuring that baseline fluctuations do not compromise quantification at these critical points.
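
A minimal sketch of the two checks described above: a blank check in the analyte retention window, and a least-squares linearity assessment. The function names, the 20 % blank threshold, and the example numbers are illustrative assumptions rather than regulatory values. Intensities are assumed to be baseline-corrected already.

```python
def linear_fit(x, y):
    """Ordinary least-squares line y = slope * x + intercept, plus r^2."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return slope, intercept, 1.0 - ss_res / ss_tot


def blank_is_clean(blank_window, lowest_standard_height, max_fraction=0.2):
    """True if the largest blank response in the analyte retention window
    stays below max_fraction of the lowest standard's peak height.
    The 20 % default is an illustrative threshold only."""
    return max(blank_window) < max_fraction * lowest_standard_height


if __name__ == "__main__":
    # Hypothetical calibration: concentration in ng/mL versus peak area.
    conc = [1.0, 2.0, 5.0, 10.0, 20.0]
    area = [205.0, 398.0, 1010.0, 1985.0, 4020.0]
    slope, intercept, r2 = linear_fit(conc, area)
    print(f"slope={slope:.1f}, intercept={intercept:.1f}, r^2={r2:.4f}")

    # Hypothetical blank intensities inside the analyte retention window.
    print(blank_is_clean([1.2, 2.5, 1.8], lowest_standard_height=20.0))
```

Inspecting residuals at the lowest calibration levels, not just r^2, is usually more informative about whether baseline fluctuations are affecting quantification there.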

Precision evaluation protocols should incorporate repeated injections not only to determine analytical variability but also to assess the consistency of baseline behavior over time. Short-term and long-term precision studies can reveal whether baseline drift or noise patterns change with extended instrument operation, providing valuable information for method robustness.

Detection and quantification limit determinations are directly affected by baseline noise levels. Validation protocols should specify signal-to-noise ratio requirements (typically 3:1 for LOD and 10:1 for LOQ) and include procedures for baseline noise measurement. Alternatively, statistical approaches based on the standard deviation of the response and the slope of the calibration curve can be used to establish these limits.
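
The standard-deviation-and-slope approach is commonly written as LOD = 3.3σ/S and LOQ = 10σ/S, where σ is the standard deviation of the response and S is the calibration slope (the form given in ICH Q2). The sketch below applies it, assuming σ is taken from replicate blank responses. The data and function names are hypothetical.

```python
import statistics


def calibration_slope(concentrations, responses):
    """Least-squares slope S of response versus concentration."""
    mean_c = statistics.fmean(concentrations)
    mean_r = statistics.fmean(responses)
    sxx = sum((c - mean_c) ** 2 for c in concentrations)
    sxy = sum((c - mean_c) * (r - mean_r)
              for c, r in zip(concentrations, responses))
    return sxy / sxx


def detection_limits(sigma, slope):
    """Return (LOD, LOQ) as 3.3*sigma/S and 10*sigma/S."""
    return 3.3 * sigma / slope, 10.0 * sigma / slope


if __name__ == "__main__":
    # Hypothetical data: concentrations in ng/mL, responses in peak-area counts.
    conc = [1.0, 2.0, 5.0, 10.0, 20.0]
    area = [205.0, 398.0, 1010.0, 1985.0, 4020.0]
    blank_areas = [4.1, 6.3, 5.2, 3.8, 5.9, 4.7]  # replicate blank responses

    slope = calibration_slope(conc, area)
    sigma = statistics.stdev(blank_areas)
    lod, loq = detection_limits(sigma, slope)
    print(f"slope={slope:.1f} counts per ng/mL, LOD={lod:.3f}, LOQ={loq:.3f} ng/mL")
```

Other choices for σ are possible, such as the residual standard deviation of the regression line, and the resulting limits should be confirmed experimentally with samples near the calculated concentrations.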

Robustness testing within validation protocols must specifically challenge conditions that might affect baseline stability. This includes evaluations of column temperature fluctuations, carrier gas flow variations, and injection technique modifications. Each parameter should be systematically varied within reasonable operational ranges to determine its impact on baseline characteristics.
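
One simple way to vary each parameter systematically is a one-factor-at-a-time run list, sketched below. The parameter names and levels are hypothetical placeholders. A designed experiment such as a fractional factorial would be an alternative when interactions between parameters matter.

```python
def one_factor_at_a_time(nominal, variations):
    """Build a run list: the nominal method first, then one run per varied
    level, changing a single parameter while the others stay nominal."""
    runs = [dict(nominal)]
    for name, levels in variations.items():
        for level in levels:
            if level == nominal[name]:
                continue  # the nominal condition is already in the list
            run = dict(nominal)
            run[name] = level
            runs.append(run)
    return runs


if __name__ == "__main__":
    # Hypothetical nominal method and test levels.
    nominal = {
        "oven_ramp_c_per_min": 10.0,
        "column_flow_ml_per_min": 1.0,
        "inlet_temp_c": 250.0,
    }
    variations = {
        "oven_ramp_c_per_min": [9.0, 11.0],
        "column_flow_ml_per_min": [0.9, 1.1],
        "inlet_temp_c": [240.0, 260.0],
    }
    for run in one_factor_at_a_time(nominal, variations):
        print(run)
```

Recording a baseline noise figure for every run in the list makes it straightforward to see which parameter the baseline is most sensitive to.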

Inter-laboratory validation components should include specific criteria for comparing baseline noise levels between different instruments and operators. Standardized procedures for measuring and reporting noise amplitude, frequency, and pattern characteristics enable meaningful comparisons across different laboratory environments.
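
A standardized noise report needs an agreed definition of noise. The sketch below computes two common amplitude measures, peak-to-peak and RMS, from a signal-free baseline segment, and derives S/N under two conventions: peak height divided by RMS noise, and the 2H/h form used in pharmacopoeial chromatography chapters. Which convention applies is an assumption to be fixed in the protocol, and the data are hypothetical.

```python
import math


def noise_metrics(baseline_segment):
    """Return (peak_to_peak, rms) noise for a signal-free baseline segment.
    RMS is computed about the segment mean."""
    mean = sum(baseline_segment) / len(baseline_segment)
    peak_to_peak = max(baseline_segment) - min(baseline_segment)
    rms = math.sqrt(sum((v - mean) ** 2 for v in baseline_segment)
                    / len(baseline_segment))
    return peak_to_peak, rms


def signal_to_noise(peak_apex, baseline_segment):
    """Return (height / rms, 2 * height / peak_to_peak), where height is
    measured from the mean of the baseline segment to the peak apex."""
    mean = sum(baseline_segment) / len(baseline_segment)
    height = peak_apex - mean
    peak_to_peak, rms = noise_metrics(baseline_segment)
    return height / rms, 2.0 * height / peak_to_peak


if __name__ == "__main__":
    # Hypothetical baseline intensities near the peak, and the apex intensity.
    baseline = [9.0, 11.0, 9.0, 11.0, 10.0, 10.0]
    sn_rms, sn_p2p = signal_to_noise(peak_apex=40.0, baseline_segment=baseline)
    print(f"S/N (RMS)={sn_rms:.1f}, S/N (2H/h)={sn_p2p:.1f}")
```

Because drift within the chosen segment inflates both measures, laboratories comparing results should also agree on the segment length and its position relative to the peak.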

Documentation requirements within validation protocols must include detailed records of all baseline optimization procedures performed, including electronic data files showing before-and-after chromatograms. This documentation serves as evidence of method performance and provides valuable reference material for troubleshooting future baseline issues that may arise during routine analysis.

Environmental Factors Affecting Chromatographic Performance

Environmental factors play a crucial role in the performance of GC-MS systems and can significantly contribute to baseline noise in chromatograms. Laboratory temperature fluctuations represent one of the most impactful environmental variables, as even minor variations can cause thermal expansion or contraction of system components, leading to baseline drift and increased noise levels. Optimal GC-MS operation typically requires temperature stability within ±2°C, with dedicated climate control systems being essential in analytical laboratories.

Electromagnetic interference (EMI) constitutes another significant environmental factor affecting chromatographic performance. Modern laboratories contain numerous electronic devices that generate electromagnetic fields capable of interfering with the sensitive electronic components of GC-MS systems. Power supplies, nearby motors, fluorescent lighting, and unshielded electronic equipment can all contribute to signal distortion and elevated baseline noise. Proper grounding, EMI shielding, and strategic placement of GC-MS instruments away from major EMI sources are necessary preventive measures.

Vibration represents a third critical environmental factor that can degrade chromatographic performance. Mechanical vibrations transmitted through building structures from HVAC systems, centrifuges, or even foot traffic can affect the precision of sample injection, column stability, and detector response. These vibrations manifest as irregular, high-frequency noise patterns in chromatograms. Anti-vibration tables, isolation platforms, and proper instrument placement away from vibration sources are essential for minimizing this type of interference.

Humidity variations can also significantly impact GC-MS baseline stability. Excessive humidity may cause condensation within electronic components, leading to electrical noise or even short circuits. Conversely, very low humidity promotes the buildup of static electricity, which interferes with sensitive electronic measurements. Maintaining relative humidity between 40% and 60% typically provides suitable conditions for GC-MS operation.

Atmospheric contaminants present in laboratory air can directly affect chromatographic performance. Volatile organic compounds from solvents, cleaning agents, or personal care products can enter the GC system and appear as ghost peaks or elevated baseline noise. Similarly, particulate matter can contaminate samples or instrument components. HEPA filtration systems, proper laboratory ventilation, and implementation of clean room protocols in sample preparation areas can significantly reduce these environmental contaminants.

Barometric pressure fluctuations, though often overlooked, can influence carrier gas flow rates and retention times in GC systems. Modern GC instruments typically incorporate electronic pressure control to compensate for these variations, but older systems may exhibit baseline instability during significant weather changes or when operated at different elevations.

