How to Validate GC-MS Data for Trace-level Analysis

SEP 22, 2025 · 9 MIN READ

GC-MS Trace Analysis Background and Objectives

Gas Chromatography-Mass Spectrometry (GC-MS) has evolved significantly since its inception in the 1950s, becoming an indispensable analytical technique for trace-level analysis across various industries including environmental monitoring, food safety, forensic science, and pharmaceutical development. The technology combines the separation capabilities of gas chromatography with the detection specificity of mass spectrometry, enabling the identification and quantification of compounds at extremely low concentrations, often in the parts-per-billion (ppb) or parts-per-trillion (ppt) range.

The evolution of GC-MS technology has been marked by continuous improvements in sensitivity, selectivity, and reliability. Early systems were limited by low resolution and sensitivity constraints, but modern instruments feature advanced ionization techniques, high-resolution mass analyzers, and sophisticated data processing algorithms that have dramatically enhanced detection capabilities for trace analysis.

Current technological trends in GC-MS validation include the development of automated validation protocols, implementation of machine learning algorithms for data interpretation, and integration with other analytical techniques to create comprehensive analytical platforms. These advancements are driving the field toward greater precision and efficiency in trace-level analysis.

The primary objective of GC-MS data validation for trace-level analysis is to ensure the reliability, accuracy, and reproducibility of analytical results at extremely low concentrations where signal-to-noise ratios are challenging. This involves establishing robust validation protocols that address the unique challenges of trace analysis, including matrix effects, contamination risks, and instrument sensitivity limitations.

Specific technical goals include developing standardized validation procedures that comply with regulatory requirements across different industries, optimizing sample preparation techniques to enhance recovery of trace analytes, implementing effective calibration strategies for quantification at low concentrations, and establishing appropriate quality control measures to monitor system performance and data integrity throughout the analytical process.

Additionally, there is a growing emphasis on harmonizing validation approaches across laboratories and regulatory frameworks to ensure consistency in trace-level analysis results globally. This harmonization effort aims to establish universal acceptance criteria for method validation parameters such as limit of detection (LOD), limit of quantification (LOQ), linearity, precision, and accuracy specifically tailored to the challenges of trace analysis.

The ultimate technical objective is to develop a comprehensive validation framework that ensures GC-MS data for trace-level analysis is scientifically sound, defensible, and fit-for-purpose across diverse applications, while accommodating the rapid technological advancements in instrumentation and data processing capabilities.

Market Demand for Accurate Trace-level Analysis

The global market for trace-level analytical techniques has experienced significant growth in recent years, driven primarily by increasing regulatory requirements across multiple industries. Environmental monitoring agencies worldwide have established progressively stricter limits for contaminants in soil, water, and air, necessitating more sensitive and accurate analytical methods. The EPA, EU Environmental Directives, and similar bodies in Asia have continuously lowered acceptable thresholds for various pollutants, creating substantial demand for validated GC-MS methodologies capable of reliable trace-level detection.

The pharmaceutical industry represents another major market driver, with regulatory bodies such as the FDA and EMA enforcing stringent guidelines for impurity profiling. The ICH Q3 guidelines set thresholds for impurities in drug substances and drug products, and ICH M7 specifically addresses mutagenic (genotoxic) impurities, which may need to be controlled at levels as low as parts per billion. This regulatory landscape has created a substantial market for advanced GC-MS validation protocols, with pharmaceutical companies investing heavily in analytical capabilities that can meet these requirements.

Food safety testing constitutes a rapidly expanding segment, particularly following high-profile contamination incidents that have heightened consumer awareness and regulatory scrutiny. The global food testing market, valued at approximately $19.5 billion in 2021, is projected to grow at a CAGR of 8.2% through 2028, with trace contaminant analysis representing a significant portion of this growth.

Forensic toxicology presents another critical application area where validated trace-level analysis is essential. The ability to detect minute quantities of substances in biological matrices has profound legal implications, driving demand for rigorously validated analytical methods that can withstand legal challenges.

The clinical diagnostics sector has also emerged as a significant market for trace-level analysis, particularly for therapeutic drug monitoring and metabolomics research. The growing emphasis on personalized medicine has increased the need for highly sensitive analytical techniques capable of detecting biomarkers at extremely low concentrations.

Industrial quality control applications, particularly in semiconductor manufacturing and advanced materials production, require increasingly sensitive analytical capabilities to detect contaminants that can affect product performance. This sector's demand for validated trace analysis continues to grow as manufacturing tolerances become more exacting.

Market research indicates that laboratories are increasingly seeking comprehensive validation solutions rather than piecemeal approaches, creating opportunities for integrated software and hardware systems specifically designed for GC-MS validation at trace levels. This trend toward holistic validation solutions represents a significant market opportunity for analytical instrument manufacturers and software developers.

Current Challenges in GC-MS Data Validation

Despite significant advancements in GC-MS technology, data validation for trace-level analysis remains fraught with complex challenges. The fundamental difficulty lies in distinguishing genuine analyte signals from background noise when working with concentrations at parts-per-billion or parts-per-trillion levels. This signal-to-noise ratio challenge is exacerbated by matrix effects, where components in the sample matrix can suppress or enhance analyte signals, leading to inaccurate quantification.

Instrument sensitivity limitations present another significant hurdle. Even with modern high-sensitivity GC-MS systems, detection limits may still be insufficient for ultra-trace analysis in complex environmental, forensic, or biological samples. This is particularly problematic when regulatory requirements demand quantification at levels approaching instrumental detection limits.

Calibration at trace levels introduces unique validation challenges. The preparation of calibration standards at extremely low concentrations is prone to errors due to adsorption losses, contamination, and degradation. Additionally, the linear dynamic range of many GC-MS methods may not extend sufficiently into the trace region, necessitating complex calibration strategies.
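Because the absolute scatter of GC-MS responses usually grows with concentration, an unweighted fit tends to be dominated by the high standards and can misestimate the lowest ones. One widely used remedy is weighted least squares. This sketch assumes 1/x² weighting and a hypothetical ±20 % limit on back-calculated accuracy; the weighting scheme and limits should come from the method's own validation data.

```python
def weighted_fit(x, y, weights):
    """Weighted least squares for y = a + b*x; returns (intercept a, slope b)."""
    sw = sum(weights)
    xw = sum(w * xi for w, xi in zip(weights, x)) / sw   # weighted mean of x
    yw = sum(w * yi for w, yi in zip(weights, y)) / sw   # weighted mean of y
    sxy = sum(w * (xi - xw) * (yi - yw) for w, xi, yi in zip(weights, x, y))
    sxx = sum(w * (xi - xw) ** 2 for w, xi in zip(weights, x))
    b = sxy / sxx
    a = yw - b * xw
    return a, b


def back_calculated_accuracy(x, y, a, b):
    """Percent of nominal for each standard, recomputed from the fitted line."""
    return [100.0 * ((yi - a) / b) / xi for xi, yi in zip(x, y)]


if __name__ == "__main__":
    conc = [0.1, 0.2, 0.5, 1.0, 5.0, 10.0]               # ng/mL, illustrative
    resp = [52.0, 101.0, 248.0, 505.0, 2490.0, 5030.0]   # peak areas, illustrative
    w = [1.0 / c ** 2 for c in conc]                     # assumed 1/x^2 weighting
    a, b = weighted_fit(conc, resp, w)
    for c, acc in zip(conc, back_calculated_accuracy(conc, resp, a, b)):
        flag = "OK" if abs(acc - 100.0) <= 20.0 else "FAIL"   # hypothetical limit
        print(f"{c:>5} ng/mL: {acc:6.1f} % {flag}")
```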

Carryover effects become increasingly problematic at trace levels. Minute amounts of analytes retained in the GC system from previous injections can contribute significantly to subsequent analyses, potentially leading to false positives or inaccurate quantification. This necessitates rigorous system cleaning protocols and careful sequence design.
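Carryover is usually checked by injecting a solvent or matrix blank immediately after the highest calibration standard and comparing any analyte response with the response at the lower limit of quantification (LLOQ). The 20 %-of-LLOQ limit used below is borrowed from common bioanalytical practice and is an assumption; a GC-MS trace method may set its own criterion. Function names are hypothetical.

```python
def carryover_percent_of_lloq(blank_after_high_area, lloq_area):
    """Blank response (injected right after the top standard) as % of the LLOQ response."""
    if lloq_area <= 0:
        raise ValueError("LLOQ response must be positive")
    return 100.0 * blank_after_high_area / lloq_area


def carryover_acceptable(blank_after_high_area, lloq_area, limit_pct=20.0):
    """limit_pct is an assumed acceptance limit, not a GC-MS regulatory value."""
    return carryover_percent_of_lloq(blank_after_high_area, lloq_area) <= limit_pct


if __name__ == "__main__":
    pct = carryover_percent_of_lloq(blank_after_high_area=150.0, lloq_area=1000.0)
    print(f"carryover = {pct:.1f} % of LLOQ, acceptable: {carryover_acceptable(150.0, 1000.0)}")
```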

Quality control measures face heightened scrutiny in trace analysis. Traditional approaches to calculating method detection limits (MDLs) and limits of quantification (LOQs) may not adequately address the statistical challenges of trace-level work. Furthermore, reference materials certified at ultra-trace levels are often unavailable for many analytes, complicating method validation.
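As one example of a formal MDL procedure, US EPA's 40 CFR Part 136, Appendix B (Revision 2) computes an MDL from at least seven spiked replicates as MDL_s = t(n-1, 0.99) × s, where s is the standard deviation of the replicate results. The sketch shows only that spiked-sample part; the full procedure also derives an MDL from method blanks and reports the larger of the two. The embedded t-table is limited to 7 to 11 replicates for brevity.

```python
from statistics import stdev

# One-sided Student's t values at 99 % confidence, keyed by degrees of freedom (n - 1)
T_99_ONE_SIDED = {6: 3.143, 7: 2.998, 8: 2.896, 9: 2.821, 10: 2.764}


def mdl_from_spikes(replicates):
    """MDL_s = t(n-1, 0.99) * s for replicate spiked-sample results."""
    df = len(replicates) - 1
    if df not in T_99_ONE_SIDED:
        raise ValueError("this sketch's t-table covers 7 to 11 replicates only")
    return T_99_ONE_SIDED[df] * stdev(replicates)


if __name__ == "__main__":
    spikes = [0.48, 0.52, 0.50, 0.47, 0.53, 0.49, 0.51]   # ng/L, illustrative results
    print(f"MDL_s = {mdl_from_spikes(spikes):.3f} ng/L")
```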

Data processing algorithms present additional challenges. Peak integration becomes increasingly subjective at trace levels where peaks may be partially obscured by noise. Automated integration algorithms may fail to consistently identify true analyte signals, while manual integration introduces operator subjectivity and potential bias.
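The sensitivity of trace-level results to integration choices can be shown with a deliberately simple integrator: a trapezoidal area above a straight baseline drawn between a chosen start and end point. Real chromatography data systems use more elaborate peak-detection and baseline logic; this is only an illustration of why integration parameters need to be fixed and documented.

```python
def peak_area_above_baseline(times, signal, i_start, i_end):
    """Trapezoidal area between the signal and a straight baseline joining the
    points at indices i_start and i_end (inclusive)."""
    t0, t1 = times[i_start], times[i_end]
    y0, y1 = signal[i_start], signal[i_end]

    def baseline(t):
        return y0 + (y1 - y0) * (t - t0) / (t1 - t0)

    area = 0.0
    for k in range(i_start, i_end):
        h0 = signal[k] - baseline(times[k])
        h1 = signal[k + 1] - baseline(times[k + 1])
        area += 0.5 * (h0 + h1) * (times[k + 1] - times[k])
    return area


if __name__ == "__main__":
    t = [0.0, 1.0, 2.0, 3.0, 4.0]          # s, illustrative
    y = [10.0, 10.0, 20.0, 12.0, 10.0]     # a small peak with a noisy tail
    # Moving the end point by one scan changes the reported area noticeably
    print(peak_area_above_baseline(t, y, 0, 4))
    print(peak_area_above_baseline(t, y, 0, 3))
```

With these illustrative points the two calls give areas of about 12 and 8 (arbitrary units), a one-third difference caused purely by where the baseline ends.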

Regulatory compliance adds another layer of complexity. Different regulatory bodies worldwide have established varying requirements for trace-level analysis validation, creating challenges for laboratories operating in multiple jurisdictions. Meeting these diverse requirements while maintaining scientific rigor demands sophisticated validation strategies.

Emerging contaminants of concern, such as per- and polyfluoroalkyl substances (PFAS) and pharmaceutical residues, are driving the need for increasingly sensitive analytical methods. For PFAS, GC-MS is applied mainly to volatile species such as fluorotelomer alcohols, while ionic PFAS are usually measured by LC-MS/MS. These compounds often require detection at extraordinarily low concentrations, pushing current GC-MS validation protocols to their limits and necessitating innovative approaches to ensure data quality.

Existing GC-MS Data Validation Protocols

01 GC-MS calibration and quality control methods

Various calibration and quality control methods are employed to ensure the accuracy and reliability of GC-MS data. These include the use of internal standards, calibration curves, and quality control samples. Regular system suitability tests and performance checks are conducted to maintain instrument performance. Validation parameters such as linearity, precision, accuracy, and detection limits are established to ensure the reliability of analytical results.

- Validation methods for GC-MS data accuracy: Various methods are employed to validate the accuracy of GC-MS data, including the use of reference standards, calibration curves, and statistical analysis. These validation procedures ensure that the analytical results are reliable and reproducible. The methods typically involve comparing the obtained data with known standards, evaluating the linearity of response, and assessing the precision and accuracy of the measurements.

- Quality control systems for GC-MS analysis: Quality control systems are implemented to monitor and maintain the reliability of GC-MS analysis. These systems include automated validation protocols, internal quality checks, and performance verification procedures. They help to identify and correct analytical errors, ensure instrument performance, and maintain data integrity throughout the analytical process.

- Automated data processing and validation software: Specialized software solutions are developed for automated processing and validation of GC-MS data. These software tools can perform peak identification, quantification, and validation with minimal human intervention. They incorporate algorithms for background subtraction, noise reduction, and automated quality assessment, significantly improving the efficiency and reliability of GC-MS data analysis.

- Calibration techniques for GC-MS instruments: Specific calibration techniques are essential for ensuring the accuracy of GC-MS instruments. These include multi-point calibration methods, internal standard calibration, and matrix-matched calibration approaches. Proper calibration accounts for instrument drift, matrix effects, and ensures that quantitative measurements are accurate across the analytical range of interest.

- Hardware innovations for improved GC-MS data validation: Hardware innovations have been developed to enhance the validation capabilities of GC-MS systems. These include advanced detectors with improved sensitivity and selectivity, automated sample preparation systems, and integrated quality control modules. Such hardware improvements reduce analytical variability, minimize contamination risks, and provide more consistent and reliable analytical results.
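Internal-standard calibration, listed among the techniques above, can be illustrated with a relative response factor (RRF). This sketch assumes single-point RRF quantification; multi-point methods instead fit the analyte/IS area ratio against the concentration ratio. Function names are hypothetical.

```python
def relative_response_factor(area_analyte, conc_analyte, area_is, conc_is):
    """RRF = (A_analyte / A_IS) * (C_IS / C_analyte), from a calibration standard."""
    return (area_analyte / area_is) * (conc_is / conc_analyte)


def quantify_with_is(area_analyte, area_is, conc_is, rrf):
    """Analyte concentration in the extract from its area ratio to the internal standard."""
    return (area_analyte / area_is) * conc_is / rrf


if __name__ == "__main__":
    rrf = relative_response_factor(area_analyte=2000.0, conc_analyte=1.0,
                                   area_is=4000.0, conc_is=2.0)
    full = quantify_with_is(1000.0, 4000.0, 2.0, rrf)
    # A 50 % injection loss hits analyte and IS alike, so the result is unchanged
    half = quantify_with_is(500.0, 2000.0, 2.0, rrf)
    print(rrf, full, half)
```

The usage lines show the main design benefit: losses that affect the analyte and the internal standard equally cancel out in the area ratio.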

02 Automated data processing and validation systems

Automated systems have been developed for processing and validating GC-MS data to improve efficiency and reduce human error. These systems include software algorithms that can automatically identify peaks, perform integration, and validate results against predefined criteria. Machine learning approaches are also being implemented to enhance data validation processes by recognizing patterns and anomalies in complex datasets.

03 Method validation protocols for specific applications

Specialized validation protocols have been developed for specific GC-MS applications in various fields such as environmental analysis, food safety, pharmaceutical testing, and forensic science. These protocols define specific validation parameters, acceptance criteria, and procedures tailored to the particular analytical requirements of each application. They ensure that the analytical methods are fit for their intended purpose and produce reliable results.

04 Hardware innovations for improved data quality

Hardware innovations in GC-MS systems have been developed to improve data quality and validation capabilities. These include advanced ion source designs, improved detector technologies, and enhanced separation columns. Specialized sample introduction systems and automated sample preparation devices help reduce contamination and improve reproducibility. Hardware diagnostics and self-validation features are also incorporated to ensure system performance.

05 Statistical approaches for data validation

Statistical methods are employed to validate GC-MS data and assess measurement uncertainty. These include techniques for outlier detection, trend analysis, and statistical process control. Advanced statistical approaches such as principal component analysis, cluster analysis, and multivariate statistical methods are used to evaluate complex datasets and identify patterns or anomalies. Statistical tools help establish confidence intervals and determine the significance of analytical results.
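As a minimal illustration of the statistical process control mentioned above, the sketch derives Shewhart-style limits (mean ± 2s warning, mean ± 3s action) from a baseline set of QC recoveries and classifies new QC results. The limits and labels are assumptions; laboratories often layer additional run rules (for example Westgard rules) on top.

```python
from statistics import mean, stdev


def control_limits(baseline):
    """Warning (±2s) and action (±3s) limits from historical QC results."""
    m, s = mean(baseline), stdev(baseline)
    return {"mean": m, "warning": (m - 2 * s, m + 2 * s), "action": (m - 3 * s, m + 3 * s)}


def evaluate_qc(value, limits):
    lo_act, hi_act = limits["action"]
    lo_warn, hi_warn = limits["warning"]
    if not lo_act <= value <= hi_act:
        return "action"      # outside ±3s: stop and investigate
    if not lo_warn <= value <= hi_warn:
        return "warning"     # between 2s and 3s: flag and watch the trend
    return "in control"


if __name__ == "__main__":
    history = [98.0, 102.0, 100.0, 97.0, 103.0, 99.0, 101.0]   # % recovery, illustrative
    limits = control_limits(history)
    for qc in (100.5, 105.0, 110.0):
        print(qc, evaluate_qc(qc, limits))
```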

Key Players in Analytical Instrumentation Industry

The GC-MS trace-level analysis validation market is in a growth phase, with increasing demand driven by stringent regulatory requirements across pharmaceutical, environmental, and food safety sectors. The market size is expanding at approximately 5-7% annually, reaching an estimated $1.2 billion globally. Technologically, the field shows varying levels of maturity, with established vendors offering advanced solutions alongside emerging innovations. Key players include Shimadzu Corp., which leads with comprehensive validation software; Thales SA, focusing on security-oriented validation protocols; NUCTECH Co., developing specialized detection systems; and Robert Bosch GmbH, integrating validation into broader analytical platforms. Academic institutions such as Shanghai University and Central South University contribute significant research advancements, while companies such as Baidu and Tencent are exploring AI-enhanced data validation approaches for next-generation GC-MS systems.

Comprehensive Technical Service Center of Fuqing Entry Exit Inspection and Quarantine Bureau

Technical Solution: The Comprehensive Technical Service Center of Fuqing Entry Exit Inspection and Quarantine Bureau has developed a specialized GC-MS data validation protocol for trace contaminant analysis in imported and exported goods. Their approach implements a multi-level quality assurance system that begins with pre-analytical validation, including sample homogeneity testing and storage stability studies, to ensure sample integrity before analysis. For trace-level detection, they employ matrix-matched calibration curves with internal standardization using isotopically labeled analogues whenever possible. Their validation protocol includes mandatory proficiency testing participation with international reference laboratories to ensure alignment with global standards. The system incorporates comprehensive measurement uncertainty calculations following ISO/IEC 17025 guidelines, with expanded uncertainty (k=2) reported for all trace-level results. For particularly challenging matrices, they implement a sequential extraction and cleanup procedure with recovery checks at each step, minimizing analyte loss while maximizing interference removal. Their data review process requires independent verification of all trace-level findings by a second qualified analyst before results are reported.

Strengths: Exceptionally thorough validation approach designed specifically for regulatory compliance; excellent integration with international standards; comprehensive uncertainty reporting enhances result reliability. Weaknesses: Extensive validation requirements increase analysis time and cost; highly specialized for regulatory applications with less flexibility for research purposes; significant training requirements for analysts to implement the complete protocol.
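The protocol above reports an expanded uncertainty with k = 2. A minimal sketch of that final step, assuming a GUM-style budget in which independent relative standard uncertainties are combined by root-sum-of-squares, is shown below; the budget components and their values are hypothetical, not taken from the Fuqing protocol.

```python
import math


def expanded_uncertainty(result, relative_uncertainties, k=2.0):
    """U = k * u_c, with u_c the root-sum-of-squares of independent relative
    standard uncertainties scaled to the result (k = 2 gives roughly 95 % coverage)."""
    u_rel = math.sqrt(sum(u ** 2 for u in relative_uncertainties.values()))
    return k * u_rel * result


if __name__ == "__main__":
    budget = {                 # hypothetical relative standard uncertainties
        "calibration": 0.03,
        "recovery": 0.04,
    }
    result = 5.0               # µg/kg, illustrative
    U = expanded_uncertainty(result, budget)
    print(f"{result} ± {U:.2f} µg/kg (k = 2)")
```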

Shimadzu Corp.

Technical Solution: Shimadzu Corporation has developed comprehensive GC-MS data validation solutions for trace-level analysis through their LabSolutions software platform. Their approach incorporates automated system suitability testing (SST) that continuously monitors instrument performance parameters including mass accuracy, resolution, and sensitivity. For trace-level analysis, Shimadzu employs their Advanced Flow Technology (AFT) which includes backflushing capabilities to prevent carryover contamination that can compromise trace detection. Their Smart Compounds Database contains over 15,000 compounds with retention indices and mass spectra for reliable identification of trace components. Shimadzu's GCMS-TQ series implements automated tuning protocols specifically optimized for trace analysis, maintaining consistent ion ratios even at sub-ppb levels. Their validation workflow includes comprehensive statistical evaluation tools that automatically calculate method detection limits (MDLs), limits of quantitation (LOQs), and measurement uncertainty to ensure data reliability at trace concentrations.

Strengths: Industry-leading sensitivity with detection limits in the femtogram range; comprehensive software validation tools specifically designed for regulatory compliance; extensive compound libraries for accurate identification. Weaknesses: Higher initial investment compared to some competitors; complex software interface requires significant training; some validation procedures require specialized knowledge beyond standard laboratory training.

Critical Innovations in Trace Analysis Validation

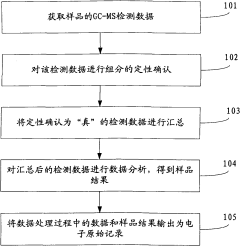

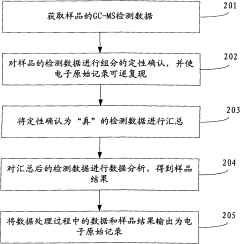

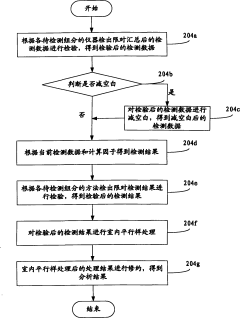

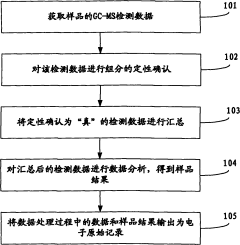

Data processing method of gas chromatography-mass spectrometry (GC-MS) analysis

Patent · Active · CN102466662A

Innovation

- Provides a data processing method for GC-MS analysis in which VBA macro code running in Excel performs fast batch import, qualitative confirmation, and data summarization, ensuring the accuracy of the original electronic records and allowing them to be reproduced faithfully.

Phospholipid containing garlic, curry leaves and turmeric extracts for treatment of adipogenesis

Patent · Pending · IN202141048482A

Innovation

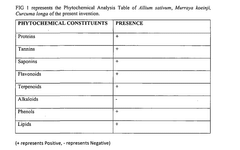

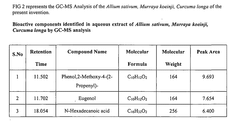

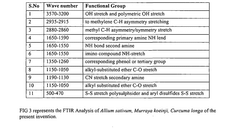

- A synergistic extract of Allium sativum (garlic), Murraya koenigii (curry leaf), and Curcuma longa (turmeric), complexed with phospholipid as a Phytosome, is developed for enhanced bioavailability and therapeutic potential. The method covers extraction, purification, and characterization by GC-MS, FTIR, and SEM, demonstrating the presence of bioactive compounds and antioxidant activity.

Regulatory Compliance for Analytical Methods

Regulatory compliance forms the cornerstone of analytical method validation for GC-MS trace-level analysis across pharmaceutical, environmental, and food safety sectors. Organizations must navigate a complex landscape of regulations and standards established by bodies such as the FDA, EPA, ICH, and ISO. The FDA's 21 CFR Part 11 sets requirements for the integrity of electronic records and electronic signatures, while the ICH Q2(R1) guideline (revised as ICH Q2(R2) in 2023) provides a comprehensive framework for method validation parameters including specificity, linearity, accuracy, precision, detection limits, and robustness.

For trace-level GC-MS analysis, compliance with EPA Method 8270 for semi-volatile organic compounds becomes particularly relevant, establishing specific quality control requirements and performance criteria. Similarly, ISO/IEC 17025 sets laboratory competence standards that directly impact validation protocols and documentation practices for analytical methods.

The regulatory landscape continues to evolve, with increasing emphasis on data integrity principles summarized by ALCOA+ (Attributable, Legible, Contemporaneous, Original, Accurate, plus Complete, Consistent, Enduring, and Available). These principles have become fundamental requirements for GC-MS validation documentation, particularly for trace analysis where data quality is paramount.

Compliance strategies must incorporate comprehensive audit trails, electronic signatures, and robust data management systems. For trace-level analysis, regulatory bodies typically require enhanced validation protocols with lower detection limits, more stringent precision requirements, and extensive interference studies to ensure reliable quantification at low concentrations.
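The tamper-evidence idea behind audit-trail requirements can be sketched as an append-only log in which each entry records who did what and when, plus the SHA-256 hash of the previous entry, so any later edit breaks the chain. This is only an illustration of the principle that supports attributable, contemporaneous records; it is not a validated 21 CFR Part 11 system, and the class and field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(entry):
    """SHA-256 of the entry serialized with sorted keys for a stable hash."""
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode("utf-8")).hexdigest()


class AuditTrail:
    def __init__(self):
        self.entries = []

    def record(self, user, action, detail):
        entry = {
            "user": user,
            "action": action,
            "detail": detail,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": self.entries[-1]["hash"] if self.entries else "0" * 64,
        }
        entry["hash"] = _digest(entry)   # hash covers every field above
        self.entries.append(entry)

    def verify(self):
        """True only if no entry was altered, removed, or reordered."""
        prev = "0" * 64
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "hash"}
            if e["prev_hash"] != prev or _digest(body) != e["hash"]:
                return False
            prev = e["hash"]
        return True


if __name__ == "__main__":
    trail = AuditTrail()
    trail.record("analyst1", "integrate", "peak 12.4 min, manual baseline")
    trail.record("reviewer1", "approve", "batch 001")
    print(trail.verify())
```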

Method Transfer and Cross-Laboratory Validation represent additional regulatory considerations, especially for multi-site operations. Regulatory bodies increasingly expect harmonized validation approaches across different laboratory locations, with documented evidence of method equivalency and performance consistency.

Risk-based approaches to compliance have gained regulatory acceptance, allowing organizations to focus validation efforts on critical aspects of methods based on intended use and risk assessment. For trace-level GC-MS analysis, this typically translates to more rigorous validation of detection capability, matrix effects, and stability parameters.

Maintaining compliance requires ongoing method verification through system suitability tests, proficiency testing, and periodic revalidation. Regulatory inspections frequently focus on documentation completeness, data integrity controls, and evidence of appropriate staff training in both analytical techniques and compliance requirements.

For trace-level GC-MS analysis, compliance with EPA Method 8270 for semi-volatile organic compounds becomes particularly relevant, establishing specific quality control requirements and performance criteria. Similarly, ISO/IEC 17025 sets laboratory competence standards that directly impact validation protocols and documentation practices for analytical methods.

The regulatory landscape continues to evolve, with increasing emphasis on data integrity principles summarized by ALCOA+ (Attributable, Legible, Contemporaneous, Original, Accurate, plus Complete, Consistent, Enduring, and Available). These principles have become fundamental requirements for GC-MS validation documentation, particularly for trace analysis where data quality is paramount.

Compliance strategies must incorporate comprehensive audit trails, electronic signatures, and robust data management systems. For trace-level analysis, regulatory bodies typically require enhanced validation protocols with lower detection limits, more stringent precision requirements, and extensive interference studies to ensure reliable quantification at low concentrations.

Method Transfer and Cross-Laboratory Validation represent additional regulatory considerations, especially for multi-site operations. Regulatory bodies increasingly expect harmonized validation approaches across different laboratory locations, with documented evidence of method equivalency and performance consistency.

Risk-based approaches to compliance have gained regulatory acceptance, allowing organizations to focus validation efforts on critical aspects of methods based on intended use and risk assessment. For trace-level GC-MS analysis, this typically translates to more rigorous validation of detection capability, matrix effects, and stability parameters.

Quality Assurance Best Practices

Quality assurance for GC-MS trace-level methods depends on systematic practices that keep data reliable and accurate. A robust quality control system begins with standard operating procedures (SOPs) that detail every step of the analytical process, from sample preparation to data interpretation. SOPs should be reviewed and updated regularly to reflect technological advances and regulatory changes.

Method validation is the cornerstone of quality assurance, requiring thorough assessment of specificity, linearity, accuracy, precision, detection limits, and robustness. For trace-level analysis, the limits of detection (LOD) and quantification (LOQ) deserve particular attention: they should be derived from data acquired in representative matrix and then confirmed by analyzing samples spiked at those levels, rather than estimated from solvent standards or instrument specifications alone, so that real-world matrix effects are accounted for.
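
One common way to estimate LOD and LOQ from experimental data is the calibration-curve approach described in ICH Q2: LOD = 3.3σ/S and LOQ = 10σ/S, where S is the slope and σ is taken here as the residual standard deviation of the regression. The sketch assumes a matrix-matched, low-level calibration; the concentrations and areas are hypothetical. The resulting estimates would still need confirmation with spiked samples, as described above.

```python
from statistics import mean


def linear_fit(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need at least three paired calibration points")
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("calibration concentrations must not all be equal")
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def residual_sd(x, y, slope, intercept):
    """Standard deviation of the regression residuals (n - 2 degrees of freedom)."""
    ss = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    return (ss / (len(x) - 2)) ** 0.5


def lod_loq(x, y):
    """LOD = 3.3 * sigma / S and LOQ = 10 * sigma / S (sigma = residual SD, S = slope)."""
    slope, intercept = linear_fit(x, y)
    if slope <= 0:
        raise ValueError("calibration slope must be positive")
    sigma = residual_sd(x, y, slope, intercept)
    return 3.3 * sigma / slope, 10.0 * sigma / slope


if __name__ == "__main__":
    # Hypothetical matrix-matched calibration near the expected LOQ.
    conc = [0.05, 0.10, 0.20, 0.40, 0.80]    # ng/mL
    area = [1180, 2510, 4890, 10150, 19800]  # peak area, arbitrary units
    lod, loq = lod_loq(conc, area)
    print(f"LOD ~ {lod:.3f} ng/mL, LOQ ~ {loq:.3f} ng/mL")
```

Restricting the calibration to the low end of the range keeps σ representative of behavior near the limits rather than being dominated by high-concentration points.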

System suitability tests should be performed before each analytical run to verify instrument performance. These tests typically evaluate retention time reproducibility, peak resolution, and signal-to-noise ratio. For trace analysis, criteria may need to be more stringent; a signal-to-noise ratio of at least 10:1 at the lowest reported level is commonly recommended for reliable quantification.
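
The three system suitability parameters named above can be computed directly from chromatographic measurements. This sketch uses the pharmacopoeial-style definition S/N = 2H/h (H = peak height above baseline, h = peak-to-peak noise in a blank region) and resolution from baseline peak widths, Rs = 2(t2 − t1)/(w1 + w2). Note that many MS data systems report RMS-based S/N instead, which gives different numbers. The pass/fail limits other than the 10:1 S/N are hypothetical placeholders, as are the example values.

```python
from statistics import mean, stdev


def signal_to_noise(peak_apex, baseline, noise_trace):
    """Pharmacopoeial-style S/N = 2H / h.

    H: height of the peak apex above the baseline.
    h: peak-to-peak range of a noise-only stretch of the chromatogram.
    """
    h = max(noise_trace) - min(noise_trace)
    if h <= 0:
        raise ValueError("noise trace must have a non-zero range")
    return 2.0 * (peak_apex - baseline) / h


def resolution(t1, t2, w1, w2):
    """Resolution from retention times and baseline (tangent) peak widths."""
    return 2.0 * (t2 - t1) / (w1 + w2)


def percent_rsd(values):
    return 100.0 * stdev(values) / mean(values)


def system_suitable(sn, rt_replicates, rs, min_sn=10.0, max_rt_rsd=0.5, min_rs=1.5):
    """HYPOTHETICAL criteria except min_sn; take real limits from the validated method."""
    return sn >= min_sn and percent_rsd(rt_replicates) <= max_rt_rsd and rs >= min_rs


if __name__ == "__main__":
    noise = [100, 104, 98, 102, 97, 103]   # detector counts in a blank region
    sn = signal_to_noise(450, 100, noise)  # 2 * 350 / 7 = 100.0
    rs = resolution(12.41, 12.80, 0.20, 0.22)
    rts = [12.41, 12.42, 12.40, 12.41, 12.42]
    print(sn, round(rs, 2), system_suitable(sn, rts, rs))
```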

Quality control samples, including method blanks, matrix spikes, and certified reference materials, must be incorporated throughout analytical batches. QC frequency should increase for trace-level analysis; inserting a QC sample after every 5-10 analytical samples is a common recommendation for monitoring system stability and detecting drift or contamination.
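
A fixed QC interval is straightforward to enforce when the injection sequence is generated programmatically. The sketch below is a hypothetical layout, not a prescribed one: opening QC injections, a QC block after every `qc_interval` samples, and a closing QC block so the final samples are always bracketed. The injection labels are illustrative; sample-specific QC such as matrix spikes could be added to `qc_block` or inserted next to the sample they belong to.

```python
def build_sequence(samples, qc_interval=10,
                   opening=("method blank", "certified reference material"),
                   qc_block=("continuing calibration check", "method blank")):
    """Interleave QC injections into a batch of sample IDs.

    ASSUMED layout for illustration: the opening QC injections, then
    qc_block after every qc_interval samples, plus a closing qc_block if
    the last samples were not already followed by one.
    """
    if qc_interval < 1:
        raise ValueError("qc_interval must be at least 1")
    sequence = list(opening)
    for i, sample in enumerate(samples, start=1):
        sequence.append(sample)
        if i % qc_interval == 0:
            sequence.extend(qc_block)
    if samples and len(samples) % qc_interval != 0:
        sequence.extend(qc_block)  # always bracket the final samples with QC
    return sequence


if __name__ == "__main__":
    batch = [f"S{n:02d}" for n in range(1, 13)]
    for position, injection in enumerate(build_sequence(batch, qc_interval=5), start=1):
        print(position, injection)
```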

Data review should follow a multi-tier approach: initial review by the analyst, then peer review, and final verification by a quality assurance officer. Automated data flagging can help identify outliers or anomalous results that require further investigation, which is particularly valuable for the large datasets generated during trace analysis.
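
Automated flagging of QC results is often based on control charts. The sketch below assumes simple Shewhart/Westgard-style rules: a warning beyond ±2 standard deviations, and an action flag beyond ±3 SD or for two consecutive results beyond 2 SD on the same side. The function names, rule set, and data are hypothetical illustrations; a laboratory would choose its own rules and derive limits from its own in-control history.

```python
from statistics import mean, stdev


def control_limits(historical):
    """Center line and standard deviation from historical in-control QC results."""
    if len(historical) < 2:
        raise ValueError("need at least two historical results")
    return mean(historical), stdev(historical)


def flag_qc(results, center, sd):
    """Flag QC results with simple Shewhart/Westgard-style rules.

    'action' : beyond +/-3 SD, or two consecutive results beyond 2 SD on the same side
    'warning': beyond +/-2 SD
    'ok'     : otherwise
    """
    if sd <= 0:
        raise ValueError("sd must be positive")
    flags, prev_z = [], None
    for value in results:
        z = (value - center) / sd
        if abs(z) > 3:
            flag = "action"
        elif abs(z) > 2 and prev_z is not None and abs(prev_z) > 2 and (z > 0) == (prev_z > 0):
            flag = "action"
        elif abs(z) > 2:
            flag = "warning"
        else:
            flag = "ok"
        flags.append(flag)
        prev_z = z
    return flags


if __name__ == "__main__":
    # Hypothetical % recovery history for a QC check standard.
    center, sd = control_limits([98.5, 101.2, 99.8, 100.4, 97.9, 102.1, 100.0, 99.3])
    print(flag_qc([100.6, 104.9, 105.3, 92.0], center, sd))
```

Flags like these support, rather than replace, the analyst, peer, and QA review tiers.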

Documentation practices must be comprehensive, including instrument maintenance logs, calibration records, and complete audit trails for all data processing steps. Electronic data systems should comply with 21 CFR Part 11 or equivalent regulations, ensuring data integrity through appropriate access controls, audit trails, and electronic signatures.
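
To show what makes an audit trail tamper-evident, the sketch below chains each entry to the previous one with a SHA-256 hash, so editing a recorded entry breaks verification. It is an illustration of the concept only: the class and field names are hypothetical, and a hash chain by itself does not provide the access controls, electronic signatures, or secure storage that 21 CFR Part 11 compliance requires.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64


def _digest(body):
    """SHA-256 over a canonical JSON encoding of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditTrail:
    """Append-only, hash-chained log of data-processing actions (illustrative only)."""

    def __init__(self):
        self.entries = []

    def record(self, user, action, details, timestamp=None):
        body = {
            "user": user,
            "action": action,
            "details": details,
            "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = dict(body, hash=_digest(body))
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash; False if an entry was altered or the chain is broken."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    trail = AuditTrail()
    trail.record("analyst_a", "manual integration", "peak at 12.41 min: baseline redrawn")
    trail.record("reviewer_b", "approve", "batch reviewed")
    print(trail.verify())
```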

Participation in proficiency testing provides external verification of analytical capability. Laboratories should participate regularly in interlaboratory comparison studies designed for trace-level analysis, to benchmark their performance against peers and identify areas for improvement in their analytical processes.
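
Proficiency testing results are commonly reported as z-scores, z = (x − X)/σpt, where x is the laboratory's result, X the assigned value, and σpt the standard deviation for proficiency assessment. The conventional interpretation (as in ISO 13528 and ISO/IEC 17043) treats |z| ≤ 2 as satisfactory, 2 < |z| < 3 as questionable, and |z| ≥ 3 as unsatisfactory. The function names and round values below are hypothetical.

```python
def pt_z_score(result, assigned_value, sigma_pt):
    """z = (x - X) / sigma_pt, as used in proficiency testing schemes."""
    if sigma_pt <= 0:
        raise ValueError("sigma_pt must be positive")
    return (result - assigned_value) / sigma_pt


def classify_z(z):
    """Conventional interpretation of PT z-scores."""
    if abs(z) <= 2.0:
        return "satisfactory"
    if abs(z) < 3.0:
        return "questionable"
    return "unsatisfactory"


if __name__ == "__main__":
    # Hypothetical PT round: assigned value 5.0 ug/kg, sigma_pt 0.8 ug/kg.
    for lab_result in (5.4, 6.9, 2.3):
        z = pt_z_score(lab_result, 5.0, 0.8)
        print(lab_result, round(z, 2), classify_z(z))
```

Tracking z-scores across rounds can reveal a persistent bias even when each individual score is satisfactory.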