Human-In-The-Loop Interfaces For Oversight Of Autonomous Experiments

AUG 29, 2025 · 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

HITL Interface Evolution and Objectives

Human-in-the-Loop (HITL) interfaces have evolved significantly over the past decades, transitioning from basic command-line interfaces to sophisticated systems that enable meaningful human oversight of autonomous experiments. The evolution began in the 1980s with simple supervisory control systems where humans monitored automated processes with minimal interaction capabilities. By the 1990s, graphical user interfaces emerged, allowing operators to visualize experimental data and system states in real-time.

The 2000s marked a significant advancement with the introduction of adaptive interfaces that could adjust their presentation based on the operator's expertise level and the complexity of the experimental context. These systems began incorporating basic machine learning techniques to predict user needs and streamline interaction workflows. The 2010s witnessed the integration of mixed reality technologies, enabling researchers to interact with experimental data and autonomous systems through immersive visualizations.

Current HITL interfaces leverage advanced AI techniques to create collaborative human-machine partnerships. Modern systems employ explainable AI approaches to communicate decision rationales, uncertainty quantification to express confidence levels, and attention-directing mechanisms to highlight critical experimental parameters requiring human judgment. These developments reflect the shift from humans as mere supervisors to collaborative partners in autonomous experimentation.

The primary objective of contemporary HITL interfaces is to establish an optimal balance between autonomous operation and human intervention. This balance aims to leverage the strengths of both human expertise (intuition, creativity, ethical judgment) and machine capabilities (consistency, tireless operation, rapid data processing). Effective interfaces must provide situational awareness without overwhelming operators with excessive information.

Another critical objective is to support different intervention modalities, allowing humans to interact with autonomous systems at various levels, from high-level goal setting to direct manipulation of experimental parameters when necessary. This flexibility enables appropriate human oversight regardless of the experiment's complexity or criticality.

Looking forward, HITL interfaces are evolving toward more anticipatory systems that can predict when human intervention would be most valuable, thereby optimizing the use of human attention as a limited resource. The development trajectory also points toward interfaces that can adapt to individual researchers' working styles and expertise levels, creating personalized interaction experiences that maximize experimental productivity while maintaining appropriate oversight.

Market Analysis for HITL Autonomous Experimentation

The Human-In-The-Loop (HITL) interfaces for autonomous experimentation market is experiencing significant growth, driven by the increasing adoption of automation across research and development sectors. Current market estimates value this specialized segment at approximately $2.3 billion, with projections indicating a compound annual growth rate of 18.7% through 2028, potentially reaching $5.4 billion by that time.
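As a quick consistency check on these figures, the compound-growth relation end = start × (1 + CAGR)^n can be solved for the number of compounding years n. The sketch below uses only the three numbers quoted above; the base year of the $2.3 billion estimate is not stated, so the implied horizon is an inference rather than a reported figure.

```python
import math


def implied_years(start_value: float, end_value: float, cagr: float) -> float:
    """Solve end = start * (1 + cagr) ** n for n, the number of compounding years."""
    return math.log(end_value / start_value) / math.log(1.0 + cagr)


def project(start_value: float, cagr: float, years: int) -> float:
    """Value after compounding start_value at the given CAGR for whole years."""
    return start_value * (1.0 + cagr) ** years


# Figures quoted in the text, in USD billions.
n = implied_years(2.3, 5.4, 0.187)
print(f"implied horizon: {n:.2f} years")
print(f"5-year projection: ${project(2.3, 0.187, 5):.2f}B")
```

The implied horizon comes out at just under five years, and compounding $2.3 billion at 18.7% for five years gives roughly $5.42 billion, so the three figures are mutually consistent if the base estimate refers to 2023.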

The pharmaceutical and biotechnology sectors represent the largest market share, accounting for nearly 42% of current HITL interface implementations. These industries leverage autonomous experimentation systems with human oversight to accelerate drug discovery processes and optimize formulation development, resulting in reduced time-to-market and significant cost savings.

Materials science applications rank second with a 27% share, where HITL interfaces let researchers explore vast parameter spaces efficiently while retaining human judgment for unexpected discoveries or safety concerns. The chemical industry accounts for approximately 18% of the market, with applications primarily in process optimization and catalyst discovery.

Geographically, North America leads adoption with 38% market share, followed by Europe (31%) and Asia-Pacific (24%). The latter region demonstrates the fastest growth trajectory, with China and South Korea making substantial investments in autonomous research infrastructure.

Customer segmentation reveals three primary market tiers: large pharmaceutical and chemical corporations (48%), academic and government research institutions (32%), and emerging biotechnology startups (20%). Each segment exhibits distinct purchasing behaviors and implementation requirements, with large corporations prioritizing enterprise-wide integration capabilities while academic institutions focus on flexibility and customization.

Key market drivers include the exponential growth in experimental data volumes, increasing complexity of research challenges, and pressure to accelerate innovation cycles. The COVID-19 pandemic has further accelerated market growth by highlighting the value of remote research capabilities and automated systems that can maintain productivity during disruptions.

Market barriers include significant initial investment costs, integration challenges with legacy systems, and organizational resistance to automation. Additionally, regulatory uncertainties regarding autonomous systems in regulated industries like pharmaceuticals present adoption hurdles that solution providers must address through comprehensive validation frameworks.

Customer demand increasingly focuses on intuitive interfaces that democratize access to autonomous experimentation capabilities, robust audit trails for regulatory compliance, and seamless integration with existing laboratory information management systems (LIMS).

Current HITL Interface Challenges

Despite significant advancements in autonomous experimentation systems, current Human-In-The-Loop (HITL) interfaces face several critical challenges that limit their effectiveness in providing meaningful oversight. The primary challenge lies in achieving an optimal balance between automation and human intervention. Many existing interfaces either overwhelm users with excessive information or provide insufficient visibility into the autonomous system's decision-making processes.

Real-time monitoring presents another significant hurdle. Current interfaces often struggle to present complex experimental data streams in an interpretable format that allows human operators to quickly identify anomalies or unexpected patterns. This is particularly problematic in high-throughput autonomous experiments where decisions must be made rapidly based on evolving data.
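One common way to surface anomalies in a streaming measurement, so that the operator's attention goes only to unusual points, is a rolling z-score: each new reading is compared with the mean and standard deviation of a recent window. The sketch below assumes a single scalar stream; the window size, the minimum history, and the 3-standard-deviation threshold are illustrative defaults, not values from the source.

```python
import statistics
from collections import deque


class RollingZScoreMonitor:
    """Flags readings that deviate strongly from the recent history of one stream."""

    def __init__(self, window: int = 20, threshold: float = 3.0, min_history: int = 5):
        self.history = deque(maxlen=window)  # most recent non-anomalous readings
        self.threshold = threshold           # |z| above this is flagged
        self.min_history = min_history       # readings required before flagging starts

    def observe(self, value: float) -> bool:
        """Return True if `value` looks anomalous relative to the window."""
        anomalous = False
        if len(self.history) >= self.min_history:
            mean = statistics.fmean(self.history)
            std = statistics.pstdev(self.history)
            if std > 0:
                anomalous = abs(value - mean) / std > self.threshold
            else:
                anomalous = value != mean  # flat history: any change stands out
        # Anomalies are kept out of the baseline so one spike does not mask the next.
        if not anomalous:
            self.history.append(value)
        return anomalous
```

Because flagged readings are excluded from the baseline, a genuine, persistent shift keeps being flagged until an operator reviews it; in an oversight setting that is arguably the desired behavior, but it is a design choice worth making explicitly.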

The interpretability of machine learning models driving autonomous experiments remains inadequate in most current interfaces. The "black box" nature of many advanced algorithms makes it difficult for human operators to understand why specific experimental paths are chosen over others, creating a trust deficit that undermines effective oversight.

Alert fatigue is another persistent challenge: many systems generate excessive notifications without proper prioritization. Operators may then miss critical alerts amid the noise of less important ones, defeating the purpose of human oversight in catching experimental errors or unexpected outcomes.
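A minimal sketch of the prioritization this paragraph calls for: repeated alerts from the same source, of the same kind and severity, are suppressed within a cooldown period, and the operator sees the most severe alerts first, capped at a fixed batch size. The severity levels, cooldown, and cap are hypothetical parameters chosen for illustration.

```python
import heapq
from dataclasses import dataclass

SEVERITY_RANK = {"info": 0, "warning": 1, "critical": 2}  # hypothetical levels


@dataclass(frozen=True)
class Alert:
    source: str       # instrument or subsystem name
    kind: str         # e.g. "pressure_high"
    severity: str     # one of SEVERITY_RANK's keys
    timestamp: float  # seconds


class AlertTriage:
    """Suppresses repeats and surfaces the most severe alerts first."""

    def __init__(self, cooldown_s: float = 300.0, max_shown: int = 5):
        self.cooldown_s = cooldown_s
        self.max_shown = max_shown
        self._last_seen = {}  # (source, kind, severity) -> time of last accepted alert
        self._queue = []      # heap of (-rank, timestamp, seq, alert)
        self._seq = 0         # tie-breaker so Alert objects are never compared

    def submit(self, alert: Alert) -> bool:
        """Queue an alert unless an identical one was accepted within the cooldown."""
        # Severity is part of the key, so an escalation is never suppressed.
        key = (alert.source, alert.kind, alert.severity)
        last = self._last_seen.get(key)
        if last is not None and alert.timestamp - last < self.cooldown_s:
            return False
        self._last_seen[key] = alert.timestamp
        rank = SEVERITY_RANK[alert.severity]
        heapq.heappush(self._queue, (-rank, alert.timestamp, self._seq, alert))
        self._seq += 1
        return True

    def next_batch(self) -> list:
        """Pop up to max_shown alerts, most severe first, then oldest first."""
        batch = []
        while self._queue and len(batch) < self.max_shown:
            batch.append(heapq.heappop(self._queue)[-1])
        return batch
```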

Visualization tools in current interfaces frequently fail to represent multi-dimensional experimental spaces effectively. This limitation makes it difficult for operators to develop an intuitive understanding of the experimental landscape being explored by autonomous systems, particularly in complex parameter spaces with numerous variables.

Knowledge transfer between the autonomous system and human operators remains suboptimal. Current interfaces rarely facilitate effective learning from past experiments or incorporate human expertise efficiently into the autonomous decision-making process, creating a disconnect between machine and human knowledge bases.

Accessibility issues persist across many interfaces, with designs that require specialized training or technical expertise to operate effectively. This creates barriers to adoption and limits the pool of potential operators who can provide oversight, particularly in interdisciplinary research environments where users may have varying technical backgrounds.

Latency in feedback mechanisms represents another significant challenge, as many interfaces do not support rapid human intervention when needed. This creates scenarios where autonomous systems may continue suboptimal experimental paths for too long before human correction can be implemented, wasting valuable resources and time.

Existing HITL Interface Architectures

01 Human-AI collaborative decision-making systems

Systems that facilitate collaboration between humans and AI for decision-making processes, where human expertise guides and oversees AI operations. These interfaces allow humans to provide input, validate AI recommendations, and make final decisions while leveraging AI capabilities for data processing and analysis. The human-in-the-loop approach ensures appropriate oversight while maximizing the benefits of automation and artificial intelligence.

- User Interface Design for Human-in-the-Loop Systems: Effective human-in-the-loop systems require carefully designed interfaces that facilitate meaningful human oversight and intervention. These interfaces must balance automation with human judgment, providing clear visualizations and controls that allow users to understand system states, review decisions, and intervene when necessary. The design focuses on reducing cognitive load while maintaining situational awareness, often incorporating customizable dashboards and intuitive feedback mechanisms that support collaborative decision-making between humans and automated systems.

- Regulatory and Compliance Frameworks for AI Oversight: Regulatory frameworks for human-in-the-loop oversight establish governance structures for artificial intelligence systems, particularly in high-risk domains. These frameworks define requirements for human supervision, transparency, and accountability in automated decision-making processes. They specify when and how human intervention should occur, documentation requirements for oversight activities, and standards for measuring the effectiveness of human oversight. Such frameworks aim to ensure that AI systems remain under meaningful human control while complying with legal and ethical standards.

- Machine Learning Systems with Human Feedback Loops: Machine learning systems incorporating human feedback loops leverage human expertise to improve model performance and ensure appropriate operation. These systems collect human evaluations of algorithmic outputs, using this feedback to refine models, correct errors, and align with human values. The feedback mechanisms include explicit rating systems, annotation tools, and interactive correction interfaces that allow domain experts to guide the learning process. This approach is particularly valuable for handling edge cases, adapting to changing conditions, and maintaining ethical boundaries in autonomous systems.

- Decision Support Systems with Human Verification: Decision support systems with human verification combine algorithmic recommendations with human judgment to improve decision quality. These systems present AI-generated analyses and suggestions while requiring human review before implementation of critical actions. They typically include confidence metrics, explanation features, and verification workflows that highlight potential risks or uncertainties. The human verification component serves as a safeguard against algorithmic errors, biases, or contextual misunderstandings, particularly in domains like healthcare, finance, and security where decisions carry significant consequences.

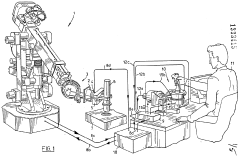

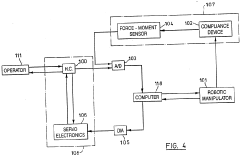

- Autonomous Systems with Human Intervention Protocols: Autonomous systems with human intervention protocols establish structured mechanisms for human operators to monitor, override, or adjust automated operations. These protocols define escalation procedures, intervention thresholds, and handover processes between automated and manual control. They include alert systems that notify human operators when intervention may be needed, along with tools for seamless transition between autonomous and manual operation. Such protocols are essential in applications like autonomous vehicles, industrial robotics, and critical infrastructure management where maintaining appropriate human oversight is crucial for safety and reliability.
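The verification and intervention patterns described above often reduce to a routing rule: act autonomously when the model is confident and the action is low-stakes, otherwise hand the decision to a person. The sketch below is a hypothetical illustration of that rule; the confidence floors, the halt threshold, and the three criticality levels are assumptions, not values drawn from any system named here.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_EXECUTE = "auto_execute"      # proceed without waiting for a human
    HUMAN_APPROVAL = "human_approval"  # queue for review before execution
    HALT_AND_ESCALATE = "halt"         # stop and notify an operator immediately


# Hypothetical minimum confidence needed to act autonomously, per criticality.
# "high" is set to infinity, so high-criticality actions always need approval.
CONFIDENCE_FLOOR = {"low": 0.70, "medium": 0.90, "high": float("inf")}


@dataclass
class Recommendation:
    action: str
    confidence: float  # model's calibrated probability that the action is correct
    criticality: str   # "low", "medium" or "high"


def route(rec: Recommendation, halt_below: float = 0.30) -> Route:
    """Decide whether a recommendation runs autonomously or goes to a human."""
    if rec.confidence < halt_below:
        return Route.HALT_AND_ESCALATE
    if rec.confidence >= CONFIDENCE_FLOOR[rec.criticality]:
        return Route.AUTO_EXECUTE
    return Route.HUMAN_APPROVAL
```

The rule is only as good as the confidence values fed into it, which is why the calibration of the underlying model matters as much as the thresholds themselves.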

02 Regulatory compliance and governance frameworks

Frameworks and systems designed to ensure human oversight of automated processes meets regulatory requirements. These solutions implement governance structures that maintain appropriate levels of human supervision over AI and automated systems, particularly in regulated industries. They include audit trails, verification mechanisms, and compliance documentation to demonstrate that human oversight is maintained at critical decision points.

03 Interface design for effective human supervision

User interface technologies specifically designed to optimize human oversight of automated systems. These interfaces present information in ways that enhance human understanding of complex AI operations, highlight areas requiring attention, and facilitate timely intervention. Features include customizable dashboards, alert systems, visualization tools, and intuitive controls that enable effective human monitoring and control of automated processes.

04 Training and feedback systems for AI improvement

Systems that enable humans to provide feedback and training data to improve AI performance over time. These interfaces allow subject matter experts to correct AI errors, provide additional training examples, and refine AI models through continuous feedback loops. The human-in-the-loop training approach helps address AI limitations, reduce bias, and improve accuracy in automated decision-making systems.

05 Risk management in automated systems

Technologies that incorporate human oversight specifically for risk management in automated systems. These solutions identify high-risk decisions that require human review, implement escalation protocols, and provide mechanisms for human intervention when automated systems encounter uncertain situations. They balance efficiency gains from automation with appropriate human oversight to mitigate risks in critical applications.
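As a minimal illustration of the continuous feedback loops described under training and feedback systems, the sketch below refines a logistic-regression classifier one example at a time whenever an expert confirms or corrects a prediction, using a single stochastic-gradient step on log loss. The feature vectors, the learning rate, and the idea of treating each expert verdict directly as a training label are assumptions for illustration; a real deployment would also need to validate an updated model before relying on it.

```python
import math


class FeedbackTrainedClassifier:
    """Logistic-regression model refined online from expert corrections."""

    def __init__(self, n_features: int, learning_rate: float = 0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x: list) -> float:
        """Probability that the positive class applies to feature vector x."""
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        # Numerically stable logistic function (avoids overflow in math.exp).
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        ez = math.exp(z)
        return ez / (1.0 + ez)

    def record_feedback(self, x: list, expert_label: int) -> float:
        """Apply one SGD step on log loss using the expert's 0/1 label.

        Returns the model's error on this example before the update.
        """
        error = self.predict_proba(x) - expert_label  # gradient factor (p - y)
        self.weights = [w - self.learning_rate * error * xi
                        for w, xi in zip(self.weights, x)]
        self.bias -= self.learning_rate * error
        return error
```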

Leading Organizations in HITL Systems

The Human-In-The-Loop (HITL) interfaces for autonomous experiments market is currently in an early growth phase, characterized by increasing adoption across research and industrial applications. The market size is expanding rapidly, driven by the growing need for human oversight in automated systems. Technology maturity varies significantly among key players, with established tech giants like Google, IBM, and UiPath leading in AI integration and automation platforms. Research institutions such as MIT and Case Western Reserve University contribute fundamental innovations, while industrial players like Siemens, ABB, and Honeywell focus on practical implementations. Companies like Artificial, Inc. and Testbot.AI represent specialized startups developing purpose-built HITL solutions for laboratory and testing environments, indicating a diversifying competitive landscape.

Massachusetts Institute of Technology

Technical Solution: MIT's human-in-the-loop interface framework for autonomous experimentation focuses on adaptive control systems that dynamically adjust the level of human oversight based on experiment complexity and risk assessment. Their approach implements a tiered intervention model where routine experimental decisions are handled autonomously while novel or critical decision points trigger human review. MIT researchers have developed specialized visualization tools that present multidimensional experimental data through intuitive interfaces, enabling rapid human assessment of complex situations. The system incorporates uncertainty quantification methods that explicitly communicate confidence levels in autonomous decisions to human supervisors. MIT's framework has been successfully deployed in robotics research and materials science, where it demonstrated a 40% reduction in failed experiments compared to fully autonomous approaches. Their platform emphasizes knowledge transfer between human experts and AI systems, creating a continuous learning loop that improves both autonomous capabilities and human understanding of experimental spaces.

Strengths: Cutting-edge research in human-computer interaction produces highly intuitive interfaces; strong theoretical foundations ensure robust decision-making frameworks. Weaknesses: Academic focus may result in systems that require additional engineering for industrial deployment; limited commercial support infrastructure compared to industry solutions.
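The tiered intervention idea described for MIT's framework can be illustrated generically: score each proposed experiment by how far it lies from conditions already explored (novelty) and by how much an ensemble of surrogate models disagrees about its outcome (uncertainty), then route it to one of three tiers. This is a hypothetical sketch of the general pattern, not MIT's implementation; the Euclidean distance, the ensemble spread, and both limits are assumptions.

```python
import math
import statistics


def novelty(candidate: list, explored: list) -> float:
    """Euclidean distance from the candidate to its nearest explored point."""
    if not explored:
        return math.inf
    return min(math.dist(candidate, point) for point in explored)


def ensemble_uncertainty(predictions: list) -> float:
    """Spread (population standard deviation) of surrogate-model predictions."""
    return statistics.pstdev(predictions)


def assign_tier(candidate: list, explored: list, predictions: list,
                novelty_limit: float = 1.0, uncertainty_limit: float = 0.5) -> str:
    """Route a proposed experiment to 'autonomous', 'notify' or 'review'."""
    novel = novelty(candidate, explored) > novelty_limit
    uncertain = ensemble_uncertainty(predictions) > uncertainty_limit
    if novel and uncertain:
        return "review"      # a human must approve before the run starts
    if novel or uncertain:
        return "notify"      # the run proceeds and the operator is informed
    return "autonomous"      # routine decision, logged only
```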

Google LLC

Technical Solution: Google's human-in-the-loop (HITL) interface system for autonomous experiments integrates DeepMind's AI capabilities with human oversight frameworks. Their approach combines reinforcement learning algorithms with intuitive dashboards that allow researchers to monitor experiment progress in real-time. The system features dynamic intervention thresholds that automatically flag experiments requiring human attention based on predefined safety parameters or unexpected outcomes. Google's implementation particularly excels in materials science and drug discovery, where their AutoML systems work alongside domain experts through specialized visualization tools that translate complex experimental data into actionable insights. Their platform includes explainable AI components that provide reasoning behind autonomous decisions, enabling researchers to understand and validate experimental paths. The system also incorporates federated learning techniques to maintain data privacy while allowing collaborative oversight across research teams.

Strengths: Superior AI infrastructure and computational resources enable complex experiment modeling; extensive experience with large-scale machine learning applications provides robust oversight mechanisms. Weaknesses: Potential over-reliance on proprietary systems may limit interoperability with third-party research tools; high implementation costs for smaller research organizations.

Key HITL Oversight Technologies

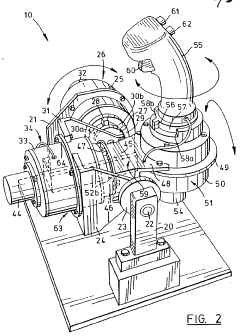

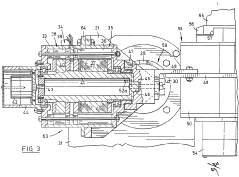

Human-in-the-loop machine control loop

Patent (Inactive): CA1333415C

Innovation

- A human-in-the-loop control loop with a hand controller having multiple degrees of freedom, torque generators, and feedback mechanisms that provide force feedback proportional to the intensity and rate of motion, allowing for precise control of robotic manipulators without the need for visual feedback and enabling operation in both active and passive modes.
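Force feedback that grows with both the displacement and the speed of the operator's hand is often modelled as a spring-damper law, F = -(k·x + b·v). The patent abstract does not specify its control law, so the gains, the single axis, and the saturation limit below are assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class SpringDamperFeedback:
    """Resistive force for one hand-controller axis: F = -(k * x + b * v)."""
    stiffness: float  # k, newtons per metre of displacement from neutral
    damping: float    # b, newtons per (metre/second) of hand velocity
    max_force: float  # actuator saturation limit, newtons

    def force(self, displacement: float, velocity: float) -> float:
        raw = -(self.stiffness * displacement + self.damping * velocity)
        # Clamp to what the torque generator can physically deliver.
        return max(-self.max_force, min(self.max_force, raw))
```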

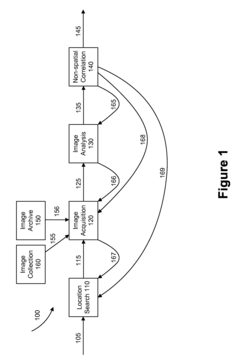

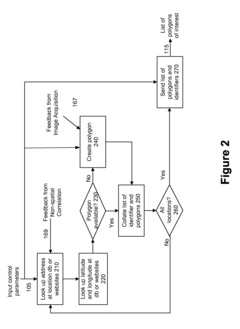

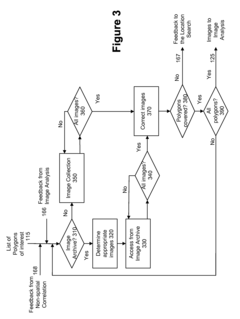

Adaptive image acquisition and processing with image analysis feedback

Patent (Active): US9105128B2

Innovation

- A system that combines automated image processing with human-in-the-loop processing through Human Intelligence Tasks (HITs): images of interest are enhanced and then analyzed by human workers through a novel task-specific user interface, with feedback loops that improve polygon accuracy, image quality, and data correlation. Image analysis results are correlated with non-spatial data for predictive purposes.

Safety and Risk Management Frameworks

The integration of safety and risk management frameworks into Human-In-The-Loop (HITL) interfaces for autonomous experiments represents a critical component for ensuring responsible innovation. These frameworks must address both immediate operational risks and longer-term ethical considerations that emerge when human oversight is incorporated into autonomous experimental systems.

Current safety frameworks typically employ multi-layered approaches, combining technical safeguards with procedural controls. For autonomous experiments with human oversight, these frameworks must be adapted to account for the unique dynamics of intermittent human intervention. Leading organizations have developed risk assessment matrices specifically tailored to HITL systems, categorizing risks based on both severity and the time-sensitivity of required human responses.
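A risk assessment matrix of this kind can be encoded as a lookup from (severity, time-sensitivity) to the required human response. The categories, scores, and responses below are hypothetical placeholders; an actual matrix would come from the organization's own hazard analysis.

```python
from enum import IntEnum


class Severity(IntEnum):
    MINOR = 1
    MODERATE = 2
    SEVERE = 3


class Urgency(IntEnum):
    HOURS = 1    # a human response within hours is acceptable
    MINUTES = 2  # a human must respond within minutes
    SECONDS = 3  # faster than a human can reliably react


def required_response(severity: Severity, urgency: Urgency) -> str:
    """Map a risk cell to the oversight it needs (hypothetical policy)."""
    if urgency is Urgency.SECONDS and severity >= Severity.MODERATE:
        # Humans cannot react in time: rely on automatic interlocks, review afterwards.
        return "automatic_interlock_then_review"
    score = int(severity) * int(urgency)
    if score >= 6:
        return "immediate_operator_intervention"
    if score >= 3:
        return "operator_acknowledgement"
    return "log_and_periodic_review"
```

The interlock branch captures the point made in the paragraph: time-sensitivity, not only severity, determines whether human intervention is feasible at all.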

Real-time monitoring systems constitute a fundamental element of effective safety frameworks, providing continuous assessment of experimental parameters and triggering human intervention when predefined thresholds are exceeded. These systems increasingly incorporate predictive analytics to anticipate potential safety issues before they manifest, extending the window for human oversight and intervention.
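One simple form of the predictive analytics mentioned here is to fit a straight line to recent readings and estimate when a parameter will cross its limit, so an operator can be alerted before the threshold is reached rather than after. The sketch below uses an ordinary least-squares slope; the linear-trend assumption, the upper-limit convention, and the warning horizon are illustrative choices, not drawn from the source.

```python
from typing import List, Optional


def time_to_threshold(times: List[float], values: List[float],
                      threshold: float) -> Optional[float]:
    """Estimate time until a linear trend in `values` reaches an upper `threshold`.

    Returns the estimated time remaining after the last sample, 0.0 if the
    threshold is already reached, or None if the trend is flat or moving away.
    """
    n = len(times)
    if n < 2:
        return None
    if values[-1] >= threshold:
        return 0.0
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        return None
    slope = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values)) / sxx
    if slope <= 0:
        return None
    # Project forward from the fitted value at the last timestamp.
    fitted_last = mean_v + slope * (times[-1] - mean_t)
    return max(0.0, (threshold - fitted_last) / slope)


def should_warn(times, values, threshold: float, horizon: float) -> bool:
    """Warn the operator if the limit is predicted within `horizon` time units."""
    eta = time_to_threshold(times, values, threshold)
    return eta is not None and eta <= horizon
```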

Regulatory compliance represents another crucial dimension, with frameworks needing to address domain-specific requirements across pharmaceutical, chemical, and materials science sectors. The FDA's guidance on computer systems validation and the EU's risk-based approach to laboratory automation provide foundational principles that are being extended to accommodate HITL interfaces in autonomous experimentation.
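Regulated laboratories typically need an audit trail that records who did what and when, including human approvals and overrides. One common way to make such a trail tamper-evident is to chain entries by hash, so that editing or deleting a past entry breaks verification of every entry after it. The sketch below shows the idea with SHA-256 from Python's standard library; the entry fields are hypothetical, and hash chaining by itself is not a claim of compliance with any specific FDA or EU requirement.

```python
import hashlib
import json
import time
from typing import Optional


class AuditTrail:
    """Append-only log where each entry stores the hash of its predecessor."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so identical content always hashes the same.
        data = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(data).hexdigest()

    def record(self, actor: str, action: str, detail: str,
               timestamp: Optional[float] = None) -> dict:
        body = {
            "actor": actor,    # e.g. operator ID or "autonomous_agent"
            "action": action,  # e.g. "approve", "override", "parameter_change"
            "detail": detail,
            "timestamp": time.time() if timestamp is None else timestamp,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry = dict(body, hash=self._digest(body))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """False if any entry was altered, reordered, or removed mid-chain."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

Note that truncating the most recent entries is not detected by the chain alone; that usually calls for anchoring the latest hash somewhere outside the system's control.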

Incident response protocols within these frameworks must account for the distributed nature of modern research environments, where the human operator may be geographically separated from the experimental apparatus. This necessitates clear escalation pathways and redundant communication channels to ensure timely human intervention when automated systems detect anomalies.
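Clear escalation pathways can be expressed as an ordered list of contacts, each reached over several redundant channels at once and given an acknowledgement deadline. If nobody acknowledges in time the alert moves to the next tier, and if the chain is exhausted the system falls back to a safe state. The sketch below simulates that policy with an injected notification function; the roles, channels, timeouts, and safe-state fallback are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class EscalationStep:
    contact: str          # hypothetical role, e.g. "on_site_technician"
    channels: List[str]   # redundant channels notified together, e.g. ["app", "sms"]
    ack_timeout_s: float  # how long to wait for an acknowledgement


def escalate(steps: List[EscalationStep],
             try_notify: Callable[[str, str, float], Optional[float]]) -> dict:
    """Walk the escalation chain until someone acknowledges.

    `try_notify(contact, channel, timeout)` is an injected function returning the
    seconds taken to acknowledge, or None if nothing arrived within `timeout`.
    """
    elapsed = 0.0
    for step in steps:
        # All channels for a step are notified at once; the earliest ack wins.
        acks = []
        for channel in step.channels:
            ack_after = try_notify(step.contact, channel, step.ack_timeout_s)
            if ack_after is not None and ack_after <= step.ack_timeout_s:
                acks.append((ack_after, channel))
        if acks:
            ack_after, channel = min(acks)
            return {"acknowledged_by": step.contact, "channel": channel,
                    "elapsed_s": elapsed + ack_after, "action": "handled_by_operator"}
        elapsed += step.ack_timeout_s
    # Nobody responded: the apparatus should enter a predefined safe state.
    return {"acknowledged_by": None, "channel": None,
            "elapsed_s": elapsed, "action": "enter_safe_state"}
```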

Training requirements form an essential component of comprehensive risk management, with operators needing specialized preparation in both the technical aspects of the experimental domain and the human factors considerations of effective oversight. Simulation-based training environments are increasingly utilized to prepare operators for low-frequency, high-consequence scenarios that may require rapid human judgment.

Emerging best practices include the implementation of graduated autonomy models, where systems progressively assume greater independence as safety records are established, while maintaining appropriate human oversight touchpoints. These adaptive frameworks allow organizations to balance innovation velocity with prudent risk management, tailoring the level of human involvement to the specific risk profile of each experimental protocol.
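A graduated autonomy model can be sketched as a level counter: a protocol is promoted one level after a streak of incident-free runs and demoted immediately after an incident. The level names, the promotion streak, and the demote-by-one rule are hypothetical parameters chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical oversight levels, from most to least human involvement.
LEVELS = ["human_approves_each_step", "human_approves_plan",
          "human_monitors", "human_audits"]


@dataclass
class GraduatedAutonomy:
    promote_after: int = 20  # consecutive incident-free runs needed to move up
    level: int = 0           # index into LEVELS; start with maximum oversight
    clean_streak: int = 0

    def record_run(self, incident: bool) -> str:
        """Update the autonomy level after one run and return the new level name."""
        if incident:
            # Any incident removes one level of autonomy and resets the streak.
            self.level = max(0, self.level - 1)
            self.clean_streak = 0
        else:
            self.clean_streak += 1
            if self.clean_streak >= self.promote_after and self.level < len(LEVELS) - 1:
                self.level += 1
                self.clean_streak = 0
        return LEVELS[self.level]
```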

Current safety frameworks typically employ multi-layered approaches, combining technical safeguards with procedural controls. For autonomous experiments with human oversight, these frameworks must be adapted to account for the unique dynamics of intermittent human intervention. Leading organizations have developed risk assessment matrices specifically tailored to HITL systems, categorizing risks based on both severity and the time-sensitivity of required human responses.

Real-time monitoring systems constitute a fundamental element of effective safety frameworks, providing continuous assessment of experimental parameters and triggering human intervention when predefined thresholds are exceeded. These systems increasingly incorporate predictive analytics to anticipate potential safety issues before they manifest, extending the window for human oversight and intervention.

Regulatory compliance represents another crucial dimension, with frameworks needing to address domain-specific requirements across pharmaceutical, chemical, and materials science sectors. The FDA's guidance on computer systems validation and the EU's risk-based approach to laboratory automation provide foundational principles that are being extended to accommodate HITL interfaces in autonomous experimentation.

Incident response protocols within these frameworks must account for the distributed nature of modern research environments, where the human operator may be geographically separated from the experimental apparatus. This necessitates clear escalation pathways and redundant communication channels to ensure timely human intervention when automated systems detect anomalies.

Training requirements form an essential component of comprehensive risk management, with operators needing specialized preparation in both the technical aspects of the experimental domain and the human factors considerations of effective oversight. Simulation-based training environments are increasingly utilized to prepare operators for low-frequency, high-consequence scenarios that may require rapid human judgment.

Emerging best practices include the implementation of graduated autonomy models, where systems progressively assume greater independence as safety records are established, while maintaining appropriate human oversight touchpoints. These adaptive frameworks allow organizations to balance innovation velocity with prudent risk management, tailoring the level of human involvement to the specific risk profile of each experimental protocol.
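A graduated autonomy model can be sketched as a small state machine. The ladder of levels, the promotion rule (a streak of incident-free runs), and the demotion rule (drop one level on any incident) are assumptions made for illustration. The per-protocol `max_level` ceiling reflects the idea of tailoring human involvement to each protocol's risk profile.

```python
from dataclasses import dataclass

# Hypothetical autonomy ladder, from most to least human involvement.
LEVELS = ["human-approves-every-step", "human-approves-batches",
          "human-monitors", "exception-only"]


@dataclass
class AutonomyManager:
    runs_to_promote: int = 20          # consecutive incident-free runs needed to move up
    max_level: int = len(LEVELS) - 1   # ceiling set by the protocol's risk profile
    level: int = 0
    clean_runs: int = 0

    def record_run(self, had_incident: bool) -> str:
        if had_incident:
            # Any incident drops autonomy one level and resets the streak.
            self.level = max(0, self.level - 1)
            self.clean_runs = 0
        else:
            self.clean_runs += 1
            if self.clean_runs >= self.runs_to_promote and self.level < self.max_level:
                self.level += 1
                self.clean_runs = 0
        return LEVELS[self.level]
```

A high-risk protocol might be capped at `max_level=1`, so that a human always approves at least batches of steps regardless of how long the safety record becomes.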

Human Factors in Autonomous System Design

The integration of human factors into autonomous system design represents a critical dimension in the development of effective Human-In-The-Loop interfaces for experimental oversight. When designing these interfaces, understanding human cognitive capabilities and limitations becomes paramount to ensure optimal interaction between human operators and autonomous systems.

Human perception and information-processing capacities must be carefully considered in interface design. Research on working-memory limits suggests that operators can reliably track only a small number of concurrent information streams (figures of roughly five to seven are often cited), and that adding streams beyond this point leads to cognitive overload. Interfaces should therefore prioritize critical information and use visualization techniques that align with human perceptual strengths, such as pattern recognition and anomaly detection.
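One way to enforce a limit on concurrently displayed streams is to rank streams and collapse the remainder into an overflow panel. In this sketch, streams in an alarm state always take precedence, and the remainder are ranked by a priority score; the priority values, stream names, and the default cap are hypothetical, and the five-to-seven figure quoted above is treated as a rough guide rather than a hard limit.

```python
from typing import Dict, List, Set, Tuple


def select_streams(priorities: Dict[str, float],
                   alarms: Set[str],
                   cap: int = 5) -> Tuple[List[str], List[str]]:
    """Split streams into a primary display (at most `cap`) and an overflow panel.

    Sort key: alarmed streams first, then higher priority, then name for a stable order.
    """
    ranked = sorted(priorities, key=lambda s: (s not in alarms, -priorities[s], s))
    return ranked[:cap], ranked[cap:]


primary, overflow = select_streams(
    {"temp": 0.9, "pressure": 0.95, "pH": 0.5, "flow": 0.7,
     "camera": 0.3, "humidity": 0.2, "log": 0.1},
    alarms={"humidity"},
    cap=3,
)
print(primary)   # ['humidity', 'pressure', 'temp']
print(overflow)  # ['flow', 'pH', 'camera', 'log']
```

Promoting alarmed streams regardless of their baseline priority keeps a normally unimportant signal from being hidden at exactly the moment it matters.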

Decision-making frameworks within these interfaces should account for known cognitive biases that affect human judgment. Confirmation bias, availability heuristic, and automation bias can significantly impact how operators interpret and respond to system recommendations. Well-designed interfaces incorporate debiasing mechanisms, such as presenting alternative hypotheses or explicitly highlighting uncertainty in autonomous system predictions.
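A simple debiasing presentation shows the runners-up and their probabilities next to the top recommendation, and flags cases where the margin is small. The sketch assumes the autonomous system outputs a probability per hypothesis; the 0.15 margin threshold and the wording of the flag are illustrative assumptions.

```python
from typing import Dict


def format_recommendation(hypotheses: Dict[str, float],
                          top_k: int = 3,
                          low_margin: float = 0.15) -> str:
    """Render the top hypothesis together with competing alternatives.

    `hypotheses` maps hypothesis -> model probability. Listing alternatives and
    flagging a narrow lead are meant to counter confirmation and automation bias.
    """
    ranked = sorted(hypotheses.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
    best, p_best = ranked[0]
    lines = [f"Recommended: {best} (p = {p_best:.2f})"]
    for name, p in ranked[1:]:
        lines.append(f"  Alternative: {name} (p = {p:.2f})")
    if len(ranked) > 1 and p_best - ranked[1][1] < low_margin:
        lines.append("  Low margin between top hypotheses: independent review advised.")
    return "\n".join(lines)


print(format_recommendation({"catalyst deactivation": 0.45, "sensor drift": 0.35,
                             "contamination": 0.15, "other": 0.05}))
```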

Mental workload management represents another crucial consideration. The interface must dynamically adjust information density based on situational demands, preventing both cognitive overload during critical events and underload during routine operations. Adaptive automation that shifts control responsibilities between human and machine based on workload measurements has shown promising results in maintaining optimal operator engagement.
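Adaptive automation driven by a workload measurement can be sketched as a level-of-automation controller with hysteresis. The workload estimate is assumed to be normalized to 0-1 and to come from some external source (task counts, response latency, or physiological sensing); the four-level scale and both thresholds are illustrative. Using separate thresholds for raising and lowering automation avoids rapid back-and-forth switching when workload hovers near a single cut-off.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveAutomation:
    """Shift tasks between operator and machine based on a workload estimate (0-1)."""
    high: float = 0.8   # above this, automation takes on more tasks (prevents overload)
    low: float = 0.3    # below this, tasks return to the operator (prevents underload)
    level: int = 2      # 1 = mostly manual ... 4 = mostly automated
    min_level: int = 1
    max_level: int = 4

    def update(self, workload: float) -> int:
        if workload > self.high and self.level < self.max_level:
            self.level += 1
        elif workload < self.low and self.level > self.min_level:
            self.level -= 1
        # Workload between `low` and `high` leaves the current allocation unchanged.
        return self.level


controller = AdaptiveAutomation()
print([controller.update(w) for w in [0.9, 0.9, 0.5, 0.2, 0.2, 0.2]])  # [3, 4, 4, 3, 2, 1]
```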

Trust calibration mechanisms are essential for effective human-machine collaboration. Interfaces should provide appropriate transparency into the autonomous system's decision-making, allowing operators to develop accurate mental models of its capabilities and limitations. Research indicates that explanatory features communicating system confidence levels and reasoning processes help operators calibrate their trust and improve their intervention decisions.
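Displayed confidence only supports trust calibration if it matches how often the system is actually right. One way to give operators that transparency is a reliability table that groups past recommendations by the confidence the system showed and reports the observed accuracy in each band. The history format and the five equal-width bands are assumptions for this sketch.

```python
from typing import List, Tuple


def reliability_table(history: List[Tuple[float, bool]],
                      n_bins: int = 5) -> List[Tuple[str, int, float]]:
    """Summarize past recommendations by stated confidence band.

    Each history item is (confidence shown to the operator, whether the
    recommendation turned out correct). Returns (band label, count, observed
    accuracy) for every non-empty band, so operators can check whether
    "0.9 confidence" really means right about 90% of the time.
    """
    bins: List[List[bool]] = [[] for _ in range(n_bins)]
    for conf, correct in history:
        idx = min(int(conf * n_bins), n_bins - 1)  # confidence of 1.0 goes in the top band
        bins[idx].append(correct)
    rows = []
    for i, outcomes in enumerate(bins):
        if outcomes:
            label = f"{i / n_bins:.1f}-{(i + 1) / n_bins:.1f}"
            rows.append((label, len(outcomes), sum(outcomes) / len(outcomes)))
    return rows


history = [(0.95, True), (0.92, True), (0.85, False), (0.55, True), (0.45, False)]
for row in reliability_table(history):
    print(row)
```

In this toy history, recommendations shown with 0.8-1.0 confidence were correct only two times out of three, a gap an operator could see directly.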

Skill maintenance presents a particular challenge in highly automated environments. As autonomous systems handle routine operations, human operators may experience skill degradation, reducing their effectiveness during manual intervention scenarios. Interface designs must incorporate periodic skill reinforcement opportunities through simulation exercises or guided manual operation sessions to maintain operator proficiency for critical oversight functions.
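Periodic skill reinforcement can be scheduled by tracking when each operator last practiced each critical manual procedure. The sketch below assumes a record of last hands-on practice dates, a list of required procedures, and a 30-day maximum gap; all of these, including the procedure names, are hypothetical values chosen for illustration.

```python
from datetime import date
from typing import Dict, List, Tuple


def drills_due(last_practice: Dict[str, Dict[str, date]],
               required: List[str],
               today: date,
               max_gap_days: int = 30) -> List[Tuple[str, str]]:
    """Return (operator, procedure) pairs that need a simulation or guided manual session.

    A drill is due if the procedure was never practiced or if the last practice
    is more than `max_gap_days` days old.
    """
    due = []
    for operator, history in sorted(last_practice.items()):
        for procedure in required:
            last = history.get(procedure)
            if last is None or (today - last).days > max_gap_days:
                due.append((operator, procedure))
    return due


records = {
    "alice": {"emergency shutdown": date(2025, 6, 1), "manual sampling": date(2025, 8, 20)},
    "bob": {"emergency shutdown": date(2025, 8, 1)},
}
print(drills_due(records, ["emergency shutdown", "manual sampling"], today=date(2025, 8, 29)))
```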

Cross-cultural considerations also impact interface effectiveness. Research shows significant variations in automation trust levels and information processing preferences across different cultural contexts. Interfaces designed for global deployment must accommodate these differences through customizable information presentation formats and adjustable automation levels to ensure consistent effectiveness across diverse user populations.
