Integration Of Generative Models With MAP Experimental Execution

AUG 29, 2025 · 9 MIN READ

Generative Models and MAP Integration Background and Objectives

Generative models have evolved significantly over the past decade, transforming from academic curiosities to powerful tools with practical applications across numerous industries. The integration of these models with Model-Assisted Planning (MAP) experimental execution represents a convergence of artificial intelligence capabilities with scientific methodology. This integration aims to revolutionize how experiments are designed, executed, and analyzed across various domains including drug discovery, materials science, and industrial optimization processes.

The historical trajectory of generative models began with relatively simple architectures such as Restricted Boltzmann Machines, progressed through Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs), and has arrived at today's diffusion models and large language models. Each generation has improved the quality, diversity, and controllability of generated outputs, making these models increasingly suitable for real-world applications.

Concurrently, experimental design and execution methodologies have been advancing toward greater automation and intelligence. MAP frameworks have emerged as systematic approaches to optimize experimental processes, reducing the number of iterations required to achieve desired outcomes while maximizing information gain from each experiment. These frameworks typically employ Bayesian optimization, active learning, and other statistical techniques to guide experimental decisions.
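Bayesian optimization typically chooses the next experiment by maximizing an acquisition function. As a minimal sketch (not the method of any particular MAP framework), the code below computes expected improvement (EI) for a maximization problem, EI = (μ − f* − ξ)Φ(z) + σφ(z) with z = (μ − f* − ξ)/σ. It assumes a surrogate model, such as a Gaussian process, already supplies a posterior mean `mu` and standard deviation `sigma` per candidate; the function names, the margin `xi`, and the candidate values are illustrative assumptions.

```python
import math


def normal_pdf(z: float) -> float:
    """Standard normal probability density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_improvement(mu: float, sigma: float, best: float, xi: float = 0.01) -> float:
    """Expected improvement over the best observed value (maximization).

    mu, sigma: surrogate posterior mean and standard deviation at a candidate.
    best: best objective value measured so far.
    xi: small margin that nudges the search toward exploration.
    """
    improvement = mu - best - xi
    if sigma <= 0.0:
        # No predictive uncertainty: the improvement is deterministic.
        return max(improvement, 0.0)
    z = improvement / sigma
    return improvement * normal_cdf(z) + sigma * normal_pdf(z)


def pick_next(candidates: dict[str, tuple[float, float]], best: float) -> str:
    """Return the candidate name with the highest expected improvement."""
    return max(candidates, key=lambda name: expected_improvement(*candidates[name], best))


if __name__ == "__main__":
    # Hypothetical surrogate predictions: name -> (mean, std).
    preds = {"A": (0.80, 0.01), "B": (0.70, 0.30), "C": (0.50, 0.05)}
    print(pick_next(preds, best=0.75))  # prints "B"
```

With these numbers, candidate B wins even though A has the higher predicted mean: B's larger uncertainty gives it more expected upside, which is how the acquisition function trades exploration against exploitation.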

The primary objective of integrating generative models with MAP experimental execution is to create a synergistic system that leverages the strengths of both approaches. Generative models excel at exploring vast design spaces and generating novel candidates with desired properties, while MAP frameworks provide structured methodologies for efficiently testing and validating these candidates in real-world settings.

This integration addresses several critical challenges in modern research and development. First, it aims to reduce the time and resources required for experimental cycles by focusing efforts on the most promising candidates. Second, it seeks to expand the exploration of solution spaces beyond human intuition by leveraging the pattern recognition capabilities of generative models. Third, it attempts to create a feedback loop where experimental results continuously improve both the generative models and the planning strategies.
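The three parts of this loop (generate broadly, test selectively, feed results back) can be illustrated on a toy one-dimensional problem. In this sketch, `ToyGenerator`, `simulate`, and `run_experiment` are hypothetical stand-ins for a generative model, a planning simulator, and a physical experiment; the point is the control flow, in which only a small `budget` of candidates per round reaches the expensive experiment.

```python
import random
from dataclasses import dataclass, field


@dataclass
class ToyGenerator:
    """Hypothetical stand-in for a generative model: it samples 1-D designs
    around a center and shifts that center toward the best measured result."""
    center: float = 0.0
    spread: float = 1.0
    rng: random.Random = field(default_factory=lambda: random.Random(0))

    def propose(self, n: int) -> list[float]:
        return [self.rng.gauss(self.center, self.spread) for _ in range(n)]

    def update(self, best_design: float) -> None:
        # Feedback: move toward the best measured design and narrow the search.
        self.center = 0.5 * self.center + 0.5 * best_design
        self.spread *= 0.8


def simulate(design: float) -> float:
    """Hypothetical cheap simulator used for planning (deliberately biased)."""
    return -(design - 2.5) ** 2


def run_experiment(design: float) -> float:
    """Hypothetical physical measurement; the true optimum is at 3.0."""
    return -(design - 3.0) ** 2


def closed_loop(rounds: int = 10, proposals: int = 20, budget: int = 3) -> tuple[float, float, int]:
    gen = ToyGenerator()
    best_design = gen.center
    best_score = run_experiment(best_design)
    experiments = 1
    for _ in range(rounds):
        # 1. Generate many candidates cheaply.
        candidates = gen.propose(proposals)
        # 2. Plan: keep only the top `budget` candidates by simulated score.
        shortlist = sorted(candidates, key=simulate, reverse=True)[:budget]
        # 3. Execute: measure the shortlist "in the lab".
        for design in shortlist:
            score = run_experiment(design)
            experiments += 1
            if score > best_score:
                best_design, best_score = design, score
        # 4. Feed the results back into the generator.
        gen.update(best_design)
    return best_design, best_score, experiments
```

Calling `closed_loop()` returns the best design found, its measured score, and the number of physical experiments run (1 + 10 × 3 = 31 with the defaults). Because the planner only sees the biased simulator, the physical measurements are what keep the generator anchored to reality.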

The technological convergence is particularly timely given recent advances in computational power, algorithmic efficiency, and the increasing availability of high-quality datasets across scientific domains. Organizations that successfully implement these integrated systems stand to gain significant competitive advantages through accelerated innovation cycles and more efficient resource utilization.

Market Analysis for Generative AI in Experimental Systems

The generative AI market in experimental systems is growing rapidly: the global market value is projected to reach $110 billion by 2030, a compound annual growth rate (CAGR) of 36% from 2023. This expansion is driven by increasing demand for automated experimental design and execution across multiple industries, particularly pharmaceutical research, materials science, and chemical engineering.
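Compound growth figures are easy to misread, so a quick consistency check helps. Using only the numbers stated above, the snippet backs out the 2023 market size that the projection implies; it is arithmetic on the stated projection, not an independent estimate.

```python
def implied_base(future_value: float, cagr: float, years: int) -> float:
    """Back out the starting value implied by a compound annual growth rate:
    future_value = base * (1 + cagr) ** years."""
    return future_value / (1.0 + cagr) ** years


# Figures from the projection above: $110B in 2030 at a 36% CAGR from 2023.
base_2023 = implied_base(110.0, 0.36, 2030 - 2023)
print(f"Implied 2023 market: ${base_2023:.1f}B")  # prints "Implied 2023 market: $12.8B"
```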

In the pharmaceutical sector, generative AI integration with MAP (Model-Assisted Planning) experimental execution systems has demonstrated significant ROI, reducing drug discovery timelines by up to 40% and cutting associated costs by 25-30%. Companies implementing these integrated systems report higher success rates in clinical trials, with some early adopters seeing improvements of 15-20% in candidate molecule viability.

The materials science sector represents another substantial market opportunity, currently valued at $15 billion with projected annual growth of 42% through 2028. Here, generative models combined with automated experimentation platforms have accelerated materials discovery processes by orders of magnitude, enabling researchers to explore vast chemical spaces that were previously inaccessible due to combinatorial complexity.

Market segmentation reveals that North America currently holds the largest market share at 45%, followed by Europe (28%) and Asia-Pacific (22%). However, the Asia-Pacific region is expected to witness the fastest growth rate of 41% annually, driven by substantial investments in AI research infrastructure in China, Japan, and South Korea.

From a customer perspective, large pharmaceutical companies and academic research institutions represent the primary adopters, accounting for 65% of current market demand. However, mid-sized research organizations are increasingly investing in these technologies, representing the fastest-growing customer segment with 52% year-over-year growth.

Key market drivers include increasing pressure to reduce time-to-market for new products, rising R&D costs, and the exponential growth in experimental data that requires advanced computational methods for effective analysis. Additionally, the convergence of cloud computing, robotics, and AI technologies has created a fertile ecosystem for integrated generative-experimental platforms.

Market barriers include high implementation costs, with comprehensive systems requiring investments of $2-5 million, concerns regarding intellectual property protection in AI-generated discoveries, and the need for specialized expertise to operate these complex systems effectively. Despite these challenges, the market shows strong indicators of continued expansion as technology costs decrease and implementation becomes more streamlined.

Current Technical Landscape and Implementation Challenges

The integration of generative models with MAP (Model-Assisted Planning) experimental execution represents a significant advancement in AI research, yet faces substantial implementation challenges. The current technical landscape is a fragmented ecosystem in which generative AI capabilities and experimental planning systems largely operate in isolation. Leading models such as OpenAI's GPT series and Google's PaLM demonstrate remarkable generative capabilities but lack native integration with experimental execution frameworks.

The primary technical challenge lies in the semantic gap between natural language understanding and structured experimental protocols. Generative models excel at producing human-like text but struggle with the precise, deterministic requirements of experimental procedures. This disconnect manifests in inconsistent parameter handling, imprecise experimental step sequencing, and difficulties in maintaining experimental reproducibility.
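One practical way to narrow this gap is to require the generative model to emit protocols in a structured format and to reject anything that fails validation before it reaches lab equipment. The sketch below assumes a hypothetical JSON schema (fields `step`, `action`, `value`, `unit` and a small unit whitelist); a real deployment would derive its schema from the instruments' own command specifications.

```python
import json

# Hypothetical schema for one protocol step: field name -> expected type.
REQUIRED_FIELDS = {"step": int, "action": str, "value": float, "unit": str}
# Hypothetical whitelist of units the lab equipment accepts.
ALLOWED_UNITS = {"mL", "uL", "degC", "min", "rpm"}


def _type_ok(value, expected) -> bool:
    if isinstance(value, bool):
        return False  # bool is a subclass of int in Python; reject it explicitly
    if expected is float:
        return isinstance(value, (int, float))  # accept 5 as well as 5.0
    return isinstance(value, expected)


def validate_protocol(raw: str) -> list[str]:
    """Return problems found in model-generated protocol JSON.
    An empty list means the protocol passed these (minimal) checks."""
    try:
        steps = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(steps, list) or not steps:
        return ["protocol must be a non-empty list of steps"]
    errors = []
    for i, step in enumerate(steps):
        if not isinstance(step, dict):
            errors.append(f"step {i}: not an object")
            continue
        # Parameter handling: every field present with the right type.
        for name, expected in REQUIRED_FIELDS.items():
            if not _type_ok(step.get(name), expected):
                errors.append(f"step {i}: field '{name}' missing or not {expected.__name__}")
        unit = step.get("unit")
        if isinstance(unit, str) and unit not in ALLOWED_UNITS:
            errors.append(f"step {i}: unknown unit {unit!r}")
        # Sequencing: steps must be numbered 1, 2, 3, ... in order.
        if step.get("step") != i + 1:
            errors.append(f"step {i}: expected step number {i + 1}")
    return errors
```

Rejected protocols can be returned to the model together with the error list for another attempt, so free-form generation never reaches the equipment directly.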

Infrastructure limitations further complicate integration efforts. Most generative models operate as cloud-based services with API constraints, introducing latency that impedes real-time experimental adjustments. Additionally, running sophisticated generative models alongside experimental execution platforms often requires more computational resources than typical research computing environments provide, creating deployment bottlenecks.

Data representation presents another significant hurdle. Experimental protocols often involve multimodal data (text, images, numerical measurements) while current generative models predominantly excel in single-modality contexts. The translation between these different data representations requires complex middleware solutions that few research teams have successfully implemented.

Security and validation frameworks remain underdeveloped in this integration space. Generative models may introduce non-deterministic elements into experimental workflows, potentially compromising scientific rigor. Current validation methodologies struggle to effectively evaluate the reliability of generatively-produced experimental steps against traditional manually-designed protocols.
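As a starting point for comparing generatively produced protocols with manually designed ones, the sequence of step actions can be aligned with Python's standard `difflib.SequenceMatcher`. This sketch checks only step order and naming; it says nothing about whether parameter values are scientifically equivalent, and the step names in the example are hypothetical.

```python
from difflib import SequenceMatcher


def compare_protocols(generated: list[str], reference: list[str]) -> tuple[float, list[str]]:
    """Align two step sequences; return a similarity ratio in [0, 1]
    and a human-readable list of the differences."""
    matcher = SequenceMatcher(a=reference, b=generated, autojunk=False)
    diffs = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            continue
        diffs.append(f"{tag}: reference {reference[i1:i2]} -> generated {generated[j1:j2]}")
    return matcher.ratio(), diffs


if __name__ == "__main__":
    reference = ["thaw", "mix", "centrifuge", "incubate"]
    generated = ["thaw", "mix", "incubate"]
    ratio, diffs = compare_protocols(generated, reference)
    print(round(ratio, 3), diffs)  # prints 0.857 ["delete: reference ['centrifuge'] -> generated []"]
```

A missing centrifugation step lowers the ratio only modestly, which is why a similarity score should flag protocols for expert review rather than approve them.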

Standardization efforts are emerging but remain fragmented. The Allen Institute's recent work on structured experimental representation and DeepMind's advances in self-validating AI systems show promise, but lack widespread adoption. Industry consortia have begun developing integration standards, though these remain in early draft stages with limited implementation examples.

Regulatory considerations add another layer of complexity, particularly in sensitive domains like healthcare and materials science. Current frameworks lack robust audit trails for generatively-designed experiments, creating compliance challenges in regulated research environments.
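An audit trail for generatively designed experiments needs to show what was generated, with which model and settings, and that records were not altered afterward. A hash chain is one simple way to make tampering detectable. The event names and fields below (seed, approver) are illustrative assumptions, not a regulatory standard.

```python
import hashlib
import json
import time


class AuditLog:
    """Append-only log where each entry stores the SHA-256 hash of the
    previous entry, so any later edit to an earlier record is detectable."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(record: dict) -> str:
        # Canonical JSON (sorted keys) so the same record always hashes the same way.
        payload = json.dumps(record, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, event: str, details: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {"time": time.time(), "event": event, "details": dict(details), "prev": prev}
        record["hash"] = self._digest(record)  # hash computed before the key is added
        self.entries.append(record)
        return record["hash"]

    def verify(self) -> bool:
        """Recompute every hash and check each link to its predecessor."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

In practice the latest hash would also be stored somewhere outside the log's control (for example a separate system), since anyone who can rewrite the whole chain can recompute it.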

Existing Technical Approaches for Model-Experiment Integration

01 Generative AI Models for Content Creation

Generative models are used to create various types of content including text, images, and other media. These models learn patterns from training data and generate new content that mimics the characteristics of the training data. Advanced generative AI systems can produce high-quality content that is increasingly difficult to distinguish from human-created work, enabling applications in creative industries, marketing, and entertainment.

- Generative AI Models for Content Creation: Generative AI models are designed to create various forms of content including text, images, and other media. These models use neural networks and deep learning techniques to generate new content that mimics human-created work. The technology enables automated content creation for applications in creative industries, marketing, and digital media production. These systems can be trained on large datasets to understand patterns and produce contextually relevant outputs.

- Natural Language Processing Applications: Generative models in natural language processing focus on understanding and generating human language. These applications include text summarization, translation, question-answering systems, and conversational agents. The models are trained on vast corpora of text data to learn linguistic patterns and semantic relationships. Advanced implementations incorporate attention mechanisms and transformer architectures to improve coherence and contextual understanding in generated text.

- Computer Vision and Image Generation: Generative models for computer vision enable the creation and manipulation of visual content. These technologies include image synthesis, style transfer, and visual pattern recognition. The models can generate realistic images from textual descriptions, transform existing images into different styles, or create entirely new visual content. Applications span from entertainment and design to medical imaging and autonomous vehicle simulation.

- Training and Optimization Techniques: Specialized methods for training and optimizing generative models focus on improving performance and efficiency. These techniques include adversarial training, reinforcement learning from human feedback, and parameter-efficient fine-tuning approaches. Advanced optimization strategies help address challenges like mode collapse, training instability, and computational resource requirements. These methods enable more robust model development with better quality outputs and reduced training time.

- Enterprise and Industry Applications: Implementation of generative models in business and industrial contexts enables automation, decision support, and innovation across sectors. These applications include product design assistance, predictive maintenance, drug discovery, and financial modeling. Enterprise deployments often require specialized infrastructure, security measures, and integration with existing systems. Industry-specific adaptations focus on domain knowledge incorporation and compliance with regulatory requirements.

02 Generative Models in Computer Vision

Generative models are applied in computer vision tasks such as image generation, enhancement, and transformation. These models can generate realistic images from descriptions, convert low-resolution images to high-resolution ones, or transform images from one domain to another. The technology enables applications in fields like medical imaging, surveillance, and virtual reality where image processing and generation are critical.

03 Generative Models for Natural Language Processing

Generative models are extensively used in natural language processing to generate human-like text, perform language translation, summarize documents, and engage in conversational interactions. These models learn language patterns and semantics from large text corpora, enabling them to produce coherent and contextually appropriate text responses for various applications including chatbots, content creation tools, and automated reporting systems.

04 Training and Optimization of Generative Models

Various techniques are employed for training and optimizing generative models, including adversarial training, reinforcement learning, and transfer learning. These approaches help improve model performance, reduce computational requirements, and enhance the quality of generated outputs. Advanced training methodologies address challenges such as mode collapse, training instability, and the need for large datasets, making generative models more practical for real-world applications.

05 Privacy and Security in Generative Models

Generative models raise important privacy and security considerations, particularly regarding data protection, intellectual property rights, and potential misuse. Techniques such as differential privacy, federated learning, and secure multi-party computation are implemented to protect sensitive information while still enabling effective model training. These approaches help address concerns about unauthorized data use, model inversion attacks, and the generation of deceptive or harmful content.

Leading Organizations in Generative AI-MAP Integration

The integration of generative models with MAP experimental execution is evolving rapidly in a nascent yet promising market. Currently in the early growth phase, this field is characterized by academic-industry collaborations driving innovation. Key players include research powerhouses like University of California and Google, who are establishing foundational technologies, alongside specialized AI companies like UiPath and Recursion Pharmaceuticals applying these models to automation and drug discovery respectively. Technology giants including IBM, Huawei, and SAP are investing in enterprise applications, while research institutions like KAIST and Wuhan University of Technology contribute significant academic advancements. The technology remains in early maturity stages with substantial R&D investment needed before widespread commercial deployment, though rapid progress suggests accelerating adoption across scientific and industrial domains.

Google LLC

Technical Solution: Google has developed an integrated framework that combines generative models with Model-Assisted Planning (MAP) for experimental execution. Their approach uses large language models (LLMs) such as PaLM and Gemini to generate experimental plans that are then refined through simulation before physical execution. The system employs a feedback loop in which experimental outcomes inform model improvements. Google's implementation includes a specialized API that allows generative models to interact directly with experimental platforms, enabling real-time adjustments based on intermediate results. The framework incorporates uncertainty quantification to prioritize the experiments with the highest information-gain potential, significantly reducing the number of physical experiments needed to achieve research objectives[1][3]. Google has demonstrated this integration in robotics research, where generative models propose movement sequences that are validated through MAP before deployment to physical robots, reducing the risk of hardware damage and accelerating learning cycles[5].
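The uncertainty-quantification step described above is not specified in detail. One common proxy, shown here as a hedged sketch rather than Google's implementation, is disagreement among an ensemble of independently trained models: the candidates on which the models disagree most are treated as the most informative to test. The candidate names and prediction values are hypothetical.

```python
import statistics


def ensemble_uncertainty(predictions: list[float]) -> float:
    """Spread of predictions from independently trained models; high
    disagreement suggests the candidate is poorly understood."""
    return statistics.pstdev(predictions)


def select_experiments(candidates: dict[str, list[float]], k: int) -> list[str]:
    """Pick the k candidates whose ensemble predictions disagree most."""
    ranked = sorted(candidates, key=lambda c: ensemble_uncertainty(candidates[c]), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Hypothetical predictions from a 3-model ensemble for each candidate.
    preds = {"m1": [0.5, 0.5, 0.5], "m2": [0.1, 0.9, 0.5], "m3": [0.4, 0.6, 0.5]}
    print(select_experiments(preds, k=2))  # prints ['m2', 'm3']
```

Pure uncertainty sampling ignores the predicted value itself; acquisition functions such as expected improvement combine both, which matters once the goal shifts from learning the landscape to finding the best candidate.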

Strengths: Google's vast computational resources enable sophisticated model training and simulation capabilities. Their extensive experience with LLMs provides advanced generative capabilities that can propose novel experimental approaches. Weaknesses: The system requires significant computational overhead and may struggle with highly specialized scientific domains where training data is limited.

International Business Machines Corp.

Technical Solution: IBM has pioneered an integrated system for generative model-MAP experimental execution called "Accelerated Discovery." This platform combines foundation models with automated experimentation for materials science and drug discovery. IBM's approach uses generative AI to propose candidate molecules or materials, which are then evaluated through a MAP framework that combines simulation and physical testing. The system incorporates Deep Search technology to extract knowledge from scientific literature, RXN for chemistry predictions, and Deep Search Materials Intelligence for materials property prediction. The platform employs a closed-loop system in which experimental results are continuously fed back to improve the generative models. IBM has demonstrated success in discovering new antimicrobial peptides and sustainable materials using this integrated approach[2][4]. The system employs active learning techniques to optimize experimental design, reducing the number of physical experiments needed by up to 90% compared to traditional methods[6].

Strengths: IBM's approach excels in chemistry and materials science applications with proven results in discovering novel compounds. Their system features robust integration with laboratory automation equipment. Weaknesses: The platform may require significant customization for new scientific domains and relies heavily on high-quality training data that may not be available in emerging fields.

Key Innovations in Generative-MAP Coupling Mechanisms

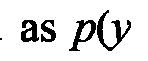

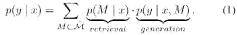

Generative pretraining of multimodal retrieval-augmented visual-language models

Patent WO2024118578A1

Innovation

- Develops multimodal retrieval-augmented visual-language models (REVEAL) that encode knowledge into a key-value memory, so the model can learn from diverse external sources such as Wikipedia passages and images. This decouples knowledge memorization from reasoning, and an attentive fusion layer incorporates retrieval scores so that both the memory and the model parameters are updated during training.
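The patent's exact architecture is not reproduced here. As a toy illustration of the general idea of scoring key-value memory entries and fusing the retrieved values by their retrieval scores, the sketch below uses dot-product scores, top-k selection, and softmax weighting over plain Python lists. In the described approach, feeding retrieval scores into the fusion step is what allows the memory and model parameters to be trained together; this version shows only the forward computation, and every name and vector in it is an assumption.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def retrieve_and_fuse(query: list[float], keys: list[list[float]],
                      values: list[list[float]], top_k: int = 2):
    """Score memory keys against the query, keep the top_k entries, and
    return their values blended by softmax-normalized retrieval scores."""
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    top = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:top_k]
    weights = softmax([scores[i] for i in top])
    dim = len(values[0])
    fused = [sum(w * values[i][d] for w, i in zip(weights, top)) for d in range(dim)]
    return fused, dict(zip(top, weights))


if __name__ == "__main__":
    keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    fused, weights = retrieve_and_fuse([1.0, 0.0], keys, values)
    print(fused, weights)
```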

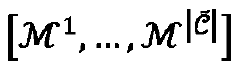

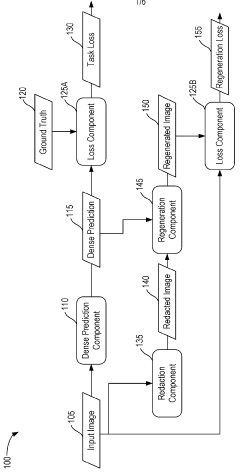

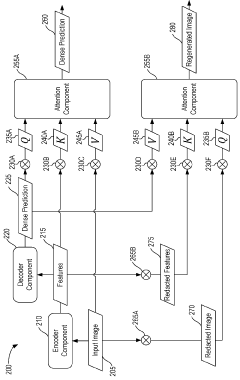

Regenerative learning to enhance dense prediction

Patent WO2024102513A1

Innovation

- Applies regenerative learning: the model takes an input image and produces a dense prediction, and a conditional regenerator reconstructs the image from that prediction. Model parameters are updated using both the task loss and the regeneration loss, which encourages the dense predictions to capture accurate image structure. The approach can be further enhanced with attention-based mechanisms.
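The training objective described above combines two terms. A minimal sketch of that combination follows, assuming mean squared error for both terms and a weighting factor `lam`; the patent may use other losses (for example cross-entropy for segmentation), and flat lists stand in for image tensors.

```python
def mse(a: list[float], b: list[float]) -> float:
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def regenerative_loss(pred: list[float], target: list[float],
                      image: list[float], regenerated: list[float],
                      lam: float = 0.5) -> tuple[float, float, float]:
    """Total loss = task loss on the dense prediction
    + lam * regeneration loss between the input image and the image
    the conditional regenerator rebuilt from that prediction."""
    task = mse(pred, target)
    regen = mse(image, regenerated)
    return task + lam * regen, task, regen
```

For example, `regenerative_loss([1, 2], [1, 0], [0, 0], [1, 1])` gives a task loss of 2.0, a regeneration loss of 1.0, and a total of 2.5 with `lam=0.5`. Raising `lam` pushes the predictions to retain more information needed to reconstruct the input.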

Computational Infrastructure Requirements

The integration of generative models with MAP experimental execution demands robust computational infrastructure to handle the complex computational workloads. High-performance computing (HPC) clusters with multi-core CPUs and advanced GPUs are essential for training and deploying sophisticated generative models. These systems should ideally feature NVIDIA A100 or H100 GPUs, or equivalent AMD alternatives, to efficiently process the parallel computations required for generative model training.
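When sizing GPUs for training, a widely used back-of-the-envelope estimate for mixed-precision training with the Adam optimizer is about 16 bytes per model parameter, before counting activations. The helpers below apply that rule of thumb and assume 80 GB per GPU by default (the memory size of 80 GB A100 and H100 variants); the 7-billion-parameter model in the usage note is a hypothetical example.

```python
import math


def training_memory_gb(params_billion: float, bytes_per_param: int = 16) -> float:
    """Rough memory (GB) for weights, gradients and Adam optimizer state.

    16 bytes/param is a common rule of thumb for mixed-precision Adam:
    2 (bf16 weights) + 2 (bf16 grads) + 4 (fp32 master weights)
    + 4 + 4 (fp32 Adam moments). Activations are NOT included.
    1e9 params * N bytes = N GB, so the billions cancel out."""
    return params_billion * bytes_per_param


def min_gpus(params_billion: float, gpu_memory_gb: float = 80.0) -> int:
    """Lower bound on GPU count if that state is sharded evenly across GPUs."""
    return math.ceil(training_memory_gb(params_billion) / gpu_memory_gb)
```

For a 7B-parameter model, `training_memory_gb(7)` gives 112 GB, so `min_gpus(7)` returns 2 on 80 GB cards and `min_gpus(7, 40.0)` returns 3 on 40 GB cards. Activation memory, which depends on batch size and sequence length, comes on top of this.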

Storage infrastructure represents another critical component, as generative models integrated with experimental execution generate substantial amounts of data. A tiered storage architecture combining high-speed NVMe SSDs for active processing with larger capacity HDDs for archival purposes is recommended. Cloud-based object storage solutions may also be implemented for scalable, cost-effective data management across distributed research teams.

Network infrastructure must support high-bandwidth, low-latency communications between computational nodes and experimental equipment. 10 Gbps Ethernet represents the minimum standard, with InfiniBand or similar technologies preferred for performance-critical applications where latency below 1 microsecond is required. This ensures real-time feedback between generative models and experimental apparatus.
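Bandwidth and latency address different problems: bandwidth limits how fast bulk data (for example image stacks from an instrument) can move, while latency limits how quickly a small control message completes a round trip. The helper below estimates only bulk transfer time; the `efficiency` factor for protocol overhead is an assumption to be replaced with measured throughput.

```python
def transfer_seconds(size_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Time to move size_gb gigabytes over a link of link_gbps gigabits/s.

    efficiency < 1 models protocol overhead (an assumed value, not measured).
    Link latency is not included; it adds a roughly fixed cost per message."""
    bits = size_gb * 8e9  # 1 GB = 8e9 bits
    return bits / (link_gbps * 1e9 * efficiency)
```

A 1 GB transfer takes about 0.8 s at 10 Gbps and about 0.04 s at 200 Gbps (the HDR InfiniBand line rate), ignoring overhead.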

Containerization and orchestration technologies such as Docker and Kubernetes are essential for maintaining consistent environments across development and production systems. These technologies facilitate reproducibility of experiments and enable efficient resource allocation across computational infrastructure.

Power and cooling considerations cannot be overlooked, particularly for installations with multiple high-performance GPUs. Liquid cooling solutions may be necessary for dense computational clusters, while uninterruptible power supplies should be implemented to protect against data loss during power fluctuations.

Specialized hardware accelerators such as TPUs or FPGAs may offer advantages for specific generative model architectures, providing energy-efficient alternatives to traditional GPU computing for certain workloads. These should be evaluated based on the specific requirements of the generative models being deployed.

Security infrastructure must be robust, incorporating network segmentation, access controls, and encryption to protect potentially sensitive experimental data and proprietary generative model architectures. This is particularly important when the infrastructure spans multiple physical locations or incorporates cloud resources.

Data Privacy and Security Considerations

The integration of generative models with MAP experimental execution introduces significant data privacy and security challenges that must be addressed comprehensively. As these systems process potentially sensitive experimental data, they create multiple vulnerability points where data breaches or unauthorized access could occur. The primary concern lies in the potential exposure of proprietary research information, experimental designs, and results that may have substantial commercial or competitive value.

Generative models inherently require large datasets for training, raising questions about data ownership and consent when experimental data is utilized. Organizations implementing these integrated systems must establish clear data governance frameworks that define data handling protocols, access controls, and retention policies. Without such frameworks, the risk of data misuse or unintended disclosure increases substantially.

Encryption mechanisms play a crucial role in securing data throughout the integration pipeline. Data in transit between generative models and MAP experimental systems should be encrypted (for example, with TLS, ideally end to end), while at-rest encryption protects stored datasets and model parameters. Advanced cryptographic techniques such as homomorphic encryption show promise because they allow computations to be performed on encrypted data without decrypting it, though their computational overhead remains a significant challenge.
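The core idea of computing on encrypted data can be shown with the Paillier cryptosystem, a partially (additively) homomorphic scheme rather than a fully homomorphic one. In this Python sketch, multiplying two ciphertexts yields an encryption of the sum of their plaintexts, so an untrusted service could, for example, aggregate encrypted measurements without seeing them. The tiny primes are purely illustrative and offer no security; production systems would use keys of thousands of bits and a vetted library.

```python
import math
import secrets

# Toy primes for illustration only: NOT secure.
P, Q = 1009, 1013


def keygen(p=P, q=Q):
    """Return the public modulus n and the private key (lambda, mu)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # valid because we use the generator g = n + 1
    return n, (lam, mu)


def encrypt(n, m):
    """Encrypt an integer 0 <= m < n as c = (1 + n)^m * r^n mod n^2."""
    if not 0 <= m < n:
        raise ValueError("plaintext must satisfy 0 <= m < n")
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random r in [1, n - 1]
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(n, private_key, c):
    """Recover m = L(c^lambda mod n^2) * mu mod n, where L(x) = (x - 1) / n."""
    lam, mu = private_key
    n_sq = n * n
    l_value = (pow(c, lam, n_sq) - 1) // n
    return (l_value * mu) % n


def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    return (c1 * c2) % (n * n)


def scale_encrypted(n, c, k):
    """Raising a ciphertext to the power k multiplies its plaintext by k (mod n)."""
    return pow(c, k, n * n)


if __name__ == "__main__":
    n, private_key = keygen()
    total = add_encrypted(n, encrypt(n, 120), encrypt(n, 35))
    print(decrypt(n, private_key, total))  # 155
```

Only the holder of the private key can decrypt the aggregate; the party performing `add_encrypted` works exclusively with ciphertexts. The randomness in `encrypt` means the same plaintext encrypts to different ciphertexts each time.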

Differential privacy techniques offer another layer of protection by introducing calibrated noise into datasets, query results, or the training process. The noise bounds how much any single record can influence what is released, which limits what can be inferred about individual data points while preserving overall statistical utility. This approach is particularly valuable when generative models must be trained on sensitive experimental data under formal privacy guarantees.
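A minimal example of calibrated noise is the Laplace mechanism applied to a mean. The Python sketch below assumes values are clipped to a known range [lower, upper], that the number of records is public, and that neighbouring datasets differ by replacing one record; under those assumptions the mean's sensitivity is (upper - lower) / n, and adding Laplace noise with scale sensitivity / epsilon gives epsilon-differential privacy. It uses Python's `random` module for readability, which is not cryptographically secure and has known floating-point pitfalls, so real systems should rely on an audited differential privacy library.

```python
import random


def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    # expovariate takes a rate (1 / mean), so each draw has mean `scale`.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_mean(values, lower, upper, epsilon, rng=None):
    """Epsilon-differentially private mean of values clipped to [lower, upper]."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if upper <= lower:
        raise ValueError("upper must be greater than lower")
    if not values:
        raise ValueError("values must be non-empty")
    rng = rng or random.Random()
    # Clipping bounds each record's contribution, which fixes the sensitivity.
    clipped = [min(max(v, lower), upper) for v in values]
    sensitivity = (upper - lower) / len(clipped)
    true_mean = sum(clipped) / len(clipped)
    return true_mean + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical normalized assay yields, for illustration only.
    yields = [0.62, 0.71, 0.58, 0.90, 0.66]
    print(private_mean(yields, lower=0.0, upper=1.0, epsilon=1.0))
```

Smaller `epsilon` values give stronger privacy but noisier results; the trade-off has to be set per use case.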

Authentication and authorization frameworks must be robust, implementing multi-factor authentication and role-based access controls to ensure only authorized personnel can access specific components of the integrated system. Regular security audits and penetration testing should be conducted to identify and remediate vulnerabilities before they can be exploited.
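Role-based access control combined with multi-factor authentication can be reduced to a small authorization check. In the Python sketch below, the permissions, role names, and the set of operations requiring a completed MFA challenge are hypothetical examples; the point is the pattern of deny-by-default role lookup followed by a step-up MFA requirement for sensitive actions.

```python
from dataclasses import dataclass
from enum import Enum


class Permission(Enum):
    READ_RESULTS = "read_results"
    SUBMIT_EXPERIMENT = "submit_experiment"
    UPDATE_MODEL = "update_model"
    EXPORT_DATA = "export_data"


# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "viewer": {Permission.READ_RESULTS},
    "experimenter": {Permission.READ_RESULTS, Permission.SUBMIT_EXPERIMENT},
    "ml_engineer": {Permission.READ_RESULTS, Permission.UPDATE_MODEL},
    "admin": set(Permission),
}

# Sensitive operations that additionally require a verified MFA challenge.
MFA_REQUIRED = {Permission.UPDATE_MODEL, Permission.EXPORT_DATA}


@dataclass(frozen=True)
class Session:
    user: str
    roles: frozenset
    mfa_verified: bool


def is_authorized(session, permission):
    """Grant only permissions held via a known role, enforcing MFA where required."""
    # Unknown roles contribute no permissions (deny by default).
    granted = set().union(*(ROLE_PERMISSIONS.get(r, set()) for r in session.roles))
    if permission not in granted:
        return False
    if permission in MFA_REQUIRED and not session.mfa_verified:
        return False
    return True


if __name__ == "__main__":
    alice = Session("alice", frozenset({"experimenter"}), mfa_verified=False)
    print(is_authorized(alice, Permission.SUBMIT_EXPERIMENT))  # True
    print(is_authorized(alice, Permission.UPDATE_MODEL))  # False
```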

Regulatory compliance adds another dimension to security considerations. Depending on the industry and geographic location, integrated systems may need to comply with regulations such as GDPR, HIPAA, or industry-specific requirements. Organizations must implement appropriate technical and organizational measures to demonstrate compliance and avoid potential legal penalties.

Model security itself presents unique challenges, as generative models can be vulnerable to adversarial attacks or to model inversion techniques that attempt to reconstruct training data. Model hardening techniques such as adversarial training and rate limiting, combined with monitoring for unusual query patterns, can help mitigate these risks while maintaining system functionality.
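Monitoring for unusual query patterns can start with something as simple as a sliding-window rate check per client, since extraction and inversion attempts often require many queries. The Python sketch below flags a client once its query count within the window exceeds a limit. The window length and limit are assumptions, timestamps are assumed to be non-decreasing per client, and a real monitor would typically combine volume with other signals such as query diversity.

```python
from collections import defaultdict, deque


class QueryRateMonitor:
    """Flag clients whose query count in a sliding time window exceeds a limit."""

    def __init__(self, window_seconds=60.0, max_queries=100):
        self.window_seconds = window_seconds
        self.max_queries = max_queries
        self._history = defaultdict(deque)  # client_id -> query timestamps

    def record(self, client_id, timestamp):
        """Record one query; return True if the client is now over the limit."""
        history = self._history[client_id]
        history.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while history and timestamp - history[0] >= self.window_seconds:
            history.popleft()
        return len(history) > self.max_queries


if __name__ == "__main__":
    monitor = QueryRateMonitor(window_seconds=10.0, max_queries=3)
    print([monitor.record("client-1", t) for t in [0.0, 1.0, 2.0, 3.0]])
    # [False, False, False, True]
```

Flagged clients could then be throttled or routed for review rather than blocked outright, which helps preserve system functionality for legitimate heavy users.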