Integration With Automotive Sensor Fusion Architectures

SEP 1, 2025 | 10 MIN READ

Automotive Sensor Fusion Background and Objectives

Sensor fusion technology in automotive applications has evolved significantly over the past two decades, transitioning from basic implementations in premium vehicles to becoming a cornerstone of modern automotive safety and autonomous driving systems. The integration of multiple sensor types—including radar, LiDAR, cameras, ultrasonic sensors, and GPS—has enabled vehicles to perceive their environment with unprecedented accuracy and reliability. This technological progression has been driven by the increasing demand for advanced driver assistance systems (ADAS) and the push toward higher levels of vehicle autonomy.

The evolution of automotive sensor fusion can be traced through several key developmental phases. Initially, single-sensor systems provided limited functionality for specific applications such as parking assistance or adaptive cruise control. As computational capabilities advanced, multi-sensor systems emerged, allowing for cross-validation of data and improved environmental perception. The current generation of sensor fusion architectures implements sophisticated algorithms that combine data from heterogeneous sensors to create comprehensive environmental models in real time.

Market trends indicate an accelerating adoption rate of sensor fusion technologies across all vehicle segments, not just luxury models. This democratization is propelled by regulatory requirements for safety features, consumer demand for convenience functions, and the automotive industry's strategic pivot toward autonomous mobility solutions. The technology roadmap suggests continued refinement of fusion algorithms, increased sensor integration density, and enhanced processing capabilities to handle the growing data volumes.

The primary objective of modern automotive sensor fusion is to create robust, redundant systems that can operate reliably under diverse environmental conditions and driving scenarios. This includes functioning effectively during adverse weather, varying lighting conditions, and complex traffic situations. Additionally, these systems aim to provide seamless operation despite potential sensor failures or degradation, ensuring consistent safety performance.

Technical goals for next-generation sensor fusion architectures include reducing latency in data processing, minimizing power consumption, optimizing sensor placement and coverage, and developing more efficient algorithms for real-time decision-making. There is also significant focus on creating standardized interfaces and protocols to facilitate integration across different vehicle platforms and sensor technologies from various suppliers.

Looking forward, the industry is moving toward centralized fusion architectures that consolidate processing from previously distributed systems, enabling more sophisticated cross-sensor analytics and decision-making. This centralization trend is complemented by efforts to develop more adaptive fusion algorithms that can dynamically adjust their operation based on contextual factors and sensor health status, further enhancing system resilience and performance.

Market Demand Analysis for Integrated Sensor Systems

The automotive sensor fusion market is experiencing unprecedented growth, driven by the rapid advancement of autonomous driving technologies and increasing safety requirements in modern vehicles. According to recent market research, the global automotive sensor fusion market is projected to reach $22.2 billion by 2026, growing at a CAGR of 23.5% from 2021. This significant growth reflects the industry's recognition of integrated sensor systems as a critical component for achieving higher levels of vehicle autonomy and enhanced safety features.

Consumer demand for advanced driver assistance systems (ADAS) has become a primary market driver, with over 70% of new car buyers now considering ADAS features essential rather than optional. This shift in consumer preference has compelled automotive manufacturers to integrate more sophisticated sensor fusion architectures into their vehicle designs, creating substantial market opportunities for technology providers in this space.

Regulatory frameworks worldwide are increasingly mandating advanced safety features that rely on sensor fusion technology. The European New Car Assessment Programme (Euro NCAP) has updated its safety rating system to include evaluation of sensor-based safety systems, while similar initiatives are being implemented in North America and Asia-Pacific regions. These regulatory pressures are accelerating market adoption of integrated sensor systems across all vehicle segments, not just premium models.

Commercial fleet operators represent another significant market segment, with logistics companies investing heavily in vehicles equipped with advanced sensor fusion systems to improve operational efficiency and reduce accident-related costs. Fleet management solutions incorporating sensor fusion technology have demonstrated up to a 30% reduction in collision rates and a 15% improvement in fuel efficiency through optimized routing and driving behavior analysis.

The market for retrofit sensor fusion solutions is also expanding, particularly in regions with aging vehicle fleets. This segment offers considerable growth potential as it enables existing vehicles to benefit from advanced sensing capabilities without complete replacement of transportation assets.

Regional analysis indicates that while North America and Europe currently lead in adoption rates, the Asia-Pacific region is expected to witness the fastest growth over the next five years. China, in particular, is making substantial investments in autonomous driving infrastructure and manufacturing capabilities for integrated sensor systems.

Market research also reveals a growing demand for sensor fusion architectures that can accommodate over-the-air updates and future sensor additions, indicating that flexibility and scalability are becoming key purchasing factors for automotive manufacturers seeking long-term technology partnerships.

Current Challenges in Automotive Sensor Fusion

Despite significant advancements in automotive sensor fusion technology, several critical challenges persist that impede optimal integration and performance. The heterogeneous nature of automotive sensors presents a fundamental obstacle, as each sensor type—cameras, radar, LiDAR, ultrasonic sensors—operates with different sampling rates, data formats, and physical principles. This diversity creates synchronization issues when attempting to create a unified perception model of the vehicle's surroundings.
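The synchronization problem described above can be illustrated with a minimal sketch that pairs samples from two streams running at different rates. The function name `nearest_match`, the sample rates, and the 10 ms skew tolerance are all illustrative assumptions, not values taken from any production system.

```python
from bisect import bisect_left

def nearest_match(ref_stamps, other_stamps, max_skew):
    """For each reference timestamp, return the index of the closest
    timestamp in other_stamps (which must be sorted), or None when the
    closest one is further away than max_skew seconds."""
    matches = []
    for t in ref_stamps:
        i = bisect_left(other_stamps, t)
        # Only the neighbours on either side of the insertion point can be closest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_stamps)]
        best = min(candidates, key=lambda j: abs(other_stamps[j] - t), default=None)
        if best is not None and abs(other_stamps[best] - t) <= max_skew:
            matches.append(best)
        else:
            matches.append(None)
    return matches

# Hypothetical timestamps in seconds: a ~30 Hz camera and a 20 Hz radar.
camera = [0.000, 0.033, 0.066, 0.100]
radar = [0.000, 0.050, 0.100]
pairs = nearest_match(camera, radar, max_skew=0.010)
print(pairs)  # camera frames with no radar sample within 10 ms map to None
```

Frames that come back as `None` would need interpolation or motion compensation before fusion; dropping them is the simplest policy but wastes data.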

Computational resource constraints represent another significant barrier. Modern vehicles generate enormous volumes of sensor data—up to several terabytes per hour—requiring substantial processing power for real-time fusion. Edge computing solutions implemented in vehicles must balance performance requirements with power consumption limitations, creating a complex optimization problem that affects system responsiveness.

Environmental resilience remains problematic for sensor fusion architectures. Weather conditions such as heavy rain, snow, fog, or extreme temperatures can degrade sensor performance asymmetrically across different sensor types. Creating fusion algorithms that dynamically compensate for these environmental effects while maintaining perception accuracy presents a substantial technical challenge.

Calibration and alignment issues frequently arise during integration. Sensors mounted at different positions on the vehicle require precise spatial and temporal alignment. Even minor calibration errors can propagate through the fusion process, leading to significant perception inaccuracies that compromise safety-critical functions.
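To make the effect of a small calibration error concrete, the sketch below applies a 2D rigid-body transform from a sensor frame to the vehicle frame and computes the lateral offset caused by a yaw misalignment. The function names and the 0.5 degree error are assumptions chosen for illustration; real extrinsic calibration is three-dimensional and also covers timing offsets.

```python
import math

def sensor_to_vehicle(x, y, mount_x, mount_y, mount_yaw_deg):
    """Transform a point (x, y) from the sensor frame into the vehicle frame,
    given the sensor's mounting position and yaw (2D rigid-body transform)."""
    yaw = math.radians(mount_yaw_deg)
    vx = mount_x + x * math.cos(yaw) - y * math.sin(yaw)
    vy = mount_y + x * math.sin(yaw) + y * math.cos(yaw)
    return vx, vy

def lateral_error_from_yaw(range_m, yaw_error_deg):
    """Lateral position error for an object straight ahead of the sensor at
    range_m when the assumed mounting yaw is off by yaw_error_deg."""
    return range_m * math.sin(math.radians(yaw_error_deg))

# An object 100 m ahead, with a hypothetical 0.5 degree yaw calibration error.
print(round(lateral_error_from_yaw(100.0, 0.5), 3))  # roughly 0.87 m
```

Under these assumptions, half a degree of yaw error moves a target at 100 m sideways by close to a metre, which is a meaningful fraction of a lane width.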

Scalability and modularity challenges emerge as automotive manufacturers seek flexible architectures that can accommodate different vehicle models and optional features. Creating fusion frameworks that seamlessly integrate varying sensor configurations while maintaining consistent performance across product lines requires sophisticated software architecture approaches.

Validation and testing methodologies for sensor fusion systems remain underdeveloped. Traditional automotive testing approaches are insufficient for complex AI-driven fusion algorithms, particularly for edge cases and rare scenarios. The industry lacks standardized benchmarks and evaluation metrics specifically designed for sensor fusion performance.

Security vulnerabilities present growing concerns as sensor fusion systems become more connected. Potential attack vectors include sensor spoofing, data injection, and manipulation of fusion algorithms. Implementing robust security measures without compromising real-time performance requirements presents a delicate balance that many current architectures struggle to achieve.

Regulatory compliance and certification processes have not fully adapted to the complexity of modern sensor fusion systems. Demonstrating the safety and reliability of AI-based fusion algorithms to regulatory bodies requires new approaches to verification and validation that can address the probabilistic nature of these systems.

Current Sensor Fusion Architectures and Implementations

01 Multi-sensor data integration architectures

Architectures designed for integrating data from multiple sensors to enhance accuracy and reliability of measurements. These systems typically employ algorithms that combine inputs from various sensor types (e.g., cameras, LiDAR, radar) to create a more comprehensive environmental understanding. The fusion process involves synchronizing data streams, aligning coordinate systems, and implementing filtering techniques to handle sensor uncertainties and environmental noise.

- Multi-sensor data fusion architectures: Multi-sensor data fusion architectures integrate data from various sensors to provide more accurate and reliable information. These architectures typically involve combining data from different sensor types (cameras, LiDAR, radar, etc.) using algorithms that can handle different data formats and sampling rates. The fusion process can occur at different levels: raw data level, feature level, or decision level, depending on the application requirements and computational constraints.

- Centralized vs. distributed fusion architectures: Sensor fusion systems can be implemented using either centralized or distributed architectures. In centralized architectures, all sensor data is sent to a central processing unit for fusion, providing comprehensive analysis but potentially creating bottlenecks. Distributed architectures process data locally before sharing results, reducing bandwidth requirements and increasing system resilience. Hybrid approaches combine elements of both to optimize performance for specific applications.

- Real-time sensor fusion implementation: Real-time sensor fusion architectures focus on processing and integrating sensor data with minimal latency for time-critical applications. These implementations often employ parallel processing, hardware acceleration, and optimized algorithms to ensure timely decision-making. The architecture must balance computational complexity with speed requirements, often utilizing specialized hardware like FPGAs or GPUs to accelerate fusion operations.

- Software frameworks for sensor fusion: Software frameworks provide structured approaches for implementing sensor fusion systems, offering reusable components, standardized interfaces, and development tools. These frameworks facilitate the integration of different algorithms and sensor types while managing data flow and synchronization. They often include libraries for common fusion algorithms, visualization tools, and simulation capabilities to test fusion performance before deployment in real-world environments.

- Application-specific fusion architectures: Sensor fusion architectures are often tailored to specific application domains such as autonomous vehicles, robotics, or industrial monitoring. These specialized architectures consider the unique requirements of each application, including sensor selection, fusion algorithms, and performance metrics. The design focuses on optimizing the fusion process for the particular environmental conditions, reliability requirements, and computational constraints of the target application.
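As one concrete instance of the decision-level fusion mentioned in the list above, the sketch below combines independent estimates of the same quantity by inverse-variance weighting. The function name `fuse_estimates` and the example numbers are assumptions; the approach also assumes the sensor errors are independent and roughly Gaussian, which real systems have to verify.

```python
def fuse_estimates(estimates):
    """Fuse independent scalar estimates given as (value, variance) pairs.
    Each estimate is weighted by the inverse of its variance, so the more
    certain sensor dominates. Returns (fused_value, fused_variance)."""
    if not estimates:
        raise ValueError("at least one estimate is required")
    if any(var <= 0 for _, var in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total

# Hypothetical range estimates for one object, in metres:
radar = (50.0, 0.25)   # radar is assumed to be the more precise range sensor
camera = (52.0, 1.0)
fused_value, fused_variance = fuse_estimates([radar, camera])
print(fused_value, fused_variance)
```

The fused variance is always smaller than the smallest input variance, which is the basic statistical argument for fusing sensors rather than picking the best one.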

02 Centralized vs. distributed fusion frameworks

Different architectural approaches to sensor fusion implementation, comparing centralized systems where all sensor data is processed in a single computational unit versus distributed frameworks where processing is spread across multiple nodes. Centralized architectures offer simplified data management but may create bottlenecks, while distributed systems provide redundancy and scalability but require more complex communication protocols and synchronization mechanisms.

03 Software integration platforms for sensor fusion

Software frameworks and middleware solutions specifically designed to facilitate sensor fusion implementation. These platforms provide standardized interfaces, data processing pipelines, and development tools that simplify the integration of diverse sensors and fusion algorithms. They often include features like plugin architectures, real-time processing capabilities, and visualization tools to support rapid development and deployment of sensor fusion applications.

04 AI and machine learning approaches for sensor fusion

Advanced sensor fusion techniques that leverage artificial intelligence and machine learning algorithms to improve integration and interpretation of multi-sensor data. These approaches use neural networks, deep learning, and other AI methods to automatically learn optimal fusion strategies from training data, adapt to changing conditions, and extract higher-level information from raw sensor inputs. They can handle complex, non-linear relationships between sensor data that traditional methods struggle with.

05 Embedded systems for real-time sensor fusion

Hardware architectures and embedded systems designed specifically for implementing sensor fusion in real-time applications with strict latency requirements. These systems often combine specialized processors (GPUs, FPGAs, or dedicated ASICs) with optimized software to achieve high-performance fusion while meeting size, weight, power, and cost constraints. They typically include hardware acceleration for common fusion algorithms and efficient interfaces for connecting multiple sensor types.

Key Industry Players in Automotive Sensor Integration

The automotive sensor fusion architecture market is in a growth phase, characterized by rapid technological advancements and expanding applications in autonomous driving systems. The global market size is projected to grow significantly as vehicle automation levels increase, driven by safety regulations and consumer demand for advanced driver assistance systems. Leading automotive manufacturers like Hyundai, Kia, BMW, and Mercedes-Benz are collaborating with technology specialists including Bosch, Continental, and Qualcomm to develop integrated sensor fusion solutions. Traditional tier-1 suppliers are competing with tech companies like Waymo and emerging players from China such as BYD and Great Wall Motor. The technology is maturing rapidly with companies focusing on standardization, real-time processing capabilities, and seamless integration with existing vehicle architectures to enable higher levels of autonomous driving functionality.

Robert Bosch GmbH

Technical Solution: Bosch has developed a comprehensive sensor fusion architecture that integrates data from multiple sensor types including radar, camera, lidar, and ultrasonic sensors. Their central sensor fusion ECU employs a hierarchical approach with both low-level and high-level fusion techniques. The system uses Kalman filtering algorithms for object tracking and implements redundancy mechanisms to ensure reliability. Bosch's architecture supports AUTOSAR compatibility and features a modular design that allows for scalability across different vehicle platforms. Their sensor fusion technology enables 360-degree environmental perception with real-time processing capabilities, achieving detection ranges of up to 250 meters for their combined radar-camera systems. The architecture incorporates AI-based algorithms for object classification with reported accuracy rates exceeding 95% in various weather conditions.

Strengths: Industry-leading sensor integration expertise; robust redundancy mechanisms; proven reliability in production vehicles; extensive automotive supplier network. Weaknesses: Higher implementation costs compared to simpler systems; complex integration requirements; potential vendor lock-in due to proprietary components.
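The Bosch description above mentions Kalman filtering for object tracking. The sketch below shows the general technique in its simplest textbook form, a constant-velocity filter over one position measurement, and is not a reconstruction of Bosch's implementation. The function name `kalman_step`, the noise parameters `q` and `r`, and the simulated measurements are all assumptions.

```python
def kalman_step(x, P, z, dt, q, r):
    """One predict-and-update cycle of a constant-velocity Kalman filter.
    x = [position, velocity], P = 2x2 covariance as nested lists,
    z = measured position, dt = time step in seconds,
    q = process noise intensity, r = measurement variance."""
    # Predict state with F = [[1, dt], [0, 1]].
    px = x[0] + dt * x[1]
    vx = x[1]
    # Predict covariance: P' = F P F^T + Q (white-noise acceleration model).
    p00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1] + q * dt**3 / 3
    p01 = P[0][1] + dt * P[1][1] + q * dt**2 / 2
    p10 = P[1][0] + dt * P[1][1] + q * dt**2 / 2
    p11 = P[1][1] + q * dt
    # Update with measurement matrix H = [1, 0].
    s = p00 + r                      # innovation variance
    k0, k1 = p00 / s, p10 / s        # Kalman gain
    residual = z - px
    x_new = [px + k0 * residual, vx + k1 * residual]
    P_new = [[(1 - k0) * p00, (1 - k0) * p01],
             [p10 - k1 * p00, p11 - k1 * p01]]
    return x_new, P_new

# Track a simulated object moving at 10 m/s, measured every 0.1 s.
x, P = [0.0, 0.0], [[100.0, 0.0], [0.0, 100.0]]
for k in range(1, 51):
    x, P = kalman_step(x, P, z=10.0 * 0.1 * k, dt=0.1, q=0.1, r=0.25)
print(x)  # position and velocity estimates after 5 s of measurements
```

Although only position is measured, the filter recovers velocity through the motion model, which is what lets a tracker predict where an object will be between sensor updates.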

Continental Automotive Technologies GmbH

Technical Solution: Continental's sensor fusion architecture employs a distributed processing approach with their "Assisted & Automated Driving Control Unit" (ADCU) as the central integration hub. Their system processes data from up to 20 different sensors simultaneously, including high-resolution cameras, radar, and lidar. Continental utilizes a multi-layer fusion strategy that combines raw data fusion for precise object detection with feature-level fusion for environmental modeling. Their architecture implements parallel processing pathways for safety-critical functions, ensuring redundancy. The system achieves processing latencies below 50ms for critical detection scenarios and supports over-the-air updates for continuous improvement. Continental's fusion algorithms incorporate machine learning techniques for enhanced object classification and prediction, with particular strength in adverse weather condition performance.

Strengths: Highly scalable architecture supporting various automation levels; proven integration with multiple OEM platforms; strong performance in adverse weather conditions. Weaknesses: Higher computational requirements than single-sensor systems; complex calibration procedures; challenges with real-time processing of high-bandwidth sensor data.

Core Patents and Research in Sensor Data Integration

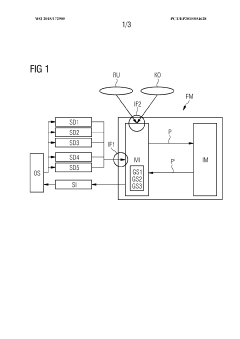

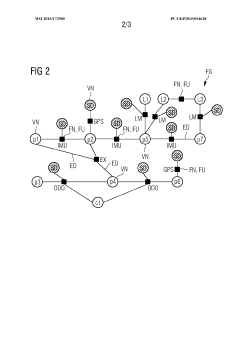

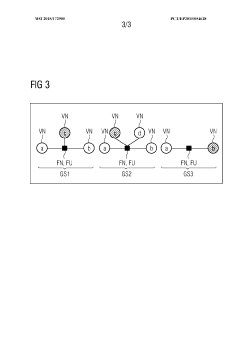

Arrangement and method for sensor fusion

Patent WO2015172905A1

Innovation

- An arrangement and method for sensor fusion that uses an interface to receive data from multiple real and virtual sensors, generating a factor graph processed by a fusion machine, which includes configurable graph sections and rules to adapt to various sensor constellations and tasks, allowing for flexible configuration and adaptation.

Systems and methods for cooperative sensor fusion by parameterized covariance generation in connected autonomous vehicles

Patent Pending US20250026371A1

Innovation

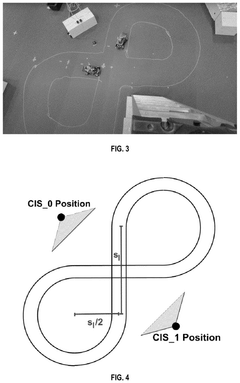

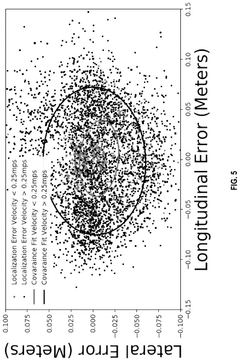

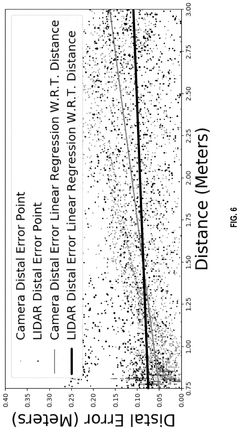

- A parameterized covariance generation system that estimates errors using key predictor terms correlated with sensing and localization accuracy, incorporating a tiered fusion model with local and global sensor fusion steps, and using measured distance and velocity to generate accurate covariance matrices.

Standardization and Interoperability Frameworks

The automotive industry is witnessing a significant push toward standardization and interoperability frameworks for sensor fusion architectures. These frameworks are essential for ensuring that diverse sensor technologies from multiple vendors can seamlessly integrate and communicate within a unified vehicle architecture. Currently, several industry consortia are developing standards, with the Automotive Open System Architecture (AUTOSAR) and the GENIVI Alliance (renamed COVESA in 2021) leading efforts to establish common interfaces and protocols for sensor data integration.

Key standardization initiatives include the development of the Sensor Interface Specification (SenDIS), which defines standardized data formats and communication protocols for various automotive sensors, including cameras, radar, LiDAR, and ultrasonic systems. This specification enables hardware-agnostic software development, allowing OEMs to interchange sensors without significant architectural modifications.

Interoperability challenges remain substantial, particularly in harmonizing data from sensors operating at different frequencies and resolutions. The emergence of Time-Sensitive Networking (TSN) standards addresses timing synchronization issues critical for accurate sensor fusion. Additionally, the development of common middleware solutions such as Robot Operating System for Automotive (ROS-A) and Apollo Auto provides standardized APIs that abstract hardware complexities.

Security considerations have become integral to these frameworks, with standards like ISO/SAE 21434 for automotive cybersecurity engineering establishing requirements for secure sensor data transmission and processing. These standards help ensure that sensor fusion systems remain protected against potential cyber threats while maintaining functional integrity.

Cross-industry collaboration has accelerated framework development, with technology companies joining traditional automotive manufacturers in standards committees. The IEEE P2020 working group focuses specifically on automotive imaging standards, while the 5G Automotive Association (5GAA) works on connectivity standards essential for V2X communications that complement onboard sensor fusion.

Implementation challenges include backward compatibility with legacy systems and the need for flexible frameworks that can accommodate rapid technological advancements. Regulatory bodies across different regions are increasingly mandating compliance with specific standards, creating a complex landscape for global vehicle platforms.

Future standardization efforts are focusing on AI model interoperability, allowing different machine learning algorithms to work cohesively within sensor fusion architectures. Edge computing standardization is also emerging as a critical area, with frameworks defining how processing should be distributed between in-sensor computing, domain controllers, and centralized vehicle computers.

Key standardization initiatives include the development of the Sensor Interface Specification (SenDIS) which defines standardized data formats and communication protocols for various automotive sensors including cameras, radar, lidar, and ultrasonic systems. This specification enables hardware-agnostic software development, allowing OEMs to interchange sensors without significant architectural modifications.

Interoperability challenges remain substantial, particularly in harmonizing data from sensors operating at different frequencies and resolutions. The emergence of Time-Sensitive Networking (TSN) standards addresses timing synchronization issues critical for accurate sensor fusion. Additionally, the development of common middleware solutions such as Robot Operating System for Automotive (ROS-A) and Apollo Auto provides standardized APIs that abstract hardware complexities.

Security considerations have become integral to these frameworks, with standards like ISO 21434 for automotive cybersecurity engineering establishing protocols for secure sensor data transmission and processing. These standards ensure that sensor fusion systems remain protected against potential cyber threats while maintaining functional integrity.

Cross-industry collaboration has accelerated framework development, with technology companies joining traditional automotive manufacturers in standards committees. The IEEE P2020 working group focuses specifically on automotive imaging standards, while the 5G Automotive Association (5GAA) works on connectivity standards essential for V2X communications that complement onboard sensor fusion.

Implementation challenges include backward compatibility with legacy systems and the need for flexible frameworks that can accommodate rapid technological advancements. Regulatory bodies across different regions are increasingly mandating compliance with specific standards, creating a complex landscape for global vehicle platforms.

Safety and Reliability Considerations

Safety and reliability are paramount concerns in automotive sensor fusion architectures, especially as vehicles become increasingly autonomous. The integration of multiple sensor types (LiDAR, radar, cameras, ultrasonic) into a cohesive system introduces complex failure modes that must be thoroughly addressed. Sensor fusion systems must maintain operational integrity even when individual sensors degrade or fail completely, requiring robust redundancy mechanisms and graceful degradation protocols.
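A graceful degradation protocol can be pictured as a mapping from sensor health to an operating mode. The following is a minimal sketch under a hypothetical policy: the mode names, the sensor keys, and the rule that at least one ranging sensor plus the camera is needed for degraded operation are all assumptions made for illustration, not requirements from any standard.

```python
from enum import Enum
from typing import Dict


class Mode(Enum):
    FULL = "full"                  # all monitored sensors healthy
    DEGRADED = "degraded"          # reduced capability, e.g. lower speed limit
    MINIMAL_RISK = "minimal_risk"  # hand over to the driver or stop safely


def select_mode(healthy: Dict[str, bool]) -> Mode:
    """Choose an operating mode from per-sensor health flags.

    Hypothetical policy: sensors missing from the dict count as failed.
    """
    camera_ok = healthy.get("camera", False)
    radar_ok = healthy.get("radar", False)
    lidar_ok = healthy.get("lidar", False)

    if camera_ok and radar_ok and lidar_ok:
        return Mode.FULL
    # One ranging sensor can cover for the other as long as the camera works.
    if camera_ok and (radar_ok or lidar_ok):
        return Mode.DEGRADED
    return Mode.MINIMAL_RISK


if __name__ == "__main__":
    print(select_mode({"camera": True, "radar": True, "lidar": False}))
```

A real system would derive such a policy from its safety analysis and would also debounce health flags so that a momentary dropout does not toggle modes.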

Functional safety standards, particularly ISO 26262, establish rigorous requirements for automotive electronic systems. These standards mandate a comprehensive hazard analysis and risk assessment (HARA) process to identify potential failure scenarios in sensor fusion implementations. Automotive Safety Integrity Levels (ASILs), ranging from A (least stringent) to D (most stringent), dictate the necessary safety measures based on the severity, exposure, and controllability of each hazardous event.
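The way severity, exposure, and controllability combine into an ASIL can be illustrated with a widely used additive shortcut that reproduces the ASIL determination table in ISO 26262-3. The sketch below assumes the class ranges S1 to S3, E1 to E4, and C1 to C3; the function name is hypothetical, and the standard itself remains the authoritative source for any real classification.

```python
def determine_asil(severity: int, exposure: int, controllability: int) -> str:
    """Map S/E/C classes to an ASIL using the additive shortcut.

    Assumed inputs: severity 1-3 (S1-S3), exposure 1-4 (E1-E4),
    controllability 1-3 (C1-C3). Returns "QM" (quality management only),
    "A", "B", "C", or "D".
    """
    if not 1 <= severity <= 3:
        raise ValueError("severity must be 1..3 (S1..S3)")
    if not 1 <= exposure <= 4:
        raise ValueError("exposure must be 1..4 (E1..E4)")
    if not 1 <= controllability <= 3:
        raise ValueError("controllability must be 1..3 (C1..C3)")

    # The class indices sum to between 3 and 10; only sums of 7 and above
    # carry an ASIL, and each extra point raises the level by one step.
    total = severity + exposure + controllability
    return {7: "A", 8: "B", 9: "C", 10: "D"}.get(total, "QM")


if __name__ == "__main__":
    # Worst case on all three axes.
    print(determine_asil(severity=3, exposure=4, controllability=3))
    # Severe but rarely encountered hazard.
    print(determine_asil(severity=3, exposure=1, controllability=3))
```

Lowering any one class by a step lowers the result by one level, which is why reducing exposure or improving controllability is a common way to relax the requirements on a fusion component.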

Environmental resilience presents another critical challenge, as sensor fusion systems must operate reliably across extreme temperature ranges, precipitation conditions, and electromagnetic interference scenarios. Validation testing must account for these environmental factors, with particular attention to edge cases that might compromise system performance. Sensor fusion algorithms must incorporate confidence metrics that dynamically adjust based on environmental conditions affecting sensor reliability.
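One simple way to express confidence that adapts to conditions is inverse-variance weighting, where each sensor's nominal measurement variance is inflated when the environment is known to hurt it. The sketch below swaps in that textbook technique as an illustration; the inflation factors, condition names, and sensor keys are invented for the example and would need to come from calibration data in practice.

```python
from typing import Dict, Tuple

# Hypothetical variance multipliers per (sensor, condition); 1.0 means unaffected.
VARIANCE_INFLATION = {
    ("camera", "fog"): 9.0,
    ("lidar", "fog"): 4.0,
    ("radar", "fog"): 1.0,
    ("camera", "night"): 4.0,
}


def fuse_range(measurements: Dict[str, Tuple[float, float]],
               condition: str) -> Tuple[float, float]:
    """Fuse range measurements by inverse-variance weighting.

    measurements maps sensor name -> (range in metres, nominal variance).
    Returns (fused range, fused variance).
    """
    if not measurements:
        raise ValueError("at least one measurement is required")

    weight_sum = 0.0
    weighted_value = 0.0
    for sensor, (value, variance) in measurements.items():
        # Inflate the variance when the current condition degrades this sensor.
        adjusted = variance * VARIANCE_INFLATION.get((sensor, condition), 1.0)
        weight = 1.0 / adjusted
        weight_sum += weight
        weighted_value += weight * value
    return weighted_value / weight_sum, 1.0 / weight_sum


if __name__ == "__main__":
    readings = {"camera": (50.0, 1.0), "radar": (52.0, 1.0)}
    print("clear:", fuse_range(readings, "clear"))
    print("fog:  ", fuse_range(readings, "fog"))
```

In clear conditions the two sensors are weighted equally; in fog the fused estimate moves toward the radar reading and the fused variance grows, which downstream logic can treat as reduced confidence.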

Real-time performance requirements introduce additional complexity, as safety-critical decisions must be made within strict timing constraints. Latency in sensor data processing or fusion algorithms can significantly impact system safety, particularly in emergency scenarios requiring immediate response. Hardware acceleration technologies and optimized software architectures are essential to meeting these timing requirements while maintaining system reliability.
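A short calculation shows why latency matters: the vehicle keeps moving while data is being processed. The sketch below converts end-to-end latency into distance travelled and checks a per-stage latency budget; the stage names and millisecond figures are illustrative assumptions, not measurements from any system.

```python
from typing import Dict


def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Distance in metres travelled at constant speed during the given latency."""
    speed_ms = speed_kmh / 3.6          # km/h -> m/s
    return speed_ms * (latency_ms / 1000.0)


def within_budget(stage_latencies_ms: Dict[str, float], budget_ms: float) -> bool:
    """True if the summed stage latencies fit inside the end-to-end budget."""
    return sum(stage_latencies_ms.values()) <= budget_ms


if __name__ == "__main__":
    # Hypothetical pipeline stages and worst-case latencies in milliseconds.
    stages = {"capture": 20.0, "perception": 45.0, "fusion": 15.0, "planning": 15.0}
    total = sum(stages.values())
    print(f"total latency: {total:.0f} ms, fits 100 ms budget: {within_budget(stages, 100.0)}")
    print(f"distance at 108 km/h: {distance_during_latency(108.0, total):.2f} m")
```

At 108 km/h (30 m/s), every 100 ms of pipeline latency corresponds to 3 m of travel before the system can react, so budgets are usually set per stage against worst-case rather than average timings.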

Verification and validation methodologies for sensor fusion systems require sophisticated approaches beyond traditional testing. Simulation environments must accurately model complex real-world scenarios, while hardware-in-the-loop testing validates system behavior under realistic conditions. Statistical validation approaches help quantify system reliability across the vast state space of possible driving scenarios.
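As one example of a statistical validation argument, the sketch below computes how much failure-free exposure is needed to claim, at a given confidence, that the failure rate is below a target. It assumes failures follow a Poisson process with a constant rate, which is a strong simplification for real driving; the function name and the example target are hypothetical.

```python
import math


def failure_free_exposure(max_failure_rate: float, confidence: float) -> float:
    """Exposure with zero observed failures needed to bound the failure rate.

    Under a constant-rate Poisson model, the probability of seeing no failures
    over exposure T is exp(-rate * T). Requiring that probability to be at most
    (1 - confidence) when rate equals max_failure_rate gives
        T >= -ln(1 - confidence) / max_failure_rate.
    The result is in the units of the rate's denominator (e.g. km or hours).
    """
    if max_failure_rate <= 0:
        raise ValueError("max_failure_rate must be positive")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be strictly between 0 and 1")
    return -math.log(1.0 - confidence) / max_failure_rate


if __name__ == "__main__":
    # Hypothetical target: fewer than one failure per million km, 95% confidence.
    km = failure_free_exposure(1e-6, 0.95)
    print(f"failure-free distance required: {km:,.0f} km")
```

At 95% confidence the numerator is about 3, the familiar "rule of three". The required exposure grows inversely with the target rate, which is one reason simulation and hardware-in-the-loop testing are used alongside road testing.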

Cybersecurity considerations have emerged as a critical safety component, as sensor fusion systems may be vulnerable to spoofing, jamming, or other malicious attacks. Security measures must be implemented without compromising real-time performance, creating a challenging engineering trade-off. Secure boot processes, encrypted communications, and intrusion detection systems are becoming standard components of automotive sensor fusion architectures.
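One lightweight building block against injected or replayed sensor messages is a message authentication code combined with a monotonically increasing counter. The sketch below uses Python's standard hmac module; the frame layout (8-byte counter, payload, 32-byte tag) and the function names are assumptions for illustration. It authenticates data rather than encrypting it, and it does not address physical-layer attacks such as jamming or spoofing of the sensed signal itself.

```python
import hashlib
import hmac
import struct
from typing import Optional, Tuple

COUNTER_LEN = 8   # bytes, big-endian unsigned counter (assumed layout)
TAG_LEN = 32      # bytes, HMAC-SHA256 output size


def sign_frame(key: bytes, counter: int, payload: bytes) -> bytes:
    """Build counter || payload || HMAC-SHA256(key, counter || payload)."""
    header = struct.pack(">Q", counter)
    tag = hmac.new(key, header + payload, hashlib.sha256).digest()
    return header + payload + tag


def verify_frame(key: bytes, frame: bytes,
                 last_counter: int) -> Optional[Tuple[int, bytes]]:
    """Return (counter, payload) if the frame is authentic and fresh, else None."""
    if len(frame) < COUNTER_LEN + TAG_LEN:
        return None
    header = frame[:COUNTER_LEN]
    payload = frame[COUNTER_LEN:-TAG_LEN]
    tag = frame[-TAG_LEN:]

    expected = hmac.new(key, header + payload, hashlib.sha256).digest()
    # Constant-time comparison avoids leaking how many tag bytes matched.
    if not hmac.compare_digest(tag, expected):
        return None

    counter = struct.unpack(">Q", header)[0]
    # Reject replays: the counter must strictly increase.
    if counter <= last_counter:
        return None
    return counter, payload


if __name__ == "__main__":
    key = b"k" * 32  # placeholder key; real keys come from secure provisioning
    frame = sign_frame(key, 5, b"range=42.0")
    print(verify_frame(key, frame, last_counter=4))
    print(verify_frame(key, frame, last_counter=5))  # replayed frame
```

The tag adds a fixed 32 bytes and one hash computation per frame, which is the kind of overhead that has to be weighed against the real-time constraints mentioned above.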

Human-machine interface design represents the final safety consideration, as drivers must understand the capabilities and limitations of sensor fusion systems. Clear communication of system status, particularly during handovers between automated and manual driving, is essential for safe operation.
