Maximizing GC-MS Throughput with Automated Samples

SEP 22, 2025 · 9 MIN READ

GC-MS Automation Background and Objectives

Gas Chromatography-Mass Spectrometry (GC-MS) has evolved significantly since its inception in the 1950s, becoming an indispensable analytical technique in various industries including pharmaceuticals, environmental monitoring, food safety, forensics, and petrochemicals. The integration of these two powerful analytical methods combines the high separation efficiency of gas chromatography with the exceptional identification capabilities of mass spectrometry, enabling precise compound identification and quantification in complex mixtures.

Traditional GC-MS workflows have been characterized by labor-intensive manual processes, including sample preparation, injection, and data analysis. These manual operations not only consume significant time but also introduce variability in results due to human error. As analytical demands have grown exponentially across industries, the limitations of manual GC-MS operations have become increasingly apparent, creating bottlenecks in laboratory productivity and constraining throughput capacity.

The technological evolution of GC-MS systems has been driven by the persistent need for higher throughput, improved accuracy, and enhanced operational efficiency. Recent advancements in automation technologies, robotics, and artificial intelligence have opened new possibilities for transforming GC-MS workflows. The integration of these technologies promises to address longstanding challenges in sample handling, instrument calibration, and data processing.

The primary objective of maximizing GC-MS throughput with automated samples is to develop comprehensive automation solutions that optimize every aspect of the analytical workflow. This includes automating sample preparation, implementing intelligent sample scheduling, developing robust auto-sampling mechanisms, and creating integrated data processing systems. The ultimate goal is to establish a seamless, high-throughput analytical platform that minimizes human intervention while maximizing analytical output and reliability.

Secondary objectives include reducing operational costs through efficient resource utilization, minimizing solvent consumption through optimized methods, enhancing reproducibility by eliminating human variability factors, and enabling 24/7 operation capabilities. Additionally, the development of adaptive systems capable of handling diverse sample types and analytical requirements represents a significant technological aspiration in this field.

The evolution toward fully automated GC-MS systems aligns with broader industry trends toward laboratory digitalization and the implementation of Industry 4.0 principles in analytical chemistry. As laboratories face increasing pressure to process larger sample volumes with fewer resources, the development of advanced automation solutions for GC-MS has emerged as a critical technological priority with substantial implications for scientific research, quality control, and regulatory compliance across multiple sectors.

Market Demand Analysis for High-Throughput GC-MS

The global market for high-throughput GC-MS systems has experienced significant growth in recent years, driven by increasing demand across multiple sectors including pharmaceuticals, environmental monitoring, food safety, and forensic analysis. This growth trajectory is expected to continue, with the market projected to reach $1.5 billion by 2027, representing a compound annual growth rate of 5.8% from 2022.
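The article does not state the 2022 base value, but the two figures above imply one through the compound-growth relation V_2027 = V_2022 × (1 + r)^n. The sketch below is a simple arithmetic check, assuming five compounding years (2022 to 2027) at r = 5.8%; the function names are illustrative only.

```python
def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Back out a starting value from an end value and a compound annual growth rate."""
    return end_value / (1.0 + cagr) ** years


def project(start_value: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a constant annual rate."""
    return start_value * (1.0 + cagr) ** years


# Figures quoted in the text: $1.5 billion in 2027 at a 5.8% CAGR from 2022.
base_2022 = implied_start_value(1.5e9, 0.058, 5)
print(f"Implied 2022 market size: ${base_2022 / 1e9:.2f} billion")  # about $1.13 billion
```

Because the published growth rate is rounded, the implied base is approximate.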

The pharmaceutical and biotechnology sectors remain the largest consumers of high-throughput GC-MS technology, accounting for approximately 35% of the total market share. These industries require rapid, accurate analysis of complex samples for drug discovery, development, and quality control processes. The ability to process large sample batches automatically has become a critical factor in accelerating time-to-market for new pharmaceutical products.

Environmental testing laboratories represent another significant market segment, with growing regulatory requirements driving demand for efficient analytical methods. Government agencies worldwide have implemented stricter monitoring protocols for air, water, and soil contaminants, necessitating higher sample throughput capabilities. This regulatory pressure has created a substantial market opportunity for automated GC-MS systems that can handle increased sample volumes while maintaining analytical precision.

Food and beverage safety testing has emerged as a rapidly expanding application area, growing at nearly 7% annually. Consumer awareness regarding food contaminants and adulterants has heightened demand for comprehensive testing protocols. Major food producers and regulatory bodies require systems capable of screening thousands of samples daily for pesticides, mycotoxins, and other harmful compounds.

Clinical diagnostics represents a promising growth segment, with metabolomics and clinical toxicology applications driving adoption of high-throughput GC-MS systems. The need for rapid screening in emergency toxicology and therapeutic drug monitoring has created demand for automated systems that can deliver results within critical timeframes.

Market research indicates that end-users increasingly prioritize total laboratory efficiency over instrument purchase price alone. A survey of laboratory managers revealed that 78% consider sample throughput capacity a "very important" factor in purchasing decisions, while 65% specifically seek automation capabilities to address workforce constraints and improve operational efficiency.

Regional analysis shows North America currently dominating the market with 38% share, followed by Europe (32%) and Asia-Pacific (24%). However, the Asia-Pacific region is experiencing the fastest growth rate at 8.2% annually, driven by expanding pharmaceutical manufacturing, environmental monitoring programs, and food safety initiatives in China, India, and South Korea.

Current Limitations and Technical Challenges in GC-MS Automation

Despite significant advancements in GC-MS automation technologies, several critical limitations and technical challenges continue to impede optimal throughput in automated sample analysis. The current generation of autosamplers faces reliability issues during extended operation periods, with mechanical failures occurring in sample handling components such as robotic arms, syringe mechanisms, and carousel systems. These failures not only interrupt analytical sequences but also potentially compromise sample integrity, necessitating costly reruns and extending project timelines.

Sample carryover remains a persistent challenge, particularly when analyzing complex matrices or compounds with high adsorption properties. Current washing protocols and materials used in sample path components have not fully eliminated this issue, leading to potential cross-contamination that compromises data quality and requires additional validation steps.
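A common way to quantify carryover is to inject a solvent blank immediately after the highest calibration standard and express the analyte response in that blank as a percentage of the standard's response. The sketch below assumes integrated peak areas are already available; the 0.1% limit and the injection names are hypothetical placeholders, since acceptance limits are set per method.

```python
from dataclasses import dataclass


@dataclass
class Injection:
    name: str
    peak_area: float  # integrated analyte response


# Hypothetical acceptance limit for illustration only; real limits are method-specific.
CARRYOVER_LIMIT_PCT = 0.1


def carryover_percent(high_std: Injection, following_blank: Injection) -> float:
    """Analyte response in the blank as a percentage of the preceding high standard."""
    if high_std.peak_area <= 0:
        raise ValueError("high standard must have a positive peak area")
    return 100.0 * following_blank.peak_area / high_std.peak_area


def carryover_ok(high_std: Injection, following_blank: Injection,
                 limit_pct: float = CARRYOVER_LIMIT_PCT) -> bool:
    """True if the measured carryover is at or below the limit."""
    return carryover_percent(high_std, following_blank) <= limit_pct


std = Injection("CAL-8", peak_area=2_500_000)
blank = Injection("BLK-after-CAL-8", peak_area=1_800)
print(f"{carryover_percent(std, blank):.3f}% carryover, pass={carryover_ok(std, blank)}")
# 0.072% carryover, pass=True
```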

The integration of automation systems with existing laboratory information management systems (LIMS) presents significant compatibility challenges. Many GC-MS automation platforms utilize proprietary software architectures that create data silos, hindering seamless information flow across the analytical workflow and requiring manual intervention for data transfer and processing.

Current automated systems demonstrate limited adaptability to varying sample types and preparation requirements. Most platforms are optimized for specific sample matrices and preparation protocols, requiring substantial reconfiguration when transitioning between different analytical methods or sample types. This inflexibility reduces overall laboratory efficiency and increases operational complexity.

Temperature control inconsistencies in sample storage components of automated systems represent another significant limitation. Samples awaiting analysis may experience temperature fluctuations that can affect volatile compound stability, particularly during extended analytical sequences. This issue is especially problematic for thermally sensitive compounds and can introduce variability in quantitative results.

Resource utilization inefficiencies are evident in current automation approaches, with suboptimal scheduling algorithms that fail to maximize instrument uptime. Many systems lack intelligent queue management capabilities that could prioritize samples based on stability considerations, analysis urgency, or optimal method sequencing to reduce equilibration times between different analytical conditions.
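The kind of queue management described above can be approximated with a simple heuristic. The sketch below is hypothetical (the `Sample` fields, method names, and stability windows are invented for illustration): it runs urgent samples first, then groups routine samples by acquisition method so each method is loaded once, ordering the groups so the least stable samples are analyzed earliest. A production scheduler would also weigh the equilibration time between specific pairs of methods.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    sample_id: str
    method: str                # hypothetical acquisition-method name
    urgent: bool = False
    stability_h: float = 24.0  # assumed on-tray stability window, in hours


def schedule(samples: list[Sample]) -> list[Sample]:
    """Urgent samples first, then routine samples grouped by method."""
    urgent = sorted((s for s in samples if s.urgent), key=lambda s: s.stability_h)

    # Group routine samples so each method is loaded (and equilibrated) once.
    groups: dict[str, list[Sample]] = {}
    for s in samples:
        if not s.urgent:
            groups.setdefault(s.method, []).append(s)

    # The group holding the least stable sample runs first; within a group,
    # the least stable samples also run first.
    ordered = sorted(groups.values(), key=lambda g: min(s.stability_h for s in g))
    queue = list(urgent)
    for group in ordered:
        queue.extend(sorted(group, key=lambda s: s.stability_h))
    return queue


def method_switches(queue: list[Sample]) -> int:
    """Count consecutive injections that require a method change."""
    return sum(1 for a, b in zip(queue, queue[1:]) if a.method != b.method)


batch = [
    Sample("S1", "pesticides"),
    Sample("S2", "voc", stability_h=6),
    Sample("S3", "pesticides", stability_h=12),
    Sample("S4", "voc"),
    Sample("S5", "pesticides", urgent=True),
]
queue = schedule(batch)
print([s.sample_id for s in queue])                    # ['S5', 'S2', 'S4', 'S3', 'S1']
print(method_switches(batch), method_switches(queue))  # 4 2
```

In this example the heuristic cuts method changes from four (submission order) to two; a further refinement would continue with the urgent sample's method before switching.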

The scalability of existing automation solutions presents challenges for laboratories experiencing fluctuating sample volumes. Current systems often require significant capital investment for capacity expansion and lack modular architectures that would allow incremental scaling to match changing throughput requirements, creating operational bottlenecks during high-demand periods.

Current Automated Sample Handling Solutions for GC-MS

01 Automated sample handling systems for GC-MS

Automated sample handling systems for GC-MS incorporate robotic components to streamline the analytical workflow. These systems can automatically prepare, inject, and process multiple samples in sequence, significantly increasing throughput compared to manual operations. The automation reduces human error, ensures consistent sample preparation, and allows for continuous operation, which is particularly valuable for high-volume testing environments.

- Automated sample handling systems for GC-MS: Automated sample handling systems for GC-MS improve throughput by enabling continuous operation without manual intervention. These systems typically include robotic arms, autosampler trays, and programmable controllers that can prepare and inject multiple samples sequentially. The automation reduces human error, increases reproducibility, and allows for 24/7 operation, significantly enhancing the analytical throughput of GC-MS instruments.

- Multi-sample parallel processing techniques: Parallel processing techniques allow multiple samples to be analyzed simultaneously or in rapid succession, dramatically increasing GC-MS throughput. These approaches include multi-column configurations, multiplexed injection systems, and parallel detection methods. By processing multiple samples concurrently rather than sequentially, these systems can achieve several-fold improvements in sample throughput while maintaining analytical quality.

- Optimized sample preparation and injection methods: Advanced sample preparation and injection methods enhance GC-MS throughput by reducing processing time and improving efficiency. These include rapid extraction techniques, automated derivatization, fast sample concentration, and optimized injection parameters. By minimizing sample preparation bottlenecks and maximizing injection efficiency, these methods significantly reduce the overall analysis time per sample.

- High-speed GC-MS technologies: High-speed GC-MS technologies incorporate innovations such as fast temperature ramping, short analytical columns, optimized carrier gas flow rates, and rapid scanning mass spectrometers. These technologies reduce chromatographic run times from tens of minutes to just a few minutes per sample while maintaining separation efficiency and detection sensitivity, thereby substantially increasing sample throughput.

- Integrated data processing and analysis software: Advanced data processing and analysis software systems enhance GC-MS throughput by automating peak identification, quantification, and reporting processes. These software solutions incorporate machine learning algorithms for automated data interpretation, batch processing capabilities, and streamlined reporting functions. By reducing the time required for data analysis and interpretation, these systems eliminate post-acquisition bottlenecks and improve overall workflow efficiency.
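The throughput levers in the list above can be combined in a simple model. The sketch below is illustrative only: the stage timings are hypothetical, and it assumes (1) the autosampler can prepare the next sample while the current one runs and (2) parallel channels are fully independent, which shared-detector multi-column designs will not fully achieve.

```python
import math

MINUTES_PER_DAY = 24 * 60


def cycle_time_min(prep_min: float, run_min: float, cooldown_min: float,
                   overlap_prep: bool) -> float:
    """Minutes between successive injections on one channel.

    With overlap_prep=True the autosampler prepares the next sample while the
    current run and oven cool-down are in progress, so the slower stage sets the pace.
    """
    instrument_min = run_min + cooldown_min
    if overlap_prep:
        return max(prep_min, instrument_min)
    return prep_min + instrument_min


def samples_per_day(cycle_min: float, channels: int = 1) -> int:
    """Completed samples in 24 h, assuming fully independent channels."""
    return channels * math.floor(MINUTES_PER_DAY / cycle_min)


# Hypothetical timings in minutes: 10 min prep, 30 min run, 5 min cool-down.
serial = samples_per_day(cycle_time_min(10, 30, 5, overlap_prep=False))      # 32
overlapped = samples_per_day(cycle_time_min(10, 30, 5, overlap_prep=True))   # 41
# Hypothetical fast-GC method: 8 min run, 3 min cool-down.
fast = samples_per_day(cycle_time_min(10, 8, 3, overlap_prep=True))          # 130
fast_dual = samples_per_day(cycle_time_min(10, 8, 3, overlap_prep=True), 2)  # 260
print(serial, overlapped, fast, fast_dual)
```

With the fast method, the 10-minute preparation step nearly matches the 11-minute instrument cycle, so further gains would have to come from faster sample preparation; this is why high-speed GC and optimized preparation appear together in the list.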

02 Multi-column and parallel processing techniques

Multi-column configurations and parallel processing techniques enable simultaneous analysis of multiple samples, dramatically improving GC-MS throughput. These systems utilize multiple chromatographic columns operating in parallel, with coordinated detection systems that can process several sample streams concurrently. This approach reduces the overall analysis time per sample and increases the number of samples that can be processed within a given timeframe.

03 Advanced sample introduction and injection technologies

Innovative sample introduction and injection technologies enhance GC-MS throughput by optimizing the transfer of samples into the analytical system. These technologies include high-speed autosamplers, multi-port injection systems, and programmable temperature vaporizers that can rapidly process samples with minimal carryover. Advanced injection techniques also allow for smaller sample volumes while maintaining sensitivity, further reducing analysis time.

04 Integrated data processing and analysis software

Sophisticated data processing and analysis software systems are essential for high-throughput GC-MS operations. These software solutions automate peak identification, quantification, and reporting processes, significantly reducing the time required for data interpretation. Machine learning algorithms and automated calibration routines further enhance efficiency by minimizing manual intervention and accelerating the transition from raw data to actionable results.

05 Fast GC-MS techniques and hardware optimizations

Fast GC-MS techniques incorporate hardware optimizations such as short, narrow-bore columns, rapid temperature programming, and high-speed mass analyzers to reduce analysis time while maintaining separation efficiency. These systems employ optimized carrier gas flow rates, enhanced thermal management, and specialized detectors capable of high acquisition rates. The combination of these technologies enables significantly shorter run times per sample, directly increasing overall system throughput.
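Why short, narrow-bore columns help can be seen from standard chromatographic relations, where L is column length, ū the average carrier-gas linear velocity, k the retention factor, α the selectivity, N the plate number, and H the plate height. This is an idealized isothermal view; temperature programming complicates the details.

```latex
t_M = \frac{L}{\bar{u}}, \qquad t_R = t_M\,(1 + k)

R_s = \frac{\sqrt{N}}{4}\cdot\frac{\alpha - 1}{\alpha}\cdot\frac{k}{1 + k}, \qquad N = \frac{L}{H}
```

At fixed k and ū, cutting L by a factor of three cuts retention times by about three, but N also falls by three and resolution by roughly √3. Because the minimum plate height of an open-tubular column is roughly proportional to its internal diameter d_c, a narrower bore recovers much of that loss: a 10 m × 0.10 mm column has an L/d_c ratio (100 m/mm) close to that of a 30 m × 0.25 mm column (120 m/mm), so it offers a similar plate count in about one third of the length.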

Leading Manufacturers and Competitors in GC-MS Automation

The market for automated, high-throughput GC-MS is in a growth phase, driven by rising demand for high-throughput analysis in the pharmaceutical, environmental, and food safety sectors, and it continues to expand steadily as laboratories seek efficiency improvements. Technologically, the field is moderately mature, with ongoing innovation focused on integration and workflow optimization. The leading players, which dominate with comprehensive automation solutions, include Thermo Fisher Scientific (through its Thermo Finnigan and Bremen-based subsidiaries), Agilent Technologies, Waters Technology, and Shimadzu. LECO Corporation offers specialized time-of-flight MS systems, while emerging competition comes from regional players such as Maccura Biotechnology. The competitive landscape is characterized by continuous innovation in sample handling, data processing, and system integration.

Thermo Finnigan Corp.

Technical Solution: Thermo Finnigan (now part of Thermo Fisher Scientific) has developed the ISQ 7000 GC-MS system with Advanced Electron Ionization (AEI) source technology that delivers enhanced sensitivity and stability for high-throughput applications. Their solution incorporates the ExtractaBrite ion source that can be removed and cleaned without breaking vacuum, significantly reducing maintenance downtime[3]. The company's AutoSIM/SRM technology automatically optimizes selected ion monitoring parameters during method development, improving both throughput and data quality. Their Chromeleon Chromatography Data System (CDS) software provides automated sample scheduling, instrument control, and data processing capabilities across multiple instruments simultaneously. Thermo's TriPlus RSH autosampler supports liquid, headspace, and SPME sampling techniques with capacity for up to 972 samples[4]. Their SmartTune technology performs automated instrument calibration and optimization, ensuring consistent performance across long sample sequences. The system also features NeverVent technology that maintains vacuum during routine maintenance operations, eliminating lengthy pump-down cycles and maximizing instrument uptime.

Strengths: Exceptional sensitivity and stability with their AEI source technology; versatile autosampling capabilities supporting multiple injection techniques; comprehensive software integration with enterprise-level data management. Weaknesses: Complex software ecosystem may require significant training investment; higher maintenance requirements for some components; premium pricing structure compared to mid-range alternatives.

Waters Technology Corp.

Technical Solution: Waters' approach to GC-MS throughput maximization centers on its Atmospheric Pressure Gas Chromatography (APGC) technology coupled to Xevo TQ-XS and Xevo TQ-S mass spectrometers. In APGC, ionization takes place at atmospheric pressure rather than under the vacuum of a conventional electron ionization (EI) source, producing softer ionization and less fragmentation of target compounds[5]. The solution includes the Universal Source Architecture, which enables rapid switching between different ionization techniques without breaking vacuum or changing hardware. Waters' UNIFI Scientific Information System provides comprehensive workflow management from sample submission through reporting, with automated method development tools that optimize separation parameters based on compound properties. The sample manager accommodates up to 768 samples, with integrated barcode reading and sample tracking. The company's QuanOptimize technology automatically determines optimal MRM transitions for target compounds, streamlining method development for complex matrices. Waters also offers intelligent sample scheduling that prioritizes urgent samples and optimizes instrument utilization based on method parameters and sample stability requirements[6].

Strengths: Unique atmospheric pressure ionization technology providing enhanced sensitivity for certain compound classes; seamless integration with LC-MS workflows in the same software environment; advanced data mining capabilities for retrospective analysis. Weaknesses: More specialized approach that may not be optimal for all GC-MS applications; higher technical complexity requiring specialized expertise; more limited GC-specific consumables portfolio compared to dedicated GC vendors.

Key Innovations in High-Throughput GC-MS Technologies

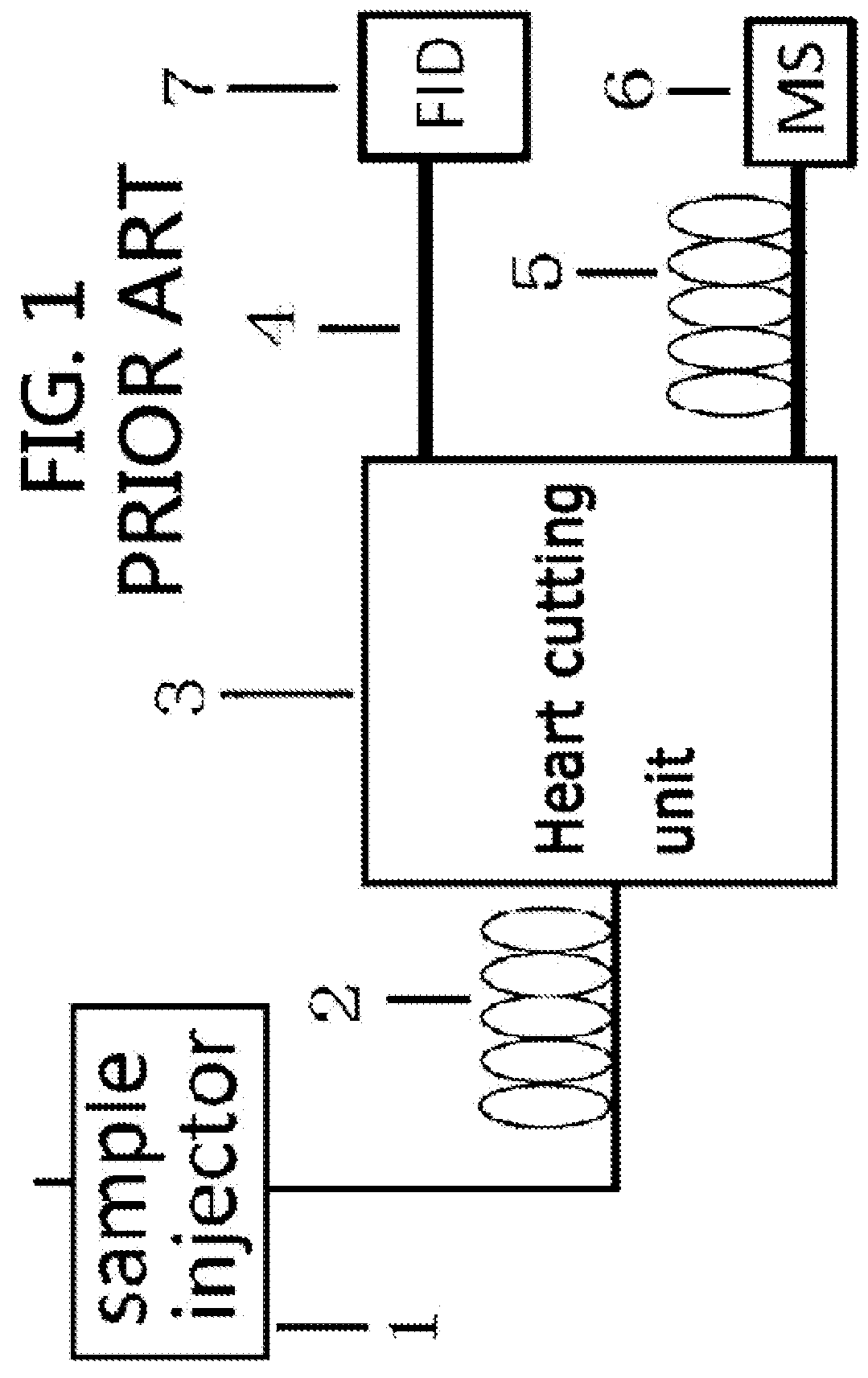

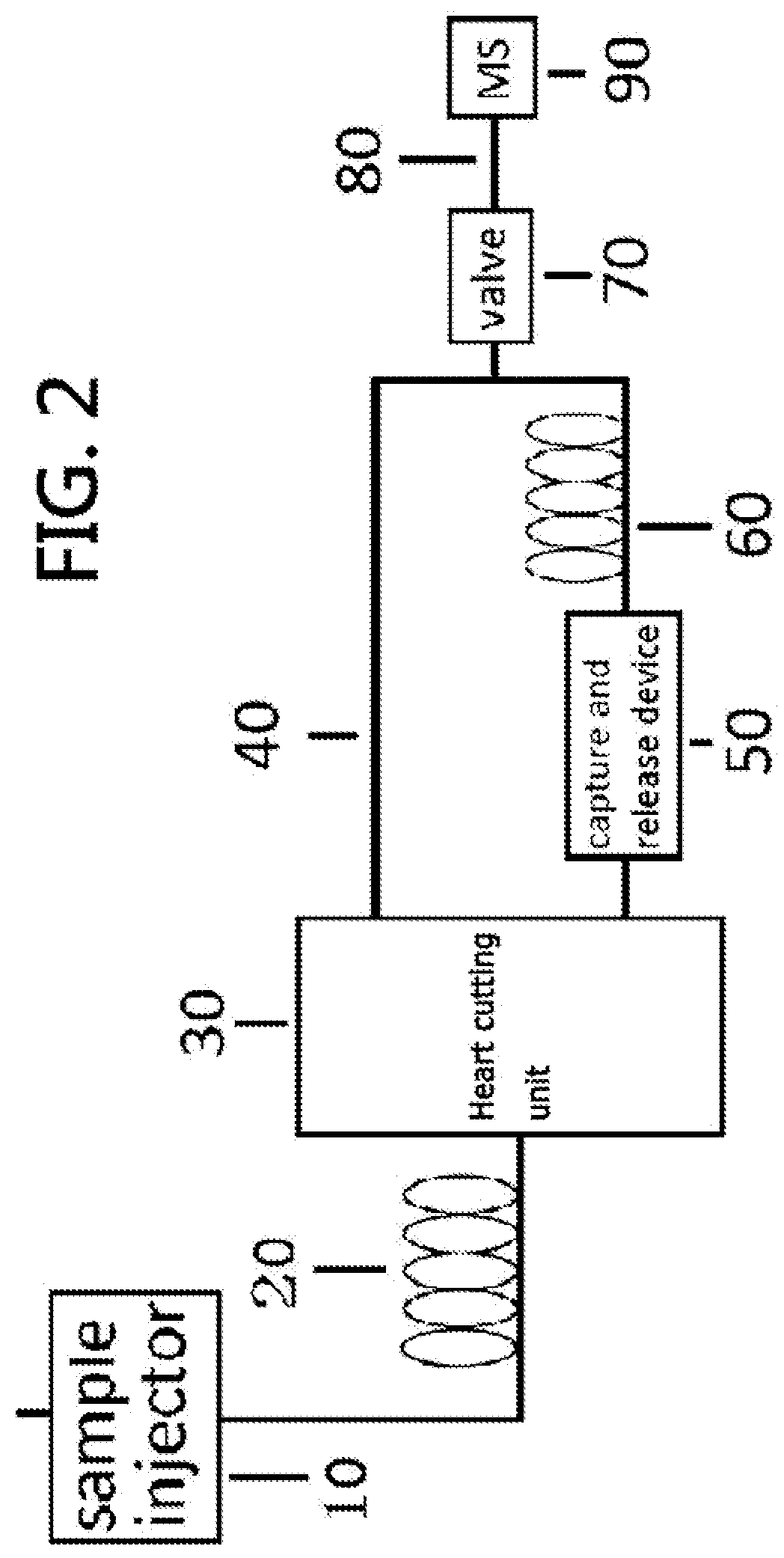

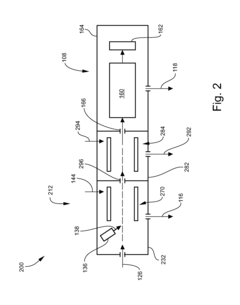

Gas chromatography-mass spectrometry method and gas chromatography-mass spectrometry apparatus therefor having a capture and release device

Patent · Active · US9228984B2

Innovation

- A capture and release device with a switching valve is integrated into the GC-MS system. Cooling and heating units capture eluted compounds and then release them, and rotating the switching valve connects different capillary columns to the mass spectrometer, enabling simultaneous analysis of both simple and complex compounds.

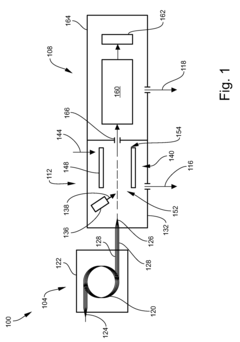

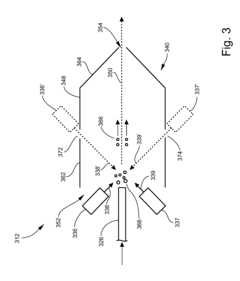

Multi-capillary column and high-capacity ionization interface for GC-MS

Patent · Inactive · US8841611B2

Innovation

- A GC-MS system is designed with an ionization interface that includes an ionization device and an ion guide with guide electrodes configured to converge ions radially, allowing for efficient ion beam confinement and transmission to a mass analyzer, while maintaining an intermediate pressure between the gas chromatograph and mass spectrometer, thereby enhancing ion collection and transfer efficiency.

Cost-Benefit Analysis of Automated GC-MS Systems

The implementation of automated GC-MS systems represents a significant capital investment that requires thorough financial analysis. Initial acquisition costs for automated GC-MS platforms typically range from $150,000 to $500,000, depending on system specifications, autosampler capacity, and additional features such as multi-column configurations or advanced detection capabilities. Beyond hardware expenses, organizations must consider installation costs, facility modifications, and staff training, which can add 15-25% to the initial investment.

Operating costs present a more nuanced picture when comparing manual versus automated approaches. Labor costs decrease substantially with automation, with studies indicating reductions of 60-75% in technician hours for sample preparation and analysis. A typical laboratory processing 50 samples daily can reallocate approximately 20-25 hours of technician time weekly to higher-value activities, representing annual labor savings of $40,000-$60,000 at average industry compensation rates.
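The labor figures above can be cross-checked by dividing the annual saving by the hours freed. The sketch assumes 52 working weeks per year (the text does not state one) and pairs the low and high ends of each quoted range; the function name is illustrative.

```python
def implied_hourly_rate(annual_savings: float, hours_per_week: float,
                        weeks_per_year: int = 52) -> float:
    """Cost per technician hour implied by an annual saving and weekly hours freed."""
    return annual_savings / (hours_per_week * weeks_per_year)


# Pair the low and high ends of the ranges quoted in the text.
low = implied_hourly_rate(40_000, 20)
high = implied_hourly_rate(60_000, 25)
print(f"Implied technician cost: ${low:.2f}-${high:.2f} per hour")
# Implied technician cost: $38.46-$46.15 per hour
```

A laboratory can substitute its own loaded hourly cost to see whether the quoted savings range applies to it.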

Consumable usage patterns also shift with automation: optimized delivery systems can reduce solvent consumption by 30-40%. However, specialized consumables for automated platforms may carry premium pricing, partially offsetting these savings. Maintenance contracts for automated systems typically cost 8-12% of the initial system price annually, compared to 5-7% for manual systems, reflecting the increased complexity of robotic components.

The throughput advantages translate directly to financial benefits. Laboratories implementing automated GC-MS systems report capacity increases of 200-300% without corresponding staffing increases. This enhanced productivity enables either cost reduction through staff optimization or revenue growth through increased testing capacity. The average payback period for mid-range automated systems is 18 to 36 months, depending on utilization rates and laboratory cost structures.
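Simple payback is the upfront cost divided by the yearly net benefit. The sketch below uses hypothetical inputs picked from within the ranges quoted in this section, plus an assumed $150,000 yearly margin from extra testing capacity that the text does not supply; it ignores discounting and the service cost of any manual system being replaced.

```python
import math


def simple_payback_months(system_price: float, install_overhead: float,
                          annual_labor_savings: float, annual_added_margin: float,
                          maintenance_rate: float) -> float:
    """Undiscounted payback period in months.

    install_overhead: installation, facility and training costs as a fraction of price
    annual_added_margin: yearly margin from extra testing capacity (0 if none)
    maintenance_rate: yearly service contract as a fraction of price
    """
    upfront = system_price * (1.0 + install_overhead)
    annual_net = (annual_labor_savings + annual_added_margin
                  - system_price * maintenance_rate)
    if annual_net <= 0:
        return math.inf  # the system never pays back on these assumptions
    return 12.0 * upfront / annual_net


# Hypothetical mid-range system: $300,000 price, 20% overhead, 10% service contract.
with_capacity = simple_payback_months(300_000, 0.20, 50_000, 150_000, 0.10)
labor_only = simple_payback_months(300_000, 0.20, 50_000, 0, 0.10)
print(f"{with_capacity:.1f} months vs {labor_only:.0f} months")  # 25.4 months vs 216 months
```

Under these assumptions, labor savings alone take about 18 years to recover the investment, while monetizing the extra capacity brings payback to roughly two years, illustrating how strongly payback depends on utilization.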

Quality improvements yield additional financial benefits through reduced repeat testing. Laboratories report 40-60% fewer sample preparation errors after implementing automation, which translates to an approximately 5-8% reduction in overall operating costs. For regulated industries, improved documentation and traceability reduce compliance risks and the associated potential penalties.

Long-term ROI calculations must also account for system lifespan. Although automated systems typically have higher maintenance requirements, their modular design often allows component upgrades rather than complete replacement, extending functional lifespan to 8-10 years compared with 5-7 years for traditional systems. For properly maintained systems, this longer service life significantly improves lifetime value.

Operating costs present a more nuanced picture when comparing manual versus automated approaches. Labor costs decrease substantially with automation, with studies indicating reductions of 60-75% in technician hours for sample preparation and analysis. A typical laboratory processing 50 samples daily can reallocate approximately 20-25 hours of technician time weekly to higher-value activities, representing annual labor savings of $40,000-$60,000 at average industry compensation rates.

Consumable usage patterns shift with automation, with potential reductions in solvent consumption by 30-40% through optimized delivery systems. However, specialized consumables for automated platforms may carry premium pricing, partially offsetting these savings. Maintenance contracts for automated systems typically cost 8-12% of the initial system price annually, compared to 5-7% for manual systems, reflecting the increased complexity of robotic components.

The throughput advantages translate directly to financial benefits. Laboratories implementing automated GC-MS systems report capacity increases of 200-300% without corresponding staffing increases. This enhanced productivity enables either cost reduction through staff optimization or revenue growth through increased testing capacity. The average payback period for mid-range automated systems ranges from 18-36 months, depending on utilization rates and laboratory cost structures.

Quality improvements yield additional financial benefits through reduced repeat testing. Laboratories report 40-60% fewer sample preparation errors after automation implementation, translating to approximately 5-8% reduction in overall operating costs. For regulated industries, improved documentation and traceability reduce compliance risks and associated potential penalties.

Quality Control and Validation Protocols for Automated GC-MS

Quality control and validation protocols are essential components of any automated GC-MS system designed to maximize throughput. These protocols ensure that the high-speed analytical processes maintain accuracy, precision, and reliability throughout extended operational periods.

The foundation of effective quality control begins with system suitability testing (SST), which should be performed at the start of each analytical sequence. This includes verification of retention time stability, peak area reproducibility, and mass spectral quality. For automated systems handling large sample batches, implementing dynamic SST that periodically inserts control samples throughout the sequence provides continuous monitoring of instrument performance.
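The run layout and the start-of-sequence checks described above can be expressed compactly. This is a sketch, not a vendor sequence format: `build_sequence` and `sst_passes` are hypothetical helpers, the 0.05 min retention-time window and 5% area RSD limit are placeholder acceptance criteria, and the mass-spectral quality check is omitted.

```python
from statistics import mean, stdev


def build_sequence(samples, qc_interval=10):
    """Open with an SST injection, insert a QC control after every
    `qc_interval` samples, and close the run with a final QC."""
    if qc_interval < 1:
        raise ValueError("qc_interval must be at least 1")
    sequence = ["SST"]
    for position, sample in enumerate(samples, start=1):
        sequence.append(sample)
        if position % qc_interval == 0:
            sequence.append("QC")
    if sequence[-1] != "QC":
        sequence.append("QC")
    return sequence


def sst_passes(retention_times, peak_areas, rt_window_min=0.05, max_area_rsd_pct=5.0):
    """Check retention-time stability and peak-area reproducibility of
    replicate SST injections (needs at least two injections)."""
    rt_center = mean(retention_times)
    rt_ok = all(abs(rt - rt_center) <= rt_window_min for rt in retention_times)
    area_rsd = 100 * stdev(peak_areas) / mean(peak_areas)
    return rt_ok and area_rsd <= max_area_rsd_pct


print(build_sequence([f"S{i}" for i in range(1, 6)], qc_interval=2))
# ['SST', 'S1', 'S2', 'QC', 'S3', 'S4', 'QC', 'S5', 'QC']
```

In practice the same `sst_passes` check would be re-run on the interleaved QC injections, so that a failing QC halts the sequence before more samples are consumed.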

Calibration verification represents another critical aspect of quality assurance. Automated systems should incorporate multi-level calibration curves with internal standards to compensate for potential drift during extended runs. Statistical evaluation of calibration data, including correlation coefficients and residual analysis, should be automated to flag deviations that exceed predetermined acceptance criteria.
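The automated evaluation step can be sketched as an ordinary least-squares fit of the analyte-to-internal-standard response ratio against concentration. The sketch reports the coefficient of determination (r²) and back-calculates each level; `check_calibration` is a hypothetical helper, and the 0.995 r² floor and ±15% deviation limit are illustrative placeholders that a laboratory would set in its own SOP.

```python
def check_calibration(conc, analyte_area, istd_area, min_r2=0.995, max_dev_pct=15.0):
    """Fit response ratio (analyte / internal standard) vs concentration by OLS,
    then flag levels whose back-calculated concentration deviates from nominal
    by more than max_dev_pct."""
    ratios = [a / i for a, i in zip(analyte_area, istd_area)]
    n = len(conc)
    mx, my = sum(conc) / n, sum(ratios) / n
    sxx = sum((x - mx) ** 2 for x in conc)
    sxy = sum((x - mx) * (y - my) for x, y in zip(conc, ratios))
    slope = sxy / sxx
    intercept = my - slope * mx
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(conc, ratios))
    ss_tot = sum((y - my) ** 2 for y in ratios)
    r2 = 1 - ss_res / ss_tot
    flagged = []
    for i, (x, y) in enumerate(zip(conc, ratios)):
        back_calc = (y - intercept) / slope  # concentration predicted from the fit
        if abs(100 * (back_calc - x) / x) > max_dev_pct:
            flagged.append(i)
    return {"slope": slope, "intercept": intercept, "r2": r2,
            "flagged": flagged, "passes": r2 >= min_r2 and not flagged}


# Hypothetical five-level curve with a constant internal-standard response.
levels = [1, 2, 5, 10, 20]
good = check_calibration(levels, [100, 200, 500, 1000, 2000], [1000] * 5)
bad = check_calibration(levels, [100, 200, 700, 1000, 2000], [1000] * 5)
print(good["passes"], bad["passes"], bad["flagged"])
```

One high point at the 5-unit level drags the fit enough that the low levels also fail back-calculation, which is the kind of pattern residual analysis is meant to expose even when r² alone looks acceptable.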

Blank analysis serves as a crucial contamination control measure. In high-throughput environments, strategic placement of blanks after high-concentration samples helps detect potential carryover issues. Automated data processing algorithms can be programmed to identify and flag samples requiring reanalysis based on blank contamination thresholds.
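Both halves of this practice, placing blanks and acting on their results, fit in a few lines. A minimal sketch under assumed rules: expected concentrations are taken to come from a screening run, and any sample injected after a blank that exceeds the contamination limit, up to the next clean blank, is flagged. The function names, the `BLANK_after_` naming, and the limits are all hypothetical.

```python
def place_blanks(planned, high_limit):
    """Insert a solvent blank after every sample whose expected concentration
    (e.g. from a screening run) exceeds high_limit."""
    sequence = []
    for name, expected_conc in planned:
        sequence.append(name)
        if expected_conc > high_limit:
            sequence.append(f"BLANK_after_{name}")
    return sequence


def flag_for_reanalysis(results, blank_limit):
    """Walk the run in injection order. Once a blank exceeds blank_limit,
    flag every following sample until a clean blank is observed."""
    flagged = []
    contaminated = False
    for injection, kind, response in results:
        if kind == "blank":
            contaminated = response > blank_limit
        elif contaminated:
            flagged.append(injection)
    return flagged


run = [("S1", "sample", 5000), ("B1", "blank", 10), ("S2", "sample", 300),
       ("B2", "blank", 250), ("S3", "sample", 400), ("S4", "sample", 100),
       ("B3", "blank", 5), ("S5", "sample", 50)]
print(flag_for_reanalysis(run, blank_limit=100))  # ['S3', 'S4']
```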

Method validation for automated GC-MS systems must specifically address throughput-related parameters. This includes robustness testing under accelerated conditions, such as shortened run times and rapid temperature programming. Validation protocols should evaluate the impact of increased sample throughput on detection limits, quantification accuracy, and chromatographic resolution.
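Two of the parameters named above can be compared directly between a standard and an accelerated method. The sketch uses the ICH Q2-style estimates LOD = 3.3σ/S and LOQ = 10σ/S (S is the calibration slope, σ the residual standard deviation of the fit) and the half-height resolution formula Rs = 1.18(t₂ − t₁)/(w₀.₅,₁ + w₀.₅,₂). The calibration responses, retention times, and peak widths are hypothetical.

```python
import math


def lod_loq(conc, response):
    """LOD = 3.3*sigma/S and LOQ = 10*sigma/S from an OLS calibration,
    with sigma the residual standard deviation (needs at least 3 points)."""
    n = len(conc)
    mx, my = sum(conc) / n, sum(response) / n
    sxx = sum((x - mx) ** 2 for x in conc)
    slope = sum((x - mx) * (y - my) for x, y in zip(conc, response)) / sxx
    intercept = my - slope * mx
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(conc, response))
    sigma = math.sqrt(ss_res / (n - 2))
    return 3.3 * sigma / slope, 10 * sigma / slope


def resolution(t1, t2, w_half1, w_half2):
    """Resolution from retention times and peak widths at half height."""
    return 1.18 * (t2 - t1) / (w_half1 + w_half2)


# Hypothetical data: the same curve acquired with a standard and a shortened program.
levels = [1, 2, 5, 10, 20]
std_lod, std_loq = lod_loq(levels, [0.101, 0.198, 0.503, 0.995, 2.004])
fast_lod, fast_loq = lod_loq(levels, [0.095, 0.21, 0.49, 1.02, 1.99])
print(f"LOD standard {std_lod:.3f}, fast {fast_lod:.3f}")
# Rs >= 1.5 is the usual benchmark for baseline separation of a critical pair.
print(f"Rs standard {resolution(5.00, 5.20, 0.05, 0.05):.2f}, "
      f"fast {resolution(2.50, 2.58, 0.04, 0.04):.2f}")
```

In this made-up case the faster program roughly quadruples the LOD and drops the critical-pair resolution below 1.5, exactly the kind of trade-off a throughput-focused validation should make visible before the method is adopted.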

Quality control charts represent valuable tools for monitoring long-term system performance. Implementing Levey-Jennings or CUSUM charts for tracking retention times, peak areas, and signal-to-noise ratios helps identify gradual system degradation before it impacts analytical results. These charts should be integrated into the automated workflow with alert mechanisms for out-of-specification trends.
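The difference between the two chart types is easiest to see on a slow drift. The sketch applies the Levey-Jennings 1-3s rule and a standard tabular CUSUM with slack k = 0.5σ and decision limit h = 5σ (common textbook defaults, assumed here) to a hypothetical retention-time record. The function names are illustrative, and the CUSUM is not reset after an alarm.

```python
def levey_jennings_1_3s(values, target, sd):
    """Indices of points outside target +/- 3 SD (Westgard 1-3s rule)."""
    return [i for i, x in enumerate(values) if abs(x - target) > 3 * sd]


def cusum_alarms(values, target, sd, k_sd=0.5, h_sd=5.0):
    """Tabular CUSUM: accumulate deviations beyond a slack of k = k_sd*sd and
    report indices where either one-sided sum exceeds h = h_sd*sd."""
    k, h = k_sd * sd, h_sd * sd
    upper = lower = 0.0
    alarms = []
    for i, x in enumerate(values):
        upper = max(0.0, upper + (x - target - k))   # upward drift
        lower = max(0.0, lower + (target - k - x))   # downward drift
        if upper > h or lower > h:
            alarms.append(i)
    return alarms


# Hypothetical retention-time record (min) drifting slowly upward.
rt = [5.00, 5.01, 4.99, 5.00, 5.012, 5.015, 5.018, 5.02, 5.022, 5.025]
print(levey_jennings_1_3s(rt, target=5.00, sd=0.01))  # []
print(cusum_alarms(rt, target=5.00, sd=0.01))          # [8, 9]
```

No single point breaches the 3 SD limit, yet the accumulated drift triggers the CUSUM at the ninth injection, which is why the two charts are usually run together.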

Inter-laboratory proficiency testing provides external validation of automated methods. Participation in collaborative studies using standardized samples allows results to be compared across laboratories and instruments, confirming that throughput optimization has not compromised analytical quality. Documenting these validation efforts, including detailed standard operating procedures and training records, supports regulatory compliance without sacrificing sample processing efficiency.
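Proficiency-test results are commonly scored as z-scores, z = (x − x_pt)/σ_pt, and interpreted with the |z| bands described in ISO 13528 and ISO/IEC 17043. The sketch below applies that convention; the function names, the assigned value of 10.0 mg/kg, and σ_pt = 0.25 mg/kg are hypothetical.

```python
def z_score(result, assigned_value, sigma_pt):
    """Proficiency-test z-score: (x - assigned value) / SD for proficiency assessment."""
    return (result - assigned_value) / sigma_pt


def classify(z):
    """Conventional interpretation of |z| in proficiency-testing schemes."""
    if abs(z) <= 2.0:
        return "satisfactory"
    if abs(z) < 3.0:
        return "questionable"
    return "unsatisfactory"


# Hypothetical round: assigned value 10.0 mg/kg, sigma_pt 0.25 mg/kg.
for lab_result in (10.5, 10.6, 9.2):
    z = z_score(lab_result, 10.0, 0.25)
    print(f"{lab_result}: z = {z:+.1f} -> {classify(z)}")
```

Tracking these z-scores across rounds, before and after an automation change, gives a simple external check that throughput gains have not shifted accuracy.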
