mmWave Role in Ultra-Low Latency Communications Systems

SEP 22, 2025 · 9 MIN READ

mmWave Technology Background and Objectives

Millimeter wave (mmWave) technology has evolved significantly over the past decades, moving from primarily military and scientific applications to a cornerstone of next-generation communication systems. The mmWave band nominally spans 30 GHz to 300 GHz (wavelengths of 10 mm down to 1 mm), although 5G commonly applies the term to bands from about 24 GHz upward. It offers substantially wider bandwidth than the traditional sub-6 GHz frequencies. That bandwidth supports higher data rates and, through wider subcarrier spacing, shorter transmission slots, which makes mmWave particularly valuable for ultra-low latency communications.
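The name follows directly from the wavelength, λ = c/f. The short Python sketch below (function names are illustrative) computes the free-space wavelength at a few frequencies and a rough edge length for a square 8×8 array with half-wavelength element spacing, one reason compact mmWave phased arrays fit into handsets and small cells. The footprint estimate ignores element size and packaging.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def wavelength_mm(freq_hz: float) -> float:
    """Free-space wavelength in millimetres."""
    return C / freq_hz * 1e3


def array_side_mm(freq_hz: float, elements_per_side: int) -> float:
    """Rough edge length of a square array with half-wavelength element spacing."""
    return elements_per_side * wavelength_mm(freq_hz) / 2


if __name__ == "__main__":
    for f_ghz in (3.5, 28, 60, 300):
        f = f_ghz * 1e9
        print(f"{f_ghz:>6} GHz: wavelength {wavelength_mm(f):7.2f} mm, "
              f"8x8 array side ~ {array_side_mm(f, 8):6.1f} mm")
```

At 3.5 GHz the same 64-element array would be roughly 34 cm on a side, versus about 4 cm at 28 GHz.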

The historical development of mmWave technology can be traced back to the 1940s with early radar applications. However, its commercial viability for communications remained limited until recent advancements in semiconductor technology, antenna design, and signal processing algorithms overcame previous implementation barriers. The turning point came in the early 2010s when researchers demonstrated practical mmWave communication systems with acceptable power consumption and form factors.

Current technological evolution is driven by the exponential growth in data traffic and the increasing demand for near-instantaneous response times in applications such as autonomous vehicles, industrial automation, telemedicine, and augmented reality. These use cases require not just high throughput but also ultra-low latency, often below 1 millisecond, which conventional communication technologies struggle to deliver consistently.

The primary technical objective for mmWave in ultra-low latency communications is to leverage its inherent bandwidth advantages while addressing its propagation challenges. Specifically, the technology aims to achieve end-to-end latencies below 1 millisecond, reliability rates exceeding 99.999%, and support for device densities of up to 1 million devices per square kilometer – all while maintaining energy efficiency for battery-powered devices.
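The sub-millisecond target is tied to air-interface timing. In 5G NR the subcarrier spacing is 15 kHz × 2^μ and each slot of 14 OFDM symbols (normal cyclic prefix) lasts 1 ms / 2^μ; mmWave (FR2) data channels use μ = 2 or 3. The sketch below tabulates these durations, plus a 2-symbol mini-slot, one of the short allocations NR supports for low-latency traffic. It covers only transmission-time granularity, not processing, queuing, or transport delays, which also count toward end-to-end latency.

```python
def nr_timing(mu: int, mini_slot_symbols: int = 2) -> dict:
    """Slot and symbol timing for 5G NR numerology mu (normal cyclic prefix)."""
    scs_khz = 15 * 2 ** mu            # subcarrier spacing
    slot_ms = 1.0 / 2 ** mu           # 2**mu slots per 1 ms subframe
    symbol_us = slot_ms * 1000 / 14   # average OFDM symbol length incl. CP
    return {
        "mu": mu,
        "scs_khz": scs_khz,
        "slot_ms": slot_ms,
        "symbol_us": symbol_us,
        "mini_slot_us": mini_slot_symbols * symbol_us,
    }


if __name__ == "__main__":
    # mu 0-2 are usable in FR1 (sub-6 GHz); mu 2-3 are the FR2 (mmWave) data numerologies.
    for mu in range(4):
        t = nr_timing(mu)
        print(f"mu={t['mu']}: {t['scs_khz']:>3} kHz SCS, slot {t['slot_ms']:.4f} ms, "
              f"symbol {t['symbol_us']:.2f} us, 2-symbol mini-slot {t['mini_slot_us']:.2f} us")
```

At 120 kHz spacing a slot lasts 0.125 ms, so several transmission opportunities fit inside a 1 ms budget; at 15 kHz a single slot consumes the whole millisecond.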

Another critical objective is overcoming mmWave's physical limitations, particularly its susceptibility to blockage, atmospheric absorption, and limited propagation distance. This necessitates the development of advanced beamforming techniques, intelligent reflective surfaces, and dense network architectures that can maintain connectivity even under challenging conditions.

The integration of mmWave with other emerging technologies represents another important goal. Combining mmWave with edge computing, artificial intelligence, and network slicing can further reduce latency by optimizing resource allocation and traffic management. Similarly, hybrid approaches that intelligently switch between mmWave and sub-6 GHz frequencies based on environmental conditions and application requirements are being explored to ensure consistent performance.

Looking forward, the technology roadmap for mmWave in ultra-low latency communications includes the standardization of frequencies above 100 GHz, the development of more efficient modulation schemes, and the creation of intelligent, self-organizing network architectures that can dynamically adapt to changing conditions and requirements.

Market Demand for Ultra-Low Latency Communications

Ultra-low latency communications have emerged as a critical requirement across multiple industries, driven by the evolution of applications demanding real-time responsiveness. The market for these systems is experiencing exponential growth, with global valuation projected to reach $35.8 billion by 2026, growing at a CAGR of 22.3% from 2021.

The automotive sector represents one of the largest market segments, with autonomous vehicles requiring latency under 10ms for critical safety functions. Vehicle-to-everything (V2X) communications demand ultra-reliable connections with latencies below 5ms to enable collision avoidance systems and coordinated driving. Industry analysts had forecast that by 2025, over 70% of new premium vehicles would incorporate some form of V2X technology requiring ultra-low latency communications.

Industrial automation constitutes another significant market driver, with Industry 4.0 implementations requiring deterministic networks with latencies below 1ms for precision robotics and manufacturing processes. The smart factory market was projected to grow to $244.8 billion by 2024, with ultra-low latency communications serving as a foundational technology enabler.

Healthcare applications represent an emerging but rapidly growing segment, particularly in telesurgery and remote patient monitoring. These applications require latencies below 2ms to ensure proper tactile feedback and real-time response. The telemedicine market, accelerated by recent global events, is projected to reach $185.6 billion by 2026, with ultra-low latency solutions becoming increasingly critical components.

Gaming and entertainment industries are driving consumer-facing demand, with cloud gaming services requiring latencies below 20ms to provide responsive gameplay experiences. The global cloud gaming market is expected to reach $7.24 billion by 2027, with latency performance being a key differentiator among service providers.

Financial services, particularly high-frequency trading, demand the absolute lowest latencies possible, often measured in microseconds rather than milliseconds. A single millisecond advantage can translate to millions in additional trading profits, creating a premium market segment willing to invest substantially in ultra-low latency infrastructure.

The deployment of 5G networks is accelerating market growth, with network slicing capabilities allowing operators to guarantee ultra-low latency performance for specific applications. Telecommunications providers are increasingly offering specialized ultra-low latency services with premium pricing models, creating new revenue streams in an otherwise commoditized connectivity market.

mmWave Technical Challenges and Limitations

Despite the promising capabilities of millimeter wave (mmWave) technology in ultra-low latency communications systems, several significant technical challenges and limitations must be addressed. The high-frequency nature of mmWave signals (typically 30-300 GHz) introduces fundamental physical constraints that impact system performance and reliability.

Signal propagation presents a primary challenge. Free-space path loss between fixed-gain antennas grows with the square of frequency (20 dB per tenfold increase), so at a given distance mmWave links suffer roughly 20-40 dB more loss than traditional sub-6 GHz bands, significantly limiting communication range. mmWave signals also penetrate common materials such as concrete walls, metalized glass, and even human bodies poorly, which largely restricts reliable links to line-of-sight (LOS) paths or strong reflections.
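The quadratic frequency dependence comes from the Friis free-space formula, FSPL = 20·log10(4π·d·f / c), which assumes isotropic (fixed-gain) antennas. The minimal sketch below compares two bands at the same distance; the function names are illustrative.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def fspl_db(freq_hz: float, dist_m: float) -> float:
    """Friis free-space path loss between isotropic antennas, in dB."""
    return 20 * math.log10(4 * math.pi * dist_m * freq_hz / C)


def extra_loss_db(f_high_hz: float, f_low_hz: float, dist_m: float = 100.0) -> float:
    """Additional free-space loss of the higher band at the same distance."""
    return fspl_db(f_high_hz, dist_m) - fspl_db(f_low_hz, dist_m)


if __name__ == "__main__":
    for f_hi, f_lo in [(28e9, 3.5e9), (60e9, 2.4e9)]:
        print(f"{f_hi / 1e9:.0f} GHz vs {f_lo / 1e9:.1f} GHz: "
              f"{extra_loss_db(f_hi, f_lo):.1f} dB extra at 100 m")
```

Because distance cancels, the difference reduces to 20·log10(f_high/f_low): about 18 dB for 28 GHz versus 3.5 GHz and about 28 dB for 60 GHz versus 2.4 GHz. The penalty assumes fixed antenna gain; an array with the same physical aperture provides more gain at higher frequency, which is why beamforming is central to mmWave links.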

Atmospheric absorption compounds these issues: oxygen causes an absorption peak around 60 GHz and water vapor another around 183 GHz. Rain attenuation is particularly problematic, with heavy rainfall potentially degrading signals by up to 30 dB/km at 73 GHz.
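Gaseous and rain losses add roughly linearly with distance on top of free-space loss. The sketch below adds per-kilometre attenuation terms to the Friis loss. The 30 dB/km rain value is the heavy-rain figure quoted above for 73 GHz; the roughly 15 dB/km oxygen value near 60 GHz is a commonly cited sea-level figure and should be treated as an assumption. A real design would take specific attenuation from ITU-R P.676 (gases) and P.838 (rain).

```python
import math

C = 299_792_458.0  # speed of light, m/s


def fspl_db(freq_hz: float, dist_m: float) -> float:
    """Friis free-space path loss between isotropic antennas, in dB."""
    return 20 * math.log10(4 * math.pi * dist_m * freq_hz / C)


def total_loss_db(freq_hz: float, dist_m: float,
                  gas_db_per_km: float = 0.0, rain_db_per_km: float = 0.0) -> float:
    """Free-space loss plus distance-proportional gaseous and rain attenuation."""
    d_km = dist_m / 1000.0
    return fspl_db(freq_hz, dist_m) + (gas_db_per_km + rain_db_per_km) * d_km


if __name__ == "__main__":
    d = 200.0  # hypothetical small-cell link length, m
    clear = total_loss_db(73e9, d)
    rainy = total_loss_db(73e9, d, rain_db_per_km=30.0)  # heavy-rain figure from the text
    print(f"73 GHz, {d:.0f} m: clear {clear:.1f} dB, heavy rain {rainy:.1f} dB "
          f"(+{rainy - clear:.1f} dB)")
    o2 = total_loss_db(60e9, d, gas_db_per_km=15.0) - fspl_db(60e9, d)  # assumed O2 value
    print(f"60 GHz oxygen absorption over {d:.0f} m: +{o2:.1f} dB")
```

Over a 200 m link the rain term adds 6 dB, but over 1 km it would add 30 dB, which illustrates why mmWave cells are kept short.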

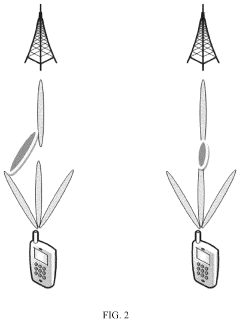

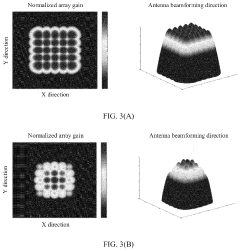

The narrow beamwidth characteristic of mmWave communications introduces beam management complexities. While enabling spatial multiplexing and interference reduction, it necessitates sophisticated beam alignment, tracking, and switching mechanisms. Mobile scenarios present particular difficulties as maintaining beam alignment during user movement requires complex algorithms and frequent recalibration.
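Beam alignment is often done by sweeping a codebook of fixed beams and keeping the strongest. The sketch below assumes a half-wavelength uniform linear array (ULA) and a DFT codebook, both common textbook choices rather than any specific product's scheme, and returns the best beam for a single line-of-sight path. The number of measurements equals the codebook size, so an exhaustive search over transmit and receive codebooks costs N_tx × N_rx measurements.

```python
import cmath
import math


def steering(n_ant: int, sin_theta: float) -> list[complex]:
    """Unit-norm ULA response for half-wavelength element spacing."""
    return [cmath.exp(1j * math.pi * n * sin_theta) / math.sqrt(n_ant)
            for n in range(n_ant)]


def dft_codebook(n_ant: int) -> list[tuple[float, list[complex]]]:
    """Beam k points where sin(theta) = -1 + 2k/N (a DFT codebook)."""
    return [(-1 + 2 * k / n_ant, steering(n_ant, -1 + 2 * k / n_ant))
            for k in range(n_ant)]


def beam_gain(w: list[complex], a: list[complex]) -> float:
    """Normalized beamforming gain |w^H a|^2 (1.0 means perfect alignment)."""
    return abs(sum(wi.conjugate() * ai for wi, ai in zip(w, a))) ** 2


def exhaustive_sweep(n_ant: int, true_angle_deg: float):
    """Measure every codebook beam against one LOS path and return the best one."""
    a = steering(n_ant, math.sin(math.radians(true_angle_deg)))
    book = dft_codebook(n_ant)
    gains = [beam_gain(w, a) for _, w in book]
    best = max(range(n_ant), key=gains.__getitem__)
    best_angle = math.degrees(math.asin(book[best][0]))
    return best, best_angle, gains[best], len(gains)


if __name__ == "__main__":
    best, angle, gain, n_meas = exhaustive_sweep(16, 20.0)
    print(f"beam {best} (~{angle:.1f} deg), gain {gain:.2f}, {n_meas} measurements")
```

For a path at 20°, the closest 16-element DFT beam points at about 22° and captures roughly 79% of the ideal gain. With 16-element arrays at both ends, exhaustive pairing already takes 256 measurements; hierarchical (wide-then-narrow) searches and beam tracking aim to cut that overhead.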

Hardware limitations further constrain mmWave implementation. High-frequency operation demands specialized components with tight manufacturing tolerances. Phase noise, I/Q imbalance, and nonlinearities in RF components become more pronounced at mmWave frequencies. Power amplifier efficiency decreases significantly, creating thermal management challenges and increasing power consumption—a critical concern for battery-powered devices.

Channel estimation and modeling present additional challenges. The directional nature of mmWave propagation and sparse multipath environment require new channel estimation techniques beyond those used in conventional systems. Traditional channel models prove inadequate for accurately representing mmWave propagation characteristics.
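Because a mmWave channel typically has only a few dominant paths, channel estimation is often posed as sparse recovery over a grid of candidate angles. The sketch below uses orthogonal matching pursuit (OMP), one common compressed-sensing choice among several, to recover two path angles and gains from fewer measurements than antennas. To keep it deterministic and noise-free, the measurement matrix simply keeps the first 16 of 32 antenna outputs, a hypothetical simplification; a hybrid-beamforming receiver would use analog combining vectors instead, and real channels add noise and off-grid angles.

```python
import numpy as np


def ula_dictionary(n_ant: int, grid: int) -> np.ndarray:
    """Columns: unit-norm half-wavelength ULA steering vectors on a uniform sin(theta) grid."""
    n = np.arange(n_ant)[:, None]
    sin_grid = -1 + 2 * np.arange(grid) / grid
    return np.exp(1j * np.pi * n * sin_grid[None, :]) / np.sqrt(n_ant)


def omp(y: np.ndarray, phi: np.ndarray, n_paths: int):
    """Orthogonal matching pursuit: greedily pick the columns of phi that best explain y."""
    col_norms = np.linalg.norm(phi, axis=0)
    residual = y.copy()
    support: list[int] = []
    coef = np.zeros(0, dtype=complex)
    for _ in range(n_paths):
        corr = np.abs(phi.conj().T @ residual) / col_norms
        if support:
            corr[support] = 0.0  # never re-select an atom
        support.append(int(np.argmax(corr)))
        sub = phi[:, support]
        coef, *_ = np.linalg.lstsq(sub, y, rcond=None)  # re-fit all chosen paths jointly
        residual = y - sub @ coef
    return support, coef


def demo():
    n_ant, grid, n_meas = 32, 64, 16
    dictionary = ula_dictionary(n_ant, grid)
    true_idx = [10, 40]                                   # hypothetical path angles (grid indices)
    true_gains = np.array([1.0, 0.5 * np.exp(1j * 0.7)])  # hypothetical complex path gains
    h = dictionary[:, true_idx] @ true_gains              # channel seen by the full array
    phi = np.eye(n_ant)[:n_meas]                          # keep the first 16 antenna outputs
    y = phi @ h                                           # noiseless pilot measurements
    support, coef = omp(y, phi @ dictionary, n_paths=len(true_idx))
    return support, coef, true_idx, true_gains


if __name__ == "__main__":
    support, coef, _, _ = demo()
    print("recovered grid indices:", support)
    print("recovered gains:", np.round(coef, 3))
```

Exploiting sparsity lets the estimator resolve both paths from half as many measurements as antennas; the grid resolution and measurement design then set the achievable accuracy.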

From a system perspective, the integration of mmWave with existing network infrastructure demands complex heterogeneous network architectures. The limited coverage necessitates dense deployment of access points, raising significant backhaul challenges and increasing deployment costs. Furthermore, maintaining ultra-low latency requirements while handling beam management overhead presents a fundamental trade-off that system designers must carefully navigate.
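The beam-management trade-off can be put in rough numbers. The sketch below estimates the fraction of air time spent on periodic beam sweeps and the worst-case wait until the next sweep completes. The example values (64 beams of 4 OFDM symbols each at 120 kHz subcarrier spacing, repeated every 20 ms) are loosely modelled on 5G NR SS-burst sweeping in FR2, but the calculation ignores the gaps and exact symbol positions the standard defines, so treat it as an order-of-magnitude estimate.

```python
from dataclasses import dataclass


@dataclass
class SweepConfig:
    n_beams: int            # beams swept per burst
    symbols_per_beam: int   # OFDM symbols spent on each beam
    symbol_s: float         # OFDM symbol duration, s
    period_s: float         # sweep repetition period, s


def sweep_cost(cfg: SweepConfig) -> tuple[float, float, float]:
    """Return (sweep duration s, air-time overhead fraction, worst-case wait s)."""
    sweep_s = cfg.n_beams * cfg.symbols_per_beam * cfg.symbol_s
    overhead = sweep_s / cfg.period_s
    # Worst case in this simple model: a sweep was just missed, so the device waits
    # one full period for the next burst and then for that burst to finish.
    worst_wait_s = cfg.period_s + sweep_s
    return sweep_s, overhead, worst_wait_s


if __name__ == "__main__":
    symbol_120khz = 1e-3 / (14 * 8)  # 120 kHz SCS: 8 slots of 14 symbols per ms
    for beams in (64, 16):
        sweep, frac, wait = sweep_cost(SweepConfig(beams, 4, symbol_120khz, 20e-3))
        print(f"{beams:>2} beams: sweep {sweep * 1e3:.2f} ms, overhead {frac:.1%}, "
              f"worst-case wait {wait * 1e3:.1f} ms")
```

Even in this crude model, a 64-beam sweep occupies about 11% of air time, and the worst-case wait far exceeds a 1 ms latency budget, which illustrates why faster beam tracking or fallback links, such as the hybrid mmWave/sub-6 GHz approaches described earlier, matter for ultra-low latency use.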

These technical challenges collectively impact the practical implementation of mmWave technology in ultra-low latency communications systems, requiring innovative solutions across hardware design, signal processing algorithms, and network architecture.

Current mmWave Solutions for Low Latency Applications

01 Low latency communication systems using mmWave technology

mmWave technology enables ultra-low latency communication systems by utilizing high-frequency bands (typically 30-300 GHz) that provide wider bandwidth. These systems can achieve significantly reduced latency compared to traditional wireless technologies, making them suitable for time-sensitive applications. The high data rates and reduced processing time contribute to overall system performance improvements, enabling near real-time communication capabilities essential for advanced wireless networks.

- Low latency communication systems using mmWave technology: Millimeter wave (mmWave) technology enables ultra-low latency communication systems by utilizing high-frequency spectrum bands. These systems can achieve significantly reduced latency compared to traditional wireless technologies, making them suitable for time-sensitive applications. The high bandwidth available in mmWave frequencies allows for faster data transmission and processing, contributing to overall latency reduction in wireless networks.

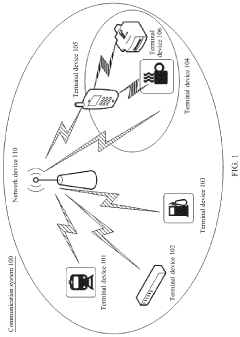

- Network architecture optimization for mmWave latency reduction: Specialized network architectures are designed to minimize latency in mmWave systems. These architectures include edge computing implementations, optimized routing protocols, and distributed processing frameworks that bring computational resources closer to end users. By restructuring network components and data paths specifically for mmWave transmission characteristics, these architectures can significantly reduce round-trip times and processing delays in high-frequency wireless communications.

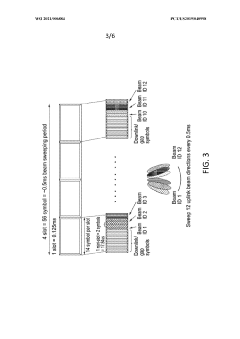

- Beamforming techniques for latency improvement in mmWave systems: Advanced beamforming techniques are employed in mmWave systems to improve signal quality and reduce latency. These techniques include adaptive beamforming algorithms, multi-beam operations, and beam tracking mechanisms that maintain optimal connectivity even during mobility. By focusing signal energy in specific directions and dynamically adjusting to changing conditions, these beamforming approaches minimize retransmissions and signal processing time, thereby reducing overall system latency.

- Hardware acceleration for mmWave latency reduction: Specialized hardware components are developed to accelerate signal processing in mmWave systems, reducing latency at the physical layer. These include custom integrated circuits, field-programmable gate arrays (FPGAs), and application-specific processors designed to handle the high data rates of mmWave communications. Hardware acceleration techniques optimize critical signal processing functions such as encoding/decoding, modulation/demodulation, and channel estimation, significantly reducing processing time and overall system latency.

- Cross-layer optimization for mmWave latency minimization: Cross-layer optimization approaches integrate multiple protocol layers to minimize latency in mmWave systems. These techniques coordinate operations across physical, MAC, network, and application layers to create holistic latency reduction solutions. By jointly optimizing parameters across different layers, such as transmission scheduling, resource allocation, and congestion control, these approaches can achieve significant improvements in end-to-end latency performance for mmWave communications.
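The benefit of moving computation closer to users, which the architecture and cross-layer approaches above aim at, shows up in a simple round-trip budget. The sketch below adds air-interface, fiber-transport, and processing delays; light in optical fiber travels at roughly 2×10^8 m/s, about 5 µs per km. The air-interface and processing values in the example are hypothetical placeholders, not measurements, and switching, routing, and queuing delays are ignored.

```python
FIBER_US_PER_KM = 5.0  # approx. one-way propagation delay in fiber (~2e8 m/s)


def round_trip_ms(air_one_way_ms: float, fiber_km: float, processing_ms: float) -> float:
    """Round trip = uplink + downlink air time + fiber in both directions + server processing."""
    transport_ms = 2 * fiber_km * FIBER_US_PER_KM / 1000.0
    return 2 * air_one_way_ms + transport_ms + processing_ms


if __name__ == "__main__":
    air_ms, proc_ms = 0.25, 0.5  # hypothetical mmWave air-interface and compute delays
    for label, km in (("edge (10 km)", 10), ("regional cloud (1000 km)", 1000)):
        print(f"{label}: {round_trip_ms(air_ms, km, proc_ms):.2f} ms")
```

With these placeholders, fiber distance alone adds 10 ms to the cloud round trip, an order of magnitude more than the mmWave air interface, so a faster radio by itself cannot meet a 1 ms target if traffic must travel far.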

02 mmWave technology for 5G/6G networks with reduced latency

mmWave technology is a key enabler for 5G and upcoming 6G networks, offering significantly reduced latency through beamforming techniques and wider channel bandwidths. These networks leverage mmWave's high-frequency characteristics to achieve sub-millisecond latency requirements for next-generation wireless communications. The implementation includes specialized network architectures and protocols designed to minimize processing delays and optimize signal transmission in high-frequency bands, supporting applications requiring instantaneous response times.

03 Hardware optimizations for reducing latency in mmWave systems

Specialized hardware components are designed to minimize latency in mmWave communication systems. These include advanced antenna arrays, dedicated signal processors, and optimized RF front-end modules that reduce signal processing time. Hardware-level improvements shorten processing delays through dedicated circuits and optimized architectures, resulting in significantly lower end-to-end latency for mmWave communications.

04 Edge computing integration with mmWave for latency reduction

Combining edge computing with mmWave technology creates ultra-low latency communication systems by processing data closer to the source. This integration minimizes the need for data to travel to distant servers, significantly reducing round-trip latency. Edge computing nodes connected via mmWave links can process time-sensitive information locally, enabling real-time applications in various fields including autonomous vehicles, industrial automation, and augmented reality that require minimal communication delays.

05 Protocol and software optimizations for mmWave latency reduction

Specialized communication protocols and software algorithms are developed to minimize latency in mmWave systems. These optimizations include streamlined packet processing, efficient resource allocation mechanisms, and advanced scheduling algorithms that prioritize time-sensitive data. Software-defined networking approaches enable dynamic adaptation to changing network conditions, while cross-layer optimizations ensure that all aspects of the communication stack work together to minimize delays in mmWave communications.
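One simple way a scheduler can prioritize time-sensitive data is earliest-deadline-first (EDF) ordering. The sketch below is a toy model, not a 3GPP scheduler: all packets are queued at time zero, one packet is served per 0.125 ms slot (the 120 kHz slot length), and the deadlines and flow labels are hypothetical. It compares deadline misses under first-in-first-out (FIFO) and EDF service.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Packet:
    deadline_ms: float                 # primary sort key for EDF
    seq: int                           # arrival order; breaks deadline ties
    flow: str = field(compare=False)   # label only, not used for ordering


def edf_order(packets: list[Packet]) -> list[Packet]:
    """Serve packets in earliest-deadline-first order using a binary heap."""
    heap = list(packets)
    heapq.heapify(heap)
    return [heapq.heappop(heap) for _ in range(len(heap))]


def deadline_misses(order: list[Packet], slot_ms: float = 0.125) -> int:
    """Count packets whose completion time (one packet per slot) exceeds their deadline."""
    return sum((i + 1) * slot_ms > p.deadline_ms for i, p in enumerate(order))


def demo():
    queue = [Packet(10.0, i, "eMBB") for i in range(8)]           # bulk traffic, 10 ms budget
    queue += [Packet(0.5, 8, "URLLC"), Packet(0.5, 9, "URLLC")]    # urgent traffic, 0.5 ms budget
    fifo = sorted(queue, key=lambda p: p.seq)
    edf = edf_order(queue)
    return deadline_misses(fifo), deadline_misses(edf), edf


if __name__ == "__main__":
    fifo_miss, edf_miss, edf = demo()
    print(f"FIFO misses: {fifo_miss}, EDF misses: {edf_miss}")
    print("EDF service order:", [p.flow for p in edf])
```

Under FIFO both urgent packets finish after 1 ms and miss their 0.5 ms deadline; EDF serves them in the first two slots while the bulk packets still finish well within their 10 ms budget.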

Key Industry Players in mmWave Ecosystem

The mmWave technology in ultra-low latency communications is currently in a growth phase, with the market expanding rapidly as 5G deployments accelerate globally. Industry leaders like Qualcomm, Intel, and Samsung are driving innovation through advanced chipset development, while telecommunications giants such as Huawei, Nokia, and British Telecommunications are implementing mmWave solutions in network infrastructure. The technology is approaching maturity for fixed wireless applications but remains in development for mobile use cases. Academic institutions including Xidian University and University of Electronic Science & Technology of China are collaborating with industry players like MediaTek and Apple to address technical challenges including signal propagation limitations and power consumption, positioning mmWave as a critical enabler for future ultra-low latency applications.

Intel Corp.

Technical Solution: Intel's approach to mmWave for ultra-low latency communications centers around their programmable network infrastructure solutions. Their technology combines specialized FPGA-based hardware accelerators with their Xeon processors to handle the intensive signal processing requirements of mmWave communications while maintaining deterministic latency profiles. Intel's solution architecture incorporates time-sensitive networking (TSN) capabilities that ensure precise timing synchronization across the network, critical for maintaining ultra-low latency performance. Their mmWave reference designs feature modular components that can be customized for different deployment scenarios, from dense urban environments to industrial settings. Intel has developed specialized software-defined radio implementations that leverage their hardware capabilities to achieve flexible beam management with latencies under 5ms. Their platform supports network slicing techniques that can isolate ultra-low latency traffic from other network functions, ensuring consistent performance for critical applications.

Strengths: Strong integration with edge computing platforms; programmable hardware approach offering flexibility for different deployment scenarios; robust ecosystem of development partners. Weaknesses: Less vertical integration compared to end-to-end vendors; higher computational overhead for software-defined approaches; relatively newer entrant to mmWave space compared to telecommunications specialists.

QUALCOMM, Inc.

Technical Solution: Qualcomm has pioneered mmWave technology for 5G networks with their Snapdragon X Modem series. Their solution integrates advanced beamforming techniques with up to 800 MHz bandwidth channels in the 24-40 GHz spectrum, targeting ultra-low latency communications. Qualcomm's QTM527 antenna module works in conjunction with their modems to overcome mmWave's limited range and penetration challenges through adaptive beamforming and beam tracking algorithms. Their system architecture incorporates specialized hardware accelerators for signal processing that reduce computational delays, while their RF front-end modules feature custom-designed power amplifiers that optimize energy efficiency without compromising performance. Qualcomm has demonstrated end-to-end latencies as low as 1ms in controlled environments, making their technology suitable for mission-critical applications requiring near-instantaneous response times.

Strengths: Industry-leading integration of mmWave technology with mobile platforms; extensive patent portfolio in beamforming techniques; proven deployment experience across multiple carriers. Weaknesses: Higher power consumption compared to sub-6GHz solutions; implementation complexity requiring specialized expertise; cost premium for mmWave-capable devices.

Core mmWave Patents and Technical Innovations

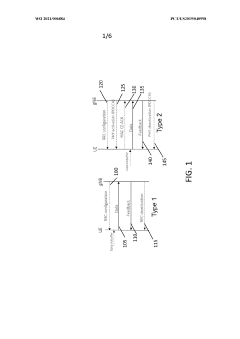

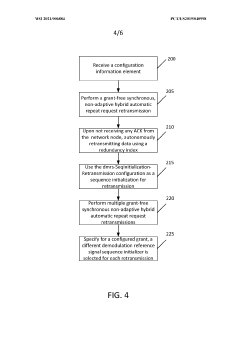

5G New Radio Ultra Reliable Low Latency Communications in Millimeter Wave Spectrum

Patent WO2021006884A1

Innovation

- Implementing grant-free synchronous non-adaptive hybrid automatic repeat request (HARQ) retransmissions without a physical downlink control channel scheduling grant, using a new field in the configuration information element to specify usage and enable autonomous retransmissions on pre-configured radio resources, and employing Layer-1 encoding for differentiating initial transmissions from retransmissions.
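Autonomous retransmissions trade extra radio resources for reliability without waiting for a new grant. Under the simplifying assumption that each transmission fails independently with the same block error rate (BLER), which real fading channels only approximate, the residual failure after k transmissions is BLER^k. The sketch below finds the smallest k that meets a 99.999% reliability target (failure ≤ 10^-5) and the resulting air time if each attempt occupies one 0.125 ms slot, an assumption; mini-slot transmissions would be shorter. It is not the patented procedure, only the reliability arithmetic behind repetition schemes.

```python
def repetitions_needed(bler: float, target_failure: float, max_reps: int = 16) -> int:
    """Smallest number of independent transmissions whose residual failure <= target."""
    if not 0.0 < bler < 1.0:
        raise ValueError("bler must be in (0, 1)")
    failure = 1.0
    for k in range(1, max_reps + 1):
        failure *= bler  # the packet is lost only if all k attempts fail
        # Small relative tolerance absorbs floating-point rounding (e.g. 0.1**5).
        if failure <= target_failure * (1 + 1e-9):
            return k
    raise ValueError("target not reachable within max_reps")


if __name__ == "__main__":
    slot_ms = 0.125  # assumed one attempt per 120 kHz slot
    for bler in (1e-1, 1e-2, 1e-3):
        k = repetitions_needed(bler, 1e-5)
        print(f"BLER {bler:g}: {k} transmissions, ~{k * slot_ms:.3f} ms of air time")
```

At a 1% per-attempt BLER, three transmissions reach the 10^-5 target within about 0.375 ms of air time under these assumptions; correlated fading would require more.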

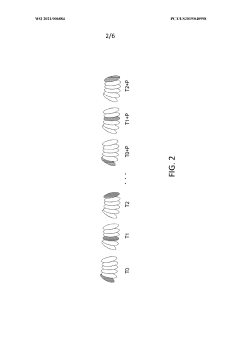

Beam Alignment Method and Related Device

Patent (pending) US20240230816A1

Innovation

- A beam alignment method that involves receiving multiple beams, determining the optimal receive beam based on RSRP, calculating the required rotation angle and direction to align the peak direction beam with the transmit beam, and adjusting the terminal device's location to form an optimal beam pair, thereby increasing transmit or receive gain and improving coverage and mobility.
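The abstract does not state how the rotation angle is computed, so the sketch below is only a loosely related illustration under stated assumptions, not the claimed method: receive beams point at known, evenly spaced angles; the strongest beam is chosen by RSRP; and a parabola fitted in dB through that beam and its two neighbours estimates where the true peak lies between beams. The signed difference between that estimate and the array boresight (0°) is taken as the hypothetical rotation the device would need. Function names and the measurement values are hypothetical.

```python
def estimate_peak_angle(beam_angles_deg: list[float], rsrp_dbm: list[float]) -> float:
    """Parabolic interpolation of the RSRP peak across evenly spaced receive beams."""
    i = max(range(len(rsrp_dbm)), key=rsrp_dbm.__getitem__)  # best beam by RSRP
    if i == 0 or i == len(rsrp_dbm) - 1:
        return beam_angles_deg[i]  # peak at the edge of the sweep: no neighbours to fit
    y_left, y_mid, y_right = rsrp_dbm[i - 1], rsrp_dbm[i], rsrp_dbm[i + 1]
    curvature = y_left - 2 * y_mid + y_right
    if curvature == 0:
        return beam_angles_deg[i]
    offset = 0.5 * (y_left - y_right) / curvature  # vertex position in units of beam spacing
    spacing = beam_angles_deg[i + 1] - beam_angles_deg[i]
    return beam_angles_deg[i] + offset * spacing


def rotation_to_boresight(peak_deg: float, boresight_deg: float = 0.0) -> float:
    """Signed rotation (deg) that would turn the array boresight toward the peak."""
    return peak_deg - boresight_deg


if __name__ == "__main__":
    angles = [-30.0, -15.0, 0.0, 15.0, 30.0]
    # Hypothetical measurements: signal arriving from 10 deg, quadratic roll-off in dB.
    rsrp = [-80.0 - 0.01 * (a - 10.0) ** 2 for a in angles]
    peak = estimate_peak_angle(angles, rsrp)
    print(f"best beam at {angles[rsrp.index(max(rsrp))]} deg, "
          f"interpolated peak {peak:.1f} deg, rotate {rotation_to_boresight(peak):+.1f} deg")
```

The strongest beam here points at 15°, but interpolation places the peak at 10°, so the illustrative rotation is smaller than simply steering to the best beam's nominal direction.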

Spectrum Allocation and Regulatory Framework

The millimeter wave (mmWave) spectrum allocation landscape is undergoing significant transformation to accommodate the growing demands of ultra-low latency communications systems. Regulatory bodies worldwide have recognized the potential of mmWave frequencies (30-300 GHz) and have been actively working to establish frameworks that enable their efficient utilization. The Federal Communications Commission (FCC) in the United States has pioneered this effort by allocating substantial portions of the spectrum above 24 GHz for mobile and fixed wireless services, including the 28 GHz, 37 GHz, 39 GHz, and 64-71 GHz bands.

The European Conference of Postal and Telecommunications Administrations (CEPT) has similarly identified the 26 GHz band (24.25-27.5 GHz) as a priority band for early 5G deployment, with additional considerations for the 40 GHz and 66-71 GHz bands. In Asia, countries like Japan, South Korea, and China have allocated various mmWave bands, with Japan focusing on the 28 GHz band, South Korea utilizing both 28 GHz and 39 GHz bands, and China exploring the 24.75-27.5 GHz and 37-43.5 GHz ranges.

Regulatory challenges specific to mmWave deployment in ultra-low latency systems include interference management, international harmonization, and sharing mechanisms. The propagation characteristics of mmWave signals necessitate dense network deployments, raising concerns about potential interference between adjacent systems. Regulators are implementing technical rules such as power limits, out-of-band emission restrictions, and coordination requirements to mitigate these issues.

License frameworks for mmWave spectrum vary globally, with approaches ranging from traditional exclusive licensing to more flexible models like light licensing and unlicensed access. The United States has implemented a hybrid approach, with auction-based exclusive licenses for certain bands (28 GHz, 39 GHz) and unlicensed access for others (64-71 GHz). This diversity in licensing models reflects the experimental nature of mmWave deployment and the need for regulatory flexibility.

International harmonization efforts are being coordinated through the International Telecommunication Union (ITU), particularly through the World Radiocommunication Conference (WRC) process. WRC-19 identified several mmWave bands for IMT-2020 (5G) services, providing a foundation for global alignment. However, regional variations persist, creating challenges for equipment manufacturers and service providers operating across multiple markets.

The regulatory landscape continues to evolve as practical deployment experience accumulates. Emerging trends include the development of dynamic spectrum sharing technologies, the exploration of higher frequency bands (above 100 GHz), and the implementation of automated frequency coordination systems to maximize spectrum efficiency while maintaining the ultra-low latency performance critical for next-generation applications.

The European Conference of Postal and Telecommunications Administrations (CEPT) has similarly identified the 26 GHz band (24.25-27.5 GHz) as a priority band for early 5G deployment, with additional considerations for the 40 GHz and 66-71 GHz bands. In Asia, countries like Japan, South Korea, and China have allocated various mmWave bands, with Japan focusing on the 28 GHz band, South Korea utilizing both 28 GHz and 39 GHz bands, and China exploring the 24.75-27.5 GHz and 37-43.5 GHz ranges.

Regulatory challenges specific to mmWave deployment in ultra-low latency systems include interference management, international harmonization, and sharing mechanisms. The propagation characteristics of mmWave signals necessitate dense network deployments, raising concerns about potential interference between adjacent systems. Regulators are implementing technical rules such as power limits, out-of-band emission restrictions, and coordination requirements to mitigate these issues.

License frameworks for mmWave spectrum vary globally, with approaches ranging from traditional exclusive licensing to more flexible models like light licensing and unlicensed access. The United States has implemented a hybrid approach, with auction-based exclusive licenses for certain bands (28 GHz, 39 GHz) and unlicensed access for others (64-71 GHz). This diversity in licensing models reflects the experimental nature of mmWave deployment and the need for regulatory flexibility.

International harmonization efforts are being coordinated through the International Telecommunication Union (ITU), particularly through the World Radiocommunication Conference (WRC) process. WRC-19 identified several mmWave bands for IMT-2020 (5G) services, including 24.25-27.5 GHz, 37-43.5 GHz, and 66-71 GHz, providing a foundation for global alignment. However, regional variations persist, creating challenges for equipment manufacturers and service providers operating across multiple markets.

The regulatory landscape continues to evolve as practical deployment experience accumulates. Emerging trends include the development of dynamic spectrum sharing technologies, the exploration of higher frequency bands (above 100 GHz), and the implementation of automated frequency coordination systems to maximize spectrum efficiency while maintaining the ultra-low latency performance critical for next-generation applications.

Implementation Costs and ROI Analysis

Implementing mmWave technology for ultra-low latency communications systems requires substantial initial investment, with hardware representing the largest expenditure. Base station equipment typically costs $25,000 to $100,000 per unit, depending on coverage requirements and technical specifications, and user equipment or specialized mmWave transceivers add $500-2,000 per endpoint. These hardware costs are considerably higher than those of traditional sub-6 GHz systems because millimeter-wave operation requires specialized components such as phased-array antenna modules and high-frequency RF front ends.

Infrastructure modifications add further expense, because the short propagation range of mmWave signals requires denser networks with more access points. Organizations must budget for site acquisition, installation labor, and potential structural reinforcement, typically $10,000-30,000 per site depending on location complexity and existing infrastructure.
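
The per-unit figures above can be combined into a rough hardware and site-preparation budget. The sketch below carries low and high bounds through the arithmetic instead of a single point estimate. It assumes one base station per site, the deployment size in the example (20 sites, 500 endpoints) is hypothetical, and software, integration, and maintenance costs are excluded.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostRange:
    """A low/high cost bound in USD."""
    low: float
    high: float

    def __add__(self, other: "CostRange") -> "CostRange":
        return CostRange(self.low + other.low, self.high + other.high)

    def __mul__(self, count: int) -> "CostRange":
        return CostRange(self.low * count, self.high * count)


# Per-unit ranges quoted in the text.
BASE_STATION = CostRange(25_000, 100_000)
ENDPOINT = CostRange(500, 2_000)
SITE_WORK = CostRange(10_000, 30_000)


def hardware_and_site_capex(n_sites: int, n_endpoints: int) -> CostRange:
    """Bound hardware plus site capex, assuming one base station per site (assumption)."""
    return (BASE_STATION + SITE_WORK) * n_sites + ENDPOINT * n_endpoints


if __name__ == "__main__":
    budget = hardware_and_site_capex(n_sites=20, n_endpoints=500)  # hypothetical deployment
    print(f"${budget.low:,.0f} - ${budget.high:,.0f}")
```

For the hypothetical 20-site, 500-endpoint deployment this gives $950,000 to $3,600,000 before software costs; the wide spread between the bounds is driven largely by the base-station price range.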

Software development and integration costs typically range from $500,000 to several million dollars for enterprise-scale implementations. This includes specialized protocol stacks, beam management algorithms, and system optimization software necessary for ultra-low latency applications. Annual maintenance expenses generally account for 15-20% of the initial implementation cost.
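
Because annual maintenance is quoted as a share of the initial implementation cost, multi-year cost of ownership follows from simple arithmetic. The sketch below assumes a flat maintenance charge with no inflation, discounting, or mid-life hardware refresh; the $2 million initial cost in the example is hypothetical.

```python
def total_cost_of_ownership(initial_cost: float, years: int, maintenance_rate: float) -> float:
    """Initial cost plus a flat annual maintenance charge expressed as a fraction of it.

    Assumes no inflation, no discounting, and no mid-life hardware refresh.
    """
    if initial_cost < 0 or years < 0:
        raise ValueError("initial_cost and years must be non-negative")
    if not 0.0 <= maintenance_rate <= 1.0:
        raise ValueError("maintenance_rate must be a fraction between 0 and 1")
    return initial_cost * (1 + maintenance_rate * years)


if __name__ == "__main__":
    initial = 2_000_000  # hypothetical initial implementation cost (USD)
    for rate in (0.15, 0.20):  # maintenance range quoted in the text
        tco = total_cost_of_ownership(initial, years=5, maintenance_rate=rate)
        print(f"5-year TCO at {rate:.0%} maintenance: ${tco:,.0f}")
```

Over five years the 15-20% maintenance range adds $1.5-2.0 million to a $2 million initial outlay, so over that horizon recurring costs approach the size of the original investment.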

Despite these substantial investments, ROI analyses make a compelling financial case for specific use cases. Manufacturing environments implementing mmWave-based ultra-low latency systems report 30-45% reductions in production downtime and 15-25% improvements in overall equipment effectiveness, with payback periods for industrial applications typically ranging from 18 to 36 months.
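
Payback periods such as the 18-36 months cited here are usually computed by accumulating monthly net benefits until they cover the initial outlay. The sketch below shows one common way to do this, with optional discounting; it is not necessarily the method behind the figures reported above, and the $2 million cost, $100,000 monthly benefit, and 8% discount rate in the example are hypothetical.

```python
from typing import Optional


def payback_months(
    initial_cost: float,
    monthly_benefit: float,
    monthly_cost: float = 0.0,
    annual_discount_rate: float = 0.0,
    max_months: int = 240,
) -> Optional[int]:
    """Return the first month in which cumulative (optionally discounted) net cash flow
    turns non-negative, or None if that does not happen within max_months."""
    # Convert the annual rate to an equivalent monthly compounding rate.
    monthly_rate = (1 + annual_discount_rate) ** (1 / 12) - 1
    cumulative = -initial_cost
    for month in range(1, max_months + 1):
        net = monthly_benefit - monthly_cost
        cumulative += net / (1 + monthly_rate) ** month
        if cumulative >= 0:
            return month
    return None


if __name__ == "__main__":
    # Hypothetical figures: $2M outlay, $100k/month from reduced downtime.
    print(payback_months(2_000_000, 100_000))  # undiscounted
    print(payback_months(2_000_000, 100_000, annual_discount_rate=0.08))  # discounted
```

With these hypothetical inputs the undiscounted payback is 20 months, inside the cited 18-36 month range, and discounting at 8% per year extends it to 22 months. The `monthly_cost` parameter is where recurring maintenance would enter.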

Healthcare implementations show even more promising returns: remote surgery applications indicate potential savings of $2,000-5,000 per procedure through fewer complications and shorter hospital stays. Large financial institutions using mmWave links in trading systems report competitive advantages worth $10-50 million annually from microsecond-level latency improvements.

The ROI timeline varies significantly by industry: mission-critical applications in defense, aerospace, and financial services typically achieve positive returns within 12-24 months, whereas consumer-facing applications generally take 36-48 months to reach profitability. Organizations should therefore phase their implementations, beginning with high-value use cases to validate ROI early before expanding to broader deployments.

Cost optimization strategies include infrastructure sharing arrangements, which can reduce capital expenditures by 30-40%, and hybrid network architectures that combine mmWave technology with existing communications infrastructure, minimizing overall deployment costs while reserving mmWave's ultra-low latency performance for the links where it is most critical.
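
The effect of infrastructure sharing on payback can be sketched in a few lines: under simple (undiscounted) payback, cutting upfront capital expenditure by 30-40% shortens payback by the same proportion. The sketch assumes that sharing neither changes the monthly benefit nor adds recurring fees, which real sharing agreements may do; the $2 million capex and $100,000 monthly benefit are hypothetical.

```python
def shared_capex(capex: float, saving_fraction: float) -> float:
    """Capital expenditure remaining after an infrastructure-sharing saving."""
    if not 0.0 <= saving_fraction < 1.0:
        raise ValueError("saving_fraction must be in [0, 1)")
    return capex * (1.0 - saving_fraction)


def simple_payback_months(capex: float, monthly_net_benefit: float) -> float:
    """Undiscounted payback period in (possibly fractional) months."""
    if monthly_net_benefit <= 0:
        raise ValueError("monthly_net_benefit must be positive")
    return capex / monthly_net_benefit


if __name__ == "__main__":
    capex, benefit = 2_000_000, 100_000  # hypothetical figures (USD, USD/month)
    for saving in (0.0, 0.30, 0.40):  # 30-40% sharing saving quoted in the text
        months = simple_payback_months(shared_capex(capex, saving), benefit)
        print(f"{saving:.0%} capex saving -> payback {months:.1f} months")
```

In this hypothetical case payback falls from 20 months to between 12 and 14 months.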
