Calibrating mmWave Radar to Ensure Accurate Autonomous Guidance

SEP 22, 2025 · 9 MIN READ

mmWave Radar Calibration Background and Objectives

Millimeter-wave (mmWave) radar technology has evolved significantly over the past two decades, transitioning from primarily military applications to becoming a cornerstone of autonomous guidance systems. Operating in frequency bands between 30 GHz and 300 GHz, mmWave radar offers distinct advantages in autonomous navigation due to its ability to function effectively in adverse weather conditions where optical sensors often fail. The evolution of this technology has been accelerated by advancements in semiconductor manufacturing, signal processing algorithms, and the increasing demand for reliable sensing solutions in autonomous vehicles.

The calibration of mmWave radar systems represents a critical technical challenge that has gained prominence as deployment of these systems has expanded. Historically, radar calibration methodologies were developed for large-scale military and weather radar systems, but the miniaturization and mass production of automotive-grade mmWave radar necessitates new approaches to ensure accuracy and reliability at scale. The technical objective of this research is to establish robust, efficient, and scalable calibration methodologies specifically tailored for mmWave radar systems in autonomous guidance applications.

Current calibration techniques face significant limitations when applied to autonomous guidance systems. These include sensitivity to environmental variations, complexity of multi-radar fusion, and challenges in maintaining calibration accuracy over the operational lifetime of the system. The primary technical goals include developing calibration methods that can compensate for manufacturing variations, environmental factors, and aging effects while maintaining sub-degree angular accuracy and centimeter-level range precision.

The technological trajectory indicates a convergence toward integrated calibration solutions that combine factory calibration with continuous in-field self-calibration capabilities. This evolution is driven by the increasing autonomy levels in vehicles and other guided systems, which demand higher reliability and precision from their sensing infrastructure. The industry is moving toward standardized calibration protocols that can ensure consistent performance across different radar modules and manufacturers.

Recent technological breakthroughs in digital signal processing and machine learning have opened new avenues for adaptive calibration techniques. These approaches promise to overcome traditional limitations by dynamically adjusting calibration parameters based on real-time environmental conditions and system performance metrics. The ultimate objective is to develop calibration systems that are transparent to the end user while ensuring the radar maintains optimal performance throughout its operational life.

Autonomous Guidance Market Requirements Analysis

The autonomous guidance market is experiencing unprecedented growth, driven by advancements in sensor technologies, particularly millimeter-wave (mmWave) radar systems. Current market analysis indicates that the global autonomous vehicle market is projected to reach $556.67 billion by 2026, with a compound annual growth rate of 39.47% from 2019 to 2026. Within this ecosystem, the demand for precise calibration of mmWave radar systems has emerged as a critical requirement for ensuring the safety and reliability of autonomous navigation systems.

Market research reveals that automotive manufacturers and technology companies are increasingly prioritizing radar calibration solutions that can deliver sub-centimeter accuracy in diverse environmental conditions. This demand stems from the recognition that even minor calibration errors in mmWave radar systems can lead to significant navigation inaccuracies, potentially compromising passenger safety and regulatory compliance.

Consumer expectations are evolving rapidly, with 78% of potential autonomous vehicle users citing safety as their primary concern. This has created a market pull for radar systems with demonstrable calibration verification protocols and real-time performance monitoring capabilities. Fleet operators, particularly in the logistics and public transportation sectors, require calibration solutions that minimize vehicle downtime and can be integrated into existing maintenance schedules.

The regulatory landscape is also shaping market requirements, with transportation authorities in North America, Europe, and Asia implementing increasingly stringent standards for autonomous guidance systems. These regulations typically mandate regular calibration verification and documentation, creating demand for automated calibration solutions with comprehensive audit trails.

From a geographical perspective, the North American market currently leads in adoption of advanced calibration technologies, followed closely by Europe and East Asia. Emerging markets in South America and Southeast Asia are expected to show accelerated growth as autonomous vehicle technologies become more accessible and regulatory frameworks mature.

Market segmentation analysis indicates distinct requirements across different application domains. Urban mobility solutions prioritize calibration systems optimized for complex environments with numerous reflective surfaces, while highway applications emphasize long-range accuracy and stability at high speeds. Industrial applications, such as autonomous mining and agriculture, require calibration solutions robust against extreme environmental conditions and mechanical vibrations.

The competitive landscape is characterized by increasing collaboration between traditional automotive suppliers, radar manufacturers, and specialized calibration technology providers. This trend reflects the recognition that effective calibration requires deep expertise across multiple domains, including radar physics, environmental modeling, and machine learning for adaptive calibration.

mmWave Radar Calibration Challenges and Global Status

Millimeter-wave (mmWave) radar systems have emerged as critical sensing technologies for autonomous guidance applications, offering advantages in adverse weather conditions where optical sensors may fail. However, the calibration of these systems presents significant technical challenges that must be addressed to ensure reliable performance in safety-critical applications.

The global landscape of mmWave radar calibration reveals varying levels of technological maturity across regions. North America, particularly the United States, leads in advanced calibration methodologies, with companies like Texas Instruments developing proprietary calibration algorithms. In Europe, the Netherlands-based NXP Semiconductors develops its own calibration algorithms, and research institutions, including those in Germany and Sweden, have made substantial contributions to standardizing calibration procedures for automotive applications.

In Asia, Japan and South Korea have focused on miniaturization and cost-effective calibration solutions, while China has rapidly expanded its research capabilities in this domain, particularly for urban mobility applications. This geographical distribution highlights the global recognition of calibration as a critical enabler for autonomous systems.

The primary technical challenges in mmWave radar calibration include phase coherence maintenance across multiple transmit and receive channels, temperature drift compensation, and environmental adaptability. Channel-to-channel phase errors as small as a few degrees can bias angle estimates and raise beam-pattern sidelobes, degrading object detection accuracy and creating potentially dangerous blind spots in autonomous guidance systems.
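To make the effect of channel phase errors concrete, the following is a minimal sketch, assuming a four-channel uniform linear array with half-wavelength spacing and a reference target placed at boresight. The per-channel gain and phase errors are hypothetical values chosen for illustration, and the function names are not taken from any vendor API.

```python
import cmath
import math


def simulate_snapshot(angle_deg, channel_errors, spacing_wl=0.5):
    """Plane-wave response of a uniform linear array, distorted per channel."""
    k = 2 * math.pi * spacing_wl * math.sin(math.radians(angle_deg))
    return [err * cmath.exp(1j * k * n) for n, err in enumerate(channel_errors)]


def calibration_weights(boresight_snapshot):
    """Weights that equalize every channel to channel 0 for a boresight target."""
    ref = boresight_snapshot[0]
    return [ref / x for x in boresight_snapshot]


def estimate_angle_deg(snapshot, spacing_wl=0.5):
    """Angle of arrival from the average adjacent-channel phase step."""
    acc = sum(snapshot[n + 1] * snapshot[n].conjugate()
              for n in range(len(snapshot) - 1))
    return math.degrees(math.asin(cmath.phase(acc) / (2 * math.pi * spacing_wl)))


# Hypothetical (gain, phase in degrees) errors for four receive channels.
errors = [cmath.rect(g, math.radians(p))
          for g, p in [(1.0, 0.0), (0.9, 12.0), (1.1, -8.0), (1.05, 20.0)]]

# Step 1: record a boresight reference target and derive the weights.
weights = calibration_weights(simulate_snapshot(0.0, errors))

# Step 2: observe a target at 20 degrees, with and without correction.
raw = simulate_snapshot(20.0, errors)
corrected = [w * x for w, x in zip(weights, raw)]

angle_raw = estimate_angle_deg(raw)
angle_cal = estimate_angle_deg(corrected)
print(f"uncalibrated: {angle_raw:.2f} deg, calibrated: {angle_cal:.2f} deg")
```

In this noise-free simulation the calibrated estimate recovers the true 20 degrees, while the uncalibrated one is off by a few degrees. A real system would also need to handle noise, mutual coupling, and angle-dependent errors, which a single boresight measurement does not capture.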

Another substantial challenge is the development of efficient in-field calibration methods that don't require specialized equipment or controlled environments. Current solutions often demand laboratory conditions that are impractical for deployed systems requiring regular recalibration.

Cross-sensor fusion calibration represents an emerging challenge, as autonomous systems increasingly rely on data from multiple sensor types. Ensuring that mmWave radar data properly aligns with information from cameras, LiDAR, and other sensors requires sophisticated calibration approaches that account for different sensing modalities and their unique error characteristics.
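One common building block for cross-sensor alignment is estimating the rigid transform between two sensor frames from matched detections. The sketch below assumes a simplified 2D case in which the same targets have already been associated between radar and LiDAR; the point values and the function name `fit_rigid_2d` are illustrative assumptions, and real pipelines must additionally handle association errors, noise, and time offsets.

```python
import math


def fit_rigid_2d(radar_pts, lidar_pts):
    """Least-squares yaw and translation mapping radar points onto LiDAR points."""
    n = len(radar_pts)
    rcx = sum(p[0] for p in radar_pts) / n
    rcy = sum(p[1] for p in radar_pts) / n
    lcx = sum(p[0] for p in lidar_pts) / n
    lcy = sum(p[1] for p in lidar_pts) / n

    s_cos = 0.0
    s_sin = 0.0
    for (rx, ry), (lx, ly) in zip(radar_pts, lidar_pts):
        ax, ay = rx - rcx, ry - rcy   # centered radar point
        bx, by = lx - lcx, ly - lcy   # centered LiDAR point
        s_cos += ax * bx + ay * by    # dot product accumulates cos(yaw)
        s_sin += ax * by - ay * bx    # cross product accumulates sin(yaw)

    yaw = math.atan2(s_sin, s_cos)
    c, s = math.cos(yaw), math.sin(yaw)
    tx = lcx - (c * rcx - s * rcy)
    ty = lcy - (s * rcx + c * rcy)
    return yaw, tx, ty


# Hypothetical matched targets: LiDAR frame is the radar frame rotated by
# 1.5 degrees and shifted by (0.8, -0.2) metres.
true_yaw = math.radians(1.5)
radar_pts = [(10.0, 2.0), (25.0, -3.0), (40.0, 5.0), (15.0, -6.0)]
lidar_pts = [(math.cos(true_yaw) * x - math.sin(true_yaw) * y + 0.8,
              math.sin(true_yaw) * x + math.cos(true_yaw) * y - 0.2)
             for x, y in radar_pts]

yaw, tx, ty = fit_rigid_2d(radar_pts, lidar_pts)
print(f"yaw = {math.degrees(yaw):.3f} deg, t = ({tx:.3f}, {ty:.3f}) m")
```

The closed-form 2D solution keeps the example dependency-free; a 3D extrinsic calibration would typically use an SVD-based solver instead.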

The industry also faces standardization challenges, with different manufacturers employing proprietary calibration methods that complicate system integration. The lack of universally accepted calibration standards has slowed adoption in certain sectors where interoperability is essential.

Recent technological advances have introduced machine learning approaches to calibration, using neural networks to compensate for systematic errors and environmental factors. While promising, these methods require extensive validation before deployment in safety-critical applications, creating tension between innovation speed and reliability requirements.

Current mmWave Radar Calibration Methodologies

01 Signal processing techniques for improved accuracy

Various signal processing algorithms and techniques can be implemented in mmWave radar systems to enhance accuracy. These include advanced filtering methods, noise reduction algorithms, and signal optimization techniques that help to extract clearer target information from radar returns. By implementing sophisticated digital signal processing, radar systems can achieve higher resolution and more precise measurements even in challenging environments.

- Signal processing techniques for improved accuracy: Various signal processing algorithms and techniques can be implemented in mmWave radar systems to enhance accuracy. These include advanced filtering methods, noise reduction algorithms, and signal optimization techniques that help to extract clearer data from radar returns. By implementing sophisticated signal processing, radar systems can achieve higher resolution and more precise target detection even in challenging environments.

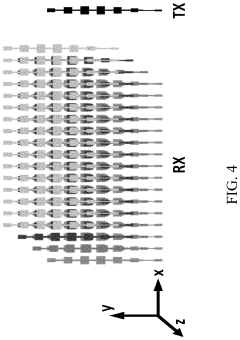

- Hardware design optimization for accuracy enhancement: The physical design and hardware components of mmWave radar systems significantly impact accuracy. This includes antenna array configurations, transceiver design, and component quality. Multiple-input multiple-output (MIMO) antenna arrays, high-quality RF components, and optimized circuit designs can substantially improve the accuracy of range, velocity, and angular measurements in mmWave radar systems.

- Calibration and error compensation methods: Implementing robust calibration procedures and error compensation algorithms is crucial for achieving high accuracy in mmWave radar systems. These methods address systematic errors, environmental factors, and hardware imperfections that can degrade radar performance. Techniques include temperature compensation, phase calibration, and adaptive algorithms that continuously adjust for changing conditions to maintain measurement precision.

- Multi-sensor fusion for enhanced accuracy: Combining mmWave radar data with information from other sensing modalities can significantly improve overall system accuracy. Sensor fusion approaches integrate radar with cameras, LiDAR, ultrasonic sensors, or other radar frequencies to overcome the limitations of individual sensors. This integration enables more robust object detection, classification, and tracking, particularly in complex environments with varying weather and lighting conditions.

- Advanced algorithms for target detection and tracking: Sophisticated algorithms for target detection, classification, and tracking play a vital role in improving mmWave radar accuracy. Machine learning and AI-based approaches can enhance the system's ability to distinguish between different types of objects, filter out false positives, and maintain tracking consistency. These algorithms can adapt to different scenarios and environmental conditions, providing more reliable and accurate radar performance.

02 Hardware design optimization for accuracy enhancement

The physical design and hardware components of mmWave radar systems significantly impact measurement accuracy. This includes antenna array configurations, transceiver design, and component quality. Multiple-input multiple-output (MIMO) antenna arrays, precision oscillators, and high-quality RF components can substantially improve the accuracy of range, velocity, and angular measurements in mmWave radar systems.

03 Calibration and error compensation methods

Implementing robust calibration procedures and error compensation algorithms is crucial for achieving high accuracy in mmWave radar systems. These methods address systematic errors from hardware imperfections, environmental factors, and signal distortions. Advanced calibration techniques can compensate for phase errors, gain imbalances, and timing offsets, significantly improving measurement precision in real-world applications.

04 Multi-sensor fusion for enhanced accuracy

Combining mmWave radar data with information from complementary sensors such as cameras, LiDAR, or other radar frequencies can significantly improve overall system accuracy. Sensor fusion algorithms integrate the strengths of different sensing modalities while mitigating their individual weaknesses. This approach enhances detection reliability, measurement precision, and object classification accuracy across various operating conditions.

05 Environmental adaptation and interference mitigation

Techniques for adapting to changing environmental conditions and mitigating interference are essential for maintaining high accuracy in mmWave radar systems. These include methods for operating in adverse weather conditions, handling multi-path reflections, and rejecting interference from other radar systems or electromagnetic sources. Adaptive algorithms that can recognize and compensate for environmental challenges help maintain consistent measurement accuracy across diverse operating scenarios.

Leading Companies in mmWave Radar Calibration

The mmWave radar calibration market for autonomous guidance is currently in its growth phase, with increasing adoption across automotive and industrial sectors. The market is projected to expand significantly as autonomous vehicle technology matures, with an estimated value exceeding $2 billion by 2025. Technologically, the field shows varying maturity levels among key players. Industry leaders like Keysight Technologies, Robert Bosch, and NXP offer advanced calibration solutions, while automotive specialists including GM Global Technology, ZF Friedrichshafen, and Toyota are integrating these systems into production vehicles. Research institutions such as KAIST and University of Padua are advancing fundamental calibration methodologies, while defense entities like Naval Research Laboratory contribute specialized expertise. The competitive landscape features established electronics manufacturers (Intel, Sony) alongside emerging specialized radar technology providers.

Keysight Technologies, Inc.

Technical Solution: Keysight Technologies has developed comprehensive mmWave radar calibration solutions that combine hardware and software approaches. Their system utilizes reference targets with precisely known radar cross-sections placed at calibrated distances to establish baseline measurements. The company's signal analysis software with pulse measurement capability enables precise characterization of radar signals, while their vector network analyzers provide phase and amplitude calibration across the mmWave spectrum. Keysight's approach incorporates temperature compensation algorithms that continuously adjust calibration parameters based on environmental conditions, ensuring consistent performance across varying operational environments. Their automated calibration routines can detect and correct for phase center variations, timing errors, and amplitude inconsistencies that would otherwise compromise guidance accuracy. The system also employs multi-point calibration techniques that characterize the entire field of view rather than single-point references, enabling more accurate spatial mapping for autonomous navigation systems.

Strengths: Industry-leading measurement accuracy with comprehensive environmental compensation; integrated hardware-software solution provides end-to-end calibration capabilities. Weaknesses: Higher implementation cost compared to simpler solutions; requires specialized expertise to fully utilize advanced calibration features.
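The reference-target idea described above can be illustrated with a short sketch. It is not Keysight's procedure; it assumes a triangular trihedral corner reflector, whose peak radar cross-section follows the textbook relation σ = 4πa⁴ / (3λ²) for edge length a, and it estimates a constant range bias from targets at surveyed distances. The reflector size, carrier frequency, and measurements are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def trihedral_rcs_m2(edge_m, freq_hz):
    """Peak RCS of a triangular trihedral corner reflector, in square metres."""
    wavelength = SPEED_OF_LIGHT / freq_hz
    return 4 * math.pi * edge_m ** 4 / (3 * wavelength ** 2)


def range_bias_m(surveyed_m, measured_m):
    """Mean offset between measured and surveyed target distances."""
    diffs = [m - s for s, m in zip(surveyed_m, measured_m)]
    return sum(diffs) / len(diffs)


# Hypothetical 10 cm reflector observed by a 77 GHz radar.
rcs = trihedral_rcs_m2(0.10, 77e9)
rcs_dbsm = 10 * math.log10(rcs)

# Hypothetical surveyed distances and the radar's raw range readings.
surveyed = [5.0, 10.0, 20.0]
measured = [5.07, 10.06, 20.08]
bias = range_bias_m(surveyed, measured)
corrected = [m - bias for m in measured]

print(f"reference RCS: {rcs:.1f} m^2 ({rcs_dbsm:.1f} dBsm)")
print(f"estimated range bias: {bias * 100:.1f} cm")
```

A constant-bias model is the simplest choice; if the residuals grow with distance, a scale term would need to be fitted as well.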

Robert Bosch GmbH

Technical Solution: Bosch has pioneered an integrated calibration approach for mmWave radar systems focused on automotive applications. Their technology employs a multi-stage calibration process that begins with factory calibration using precision reference targets and controlled environments to establish baseline performance parameters. This is complemented by an in-field dynamic calibration system that continuously monitors and adjusts for environmental variations and component aging. Bosch's solution incorporates cross-sensor validation, where radar measurements are compared with data from cameras and LiDAR to identify and correct systematic errors. Their proprietary signal processing algorithms compensate for temperature drift, vibration effects, and electromagnetic interference that can degrade radar performance in real-world conditions. The system also features adaptive beamforming calibration that optimizes antenna array performance for different driving scenarios, ensuring consistent detection capabilities whether in urban environments or highway conditions. Bosch's approach includes specialized calibration for different weather conditions, maintaining guidance accuracy during rain, fog, and snow.

Strengths: Robust automotive-grade solution with proven reliability in harsh environments; comprehensive cross-sensor validation improves overall system accuracy. Weaknesses: Primarily optimized for automotive applications; may require adaptation for other autonomous guidance contexts.

Key Patents and Research in mmWave Calibration

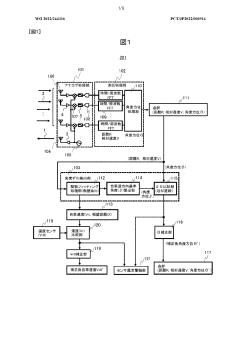

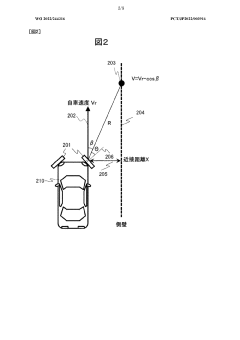

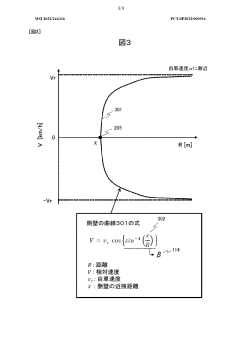

Radar device

Patent: WO2022244316A1

Innovation

- A radar device with a function fitting processing section that extracts side walls parallel to the vehicle's travel direction, calculates angular orientations, and compares them to detect and correct installation angle deviations using a comparison processing unit, enabling automatic detection and correction of mounting angle deviations in real-time.
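The geometric idea behind this kind of mounting-angle check can be sketched as follows. This is a simplified illustration and not the patented method: it assumes detections from a single straight wall parallel to the travel direction, fits the wall's principal direction in the sensor frame, and treats any tilt of that line as yaw misalignment. All names and values are hypothetical.

```python
import math


def wall_direction_rad(points):
    """Orientation of the best-fit line through 2D points (principal axis)."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points)
    syy = sum((p[1] - my) ** 2 for p in points)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points)
    return 0.5 * math.atan2(2 * sxy, sxx - syy)


def mounting_yaw_offset_deg(wall_points_sensor):
    """Yaw misalignment implied by a wall that should appear parallel to x."""
    # A sensor rotated by +delta sees the wall rotated by -delta.
    return -math.degrees(wall_direction_rad(wall_points_sensor))


# Simulate a wall 3 m to the side, seen by a radar mounted 2 degrees off-axis.
true_offset = math.radians(2.0)
wall_vehicle = [(float(x), 3.0) for x in range(2, 31)]
wall_sensor = [(math.cos(true_offset) * x + math.sin(true_offset) * y,
                -math.sin(true_offset) * x + math.cos(true_offset) * y)
               for x, y in wall_vehicle]

estimated = mounting_yaw_offset_deg(wall_sensor)
print(f"estimated mounting offset: {estimated:.3f} deg")
```

In practice the estimate would be averaged over many frames and gated on straight-line driving, since a curved road or a non-parallel wall would otherwise be misread as sensor misalignment.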

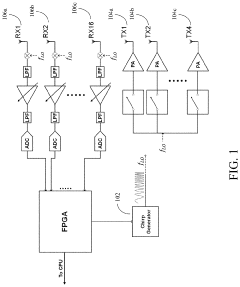

Millimeter-wave radar for unmanned aerial vehicle swarming, tracking, and collision avoidance

Patent (Active): US20200284901A1

Innovation

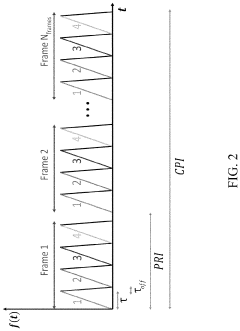

- Adaptation of automotive millimeter-wave (mmW) radar technology using a frequency-modulated continuous-wave (FMCW) multiple-input multiple-output (MIMO) radar system with digital beamforming, employing a limited number of antenna channels to provide a broad field of view and high-fidelity angular estimation in azimuth, while tolerating a narrower field of view and lower fidelity in elevation, to enhance UAV swarming algorithms and object detection capabilities.
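As a rough illustration of how a MIMO radar obtains fine azimuth estimates from few physical channels, the sketch below forms a virtual array from 2 transmit and 4 receive antennas and applies conventional digital beamforming. The antenna layout, scan grid, and target angle are assumptions made for the example, not details from the patent.

```python
import cmath
import math


def virtual_array(tx_positions_wl, rx_positions_wl):
    """Virtual element positions (in wavelengths): every TX/RX position sum."""
    return [t + r for t in tx_positions_wl for r in rx_positions_wl]


def target_snapshot(positions_wl, angle_deg):
    """Noise-free far-field response of the array to one point target."""
    s = math.sin(math.radians(angle_deg))
    return [cmath.exp(2j * math.pi * p * s) for p in positions_wl]


def beamform_peak_deg(snapshot, positions_wl, scan_deg):
    """Steer a conventional beamformer over scan_deg and return the peak angle."""
    best_angle, best_power = None, -1.0
    for angle in scan_deg:
        s = math.sin(math.radians(angle))
        y = sum(x * cmath.exp(-2j * math.pi * p * s)
                for x, p in zip(snapshot, positions_wl))
        power = abs(y) ** 2
        if power > best_power:
            best_angle, best_power = angle, power
    return best_angle


# Assumed layout: RX at half-wavelength spacing, TX spaced by the RX aperture,
# giving an 8-element virtual uniform linear array from 6 physical antennas.
positions = virtual_array([0.0, 2.0], [0.0, 0.5, 1.0, 1.5])
scan = [i * 0.5 for i in range(-120, 121)]  # -60 to +60 degrees in 0.5 steps

snapshot = target_snapshot(positions, 17.0)
estimate = beamform_peak_deg(snapshot, positions, scan)
print(f"virtual elements: {len(positions)}, estimated azimuth: {estimate} deg")
```

Beamforming of this kind assumes the channels are phase-aligned, which is why per-channel calibration directly limits the angular fidelity such a system can reach.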

Safety Standards and Regulatory Compliance

The calibration of mmWave radar systems for autonomous guidance must adhere to stringent safety standards and regulatory frameworks across different jurisdictions. In the United States, the Federal Communications Commission (FCC) regulates the frequency bands for mmWave radar operations, while the National Highway Traffic Safety Administration (NHTSA) provides guidelines for autonomous vehicle safety systems. Similarly, the European Union has established the Radio Equipment Directive (RED) and the General Safety Regulation (GSR) that directly impact mmWave radar calibration requirements.

ISO 26262, the international standard for functional safety of electrical and electronic systems in series-production road vehicles, plays a crucial role in shaping calibration requirements for mmWave radar systems. It does not prescribe calibration parameters directly; rather, it requires verification and validation rigor commensurate with the Automotive Safety Integrity Level (ASIL) assigned to each function, which applies to radar calibration in systems involved in autonomous guidance functions.

The IEEE 802.11ad and IEEE 802.11ay standards provide technical specifications for mmWave communications in the 60 GHz band. Where such links are used alongside radar, for example in vehicle-to-everything (V2X) communication scenarios, they can influence radar calibration protocols, because calibration processes must still achieve their performance benchmarks in the presence of nearby mmWave transmissions and across diverse environmental conditions.

Regulatory bodies are increasingly focusing on the electromagnetic compatibility (EMC) aspects of mmWave radar systems. The International Electrotechnical Commission (IEC) standards, particularly IEC 61000 series, define the limits for electromagnetic emissions and immunity that calibrated radar systems must comply with to prevent interference with other critical electronic systems.

Recent developments in autonomous vehicle regulations have introduced more specific requirements for sensor calibration verification. For instance, UN Regulation No. 157 on Automated Lane Keeping Systems (ALKS) includes provisions for sensor monitoring and calibration validation. These regulations require manufacturers to implement continuous calibration monitoring systems that can detect and alert when radar performance falls below specified thresholds.
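A continuous monitoring requirement of this kind could be prototyped along the following lines. This is a minimal sketch, not a compliance implementation: the residual signal (for example, an estimated alignment error in degrees), the 0.5 degree threshold, and the smoothing factor are all hypothetical choices.

```python
class CalibrationMonitor:
    """Flags calibration drift when a smoothed residual exceeds a threshold."""

    def __init__(self, threshold_deg=0.5, smoothing=0.1):
        self.threshold_deg = threshold_deg  # hypothetical alert limit
        self.smoothing = smoothing          # exponential moving average weight
        self.filtered_deg = 0.0

    def update(self, residual_deg):
        """Feed one residual sample; return True if an alert should be raised."""
        self.filtered_deg += self.smoothing * (residual_deg - self.filtered_deg)
        return abs(self.filtered_deg) > self.threshold_deg


monitor = CalibrationMonitor()

# Healthy operation: small residuals never trip the alert.
steady = [monitor.update(0.1) for _ in range(50)]

# Sustained 1.0 degree residual: the alert trips after a few samples,
# while a single outlier alone would not.
drifted = [monitor.update(1.0) for _ in range(30)]

print("alert during steady state:", any(steady))
print("alert after sustained drift:", drifted[-1])
```

Smoothing trades detection latency for robustness against isolated outliers; the appropriate balance depends on the safety analysis for the specific function.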

The aviation industry's standards for radar calibration, such as those from the Radio Technical Commission for Aeronautics (RTCA), are increasingly being adapted for automotive applications. These standards provide robust frameworks for environmental testing and performance validation under extreme conditions, which are essential for ensuring radar calibration stability in autonomous guidance systems.

Compliance with these standards requires comprehensive documentation of calibration procedures, validation methodologies, and performance metrics. Manufacturers must maintain detailed records of calibration parameters and their impact on system performance, particularly in safety-critical scenarios where radar data directly influences vehicle control decisions.

Environmental Impact on Calibration Performance

Environmental conditions significantly influence the calibration performance of millimeter-wave (mmWave) radar systems, creating substantial challenges for autonomous guidance applications. Temperature variations cause thermal expansion and contraction of radar components, altering signal propagation characteristics and introducing calibration drift. Research indicates that phase errors can increase by up to 5 degrees for every 10°C change, potentially resulting in angular measurement errors exceeding 2 degrees, which in turn produce large cross-range position errors at extended ranges.
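The link between an inter-channel phase error and an angular error can be illustrated with a simple two-element interferometer model. The sketch below assumes half-wavelength antenna spacing and a single uncompensated phase offset between the two channels; the function name and parameters are illustrative, and real arrays with more elements will behave differently.

```python
import math


def angle_error_deg(true_angle_deg, phase_error_deg, spacing_wavelengths=0.5):
    """Angle-of-arrival error caused by a phase offset between two channels.

    Assumes a two-element interferometer: phase = 2*pi*(d/lambda)*sin(theta).
    """
    k = 2 * math.pi * spacing_wavelengths
    true_phase = k * math.sin(math.radians(true_angle_deg))
    measured_phase = true_phase + math.radians(phase_error_deg)
    # Clamp to the valid arcsine domain in case the error pushes past +/-90 deg.
    s = max(-1.0, min(1.0, measured_phase / k))
    return math.degrees(math.asin(s)) - true_angle_deg


err_boresight = angle_error_deg(0.0, 5.0)   # 5 deg phase error, target at boresight
err_off_axis = angle_error_deg(40.0, 5.0)   # same phase error, target at 40 deg
```

Under these assumptions, a 5 degree phase error gives roughly 1.6 degrees of angular error at boresight and slightly more than 2 degrees for a target 40 degrees off axis, because the sensitivity grows as 1/cos(theta).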

Humidity and precipitation present another critical environmental factor affecting calibration stability. Water vapor in the atmosphere attenuates mmWave signals differently across the frequency spectrum, and attenuation rates increase dramatically during precipitation events. Studies demonstrate that heavy rainfall can reduce radar detection range by 30-40% and introduce velocity measurement errors of up to 0.5 m/s, necessitating dynamic calibration adjustments.
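How path attenuation shortens detection range can be estimated from the radar equation. The following sketch assumes a point target, a received power proportional to 1/R^4, and a uniform one-way attenuation given in dB/km; the attenuation value is an input to be taken from measurements or a propagation model, and the 10 dB/km used here is purely a placeholder, not a rain figure from the source.

```python
import math


def max_range_with_attenuation(clear_range_m, atten_db_per_km):
    """Range at which the echo matches the clear-air echo at clear_range_m.

    Solves 40*log10(R/R0) + 2*a*R = 0 by bisection, where a is the one-way
    attenuation and the factor 2 accounts for the two-way path.
    """
    def excess_loss_db(r):
        spreading = 40.0 * math.log10(r / clear_range_m)
        absorption = 2.0 * atten_db_per_km * r / 1000.0
        return spreading + absorption

    lo, hi = 1e-3, clear_range_m
    for _ in range(60):
        mid = (lo + hi) / 2.0
        if excess_loss_db(mid) > 0.0:
            hi = mid   # too much total loss: the target must be closer
        else:
            lo = mid
    return (lo + hi) / 2.0


r_clear = max_range_with_attenuation(200.0, 0.0)
r_wet = max_range_with_attenuation(200.0, 10.0)  # placeholder attenuation
reduction_pct = 100.0 * (1.0 - r_wet / r_clear)
```

This model captures only path attenuation. Backscatter from raindrops and water films on the radome, which are not modeled here, can reduce range further.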

Vibration and mechanical stress, particularly prevalent in automotive applications, disrupt the precise alignment of radar components. Field tests reveal that continuous vibration exposure can gradually shift antenna alignment by up to 0.3 degrees per 1000 km of operation, requiring periodic recalibration to maintain guidance accuracy. This effect becomes more pronounced in off-road environments where vibration profiles are more severe and unpredictable.
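The practical consequence of a slow alignment drift can be worked through with basic trigonometry. The sketch below takes the 0.3 degrees per 1000 km figure quoted above and assumes, for simplicity, that the drift accumulates linearly with distance; the tolerance value and function names are hypothetical.

```python
import math


def lateral_error_m(range_m, misalignment_deg):
    """Cross-range position error of a target caused by a boresight misalignment."""
    return range_m * math.tan(math.radians(misalignment_deg))


def km_until_recalibration(tolerance_deg, drift_deg_per_1000km=0.3):
    """Distance driven before the drift reaches the tolerance (linear-drift assumption)."""
    return 1000.0 * tolerance_deg / drift_deg_per_1000km


offset_at_100m = lateral_error_m(100.0, 0.3)   # after 1000 km of worst-case drift
interval_km = km_until_recalibration(0.5)      # hypothetical 0.5 deg tolerance
```

With these numbers, 0.3 degrees of misalignment shifts a target at 100 m sideways by about half a meter, which is a meaningful fraction of a lane width.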

Electromagnetic interference (EMI) from nearby electronic systems represents a growing concern for radar calibration. The proliferation of wireless devices operating in adjacent frequency bands creates complex interference patterns that can corrupt calibration procedures. Recent research demonstrates that calibration performed in electromagnetically "quiet" environments may become invalid when deployed in dense urban settings with multiple RF emitters.

Atmospheric pressure variations, though often overlooked, influence signal propagation characteristics by altering the refractive index of air. This effect becomes particularly significant for long-range detection scenarios where cumulative errors can compromise guidance decisions. Altitude changes of 1000 meters are reported to introduce range measurement errors exceeding 1.5 meters without appropriate compensation algorithms, although the refractive-index contribution alone scales with path length and is far smaller at typical automotive ranges.
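The size of the refractive-index effect can be estimated with the widely used two-term radio refractivity approximation, N = 77.6/T * (P + 4810 e/T), with pressure P and water vapor pressure e in hPa and temperature T in kelvin. The sketch below is a first-order model only: it assumes dry air, standard-atmosphere values at sea level and at 1000 m, and a radar calibrated at sea level; the function names are illustrative.

```python
def refractivity_n_units(pressure_hpa, temp_k, vapor_hpa=0.0):
    """Radio refractivity N = (n - 1) * 1e6 from the two-term approximation."""
    return 77.6 / temp_k * (pressure_hpa + 4810.0 * vapor_hpa / temp_k)


def range_error_m(range_m, n_cal, n_now):
    """Range error when the radar assumes refractivity n_cal but the air has n_now.

    Measured delay scales with the refractive index, so the estimated range is
    R * n_now / n_cal, giving an error of about R * (n_now - n_cal) * 1e-6.
    """
    return range_m * (n_now - n_cal) * 1e-6


n_sea_level = refractivity_n_units(1013.25, 288.15)  # standard atmosphere, 0 m
n_1000m = refractivity_n_units(898.75, 281.65)       # standard atmosphere, 1000 m
err_300m = range_error_m(300.0, n_sea_level, n_1000m)
```

Under these assumptions the refractivity changes by roughly 25 N-units, so the range scaling error is on the order of tens of parts per million, which is millimeters at a 300 m target. Meter-level errors like the figure quoted above would therefore imply much longer paths or additional mechanisms beyond this simple model.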

Advanced calibration methodologies are emerging to address these environmental challenges. Adaptive calibration systems that use environmental sensors to detect changing conditions and apply real-time compensation factors show promising results. Machine learning approaches that recognize environmental patterns and predict calibration drift before it affects performance represent the cutting edge of research in this domain. These developments point toward environmentally robust calibration systems capable of maintaining autonomous guidance accuracy across diverse operating conditions.
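A minimal form of sensor-driven adaptive compensation is a per-channel phase correction that follows a temperature reading. The sketch below assumes each receive channel drifts linearly with temperature and that the coefficients (in degrees of phase per °C) were characterized beforehand; the function name, the linear model, and the example coefficient of 0.5 degrees per °C (matching the 5 degrees per 10°C figure cited earlier) are illustrative assumptions rather than a documented method.

```python
import cmath
import math


def compensate_channels(samples, temp_c, ref_temp_c, coeff_deg_per_c):
    """Rotate each channel back by its predicted temperature-induced phase drift.

    samples: one complex sample per receive channel
    coeff_deg_per_c: assumed per-channel drift coefficients, degrees per deg C
    """
    corrected = []
    for sample, coeff in zip(samples, coeff_deg_per_c):
        drift_rad = math.radians(coeff * (temp_c - ref_temp_c))
        corrected.append(sample * cmath.exp(-1j * drift_rad))
    return corrected


# Simulate two channels calibrated at 25 C and operated at 45 C.
ideal = [1 + 0j, 0 + 1j]
coeffs = [0.0, 0.5]  # channel 1 drifts by 0.5 deg of phase per deg C (assumed)
drifted = [x * cmath.exp(1j * math.radians(k * 20.0)) for x, k in zip(ideal, coeffs)]
restored = compensate_channels(drifted, temp_c=45.0, ref_temp_c=25.0,
                               coeff_deg_per_c=coeffs)
```

A learned drift model would replace the fixed linear coefficients with a predictor trained on logged sensor and residual data, while the correction step itself could remain the same.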
