Quantifying Topology Evolution During Reprocessing: Experimental Workflows And Metrics

AUG 27, 2025 | 9 MIN READ

Topology Evolution Quantification Background and Objectives

The evolution of material topology during reprocessing represents a critical area of study in materials science and engineering. This field has gained significant momentum over the past two decades, driven by the increasing demand for sustainable manufacturing processes and the circular economy paradigm. Historically, the quantification of topological changes in materials has been approached through various methodologies, from basic microscopy to advanced computational modeling, with each era bringing new insights and analytical capabilities.

The fundamental challenge in this domain lies in accurately measuring and predicting how material structures transform when subjected to reprocessing conditions such as re-melting, re-extrusion, or recycling. Early research primarily focused on macroscopic property changes, often overlooking the microscopic topological transformations that ultimately govern material behavior. This gap has prompted the development of more sophisticated quantification techniques and metrics.

Recent advances in imaging, computing power, and machine learning algorithms have transformed our ability to track and analyze topological evolution at much higher resolution and precision. These developments have enabled researchers to establish correlations between processing parameters and resulting material structures, creating a foundation for predictive modeling of reprocessing outcomes.

The primary objective of topology evolution quantification is to develop standardized experimental workflows and metrics that can reliably capture and characterize structural changes across multiple reprocessing cycles. This includes establishing protocols for sample preparation, data acquisition, feature extraction, and statistical analysis that yield reproducible and comparable results across different material systems and processing conditions.
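As a minimal sketch of the statistical-analysis step, the Python below summarizes one topological metric measured on replicate samples at each reprocessing cycle and expresses each cycle's mean relative to a baseline cycle. The metric, the replicate layout, and the function names `summarize_cycles` and `relative_change` are illustrative assumptions, not a published protocol.

```python
from statistics import mean, stdev


def summarize_cycles(measurements: dict[int, list[float]]) -> dict[int, tuple[float, float]]:
    """Return (mean, sample standard deviation) of one metric per reprocessing cycle.

    `measurements` maps a cycle index (0 = virgin material) to replicate values
    of a hypothetical topological metric, e.g. a network connectivity index.
    """
    summary = {}
    for cycle, values in sorted(measurements.items()):
        if len(values) < 2:
            # A sample standard deviation needs at least two replicates.
            raise ValueError(f"cycle {cycle} needs at least two replicates")
        summary[cycle] = (mean(values), stdev(values))
    return summary


def relative_change(summary: dict[int, tuple[float, float]], baseline: int = 0) -> dict[int, float]:
    """Express each cycle's mean as a fractional change from the baseline cycle's mean."""
    base_mean = summary[baseline][0]
    if base_mean == 0:
        raise ValueError("baseline mean is zero; relative change is undefined")
    return {cycle: (m - base_mean) / base_mean for cycle, (m, _) in summary.items()}


# Hypothetical replicate data for three cycles.
data = {0: [0.80, 0.82, 0.81], 1: [0.76, 0.78, 0.77], 2: [0.70, 0.72, 0.71]}
print(relative_change(summarize_cycles(data)))
```

Reporting the replicate spread alongside the mean makes it easier to judge whether a cycle-to-cycle change exceeds the variability introduced by sample preparation.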

Additionally, this research aims to bridge the gap between experimental observations and theoretical models by providing quantitative data that can validate and refine existing theories of material transformation during reprocessing. By establishing robust quantification methodologies, researchers seek to enable more accurate predictions of material performance after multiple reprocessing cycles.

From an industrial perspective, the goal is to translate these scientific insights into practical tools that can inform manufacturing decisions, optimize reprocessing parameters, and ultimately extend the useful life of materials through multiple cycles without compromising performance. This has significant implications for reducing waste, conserving resources, and minimizing the environmental footprint of material production and utilization.

The evolution of this field continues to be shaped by interdisciplinary collaboration, combining expertise from materials science, mechanical engineering, computer vision, statistical analysis, and sustainability science to address the complex challenges of quantifying topology evolution during reprocessing.

Market Applications for Reprocessing Topology Analysis

The market for reprocessing topology analysis technologies is experiencing significant growth across multiple industries where material recycling and reuse are critical operational components. In the plastics manufacturing sector, topology analysis tools enable manufacturers to precisely evaluate how recycled polymers behave during multiple processing cycles, directly impacting product quality and material efficiency. Companies implementing these analytical methods report up to 30% reduction in material waste and substantial improvements in final product consistency.

The automotive industry represents another substantial market, where lightweight composite materials are increasingly used. Reprocessing topology analysis helps manufacturers estimate how many times carbon-fiber-reinforced polymers can be recycled before structural integrity is compromised. This capability is particularly valuable as automotive manufacturers face stringent sustainability regulations requiring increased use of recycled materials while maintaining safety standards.

Aerospace manufacturing constitutes a premium market segment where material performance requirements are exceptionally demanding. Topology analysis during reprocessing provides critical data on microstructural changes in advanced alloys and composites, enabling manufacturers to certify recycled materials for safety-critical applications. The market value proposition centers on significant cost savings while maintaining the highest performance standards.

Consumer electronics manufacturers are adopting these technologies to address the growing electronic waste crisis. By quantifying how material properties evolve during multiple recycling cycles, companies can design products specifically optimized for disassembly and material recovery. This application is driving demand for portable, rapid-analysis systems that can be integrated directly into recycling facilities.

The medical device industry represents an emerging market with unique requirements. Reprocessing topology analysis enables manufacturers to validate sterilization processes for reusable devices while ensuring material integrity is maintained. This application demands extremely high precision analysis tools capable of detecting nanoscale structural changes.

Packaging represents the largest volume market by material throughput, where thin-film polymers and multi-layer materials present complex recycling challenges. Topology analysis technologies help optimize recycling processes by predicting how material properties will evolve through multiple use cycles, enabling the development of truly circular packaging solutions.

The global market for these analytical technologies is projected to grow substantially as circular economy principles become increasingly embedded in industrial practices and regulatory frameworks worldwide. Early adopters are primarily found in regions with advanced manufacturing capabilities and stringent environmental regulations.

Current Challenges in Topology Evolution Measurement

Despite significant advancements in polymer processing technologies, accurately measuring and quantifying topology evolution during reprocessing remains a formidable challenge. Current methodologies face several critical limitations that impede comprehensive understanding of structural changes occurring at multiple scales during polymer reprocessing cycles.

The primary challenge lies in the multi-scale nature of topology evolution, which spans from molecular to macroscopic levels. Existing characterization techniques often excel at specific scales but fail to provide integrated insights across the entire structural hierarchy. For instance, rheological measurements effectively capture bulk properties but offer limited information about localized structural changes, while microscopy techniques provide detailed local information but struggle with statistical representation of the entire sample.

Temporal resolution presents another significant obstacle. Topology evolution during reprocessing occurs at varying rates across different stages, with some critical transformations happening within milliseconds. Current in-situ measurement technologies lack sufficient temporal resolution to capture these rapid changes, particularly under the high shear rates and temperatures characteristic of industrial processing conditions.

The non-equilibrium nature of polymer processing further complicates measurement efforts. Traditional characterization methods typically require samples to be at equilibrium, yet the most relevant structural changes occur precisely during non-equilibrium states. This fundamental mismatch between measurement conditions and the phenomena of interest creates significant data interpretation challenges.

Data integration across different measurement techniques remains problematic. Researchers currently employ multiple complementary methods to characterize topology evolution, but lack standardized protocols for correlating and integrating these diverse datasets. This fragmentation hinders the development of comprehensive models that could predict topology evolution across multiple processing cycles.
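One low-tech way to relate two techniques is to key every result by reprocessing cycle, keep only the cycles both techniques measured, and compute a correlation. The sketch below assumes one scalar summary per technique per cycle; the dictionaries, units, and function names are hypothetical, and with only a handful of cycles a correlation coefficient is a screening aid, not evidence of a mechanism.

```python
from math import sqrt


def align_by_cycle(a: dict[int, float], b: dict[int, float]) -> tuple[list[float], list[float]]:
    """Keep only cycles measured by both techniques, in ascending cycle order."""
    common = sorted(set(a) & set(b))
    return [a[c] for c in common], [b[c] for c in common]


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two series of equal length with at least 3 points")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sxy / sqrt(sxx * syy)


# Hypothetical per-cycle results from two techniques.
rheology = {0: 1200.0, 1: 1100.0, 2: 990.0, 3: 870.0}   # e.g. zero-shear viscosity, Pa*s
microscopy = {1: 0.021, 2: 0.034, 3: 0.052, 4: 0.070}   # e.g. void fraction
x, y = align_by_cycle(rheology, microscopy)
print(round(pearson(x, y), 3))
```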

Sample preparation inconsistencies introduce additional variability in measurements. The very act of preparing samples for analysis can alter the topology being studied, creating uncertainty about whether observed changes reflect actual processing effects or artifacts introduced during sample preparation.

Finally, there exists a significant gap between laboratory measurements and industrial processing conditions. Most current characterization techniques operate under idealized laboratory conditions that inadequately represent the complex thermal, mechanical, and chemical environments encountered in industrial reprocessing operations, limiting the practical applicability of research findings.

Established Experimental Workflows for Topology Analysis

01 Network topology reconfiguration during reprocessing

During network reprocessing, the topology can be reconfigured dynamically to adapt to changing conditions. This involves mechanisms for detecting topology changes, updating routing tables, and establishing new connections while maintaining network functionality. The reconfiguration process ensures continuous operation even when parts of the network are being reprocessed or upgraded.

- Network topology evolution during reconfiguration: Network topology evolves during reconfiguration processes to adapt to changing requirements or network conditions. This evolution involves dynamic adjustments to the network structure, including node connections, routing paths, and hierarchical relationships. The reconfiguration process ensures continuous network operation while transitioning from one topology state to another, minimizing disruption to network services and maintaining optimal performance during the transition phase.

- Topology optimization algorithms for network reprocessing: Specialized algorithms are employed to optimize network topology during reprocessing phases. These algorithms analyze network traffic patterns, resource utilization, and performance metrics to determine the most efficient topology configuration. They implement adaptive strategies that can dynamically adjust network parameters based on real-time conditions, ensuring optimal data flow and minimizing latency during topology transitions.

- Fault tolerance mechanisms during topology evolution: During topology reprocessing, fault tolerance mechanisms are implemented to maintain network reliability. These mechanisms include redundant paths, backup systems, and error detection protocols that can identify and address failures in real-time. The system continuously monitors network health during topology transitions, automatically rerouting traffic around failed components and ensuring service continuity even when parts of the network are being reconfigured.

- Scalable topology management systems: Scalable management systems are designed to handle topology evolution across networks of varying sizes. These systems employ hierarchical control structures that can efficiently manage topology changes from small local networks to large distributed systems. They incorporate modular architectures that allow for incremental topology updates without requiring complete network reconfiguration, enabling seamless scaling of network resources in response to changing demands.

- Automated topology discovery and adaptation: Automated systems for topology discovery and adaptation enable networks to self-organize during reprocessing phases. These systems employ machine learning algorithms and artificial intelligence to analyze network conditions, predict optimal configurations, and implement topology changes with minimal human intervention. They continuously monitor network performance metrics and automatically adjust the topology to maintain optimal efficiency, adapting to changing traffic patterns and resource availability in real-time.
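The change-detection and route-update steps described in item 01 can be illustrated with a small Python sketch: compare two link lists to find added and removed links and, if anything changed, rebuild a next-hop table with breadth-first search. Treating links as undirected and unweighted, and using minimum hop count as the routing criterion, are simplifying assumptions; real routing protocols use their own metrics and distributed algorithms.

```python
from collections import deque


def diff_topology(old_edges, new_edges):
    """Return (added, removed) undirected links between two topology snapshots."""
    old = {frozenset(e) for e in old_edges}
    new = {frozenset(e) for e in new_edges}
    return new - old, old - new


def next_hops(edges: list[tuple[str, str]], source: str) -> dict[str, str]:
    """Breadth-first search giving, for every reachable node, the first hop on a
    minimum-hop path from `source` (ties broken by sorted neighbor order)."""
    adjacency: dict[str, set[str]] = {}
    for u, v in edges:
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)
    table: dict[str, str] = {}
    queue = deque()
    for neighbor in sorted(adjacency.get(source, ())):
        table[neighbor] = neighbor  # direct neighbors are their own next hop
        queue.append(neighbor)
    visited = {source, *table}
    while queue:
        node = queue.popleft()
        for neighbor in sorted(adjacency[node]):
            if neighbor not in visited:
                visited.add(neighbor)
                table[neighbor] = table[node]  # inherit the first hop of the parent
                queue.append(neighbor)
    return table


# Hypothetical four-node topology before and after adding link A-D.
old = [("A", "B"), ("B", "C"), ("C", "D")]
new = [("A", "B"), ("B", "C"), ("C", "D"), ("A", "D")]
added, removed = diff_topology(old, new)
if added or removed:  # recompute routes only when the topology actually changed
    print(next_hops(new, "A"))
```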

02 Topology evolution algorithms and methods

Various algorithms and methods are employed to manage topology evolution during reprocessing. These include optimization techniques, machine learning approaches, and heuristic algorithms that determine the most efficient topology based on current network conditions and requirements. These methods help predict potential bottlenecks and automatically adjust the network topology to maintain optimal performance.

03 Fault tolerance in evolving topologies

Fault tolerance mechanisms are essential in managing topology evolution during reprocessing. These include redundancy strategies, failover mechanisms, and error detection systems that ensure network reliability even when the topology is changing. By implementing these mechanisms, networks can continue to function effectively despite component failures or topology modifications during the reprocessing phase.

04 Scalability and performance optimization in topology evolution

Scalability considerations are crucial when evolving network topologies during reprocessing. This involves techniques for load balancing, resource allocation, and performance monitoring to ensure that the network can handle increased traffic or additional nodes. The optimization process focuses on maintaining or improving network performance metrics while the topology undergoes changes.

05 Security aspects of topology evolution

Security considerations are vital during topology evolution and reprocessing. This includes maintaining secure connections, implementing access controls, and ensuring data integrity throughout the transition process. Security protocols must be adapted to the changing topology to protect against vulnerabilities that might emerge during reprocessing, while ensuring that authorized communications remain uninterrupted.
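The redundancy argument in the fault-tolerance item can be checked mechanically: a node whose failure disconnects the network (an articulation point, or cut vertex) marks a place without a redundant path. The sketch below applies the standard depth-first-search low-link method (Hopcroft-Tarjan) to an undirected link list; it assumes a simple graph with no duplicate links and uses recursion, so very large topologies would need an iterative version.

```python
from typing import Optional


def articulation_points(edges: list[tuple[str, str]]) -> set[str]:
    """Nodes whose removal disconnects their part of the network (cut vertices)."""
    adjacency: dict[str, set[str]] = {}
    for u, v in edges:
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)
    discovery: dict[str, int] = {}
    low: dict[str, int] = {}
    cut: set[str] = set()
    counter = 0

    def dfs(node: str, parent: Optional[str]) -> None:
        nonlocal counter
        discovery[node] = low[node] = counter
        counter += 1
        children = 0
        for neighbor in sorted(adjacency[node]):
            if neighbor not in discovery:
                children += 1
                dfs(neighbor, node)
                low[node] = min(low[node], low[neighbor])
                # No back edge from the child's subtree reaches above `node`.
                if parent is not None and low[neighbor] >= discovery[node]:
                    cut.add(node)
            elif neighbor != parent:
                # Back edge to an already-visited node other than the tree parent.
                low[node] = min(low[node], discovery[neighbor])
        # A DFS root is a cut vertex only if it has two or more DFS children.
        if parent is None and children > 1:
            cut.add(node)

    for start in sorted(adjacency):
        if start not in discovery:
            dfs(start, None)
    return cut


# Hypothetical ring of four nodes with one spur link D-E.
print(articulation_points([("A", "B"), ("B", "C"), ("C", "D"), ("D", "A"), ("D", "E")]))
```

Here every ring node has a redundant path, but node E depends on D alone, so D is reported as a single point of failure.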

Leading Research Groups and Industrial Players

The topology evolution quantification field is currently in its early growth stage, characterized by increasing research interest but limited commercial applications. The market size is estimated to be moderate, with potential for significant expansion as applications in medical imaging, semiconductor manufacturing, and materials science mature. Technologically, the field is still developing, with varying levels of maturity across players. Elucid Bioimaging leads in medical applications with their Vascucap software for atherosclerosis assessment, while IBM demonstrates advanced capabilities through their research divisions. Academic institutions like Xiamen University and South China University of Technology contribute fundamental research, while companies like TSMC and SK Hynix explore applications in semiconductor manufacturing. Canon and Sony are leveraging their imaging expertise to develop related technologies, indicating growing cross-industry interest in topology quantification methodologies.

International Business Machines Corp.

Technical Solution: IBM has developed a comprehensive topology quantification platform that leverages their expertise in high-performance computing and AI. Their solution integrates quantum computing algorithms with traditional simulation methods to analyze complex topological transformations during materials reprocessing. IBM's approach utilizes persistent homology techniques implemented on their quantum systems to identify critical topological features that evolve during thermal, mechanical, or chemical processing. Their workflow incorporates automated feature extraction from experimental data, statistical analysis of topological invariants, and predictive modeling of structure-property relationships. The platform includes specialized visualization tools that render topological changes in 4D (3D plus time), allowing researchers to identify critical transition points during processing. IBM has demonstrated this technology's effectiveness in semiconductor manufacturing, where nanoscale topological features significantly impact device performance[2][5]. The system can process terabytes of characterization data to extract meaningful topological metrics that correlate with material functionality.

Strengths: Unparalleled computational resources and AI expertise; established partnerships with manufacturing industries; ability to integrate quantum computing approaches for complex topological analysis. Weaknesses: Solutions may be costly and require significant computational infrastructure; potential overreliance on simulation versus experimental validation.
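IBM's platform is not public, so the following is only a generic illustration of what persistent homology computes, not IBM's implementation. It calculates 0-dimensional persistence (birth and death of connected components) for the sublevel sets of a 1D profile, for example a hypothetical density profile along a line scan, using union-find and the elder rule: when two components merge, the younger one (higher birth value) dies. Long-lived pairs correspond to prominent features; short-lived pairs usually reflect noise.

```python
import math


def persistence_0d(signal: list[float]) -> list[tuple[float, float]]:
    """(birth, death) pairs of connected components of the sublevel sets of a 1D signal."""
    n = len(signal)
    parent = list(range(n))
    birth = [0.0] * n
    active = [False] * n
    pairs: list[tuple[float, float]] = []

    def find(i: int) -> int:
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Sweep the threshold upward: add samples in order of increasing value.
    for i in sorted(range(n), key=lambda k: (signal[k], k)):
        active[i] = True
        birth[i] = signal[i]
        for j in (i - 1, i + 1):
            if not (0 <= j < n and active[j]):
                continue
            ri, rj = find(i), find(j)
            if ri == rj:
                continue
            # Elder rule: the component born later (higher value) dies at this merge.
            older, younger = (ri, rj) if (birth[ri], ri) < (birth[rj], rj) else (rj, ri)
            if birth[younger] < signal[i]:  # skip zero-persistence pairs
                pairs.append((birth[younger], signal[i]))
            parent[younger] = older
    # The component that survives the sweep never dies (infinite persistence).
    pairs.extend((birth[r], math.inf) for r in {find(i) for i in range(n)})
    return sorted(pairs)


# Hypothetical line-scan profile with three local minima.
print(persistence_0d([3, 1, 4, 0, 5, 2, 6]))  # [(0, inf), (1, 4), (2, 5)]
```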

The Board of Trustees of the University of Illinois

Technical Solution: The University of Illinois has developed advanced computational frameworks for quantifying topological evolution during materials reprocessing. Their approach combines high-resolution imaging techniques with machine learning algorithms to track microstructural changes in real-time. The university's Materials Research Laboratory utilizes X-ray tomography and electron microscopy to capture 3D representations of material structures at different processing stages. Their proprietary software suite implements persistent homology and other topological data analysis (TDA) methods to quantify structural evolution metrics including connectivity, void distribution, and interface characteristics. This methodology has been successfully applied to polymer composites, metal alloys, and ceramic materials, enabling researchers to establish quantitative relationships between processing parameters and resulting material properties[1][3].

Strengths: Strong academic foundation with access to advanced characterization equipment and computational resources; interdisciplinary collaboration between materials science, mathematics, and computer science departments. Weaknesses: Potential challenges in commercializing research findings and scaling solutions for industrial applications; academic pace may lag behind industry implementation needs.
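Connectivity and void metrics of the kind listed for the Illinois workflow can be reduced, in the simplest 2D case, to two Betti numbers of a segmented (binary) image: b0, the number of connected solid regions, and b1, the number of enclosed voids. The sketch below counts them by flood fill, using 8-connectivity for the solid phase and 4-connectivity for voids, a standard complementary pairing that avoids miscounting diagonal gaps. It is an illustrative assumption, not the university's proprietary software; 3D tomography data would need the 3D analogue (which also counts tunnels) or a dedicated topological data analysis library.

```python
from collections import deque

N4 = [(-1, 0), (1, 0), (0, -1), (0, 1)]
N8 = N4 + [(-1, -1), (-1, 1), (1, -1), (1, 1)]


def _components(grid: list[list[int]], value: int, steps: list[tuple[int, int]]) -> list[list[tuple[int, int]]]:
    """Flood-fill all connected regions of pixels equal to `value`."""
    rows, cols = len(grid), len(grid[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != value or seen[r][c]:
                continue
            seen[r][c] = True
            queue, cells = deque([(r, c)]), []
            while queue:
                y, x = queue.popleft()
                cells.append((y, x))
                for dy, dx in steps:
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < rows and 0 <= nx < cols and grid[ny][nx] == value and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            regions.append(cells)
    return regions


def betti_2d(grid: list[list[int]]) -> tuple[int, int]:
    """Return (b0, b1) for a binary image: 1 = solid, 0 = void."""
    if not grid or not grid[0]:
        return 0, 0
    rows, cols = len(grid), len(grid[0])
    b0 = len(_components(grid, 1, N8))
    # A void region counts as an enclosed hole only if it never touches the border.
    b1 = sum(
        1
        for cells in _components(grid, 0, N4)
        if not any(y in (0, rows - 1) or x in (0, cols - 1) for y, x in cells)
    )
    return b0, b1


# Hypothetical segmented image: a ring enclosing one void, plus an isolated solid pixel.
image = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 0],
    [0, 1, 0, 1, 0, 1],
    [0, 1, 1, 1, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
b0, b1 = betti_2d(image)
print(b0, b1, "Euler characteristic:", b0 - b1)
```

Tracking b0 and b1 across reprocessing cycles gives simple counts of fragmentation and enclosed porosity; voids that touch the image border are treated as open porosity rather than holes.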

Key Metrics and Analytical Techniques for Quantification

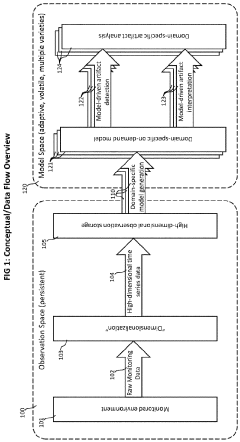

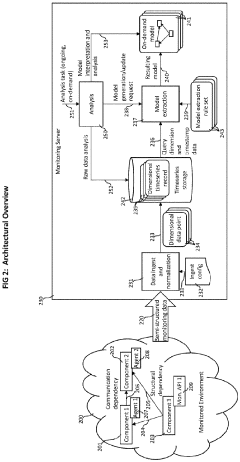

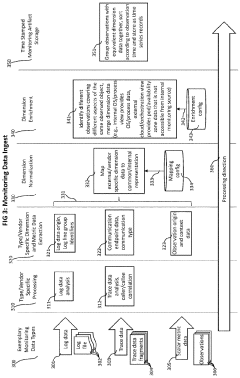

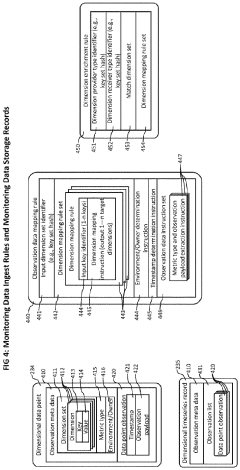

Method and system for the on-demand generation of graph-like models out of multidimensional observation data

Patent pending: EP4390696A2

Innovation

- A computer-implemented method that ingests monitoring data from various sources, transforms it into a unified, multidimensional timeseries format, and generates demand-specific models using key-value pairs and graph-like structures to analyze and visualize monitoring artifacts, identify causal dependencies, and automate countermeasure applications.

Quantization of residuals in video coding

Patent: WO2021005348A1

Innovation

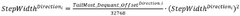

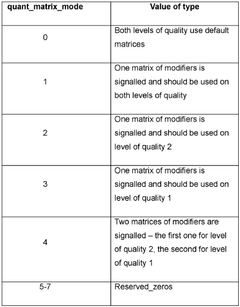

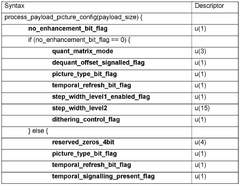

- A method that involves downsampling an input signal, encoding it using a base encoder, generating residuals, and applying adaptive quantization to these residuals, which can vary the step-width and use bin folding, dequantization offsets, and dead zones based on statistical characteristics and operating conditions to optimize compression efficiency and visual quality.
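The summary above mentions step widths, dead zones, and dequantization offsets. The generic dead-zone scalar quantizer below shows how those three parameters interact; it is not the method claimed in WO2021005348A1 and it omits adaptive step selection and bin folding. Residuals whose magnitude falls below `dead_zone` map to level 0, larger magnitudes are binned in steps of `step`, and `offset` sets where inside each bin the decoder reconstructs the value. All parameter values in the example are hypothetical.

```python
import math


def quantize(residual: float, step: float, dead_zone: float) -> int:
    """Map a residual to an integer level; |residual| < dead_zone maps to 0."""
    if step <= 0 or dead_zone <= 0:
        raise ValueError("step and dead_zone must be positive")
    magnitude = abs(residual)
    if magnitude < dead_zone:
        return 0
    # Level k covers magnitudes in [dead_zone + (k - 1) * step, dead_zone + k * step).
    level = math.floor((magnitude - dead_zone) / step) + 1
    return level if residual > 0 else -level


def dequantize(level: int, step: float, dead_zone: float, offset: float) -> float:
    """Reconstruct a residual; `offset` (0 <= offset < step) picks the point inside the bin."""
    if level == 0:
        return 0.0
    magnitude = dead_zone + (abs(level) - 1) * step + offset
    return math.copysign(magnitude, level)


# Hypothetical residuals and parameters.
residuals = [0.5, -2.9, 3.0, 6.9, 7.0, -10.0]
levels = [quantize(r, step=4.0, dead_zone=3.0) for r in residuals]
print(levels)  # [0, 0, 1, 1, 2, -2]
print([dequantize(q, step=4.0, dead_zone=3.0, offset=2.0) for q in levels])
```

With `offset = step / 2`, nonzero levels are reconstructed at bin midpoints, so their error is at most `step / 2`; a smaller offset biases reconstructions toward zero.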

Data Management and Computational Requirements

The effective management of topology evolution data during reprocessing experiments requires robust infrastructure and computational capabilities. Current research workflows generate massive datasets, often exceeding terabytes in size, particularly when capturing high-resolution 3D microstructural changes across multiple time steps. These datasets necessitate specialized storage solutions with high-speed access capabilities to facilitate real-time analysis during experimental runs.
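A back-of-the-envelope calculation shows how quickly 3D time series reach terabyte scale. The parameters in the example (a 2048 × 2048 × 2048 voxel volume stored at 16 bits per voxel and captured at 100 time steps) are hypothetical, chosen only to illustrate the arithmetic: one volume is 2^33 voxels × 2 bytes = 16 GiB, so 100 steps come to 1600 GiB.

```python
def raw_size_bytes(shape: tuple[int, int, int], bytes_per_voxel: int, time_steps: int, cycles: int = 1) -> int:
    """Uncompressed size of a 3D time series, in bytes."""
    nx, ny, nz = shape
    for value in (nx, ny, nz, bytes_per_voxel, time_steps, cycles):
        if value <= 0:
            raise ValueError("all dimensions and counts must be positive")
    return nx * ny * nz * bytes_per_voxel * time_steps * cycles


def to_tib(n_bytes: int) -> float:
    """Convert bytes to tebibytes (2**40 bytes)."""
    return n_bytes / 2**40


# Hypothetical acquisition parameters.
size = raw_size_bytes((2048, 2048, 2048), bytes_per_voxel=2, time_steps=100)
print(f"{to_tib(size):.4f} TiB")  # prints "1.5625 TiB"
```

Repeating the acquisition for every reprocessing cycle (the `cycles` argument) scales the total linearly.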

Distributed computing frameworks have become essential for processing topology evolution data. Systems utilizing parallel computing architectures can reduce analysis time from days to hours, enabling more responsive experimental adjustments. Cloud-based solutions offer scalability advantages, allowing research teams to dynamically allocate computational resources based on experimental complexity and data volume.
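Per-time-step metrics are independent of one another, so the analysis parallelizes naturally. The sketch below maps a metric over many volumes with `concurrent.futures`; the `void_fraction` metric and the flattened-list volume format are hypothetical stand-ins. It defaults to a thread pool so it runs anywhere, but for CPU-bound pure-Python metrics a `ProcessPoolExecutor` (with the metric defined at module top level so it can be pickled) is what actually uses multiple cores; cluster frameworks apply the same map pattern across machines.

```python
from concurrent.futures import ThreadPoolExecutor


def void_fraction(volume: list[int]) -> float:
    """Hypothetical metric: share of void (0) voxels in a flattened, segmented volume."""
    if not volume:
        raise ValueError("empty volume")
    return sum(1 for voxel in volume if voxel == 0) / len(volume)


def compute_per_step(volumes, metric=void_fraction, executor_cls=ThreadPoolExecutor, max_workers=4):
    """Apply `metric` to every time step concurrently; results keep the input order."""
    with executor_cls(max_workers=max_workers) as pool:
        return list(pool.map(metric, volumes))


if __name__ == "__main__":
    # Hypothetical segmented volumes for three time steps.
    steps = [[0, 1, 1, 1], [0, 0, 1, 1], [0, 0, 0, 1]]
    print(compute_per_step(steps))  # [0.25, 0.5, 0.75]
```

Passing `executor_cls=ProcessPoolExecutor` switches to separate processes without changing the calling code.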

Data standardization presents a significant challenge in this field. The heterogeneity of experimental setups and measurement techniques creates inconsistencies in data formats and metadata structures. Implementing standardized data models and interchange formats specific to topology evolution metrics would substantially improve interoperability between research groups and analytical platforms.
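A standardized interchange format can start as small as one versioned record per measured value. The Python below is a hypothetical schema (`TopologyRecord` and `SCHEMA_VERSION` are invented for illustration, not an existing community standard) that serializes to JSON and refuses to load records written under a different schema version.

```python
import json
from dataclasses import asdict, dataclass, field

SCHEMA_VERSION = "0.1"  # hypothetical version tag for this illustrative schema


@dataclass
class TopologyRecord:
    """One measured topology metric, with the context needed to compare it across labs."""
    sample_id: str
    material: str
    cycle: int                 # reprocessing cycle index, 0 = virgin material
    technique: str             # e.g. "micro-CT", "rheometry"
    metric: str                # e.g. "void_fraction", "betti_0"
    value: float
    unit: str                  # "1" for dimensionless quantities
    conditions: dict = field(default_factory=dict)  # processing parameters

    def to_json(self) -> str:
        return json.dumps({"schema_version": SCHEMA_VERSION, **asdict(self)}, sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "TopologyRecord":
        data = json.loads(text)
        version = data.pop("schema_version", None)
        if version != SCHEMA_VERSION:
            raise ValueError(f"unsupported schema version: {version!r}")
        return cls(**data)


# Hypothetical record for one measurement.
record = TopologyRecord("S-01", "polypropylene", 3, "micro-CT", "void_fraction", 0.052, "1",
                        {"melt_temperature_C": 230})
print(record.to_json())
```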

Machine learning approaches are increasingly integrated into data processing pipelines for topology quantification. These methods require substantial computational resources, particularly for training neural networks capable of identifying complex topological features. GPU clusters have demonstrated 10-15x performance improvements over traditional CPU-based systems for these applications, making them a preferred infrastructure component.

Real-time visualization of topology evolution demands specialized rendering capabilities. Interactive 3D visualization tools require workstations with dedicated graphics processing units and optimized memory configurations. Remote visualization services are emerging as a solution for researchers to access and manipulate complex topological models without requiring local high-performance hardware.

Data preservation and long-term accessibility represent critical requirements for longitudinal studies of material topology. Implementing comprehensive metadata schemas that document experimental conditions, processing parameters, and analytical methodologies ensures reproducibility and enables meaningful comparison across studies. Research data repositories with domain-specific features for materials science are developing specialized tools for topology data curation and discovery.
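For long-term accessibility, a common preservation practice is to store a cryptographic checksum next to the descriptive metadata so that later copies can be checked for silent corruption. A minimal sketch with Python's `hashlib`; the manifest layout and field names are assumptions, not a repository requirement.

```python
import hashlib
import json


def make_manifest(data: bytes, metadata: dict) -> dict:
    """Bundle a SHA-256 fixity checksum and size with descriptive metadata."""
    return {
        "sha256": hashlib.sha256(data).hexdigest(),
        "size_bytes": len(data),
        "metadata": metadata,
    }


def verify(data: bytes, manifest: dict) -> bool:
    """True if `data` still matches the size and checksum recorded in `manifest`."""
    return (len(data) == manifest["size_bytes"]
            and hashlib.sha256(data).hexdigest() == manifest["sha256"])


# Hypothetical stand-in for a stored volume file and its metadata.
volume_bytes = b"\x00\x01" * 1024
manifest = make_manifest(volume_bytes, {"cycle": 2, "technique": "micro-CT"})
print(json.dumps(manifest, indent=2))
print(verify(volume_bytes, manifest))
```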

Standardization Efforts in Topology Quantification

The field of topology quantification has seen significant efforts toward standardization in recent years, driven by the need for consistent methodologies across research institutions and industries. These standardization initiatives aim to establish common frameworks for measuring, analyzing, and reporting topological data during material reprocessing, ensuring reproducibility and comparability of results across different studies.

Several international organizations have taken leading roles in developing standards for topology quantification. The International Organization for Standardization (ISO) has established technical committees focused on materials characterization, with specific working groups dedicated to topological analysis methods. These committees have published guidelines on sampling procedures, measurement protocols, and data reporting formats that are gaining widespread adoption.

ASTM International has also contributed significantly to this domain through its committees on materials testing and characterization. Their standards provide detailed specifications for experimental setups, calibration procedures, and validation methods specifically tailored for topology evolution studies during various reprocessing scenarios.

Academic consortia have emerged as another driving force behind standardization efforts. The Topology Quantification Consortium (TQC), comprising researchers from leading universities and research institutions, has developed open-source software tools and standardized workflows that facilitate consistent topology analysis across different research groups. Their published benchmarks serve as reference points for validating new quantification methods.
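Validation against published benchmarks often reduces to comparing a method's output with reference values case by case. The sketch below uses Python's `math.isclose`; the function name, the benchmark case names, and the 5% relative tolerance are hypothetical choices for illustration and are not taken from any consortium's tooling.

```python
import math
from typing import Dict, Tuple


def validate_against_benchmark(
    measured: Dict[str, float],
    reference: Dict[str, float],
    rel_tol: float = 0.05,
    abs_tol: float = 1e-9,
) -> Tuple[bool, Dict[str, bool]]:
    """Compare a method's results with reference values for each benchmark case.

    abs_tol sets the allowed deviation when a reference value is zero, where a
    relative tolerance alone would demand an exact match.
    """
    missing = sorted(set(reference) - set(measured))
    if missing:
        raise KeyError(f"no result for benchmark cases: {missing}")
    per_case = {
        case: math.isclose(measured[case], ref, rel_tol=rel_tol, abs_tol=abs_tol)
        for case, ref in reference.items()
    }
    # The method passes only if every benchmark case is within tolerance.
    return all(per_case.values()), per_case
```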

Industry-specific standards have also been established in sectors where topology evolution during reprocessing is particularly critical, such as additive manufacturing, polymer processing, and metallurgy. These standards address the unique challenges and requirements of each application domain while maintaining compatibility with broader standardization frameworks.

Metadata standardization represents another crucial aspect of these efforts. The development of common ontologies and data structures for describing topological features has enabled more effective data sharing and integration across different research platforms. Programs such as the Materials Genome Initiative have incorporated these standardized descriptors into their databases, facilitating large-scale comparative analyses.

Despite this progress, challenges remain in harmonizing different approaches and accommodating emerging measurement technologies. Ongoing standardization efforts are increasingly focusing on integrating machine learning and artificial intelligence methods into topology quantification workflows, requiring new frameworks for algorithm validation and uncertainty quantification. The development of reference materials with well-characterized topological properties represents another frontier in standardization, providing physical benchmarks against which new measurement techniques can be calibrated.
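Calibration against a reference material can be sketched as a bias check: compare the mean of n repeated measurements with the certified value and accept the technique if |x̄ − x_ref| ≤ k·u_c, where u_c = √(s²/n + u_ref²). The sketch below assumes this common quadrature combination of the standard uncertainty of the mean with the certified value's standard uncertainty u_ref, and a coverage factor k = 2; the function and field names are hypothetical.

```python
import math
import statistics
from dataclasses import dataclass
from typing import Sequence


@dataclass
class CalibrationCheck:
    bias: float                  # mean measurement minus certified value
    combined_uncertainty: float  # u_c, standard uncertainty of the bias
    consistent: bool             # True if |bias| <= k * u_c


def check_against_reference(
    measurements: Sequence[float],
    certified_value: float,
    certified_std_uncertainty: float,
    k: float = 2.0,
) -> CalibrationCheck:
    """Bias test of repeated measurements on a reference material."""
    if len(measurements) < 2:
        raise ValueError("need at least two repeat measurements")
    mean = statistics.mean(measurements)
    # Standard uncertainty of the mean from the sample standard deviation.
    u_mean = statistics.stdev(measurements) / math.sqrt(len(measurements))
    # Combine with the certified value's uncertainty in quadrature.
    u_c = math.sqrt(u_mean ** 2 + certified_std_uncertainty ** 2)
    bias = mean - certified_value
    return CalibrationCheck(bias, u_c, abs(bias) <= k * u_c)
```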
