A Comparison of Analog, Digital, and Mixed-Signal Neuromorphic Design

SEP 2, 2025 · 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. The evolution of this field can be traced back to the 1980s when Carver Mead first introduced the concept of using analog VLSI systems to mimic neurobiological architectures. This pioneering work established the foundation for what would become a multidisciplinary field combining neuroscience, computer engineering, and materials science.

The trajectory of neuromorphic computing development has been characterized by several distinct phases. Initially, research focused primarily on analog implementations that directly mimicked neural behavior through physical properties of electronic components. As digital technology advanced, purely digital neuromorphic systems emerged, offering precision and programmability at the cost of higher power consumption. The most recent evolution has been toward mixed-signal designs that attempt to leverage the advantages of both analog and digital approaches.

A critical milestone in this evolution was the development of spike-based computing models, particularly Spiking Neural Networks (SNNs), which more accurately represent the temporal dynamics of biological neurons compared to traditional artificial neural networks. This advancement has enabled more efficient processing of temporal data patterns and event-driven computation.
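To make the temporal, event-driven character of SNNs concrete, here is a minimal sketch of a textbook leaky integrate-and-fire (LIF) neuron integrated with forward Euler. The parameter values (20 ms membrane time constant, unit threshold, 1 ms step) are illustrative assumptions and are not taken from any particular chip. The point is that the output is a list of spike times rather than a dense activation value.

```python
import numpy as np

def simulate_lif(input_current, dt=1e-3, tau=20e-3, v_rest=0.0,
                 v_thresh=1.0, v_reset=0.0, r_m=1.0):
    """Forward-Euler leaky integrate-and-fire neuron (illustrative parameters).

    tau * dV/dt = -(V - v_rest) + r_m * I(t). Whenever V reaches v_thresh a
    spike is emitted and V is reset. Returns the membrane trace and the time
    step indices at which spikes occurred.
    """
    v = v_rest
    trace = np.empty(len(input_current))
    spikes = []
    for t, i_in in enumerate(input_current):
        v += (dt / tau) * (-(v - v_rest) + r_m * i_in)
        if v >= v_thresh:
            spikes.append(t)  # only the event time needs to be communicated
            v = v_reset
        trace[t] = v
    return trace, spikes

# 200 ms of input: silent for 50 ms, then a constant suprathreshold drive.
current = np.concatenate([np.zeros(50), np.full(150, 1.5)])
trace, spike_steps = simulate_lif(current)
print(spike_steps)
```

With this input the neuron stays silent for the first 50 ms and then fires at a regular interval. Information is carried by when spikes occur, and downstream work is only needed at those events.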

The objectives of neuromorphic computing span multiple dimensions. From a technical perspective, the primary goal is to develop computing architectures that can process information with the efficiency, adaptability, and fault tolerance characteristic of biological neural systems. This includes achieving ultra-low power consumption, real-time processing capabilities, and on-chip learning mechanisms.

From an application standpoint, neuromorphic systems aim to excel in tasks where conventional computing architectures struggle, particularly in pattern recognition, sensory processing, and adaptive control systems. These systems are especially valuable for edge computing applications where power constraints are significant, such as in autonomous vehicles, advanced robotics, and portable medical devices.

Research objectives in the field have increasingly focused on scalability challenges, addressing how to effectively scale neuromorphic systems from small proof-of-concept demonstrations to large-scale practical implementations. This includes developing standardized benchmarks for performance evaluation and creating software frameworks that can effectively utilize neuromorphic hardware.

The long-term vision for neuromorphic computing extends beyond mere performance improvements, aspiring to create truly brain-inspired computing systems that can exhibit cognitive capabilities such as learning from limited examples, adapting to novel situations, and integrating information across multiple sensory modalities—all while maintaining energy efficiency orders of magnitude better than conventional computing approaches.

Market Analysis for Brain-Inspired Computing Solutions

The brain-inspired computing market is growing significantly, driven by increasing demand for efficient AI processing. According to one market research estimate, the global neuromorphic computing market was projected to reach $8.9 billion by 2025, growing at a compound annual growth rate (CAGR) of 49.1% from 2020. This projected growth reflects expanding applications across industries that need more energy-efficient and powerful computing.
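As a quick sanity check on the headline figures, the sketch below backs out the 2020 market size implied by reaching $8.9 billion at a 49.1% CAGR over five years, using V_2020 = V_2025 / (1 + r)^5. It shows only the arithmetic and says nothing about whether the forecast itself was accurate.

```python
def implied_base_value(final_value, cagr, years):
    """Starting value implied by a compound annual growth rate: V0 = V_T / (1 + r)**T."""
    return final_value / (1.0 + cagr) ** years

# Figures quoted in the text: $8.9B in 2025 at a 49.1% CAGR from 2020.
base_2020 = implied_base_value(8.9, 0.491, 2025 - 2020)
print(f"Implied 2020 market size: ${base_2020:.2f}B")
```

This works out to roughly $1.2 billion in 2020, about a 7.4x increase over the five-year window.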

The market for neuromorphic computing solutions can be segmented based on implementation approaches: analog, digital, and mixed-signal designs. Each segment addresses different market needs and presents unique value propositions. Analog neuromorphic solutions currently dominate applications requiring ultra-low power consumption, particularly in edge computing and IoT devices. These solutions have gained traction in the wearable technology market, which is expected to exceed $70 billion by 2025.

Digital neuromorphic implementations have captured significant market share in data centers and high-performance computing environments where precision and programmability are paramount. This segment benefits from compatibility with existing digital infrastructure, making it attractive for enterprise customers seeking gradual adoption paths. The enterprise AI hardware market, where digital neuromorphic solutions play a growing role, was projected to reach $25 billion by 2024.

Mixed-signal neuromorphic designs represent the fastest-growing segment, with projected annual growth rates exceeding 60% through 2025. These hybrid solutions are particularly appealing in automotive, robotics, and advanced sensing applications where both power efficiency and computational precision are required. The automotive AI hardware market alone is expected to reach $15 billion by 2025, with neuromorphic computing solutions capturing an increasing share.

Geographically, North America currently leads the neuromorphic computing market with approximately 40% market share, followed by Europe and Asia-Pacific. However, the Asia-Pacific region is demonstrating the highest growth rate, driven by substantial investments in AI infrastructure in China, Japan, and South Korea. Government initiatives supporting brain-inspired computing research have allocated over $5 billion in funding across these regions in the past five years.

Customer segments for neuromorphic computing solutions include research institutions, technology companies, defense contractors, and increasingly, commercial enterprises. The defense and aerospace sector represents a particularly lucrative market, with neuromorphic computing applications in autonomous systems and signal processing generating over $1.2 billion in 2022. Healthcare applications, especially in medical imaging and diagnostics, are emerging as another high-growth segment, projected to exceed $3 billion by 2026.

Current Landscape and Challenges in Neuromorphic Design

The neuromorphic computing landscape has evolved significantly over the past decade, with three primary design approaches emerging: analog, digital, and mixed-signal implementations. Each approach presents distinct advantages and challenges in the quest to emulate brain-like computing efficiency. Currently, the field is experiencing rapid growth with global research initiatives and commercial ventures accelerating development across all three paradigms.

Analog neuromorphic designs leverage the inherent physics of electronic components to directly implement neural computations. These implementations excel in power efficiency and computational density but face significant challenges in scalability and precision. Manufacturing variations in analog circuits create device-to-device inconsistencies that complicate large-scale deployment. Additionally, analog designs struggle with long-term stability and noise susceptibility, limiting their application in mission-critical systems requiring deterministic behavior.
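The effect of device-to-device variation can be illustrated with a small Monte Carlo experiment: store a weight matrix in hypothetical analog cells, perturb every weight by independent multiplicative Gaussian mismatch, and measure how far the matrix-vector product drifts from the ideal one. Independent Gaussian mismatch is a common first-order simplification; real variation also depends on device geometry, layout, temperature, and drift. Matrix sizes and mismatch levels here are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

def mismatch_error(weights, x, sigma_rel, trials=1000):
    """Mean relative error of W @ x when every stored weight is scaled by an
    independent factor (1 + sigma_rel * N(0, 1)), a simple mismatch model."""
    ideal = weights @ x
    errors = []
    for _ in range(trials):
        w_dev = weights * (1.0 + sigma_rel * rng.standard_normal(weights.shape))
        errors.append(np.linalg.norm(w_dev @ x - ideal) / np.linalg.norm(ideal))
    return float(np.mean(errors))

W = rng.uniform(-1.0, 1.0, size=(64, 256))  # weights held in hypothetical analog cells
x = rng.uniform(0.0, 1.0, size=256)         # input activations
for sigma in (0.01, 0.05, 0.10):
    print(f"mismatch {sigma:.0%}: mean relative output error {mismatch_error(W, x, sigma):.4f}")
```

The output error grows roughly in proportion to the mismatch level, which is why analog arrays are often calibrated or trained with variability in mind.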

Digital neuromorphic architectures use conventional digital logic to implement neural networks, offering excellent precision, programmability, and manufacturing scalability. IBM's TrueNorth and Intel's Loihi represent significant achievements in this domain. However, digital implementations typically consume more power and occupy more silicon area than their analog counterparts. Digital designs also tend to time-multiplex many neurons onto shared arithmetic and memory within each core and to advance network state in discrete, clocked time steps, which limits how closely they can match the massively parallel, continuous-time operation of biological neural systems.
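Digital neuromorphic chips typically update neuron state once per time step using fixed-point arithmetic. The sketch below shows one such integer LIF update; the shift-based leak, threshold, and input values are assumptions chosen as a common hardware-friendly pattern, not the exact neuron model of TrueNorth or Loihi.

```python
def digital_lif_step(v, weighted_input, leak_shift=4, threshold=1 << 12):
    """One synchronous time step of a hypothetical integer LIF neuron.

    The leak v -= v >> leak_shift decays the membrane by about 1/2**leak_shift
    per step using only a shift and a subtract, so no multiplier is needed.
    Returns (new_v, spiked).
    """
    v = v - (v >> leak_shift) + weighted_input
    if v >= threshold:
        return 0, True   # reset to zero after a spike
    return v, False

def run(inputs):
    """Feed a sequence of integer inputs and return the spike time steps."""
    v, spike_times = 0, []
    for t, inp in enumerate(inputs):
        v, spiked = digital_lif_step(v, inp)
        if spiked:
            spike_times.append(t)
    return spike_times

inputs = [300] * 100
print(run(inputs))
print(run(inputs) == run(inputs))  # integer arithmetic: identical runs every time
```

Because every operation is integer arithmetic, repeated runs with the same inputs give identical spike times: the determinism that analog designs struggle to provide, bought with the area and power of digital logic.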

Mixed-signal designs attempt to combine the best aspects of both approaches, using analog computation for efficient neural processing while employing digital infrastructure for communication, programming, and control. This hybrid approach shows promise in balancing efficiency with reliability but introduces complex design challenges at the analog-digital interface. Signal conversion overhead and timing synchronization between domains remain significant hurdles.
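Much of the signal conversion overhead at the analog-digital interface comes from the ADCs that digitize analog results. The sketch below models an ideal uniform ADC, without the noise, nonlinearity, and offset that real converters have, and shows how quantization error shrinks as resolution grows. In hardware each extra bit also costs ADC energy and area, which is the trade-off mixed-signal designers must balance. The random values stand in for analog multiply-accumulate outputs and are purely synthetic.

```python
import numpy as np

def adc_quantize(x, bits, full_scale=1.0):
    """Ideal uniform ADC with 2**bits levels spanning [-full_scale, +full_scale].

    Inputs outside the range are clipped, as a saturating converter would do.
    Returns the reconstructed values and the step size (one LSB).
    """
    levels = 2 ** bits
    lsb = 2.0 * full_scale / (levels - 1)
    codes = np.round((np.clip(x, -full_scale, full_scale) + full_scale) / lsb)
    return codes * lsb - full_scale, lsb

rng = np.random.default_rng(1)
analog_out = rng.uniform(-1.0, 1.0, size=10_000)  # synthetic stand-in for analog MAC outputs
for bits in (4, 6, 8):
    digital, lsb = adc_quantize(analog_out, bits)
    max_err = np.max(np.abs(digital - analog_out))
    print(f"{bits}-bit ADC: LSB={lsb:.4f}, max error={max_err:.4f}")
```

The worst-case error stays within half an LSB and roughly halves with each added bit.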

A critical challenge across all neuromorphic approaches is the lack of standardized benchmarks and evaluation metrics. Unlike traditional computing, where performance metrics are well-established, neuromorphic systems require new frameworks that account for energy efficiency, learning capability, and computational flexibility simultaneously.

Memory-computation integration represents another fundamental challenge. Traditional von Neumann architectures separate memory and processing, creating data transfer bottlenecks. Neuromorphic designs aim to overcome this limitation through in-memory computing, but implementing efficient memory structures that can simultaneously store and process information remains technically challenging.

The software ecosystem supporting neuromorphic hardware is still in its infancy. Programming models, development tools, and algorithms specifically optimized for neuromorphic architectures are limited, creating barriers to adoption. This gap between hardware capabilities and software support represents a significant obstacle to widespread implementation of neuromorphic solutions across industries.

Comparative Analysis of Analog, Digital, and Mixed-Signal Approaches

01 Analog Neuromorphic Circuit Design

Analog neuromorphic designs mimic brain functions using continuous-value circuits that process information efficiently at low power. These designs implement neural network functions through analog components such as operational amplifiers, transistors, and capacitors to create synaptic connections and neuron behaviors. Analog implementations offer advantages in power efficiency and real-time processing but face challenges in scaling and precision compared to digital approaches.

- Analog Neuromorphic Circuit Design: Analog neuromorphic designs mimic the brain's neural networks using analog electronic circuits. These designs focus on implementing synaptic functions, neural processing, and learning mechanisms through analog components such as transistors operating in the subthreshold region. Analog implementations offer advantages in power efficiency and real-time processing capabilities, making them suitable for applications requiring low power consumption and high-speed neural computation.

- Digital Neuromorphic Architectures: Digital neuromorphic designs implement neural networks using digital logic circuits and memory elements. These architectures focus on precise computation, scalability, and integration with existing digital systems. Digital implementations offer advantages in terms of noise immunity, design flexibility, and compatibility with standard digital design flows, making them suitable for complex neural network applications requiring high precision and reproducibility.

- Mixed-Signal Neuromorphic Systems: Mixed-signal neuromorphic designs combine both analog and digital circuits to leverage the advantages of each approach. These systems typically use analog circuits for neural processing and synaptic functions while employing digital circuits for control, memory, and communication. This hybrid approach offers a balance between energy efficiency, computation accuracy, and design flexibility, making it suitable for complex neuromorphic applications with varying performance requirements.

- Neuromorphic Design Verification and Simulation: Verification and simulation tools are essential for neuromorphic design development. These tools enable designers to model, simulate, and verify the behavior of neuromorphic circuits before physical implementation. Advanced simulation frameworks support multi-level abstraction, from device physics to system-level behavior, allowing for comprehensive analysis of performance, power consumption, and functionality. These methodologies help reduce design iterations and ensure the reliability of neuromorphic systems.

- Neuromorphic Hardware Optimization Techniques: Optimization techniques for neuromorphic hardware focus on improving performance, energy efficiency, and area utilization. These techniques include specialized circuit topologies, novel device integration, and architectural innovations that enhance the implementation of neural networks in hardware. Advanced optimization methods address challenges such as reducing power consumption, minimizing latency, and maximizing computational density, enabling more efficient neuromorphic computing systems for various applications.
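Several of the analog designs listed above rely on transistors biased in the subthreshold (weak-inversion) region, where drain current depends exponentially on gate voltage and currents are very small. The sketch below uses the simplified saturated weak-inversion model I_D = I_0 exp(κ V_GS / U_T). The values of I_0 and κ are illustrative assumptions, since both are process dependent, and the model ignores drain-voltage effects.

```python
import math

K_B = 1.380649e-23     # Boltzmann constant, J/K
Q_E = 1.602176634e-19  # elementary charge, C

def thermal_voltage(temp_k=300.0):
    """U_T = kT/q, about 25.9 mV at 300 K."""
    return K_B * temp_k / Q_E

def subthreshold_current(v_gs, i0=1e-15, kappa=0.7, temp_k=300.0):
    """Saturated weak-inversion drain current I_D = I0 * exp(kappa * V_GS / U_T).

    Simplified model, valid only below threshold with V_DS above a few U_T.
    i0 (amps) and kappa (gate coupling) are illustrative, process-dependent values.
    """
    return i0 * math.exp(kappa * v_gs / thermal_voltage(temp_k))

# Gate-voltage swing needed for a 10x change in drain current.
mv_per_decade = 1e3 * thermal_voltage() * math.log(10) / 0.7
ratio = subthreshold_current(0.30 + mv_per_decade / 1e3) / subthreshold_current(0.30)
print(f"{mv_per_decade:.1f} mV per decade, current ratio {ratio:.3f}")
```

With κ = 0.7 at 300 K, roughly 85 mV of gate voltage changes the current by a factor of ten. Analog neuron and synapse circuits exploit this exponential to implement exponential and multiplicative functions with very few devices.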

02 Digital Neuromorphic Architecture Implementation

Digital neuromorphic designs use binary logic to implement neural network functions, offering advantages in precision, programmability, and integration with existing digital systems. These architectures typically employ digital processing elements, memory units, and interconnection networks to simulate neural behavior. Digital implementations provide better scalability and manufacturing compatibility with standard CMOS processes while enabling complex learning algorithms and network topologies.

03 Mixed-Signal Neuromorphic Systems

Mixed-signal neuromorphic designs combine analog and digital components to leverage the advantages of both approaches. These systems typically use analog circuits for efficient computation and signal processing while employing digital circuits for control, memory, and communication. This hybrid approach balances the energy efficiency of analog designs against the precision and programmability of digital implementations, making such systems suitable for applications that require both low power consumption and computational flexibility.

04 Neuromorphic Design Simulation and Verification Tools

Specialized simulation and verification tools are essential for neuromorphic design development, enabling designers to model, test, and optimize neural circuits before physical implementation. These tools support various abstraction levels from behavioral modeling to circuit-level simulation and can analyze performance metrics such as power consumption, timing, and neural network accuracy. Advanced verification methodologies help ensure that neuromorphic designs meet functional requirements and performance specifications while reducing development time and costs.

05 Hardware Acceleration for Neural Networks

Hardware accelerators for neural networks implement specialized architectures optimized for neural computation, significantly improving performance and energy efficiency compared to general-purpose processors. These designs incorporate parallel processing elements, optimized memory hierarchies, and dedicated interconnects to accelerate neural network operations. Implementations range from application-specific integrated circuits (ASICs) to field-programmable gate arrays (FPGAs), each offering different trade-offs between performance, flexibility, and development cost for neuromorphic applications.

Leading Organizations and Research Groups in Neuromorphic Engineering

The neuromorphic design landscape is evolving rapidly, with the market in a growth phase characterized by increasing commercial applications and expanding research initiatives. Rising demand for AI processing at the edge is expected to drive further market growth. In terms of technological maturity, IBM and Qualcomm lead with established neuromorphic architectures, while Samsung and NXP focus on mixed-signal implementations that balance power efficiency and computational capabilities. Syntiant and Polyn Technology are pioneering specialized analog neuromorphic solutions for ultra-low-power applications. Academic institutions such as Tsinghua University and research organizations such as KIST are advancing fundamental neuromorphic concepts, particularly in novel materials and architectures. The competitive landscape reflects a strategic balance between digital approaches prioritizing precision and analog designs emphasizing energy efficiency.

International Business Machines Corp.

Technical Solution: IBM has pioneered neuromorphic computing with TrueNorth and subsequent systems. TrueNorth itself is a fully digital design whose event-driven architecture is inspired by the brain. The chip features 5.4 billion transistors, 4,096 neurosynaptic cores, one million programmable neurons, and 256 million configurable synapses. Its event-driven, parallel, and fault-tolerant architecture consumes about 70 mW when running a typical network in real time, with a reported efficiency of 46 giga-synaptic operations per second per watt. More recently, IBM has developed analog in-memory computing approaches that use phase-change memory (PCM) and other resistive memory technologies to perform neural network computations directly within memory arrays, significantly reducing the energy cost of moving data between processing and memory units. This mixed-signal approach lets IBM exploit the efficiency of analog computing for matrix operations while keeping digital precision for control logic.

Strengths: Extremely low power consumption compared to traditional architectures; highly scalable design; fault tolerance built into architecture; significant experience in both digital and analog neuromorphic implementations. Weaknesses: Specialized programming models required; challenges in training networks directly on neuromorphic hardware; analog components subject to noise and variability issues.
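The TrueNorth counts above are consistent with one another. IBM describes each neurosynaptic core as a 256-axon by 256-neuron synaptic crossbar; that per-core figure is not stated in this article, and the sketch below simply multiplies it out.

```python
# TrueNorth organisation: the core count is from the text; the 256 x 256
# crossbar per core is from IBM's published description of the chip.
CORES = 4096
NEURONS_PER_CORE = 256
AXONS_PER_CORE = 256  # each core is a 256-axon x 256-neuron synaptic crossbar

neurons = CORES * NEURONS_PER_CORE
synapses = neurons * AXONS_PER_CORE
print(f"{neurons:,} neurons, {synapses:,} synapses")
# prints: 1,048,576 neurons, 268,435,456 synapses
```

The "one million" neurons are 2^20 and the "256 million" synapses are 256 × 2^20, so both figures are powers-of-two counts rather than round decimal numbers.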

Syntiant Corp.

Technical Solution: Syntiant has developed a specialized Neural Decision Processor (NDP) architecture that takes an analog-digital hybrid approach optimized for deep learning at the edge. Its NDP100 and NDP200 series chips are designed specifically for always-on voice and sensor processing with minimal power consumption. The architecture performs the core neural network operations, the matrix multiplications, in the analog domain to gain power efficiency. According to the company, its chips can run deep neural networks while consuming less than 200 microwatts, enabling always-on voice interfaces and sensor applications in battery-powered devices, and its approach achieves about 100x better energy efficiency than traditional digital processors for specific AI workloads. The architecture keeps digital interfaces and control logic while using analog computing for the most computationally intensive operations.

Strengths: Ultra-low power consumption (sub-milliwatt operation); optimized for specific edge AI applications like keyword spotting and sensor processing; production-ready solutions already deployed in commercial products. Weaknesses: Limited to specific application domains; less flexible than general-purpose neuromorphic architectures; analog components require careful calibration to maintain accuracy across manufacturing variations and operating conditions.
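To put sub-milliwatt operation in perspective, the sketch below estimates how long a small battery could power an always-on processor drawing 200 µW, the upper bound quoted above. The 225 mAh, 3 V coin cell is a hypothetical example, and the estimate ignores self-discharge, converter losses, and every other component on the board.

```python
def battery_life_days(capacity_mah, voltage_v, avg_power_w):
    """Idealised runtime in days: stored energy divided by average power draw."""
    energy_j = capacity_mah / 1000 * 3600 * voltage_v  # mAh -> coulombs -> joules
    return energy_j / avg_power_w / 86_400             # seconds -> days

# Hypothetical 225 mAh, 3 V coin cell powering only a 200 uW always-on processor.
print(f"{battery_life_days(225, 3.0, 200e-6):.0f} days")
```

Under these idealised assumptions the cell lasts a little over four months, which is why microwatt-level always-on inference matters for battery-powered devices.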

Breakthrough Technologies in Neuromorphic Circuit Design

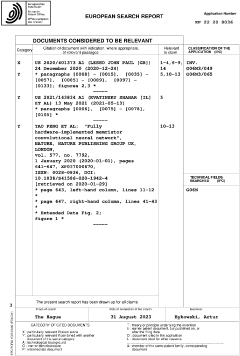

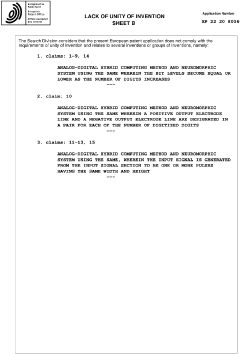

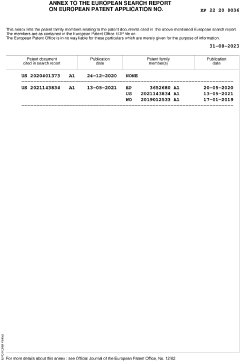

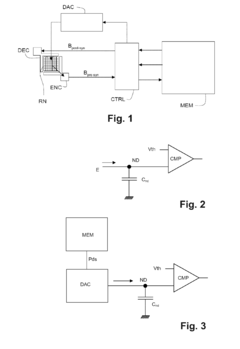

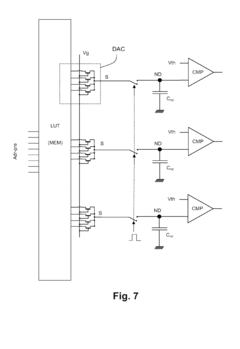

Analog-digital hybrid computing method and neuromorphic system using the same

Patent Pending · EP4198828A3

Innovation

- Hybrid architecture combining analog memory arrays for synaptic weight storage with digital computation for signal processing, leveraging the advantages of both domains in neuromorphic computing.

- Configurable digitization precision for each output electrode line, allowing for flexible trade-offs between computational accuracy and efficiency.

- Use of non-volatile memory cells arranged in a crossbar array structure to efficiently implement synaptic weights and perform parallel matrix-vector multiplication operations.
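The crossbar idea in these bullets can be sketched as follows. Input voltages drive the rows, each cell's conductance acts as a weight (Ohm's law), and each column line sums its cell currents (Kirchhoff's current law), so the column currents form a matrix-vector product in one step. The sketch assumes ideal linear cells, no wire resistance, signed weights stored as a differential pair of conductances (one common scheme, not necessarily the patent's), and an ADC full-scale range taken from the observed maximum current. The per-column bit widths are hypothetical, chosen to mimic configurable digitization precision per output line.

```python
import numpy as np

def weights_to_conductances(w, g_max=1e-4):
    """Map signed weights to a differential pair (G+, G-) of non-negative
    conductances in siemens, scaled so the largest |w| uses g_max."""
    scale = g_max / np.max(np.abs(w))
    g_pos = np.where(w > 0, w, 0.0) * scale
    g_neg = np.where(w < 0, -w, 0.0) * scale
    return g_pos, g_neg, scale

def crossbar_mvm(v_in, g_pos, g_neg, column_bits):
    """Ideal crossbar read followed by per-column digitisation.

    Ohm's law in each cell and Kirchhoff's current law on each column give
    I_j = sum_i V_i * (G+_ij - G-_ij). Column j is then quantised with
    column_bits[j] bits over a shared full-scale range (assumed here to be the
    largest observed |I_j|).
    """
    i_col = v_in @ g_pos - v_in @ g_neg
    full_scale = np.max(np.abs(i_col))
    i_digital = np.empty_like(i_col)
    for j, bits in enumerate(column_bits):
        lsb = 2.0 * full_scale / (2 ** bits - 1)
        i_digital[j] = np.round((i_col[j] + full_scale) / lsb) * lsb - full_scale
    return i_col, i_digital

rng = np.random.default_rng(2)
W = rng.uniform(-1.0, 1.0, size=(128, 8))  # 128 input rows x 8 output columns
v = rng.uniform(0.0, 0.2, size=128)        # row read voltages in volts
g_pos, g_neg, scale = weights_to_conductances(W)
bits = [8, 8, 6, 6, 4, 4, 2, 2]            # hypothetical per-column ADC precision
i_exact, i_digital = crossbar_mvm(v, g_pos, g_neg, bits)
print(np.allclose(i_exact / scale, v @ W))  # rescaled column currents reproduce v @ W
print(np.abs(i_digital - i_exact) / np.max(np.abs(i_exact)))
```

Columns given fewer bits show larger digitization error, which is the accuracy-versus-efficiency trade-off that per-line precision makes configurable.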

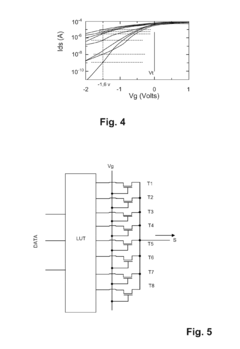

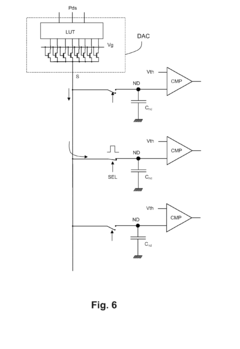

Digital-to-analog converter and neuromorphic circuit using such a converter

Patent Inactive · EP2602937A1

Innovation

- A digital-to-analog converter built from a set of transistors with dispersed current-voltage characteristics, combined with a digital look-up table that selects transistors according to their measured characteristics. The selected transistors produce a variable output current that represents the digital input as an analog signal, reducing power consumption and circuit area.
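One way to read this idea: instead of trimming transistors to be identical, measure each device's actual drive current and store, for every digital code, which combination of devices best approximates the desired output. The sketch below builds such a look-up table by exhaustive search over subsets. The device count, the ±30% spread, the unit-current choice, and the selection rule are all hypothetical and are not taken from the patent's claims.

```python
import itertools

import numpy as np

def build_dac_lut(measured_currents, n_codes):
    """Map each digital code k to the subset of transistors whose measured
    currents sum closest to k * unit, with unit chosen so the top code uses
    every device. Exhaustive search over 2**n subsets: only practical for a
    handful of transistors."""
    currents = np.asarray(measured_currents, dtype=float)
    unit = currents.sum() / (n_codes - 1)
    subsets = [s for r in range(len(currents) + 1)
               for s in itertools.combinations(range(len(currents)), r)]
    sums = np.array([currents[list(s)].sum() for s in subsets])
    lut = {code: subsets[int(np.argmin(np.abs(sums - code * unit)))]
           for code in range(n_codes)}
    return lut, unit

rng = np.random.default_rng(3)
# Eight nominally identical transistors with a hypothetical +/-30 % spread in drive current (A).
measured = 1e-6 * (1.0 + 0.3 * rng.uniform(-1.0, 1.0, size=8))
lut, unit = build_dac_lut(measured, n_codes=16)
for code in (0, 1, 8, 15):
    out = measured[list(lut[code])].sum()
    print(f"code {code:2d}: transistors {lut[code]}, output {out / unit:.2f} x unit")
```

Codes near zero are approximated poorly because even the smallest device contributes a large step; a real design would size or choose the devices so that subset sums cover the output range more evenly.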

Energy Efficiency Considerations in Neuromorphic Systems

Energy efficiency represents a critical factor in the development and implementation of neuromorphic computing systems. When comparing analog, digital, and mixed-signal neuromorphic designs, energy consumption patterns emerge as a key differentiator that significantly impacts practical applications and scalability.

Analog neuromorphic systems typically demonstrate superior energy efficiency for certain neural operations. These designs exploit the inherent physics of electronic components to perform computations, often reaching energy consumption in the picojoule to femtojoule range per synaptic operation. Because neural dynamics map directly onto physical processes, the computation itself needs no repeated digital-to-analog or analog-to-digital conversion, substantially reducing energy overhead in signal processing tasks.

Digital neuromorphic implementations, while generally more power-hungry than their analog counterparts, offer advantages in precision and programmability. Recent advancements in digital neuromorphic hardware have focused on event-driven processing and sparse activation techniques, which have narrowed the efficiency gap. IBM's TrueNorth and Intel's Loihi chips exemplify this progress, achieving energy efficiencies of approximately 26 pJ and 23.6 pJ per synaptic operation respectively, marking significant improvements over traditional digital computing architectures.
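Energy per synaptic operation and synaptic operations per second per watt are reciprocals, so the figures quoted above can be converted for comparison with throughput-per-watt numbers reported elsewhere. The helper below performs only that unit conversion; the two input figures come from the paragraph above and were not necessarily measured under comparable workloads.

```python
def pj_per_sop_to_gsops_per_watt(energy_pj):
    """Energy per synaptic operation (pJ) -> throughput per watt (GSOPS/W).

    1 W divided by E joules per operation gives operations per second per watt.
    """
    return 1.0 / (energy_pj * 1e-12) / 1e9

for chip, energy_pj in {"TrueNorth": 26.0, "Loihi": 23.6}.items():
    gsops_w = pj_per_sop_to_gsops_per_watt(energy_pj)
    mj_per_billion = energy_pj * 1e-12 * 1e9 * 1e3  # energy for 1e9 synaptic ops, in mJ
    print(f"{chip}: {energy_pj} pJ/SOP ~ {gsops_w:.1f} GSOPS/W, "
          f"{mj_per_billion:.1f} mJ per billion synaptic ops")
```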

Mixed-signal approaches attempt to harness the benefits of both paradigms, strategically employing analog components for computation-intensive operations while utilizing digital circuits for control, memory, and communication functions. This hybrid architecture can potentially optimize the energy-performance trade-off, with recent research demonstrating energy efficiencies approaching those of pure analog systems while maintaining digital precision where required.

The energy scaling properties across these designs present interesting contrasts. Analog systems typically show favorable scaling at small network sizes but face challenges with larger implementations due to noise accumulation and signal degradation. Digital systems demonstrate more predictable scaling characteristics but at higher absolute energy costs. Mixed-signal designs aim to achieve optimal scaling by dynamically allocating computational tasks between analog and digital domains based on energy considerations.

Looking forward, emerging materials and device technologies promise to further improve energy efficiency across all neuromorphic design approaches. Memristive devices, spintronic components, and photonic computing elements are being explored as building blocks for ultra-low-power neuromorphic systems, with the long-term aim of pushing energy per operation toward the thermodynamic limits of computation.

Analog neuromorphic systems typically demonstrate superior energy efficiency for certain neural operations. These designs leverage the inherent physics of electronic components to perform computations, often achieving energy consumption rates in the picojoule to femtojoule range per synaptic operation. The direct mapping of neural dynamics to physical processes eliminates the need for digital-to-analog conversion, substantially reducing energy overhead in signal processing tasks.

Digital neuromorphic implementations, while generally more power-hungry than their analog counterparts, offer advantages in precision and programmability. Recent advancements in digital neuromorphic hardware have focused on event-driven processing and sparse activation techniques, which have narrowed the efficiency gap. IBM's TrueNorth and Intel's Loihi chips exemplify this progress, achieving energy efficiencies of approximately 26 pJ and 23.6 pJ per synaptic operation respectively, marking significant improvements over traditional digital computing architectures.

Mixed-signal approaches attempt to combine the benefits of both paradigms, using analog components for computation-intensive operations and digital circuits for control, memory, and communication. This hybrid architecture can improve the energy-performance trade-off; some recent designs report energy efficiency approaching that of pure analog systems while retaining digital precision where it is required.
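A mixed-signal datapath of the kind described here can be sketched as three stages: an analog multiply-accumulate, an analog-to-digital converter (ADC), and a digital threshold check. The structure, function names, 4-bit resolution, and unipolar input range are assumptions for illustration, not a description of a particular chip.

```python
from typing import Sequence

def analog_mac(weights: Sequence[float], inputs: Sequence[float]) -> float:
    """Idealized analog multiply-accumulate (noise is not modeled here)."""
    return sum(w * x for w, x in zip(weights, inputs))

def adc(value: float, full_scale: float, bits: int) -> int:
    """Uniform unipolar ADC: clip to [0, full_scale], map onto codes 0 .. 2**bits - 1."""
    max_code = 2 ** bits - 1
    clipped = min(max(value, 0.0), full_scale)
    return round(clipped / full_scale * max_code)

def digital_threshold(code: int, threshold_code: int) -> bool:
    """Digital side: emit a spike event if the quantized value reaches the threshold."""
    return code >= threshold_code

acc = analog_mac([0.5, 0.25, 0.25], [1.0, 1.0, 0.5])  # 0.5 + 0.25 + 0.125 = 0.875
code = adc(acc, full_scale=1.0, bits=4)                # 0.875 * 15 = 13.125 -> 13
print(acc, code, digital_threshold(code, threshold_code=10))
```

The ADC resolution marks the boundary of the analog savings: each extra bit doubles the number of levels the converter must distinguish.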

The energy scaling of these designs differs markedly. Analog systems typically scale well at small network sizes but struggle in larger implementations, where noise accumulates and signals degrade. Digital systems scale more predictably, but at a higher absolute energy cost. Mixed-signal designs aim to scale well by allocating computational tasks between the analog and digital domains according to their energy and precision requirements.
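The noise-accumulation problem can be illustrated with a toy model (an assumption, not a model of any specific chip): a signal passes through a cascade of unity-gain analog stages, each adding independent Gaussian noise with standard deviation sigma. Because independent variances add, the output noise grows as sigma times the square root of the number of stages, whereas digital logic restores clean signal levels at every stage. The Monte Carlo estimate below checks this against the closed-form value.

```python
import math
import random
import statistics

def cascade_output(signal: float, stages: int, sigma: float, rng: random.Random) -> float:
    """Pass `signal` through `stages` unity-gain stages, each adding N(0, sigma) noise."""
    for _ in range(stages):
        signal += rng.gauss(0.0, sigma)
    return signal

def empirical_noise_std(stages: int, sigma: float, trials: int = 20000, seed: int = 0) -> float:
    """Monte Carlo estimate of the output noise standard deviation."""
    rng = random.Random(seed)
    outputs = [cascade_output(1.0, stages, sigma, rng) for _ in range(trials)]
    return statistics.pstdev(outputs)

for stages in (1, 4, 16):
    predicted = 0.01 * math.sqrt(stages)  # sigma * sqrt(stages)
    print(stages, round(empirical_noise_std(stages, 0.01), 5), round(predicted, 5))
```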

Looking forward, emerging materials and device technologies may further improve energy efficiency across all neuromorphic design approaches. Memristive devices, spintronic components, and photonic computing elements are being explored as building blocks for ultra-low-power neuromorphic systems, with some theoretical analyses suggesting energy consumption that approaches the thermodynamic limits of computation.
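The thermodynamic limit of computation is commonly identified with Landauer's bound: irreversibly erasing one bit at temperature T dissipates at least k_B T ln 2. The sketch evaluates it at an assumed 300 K and compares it with the 23.6 pJ Loihi figure quoted above. A synaptic operation is not a single bit erasure, so the ratio only conveys orders of magnitude.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)

def landauer_limit_joules(temperature_k: float = 300.0) -> float:
    """Minimum energy to erase one bit irreversibly: k_B * T * ln 2."""
    return K_B * temperature_k * math.log(2)

e_min = landauer_limit_joules(300.0)
loihi_sop_j = 23.6e-12  # J per synaptic operation, as quoted in the text
print(f"Landauer bound at 300 K: {e_min:.3e} J")
print(f"23.6 pJ / bound: {loihi_sop_j / e_min:.1e}")
```

At 300 K the bound is about 2.87 × 10^-21 J, so 23.6 pJ is roughly 8 × 10^9 times larger.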

Hardware-Software Co-design for Neuromorphic Applications

Hardware-software co-design is a critical approach in neuromorphic computing: integrating analog, digital, and mixed-signal designs requires developing hardware architectures and software frameworks in step. Considering the distinctive characteristics of neuromorphic hardware from the outset lets each side be shaped around the other, rather than adapting software to fixed hardware after the fact.

The co-design process begins with defining computational models that effectively leverage the strengths of different neuromorphic implementations. For analog designs, software must accommodate continuous-time dynamics and inherent variability, while digital implementations require efficient event-based processing algorithms. Mixed-signal approaches necessitate software frameworks capable of bridging these paradigms through appropriate abstraction layers.
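To make the contrast between continuous-time and event-based processing concrete, the sketch below implements a leaky integrate-and-fire (LIF) neuron in event-driven style. An analog circuit would let the membrane voltage decay continuously; here the decay between input events is computed in closed form, so work is done only when a spike arrives. Instantaneous synaptic jumps, reset to zero, and the parameter values are simplifying assumptions.

```python
import math
from typing import Iterable, List, Tuple

def run_event_driven_lif(events: Iterable[Tuple[float, float]],
                         tau: float = 20.0, threshold: float = 1.0) -> List[float]:
    """events: (time_ms, weight) pairs sorted by time. Returns output spike times (ms)."""
    v, t_last = 0.0, 0.0
    spikes: List[float] = []
    for t, w in events:
        v *= math.exp(-(t - t_last) / tau)  # exact leak since the previous event
        v += w                              # instantaneous synaptic input
        t_last = t
        if v >= threshold:
            spikes.append(t)
            v = 0.0                         # reset after firing
    return spikes

print(run_event_driven_lif([(1.0, 0.6), (2.0, 0.6), (50.0, 0.6), (200.0, 0.6)]))
```

With this input, the two closely spaced events push the neuron over threshold at t = 2 ms, while the later, widely spaced events leak away before they can sum.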

Neuromorphic programming models have evolved to address these hardware-specific requirements. Frameworks such as Nengo and PyNN, along with the SpiNNaker software stack, provide abstraction layers that shield developers from hardware complexities while still using hardware resources efficiently. These frameworks implement neural network models with the constraints and capabilities of the underlying hardware in mind.
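The benefit of such an abstraction layer is that one network description can target different hardware. The sketch below is a hypothetical illustration of that idea: the Network, DigitalBackend, and AnalogBackend classes are invented for this example and are not part of Nengo, PyNN, or the SpiNNaker software, whose actual APIs differ. It assumes weights in the range [0, 1] and models the analog backend's limited conductance levels by uniform quantization.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Protocol, Tuple

@dataclass
class Network:
    """Hardware-agnostic description (hypothetical): populations and weighted projections."""
    populations: Dict[str, int] = field(default_factory=dict)                # name -> size
    projections: List[Tuple[str, str, float]] = field(default_factory=list)  # (pre, post, w)

    def population(self, name: str, size: int) -> None:
        self.populations[name] = size

    def connect(self, pre: str, post: str, weight: float) -> None:
        self.projections.append((pre, post, weight))

class Backend(Protocol):
    def compile(self, net: Network) -> Dict[str, object]: ...

class DigitalBackend:
    """Keeps weights at full precision."""
    def compile(self, net: Network) -> Dict[str, object]:
        return {"target": "digital", "weights": [w for _, _, w in net.projections]}

class AnalogBackend:
    """Snaps weights in [0, 1] to `levels` evenly spaced conductance states."""
    def __init__(self, levels: int = 8):
        self.levels = levels

    def compile(self, net: Network) -> Dict[str, object]:
        step = 1.0 / (self.levels - 1)
        return {"target": "analog",
                "weights": [round(w / step) * step for _, _, w in net.projections]}

def deploy(net: Network, backend: Backend) -> Dict[str, object]:
    return backend.compile(net)  # user code never touches device details

net = Network()
net.population("input", 100)
net.population("hidden", 50)
net.connect("input", "hidden", 0.3)
for backend in (DigitalBackend(), AnalogBackend(levels=4)):
    print(deploy(net, backend))
```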

Compiler optimization for neuromorphic systems poses distinct challenges for each design approach. Analog implementations require mapping algorithms that account for device characteristics and variability. Digital designs benefit from optimizations focused on event timing and sparse computation. Mixed-signal systems need careful partitioning strategies that decide which operations execute on analog components and which on digital ones.
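The partitioning problem for mixed-signal systems can be illustrated with a greedy heuristic: assign each operation to the cheapest domain that still meets its precision requirement. The operation list, bit limits, and per-operation energies below are hypothetical. A real compiler would also have to charge for analog-digital conversion whenever data crosses domains, which this sketch ignores.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass(frozen=True)
class Op:
    name: str
    count: int          # executions per inference
    required_bits: int  # minimum precision the operation tolerates

# domain -> (max effective bits, energy per op in pJ); hypothetical values
DOMAINS: Dict[str, Tuple[int, float]] = {
    "analog": (4, 0.1),
    "digital": (16, 2.0),
}

def partition(ops: List[Op]) -> Tuple[Dict[str, str], float]:
    """Greedily place each op on the cheapest domain meeting its precision; return plan and pJ."""
    plan: Dict[str, str] = {}
    total_pj = 0.0
    for op in ops:
        feasible = [(energy, domain) for domain, (bits, energy) in DOMAINS.items()
                    if bits >= op.required_bits]
        if not feasible:
            raise ValueError(f"no domain provides {op.required_bits} bits for {op.name}")
        energy, domain = min(feasible)  # cheapest feasible domain
        plan[op.name] = domain
        total_pj += energy * op.count
    return plan, total_pj

ops = [Op("synaptic_mac", 1_000_000, 4), Op("weight_update", 10_000, 8), Op("routing", 5_000, 12)]
plan, pj = partition(ops)
print(plan, f"{pj:.0f} pJ")
```

Here the high-volume synaptic MACs land on the analog tile and the precision-sensitive operations stay digital, for an estimated 130,000 pJ per inference under the assumed costs.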

Runtime management systems play a crucial role in balancing computational efficiency against energy constraints. They dynamically adjust parameters such as spike thresholds, neuron activation functions, and clock frequencies according to workload characteristics and power budgets. This adaptive approach is particularly valuable for mixed-signal designs, where performance-energy trade-offs can be tuned at runtime.
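A runtime manager of this kind can be sketched as a simple policy over voltage/frequency operating points. Dynamic power is estimated with the standard CMOS relation P ≈ α C V² f, where the activity factor α would, in a neuromorphic chip, track spike activity. The operating points, effective capacitance, and power budget are assumed values.

```python
from typing import List, Tuple

# (frequency in MHz, supply voltage in V), sorted from slowest to fastest; assumed values
OPERATING_POINTS: List[Tuple[float, float]] = [(50.0, 0.6), (100.0, 0.7), (200.0, 0.8), (400.0, 1.0)]
C_EFF_F = 1e-9  # effective switched capacitance in farads (assumed)

def dynamic_power_w(activity: float, freq_mhz: float, vdd: float) -> float:
    """P = activity * C * V^2 * f; activity in [0, 1] is the switching fraction."""
    return activity * C_EFF_F * vdd ** 2 * freq_mhz * 1e6

def choose_operating_point(activity: float, budget_w: float) -> Tuple[float, float]:
    """Fastest operating point whose estimated power fits the budget (slowest as fallback)."""
    best = OPERATING_POINTS[0]
    for freq, vdd in OPERATING_POINTS:
        if dynamic_power_w(activity, freq, vdd) <= budget_w:
            best = (freq, vdd)
    return best

for activity in (0.05, 0.2, 0.8):
    print(activity, choose_operating_point(activity, budget_w=0.05))
```

With a 50 mW budget, the manager runs at 400 MHz at low activity, drops to 200 MHz at moderate activity, and to 100 MHz at high activity.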

Simulation environments and development tools have become increasingly sophisticated, offering multi-level abstraction that supports hardware-software co-design workflows. Tools such as Brian, NEST, and hardware-specific simulators let developers validate algorithms before deployment while accounting for the specific characteristics of analog, digital, or mixed-signal implementations.
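One kind of pre-deployment check such tools support is a Monte Carlo analysis of device mismatch. The sketch below assumes a simple model in which each programmed weight is scaled by (1 + eps), with eps drawn from a Gaussian of standard deviation sigma, and reports the mean absolute deviation of a layer's outputs from their ideal values. It uses only the Python standard library rather than Brian or NEST, and all values are hypothetical.

```python
import random
from typing import List

Matrix = List[List[float]]

def layer(weights: Matrix, x: List[float]) -> List[float]:
    """Ideal weighted sums, one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def with_mismatch(weights: Matrix, sigma: float, rng: random.Random) -> Matrix:
    """Scale every weight by (1 + eps), eps ~ N(0, sigma): assumed mismatch model."""
    return [[w * (1.0 + rng.gauss(0.0, sigma)) for w in row] for row in weights]

def mean_abs_error(weights: Matrix, x: List[float], sigma: float,
                   trials: int = 1000, seed: int = 42) -> float:
    """Average |mismatched output - ideal output| over outputs and Monte Carlo trials."""
    rng = random.Random(seed)  # fixed seed keeps the analysis reproducible
    ideal = layer(weights, x)
    total = 0.0
    for _ in range(trials):
        noisy = layer(with_mismatch(weights, sigma, rng), x)
        total += sum(abs(a - b) for a, b in zip(noisy, ideal)) / len(ideal)
    return total / trials

W = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.4]]
x = [1.0, 0.5, 0.25]
for sigma in (0.0, 0.05, 0.2):
    print(sigma, round(mean_abs_error(W, x, sigma), 4))
```

With sigma = 0 the error is exactly zero; under this linear model the error grows in proportion to sigma.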

The future of neuromorphic hardware-software co-design points toward automated design space exploration tools that can intelligently partition applications across heterogeneous neuromorphic components. These tools will likely incorporate machine learning techniques to optimize mapping decisions based on application requirements and hardware capabilities, further blurring the boundaries between traditional hardware and software development processes.
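Design space exploration can be illustrated in miniature. The sketch below enumerates how many layers of a hypothetical four-layer network run on analog tiles and which ADC resolution they use, scores each design with an invented energy and accuracy-loss model, and keeps the Pareto-optimal designs. It uses plain exhaustive search rather than the machine-learning-guided search anticipated above; such methods become attractive when the space is too large to enumerate.

```python
from itertools import product
from typing import Dict, List

N_LAYERS = 4

def evaluate(analog_layers: int, adc_bits: int) -> Dict[str, float]:
    """Invented cost model: analog layers save energy but lose accuracy at low ADC resolution."""
    digital_layers = N_LAYERS - analog_layers
    energy = digital_layers * 10.0 + analog_layers * (1.0 + 0.5 * adc_bits)
    accuracy_loss = analog_layers * 8.0 * 2.0 ** (-adc_bits)
    return {"analog_layers": analog_layers, "adc_bits": adc_bits,
            "energy": energy, "accuracy_loss": accuracy_loss}

def pareto_front(designs: List[Dict[str, float]]) -> List[Dict[str, float]]:
    """Keep designs not dominated by another design on both energy and accuracy loss."""
    front = []
    for d in designs:
        dominated = any(
            o["energy"] <= d["energy"] and o["accuracy_loss"] <= d["accuracy_loss"]
            and (o["energy"] < d["energy"] or o["accuracy_loss"] < d["accuracy_loss"])
            for o in designs)
        if not dominated:
            front.append(d)
    return sorted(front, key=lambda d: d["energy"])

# ADC resolution is irrelevant when no layer is analog, so keep a single all-digital design
designs = [evaluate(a, b) for a, b in product(range(N_LAYERS + 1), (4, 6, 8)) if a > 0 or b == 4]
for d in pareto_front(designs):
    print(d)
```

Under these assumed costs, every Pareto-optimal design that mixes analog and digital layers uses 8-bit ADCs; only the all-analog designs trade accuracy for lower energy with coarser converters.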
