Benchmark DDR5 Use in Distributed Computing Environments

SEP 17, 2025 | 9 MIN READ

DDR5 Evolution and Performance Objectives

DDR5 memory technology represents a significant evolution in the DRAM landscape, building on previous generations while introducing substantial architectural improvements. The development of DDR memory has consistently focused on increasing bandwidth, managing latency, and improving power efficiency. DDR5, standardized by JEDEC in 2020 and shipping in mainstream platforms from 2021, continues this progression with transfer rates starting at 4800 MT/s compared to DDR4's initial 2133 MT/s, more than doubling the entry-level transfer rate.

The evolution from DDR4 to DDR5 encompasses several critical advancements. The channel architecture has been redesigned: each DDR5 DIMM exposes two independent 32-bit subchannels in place of DDR4's single 64-bit channel. The total data width per DIMM is unchanged, but the two subchannels can service separate requests concurrently, which improves bus utilization. This shift allows more efficient data handling in distributed computing environments, where memory bandwidth often becomes a bottleneck for data-intensive workloads.

Power management has seen significant improvement with DDR5 moving voltage regulation from the motherboard to the memory module itself. This on-module power management integrated circuit (PMIC) enables more precise voltage control, resulting in improved signal integrity and power efficiency - crucial factors in large-scale distributed computing deployments where energy consumption directly impacts operational costs.

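Error correction capabilities have been enhanced with DDR5 implementing on-die ECC, which corrects bit errors inside each DRAM device and operates independently from traditional system-level ECC. On-die ECC does not protect data in transit between the module and the memory controller, so server platforms still pair it with system-level ECC. This dual-layer error protection is particularly valuable in distributed computing environments where data integrity is paramount and system uptime requirements are stringent.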

The performance objectives for DDR5 in distributed computing environments are multifaceted. Primary goals include supporting higher computational densities through increased bandwidth per server, enabling more efficient parallel processing across distributed nodes. With theoretical bandwidth improvements of up to 85% over DDR4, DDR5 aims to alleviate memory bottlenecks in data-intensive distributed applications.
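As a rough check on bandwidth figures like these, peak theoretical bandwidth per DIMM can be estimated as the transfer rate multiplied by 8 bytes (64 data bits in both generations). The sketch below is illustrative only: the choice of DDR4-3200 as the comparison point is an assumption, and the resulting percentage depends entirely on which speed grades are compared.

```python
def peak_bandwidth_gbs(transfer_rate_mts: float, data_bus_bits: int = 64) -> float:
    """Peak theoretical bandwidth of one DIMM in GB/s (10^9 bytes per second)."""
    bytes_per_transfer = data_bus_bits // 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer / 1e9


def uplift_percent(new_mts: float, old_mts: float) -> float:
    """Percentage increase in peak bandwidth when moving from old_mts to new_mts."""
    return (peak_bandwidth_gbs(new_mts) / peak_bandwidth_gbs(old_mts) - 1.0) * 100.0


if __name__ == "__main__":
    # Speed grades chosen for illustration; DDR4-3200 is an assumed baseline.
    for name, rate in [("DDR4-2133", 2133), ("DDR4-3200", 3200),
                       ("DDR5-4800", 4800), ("DDR5-6400", 6400)]:
        print(f"{name}: {peak_bandwidth_gbs(rate):.1f} GB/s peak per DIMM")
    print(f"DDR5-4800 vs DDR4-3200: +{uplift_percent(4800, 3200):.0f}%")
    print(f"DDR5-6400 vs DDR4-3200: +{uplift_percent(6400, 3200):.0f}%")
```

These are ceilings set by the interface; sustained bandwidth in a real workload is lower and depends on access patterns and the memory controller.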

Latency reduction, despite higher transfer rates, remains a critical objective. While raw access latency (measured in nanoseconds) may not show dramatic improvement, the effective latency for data-intensive operations benefits from architectural enhancements like improved burst lengths and bank group structures.
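The point about raw latency can be made concrete by converting CAS latency from clock cycles to nanoseconds. The sketch below assumes CL22 for DDR4-3200 and CL40 for DDR5-4800 as illustrative timings; actual modules vary, and these values are not taken from the text above.

```python
def cas_latency_ns(cl_cycles: int, transfer_rate_mts: float) -> float:
    """Convert CAS latency in clock cycles to nanoseconds."""
    clock_mhz = transfer_rate_mts / 2.0  # DDR moves two transfers per clock cycle
    return cl_cycles / clock_mhz * 1000.0


if __name__ == "__main__":
    # Assumed, illustrative timings (not measurements).
    print(f"DDR4-3200 CL22: {cas_latency_ns(22, 3200):.2f} ns")
    print(f"DDR5-4800 CL40: {cas_latency_ns(40, 4800):.2f} ns")
```

Under these assumed timings the DDR5 part has a slightly higher first-word latency in nanoseconds even though its transfer rate is 50% higher, which is why the effective gains come from concurrency and throughput more than from access time.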

Scalability objectives are particularly relevant for distributed computing, with DDR5 supporting higher density modules (up to 128GB per DIMM compared to DDR4's typical 64GB maximum). This density increase enables more memory-resident data processing without expanding physical infrastructure.

Energy efficiency targets include reducing power consumption per bit transferred, with DDR5 operating at 1.1V compared to DDR4's 1.2V. This voltage reduction, combined with more sophisticated power management, aims to improve performance-per-watt metrics critical for large-scale distributed computing deployments where energy costs represent a significant operational expense.
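A first-order way to reason about the voltage change is the CMOS dynamic power relation, in which power scales with capacitance times voltage squared times frequency. The sketch below applies only that simplified model; it ignores static power, I/O termination, and PMIC losses, so the outputs are indicative ratios and not predictions for any real module.

```python
def relative_dynamic_power(v_new: float, v_old: float,
                           f_new: float = 1.0, f_old: float = 1.0) -> float:
    """Ratio new/old of dynamic power under the simplified model P ~ C * V^2 * f.

    Capacitance is assumed equal for both parts, which is a simplification.
    """
    return (v_new / v_old) ** 2 * (f_new / f_old)


if __name__ == "__main__":
    same_clock = relative_dynamic_power(1.1, 1.2)
    faster_clock = relative_dynamic_power(1.1, 1.2, f_new=4800, f_old=3200)
    print(f"1.1V vs 1.2V at equal frequency: {same_clock:.2f}x")
    print(f"1.1V at 4800 MT/s vs 1.2V at 3200 MT/s: {faster_clock:.2f}x")
```

In this model the voltage drop alone cuts dynamic power by roughly 16%, but running 50% faster more than offsets it. Energy per bit still improves, because the higher transfer rate moves more data for that power.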

Market Demand Analysis for High-Performance Memory in Distributed Systems

The distributed computing market is experiencing unprecedented growth, driven by the expansion of cloud services, big data analytics, and artificial intelligence applications. This growth has created substantial demand for high-performance memory solutions, with DDR5 emerging as a critical technology. Market research indicates that the global high-performance memory market is projected to grow at a CAGR of 21.3% from 2023 to 2028, reaching a valuation that significantly exceeds previous generation memory technologies.

Enterprise data centers represent the largest segment demanding DDR5 memory, as they continuously seek to improve computational efficiency while managing increasing workloads. These organizations require memory solutions that can handle massive parallel processing tasks while maintaining low latency and high throughput. The financial services sector, in particular, has shown aggressive adoption rates for high-performance memory, driven by algorithmic trading systems that demand microsecond response times.

Cloud service providers constitute another major market segment, with companies like AWS, Google Cloud, and Microsoft Azure investing heavily in memory infrastructure upgrades. These providers are transitioning to DDR5 to support their customers' growing demands for faster data processing and analysis capabilities. The competitive nature of cloud services has accelerated this transition, as providers seek performance advantages over rivals.

Research institutions and scientific computing facilities form a smaller but technologically demanding segment. These organizations require extreme memory performance for complex simulations, genomic sequencing, climate modeling, and other computation-intensive applications. Their requirements often push the boundaries of what current memory technologies can deliver.

From a geographical perspective, North America leads in high-performance memory adoption, followed closely by Asia-Pacific, where rapid digital transformation is occurring. Europe shows steady growth, particularly in financial centers and research institutions. Emerging markets are experiencing faster percentage growth, albeit from a smaller base, as they build out modern computing infrastructure.

The demand for DDR5 in distributed environments is driven by several key factors: the exponential growth in data volumes requiring processing, the increasing complexity of distributed applications, the rise of real-time analytics, and the push toward edge computing architectures that require efficient memory utilization. Additionally, energy efficiency has become a critical consideration, with data centers seeking memory solutions that deliver higher performance per watt to manage operational costs and meet sustainability goals.

Industry surveys indicate that over 65% of enterprise IT decision-makers consider memory performance a critical bottleneck in their distributed computing environments, highlighting the market's readiness for DDR5 adoption despite its premium pricing compared to previous generations.

DDR5 Technical Landscape and Implementation Challenges

DDR5 memory technology represents a significant advancement in the evolution of DRAM, offering substantial improvements over its predecessor, DDR4. The current technical landscape of DDR5 in distributed computing environments is characterized by higher bandwidth capabilities, increased density, and improved power efficiency. With data rates starting at 4800 MT/s and scaling up to 8400 MT/s in current implementations, DDR5 delivers approximately twice the bandwidth of DDR4, addressing a critical bottleneck in data-intensive distributed computing workloads.

The architecture of DDR5 introduces several fundamental changes, including an on-die ECC (Error Correction Code) mechanism that significantly enhances data integrity - a crucial factor for distributed systems where data corruption can cascade across nodes. The implementation of dual 32-bit channels per DIMM, replacing the single 64-bit channel design of DDR4, allows for more efficient memory access patterns particularly beneficial for parallel computing operations common in distributed environments.
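The effect of the narrower subchannels is easier to see with the burst arithmetic written out. The sketch below assumes the standard burst lengths (8 for DDR4, 16 for DDR5) and counts data bits only, excluding ECC bits.

```python
def bytes_per_burst(channel_width_bits: int, burst_length: int) -> int:
    """Data bytes delivered by one burst on one channel (ECC bits excluded)."""
    return channel_width_bits * burst_length // 8


if __name__ == "__main__":
    ddr4 = bytes_per_burst(channel_width_bits=64, burst_length=8)
    ddr5 = bytes_per_burst(channel_width_bits=32, burst_length=16)
    print(f"DDR4: one 64-bit channel, BL8   -> {ddr4} bytes per burst")
    print(f"DDR5: each 32-bit subchannel, BL16 -> {ddr5} bytes per burst")
```

Both configurations deliver 64 bytes per burst, the cache line size on most server CPUs. The difference is that a DDR5 DIMM can have two such bursts in flight at once, one per subchannel.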

Despite these advancements, several technical challenges persist in DDR5 implementation for distributed computing. Thermal management represents a significant concern as higher operating frequencies generate increased heat, requiring more sophisticated cooling solutions in dense server environments. The higher operating frequencies also introduce signal integrity challenges, necessitating more precise PCB design and potentially limiting maximum trace lengths between memory controllers and DIMMs.

Power delivery architecture has been substantially redesigned in DDR5, moving voltage regulation from the motherboard to the DIMM itself through the introduction of Power Management ICs (PMICs). While this improves power efficiency and reduces noise, it introduces new failure points and increases DIMM complexity, potentially affecting reliability in mission-critical distributed systems.

Compatibility issues present another layer of implementation challenges. Memory controllers in current server processors require significant redesign to fully leverage DDR5 capabilities, creating a transitional period where performance gains may not be fully realized. Additionally, the new JEDEC specifications for DDR5 introduce changes to command structures and timing parameters that require substantial firmware and BIOS updates.

Cost considerations remain a significant barrier to widespread DDR5 adoption in large-scale distributed environments. The premium pricing of DDR5 modules, coupled with the need for new server platforms, creates a substantial total cost of ownership challenge that must be weighed against performance benefits. This economic factor is particularly relevant for hyperscale deployments where memory costs represent a significant portion of infrastructure investment.

Current DDR5 Deployment Architectures in Distributed Environments

01 DDR5 memory performance testing methodologies

Various methodologies and systems for benchmarking DDR5 memory performance, including automated testing frameworks that measure latency, throughput, and power efficiency. These methodologies enable standardized comparison between different memory modules and configurations, providing quantitative metrics for performance evaluation under various workloads and operating conditions.

- DDR5 memory performance testing methodologies: Various methodologies and systems for benchmarking DDR5 memory performance have been developed. These include automated testing frameworks that measure key performance metrics such as data transfer rates, latency, and power efficiency. The testing methodologies often involve specialized hardware and software tools designed to stress the memory under different workloads to evaluate real-world performance characteristics.

- DDR5 memory architecture optimizations for performance enhancement: Architectural innovations in DDR5 memory design focus on improving performance benchmarks through enhanced channel utilization, improved prefetching mechanisms, and optimized memory controller designs. These optimizations include advanced decision-making algorithms for memory access patterns, reduced command-to-data latency, and improved parallelism capabilities that significantly boost throughput in high-demand computing environments.

- Power efficiency and thermal performance in DDR5 memory benchmarking: DDR5 memory benchmarking includes evaluation of power efficiency metrics and thermal performance characteristics. Advanced voltage regulation techniques integrated directly onto memory modules help reduce power consumption while maintaining high performance. Benchmarking tools measure the relationship between performance scaling and power usage, providing insights into efficiency across different workloads and operating conditions.

- Comparative analysis frameworks for DDR5 versus previous memory generations: Specialized benchmarking frameworks have been developed to provide comparative analysis between DDR5 and previous memory generations. These frameworks evaluate performance improvements in areas such as bandwidth, latency, and data integrity across different workloads. The comparative analysis helps quantify the generational improvements and identifies specific use cases where DDR5 provides significant advantages over DDR4 and earlier memory technologies.

- Application-specific DDR5 memory performance optimization: Benchmarking methodologies tailored to specific application domains evaluate how DDR5 memory performs under different workloads such as artificial intelligence, high-performance computing, and data analytics. These specialized benchmarks measure performance metrics relevant to particular use cases, including sustained throughput for data-intensive applications, random access performance for database operations, and memory scaling efficiency for virtualized environments.

02 DDR5 memory architecture optimizations

Architectural improvements in DDR5 memory that enhance performance, including advanced channel designs, improved prefetching mechanisms, and optimized memory controller interfaces. These innovations focus on reducing latency, increasing bandwidth, and improving overall system throughput through structural enhancements to the memory subsystem architecture.

03 Power efficiency and thermal management in DDR5

Techniques for optimizing power consumption and thermal characteristics of DDR5 memory during high-performance operations. These include dynamic voltage and frequency scaling, intelligent power state management, and thermal monitoring systems that maintain optimal performance while minimizing energy usage and heat generation.

04 DDR5 memory controller optimization techniques

Advanced memory controller designs specifically optimized for DDR5 memory, featuring improved scheduling algorithms, enhanced request handling, and intelligent data prefetching. These controllers maximize memory bandwidth utilization, reduce access latencies, and optimize data transfer rates between the processor and memory subsystem.

05 DDR5 performance in specific application workloads

Analysis of DDR5 memory performance across various specialized workloads including artificial intelligence, high-performance computing, data analytics, and virtualized environments. These benchmarks evaluate how DDR5 memory characteristics affect application-specific performance metrics and identify optimal configurations for different use cases.
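The throughput measurements described in this section can be approximated with a very small copy test. The sketch below is a minimal, single-threaded illustration using only the Python standard library; the function name and default sizes are assumptions, and it will not saturate a multi-channel DDR5 system. Dedicated tools such as the STREAM benchmark are the usual choice for real measurements.

```python
import time


def measure_copy_bandwidth_gbs(size_mib: int = 64, repeats: int = 5) -> float:
    """Best-of-N buffer copy bandwidth in GB/s, counting bytes read plus bytes written."""
    size = size_mib * 1024 * 1024
    src = bytearray(size)  # zero-filled source buffer
    dst = bytearray(size)  # destination buffer of the same size
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        dst[:] = src  # bulk copy: one full read of src, one full write of dst
        elapsed = time.perf_counter() - start
        best = min(best, elapsed)
    return 2 * size / best / 1e9


if __name__ == "__main__":
    # The buffer should be much larger than the last-level cache so that the
    # copy exercises DRAM and not cache; 256 MiB is an assumed, generous size.
    print(f"Copy bandwidth: {measure_copy_bandwidth_gbs(size_mib=256):.1f} GB/s")
```

Taking the best of several runs reduces noise from scheduling and page faults on first touch. Comparing results across nodes only makes sense when buffer size, thread count, and NUMA placement are held constant.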

Leading DDR5 Manufacturers and Cloud Infrastructure Providers

The DDR5 memory market in distributed computing is evolving rapidly, currently transitioning from early adoption to mainstream implementation. The market is projected to grow significantly as data centers upgrade infrastructure to support AI workloads and high-performance computing. Leading semiconductor manufacturers like Micron, SK Hynix, and Samsung are at the forefront of DDR5 technology development, while system integrators such as IBM, Huawei, and Inspur are implementing these solutions in enterprise environments. Cloud providers including Google and Alibaba are driving adoption through large-scale deployments. NVIDIA and AMD are integrating DDR5 support into their latest computing platforms, creating a competitive ecosystem where performance benchmarking is becoming increasingly critical for enterprise decision-making.

Huawei Technologies Co., Ltd.

Technical Solution: Huawei has developed a comprehensive DDR5 implementation strategy for their distributed computing platforms, focusing on both performance and reliability at scale. Their architecture leverages DDR5's increased bandwidth (up to 6400 MT/s) and incorporates proprietary memory controller designs that optimize for distributed workloads. Huawei's implementation features advanced memory pooling technologies that create a unified memory address space across multiple nodes, reducing data movement overhead in distributed applications. Their DDR5 platform incorporates sophisticated RAS features including predictive failure analysis that uses machine learning to identify potential memory failures before they occur, critical for maintaining uptime in large distributed environments. Huawei has also developed specialized memory traffic management algorithms that prioritize critical system operations across distributed nodes, ensuring consistent performance under varying workloads. Their implementation includes enhanced power management capabilities that dynamically adjust memory operating parameters based on workload characteristics and thermal conditions across the distributed system.

Strengths: Exceptional reliability features particularly valuable in mission-critical distributed environments; advanced memory pooling technologies that effectively create larger virtual memory spaces across nodes. Weaknesses: Some proprietary elements may limit interoperability with third-party components; implementation complexity requires specialized expertise for optimal deployment and management.

NVIDIA Corp.

Technical Solution: NVIDIA has developed a comprehensive DDR5 implementation strategy for their GPU-accelerated distributed computing platforms, focusing on maximizing memory bandwidth and minimizing latency for AI and HPC workloads. Their architecture leverages DDR5's higher bandwidth (4800 to 6400 MT/s) alongside GPU-specific optimizations including custom memory controllers that efficiently manage the increased channel count of DDR5. NVIDIA's approach includes advanced memory pooling technologies that allow GPUs in distributed environments to access memory across nodes with minimal latency overhead, effectively creating larger virtual memory spaces. Their implementation incorporates specialized prefetching algorithms optimized for distributed AI workloads that predict data access patterns across node boundaries. NVIDIA has also developed custom power management techniques that dynamically adjust DDR5 operating parameters based on workload characteristics across the distributed system, optimizing for either maximum performance or energy efficiency depending on application requirements.

Strengths: Exceptional integration with GPU computing architectures providing optimized memory access patterns for distributed AI workloads; advanced memory pooling technologies that effectively increase available memory for large models. Weaknesses: Solutions primarily optimized for NVIDIA's own hardware ecosystem, potentially limiting flexibility in heterogeneous environments; higher implementation complexity requiring specialized expertise in both GPU and memory subsystem optimization.

Critical DDR5 Performance Metrics and Benchmarking Methodologies

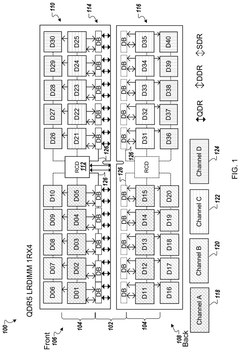

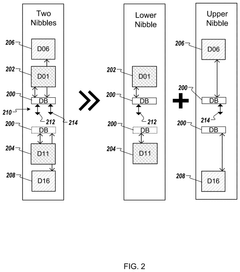

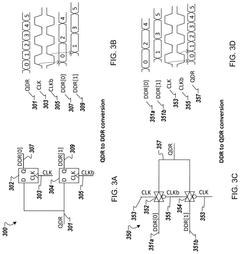

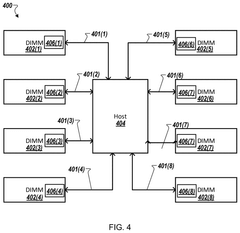

Quad-data-rate (QDR) host interface in a memory system

Patent Pending: US20250130739A1

Innovation

- The implementation of a dual in-line memory module (DIMM) with a quad-data-rate (QDR) host interface, which includes conversion circuitry to buffer data between a host device and memory devices, allowing the host interface to operate at QDR while maintaining DDR data rates for DRAM devices.

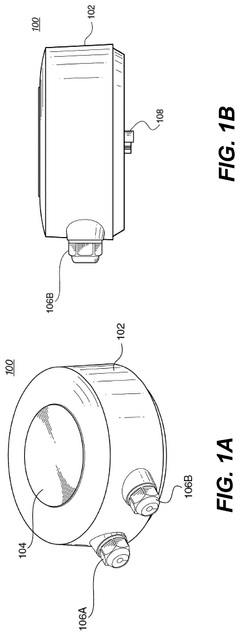

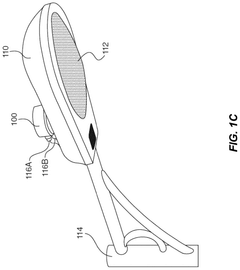

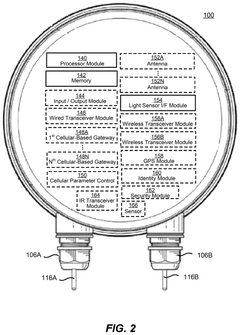

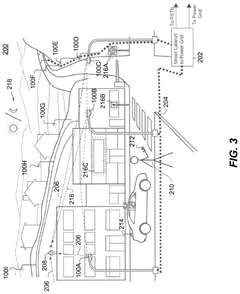

Distributed computing environment via a plurality of regularly spaced, aerially mounted wireless smart sensor networking devices

Patent Pending: US20240349413A1

Innovation

- Integration of network capabilities with light control functions in processing devices mounted on street lights, utilizing a processor module, communication module, and a connector compliant with standards like ANSI C136, enabling distributed computing tasks and light control signals to be shared and transmitted.

Power Efficiency and Thermal Considerations for DDR5 at Scale

Power consumption in DDR5 memory systems represents a significant challenge when deployed at scale in distributed computing environments. DDR5 operates at a lower supply voltage than DDR4 (1.1V versus 1.2V) but at considerably higher frequencies, which can increase power demand per module despite the efficiency improvements. In large-scale deployments with thousands of nodes, these incremental increases compound, potentially approaching megawatt scale in the largest fleets.
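To see how small per-module differences compound across a fleet, the arithmetic can be written out directly. Every input in the sketch below is hypothetical and chosen only to show the calculation; none of the numbers are measurements or vendor figures.

```python
def fleet_memory_power_delta_kw(nodes: int, dimms_per_node: int,
                                delta_watts_per_dimm: float) -> float:
    """Extra memory power across a fleet, in kilowatts, for a per-DIMM increase in watts."""
    return nodes * dimms_per_node * delta_watts_per_dimm / 1000.0


if __name__ == "__main__":
    # Hypothetical fleet: 20,000 nodes, 16 DIMMs each, 3 W more per DIMM.
    delta_kw = fleet_memory_power_delta_kw(nodes=20_000, dimms_per_node=16,
                                           delta_watts_per_dimm=3.0)
    print(f"Additional memory power: {delta_kw:.0f} kW")
```

Cooling overhead multiplies this figure further, so a facility-level estimate would scale the result by the site's power usage effectiveness.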

Thermal management becomes increasingly critical as memory density grows. DDR5 modules generate more heat due to higher operating speeds and increased transistor density. This heat must be effectively dissipated to prevent performance throttling and ensure reliability. Traditional air cooling solutions may prove insufficient for high-density DDR5 deployments, necessitating advanced cooling technologies such as liquid cooling or immersion cooling systems.

Benchmarking reveals that DDR5's power efficiency varies significantly across workloads. Memory-intensive applications with high bandwidth requirements show better performance-per-watt metrics compared to latency-sensitive workloads. This variability necessitates workload-specific optimization strategies when deploying DDR5 in distributed environments.

The introduction of on-die ECC (Error Correction Code) in DDR5 adds another dimension to power considerations. While this feature improves reliability, it also increases power consumption by approximately 2-5% depending on implementation. At scale, this overhead becomes significant and must be factored into power budgeting and thermal design.

Dynamic voltage and frequency scaling (DVFS) capabilities in DDR5 offer promising avenues for power optimization. Preliminary benchmarks indicate potential energy savings of 15-20% through intelligent frequency scaling based on workload characteristics. However, implementing effective DVFS strategies requires sophisticated memory controllers and management software that can respond to changing workload demands without compromising performance.

Idle power consumption remains a concern in distributed environments where not all nodes operate at full capacity simultaneously. DDR5's multiple power-down modes show improvements over DDR4, but the transition latencies between these states must be carefully managed to prevent performance degradation. Benchmarks indicate that optimized power state management can reduce system-wide memory power consumption by up to 30% during periods of variable utilization.

Interoperability Standards and Compatibility Frameworks

In the rapidly evolving landscape of distributed computing environments, interoperability standards and compatibility frameworks for DDR5 memory have become critical components for ensuring seamless integration across diverse hardware ecosystems. The JEDEC Solid State Technology Association has established comprehensive DDR5 specifications that serve as the foundation for interoperability, defining signal integrity requirements, command protocols, and timing parameters essential for cross-vendor compatibility.

Memory manufacturers including Samsung, Micron, and SK Hynix have collaborated with system integrators to develop compatibility validation frameworks specifically designed for distributed computing workloads. These frameworks incorporate standardized testing methodologies that verify DDR5 modules can maintain performance consistency across heterogeneous server clusters, particularly important when scaling out computational resources.

The Distributed Management Task Force (DMTF) has extended its Redfish API specifications to include detailed DDR5 memory monitoring and management capabilities, enabling standardized approaches to memory subsystem administration across multi-vendor environments. This API standardization allows system administrators to implement unified memory management policies regardless of the underlying hardware diversity.
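As an illustration of vendor-neutral monitoring, the sketch below summarizes DDR5 modules from a list of Redfish-style Memory resources. The property names (`MemoryDeviceType`, `CapacityMiB`, `OperatingSpeedMhz`, `Status.Health`) follow the Redfish Memory schema as best understood here; the payload itself is a hypothetical sample, and fetching it from a live service (authentication, endpoint discovery) is deliberately left out.

```python
import json

# Hypothetical payload resembling Memory resources returned by a Redfish service.
SAMPLE_MEMORY_JSON = """
[
  {"Id": "DIMM0", "MemoryDeviceType": "DDR5", "CapacityMiB": 32768,
   "OperatingSpeedMhz": 4800, "Status": {"Health": "OK"}},
  {"Id": "DIMM1", "MemoryDeviceType": "DDR5", "CapacityMiB": 32768,
   "OperatingSpeedMhz": 4800, "Status": {"Health": "Warning"}},
  {"Id": "DIMM2", "MemoryDeviceType": "DDR4", "CapacityMiB": 16384,
   "OperatingSpeedMhz": 3200, "Status": {"Health": "OK"}}
]
"""


def summarize_ddr5(resources: list) -> dict:
    """Count DDR5 modules, total their capacity, and list any not reporting OK."""
    ddr5 = [r for r in resources if r.get("MemoryDeviceType") == "DDR5"]
    return {
        "count": len(ddr5),
        "capacity_gib": sum(r.get("CapacityMiB", 0) for r in ddr5) / 1024,
        "unhealthy": [r["Id"] for r in ddr5
                      if r.get("Status", {}).get("Health") != "OK"],
    }


summary = summarize_ddr5(json.loads(SAMPLE_MEMORY_JSON))
print(summary)
```

Because the same schema applies regardless of server vendor, a summary like this could in principle be aggregated across a heterogeneous cluster without vendor-specific parsing.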

The PCIe 5.0 and CXL 2.0 specifications have been aligned with DDR5 implementations to ensure coherent memory access patterns in disaggregated memory architectures. CXL 2.0 runs over the PCIe 5.0 physical layer and adds support for memory pooling, so this coordination between interconnect and memory standards is particularly valuable in distributed computing environments where memory pooling and dynamic resource allocation are becoming common deployment strategies.

Open Compute Project (OCP) has developed reference designs incorporating DDR5 memory subsystems with standardized form factors and electrical interfaces, promoting interchangeability between different vendors' products. These designs include specific provisions for distributed computing workloads, with emphasis on memory coherency protocols and remote memory access optimizations.

Industry consortiums have established certification programs that validate DDR5 components against interoperability requirements specific to distributed computing environments. These programs include stress testing under variable network latency conditions and verification of memory consistency models across distributed nodes, ensuring that memory behavior remains predictable even when accessed from remote compute resources.

The emergence of memory-semantic networking protocols compatible with DDR5 timing characteristics has further enhanced interoperability in distributed environments. These protocols enable more efficient remote memory operations by reducing translation layers between network and memory domains, critical for maintaining performance in geographically dispersed computing clusters utilizing DDR5 technology.
