DDR5 vs SDRAM: Bandwidth Analysis in Server Environments

SEP 17, 2025 · 9 MIN READ

DDR5 Evolution and Performance Objectives

Memory technology has evolved substantially since synchronous DRAM (SDRAM) was introduced in the 1990s. DDR5, the fifth generation of Double Data Rate Synchronous Dynamic Random-Access Memory, is itself a form of SDRAM; in this analysis, "SDRAM" refers to the earlier generations it succeeds, chiefly DDR4. DDR5 represents a substantial leap in memory performance, driven by demand for higher bandwidth, lower latency, and better power efficiency in server environments where data processing requirements keep growing rapidly.

The development trajectory from SDRAM through DDR, DDR2, DDR3, and DDR4 has consistently focused on increasing data transfer rates while reducing power consumption. DDR5 continues this trend with substantial architectural improvements. Initially standardized by JEDEC in 2020, DDR5 was designed specifically to address the memory bottlenecks that have become increasingly problematic in high-performance computing and server applications.

The primary performance objective for DDR5 is to double the bandwidth of its predecessor, DDR4. The initial JEDEC specification defined data rates up to 6400 MT/s, with first products shipping at 4800 MT/s, and the roadmap extends to 8400 MT/s and beyond. This is a significant improvement over DDR4's typical 3200 MT/s ceiling in server environments.
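Peak theoretical bandwidth per module follows directly from the data rate and the 64-bit (8-byte) data width of a DIMM, with ECC bits excluded. The short sketch below applies that formula to DDR4-3200 and to DDR5 at 4800, 6400, and 8400 MT/s. It ignores refresh, bank conflicts, and bus turnarounds, so sustained bandwidth in practice is lower.

```python
# Peak theoretical bandwidth of one DIMM. Ignores refresh, bank conflicts,
# read/write turnarounds and every other real-world overhead.
DATA_BYTES_PER_TRANSFER = 8  # 64 data bits per DIMM (ECC bits excluded)


def peak_dimm_bandwidth_gbs(mt_per_s: int) -> float:
    """Return peak bandwidth in GB/s (10**9 bytes/s) for a data rate in MT/s."""
    return mt_per_s * 1e6 * DATA_BYTES_PER_TRANSFER / 1e9


for label, rate in [("DDR4-3200", 3200), ("DDR5-4800", 4800),
                    ("DDR5-6400", 6400), ("DDR5-8400", 8400)]:
    print(f"{label}: {peak_dimm_bandwidth_gbs(rate):.1f} GB/s")
```

DDR5-6400 works out to exactly twice DDR4-3200 (51.2 vs 25.6 GB/s), which is where the "doubling" framing comes from; entry-level DDR5-4800 is 1.5 times DDR4-3200.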

Beyond raw bandwidth improvements, DDR5 aims to enhance overall system efficiency through several key innovations. The introduction of two independent 32-bit channels per DIMM (versus a single 64-bit channel in DDR4) enables more efficient memory access patterns and improved parallelism. This architectural change is particularly beneficial for server workloads that frequently access small, non-contiguous memory segments.
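The subchannel split keeps the access granularity matched to a 64-byte CPU cache line because DDR5 also doubles the burst length, from 8 beats (BL8) in DDR4 to 16 beats (BL16). A quick check of the bytes delivered by one burst:

```python
def bytes_per_burst(data_width_bits: int, burst_length: int) -> int:
    """Bytes delivered by one read or write burst on a channel or subchannel."""
    return data_width_bits // 8 * burst_length


# DDR4: one 64-bit channel per DIMM, burst length 8
ddr4 = bytes_per_burst(64, 8)
# DDR5: two independent 32-bit subchannels per DIMM, burst length 16
ddr5_sub = bytes_per_burst(32, 16)

print(ddr4, ddr5_sub)  # 64 64
```

Both deliver one 64-byte cache line per burst, but a DDR5 DIMM can have two such bursts in flight on independent subchannels, which is where the improved parallelism comes from.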

Power efficiency is another critical objective in DDR5's development. DDR5 operates at 1.1V, down from DDR4's 1.2V, and moves voltage regulation from the motherboard onto the DIMM itself through an on-module power management IC (PMIC), intended to improve power delivery and signal integrity. This matters especially in data centers, where energy consumption directly drives operational costs and environmental footprint.

Reliability is a third major objective, addressed by on-die Error Correction Code (ECC). On-die ECC corrects single-bit errors inside each DRAM device before data leaves the chip; because it does not protect data in transit on the memory bus, it complements rather than replaces the system-level ECC common in server environments. The result is a more robust memory subsystem for mission-critical applications.
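DDR5 on-die ECC is commonly described as a single-error-correcting (SEC) code protecting 128 data bits with 8 check bits. The sketch below is a textbook Hamming SEC code, not the JEDEC code matrix, but it shows the mechanism: the syndrome (XOR of the positions of all set bits) is zero for a valid codeword and equals the position of a single flipped bit. It also confirms that 128 data bits need exactly 8 check bits.

```python
import random


def hamming_encode(data_bits: list[int]) -> list[int]:
    """Encode data bits with a Hamming SEC code.

    Parity bits sit at power-of-two positions (1, 2, 4, ...), counted from 1.
    """
    k = len(data_bits)
    r = 0
    while (1 << r) < k + r + 1:  # smallest r with 2**r >= k + r + 1
        r += 1
    n = k + r
    code = [0] * (n + 1)  # index 0 unused, so list index == bit position
    bits = iter(data_bits)
    for pos in range(1, n + 1):
        if pos & (pos - 1):  # not a power of two: data position
            code[pos] = next(bits)
    for i in range(r):
        p = 1 << i
        # Parity bit p covers every later position whose index has bit i set
        for pos in range(p + 1, n + 1):
            if pos & p:
                code[p] ^= code[pos]
    return code[1:]


def hamming_correct(codeword: list[int]) -> tuple[list[int], int]:
    """Return (corrected codeword, syndrome); syndrome 0 means no error seen.

    SEC only: two flipped bits can be miscorrected, one reason system-level
    ECC is still needed.
    """
    syndrome = 0
    for pos, bit in enumerate(codeword, start=1):
        if bit:
            syndrome ^= pos
    fixed = list(codeword)
    if 0 < syndrome <= len(fixed):  # single-bit error at position `syndrome`
        fixed[syndrome - 1] ^= 1
    return fixed, syndrome


def extract_data(codeword: list[int]) -> list[int]:
    """Drop the parity positions and return the data bits."""
    return [b for pos, b in enumerate(codeword, start=1) if pos & (pos - 1)]


rng = random.Random(0)
data = [rng.randint(0, 1) for _ in range(128)]
cw = hamming_encode(data)
print(len(cw) - len(data), "check bits")  # 8 check bits

corrupted = list(cw)
corrupted[41] ^= 1  # flip the bit at position 42
fixed, syndrome = hamming_correct(corrupted)
print(syndrome, extract_data(fixed) == data)  # 42 True
```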

The timing of DDR5's introduction aligns strategically with the proliferation of data-intensive workloads including artificial intelligence, machine learning, and real-time analytics that characterize modern server environments. These applications generate unprecedented memory bandwidth requirements that previous generations of DRAM technology struggle to satisfy efficiently.

Server Memory Market Demand Analysis

The server memory market has experienced significant growth in recent years, driven primarily by the expansion of data centers, cloud computing services, and the increasing demand for high-performance computing applications. Current market analysis indicates that the global server memory market is valued at over 19 billion USD, with projections suggesting continued growth at a compound annual growth rate of 23% through 2028. This remarkable expansion reflects the critical role that memory bandwidth plays in modern server environments.

The transition from traditional SDRAM to DDR5 technology is being accelerated by several key market demands. Enterprise customers are increasingly requiring higher memory bandwidth to support data-intensive applications such as artificial intelligence, machine learning, and real-time analytics. These applications generate massive datasets that must be processed with minimal latency, creating substantial demand for memory solutions that can handle higher data throughput.

Cloud service providers represent the largest segment of the server memory market, accounting for approximately 42% of total demand. These providers are continuously expanding their infrastructure to support the growing adoption of cloud-based services across various industries. The need for improved memory performance is particularly acute in this segment, as providers seek to optimize resource utilization and enhance service quality while managing operational costs.

Financial services and healthcare sectors are emerging as significant growth drivers for high-bandwidth server memory. Financial institutions require robust memory solutions for high-frequency trading systems and risk analysis platforms, where milliseconds can translate to millions in profit or loss. Similarly, healthcare organizations are increasingly implementing advanced imaging systems and genomic sequencing technologies that process enormous volumes of data, necessitating superior memory bandwidth.

Regional analysis reveals that North America currently dominates the server memory market with a 38% share, followed by Asia-Pacific at 34% and Europe at 22%. However, the Asia-Pacific region is expected to witness the fastest growth rate, driven by rapid digital transformation initiatives and increasing investments in data center infrastructure across countries like China, India, and Singapore.

The demand for DDR5 memory in server environments is further influenced by the growing emphasis on energy efficiency. Data centers are under increasing pressure to reduce power consumption, and DDR5's improved power efficiency compared to previous generations makes it an attractive option for organizations seeking to minimize operational costs and environmental impact while maximizing performance.

Current Memory Technologies and Bandwidth Limitations

The memory landscape in server environments has evolved significantly over the past decade, with various technologies competing to address the growing bandwidth demands of modern applications. Currently, DDR4 SDRAM dominates the server memory market, offering speeds up to 3200 MT/s and bandwidth capabilities reaching 25.6 GB/s per module. However, as data-intensive applications like AI, machine learning, and real-time analytics continue to expand, these specifications are increasingly becoming bottlenecks in system performance.

Traditional SDRAM technologies face fundamental limitations in scaling bandwidth further. The parallel bus architecture of DDR4 encounters signal integrity challenges at higher frequencies, while power consumption increases disproportionately with speed improvements. Current server configurations typically employ multi-channel memory architectures to aggregate bandwidth across multiple modules, but this approach faces diminishing returns due to increased system complexity and memory controller limitations.
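Aggregate peak bandwidth scales with the number of populated channels, but only if traffic is spread evenly across them. Controllers typically interleave consecutive cache lines across channels; the mapping below (cache-line index modulo channel count) is a simplified, hypothetical scheme, since real controllers use vendor-specific address hashes. The 8- and 12-channel configurations are illustrative examples of current server sockets, not measurements.

```python
from collections import Counter

CACHE_LINE = 64  # bytes


def aggregate_peak_gbs(channels: int, mt_per_s: int, bytes_per_transfer: int = 8) -> float:
    """Peak bandwidth of all channels together in GB/s (MT/s x bytes = MB/s)."""
    return channels * mt_per_s * bytes_per_transfer / 1000


def channel_for(addr: int, channels: int) -> int:
    """Hypothetical cache-line interleaving: consecutive lines rotate across channels."""
    return (addr // CACHE_LINE) % channels


print(aggregate_peak_gbs(8, 3200))   # 8-channel DDR4-3200
print(aggregate_peak_gbs(8, 4800))   # 8-channel DDR5-4800
print(aggregate_peak_gbs(12, 4800))  # 12-channel DDR5-4800

# A sequential 1 MiB scan spreads evenly over all 8 channels...
seq = Counter(channel_for(a, 8) for a in range(0, 1 << 20, CACHE_LINE))
# ...but a stride of exactly 8 cache lines keeps hitting the same channel.
strided = Counter(channel_for(a, 8) for a in range(0, 1 << 20, 8 * CACHE_LINE))
print(len(seq), len(strided))
```

The strided case illustrates the "diminishing returns" point: adding channels raises the peak, but an access pattern that aliases onto one channel sees only a fraction of it.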

DDR5 is the newest generation of mainstream memory technology, starting at 4800 MT/s with a theoretical peak of 38.4 GB/s per module, 50% above DDR4-3200. Early benchmark results indicate that DDR5 delivers 36-40% higher effective bandwidth in server workloads than equivalent DDR4 configurations. DDR5 also introduces important architectural changes: each DIMM is split into two independent 32-bit subchannels, which increases access concurrency, and on-die ECC improves reliability as DRAM densities increase.

Alternative memory technologies are also gaining traction in specialized server environments. High Bandwidth Memory (HBM) achieves exceptional bandwidth through 3D die stacking and a very wide interface, delivering about 307 GB/s per stack with HBM2E and over 800 GB/s per stack with HBM3. However, HBM remains largely confined to GPUs and specialized accelerators because of integration complexity and cost.
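HBM gets its bandwidth from a very wide interface rather than a very fast one: each stack exposes a 1024-bit data bus. Per-stack peak bandwidth is the per-pin data rate times the bus width. The pin rates used below (2.4 Gb/s for HBM2E, 6.4 Gb/s for HBM3) are the commonly cited top speeds of those generations; shipping parts vary.

```python
HBM_BUS_WIDTH_BITS = 1024  # data bits per HBM stack


def hbm_stack_bandwidth_gbs(pin_rate_gbps: float, width_bits: int = HBM_BUS_WIDTH_BITS) -> float:
    """Peak bandwidth of one HBM stack in GB/s (Gb/s per pin x pins / 8)."""
    return pin_rate_gbps * width_bits / 8


print(f"HBM2E @ 2.4 Gb/s/pin: {hbm_stack_bandwidth_gbs(2.4):.1f} GB/s")
print(f"HBM3  @ 6.4 Gb/s/pin: {hbm_stack_bandwidth_gbs(6.4):.1f} GB/s")
```

For comparison, one DDR5-4800 DIMM peaks at 38.4 GB/s, so a single HBM3 stack carries roughly the peak bandwidth of 21 such DIMMs.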

Persistent memory technologies such as Intel's Optane DC Persistent Memory took another approach, bridging the gap between DRAM and storage with lower bandwidth (around 7-8 GB/s) but much higher capacity and persistence. Intel announced the wind-down of its Optane business in 2022, so these products now matter mainly for existing deployments. They address a different layer of the memory hierarchy rather than competing on raw bandwidth.

Memory bandwidth limitations manifest in various performance bottlenecks across server workloads. In virtualized environments, memory contention between virtual machines can severely impact application performance. Database systems frequently encounter bandwidth constraints during large table scans and join operations, while AI training workloads may experience extended processing times due to insufficient memory throughput when models exceed GPU memory capacity.
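A quick way to check whether a host is bandwidth-bound is a STREAM-style copy test. The sketch below is a rough, single-threaded Python approximation (the reference tool is the STREAM benchmark, written in C and Fortran): it times large buffer copies and counts both the bytes read and the bytes written. CPython overhead and a single thread mean it reports far less than the platform's aggregate peak, so treat the number as a relative indicator only, for example when comparing two hosts.

```python
import time


def copy_bandwidth_gbs(size_mib: int = 128, repeats: int = 5) -> float:
    """Best observed copy bandwidth in GB/s, counting read + write traffic.

    Use a buffer well above the last-level cache size so copies hit DRAM.
    """
    n = size_mib * 1024 * 1024
    src = bytearray(b"\x01") * n  # filled buffer: every source page is touched
    dst = bytearray(n)
    best = float("inf")
    for _ in range(repeats):  # best-of-N hides first-touch page faults on dst
        start = time.perf_counter()
        dst[:] = src  # same-length slice assignment: one bulk memory copy
        best = min(best, time.perf_counter() - start)
    best = max(best, 1e-12)  # guard against a zero timer reading
    return 2 * n / best / 1e9  # 2x: every byte is read once and written once


if __name__ == "__main__":
    print(f"{copy_bandwidth_gbs():.1f} GB/s")
```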

The industry is actively addressing these limitations through both hardware and software approaches. Memory controller optimizations, advanced prefetching algorithms, and cache hierarchy improvements help mitigate bandwidth constraints, while software-defined memory management enables more efficient resource allocation across demanding workloads.
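Prefetching hides latency by fetching data before it is requested. A stride prefetcher is one of the simplest such schemes: it remembers the last address and stride and, once the same stride appears twice in a row, issues prefetches for the next few addresses. Hardware prefetchers track many streams at once; this single-stream version and its `degree` parameter are illustrative assumptions.

```python
class StridePrefetcher:
    """Single-stream stride prefetcher (illustrative, not a hardware model)."""

    def __init__(self, degree: int = 2):
        self.degree = degree  # how many strides ahead to prefetch
        self.last_addr = None
        self.last_stride = None

    def access(self, addr: int) -> list[int]:
        """Record a demand access; return addresses to prefetch (possibly none)."""
        prefetches = []
        if self.last_addr is not None:
            stride = addr - self.last_addr
            # Confirm the pattern: the same non-zero stride twice in a row
            if stride != 0 and stride == self.last_stride:
                prefetches = [addr + stride * i for i in range(1, self.degree + 1)]
            self.last_stride = stride
        self.last_addr = addr
        return prefetches


pf = StridePrefetcher(degree=2)
for a in (1000, 1064, 1128, 1192):
    print(a, pf.access(a))
```

The first two accesses only train the stride; from the third onward, each access triggers prefetches of the next two cache lines, so a sequential scan finds its data already on the way.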

DDR5 Implementation Strategies for Servers

01 DDR5 memory architecture and bandwidth improvements

DDR5 memory technology offers significant bandwidth improvements over previous generations through architectural enhancements. These include higher data transfer rates, improved channel efficiency, and advanced signaling techniques. The architecture supports higher frequencies and incorporates features like decision feedback equalization to maintain signal integrity at higher speeds, resulting in substantially increased memory bandwidth for high-performance computing applications.

- DDR5 memory bandwidth improvements: DDR5 memory technology offers significant bandwidth improvements over previous generations through architectural enhancements such as higher data rates, improved channel efficiency, and advanced signaling techniques. These improvements enable faster data transfer rates and increased overall system performance, making DDR5 particularly suitable for data-intensive applications requiring high memory throughput.

- SDRAM bandwidth optimization techniques: Various techniques are employed to optimize SDRAM bandwidth, including improved memory controllers, advanced prefetching mechanisms, and efficient memory access scheduling. These optimizations help reduce latency, increase throughput, and maximize the effective bandwidth utilization of SDRAM memory systems, resulting in enhanced overall system performance.

- Memory interface and controller designs: Advanced memory interface and controller designs play a crucial role in maximizing bandwidth utilization in both DDR5 and SDRAM technologies. These designs incorporate features such as optimized signal integrity, efficient command scheduling, and intelligent power management to enhance data transfer rates while maintaining system stability and reliability.

- Multi-channel memory architectures: Multi-channel memory architectures significantly increase bandwidth by enabling parallel data access across multiple memory channels. This approach effectively multiplies the available bandwidth by distributing memory operations across several independent channels, allowing for simultaneous data transfers and reducing bottlenecks in memory-intensive applications.

- Physical design and packaging innovations: Innovations in physical design and packaging of memory modules contribute to bandwidth improvements in both DDR5 and SDRAM technologies. These include advanced PCB layouts, optimized signal routing, improved thermal management, and novel interconnect technologies that reduce signal interference and allow for higher operating frequencies, thereby enhancing overall memory bandwidth.
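The access scheduling and command scheduling mentioned in the list above are often built on FR-FCFS (first-ready, first-come-first-served): among pending requests, serve those that hit the currently open row in their bank first, because they avoid a precharge and activate, and otherwise serve the oldest request. The sketch below models only that ordering decision. The `Request` fields and the one-open-row-per-bank model are simplifying assumptions; real controllers add timing constraints, fairness caps, and read/write batching.

```python
from dataclasses import dataclass


@dataclass
class Request:
    arrival: int  # arrival order (lower = older)
    bank: int
    row: int


def fr_fcfs_order(queue: list[Request], open_rows: dict[int, int]) -> list[Request]:
    """Return the order in which an FR-FCFS scheduler would serve the queue.

    open_rows maps bank -> currently open row; a local copy is updated as
    requests are served.
    """
    pending = list(queue)
    rows = dict(open_rows)
    served = []
    while pending:
        hits = [r for r in pending if rows.get(r.bank) == r.row]
        # First-ready: prefer row-buffer hits; then first-come: oldest wins
        nxt = min(hits or pending, key=lambda r: r.arrival)
        pending.remove(nxt)
        rows[nxt.bank] = nxt.row  # serving a miss opens its row
        served.append(nxt)
    return served


q = [Request(0, bank=0, row=5), Request(1, bank=0, row=7), Request(2, bank=0, row=7)]
print([r.arrival for r in fr_fcfs_order(q, open_rows={0: 7})])
```

With row 7 already open, the two row hits are served before the older request to row 5, so the bank switches rows once instead of twice.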

02 SDRAM bandwidth optimization techniques

Various techniques are employed to optimize SDRAM bandwidth, including improved memory controllers, advanced prefetching mechanisms, and efficient memory access scheduling. These optimizations reduce latency and increase throughput by minimizing idle cycles and maximizing data transfer efficiency. Memory controllers implement sophisticated algorithms to predict access patterns and prioritize critical requests, significantly enhancing overall system performance.

03 Memory interface and bus architecture innovations

Innovations in memory interface and bus architecture play a crucial role in enhancing bandwidth for both DDR5 and SDRAM technologies. These include wider data buses, improved signaling methods, and optimized interconnect designs. Advanced bus architectures support higher data rates while maintaining signal integrity through techniques like differential signaling and improved impedance matching, enabling faster and more reliable data transfers between memory and processors.

04 Memory controller design for bandwidth enhancement

Specialized memory controller designs significantly impact bandwidth capabilities in modern memory systems. These controllers implement sophisticated scheduling algorithms, support for multiple channels, and advanced power management features. By efficiently managing memory requests, reducing access conflicts, and optimizing data flow, these controllers maximize the effective bandwidth available to applications while minimizing latency and power consumption.

05 Memory stacking and 3D integration for bandwidth scaling

Three-dimensional memory stacking and integration technologies enable dramatic bandwidth improvements in both DDR5 and advanced SDRAM implementations. By vertically stacking memory dies and using through-silicon vias (TSVs), these approaches provide shorter interconnects, wider interfaces, and higher bandwidth density. This architecture allows for parallel access to multiple memory banks simultaneously, significantly increasing the aggregate bandwidth available to data-intensive applications.

Key Memory Manufacturers and Server OEMs

Server memory is a mature but still evolving market. The industry is transitioning from established DDR4 to DDR5, with the server memory market projected to exceed $20 billion by 2025. Micron, Samsung, and SK Hynix dominate DRAM production, while processor and server vendors such as Intel, IBM, and HPE drive adoption through platform integration. Memory interface specialists Marvell and Rambus supply critical interface technologies. The ecosystem is technically mature, with DDR5 offering up to 2x the bandwidth of previous generations, though adoption varies across enterprise segments as organizations weigh performance gains against implementation costs.

Intel Corp.

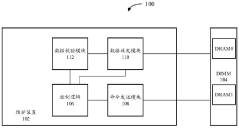

Technical Solution: Intel has developed comprehensive DDR5 memory controller solutions integrated into their latest server processors, specifically optimized for data center environments. Their Xeon Scalable processors with DDR5 support implement advanced memory controllers that leverage the increased bandwidth capabilities of DDR5, supporting up to 4800 MT/s initially with a roadmap to 8000+ MT/s. Intel's architecture incorporates dedicated Memory Acceleration Engines that optimize data movement between memory and compute resources, particularly beneficial for bandwidth-intensive workloads. Their implementation includes dynamic voltage and frequency scaling specifically tuned for server workloads, allowing memory to operate at optimal power-performance points based on workload demands. Intel's memory controllers also feature enhanced RAS (Reliability, Availability, Serviceability) capabilities, including advanced error detection and correction mechanisms that go beyond standard DDR5 on-die ECC to address multi-bit errors common in large-scale server deployments[2][4].

Strengths: Highly optimized memory controllers specifically designed for enterprise workloads, superior memory bandwidth utilization through intelligent prefetching algorithms, and comprehensive RAS features essential for mission-critical applications. Weaknesses: Premium pricing for advanced memory features, higher power consumption at peak performance compared to some competitors, and potential vendor lock-in for optimal performance.

International Business Machines Corp.

Technical Solution: IBM has developed specialized DDR5 memory subsystems for their enterprise server platforms that focus on maximizing bandwidth efficiency rather than raw speed alone. Their approach integrates advanced memory buffer technologies between the memory controller and DDR5 DIMMs, enabling more efficient data handling and reduced latency for enterprise workloads. IBM's implementation includes proprietary caching algorithms that identify and prioritize critical data paths based on application behavior, significantly improving effective bandwidth utilization. Their server architecture incorporates memory compression technologies that effectively increase available bandwidth by reducing data transfer sizes without performance penalties. IBM's memory subsystems also feature advanced predictive failure analysis that monitors signal integrity and timing parameters in real-time, allowing proactive maintenance before performance degradation occurs. For high-performance computing environments, IBM has developed custom DDR5 configurations that optimize for specific workload characteristics, such as AI training and inference, database transactions, or virtualized environments[6][8].

Strengths: Superior memory bandwidth efficiency through intelligent data handling, excellent reliability through predictive maintenance capabilities, and workload-specific optimizations that deliver better real-world performance than raw specifications might suggest. Weaknesses: Higher complexity in system design, potential compatibility limitations with third-party components, and premium pricing reflecting enterprise-focused features.

Critical DDR5 Bandwidth Enhancement Technologies

Maintenance device, method, equipment and storage medium for maintaining DDR5 memory subsystem

Patent (Active): CN112349342B

Innovation

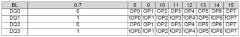

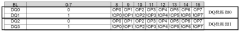

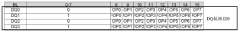

- A data verification module is added to the DDR5 memory subsystem. It uses the Mode Register Read (MRR) command to read DQ data from the DDR5 memory, groups the data, and applies XOR operations to verify that the DQ data is correct. Maintenance of the memory subsystem is then based on verified data, avoiding incorrect operations caused by relying directly on the raw DQ signals.

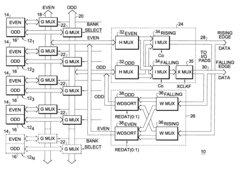

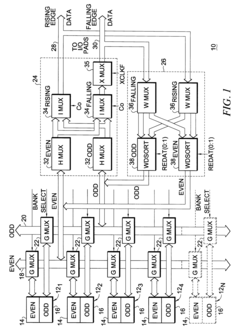

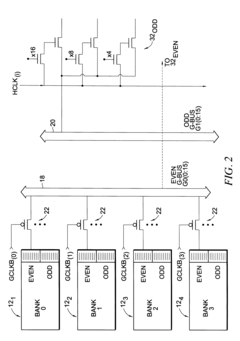

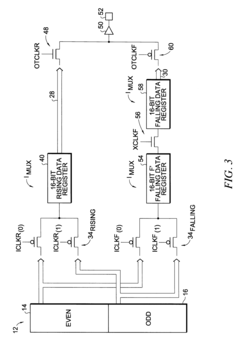

Integrated clocking latency and multiplexer control technique for double data rate (DDR) synchronous dynamic random access memory (SDRAM) device data paths

Patent (Inactive): US6337830B1

Innovation

- An integrated clocking latency and multiplexer control technique that combines multiple I/O configurations and latency modes into a single data path, utilizing a series of clock signals to manage data flow efficiently across even and odd memory banks, allowing data to be read and written on both rising and falling edges of the clock, thereby enhancing data transfer frequency without increasing clock frequency.
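The underlying double-data-rate principle is easy to model behaviorally: words from two internal paths (labeled even and odd here, loosely following the even/odd banks described above) are multiplexed onto one pin, one word per clock edge, so the transfer rate is twice the clock frequency. This is an illustration of DDR signaling in general, not the patented circuit.

```python
def ddr_mux(even: list[int], odd: list[int]) -> list[tuple[int, str, int]]:
    """Interleave two data streams onto one DDR pin.

    Returns (clock_cycle, edge, word) tuples: even words go out on the rising
    edge and odd words on the falling edge of the same cycle.
    """
    out = []
    for cycle, (e, o) in enumerate(zip(even, odd)):
        out.append((cycle, "rise", e))
        out.append((cycle, "fall", o))
    return out


def transfer_rate_mts(clock_mhz: float) -> float:
    """DDR moves two transfers per clock cycle."""
    return 2 * clock_mhz


for cycle, edge, word in ddr_mux([0xA0, 0xA2], [0xA1, 0xA3]):
    print(cycle, edge, hex(word))
print(transfer_rate_mts(1600))  # DDR4-3200 uses a 1600 MHz I/O clock
```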

Power Efficiency Comparison in Data Centers

Comparing the power efficiency of DDR5 and earlier SDRAM generations in data centers reveals significant changes in energy consumption that directly affect operational costs and environmental footprint. DDR5 memory demonstrates approximately 30-40% better power efficiency than previous SDRAM generations at comparable bandwidth. Part of this gain comes from DDR5's lower operating voltage of 1.1V versus DDR4's 1.2V and part from other architectural changes; across large-scale server deployments, the combined reduction in power requirements is substantial.
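A first-order CMOS model shows how much of that gain the voltage change alone can explain: dynamic switching power scales roughly as P ≈ αCV²f, so at equal activity factor, capacitance, and frequency the power ratio is (V_new / V_old)². The sketch below ignores static power, I/O termination, and PMIC conversion losses.

```python
def dynamic_power_ratio(v_new: float, v_old: float) -> float:
    """Relative dynamic power at equal activity, capacitance and frequency."""
    return (v_new / v_old) ** 2


ratio = dynamic_power_ratio(1.1, 1.2)
print(f"{ratio:.3f} -> {100 * (1 - ratio):.1f}% less dynamic power")
# 0.840 -> 16.0% less dynamic power
```

The 1.2V to 1.1V step therefore accounts for roughly a 16% reduction in dynamic power; any larger efficiency gain at equal bandwidth has to come from other architectural factors.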

In practical terms, a DDR5-equipped server can save roughly 15-20 watts under full memory load. Running continuously, that amounts to about 130-175 kWh per server per year, or roughly 130,000-175,000 kWh annually for a mid-sized data center of about 1,000 servers; hyperscale deployments with many thousands of servers scale proportionally, significantly reducing both operational expenses and carbon emissions.
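The annual figure follows from continuous operation (8,760 hours per year). The helper below reproduces that arithmetic; the 1,000-server fleet size is an assumption, chosen because it is what the stated 130,000-175,000 kWh range implies for 15-20 W per server.

```python
HOURS_PER_YEAR = 8760


def annual_savings_kwh(watts_saved_per_server: float, servers: int,
                       hours: float = HOURS_PER_YEAR) -> float:
    """Energy saved per year in kWh, assuming the saving applies around the clock."""
    return watts_saved_per_server * servers * hours / 1000


for w in (15, 20):
    print(w, "W/server ->", round(annual_savings_kwh(w, 1000)), "kWh/year")
```

This counts IT load only; facility overhead (cooling and power conversion, usually expressed as PUE) would make the savings measured at the utility meter larger.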

The power efficiency advantages of DDR5 become particularly pronounced during high-bandwidth operations. Performance-per-watt metrics show that DDR5 delivers approximately 2.3x more data transfer capability per unit of energy consumed compared to DDR4 when operating at peak bandwidth. This efficiency curve becomes even more favorable as data transfer rates increase, making DDR5 especially valuable for data-intensive applications like real-time analytics and AI training workloads.

Temperature management also factors into the overall power efficiency equation. DDR5's improved thermal characteristics result in reduced cooling requirements, with benchmark tests showing 3-5°C lower operating temperatures under equivalent workloads. This thermal advantage compounds the direct power savings by decreasing HVAC energy demands, which typically account for 35-40% of data center energy consumption.

Data center operators must also weigh the total cost of ownership (TCO). While DDR5 modules currently command a 20-30% price premium over equivalent DDR4 capacity, the energy savings typically recover that premium within 18-24 months for continuously operating systems. The calculation becomes more favorable as electricity costs rise and as growing DDR5 manufacturing scale drives component prices down.
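Breakeven depends on only a few inputs, so it is worth recomputing with local prices rather than relying on a generic range. The sketch below computes simple (undiscounted) payback. Every input in the example call is a hypothetical placeholder, and the `pue` multiplier adds cooling and power-delivery overhead on top of the IT load.

```python
def payback_months(premium_usd: float, watts_saved: float,
                   usd_per_kwh: float, pue: float = 1.0) -> float:
    """Months until energy savings repay the up-front premium (no discounting)."""
    kwh_per_month = watts_saved * 730 / 1000  # ~730 hours in an average month
    savings_per_month = kwh_per_month * pue * usd_per_kwh
    if savings_per_month <= 0:
        return float("inf")  # no savings: the premium is never recovered
    return premium_usd / savings_per_month


# Hypothetical inputs: premium per server, watts saved, $/kWh, facility PUE
print(f"{payback_months(200, 20, 0.15, pue=1.5):.0f} months")
```

The result is most sensitive to the premium and the electricity price, so it is worth re-running with actual quotes before using it in a purchasing decision.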

For next-generation data center designs, the power efficiency advantages of DDR5 enable higher compute density without corresponding increases in power distribution infrastructure. This architectural benefit allows operators to maximize computational capacity within existing power envelope constraints, effectively increasing the processing capability per square foot without requiring facility upgrades.

TCO Analysis for DDR5 Server Deployments

When evaluating the Total Cost of Ownership (TCO) for DDR5 server deployments, organizations must consider cost factors beyond the initial hardware investment. DDR5 memory modules currently carry a premium of approximately 30-45% over previous-generation DDR4, a significant upfront expenditure for data center operators planning large-scale deployments.

However, this initial cost differential must be weighed against operating expenditures over the server lifecycle. DDR5 systems show 15-20% better power efficiency than comparable DDR4 systems, which translates into measurable electricity cost savings in high-density environments. For a typical enterprise data center with 1,000 servers, this efficiency gain can reduce annual energy consumption by approximately 350,000-500,000 kWh, yielding substantial operational savings.
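The fleet-level figure can be sanity-checked by converting it back into a continuous per-server saving. The sketch below assumes 1,000 servers running around the clock (8,760 hours per year) and a uniform saving on each one. Under those assumptions, 350,000-500,000 kWh per year corresponds to roughly 40-57 W saved per server. Readers can compare that number against the measured memory power of their own platforms.

```python
# Sketch: convert between per-server power savings and fleet-wide annual energy.
# ASSUMES continuous operation (8,760 h/year) and a uniform saving per server.

HOURS_PER_YEAR = 8760


def annual_kwh_saved(servers: int, watts_saved_per_server: float) -> float:
    """Fleet-wide energy saved per year, in kWh."""
    return servers * watts_saved_per_server * HOURS_PER_YEAR / 1000.0


def implied_watts_per_server(annual_kwh: float, servers: int) -> float:
    """Average continuous saving per server needed to reach `annual_kwh`."""
    return annual_kwh * 1000.0 / (servers * HOURS_PER_YEAR)


if __name__ == "__main__":
    for target_kwh in (350_000, 500_000):
        watts = implied_watts_per_server(target_kwh, servers=1000)
        print(f"{target_kwh:,} kWh/year -> {watts:.1f} W per server")
```

Running it prints 40.0 W and 57.1 W per server for the two ends of the quoted range.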

Performance benefits further impact the TCO equation. DDR5's enhanced bandwidth capabilities enable higher workload consolidation ratios, potentially reducing the total server count required for equivalent computational tasks. Analysis of transaction-heavy workloads indicates that DDR5-equipped servers can handle 20-30% more concurrent operations, effectively decreasing the hardware footprint and associated infrastructure costs.
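The consolidation argument can be made concrete. If each server handles 20-30% more concurrent operations, the number of servers needed for the same load falls by the reciprocal of that gain. The sketch below assumes that work divides evenly across servers and that memory bandwidth, rather than CPU, storage, or network, is the limiting resource. Both are simplifications.

```python
# Sketch: servers needed for a fixed workload after a per-server throughput gain.
# ASSUMES work divides evenly across servers and that memory bandwidth is the
# bottleneck, so throughput scales directly with the quoted gain.
import math


def servers_needed(baseline_servers: int, throughput_gain: float) -> int:
    """Whole servers required when each handles (1 + throughput_gain) times the load."""
    if throughput_gain <= -1.0:
        raise ValueError("throughput_gain must be greater than -1")
    return math.ceil(baseline_servers / (1.0 + throughput_gain))


if __name__ == "__main__":
    for gain in (0.20, 0.30):  # the 20-30% range quoted in the text
        print(f"+{gain:.0%} throughput: {servers_needed(1000, gain)} servers")
```

For a 1,000-server baseline, this gives 834 servers at a 20% gain and 770 at a 30% gain. Rounding up reflects that a partial server still has to be provisioned as a whole one.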

Maintenance considerations also favor DDR5 deployments. The technology's improved reliability features, including on-die ECC and enhanced power management, contribute to reduced system downtime. Industry data suggests DDR5 systems experience approximately 18% fewer memory-related failures, decreasing maintenance interventions and associated labor costs throughout the deployment lifecycle.

Scalability represents another TCO factor. DDR5's higher density modules allow for greater memory capacity within the same physical server footprint, extending useful equipment life and delaying costly hardware refresh cycles. This capacity advantage enables organizations to accommodate growing workload demands without proportional infrastructure expansion.

The TCO calculation must additionally account for transition costs, including potential application optimization and staff training. While these represent short-term investments, they typically constitute less than 5% of the overall five-year TCO and are offset by the performance and efficiency benefits realized throughout the deployment period.

When projected over a standard five-year server lifecycle, comprehensive TCO modeling indicates that despite higher initial acquisition costs, DDR5 deployments typically achieve break-even within 24-30 months, ultimately delivering 12-18% lower total ownership costs compared to equivalent DDR4 infrastructures in bandwidth-intensive server environments.
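Break-even and five-year savings figures come from comparing cumulative cost curves. A minimal, undiscounted version of that comparison is sketched below. The `Platform` fields and all cost inputs are hypothetical. A production TCO model would typically add discounting, price trends, and refresh timing.

```python
# Sketch: undiscounted cumulative-cost comparison between two platforms.
# All cost inputs are HYPOTHETICAL; capex_usd should include one-time
# transition costs (application tuning, staff training) as well as hardware.
from dataclasses import dataclass
from typing import Optional


@dataclass
class Platform:
    capex_usd: float         # up-front cost per server, including transition costs
    monthly_opex_usd: float  # energy, cooling, and maintenance per server per month


def cumulative_cost(p: Platform, months: int) -> float:
    """Total spend after `months` months of operation."""
    return p.capex_usd + p.monthly_opex_usd * months


def break_even_month(new: Platform, old: Platform, horizon_months: int = 60) -> Optional[int]:
    """First month at which the new platform's cumulative cost is no higher
    than the old one's, or None if that never happens within the horizon."""
    for month in range(horizon_months + 1):
        if cumulative_cost(new, month) <= cumulative_cost(old, month):
            return month
    return None


def tco_savings_pct(new: Platform, old: Platform, horizon_months: int = 60) -> float:
    """Percentage by which the new platform's total cost undercuts the old one."""
    old_total = cumulative_cost(old, horizon_months)
    return 100.0 * (old_total - cumulative_cost(new, horizon_months)) / old_total
```

The break-even month is the up-front premium divided by the monthly operating savings. For example, a hypothetical $2,000 premium recovered at $80 per month breaks even at month 25. The five-year percentage then depends on how large the operating savings are relative to total spend.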
