DDR5 vs Hybrid Flash Arrays: Latency Benchmarks

SEP 17, 2025 · 9 MIN READ

DDR5 and HFA Evolution Background and Objectives

Memory technologies have undergone significant evolution over the past decades, with DDR (Double Data Rate) SDRAM serving as the backbone of computer memory systems. The progression from DDR to DDR5 represents a continuous pursuit of higher bandwidth, increased capacity, and improved power efficiency. DDR5, which reached the market in 2021, marks a substantial leap forward: its initial JEDEC specification runs up to 6400 MT/s, double DDR4's top standard speed of 3200 MT/s. It also splits each module into two independent 32-bit subchannels and moves voltage regulation onto the module with a power management IC (PMIC).
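The headline data rates translate into peak bandwidth with simple arithmetic: transfers per second times bytes per transfer. The sketch below assumes a 64-bit data path per module (one 64-bit channel on DDR4, two 32-bit subchannels on DDR5) and ignores ECC bits, refresh, and protocol overhead, so its results are theoretical peaks; the helper names are illustrative. It also shows why DDR5 moved to a 16-beat burst: on a 32-bit subchannel, BL16 still delivers a 64-byte cache line per burst, the same as BL8 on DDR4's 64-bit channel.

```python
def peak_bandwidth_gb_s(data_rate_mt_s: int, data_bits: int = 64) -> float:
    """Peak theoretical bandwidth in GB/s (10^9 bytes/s): transfers/s x bytes per transfer."""
    return data_rate_mt_s * 1_000_000 * (data_bits // 8) / 1e9

def bytes_per_burst(burst_length: int, channel_bits: int) -> int:
    """Bytes delivered by one burst on one (sub)channel."""
    return burst_length * channel_bits // 8

ddr4_bw = peak_bandwidth_gb_s(3200)    # DDR4-3200, one 64-bit channel: 25.6 GB/s
ddr5_bw = peak_bandwidth_gb_s(6400)    # DDR5-6400, 2 x 32-bit subchannels = 64 bits: 51.2 GB/s
ddr4_burst = bytes_per_burst(8, 64)    # BL8 on a 64-bit channel: 64 bytes
ddr5_burst = bytes_per_burst(16, 32)   # BL16 on a 32-bit subchannel: 64 bytes
```

Keeping the burst at 64 bytes lets each DDR5 subchannel serve a whole cache line independently, so one module can handle two unrelated accesses at once.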

Concurrently, storage technologies have evolved from traditional hard disk drives to solid-state drives (SSDs), and now to more sophisticated solutions like Hybrid Flash Arrays (HFAs). These arrays pair flash-based SSDs with higher-capacity hard disk drives and use intelligent caching and tiering algorithms to optimize performance, cost, and endurance. HFAs represent an intermediate solution between all-flash arrays and traditional disk-based storage, offering a balance of performance and cost-effectiveness.
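The caching idea can be made concrete with a small simulation. This is a minimal sketch, assuming a plain least-recently-used (LRU) policy for the SSD tier (real arrays use vendor-specific, often more elaborate algorithms) and hypothetical latencies of 100 μs for an SSD hit and 5 ms for an HDD access; `LRUCache` and `effective_latency_us` are illustrative names, not any product's API. Misses are charged only the HDD latency, and the cost of writing promoted blocks to SSD is ignored.

```python
from collections import OrderedDict

class LRUCache:
    """Fixed-capacity set of block IDs with least-recently-used eviction (stand-in for an SSD tier)."""

    def __init__(self, capacity_blocks: int) -> None:
        if capacity_blocks <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity_blocks
        self.blocks = OrderedDict()

    def access(self, block_id: int) -> bool:
        """Return True on a hit. On a miss, promote the block and evict the LRU entry if full."""
        if block_id in self.blocks:
            self.blocks.move_to_end(block_id)   # mark as most recently used
            return True
        self.blocks[block_id] = None
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)     # drop the least recently used block
        return False

def effective_latency_us(trace, cache_blocks, ssd_hit_us=100.0, hdd_miss_us=5000.0):
    """Replay a block trace; return (hit ratio, mean latency) with hits on SSD and misses on HDD."""
    cache = LRUCache(cache_blocks)
    hits = sum(cache.access(b) for b in trace)
    hit_ratio = hits / len(trace)
    return hit_ratio, hit_ratio * ssd_hit_us + (1 - hit_ratio) * hdd_miss_us

# Hypothetical trace: a hot set of three blocks read three times, then one cold block.
trace = [1, 2, 3] * 3 + [4]
ratio, mean_us = effective_latency_us(trace, cache_blocks=3)
```

For this trace, six of ten accesses hit, giving a mean of 0.6 × 100 + 0.4 × 5000 = 2060 μs. Because HDD misses dominate the mean, small gains in hit ratio pay off heavily.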

The convergence of memory and storage technologies has become increasingly important as data-intensive applications demand lower latency and higher throughput. This convergence is driven by the growing gap between processor speeds and the speed of memory and storage access; the processor-DRAM part of this gap is commonly called the "memory wall." DDR5 and HFAs address this challenge from different angles: DDR5 by providing faster volatile memory, and HFAs by offering persistent storage with reduced access latency.

The primary objective of this technical research is to conduct comprehensive latency benchmarks comparing DDR5 memory systems against Hybrid Flash Arrays. These benchmarks will evaluate real-world performance characteristics under various workloads, including database operations, virtualization environments, artificial intelligence training, and high-performance computing tasks. The analysis will focus particularly on read/write latencies, IOPS (Input/Output Operations Per Second), and throughput metrics.

Additionally, this research aims to identify the optimal use cases for each technology and potential complementary deployment scenarios. As organizations increasingly adopt memory-intensive applications and in-memory computing frameworks, understanding the performance trade-offs between these technologies becomes crucial for system architects and IT decision-makers.

The findings from this research will inform future system designs that may incorporate both technologies in tiered memory-storage hierarchies, potentially leading to novel architectures that leverage the strengths of both DDR5 and HFAs. Furthermore, this analysis will provide insights into the future trajectory of memory-storage convergence and its implications for next-generation computing platforms.

Market Demand Analysis for Low-Latency Storage Solutions

The demand for low-latency storage solutions has grown rapidly across multiple sectors, driven primarily by increasingly complex data processing requirements. Enterprise applications, particularly those involving real-time analytics, artificial intelligence, machine learning, and high-frequency trading, require storage systems that deliver data with microsecond-scale latency, backed by nanosecond-scale memory for the hottest data. This shift represents a fundamental change in market expectations regarding storage performance metrics.

Financial services institutions have emerged as primary drivers of this market segment, with trading platforms requiring ultra-low latency to execute transactions at speeds that provide a competitive advantage. Industry estimates suggest that even sub-millisecond improvements in transaction processing can translate into millions in additional revenue for high-frequency trading operations. Similarly, real-time fraud detection systems need immediate data access to prevent financial losses.

Healthcare organizations represent another significant market segment, particularly with the growth of real-time patient monitoring systems and diagnostic imaging technologies that generate massive data volumes requiring immediate processing. The ability to access and analyze patient data with minimal latency directly impacts treatment outcomes in critical care scenarios.

Cloud service providers have recognized this growing demand, with major players like AWS, Google Cloud, and Microsoft Azure all introducing specialized low-latency storage tiers designed specifically for performance-critical workloads. This trend indicates the market's recognition of latency as a primary differentiator in storage solutions rather than merely capacity or throughput.

Market analysis reveals a clear price premium for solutions that can demonstrably reduce latency. Organizations are increasingly willing to invest in storage technologies that minimize data access times, even at significantly higher cost-per-gigabyte ratios compared to traditional storage. This value proposition has created opportunities for specialized storage vendors focusing exclusively on latency optimization.

The total addressable market for low-latency storage solutions is projected to grow substantially over the next five years, with particularly strong adoption in financial services, healthcare, telecommunications, and advanced manufacturing. Demand is most concentrated in financial centers such as New York, London, and Tokyo, and in emerging technology hubs across the Asia-Pacific region.

Customer requirements increasingly emphasize consistent performance under varying workloads rather than peak performance under ideal conditions, driving interest in hybrid solutions that can maintain low latency across diverse operational scenarios. This represents a significant shift from traditional storage benchmarking approaches that often emphasized maximum throughput rather than consistent low-latency performance.
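Consistency is usually quantified with tail percentiles rather than averages. As a minimal sketch (the helper names are illustrative and the sample data is invented to show the effect), the nearest-rank percentile below reports p50 and p99 alongside the mean, so a small fraction of slow requests becomes visible instead of being averaged away.

```python
import math

def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample such that at least p% of samples are <= it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)   # 1-based rank
    return ordered[rank - 1]

def latency_summary(samples_us):
    """Mean plus tail percentiles; the tail exposes inconsistency the mean hides."""
    return {
        "mean": sum(samples_us) / len(samples_us),
        "p50": percentile(samples_us, 50),
        "p99": percentile(samples_us, 99),
        "p99.9": percentile(samples_us, 99.9),
    }

# Invented example: 98 fast requests and 2 slow ones (values in microseconds).
samples = [100.0] * 98 + [2000.0] * 2
summary = latency_summary(samples)
```

Here the mean is 138 μs and the median is 100 μs, yet p99 is 2000 μs: a system judged only on averages would look far more consistent than it is.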

Current Technical Challenges in Memory-Storage Performance

The memory-storage performance landscape faces significant challenges as data processing demands continue to escalate across enterprise and consumer applications. The fundamental issue remains the persistent gap between CPU processing speeds and memory and storage access times; the processor-memory portion of this gap is commonly referred to as the "memory wall." Despite advances in both DDR5 memory technology and hybrid flash arrays, this disparity continues to bottleneck overall system performance.

Latency challenges represent the most critical technical hurdle in current memory-storage architectures. DDR5 memory offers theoretical access times of roughly 10-15 ns (its CAS latency), but practical end-to-end latencies of 70-100 ns are common once controller queuing, interconnect, and other system overhead are included. Hybrid flash arrays, by contrast, typically respond in 100-500 μs, far above the single-microsecond mark. Comparing 100 μs against 100 ns gives a gap of about 1,000x, and across the full ranges (up to 500 μs against 70 ns) it exceeds 7,000x.
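The figures in this paragraph can be reproduced directly. CAS latency in nanoseconds is the CL cycle count times the clock period, and DDR's clock period is 2000 / (data rate in MT/s) ns because it moves two transfers per clock. The CL values below are example settings chosen for illustration, not a specific product's timings, and `gap_ratio_range` is a hypothetical helper that divides each end of the flash range by the opposite end of the DRAM range.

```python
def cas_latency_ns(cl_cycles: int, data_rate_mt_s: int) -> float:
    """CAS latency in ns: DDR moves two transfers per clock, so tCK = 2000 / (MT/s) ns."""
    return cl_cycles * 2000 / data_rate_mt_s

def gap_ratio_range(dram_ns, flash_us):
    """(smallest, largest) flash-to-DRAM latency ratio for two (low, high) ranges."""
    (d_lo, d_hi), (f_lo, f_hi) = dram_ns, flash_us
    return f_lo * 1000 / d_hi, f_hi * 1000 / d_lo   # convert μs to ns before dividing

fast_bin = cas_latency_ns(32, 6400)    # 10.0 ns
slow_bin = cas_latency_ns(34, 4800)    # ~14.2 ns
lo, hi = gap_ratio_range((70, 100), (100, 500))   # (1000.0, ~7142.9)
```

Note that the ratio uses the 70-100 ns end-to-end figure, not the 10-15 ns CAS figure; using CAS latency alone would overstate the gap by roughly another order of magnitude.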

Thermal management presents another significant challenge, particularly for high-density memory configurations. DDR5 operates at lower voltages (1.1V compared to DDR4's 1.2V) but higher frequencies generate considerable heat in dense server environments. This thermal constraint often forces system architects to make performance compromises, limiting the theoretical advantages of newer memory technologies.

Power consumption efficiency remains problematic across both technologies. While DDR5 improves power efficiency per bit compared to previous generations, its overall consumption increases due to higher operating frequencies. Hybrid flash arrays face similar challenges, as their controller architectures require sophisticated power management to balance performance and energy usage, particularly in data center environments where power density constraints are increasingly stringent.
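The per-bit-versus-total distinction can be sanity-checked with the first-order CMOS dynamic power relation P ≈ C·V²·f, where energy per transfer scales with C·V². The sketch below is only that first-order model: it assumes equal switched capacitance across generations, uses data rate as a stand-in for switching frequency, and ignores I/O termination, refresh, static, and PMIC conversion power, so it indicates direction rather than measured consumption. The function names are illustrative.

```python
def energy_per_transfer_ratio(v_new: float, v_old: float) -> float:
    """Relative switching energy per transfer, E ~ C * V^2 (C assumed equal)."""
    return (v_new / v_old) ** 2

def dynamic_power_ratio(v_new: float, v_old: float, rate_new: float, rate_old: float) -> float:
    """Relative dynamic power, P ~ C * V^2 * f, with data rate standing in for f."""
    return energy_per_transfer_ratio(v_new, v_old) * (rate_new / rate_old)

# DDR5 (1.1 V, 6400 MT/s) relative to DDR4 (1.2 V, 3200 MT/s)
per_transfer = energy_per_transfer_ratio(1.1, 1.2)      # ~0.84: about 16% less energy per transfer
total = dynamic_power_ratio(1.1, 1.2, 6400, 3200)       # ~1.68: more total power at double the rate
```

The lower voltage cuts energy per bit, but doubling the transfer rate more than offsets it in absolute power under this simple model.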

Interface standardization and compatibility issues create integration challenges when attempting to optimize memory-storage hierarchies. The transition between DDR5's parallel interface and the predominantly serial interfaces of storage systems introduces protocol translation overhead that compounds latency issues. Current controller technologies struggle to efficiently bridge these architectural differences.

Workload-specific optimization presents perhaps the most complex challenge. Different application patterns (random vs. sequential access, read- vs. write-intensive) perform very differently across memory-storage configurations. Some benchmarks show hybrid flash arrays approaching, and occasionally exceeding, DDR5-based configurations in specific large sequential workloads, where throughput matters more than per-access latency, while falling far behind on random access patterns. This divergence makes system design and optimization significantly more complex.
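Why access pattern matters so much can be seen from Little's law, which links concurrency, latency, and throughput: achieved IOPS ≈ outstanding I/Os ÷ mean latency, and throughput = IOPS × block size. The device figures in this sketch are hypothetical, chosen only to contrast a small random read at queue depth 1 with large sequential reads at a deeper queue on the same device.

```python
def iops(outstanding_ios: float, mean_latency_s: float) -> float:
    """Little's law (L = lambda * W) solved for throughput: lambda = L / W."""
    if mean_latency_s <= 0:
        raise ValueError("latency must be positive")
    return outstanding_ios / mean_latency_s

def throughput_mb_s(ops_per_s: float, block_bytes: int) -> float:
    """Bytes moved per second, in MB/s (10^6 bytes)."""
    return ops_per_s * block_bytes / 1_000_000

# Hypothetical device: 200 μs per random 4 KiB read, 5 ms per 1 MiB sequential read.
random_4k = throughput_mb_s(iops(1, 200e-6), 4096)        # ~5,000 IOPS -> ~20.5 MB/s
seq_1m = throughput_mb_s(iops(8, 5e-3), 1_048_576)        # 1,600 IOPS -> ~1,678 MB/s
```

The same hypothetical device moves roughly 80 times more data when transfers are large and overlapped, which is why latency-bound random workloads expose the gap to DRAM far more than streaming ones.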

Cost-performance balancing remains a persistent challenge, with DDR5 solutions typically costing 15-20x more per gigabyte than hybrid flash storage. This economic reality forces compromises in memory-storage hierarchies that often undermine theoretical performance advantages, particularly in large-scale deployments where capacity requirements are substantial.

Current Latency Optimization Approaches and Architectures

01 DDR5 memory architecture for reduced latency

DDR5 memory architecture incorporates several features aimed at reducing effective latency in data access operations. These include a reworked command and address structure, a 16n prefetch with burst length 16 (BL16, up from BL8 on DDR4), two independent 32-bit subchannels per module, and more banks (32 banks in 8 bank groups on typical x4/x8 devices, versus 16 banks in 4 groups on DDR4). These changes mainly raise bandwidth and concurrency: first-word latency stays roughly on par with DDR4, but more requests can be in flight at once, which cuts queuing delay under load. That is crucial for high-performance computing systems and other applications that require rapid memory access.
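Extra banks and independent subchannels help only if the memory controller exploits them, for example by reordering requests around open rows. The toy model below contrasts strict first-come-first-served (FCFS) scheduling with FR-FCFS (first-ready, FCFS), a row-hit-first policy widely studied in memory-scheduling research, for a single bank under an open-page policy. The cycle costs and the `service_cycles` helper are hypothetical illustrations, not DDR5 datasheet timings or any vendor's controller logic; real controllers also handle refresh, multiple banks, writes, and starvation limits.

```python
# Hypothetical per-command costs in controller clock cycles (not datasheet values).
T_CL, T_RCD, T_RP = 40, 40, 40

def access_cost(open_row, row):
    """Cycles to serve a read to `row`, given the bank's currently open row (None = idle)."""
    if row == open_row:
        return T_CL                    # row-buffer hit: column read only
    if open_row is None:
        return T_RCD + T_CL            # idle bank: activate, then read
    return T_RP + T_RCD + T_CL         # row conflict: precharge, activate, read

def service_cycles(rows, policy="fcfs"):
    """Total cycles for one bank to drain a queue of reads (all queued at t = 0).

    fcfs:    serve strictly in arrival order.
    fr-fcfs: serve the oldest request that hits the open row; if none, the oldest request.
    """
    if policy not in ("fcfs", "fr-fcfs"):
        raise ValueError("unknown policy")
    pending = list(rows)
    open_row, cycles = None, 0
    while pending:
        idx = 0
        if policy == "fr-fcfs":
            idx = next((i for i, r in enumerate(pending) if r == open_row), 0)
        row = pending.pop(idx)
        cycles += access_cost(open_row, row)
        open_row = row                 # open-page policy: leave the row open
    return cycles

queue = [1, 2, 1, 2, 1, 2]             # interleaved reads to two rows of one bank
fcfs = service_cycles(queue, "fcfs")         # 680 cycles
frfcfs = service_cycles(queue, "fr-fcfs")    # 360 cycles
```

On this interleaved queue, FR-FCFS turns four row conflicts into row hits. The trade-off is that requests to the other row are bypassed and wait longer, which is why practical variants cap how long a request may be overtaken.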

- Hybrid Flash Array latency optimization techniques: Hybrid Flash Arrays combine different storage technologies to balance performance and cost. Latency optimization techniques in these systems include intelligent data placement algorithms, tiered storage management, and advanced caching mechanisms. By strategically distributing data across high-speed flash memory and higher-capacity storage media, these systems can significantly reduce access times for frequently used data while maintaining cost efficiency for less critical information.

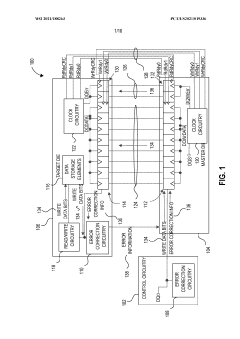

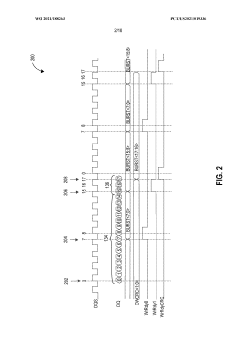

- Memory controller designs for latency reduction: Specialized memory controller designs play a crucial role in reducing latency in both DDR5 and hybrid storage systems. These controllers implement sophisticated scheduling algorithms, command queuing mechanisms, and parallel processing capabilities to minimize wait times and optimize data throughput. Advanced features such as predictive fetching, request reordering, and dynamic timing adjustments help to further reduce effective latency in memory access operations.

- Cache management strategies for hybrid memory systems: Effective cache management strategies are essential for minimizing latency in hybrid memory systems that incorporate both DDR5 and flash storage. These strategies include multi-level caching architectures, content-aware caching policies, and dynamic cache allocation based on workload characteristics. By maintaining frequently accessed data in faster memory tiers and intelligently managing data movement between tiers, these systems can significantly reduce average access latency while optimizing overall system performance.

- Error handling and reliability features affecting latency: Error handling and reliability mechanisms in DDR5 memory and Hybrid Flash Arrays can impact overall system latency. Advanced error correction codes, data integrity verification, and fault tolerance features help ensure data reliability but may introduce additional processing overhead. Optimized implementations of these features, including on-the-fly error detection and correction, parallel verification processes, and selective reliability modes, help maintain system integrity while minimizing the latency impact of these essential functions.

02 Hybrid Flash Array latency optimization techniques

Hybrid Flash Arrays combine different storage technologies, typically flash SSDs and higher-capacity hard disk drives, to balance performance and cost. Latency optimization techniques in these systems include intelligent data placement algorithms, tiered storage management, caching mechanisms that prioritize frequently accessed data, and parallel processing of I/O requests. Together they minimize access times by keeping critical data on the fastest storage tier while moving less frequently accessed data to slower, more cost-effective storage.

03 Memory controller designs for latency reduction

Advanced memory controller designs play a crucial role in reducing latency in both DDR5 and hybrid storage systems. These controllers implement scheduling algorithms, command queuing, request reordering, and command prediction to minimize wait times, and some adjust timing parameters dynamically. They also feature improved buffer management and optimized data paths that shorten the time needed to move data between memory and processing units.

04 Cache management strategies for hybrid memory systems

Effective cache management strategies are essential for minimizing latency in hybrid memory systems that combine DDR5 and flash storage. These strategies include multi-level caching architectures, content-aware caching policies, intelligent prefetching algorithms, and dynamic cache allocation based on workload characteristics. By keeping frequently accessed data in faster memory tiers and moving data efficiently between tiers, these systems can significantly reduce average access latency while optimizing overall system performance.

05 Error handling and reliability features affecting latency

Error handling and reliability features in memory and storage systems can add latency. Error correction codes, memory refresh operations, and data integrity verification all consume time, so implementations aim to keep them off the critical path while maintaining reliability. Common techniques include on-the-fly error detection and correction, optimized refresh scheduling, parallel verification processes that run concurrently with data access, and, in some designs, selective reliability modes.
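On-the-fly correction is practical because decoding a single-error-correcting code takes only a handful of XORs. The sketch below uses the textbook Hamming(7,4) code purely as an illustration: DDR5's on-die ECC is also single-error-correcting but protects much wider words, and flash controllers typically rely on stronger codes such as BCH or LDPC. The function names are hypothetical.

```python
def hamming74_encode(d):
    """Encode 4 data bits as a 7-bit Hamming codeword; list index i holds bit position i + 1.
    Parity bits sit at positions 1, 2 and 4; data bits at positions 3, 5, 6, 7."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4   # parity over positions 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # parity over positions 3, 6, 7
    p4 = d2 ^ d3 ^ d4   # parity over positions 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]

def hamming74_decode(codeword):
    """Return (data bits, syndrome). A nonzero syndrome is the 1-based position of a single
    flipped bit, which is corrected before the data bits are extracted."""
    c = list(codeword)
    syndrome = 0
    for pos, bit in enumerate(c, start=1):
        if bit:
            syndrome ^= pos   # XOR of the positions holding a 1 is zero for a valid codeword
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]], syndrome

word = hamming74_encode([1, 0, 1, 1])
corrupted = list(word)
corrupted[4] ^= 1                              # flip bit position 5
data, syndrome = hamming74_decode(corrupted)   # data == [1, 0, 1, 1], syndrome == 5
```

Because the syndrome directly names the faulty bit, correction needs no retry or extra read, which is what allows it to run inline with data access.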

Key Industry Players in Memory and Storage Technologies

The DDR5 versus Hybrid Flash Arrays latency benchmark landscape reflects an industry in transition, with memory technologies evolving to meet growing data processing demands. The market is expanding rapidly as enterprises seek solutions that balance performance, capacity, and cost efficiency. DDR5 offers superior raw performance, while hybrid flash arrays provide compelling cost-per-capacity advantages. Samsung Electronics, SK hynix, and Micron Technology dominate DRAM production, while companies such as IBM, NetApp, and Western Digital are advancing storage and hybrid storage solutions. Emerging competitors including Avalanche Technology and ChangXin Memory Technologies are introducing new approaches to bridge the performance gap, using proprietary technologies to optimize latency while keeping pricing competitive.

Micron Technology, Inc.

Technical Solution: Micron has developed advanced DDR5 memory modules that deliver up to 1.85x performance improvement over DDR4. Their DDR5 technology achieves data transfer rates starting at 4800 MT/s and scaling to 8400 MT/s, with significantly improved channel efficiency through two independent 32-bit subchannels per module. For hybrid storage, Micron co-developed 3D XPoint technology with Intel, which offered latency between that of DRAM and NAND flash together with flash-like persistence; Micron announced in 2021 that it was ending 3D XPoint development. Their benchmarks show 3D XPoint read latencies of approximately 10μs, compared with about 100μs for NAND flash. Micron's hybrid memory solutions combine DDR5 with persistent memory technologies to create tiered memory architectures that optimize for both performance and cost. Their testing shows that in database workloads, these hybrid configurations can reduce average latency by up to 83% compared to NAND-only solutions while delivering 60-70% of the performance of pure DRAM configurations.

Strengths: Micron's expertise in both DRAM and NAND technologies allows them to optimize hybrid solutions effectively, and 3D XPoint offered a true middle ground between DRAM and NAND on the latency spectrum. Weaknesses: Their hybrid solutions require specialized software optimization to realize their full performance benefits, and 3D XPoint carried a significant cost premium over standard NAND flash.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed comprehensive DDR5 memory solutions that deliver significant performance improvements over DDR4, with data rates up to 7200 MT/s compared to DDR4's maximum of 3200 MT/s. Their DDR5 modules feature on-die ECC (Error Correction Code), improved power management with an on-module power management IC (PMIC), and higher capacity per DIMM (up to 512GB). For hybrid flash arrays, Samsung has pioneered the integration of its Z-SSD technology, which narrows the latency gap between DRAM and NAND flash. Their benchmarks show Z-SSD random-read latency as low as 16μs, compared with 100-120μs for typical NAND flash. Samsung's CXL (Compute Express Link) memory solutions further address latency challenges by enabling memory pooling and expansion, with access latencies far closer to local DRAM than to flash storage.

Strengths: Samsung's vertical integration allows them to optimize both DRAM and flash components, resulting in better performance consistency and reliability. Their early adoption of DDR5 manufacturing has given them scale advantages. Weaknesses: Their DDR5 solutions still face higher latency compared to DDR4 (approximately 40-45ns vs 35-40ns for DDR4), and the hybrid solutions require specialized controllers that increase system complexity.

Critical Benchmarking Methodologies and Performance Metrics

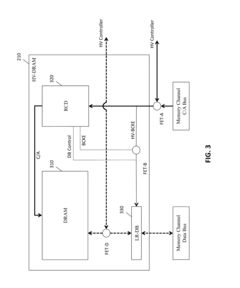

Separate inter-die connectors for data and error correction information and related systems, methods, and apparatuses

Patent WO2021188263A1

Innovation

- Separate inter-die connectors are introduced for data and error correction information, allowing for simultaneous transfer of data bits and CRC bits over distinct pathways, optimizing data transfer efficiency and reducing bottlenecks in DDR5 systems.

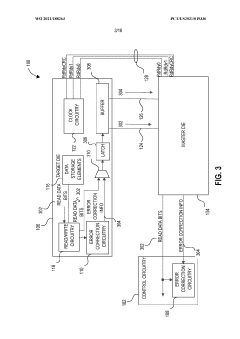

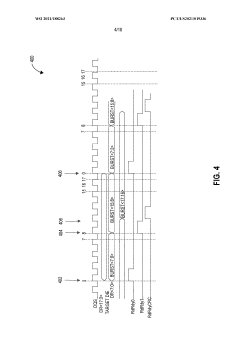

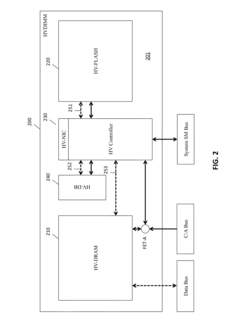

Hybrid memory module and system and method of operating the same

Patent US20150169238A1 (Active)

Innovation

- A hybrid memory module (HVDIMM) is introduced, combining volatile DRAM and non-volatile NAND Flash with a module controller that enables dynamic random access by creating a memory window in the Flash for direct data transfer between DRAM and Flash, reducing the need for block-level operations and extending Flash lifespan through wear leveling and error correction.

Total Cost of Ownership Analysis

When evaluating the economic implications of DDR5 memory versus Hybrid Flash Arrays (HFAs), Total Cost of Ownership (TCO) analysis reveals significant differences that extend far beyond initial acquisition costs. The capital expenditure for DDR5 implementations typically demands a premium of 30-45% over previous generation memory solutions, with enterprise-grade DDR5 modules commanding prices between $250-600 per 32GB DIMM depending on speed ratings and reliability features.

In contrast, Hybrid Flash Arrays have a different cost structure: a larger upfront investment but potentially lower long-term cost per unit of capacity. Initial deployment of enterprise HFA solutions ranges from $50,000 to $250,000 depending on capacity and performance specifications, a substantially larger capital outlay than a memory upgrade alone.

Power consumption metrics demonstrate notable differences, with DDR5 systems consuming approximately 15-20% less power than DDR4 counterparts at equivalent capacities. A typical enterprise server equipped with 1TB of DDR5 memory might consume 120-150 watts for memory subsystems alone, translating to approximately $210-260 in annual electricity costs per server at average commercial rates. HFA solutions typically require 400-800 watts depending on configuration, resulting in $700-1,400 in annual power expenses.
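The power-cost figures above can be reproduced with simple arithmetic. The sketch assumes a constant load running all year and an electricity rate of $0.20/kWh; the article does not state its rate, but that value reproduces its ranges closely. Cooling overhead is excluded, and the function name is illustrative.

```python
HOURS_PER_YEAR = 24 * 365   # 8,760 h; leap years ignored

def annual_energy_cost_usd(watts: float, usd_per_kwh: float = 0.20) -> float:
    """Cost of a constant electrical load for one year (no cooling/PUE overhead)."""
    kwh = watts * HOURS_PER_YEAR / 1000
    return kwh * usd_per_kwh

costs = {w: round(annual_energy_cost_usd(w)) for w in (120, 150, 400, 800)}
# {120: 210, 150: 263, 400: 701, 800: 1402}
```

At other rates the costs scale linearly, so halving the rate halves every figure.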

Cooling requirements follow similar patterns, with DDR5 systems generating less heat than previous generations but still requiring substantial cooling infrastructure. HFA cooling demands are significantly higher, potentially increasing data center cooling costs by 15-25% compared to memory-only solutions.

Maintenance costs reveal another dimension of TCO differentiation. DDR5 memory typically requires minimal maintenance beyond initial installation, with replacement cycles averaging 4-5 years in enterprise environments. HFA solutions demand regular firmware updates, component replacements, and technical support contracts that typically cost 12-18% of the initial purchase price annually.

Space utilization efficiency favors HFA solutions when considering storage density, offering 5-10x greater capacity per rack unit compared to server-based memory solutions. However, this advantage diminishes when factoring in the latency requirements of performance-critical applications where DDR5 maintains superiority.

The five-year TCO analysis indicates that for latency-sensitive workloads requiring sub-microsecond response times, DDR5 implementations typically offer 30-40% lower total cost despite their higher cost per gigabyte. Conversely, for capacity-oriented applications with moderate latency requirements, HFA solutions may provide a 20-25% TCO advantage over extended deployment periods.
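The shape of such a comparison can be sketched as an undiscounted cost model. The function below is a hypothetical simplification: it adds capital cost to five years of power, a cooling overhead expressed as a fraction of power cost (an assumed 20% here), and a maintenance contract expressed as a fraction of purchase price. The article does not publish the inputs behind its 30-40% and 20-25% results, so this shows only how the quoted ranges combine, not those conclusions.

```python
def tco_usd(capex: float, annual_power_usd: float, annual_maint_rate: float = 0.0,
            cooling_overhead: float = 0.0, years: int = 5) -> float:
    """Undiscounted TCO = capex + years * (power * (1 + cooling_overhead) + maint_rate * capex)."""
    annual_opex = annual_power_usd * (1 + cooling_overhead) + annual_maint_rate * capex
    return capex + years * annual_opex

# Hypothetical entry-level HFA using figures quoted in this section:
# $50,000 capex, 12% annual support, $700/yr power, assumed 20% cooling overhead on power.
hfa = tco_usd(50_000, 700, annual_maint_rate=0.12, cooling_overhead=0.20)   # ~84,200
```

In this example maintenance alone adds $30,000 over five years, more than half the purchase price, so the support-contract rate is the input the result is most sensitive to. A fuller model would also discount future cash flows.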

Enterprise Adoption Strategies and Use Cases

Enterprise adoption of DDR5 versus Hybrid Flash Arrays (HFAs) requires strategic planning based on specific organizational needs and workload characteristics. Organizations with latency-sensitive applications such as high-frequency trading, real-time analytics, and AI inference are increasingly adopting DDR5 memory solutions despite higher initial costs, because sub-100-nanosecond access times provide a critical competitive edge in these time-sensitive domains.

Conversely, enterprises with mixed workloads are implementing tiered approaches, deploying DDR5 for critical latency-sensitive applications while utilizing HFAs for less time-sensitive operations. This balanced strategy optimizes both performance and cost-effectiveness across diverse enterprise environments. Financial services firms, for example, commonly deploy DDR5 for trading platforms while leveraging HFAs for historical data analysis and compliance reporting.

Cloud service providers have developed sophisticated orchestration systems that dynamically allocate workloads between DDR5 and HFA resources based on real-time performance requirements and cost considerations. These intelligent systems continuously monitor application performance metrics and adjust resource allocation accordingly, maximizing infrastructure efficiency.
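The internals of these orchestration systems are provider-specific and not described here. As a hypothetical illustration of the core placement decision only, the sketch below gives DDR5 capacity to the workloads with the tightest latency targets first and sends the rest to the HFA tier; the `Workload` type, the 1 µs threshold (echoing the sub-microsecond boundary in the TCO discussion), and the workload names are all assumptions.

```python
from dataclasses import dataclass

@dataclass
class Workload:
    name: str
    latency_slo_us: float   # required access latency, microseconds
    working_set_gb: float   # footprint that must fit in the chosen tier

def place(workloads, ddr5_capacity_gb, threshold_us=1.0):
    """Greedy tier placement (hypothetical policy).

    Workloads needing sub-threshold latency are considered tightest-first
    and placed in DDR5 while capacity remains; one that does not fit is
    flagged UNPLACED rather than silently demoted. All others go to HFA.
    """
    placement = {}
    remaining = ddr5_capacity_gb
    for w in sorted(workloads, key=lambda x: x.latency_slo_us):
        if w.latency_slo_us < threshold_us:
            if w.working_set_gb <= remaining:
                placement[w.name] = "DDR5"
                remaining -= w.working_set_gb
            else:
                placement[w.name] = "UNPLACED"  # needs DDR5 but none is left
        else:
            placement[w.name] = "HFA"
    return placement

jobs = [
    Workload("compliance-archive", latency_slo_us=500, working_set_gb=2000),
    Workload("trading-engine", latency_slo_us=0.5, working_set_gb=64),
    Workload("realtime-analytics", latency_slo_us=0.8, working_set_gb=256),
]
print(place(jobs, ddr5_capacity_gb=256))
```

With 256 GB of DDR5, the trading engine lands in DDR5, the analytics job is flagged because only 192 GB remain, and the archive goes to HFA. A dynamic orchestrator would re-run a decision like this as measured latencies and free capacity change.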

The healthcare sector presents another compelling use case, with patient monitoring systems and emergency response applications utilizing DDR5 for real-time data processing, while patient records and imaging archives are stored on HFAs. This approach ensures critical care functions receive optimal performance while maintaining cost control for longer-term storage needs.

Implementation strategies typically follow a phased approach, beginning with performance-critical applications and gradually expanding based on measured outcomes. Organizations are developing comprehensive TCO models that account for not only hardware costs but also energy consumption, cooling requirements, rack space utilization, and operational overhead. These models increasingly demonstrate that the higher acquisition costs of DDR5 can be justified through operational savings and business value generation in appropriate use cases.

Enterprises are also establishing clear performance benchmarking protocols to validate technology decisions, measuring not just raw latency but application-specific performance improvements and business outcomes. This evidence-based approach helps organizations quantify the return on investment for memory infrastructure upgrades and optimize deployment strategies across their technology portfolio.
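Raw latency is commonly reported as percentiles rather than a single average, because tail latency (p99 and above) often determines how latency-sensitive applications behave. A minimal sketch using the nearest-rank percentile definition; the helper names and sample values are hypothetical:

```python
import math

def percentile(samples, p):
    """Nearest-rank percentile of `samples` for 0 < p <= 100."""
    if not samples:
        raise ValueError("no latency samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)   # 1-based nearest rank
    rank = min(max(rank, 1), len(ordered))     # guard against float rounding
    return ordered[rank - 1]

def summarize(samples_ns):
    """Report median and tail latency for one benchmark run."""
    return {f"p{p:g}": percentile(samples_ns, p) for p in (50, 99, 99.9)}

# Hypothetical per-access latencies in nanoseconds from a single run.
run = [92, 95, 88, 101, 97, 90, 250, 93, 96, 94]
print(summarize(run))
```

Here the single 250 ns outlier leaves the median at 94 ns but sets both tail percentiles. Real benchmark runs need far more than ten samples for a p99.9 figure to be meaningful.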
