DDR5 Feasibility in Augmented Intelligence Developments

SEP 17, 2025 · 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

DDR5 Evolution and AI Integration Goals

The evolution of DDR (Double Data Rate) memory technology has been a cornerstone of computing advancement, with DDR5 representing the latest significant leap forward. Since the introduction of the first DDR standard in 2000, each generation has brought substantial improvements in bandwidth, capacity, and power efficiency. DDR5, whose JEDEC standard was published in 2020, launched with transfer rates starting at 4800 MT/s and a roadmap toward 8400 MT/s, more than double DDR4's top standard rate of 3200 MT/s. This trajectory aligns with the rapid growth in data processing demands driven by augmented intelligence applications.
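Transfer rates translate into peak bandwidth once multiplied by the bus width. The minimal sketch below assumes a standard 64-bit (8-byte) data path per DIMM and ignores ECC bits and protocol overhead, so its results are theoretical peaks, not measured throughput.

```python
def peak_bandwidth_gbs(transfer_rate_mts: int, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s (10^9 bytes per second).

    transfer_rate_mts: millions of transfers per second (MT/s).
    bus_width_bits: data bits moved per transfer; 64 for one DIMM, ECC bits excluded.
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer / 1e9


if __name__ == "__main__":
    for name, rate in [("DDR4-3200", 3200), ("DDR5-4800", 4800), ("DDR5-8400", 8400)]:
        print(f"{name}: {peak_bandwidth_gbs(rate):.1f} GB/s per DIMM")
```

Under these assumptions, DDR4-3200 peaks at 25.6 GB/s per DIMM and DDR5-8400 at 67.2 GB/s, roughly 2.6 times as much. Sustained bandwidth is lower once refresh, bus turnarounds, and row misses are taken into account.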

The integration of DDR5 with augmented intelligence systems represents a strategic convergence of two rapidly evolving technologies. Augmented intelligence (AI systems designed to enhance rather than replace human capabilities) places heavy demands on memory bandwidth and capacity, because complex models and large datasets must be processed in real time. The technical goals for this integration center on the memory wall, the widening gap between processor throughput and the rate at which memory can supply data, which has historically bottlenecked AI performance, particularly in edge computing scenarios where power budgets are tight.

Current augmented intelligence developments face several memory-related limitations, including constrained bandwidth, high latency, and power consumption. DDR5 aims to address these challenges through architectural changes such as decision feedback equalization (DFE) for cleaner signaling at high data rates, same-bank refresh, which refreshes one bank per bank group while the other banks remain available for accesses, and a dual-channel DIMM architecture in which each module is split into two independent 32-bit subchannels. Together, these features enable more efficient data handling for neural network operations. This matters especially for transformer models, whose large weight matrices must be streamed from memory repeatedly.
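The "dual-channel architecture" refers to each DDR5 DIMM being split into two independent 32-bit subchannels. Because DDR5 also doubles the burst length to 16, each subchannel still returns a full 64-byte line per access, the same granularity a DDR4 64-bit channel achieves with a burst of 8. The sketch below checks that arithmetic. The 64-byte cache line is an assumption (typical of current server CPUs), and the `ChannelConfig` type is purely illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChannelConfig:
    name: str
    data_width_bits: int    # data bits per (sub)channel, ECC bits excluded
    burst_length: int       # transfers per read/write burst
    channels_per_dimm: int  # independent (sub)channels on one DIMM

    def bytes_per_burst(self) -> int:
        """Bytes delivered by one burst on one (sub)channel."""
        return self.data_width_bits * self.burst_length // 8


DDR4 = ChannelConfig("DDR4", data_width_bits=64, burst_length=8, channels_per_dimm=1)
DDR5 = ChannelConfig("DDR5", data_width_bits=32, burst_length=16, channels_per_dimm=2)

CACHE_LINE_BYTES = 64  # assumption: typical server CPU cache-line size

if __name__ == "__main__":
    for cfg in (DDR4, DDR5):
        print(f"{cfg.name}: {cfg.bytes_per_burst()} B per burst, "
              f"{cfg.channels_per_dimm} independent access stream(s) per DIMM")
```

Both configurations move one 64-byte line per burst. The difference is that a DDR5 DIMM offers two independent streams, which lets the controller keep more requests in flight for scattered access patterns.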

The roadmap for DDR5 implementation in augmented intelligence systems envisions a phased approach, beginning with high-performance computing centers and gradually extending to edge devices as power efficiency improves. Key technical objectives include lowering effective memory latency for critical AI operations, supporting model sizes exceeding 100 billion parameters on distributed systems, and reducing memory-related power consumption by at least 30% compared with DDR4-based solutions.
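A rough capacity estimate gives a sense of scale for the 100-billion-parameter target. This sketch counts model weights only. It assumes a hypothetical 64 GB DIMM and ignores activations, optimizer state, and KV caches, which in training typically multiply the footprint several times over.

```python
import math


def weights_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed for model weights alone, in GB (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


def dimms_needed(total_gb: float, dimm_gb: int) -> int:
    """Minimum number of DIMMs of size dimm_gb to hold total_gb, with no headroom."""
    return math.ceil(total_gb / dimm_gb)


if __name__ == "__main__":
    params = 100e9
    for label, nbytes in [("FP32", 4), ("FP16/BF16", 2), ("INT8", 1)]:
        gb = weights_gb(params, nbytes)
        print(f"{label}: {gb:.0f} GB of weights -> {dimms_needed(gb, 64)} x 64 GB DIMMs")
```

Even at 16-bit precision, the weights alone (200 GB) exceed the capacity of a few DIMMs. This is one reason the target is framed around distributed systems.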

Industry estimates suggest that DDR5's higher channel utilization efficiency will benefit AI training workloads, potentially reducing training time for large language models by 15-20%. For inference, particularly in augmented reality applications where real-time processing is essential, DDR5-generation bandwidth is expected to enable more sophisticated on-device AI capabilities within the power budgets of mobile and wearable form factors, where the low-power LPDDR5 variant is the usual choice.

Market Demand Analysis for High-Performance Memory in AI

The global AI market is experiencing unprecedented growth, with memory requirements serving as a critical bottleneck in system performance. Current market analysis indicates that high-performance memory demand for AI applications is projected to grow at a CAGR of 29% through 2027, significantly outpacing the broader semiconductor market. This acceleration is primarily driven by the exponential increase in model sizes and computational requirements for training and inference workloads.

Enterprise AI deployments are particularly demanding, with 78% of organizations reporting memory constraints as a primary limitation in their AI infrastructure. The transition from DDR4 to DDR5 offers a potential remedy, with DDR5 providing bandwidth improvements of up to 85% over DDR4.

Cloud service providers have emerged as the largest consumers of high-performance memory, accounting for approximately 42% of the market. These providers need memory that supports massively parallel processing while remaining energy efficient. Energy efficiency is particularly critical: data centers currently consume about 1-2% of global electricity, and some projections put this as high as 8% by 2030 without significant efficiency improvements, of which memory efficiency is one component.

Market segmentation reveals distinct requirements across different AI application domains. Natural language processing applications prioritize memory bandwidth, with requirements growing by 40% annually. Computer vision applications emphasize both capacity and bandwidth, while recommendation systems focus primarily on capacity scaling. DDR5's improved channel architecture addresses these diverse needs more effectively than previous memory technologies.

Regional analysis shows North America leading high-performance memory consumption at 38% market share, followed by Asia-Pacific at 36% and Europe at 21%. However, the Asia-Pacific region is experiencing the fastest growth rate at 32% annually, driven by aggressive AI adoption in China, South Korea, and Japan.

Price sensitivity varies significantly across market segments. Enterprise customers are willing to pay a premium for memory that demonstrably improves AI workload performance, with surveys indicating acceptance of 15-20% price premiums for solutions that cut training time by at least 30%. This willingness to pay creates room for DDR5 adoption despite its higher initial cost compared with DDR4.

DDR5 Technical Challenges in AI Applications

The integration of DDR5 memory in AI applications presents significant technical challenges that must be addressed for successful implementation. The primary challenge is thermal management. DDR5 runs at a lower supply voltage than DDR4 (1.1 V versus 1.2 V), but its higher data rates and the power management circuitry now mounted on each module concentrate more heat on the DIMM during sustained AI workloads. The problem is worse in densely packed AI servers, where cooling must be redesigned to handle the added thermal load.

Power delivery is another critical challenge, as DDR5's higher operating frequencies require more sophisticated power management. Moving voltage regulation from centralized circuitry on the motherboard to on-DIMM voltage regulation modules (VRMs), implemented as a power management IC (PMIC) on each module, demands careful system design to keep power delivery stable under the high-demand, fluctuating workloads characteristic of AI applications.

Signal integrity becomes increasingly problematic at DDR5's higher data rates (4800-6400 MT/s and beyond). Shorter bit periods leave minimal timing margin, requiring advanced PCB designs with optimized trace routing and better materials to limit signal degradation. For AI systems that demand maximum memory bandwidth, maintaining signal integrity across many populated memory channels is considerably harder.
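The shrinking timing window can be quantified. One unit interval (UI), the time a single bit occupies the line, is the reciprocal of the transfer rate. The quick calculation below covers the data rates named above, plus DDR4-3200 for comparison. The usable eye at the receiver is smaller still after jitter and crosstalk.

```python
def unit_interval_ps(transfer_rate_mts: float) -> float:
    """Duration of one bit on a data line, in picoseconds."""
    return 1e12 / (transfer_rate_mts * 1e6)


if __name__ == "__main__":
    for rate in (3200, 4800, 6400, 8400):
        print(f"{rate} MT/s -> UI = {unit_interval_ps(rate):.1f} ps")
```

Going from DDR4-3200 to DDR5-6400 halves the bit time from 312.5 ps to 156.25 ps. Equalization techniques such as DFE exist to help recover margin in this much narrower window.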

Compatibility with existing AI systems adds another layer of complexity. Much of the work sits below the AI framework level: memory controllers, firmware, and drivers tuned for DDR4 characteristics need significant changes to fully leverage DDR5's capabilities. These changes include adapting to DDR5's doubled burst length (BL16 versus BL8) and its larger bank group structure (up to 8 bank groups versus up to 4 in DDR4), both of which change how data is accessed and scheduled.
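How a controller spreads traffic across DDR5's bank groups is controller-specific and generally undocumented. The sketch below uses a purely hypothetical bit-slicing address map to show the idea. The subchannel, bank group, and bank are taken from the low-order bits above the 64-byte line offset, so consecutive cache lines land in different subchannels and bank groups. Back-to-back accesses can then avoid the longer same-bank-group column-to-column delay. The sketch assumes 2 subchannels, 8 bank groups, and 4 banks per group, and `map_address` is an illustrative name, not any vendor's API.

```python
from typing import NamedTuple

LINE_BYTES = 64       # assumed cache-line size
SUBCHANNELS = 2       # DDR5: two independent subchannels per DIMM
BANK_GROUPS = 8       # assumed device with 8 bank groups
BANKS_PER_GROUP = 4   # assumed 4 banks per group (32 banks total)


class DramLocation(NamedTuple):
    subchannel: int
    bank_group: int
    bank: int
    row_col: int  # remaining address bits; row and column not separated here


def map_address(phys_addr: int) -> DramLocation:
    """Hypothetical interleaving map; real controllers often hash these bits with XOR."""
    line = phys_addr // LINE_BYTES
    sub = line % SUBCHANNELS       # alternate subchannels on every line
    line //= SUBCHANNELS
    bg = line % BANK_GROUPS        # then rotate through bank groups
    line //= BANK_GROUPS
    bank = line % BANKS_PER_GROUP  # then banks within a group
    line //= BANKS_PER_GROUP
    return DramLocation(sub, bg, bank, line)


if __name__ == "__main__":
    for i in range(4):
        print(hex(i * LINE_BYTES), map_address(i * LINE_BYTES))
```

With this map, 16 consecutive lines touch 16 distinct (subchannel, bank group) pairs before any pair repeats.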

Reliability concerns arise because DDR5 introduces on-die ECC. On-die ECC corrects single-bit errors inside each DRAM device before data leaves the chip, but it is largely transparent to the host and does not replace the side-band ECC used on server modules. Deploying DDR5 in AI systems therefore requires careful consideration of how error handling at both levels affects computational accuracy and system performance, particularly in mission-critical AI applications where data integrity is paramount.
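The toy Hamming(7,4) encoder and decoder below illustrate what single-error correction means in practice. This is not DDR5's actual code, which protects 128 data bits with 8 check bits per codeword inside the DRAM, but the principle is the same: a syndrome points at the flipped bit. The example also shows the limitation of single-error correction. A double-bit error is mis-corrected without any warning, which is one reason server modules add ECC beyond the die.

```python
def hamming74_encode(d: list[int]) -> list[int]:
    """Encode 4 data bits into 7; codeword positions 1..7, parity bits at 1, 2 and 4."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(c: list[int]) -> tuple[list[int], int]:
    """Return (data bits, syndrome). A nonzero syndrome is the position that was flipped back."""
    c = list(c)
    syndrome = 0
    for pos in range(1, 8):
        if c[pos - 1]:
            syndrome ^= pos  # XOR of positions of set bits is 0 for a valid codeword
    if syndrome:
        c[syndrome - 1] ^= 1  # correct the single bit the syndrome points at
    return [c[2], c[4], c[5], c[6]], syndrome


if __name__ == "__main__":
    data = [1, 0, 1, 1]
    codeword = hamming74_encode(data)

    one_flip = codeword.copy()
    one_flip[4] ^= 1                    # single-bit upset at position 5
    print(hamming74_decode(one_flip))  # data recovered, syndrome names position 5

    two_flips = codeword.copy()
    two_flips[0] ^= 1
    two_flips[1] ^= 1                   # double-bit upset
    print(hamming74_decode(two_flips))  # syndrome points at the wrong bit: miscorrection
```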

Cost considerations cannot be overlooked, as DDR5 modules command a significant premium over DDR4 counterparts. For large-scale AI deployments, this cost differential can substantially impact total system cost, requiring organizations to carefully evaluate the performance benefits against the increased investment.

Lastly, the increased complexity of DDR5's initialization and training procedures presents challenges for system stability. The more sophisticated power-on sequence and memory training algorithms require robust implementation to ensure consistent performance across varying environmental conditions and workloads typical in AI computing environments.
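Memory training algorithms are vendor-specific firmware, but many share one core step. The firmware sweeps a delay setting, records which settings pass a read/write test, and parks the setting in the middle of the widest passing window. Below is a minimal sketch of that centering step. It assumes the pass/fail results have already been collected; the function name and the sweep values are made up for illustration.

```python
def center_of_widest_window(results: list[bool]) -> int:
    """Return the delay tap at the centre of the longest run of passing taps.

    results[i] is True if the test pattern passed at delay tap i.
    Ties go to the earliest window. Raises ValueError if no tap passed.
    """
    best_start, best_len = -1, 0
    run_start = None
    for i, ok in enumerate(results + [False]):  # sentinel closes a trailing run
        if ok and run_start is None:
            run_start = i
        elif not ok and run_start is not None:
            run_len = i - run_start
            if run_len > best_len:
                best_start, best_len = run_start, run_len
            run_start = None
    if best_len == 0:
        raise ValueError("no passing delay tap found")
    return best_start + (best_len - 1) // 2


if __name__ == "__main__":
    # Made-up sweep: True means the pattern passed at that delay tap.
    sweep = [False, True, False, False, True, True, True, True, True, False]
    print("selected tap:", center_of_widest_window(sweep))
```

Picking the center of the window, rather than the first passing tap, leaves the most margin on both sides for the temperature and voltage drift that threatens stability across operating conditions.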

Current DDR5 Implementation Strategies for AI

01 DDR5 memory architecture and design improvements

DDR5 memory introduces significant architectural improvements over previous generations, including higher data rates, improved power efficiency, and enhanced signal integrity. These designs feature an optimized channel architecture, better thermal management, and voltage regulation modules (VRMs) integrated on the memory module rather than on the motherboard. The architecture supports higher-bandwidth operation, although DDR5 DIMMs are not slot-compatible with DDR4 and require DDR5-capable platforms.

- DDR5 implementation in computing systems: The implementation of DDR5 memory in various computing systems involves specialized interface designs, motherboard layouts, and system-level optimizations. These implementations include server configurations, desktop computing platforms, and specialized high-performance computing environments. The feasibility considerations include signal routing techniques, power delivery networks, and thermal solutions that accommodate the higher operating frequencies of DDR5 memory.

- DDR5 power management and efficiency: DDR5 memory incorporates advanced power management features that improve overall system efficiency. These include on-module voltage regulation (a PMIC on each DIMM), fine-grained power states, and improved refresh mechanisms. The power architecture allows for better control of voltage domains, reducing power consumption during both active and idle states. These enhancements make DDR5 more feasible for energy-sensitive applications while delivering higher performance.

- DDR5 compatibility and integration challenges: Integrating DDR5 memory into existing and new system designs presents several technical challenges. These include signal integrity at higher frequencies, backward compatibility considerations, and controller design complexities. Solutions involve specialized PCB materials, optimized trace routing, and advanced buffer designs. The feasibility of DDR5 adoption depends on addressing these integration challenges while maintaining system reliability and performance targets.

- DDR5 testing and validation methodologies: Ensuring the feasibility of DDR5 memory implementations requires comprehensive testing and validation methodologies. These include specialized test equipment, simulation techniques, and compliance verification procedures. The testing approaches address timing margins, signal integrity, thermal performance, and long-term reliability. Validation protocols ensure that DDR5 memory meets industry standards and performs reliably under various operating conditions and workloads.

02 DDR5 implementation in computing systems

The implementation of DDR5 memory in various computing systems involves specialized interfaces, controllers, and compatibility solutions. These implementations address challenges in system integration, including motherboard design, CPU compatibility, and BIOS/firmware support. Solutions include adaptive training algorithms, enhanced error correction capabilities, and optimized memory controllers that can handle the increased speeds and different command structures of DDR5 memory.

03 DDR5 power management and efficiency

DDR5 memory incorporates advanced power management features that significantly improve energy efficiency compared to previous generations. These include on-module voltage regulation (a PMIC on each DIMM), multiple voltage domains, and more granular power states. The power management architecture allows for better control of different memory sections, reducing overall power consumption while maintaining or improving performance, which is particularly important for data centers and mobile applications.

04 DDR5 performance enhancements and testing

DDR5 memory offers substantial performance improvements through higher data rates, increased density, and enhanced channel efficiency. Testing methodologies for DDR5 include specialized protocols for validating signal integrity at higher frequencies, thermal performance under load, and compatibility with various system configurations. Performance enhancements include a longer burst length, an expanded bank group architecture, and a more efficient command/address bus, which collectively deliver higher bandwidth and lower latency under heavy load.

05 DDR5 application in specialized systems

DDR5 memory is being adapted for specialized applications including high-performance computing, artificial intelligence systems, edge computing devices, and enterprise servers. These applications leverage DDR5's increased bandwidth, reduced power consumption, and higher capacity to process larger datasets more efficiently. Specialized implementations include custom form factors, cooling solutions, and integration with accelerators to maximize the benefits of DDR5 technology in specific use cases.

Key Memory Manufacturers and AI Hardware Players

The DDR5 memory market for augmented intelligence is in its early growth phase, characterized by rapid technological advancement and expanding applications. The market is projected to grow significantly as AI systems demand higher memory bandwidth and capacity. Among key players, Intel and AMD lead in processor integration, Micron and Samsung are leading memory producers, and ChangXin Memory (CXMT) is a fast-growing Chinese DRAM maker. Huawei and Inspur are emerging as strong competitors in the Chinese market. The technology is maturing rapidly, with companies like Rambus providing interface solutions and cloud providers like Google and Alibaba driving adoption through data center deployments. Collaboration between processor manufacturers and memory providers is accelerating DDR5 adoption in AI applications.

Intel Corp.

Technical Solution: Intel has developed a comprehensive DDR5 implementation strategy for AI acceleration across its Xeon processors and Habana AI accelerators. Its approach pairs these platforms with DDR5 memory at significantly higher bandwidth (4800 MT/s initially, with a roadmap to 8400 MT/s), which provides approximately 1.5x the effective bandwidth of DDR4 systems. Intel's architecture leverages DDR5's split-DIMM design, with two independent 40-bit subchannels (32 data bits plus 8 ECC bits each on ECC modules) that can operate simultaneously, enabling more efficient data transfer for AI workloads. Its implementation includes on-die ECC support and Decision Feedback Equalization (DFE) for signal integrity at higher speeds, which is critical for maintaining data accuracy in AI training. Intel has also optimized its memory controllers for AI workloads that require frequent, large data transfers between memory and processing units.

Strengths: Comprehensive ecosystem integration with processors specifically designed for AI workloads; established relationships with memory manufacturers; mature memory controller technology. Weaknesses: Higher power consumption compared to LPDDR alternatives; implementation complexity requiring significant system redesign for optimal performance.

Micron Technology, Inc.

Technical Solution: Micron has pioneered DDR5 memory solutions specifically optimized for augmented intelligence applications, focusing on both performance and power efficiency. Their DDR5 modules deliver up to 6400MT/s data rates with 50% higher bandwidth than DDR4, while operating at 1.1V compared to DDR4's 1.2V, resulting in approximately 20% power efficiency improvement. For AI workloads, Micron has implemented advanced features including on-die ECC to reduce system errors during complex calculations, and Same-Bank Refresh to improve data availability. Their architecture incorporates dual 32-bit subchannels that can operate independently, allowing for more efficient parallel processing critical in AI training and inference. Micron has also developed specialized high-density modules (up to 256GB per DIMM) to accommodate the massive datasets required by modern AI systems, with particular emphasis on reliability features like Post-Package Repair and on-die termination for signal integrity.

Strengths: Industry-leading memory density options; extensive experience in memory manufacturing; strong reliability features specifically beneficial for mission-critical AI applications. Weaknesses: Premium pricing compared to some competitors; dependency on system manufacturers to fully utilize advanced features.

Critical Patents and Innovations in DDR5 for AI

Augmented intelligence relationship display for managing data

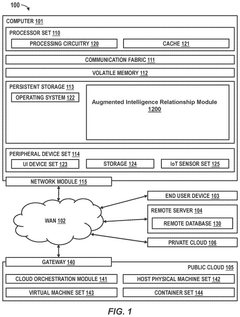

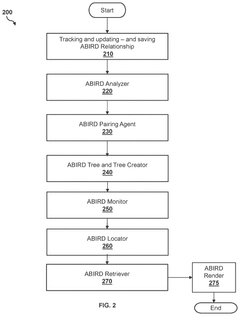

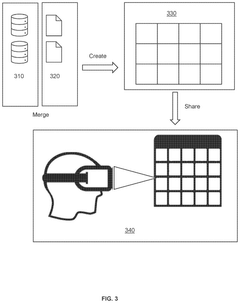

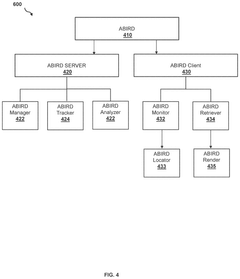

Patent Pending · US20250028702A1

Innovation

- The proposed solution involves creating a Business Intelligence (BI) table synchronized with multiple databases and reports, using a user interface with rules and criteria to provide access to the BI table. The system monitors updates, logs changes, pairs BI entries with source data, and creates a retrievable tree structure to facilitate dynamic data management and retrieval.

Power Efficiency Considerations for DDR5 in AI Systems

Power efficiency has emerged as a critical consideration in implementing DDR5 memory for AI systems. The transition from DDR4 to DDR5 brings significant improvements in power management that directly affect the overall energy profile of augmented intelligence applications. DDR5 lowers the operating voltage from 1.2 V to 1.1 V, an 8.3% reduction. Because dynamic power scales roughly with the square of voltage, this corresponds to about 16% lower dynamic power at the same frequency and load.
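The voltage change can be turned into a first-order power estimate. Going from 1.2 V to 1.1 V is an 8.3% voltage reduction, but dynamic CMOS power scales roughly as C·V²·f. The sketch holds switched capacitance C and frequency f constant. This is a simplifying assumption, since DDR5 usually runs faster and static and I/O power behave differently.

```python
def relative_dynamic_power(v_new: float, v_old: float) -> float:
    """Ratio P_new / P_old for dynamic power P ~ C * V^2 * f, with C and f held constant."""
    return (v_new / v_old) ** 2


if __name__ == "__main__":
    ratio = relative_dynamic_power(1.1, 1.2)
    print(f"voltage reduction: {1 - 1.1 / 1.2:.1%}")
    print(f"dynamic power reduction at equal C and f: {1 - ratio:.1%}")
```

Under these assumptions the dynamic power ratio is (1.1/1.2)² ≈ 0.84, a saving of about 16%, roughly twice the voltage reduction itself.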

The power efficiency advantages of DDR5 extend beyond voltage reduction. DDR5 moves voltage regulation onto the DIMM itself, through a power management IC (PMIC) on each module, which enables more granular power management at the DIMM level. Regulating voltage close to the DRAM devices keeps the supply stable as load changes, which benefits AI systems whose computational intensity fluctuates across the phases of model training and inference.

Thermal management considerations also factor prominently into DDR5's power efficiency equation. The higher data rates of DDR5 (starting at 4800 MT/s) generate increased thermal output that must be effectively managed. Advanced thermal solutions including improved heat spreaders and more efficient airflow designs have been developed specifically for DDR5 implementations in high-performance AI environments.

Power-saving modes in DDR5 have been substantially enhanced compared to previous generations. The introduction of multiple power-down states with varying recovery latencies allows AI systems to optimize energy consumption during idle periods without compromising responsiveness. This capability is especially valuable for edge AI deployments where power constraints are often more stringent than in data center environments.
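The trade-off between savings and responsiveness can be expressed as a simple policy: enter the deepest low-power state whose exit latency still fits both the expected idle window and the wake-up budget. The sketch below is hypothetical throughout. The state names loosely follow DRAM terminology, but the latency and power numbers are placeholders, not JEDEC or vendor values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PowerState:
    name: str
    exit_latency_ns: float  # time to return to active (placeholder values)
    relative_power: float   # power relative to active idle; 1.0 means no saving


# Hypothetical states ordered from shallowest to deepest; numbers are illustrative only.
STATES = [
    PowerState("active-idle", 0.0, 1.00),
    PowerState("power-down", 20.0, 0.50),
    PowerState("self-refresh", 1_000.0, 0.10),
]


def choose_state(expected_idle_ns: float, max_wakeup_ns: float) -> PowerState:
    """Pick the lowest-power state that can exit within the idle window and wake-up budget."""
    limit = min(expected_idle_ns, max_wakeup_ns)
    candidates = [s for s in STATES if s.exit_latency_ns <= limit]
    return min(candidates, key=lambda s: s.relative_power)


if __name__ == "__main__":
    for idle, budget in [(10, 100), (500, 100), (50_000, 5_000)]:
        print(idle, budget, "->", choose_state(idle, budget).name)
```

Real memory controllers typically approximate this with idle timers rather than explicit idle-time predictions. The principle of trading exit latency against power is the same.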

The relationship between power efficiency and computational density presents both opportunities and challenges. While DDR5's higher bandwidth per watt ratio improves overall system efficiency, it also enables higher computational densities that can potentially increase absolute power consumption. System architects must carefully balance these factors when designing AI platforms that leverage DDR5 technology.

Industry benchmarks indicate that DDR5-based AI systems can achieve 15-20% better performance per watt compared to equivalent DDR4 implementations. However, these efficiency gains are workload-dependent, with memory-intensive AI operations showing the most significant improvements. Transformer-based models with extensive parameter sets and frequent memory accesses demonstrate particularly notable efficiency enhancements when utilizing DDR5's advanced power management features.

Competitive Memory Technologies vs DDR5 for AI

In the rapidly evolving landscape of artificial intelligence, memory technologies play a pivotal role in determining system performance. While DDR5 represents the latest iteration in the DRAM lineage, several competitive memory technologies present compelling alternatives that merit careful consideration for AI applications.

High Bandwidth Memory (HBM) is DDR5's most formidable competitor for AI workloads. With its stacked-die architecture and very wide interface, HBM delivers bandwidth of up to 900 GB/s, far beyond the 38-51 GB/s of a single DDR5 DIMM channel (DDR5-4800 to DDR5-6400). This bandwidth advantage makes HBM particularly suitable for data-intensive AI training, despite its higher cost and integration complexity.
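Most of the gap comes from interface width. Peak bandwidth is per-pin rate × bus width, and an HBM stack exposes a 1024-bit interface versus 64 data bits for a DDR5 DIMM. The sketch below uses HBM3 at 6.4 Gb/s per pin as the example generation. Other generations differ, and the 900 GB/s figure above may refer to a different generation or to a multi-stack device.

```python
def peak_gbs(rate_gtps: float, width_bits: int) -> float:
    """Peak bandwidth in GB/s from per-pin rate (GT/s) and interface width (bits)."""
    return rate_gtps * width_bits / 8


if __name__ == "__main__":
    interfaces = {
        "DDR5-4800 DIMM (64-bit)": (4.8, 64),
        "DDR5-6400 DIMM (64-bit)": (6.4, 64),
        "HBM3 stack (1024-bit, 6.4 Gb/s/pin)": (6.4, 1024),
    }
    for name, (rate, width) in interfaces.items():
        print(f"{name}: {peak_gbs(rate, width):.1f} GB/s")
```

At the same per-pin rate, sixteen times the width gives sixteen times the bandwidth (51.2 versus 819.2 GB/s). The price is the advanced packaging needed to route over a thousand signals per stack, which is the integration complexity noted above.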

Compute Express Link (CXL) memory is another emerging option, offering a disaggregated architecture that allows memory to be pooled flexibly across compute resources. CXL memory expanders typically use DDR5 DRAM internally, so CXL complements rather than replaces DDR5. It relaxes the fixed capacity of directly attached DIMMs and enables more efficient memory utilization and scaling, which is particularly valuable in large-scale AI deployments where memory requirements fluctuate.

Non-volatile memory technologies such as Intel's Optane (3D XPoint) occupy a middle ground between DRAM and storage, offering persistence with latency between that of DRAM and NAND flash. They are slower than DDR5, but their density and persistence can benefit AI workloads with large memory footprints. However, Intel announced in 2022 that it was winding down its Optane business, which limits this option for new designs.

GDDR6 and GDDR6X, traditionally associated with graphics processing, deliver bandwidth comparable to or exceeding DDR5 while maintaining compatibility with existing GPU architectures. This makes them particularly well-suited for AI inference tasks on GPU platforms, where they can provide cost-effective performance.

Emerging technologies like Resistive RAM (ReRAM) and Magnetoresistive RAM (MRAM) promise future alternatives with potential advantages in power efficiency and density, though they currently lack the maturity and ecosystem support of DDR5.

When evaluating these alternatives against DDR5 for AI applications, several factors must be considered: bandwidth requirements, capacity needs, power constraints, thermal considerations, and system architecture compatibility. While DDR5 offers a balanced profile with established ecosystem support, specialized AI accelerators may benefit more from HBM's superior bandwidth or CXL's flexibility.

The optimal memory technology choice ultimately depends on the specific AI workload characteristics, deployment environment, and cost constraints. For many general-purpose AI systems, DDR5 provides a pragmatic balance of performance, availability, and cost, while specialized applications may justify the premium for alternative memory technologies.
