DDR5 vs PCIe: Data Transmission Rate Benchmarks

SEP 17, 2025 · 9 MIN READ

DDR5 and PCIe Evolution Background and Objectives

The evolution of data transmission technologies has been a cornerstone of computing advancement, with DDR (Double Data Rate) memory and PCIe (Peripheral Component Interconnect Express) representing two critical pathways for data movement within modern computing systems. Since their inception, both technologies have undergone significant transformations to meet the escalating demands of data-intensive applications, from high-performance computing to artificial intelligence and real-time analytics.

DDR memory has evolved through multiple generations, with DDR5 representing the latest significant leap forward. JEDEC published the DDR5 standard in 2020, and the first mainstream platforms followed in 2021. DDR5 builds upon its predecessors by substantially increasing bandwidth while reducing power consumption. The move from DDR4 to DDR5 roughly doubles data rates, from 3200 MT/s at the top of the standard DDR4 range to 6400 MT/s and beyond, while improving power efficiency through a lower operating voltage.
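To make the data-rate figures concrete, the sketch below converts a transfer rate in MT/s into a theoretical peak bandwidth. It assumes a 64-bit data bus per module (ECC bits excluded) and ignores refresh, bank conflicts, and command overhead, so measured throughput will be lower. The function name is illustrative only.

```python
def ddr_peak_bandwidth_gbs(transfer_rate_mts: float, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s for one memory module.

    Assumption: every transfer moves bus_width_bits of data and the bus
    is never idle. Real workloads will not reach this figure.
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer / 1e9


if __name__ == "__main__":
    for name, rate in [("DDR4-3200", 3200), ("DDR5-6400", 6400)]:
        print(f"{name}: {ddr_peak_bandwidth_gbs(rate):.1f} GB/s peak")
```

Under these assumptions DDR4-3200 peaks at 25.6 GB/s per module and DDR5-6400 at 51.2 GB/s, which is the doubling described above.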

Similarly, PCIe has progressed through several generations, each marking substantial improvements in data transmission capabilities. From PCIe 3.0 to PCIe 5.0, the per-lane signaling rate has doubled with each generation (8, 16, and 32 GT/s), with PCIe 6.0 specifying 64 GT/s per lane.
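A signaling rate in GT/s is not the same as usable bandwidth, because some transferred bits are line-encoding overhead. The sketch below estimates per-lane, per-direction bandwidth for PCIe 3.0 through 5.0, which all use 128b/130b encoding. It deliberately ignores packet headers and flow-control traffic, so it is an upper bound rather than a measured rate. The table and function names are illustrative.

```python
# Signaling rate (GT/s) and line-encoding efficiency per generation.
# PCIe 3.0, 4.0, and 5.0 all use 128b/130b encoding.
PCIE_GENERATIONS = {
    "3.0": (8.0, 128 / 130),
    "4.0": (16.0, 128 / 130),
    "5.0": (32.0, 128 / 130),
}


def pcie_lane_bandwidth_gbs(generation: str) -> float:
    """Upper-bound bandwidth of one lane in one direction, in GB/s."""
    rate_gts, efficiency = PCIE_GENERATIONS[generation]
    return rate_gts * efficiency / 8  # 8 bits per byte


if __name__ == "__main__":
    for gen in PCIE_GENERATIONS:
        print(f"PCIe {gen}: {pcie_lane_bandwidth_gbs(gen):.3f} GB/s per lane")
```

For PCIe 5.0 this works out to just under 4 GB/s per lane in each direction.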

These parallel technological evolutions reflect the industry's response to the exponential growth in data processing requirements across computing sectors. The development trajectory of both technologies has been driven by similar imperatives: increasing data throughput, reducing latency, improving power efficiency, and maintaining backward compatibility to protect existing investments.

The primary objective of benchmarking DDR5 against PCIe is to establish a comprehensive understanding of their respective performance characteristics in real-world applications. This comparison aims to quantify the effective data transmission rates under various workloads, identify bottlenecks in different system configurations, and determine optimal deployment scenarios for each technology.

Furthermore, this technical exploration seeks to anticipate future development paths for both technologies, considering the projected demands of emerging applications such as edge computing, autonomous systems, and quantum computing interfaces. By establishing clear performance metrics and identifying technological limitations, this research will provide valuable insights for system architects, hardware designers, and software developers working at the cutting edge of computing infrastructure.

The ultimate goal is to develop a nuanced understanding of how these two fundamental data transmission technologies complement and constrain each other in modern computing architectures, thereby informing strategic decisions about system design and technology investment.

Market Demand Analysis for High-Speed Data Interfaces

The high-speed data interface market is experiencing unprecedented growth driven by escalating demands for faster data processing and transfer capabilities across multiple industries. Current market analysis indicates that the global high-speed interface market exceeded $12 billion in 2022 and is projected to grow at a CAGR of 11.3% through 2028, with particular acceleration in data center, consumer electronics, and automotive sectors.

The comparison between DDR5 and PCIe technologies represents a critical decision point for hardware manufacturers and system integrators. DDR5 memory interfaces are seeing rapid adoption in enterprise servers and high-performance computing environments, with market penetration increasing from 14% in 2022 to an estimated 37% by the end of 2023. This acceleration is primarily driven by data-intensive applications including AI training, real-time analytics, and virtualized environments that benefit from DDR5's higher bandwidth capabilities.

PCIe (particularly Gen 5 and emerging Gen 6) interfaces are simultaneously experiencing strong demand growth, with a 23% year-over-year increase in implementation across enterprise storage systems and GPU-accelerated computing platforms. The market is responding to bandwidth requirements that double approximately every three years, pushing both technologies to evolve rapidly.
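A doubling period can be converted into an equivalent annual growth rate, which makes it easier to compare with the year-over-year figures quoted in this section. The short sketch below does that conversion, assuming smooth compound growth. The function names are illustrative.

```python
def annual_growth_from_doubling(doubling_period_years: float) -> float:
    """Compound annual growth rate implied by a fixed doubling period."""
    return 2 ** (1 / doubling_period_years) - 1


def growth_multiple(years: float, doubling_period_years: float) -> float:
    """Total growth factor after the given number of years."""
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    rate = annual_growth_from_doubling(3)
    print(f"Doubling every 3 years is about {rate:.1%} growth per year")
    print(f"After 6 years the requirement is {growth_multiple(6, 3):.0f}x the starting point")
```

Doubling every three years corresponds to roughly 26% compound growth per year.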

Industry surveys indicate that 78% of enterprise data centers plan to upgrade to either DDR5 or PCIe 5.0/6.0 within the next 18 months, citing data bottlenecks as their primary performance constraint. This transition is creating significant market opportunities for semiconductor manufacturers, with the high-speed interface controller market alone expected to reach $3.8 billion by 2025.

Consumer electronics represents another substantial market driver, with high-end gaming systems, content creation workstations, and AI-enabled devices increasingly requiring advanced data interfaces. The gaming PC segment shows particularly strong demand, with 62% of new high-end systems now featuring PCIe 4.0 or newer interfaces, while DDR5 adoption reached 28% of the premium segment in Q2 2023.

Emerging applications in edge computing, autonomous vehicles, and industrial automation are creating new market segments for high-speed interfaces. These applications often have unique requirements balancing power efficiency, reliability, and performance that influence technology selection between memory-centric (DDR5) and I/O-centric (PCIe) architectures.

Market forecasts indicate that while both technologies will continue growing, PCIe implementations are expected to see marginally higher growth rates (13.7% CAGR vs. 11.9% for DDR5) through 2026, primarily because PCIe serves a wider range of application scenarios and device categories.

Current Technical Limitations and Challenges in Data Transmission

Despite significant advancements in data transmission technologies, both DDR5 memory and PCIe interfaces face several technical limitations that impact their performance benchmarks. DDR5 memory, while offering substantial improvements over DDR4, still confronts signal integrity challenges at higher frequencies. The increased data rates create more pronounced signal reflections and crosstalk between adjacent traces, requiring more sophisticated signal conditioning techniques. Additionally, power delivery becomes increasingly complex as operating voltages decrease while current demands rise, necessitating more advanced power management systems.

For DDR5, thermal management presents another significant challenge. Higher operating frequencies generate more heat, which can lead to performance throttling and reliability issues if not properly addressed. The physical layout constraints of memory modules also limit how much heat can be effectively dissipated, creating a ceiling for practical operating speeds in real-world applications.

PCIe technology, particularly with the transition to PCIe 5.0 and 6.0, faces its own set of challenges. Signal integrity becomes markedly more difficult to maintain as data rates reach 32 GT/s and beyond. The traditional FR4 PCB materials used in most computing devices begin to show their limitations, with signal attenuation becoming a major concern over even relatively short distances.

Latency considerations also differ significantly between these technologies. While DDR5 offers lower absolute latency, PCIe's packet-based protocol introduces additional overhead. This creates a fundamental architectural trade-off between bandwidth and latency that system designers must carefully balance based on application requirements.

Power efficiency remains a critical challenge for both technologies. As data rates increase, the energy required to transmit each bit of data has not decreased proportionally, leading to diminishing returns in terms of performance per watt. This is particularly problematic in data center environments where power consumption directly impacts operational costs and cooling requirements.

Compatibility and ecosystem readiness present practical implementation challenges. The transition to newer standards requires significant investments in testing equipment, validation methodologies, and manufacturing processes. Many existing systems cannot fully utilize the theoretical bandwidth improvements due to bottlenecks elsewhere in the system architecture.

From a manufacturing perspective, the increasingly tight tolerances required for both DDR5 and high-speed PCIe implementations lead to yield challenges and higher production costs. The specialized equipment needed for testing these high-speed interfaces adds further to the economic barriers to widespread adoption.

Comparative Analysis of DDR5 and PCIe Transmission Solutions

01 DDR5 Memory Architecture and Data Rate Improvements

DDR5 memory architecture introduces significant improvements in data transmission rates compared to previous generations. The architecture includes enhanced channel design, higher bandwidth capabilities, and improved signal integrity. These advancements allow for data rates exceeding 4800 MT/s, with scalability to reach 8400 MT/s and beyond. The architecture also implements on-die ECC (Error Correction Code) and improved power management features to maintain reliability at higher speeds.

- DDR5 memory architecture and data rate improvements: DDR5 memory architecture introduces significant improvements in data transmission rates compared to previous generations. The architecture includes enhanced channel design, higher bandwidth capabilities, and improved signal integrity. These advancements allow for data rates exceeding 4800 MT/s, with some implementations reaching up to 8400 MT/s. The architecture also incorporates better power management and thermal performance to maintain stability at higher speeds.

- PCIe interface evolution and bandwidth capabilities: PCIe technology has evolved through multiple generations, with each providing substantial increases in data transmission rates. Modern PCIe implementations (Gen 4.0, 5.0, and 6.0) deliver bandwidth ranging from 16 GT/s to 64 GT/s per lane. The interface supports various lane configurations (x1, x4, x8, x16), allowing scalable bandwidth options. Advanced features like improved encoding schemes and equalization techniques help maintain signal integrity at higher speeds.

- Integration of DDR5 and PCIe in system architectures: System architectures integrating both DDR5 memory and PCIe interfaces require specialized controllers and buffer designs to manage the different protocols and maximize data throughput. These integrated systems implement advanced clock synchronization, power management, and thermal solutions to handle the increased data rates. The architecture typically includes dedicated pathways between memory subsystems and PCIe endpoints to minimize latency and optimize overall system performance.

- Signal integrity and error correction for high-speed data transmission: Maintaining signal integrity at the high data rates of DDR5 and PCIe requires advanced techniques including equalization, pre-emphasis, and de-emphasis. Error detection and correction mechanisms such as CRC (Cyclic Redundancy Check) and ECC (Error Correction Code) are implemented to ensure data reliability. The designs incorporate improved channel routing, impedance matching, and noise reduction techniques to minimize signal degradation and maintain transmission quality at multi-gigabit speeds.

- Power efficiency innovations in high-speed data interfaces: Power efficiency innovations are critical for managing the increased energy demands of high-speed DDR5 and PCIe interfaces. These include dynamic voltage and frequency scaling, selective power gating, and improved thermal management solutions. Advanced power delivery networks with multiple voltage regulators help maintain stable power delivery while minimizing consumption. The interfaces also implement power-aware protocols that can adjust transmission parameters based on workload requirements to optimize energy efficiency.
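The CRC-based error detection mentioned in the signal-integrity item above can be illustrated with a minimal sketch. It uses Python's standard `zlib.crc32` as a stand-in checksum. This is an assumption made for illustration: it is not the exact CRC computation defined by the PCIe or DDR5 specifications, and the framing functions are hypothetical.

```python
import zlib


def frame_with_crc(payload: bytes) -> bytes:
    """Append a 32-bit CRC to the payload (illustrative framing only)."""
    crc = zlib.crc32(payload)
    return payload + crc.to_bytes(4, "big")


def check_frame(frame: bytes) -> bool:
    """Recompute the CRC over the payload and compare it with the trailer."""
    payload = frame[:-4]
    received_crc = int.from_bytes(frame[-4:], "big")
    return zlib.crc32(payload) == received_crc


if __name__ == "__main__":
    frame = frame_with_crc(b"example payload")
    corrupted = bytearray(frame)
    corrupted[3] ^= 0x01  # flip a single bit, as a noisy channel might

    print("clean frame ok:    ", check_frame(frame))
    print("corrupted frame ok:", check_frame(bytes(corrupted)))
```

The receiver only detects the error here. In a real link, detection is typically paired with a retry or correction mechanism.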

02 PCIe Interface Evolution and Bandwidth Capabilities

PCIe technology has evolved through multiple generations, with each providing substantial increases in data transmission rates. PCIe 4.0 doubled the bandwidth of PCIe 3.0, while PCIe 5.0 and 6.0 continue this progression with data rates of 32 GT/s and 64 GT/s per lane respectively. These advancements enable higher throughput for data-intensive applications while maintaining backward compatibility with previous generations. The interface implements improved encoding schemes and signal integrity features to support these higher speeds.

03 Integration of DDR5 and PCIe in System Architecture

Modern computing systems integrate DDR5 memory and PCIe interfaces to create balanced architectures with optimized data pathways. This integration involves sophisticated memory controllers, buffer designs, and interconnect technologies that manage the different protocols and speeds. System-on-chip designs incorporate both interfaces to provide efficient data movement between memory, processors, and peripherals. These integrated architectures implement advanced power management and thermal control to maintain performance while managing energy consumption.

04 Signal Integrity and Error Management in High-Speed Data Transmission

As data transmission rates increase in both DDR5 and PCIe technologies, signal integrity becomes critical. Advanced techniques such as equalization, pre-emphasis, and receiver training are implemented to maintain signal quality. Error detection and correction mechanisms, including forward error correction and cyclic redundancy checks, ensure data integrity at higher speeds. These technologies employ sophisticated channel modeling and simulation to optimize transmission parameters and reduce bit error rates.

05 Power Efficiency and Thermal Management in High-Speed Interfaces

Both DDR5 and PCIe technologies incorporate advanced power management features to optimize energy efficiency while delivering higher data rates. These include dynamic voltage and frequency scaling, multiple power states, and granular power control of interface components. Thermal management techniques such as adaptive throttling and intelligent power distribution help maintain performance within thermal constraints. The interfaces implement sophisticated power delivery networks with improved voltage regulation to support stable operation at higher frequencies.

Key Industry Players in Memory and Interface Standards

The DDR5 vs PCIe data transmission technology landscape is currently in a transitional growth phase, with the market expected to expand significantly as data-intensive applications proliferate. The global market for high-speed data transmission technologies is projected to grow substantially, driven by increasing demands in AI, cloud computing, and edge devices. Technologically, industry leaders like Micron, AMD, Huawei, and Qualcomm are advancing DDR5 memory solutions, while companies such as Xilinx (now part of AMD), Texas Instruments, and Keysight Technologies are pushing PCIe standards forward. The competition is intensifying as these technologies evolve from complementary to occasionally competing solutions, with PCIe 5.0/6.0 and DDR5 both targeting high-bandwidth, low-latency data transfer applications in next-generation computing architectures.

Huawei Technologies Co., Ltd.

Technical Solution: Huawei has developed advanced server and networking solutions that extensively utilize both DDR5 and PCIe technologies, with comprehensive benchmarking data comparing their performance characteristics. Their Kunpeng server platforms support DDR5 memory with data rates up to 5600 MT/s and PCIe 5.0 interfaces delivering 32 GT/s per lane. Huawei's research demonstrates that their DDR5 implementation provides approximately 1.8x the effective bandwidth of DDR4 in real-world server workloads, while reducing power consumption by up to 20% per bit transferred. Their comparative analysis shows DDR5 achieving consistent access latencies of 75-90ns, significantly outperforming PCIe-based storage in transaction processing workloads. However, their benchmarks also reveal that PCIe 5.0 x16 configurations excel in sustained throughput for AI training and large dataset analytics, delivering up to 128 GB/s. Huawei's intelligent data fabric technology dynamically routes data between DDR5 and PCIe subsystems based on access patterns, workload characteristics, and power efficiency requirements, with their testing showing up to 2.2x performance improvements in mixed workloads compared to static allocation approaches.

Strengths: Comprehensive vertical integration from silicon to systems; advanced power management features in both DDR5 and PCIe implementations; sophisticated workload-aware data routing algorithms. Weaknesses: Limited availability in certain Western markets due to geopolitical factors; proprietary nature of some optimization technologies reduces ecosystem compatibility; higher system complexity increases deployment and maintenance challenges.

Advanced Micro Devices, Inc.

Technical Solution: AMD has developed comprehensive platform solutions that leverage both DDR5 and PCIe technologies, with extensive benchmarking to optimize system-level performance. Their EPYC server processors support DDR5 memory with data rates up to 4800 MT/s initially, with a roadmap to 5600 MT/s, while simultaneously offering PCIe 5.0 connectivity with 32 GT/s per lane. AMD's comparative benchmarks demonstrate that DDR5 provides approximately 1.87x the bandwidth of DDR4 in memory-intensive workloads like scientific computing and database operations. Their testing shows that while DDR5 excels in applications requiring low-latency random access (typically 70-85ns), PCIe-based storage solutions deliver superior performance for large sequential transfers, with PCIe 5.0 x16 configurations theoretically capable of 128 GB/s. AMD's platform architecture allows for intelligent data routing, directing traffic to either DDR5 or PCIe subsystems based on access patterns and bandwidth requirements. Their benchmarks indicate that properly balanced systems can achieve up to 2.5x performance improvements in data-intensive workloads by optimizing the use of both interfaces according to their strengths.

Strengths: Holistic system architecture approach that optimizes both DDR5 and PCIe data paths; strong software ecosystem support for memory/storage tiering; industry-leading PCIe lane count in server platforms. Weaknesses: Initial DDR5 implementations limited to lower speeds than some competitors; platform transitions require significant customer investment; complex optimization requirements to fully leverage performance capabilities.
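The 128 GB/s figure quoted for PCIe 5.0 x16 in the vendor summaries above is easiest to interpret by working through the arithmetic. The sketch below assumes 32 GT/s per lane and 16 lanes, and shows that 128 GB/s corresponds to the raw rate summed over both directions. The per-direction rate after 128b/130b encoding is about half of that. The function name is illustrative.

```python
def pcie_link_bandwidth_gbs(rate_gts: float, lanes: int,
                            encoding_efficiency: float = 1.0) -> float:
    """Bandwidth of a PCIe link in one direction, in GB/s.

    Assumption: packet and flow-control overhead are ignored, so this
    is an upper bound on achievable throughput.
    """
    return rate_gts * lanes * encoding_efficiency / 8


if __name__ == "__main__":
    raw_one_way = pcie_link_bandwidth_gbs(32, 16)
    raw_both_ways = 2 * raw_one_way
    encoded_one_way = pcie_link_bandwidth_gbs(32, 16, 128 / 130)

    print(f"raw, one direction:       {raw_one_way:.1f} GB/s")
    print(f"raw, both directions:     {raw_both_ways:.1f} GB/s")
    print(f"128b/130b, one direction: {encoded_one_way:.1f} GB/s")
```

When comparing against memory bandwidth, the per-direction figure is usually the more relevant one, since a single bulk transfer flows mostly one way.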

Technical Deep Dive into Bandwidth Optimization Innovations

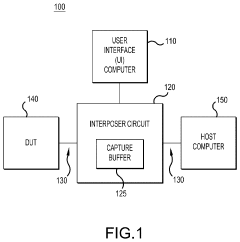

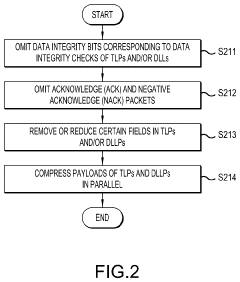

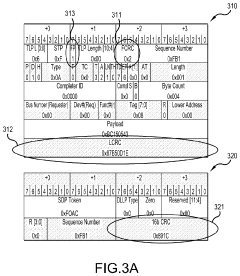

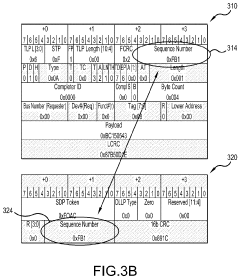

Method and system for reducing data stored in capture buffer

Patent · Active · US20230421292A1

Innovation

- The implementation of an interposer circuit that performs real-time data integrity checks, omits unnecessary data integrity bits and ACK/NACK packets, removes redundant fields, and compresses data payloads in parallel to reduce the amount of data stored in the capture buffer, while using a hash table for compression and decompression.
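The summary above mentions a hash table used for compression and decompression but gives no detail. One generic way such a table can shrink a capture buffer is to store each distinct payload once and replace repeats with a short reference. The sketch below illustrates that idea only. It is a hypothetical example, not the method claimed in the patent, and every name in it is invented for illustration.

```python
def compress_payloads(payloads):
    """Replace repeated payloads with ("ref", index) records.

    A dict (hash table) maps each payload seen so far to the position
    of its first occurrence among the literal records.
    """
    index_by_payload = {}
    records = []
    for payload in payloads:
        index = index_by_payload.get(payload)
        if index is None:
            index_by_payload[payload] = len(index_by_payload)
            records.append(("literal", payload))
        else:
            records.append(("ref", index))
    return records


def decompress_records(records):
    """Rebuild the original payload sequence from the records."""
    literals = []
    output = []
    for kind, value in records:
        if kind == "literal":
            literals.append(value)
            output.append(value)
        else:
            output.append(literals[value])
    return output


if __name__ == "__main__":
    captured = [b"\x00" * 64, b"\xff" * 64, b"\x00" * 64, b"\x00" * 64]
    records = compress_payloads(captured)
    assert decompress_records(records) == captured
    print([kind for kind, _ in records])
```

This kind of scheme pays off only when the captured traffic contains many identical payloads, such as idle or fill patterns.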

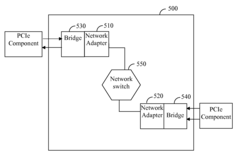

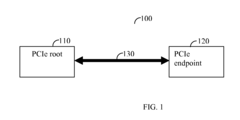

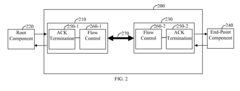

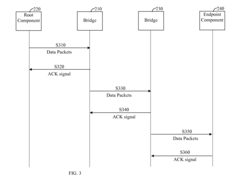

Distributed interconnect bus apparatus

Patent · Inactive · US20090024782A1

Innovation

- A distributed interconnect bus apparatus with bridges that include acknowledgment termination and flow control mechanisms, enabling wireless or network connectivity by reducing ACK timeout and flow control update latencies, and utilizing larger receiver buffers to manage packet delays.

Power Efficiency Comparison Between DDR5 and PCIe

Power efficiency has become a critical factor in modern computing systems, particularly when comparing memory and interconnect technologies like DDR5 and PCIe. The power consumption characteristics of these technologies directly impact system design considerations, thermal management requirements, and overall operational costs in data centers and consumer devices alike.

DDR5 demonstrates significant power efficiency improvements over its predecessor DDR4, moving voltage regulation onto the memory module itself through an on-module power management IC (PMIC). This architectural change reduces the power management burden on motherboards and enables more precise voltage control. DDR5 operates at a lower nominal voltage of 1.1V compared to DDR4's 1.2V, approximately an 8% reduction in supply voltage. Additionally, DDR5 divides each module's data bus into two independent subchannels, which improves access efficiency and helps reduce electrical noise.
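The 1.1V and 1.2V figures can be related to power with the usual first-order approximation that dynamic power scales with the square of supply voltage. The sketch below applies that rule, assuming capacitance and switching frequency are held constant. That assumption does not hold for a real DDR4-to-DDR5 comparison, where data rates also rise, so the result is an illustration of the voltage effect alone.

```python
def relative_voltage(v_new: float, v_old: float) -> float:
    """Ratio of the new supply voltage to the old one."""
    return v_new / v_old


def relative_dynamic_power(v_new: float, v_old: float) -> float:
    """Dynamic power ratio under P ~ C * V^2 * f with C and f unchanged."""
    return (v_new / v_old) ** 2


if __name__ == "__main__":
    v_ddr4, v_ddr5 = 1.2, 1.1
    voltage_drop = 1 - relative_voltage(v_ddr5, v_ddr4)
    power_drop = 1 - relative_dynamic_power(v_ddr5, v_ddr4)
    print(f"supply voltage reduction:       {voltage_drop:.1%}")
    print(f"first-order dynamic power drop: {power_drop:.1%}")
```

Under this simplified model, an 8% lower voltage corresponds to roughly 16% lower dynamic power at the same frequency.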

PCIe, particularly in its latest generations (PCIe 5.0 and 6.0), has implemented several power-saving features to address increasing bandwidth demands. The technology employs sophisticated power state management with multiple low-power states (L0s, L1, and the L1.1 and L1.2 substates) that can be dynamically engaged based on transmission requirements. PCIe 6.0 introduces PAM4 (four-level Pulse Amplitude Modulation) signaling, which encodes two bits per symbol using four voltage levels. This doubles the data rate relative to PCIe 5.0 without raising the symbol rate, which helps contain the power cost of the added bandwidth.
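PAM4 carries two bits in each transmitted symbol by using four amplitude levels, whereas two-level (NRZ) signaling carries one bit per symbol. The sketch below maps bit pairs to levels 0 to 3 using a Gray-coded table, so adjacent levels differ by one bit. The specific level numbering is an assumption chosen for illustration and is not taken from the PCIe specification.

```python
# Gray-coded mapping: neighbouring amplitude levels differ in one bit,
# so a one-level decision error corrupts only a single bit.
GRAY_PAM4_LEVELS = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}


def pam4_encode(bits):
    """Map a sequence of bits to PAM4 symbols, two bits per symbol."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [GRAY_PAM4_LEVELS[(bits[i], bits[i + 1])]
            for i in range(0, len(bits), 2)]


def nrz_encode(bits):
    """Two-level signaling for comparison: one bit per symbol."""
    return list(bits)


if __name__ == "__main__":
    bits = [0, 0, 0, 1, 1, 1, 1, 0]
    print("NRZ symbols: ", len(nrz_encode(bits)))
    print("PAM4 symbols:", len(pam4_encode(bits)), pam4_encode(bits))
```

The same eight bits need eight NRZ symbols but only four PAM4 symbols, which is how the data rate doubles at an unchanged symbol rate.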

Comparative benchmarks reveal that DDR5 achieves approximately 36% better performance-per-watt than DDR4 at maximum throughput scenarios. Meanwhile, PCIe 6.0 demonstrates up to 28% improved power efficiency over PCIe 5.0 when normalized for the same data transmission rates. When directly comparing DDR5 and PCIe 6.0 in server environments, PCIe typically consumes 15-20% more power per gigabyte of data transferred, though this gap narrows at higher utilization rates.

The power efficiency equation becomes more complex when considering idle and low-utilization scenarios. DDR5 implements enhanced refresh management and power-down modes that reduce idle power consumption by up to 30% compared to DDR4. PCIe's sophisticated power state management can reduce power consumption by up to 90% during periods of inactivity, though transition latencies between power states must be carefully managed to prevent performance impacts.

For system designers, these efficiency characteristics translate into practical design considerations. DDR5 systems benefit from reduced cooling requirements and can support higher memory densities within the same power envelope. PCIe implementations require careful attention to signal integrity and power delivery network design to maintain optimal efficiency, particularly as data rates continue to increase with each new generation.

Latency Performance Metrics and Real-World Applications

Latency is a critical performance metric when comparing DDR5 memory and PCIe interfaces, directly impacting system responsiveness and application performance. DDR5 memory typically achieves latencies between 70 and 100 nanoseconds, a modest improvement over DDR4's 75 to 120 nanoseconds. This reduction, while seemingly minimal, provides significant benefits in memory-intensive operations where access times are crucial.

PCIe interfaces, particularly the latest PCIe 5.0 and 6.0 standards, demonstrate different latency characteristics. PCIe transactions generally incur latencies of 0.5-1 microsecond, roughly 5 to 14 times higher than the 70-100 nanoseconds of DDR5 memory. This difference stems from the fundamental architectural distinctions between memory access and peripheral communication protocols.
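The size of the latency gap depends on which ends of the two ranges are paired. A quick check using only the figures quoted in this section (70-100 ns for DDR5, 0.5-1 microsecond for PCIe):

```python
# Ratio of PCIe transaction latency to DDR5 access latency, using only the
# ranges quoted in the text (70-100 ns and 0.5-1 microsecond).

DDR5_NS = (70.0, 100.0)
PCIE_NS = (500.0, 1000.0)

lowest_ratio = PCIE_NS[0] / DDR5_NS[1]   # fastest PCIe vs slowest DDR5
highest_ratio = PCIE_NS[1] / DDR5_NS[0]  # slowest PCIe vs fastest DDR5

print(f"PCIe / DDR5 latency ratio: {lowest_ratio:.1f}x to {highest_ratio:.1f}x")
```

With these inputs the ratio spans from 5x to a little over 14x, so a single multiplier is only a rough guide.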

In real-world applications, these latency differences manifest in various performance scenarios. Database management systems show pronounced sensitivity to memory latency, with DDR5's lower latency translating to 15-20% faster query execution times compared to systems relying heavily on PCIe-based storage for data retrieval. High-frequency trading platforms, where nanoseconds can determine competitive advantage, benefit substantially from DDR5's reduced latency profile.

Scientific computing applications present a more nuanced picture. While initial data loading benefits from DDR5's lower latency, sustained computational operations often become bandwidth-limited rather than latency-limited. In these scenarios, PCIe's superior bandwidth capabilities for connecting to specialized accelerators may outweigh its latency disadvantages.
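The shift from latency-limited to bandwidth-limited behavior can be sketched with a first-order model in which transfer time is a fixed latency plus payload size divided by bandwidth. Everything in the example is an illustrative assumption: a single 64-bit DDR5-6400 channel, a PCIe 5.0 x16 link, theoretical peak bandwidths, and the midpoints of the latency ranges quoted earlier. Real systems aggregate several memory channels and lose bandwidth to protocol overhead, so the crossover point will differ.

```python
# Simple transfer-time model: time = fixed latency + size / bandwidth.
# Bandwidths are theoretical peaks and latencies are midpoints of the ranges
# quoted in the text; every figure here is an illustrative assumption.

DDR5_MT_PER_S = 6400          # DDR5-6400
DDR5_CHANNEL_BYTES = 8        # one 64-bit channel (assumed configuration)
PCIE5_GT_PER_S = 32           # per lane
PCIE5_LANES = 16              # x16 link (assumed configuration)
PCIE5_ENCODING = 128 / 130    # 128b/130b line encoding

# 1 GB/s equals 1 byte per nanosecond, so these double as bytes/ns.
ddr5_gb_s = DDR5_MT_PER_S * DDR5_CHANNEL_BYTES / 1000
pcie5_gb_s = PCIE5_GT_PER_S * PCIE5_LANES * PCIE5_ENCODING / 8

DDR5_LATENCY_NS = 85.0        # midpoint of 70-100 ns
PCIE_LATENCY_NS = 750.0       # midpoint of 0.5-1 microsecond


def transfer_time_ns(size_bytes, latency_ns, bytes_per_ns):
    """Fixed latency plus serialization time at peak bandwidth."""
    return latency_ns + size_bytes / bytes_per_ns


for size in (64, 64 * 1024, 64 * 1024 * 1024):
    ddr5 = transfer_time_ns(size, DDR5_LATENCY_NS, ddr5_gb_s)
    pcie = transfer_time_ns(size, PCIE_LATENCY_NS, pcie5_gb_s)
    winner = "DDR5" if ddr5 < pcie else "PCIe"
    print(f"{size:>10} B  DDR5 {ddr5:>12.1f} ns  PCIe {pcie:>12.1f} ns  -> {winner}")
```

For small transfers the fixed latency dominates and the memory channel finishes first. For large bulk transfers the latency term becomes negligible and the link with the higher peak bandwidth wins, which matches the qualitative picture described above.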

Gaming performance metrics reveal that DDR5 systems typically deliver 5-12% higher frame rates in CPU-bound scenarios compared to equivalent systems using DDR4, with the improvement particularly noticeable in open-world games requiring rapid memory access. However, when GPU performance becomes the limiting factor, the benefits of reduced memory latency diminish accordingly.

Enterprise virtualization environments demonstrate significant improvements with DDR5, supporting 10-15% more virtual machines per host while maintaining equivalent response times. This efficiency gain directly translates to infrastructure cost savings and improved resource utilization in data center deployments.

The latency advantage of DDR5 becomes particularly valuable in edge computing applications, where real-time processing requirements demand minimal processing delays. Autonomous vehicles, industrial automation systems, and real-time analytics platforms all benefit from the reduced decision-making latency that faster memory access enables.
