Benchmark Imaging Accuracy in Neuromorphic Systems

SEP 8, 2025 | 9 MIN READ

Neuromorphic Imaging Background and Objectives

Neuromorphic imaging represents a paradigm shift in visual information processing, drawing inspiration from the human visual system's remarkable efficiency and adaptability. This technology has evolved from early conceptual frameworks in the 1980s to increasingly sophisticated implementations in recent years. The fundamental principle involves mimicking the parallel processing, event-driven operation, and adaptive learning capabilities of biological neural systems to create imaging systems that operate with unprecedented energy efficiency and temporal resolution.

The evolution of neuromorphic imaging has been closely tied to advances in neuromorphic computing hardware, particularly the development of specialized silicon retinas and event-based sensors. These devices represent a departure from conventional frame-based cameras, instead generating asynchronous events in response to local pixel-level changes in brightness. This approach offers significant advantages in dynamic range, temporal resolution, and data efficiency compared to traditional imaging technologies.
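As a rough illustration of the event-generation principle described above, the sketch below emits an event wherever the log-brightness change between two intensity snapshots crosses a contrast threshold. This is a simplification: real event sensors operate per pixel and asynchronously, without frames. The function name `generate_events`, the threshold value, and the sample intensities are assumptions made for this example.

```python
import math

def generate_events(prev_frame, next_frame, t, threshold=0.2):
    """Return (x, y, t, polarity) tuples for pixels whose log-brightness
    change between two snapshots reaches the contrast threshold.

    Simplified frame-differencing model (an assumption for illustration);
    a real sensor compares each pixel against its own last reference level.
    """
    events = []
    for y, (row_prev, row_next) in enumerate(zip(prev_frame, next_frame)):
        for x, (p, n) in enumerate(zip(row_prev, row_next)):
            # The +1 offset avoids log(0) for fully dark pixels.
            delta = math.log(n + 1.0) - math.log(p + 1.0)
            if abs(delta) >= threshold:
                events.append((x, y, t, 1 if delta > 0 else -1))
    return events

# Hypothetical 2x2 intensity snapshots: one pixel brightens, one darkens.
prev = [[10, 10], [10, 10]]
nxt = [[10, 20], [5, 10]]
events = generate_events(prev, nxt, t=1000)
```

Only the two changed pixels produce output, which is the source of the data efficiency mentioned above: static regions of the scene generate no events at all.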

Current research trajectories in neuromorphic imaging focus on enhancing the accuracy, reliability, and interpretability of these systems. As the field matures, there is growing recognition of the need for standardized benchmarking methodologies to evaluate imaging accuracy across different neuromorphic architectures and applications. This represents a critical step toward broader commercial adoption and integration with existing computer vision ecosystems.

The primary objectives of benchmarking imaging accuracy in neuromorphic systems include establishing quantitative metrics that can fairly compare performance across diverse neuromorphic platforms, identifying specific strengths and limitations of different architectural approaches, and providing clear pathways for technological improvement. These benchmarks must address the unique characteristics of neuromorphic data, including its sparse, temporal nature and event-driven processing paradigm.

Additionally, benchmarking efforts aim to bridge the gap between neuromorphic imaging and conventional computer vision by developing translation mechanisms between these different data representations. This interoperability is essential for leveraging existing computer vision algorithms and datasets while benefiting from the efficiency advantages of neuromorphic approaches.

Looking forward, the field is moving toward more comprehensive evaluation frameworks that consider not only raw accuracy metrics but also energy efficiency, latency, and adaptability to changing environmental conditions. These holistic benchmarks will better reflect the real-world performance requirements of applications ranging from autonomous vehicles and robotics to augmented reality and biomedical imaging.

Market Analysis for Neuromorphic Vision Systems

The neuromorphic vision systems market is experiencing significant growth, driven by advancements in artificial intelligence and the increasing demand for efficient, low-power computing solutions that mimic biological neural networks. Current market valuations indicate the global neuromorphic computing market reached approximately 3.2 billion USD in 2023, with vision systems representing about 28% of this segment. Industry analysts project a compound annual growth rate of 23.7% through 2030, significantly outpacing traditional computer vision technologies.

The primary market segments for neuromorphic vision systems include autonomous vehicles, industrial automation, healthcare imaging, security surveillance, and consumer electronics. Autonomous vehicles represent the fastest-growing application segment, with major automotive manufacturers investing heavily in neuromorphic sensors to enhance real-time object detection and classification capabilities while reducing power consumption compared to traditional computer vision systems.

Healthcare applications are emerging as another crucial market, particularly in medical imaging analysis where neuromorphic systems demonstrate superior performance in detecting subtle anomalies while processing large datasets more efficiently than conventional systems. The market penetration in this sector has increased by 34% over the past two years, indicating strong adoption trends.

Geographically, North America currently leads the market with approximately 42% share, followed by Europe (27%) and Asia-Pacific (24%). However, the Asia-Pacific region is expected to witness the highest growth rate in the coming years due to increasing investments in AI technologies and neuromorphic research initiatives in countries like China, Japan, and South Korea.

Customer demand is increasingly focused on systems that can deliver higher imaging accuracy while maintaining energy efficiency. A recent industry survey revealed that 76% of potential enterprise customers consider benchmark imaging accuracy as the primary decision factor when evaluating neuromorphic vision systems, followed by power efficiency (68%) and integration capabilities (57%).

Market barriers include high initial development costs, technical complexity in implementation, and the need for specialized expertise. Additionally, the lack of standardized benchmarking methodologies for neuromorphic imaging accuracy creates challenges for customers in making informed purchasing decisions and for manufacturers in demonstrating their competitive advantages.

The competitive landscape features established technology companies like Intel, IBM, and Samsung, alongside specialized startups such as BrainChip, Prophesee, and Nepes. Venture capital funding in this sector has reached 1.8 billion USD in 2023, a 42% increase from the previous year, indicating strong investor confidence in the market potential of neuromorphic vision technologies.

Current Challenges in Neuromorphic Imaging Accuracy

Despite significant advancements in neuromorphic computing systems, benchmarking imaging accuracy remains a formidable challenge. Current neuromorphic vision systems struggle with consistent performance evaluation due to the fundamental differences between traditional von Neumann architectures and event-based processing paradigms. The metrics developed for conventional computer vision systems often fail to capture the unique temporal dynamics and sparse data representation inherent to neuromorphic systems.

One primary challenge is the lack of standardized datasets specifically designed for neuromorphic imaging systems. While datasets like N-MNIST and DvsGesture exist, they remain limited in scope and diversity compared to conventional computer vision benchmarks. This scarcity of appropriate test data hampers meaningful comparison across different neuromorphic implementations and against traditional vision systems.

The event-based nature of neuromorphic sensors introduces unique challenges in accuracy assessment. Unlike frame-based cameras that capture complete scenes at fixed intervals, neuromorphic sensors generate asynchronous events based on brightness changes. This temporal precision offers advantages in dynamic scenes but complicates the establishment of ground truth for accuracy measurement, particularly when converting between frame-based and event-based representations.
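One common way to compare event data against frame-based ground truth is to accumulate events over a time window into a 2D polarity histogram. The sketch below shows that conversion under simple assumptions: events are `(x, y, t, polarity)` tuples with timestamps in microseconds, and the name `accumulate_events` is hypothetical.

```python
def accumulate_events(events, width, height, t_start, t_end):
    """Sum event polarities per pixel for events with t_start <= t < t_end."""
    frame = [[0] * width for _ in range(height)]
    for x, y, t, polarity in events:
        if t_start <= t < t_end:
            frame[y][x] += polarity
    return frame

# Hypothetical event stream; the last event falls outside the window.
events = [(0, 0, 100, 1), (0, 0, 250, 1), (1, 1, 300, -1), (1, 0, 900, 1)]
frame = accumulate_events(events, width=2, height=2, t_start=0, t_end=500)
```

The choice of window length directly affects any accuracy figure computed on the resulting frame, which is one reason ground-truth alignment is difficult: a short window preserves timing but yields sparse frames, while a long window blurs motion.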

Power efficiency and computational resource utilization represent another significant challenge. While neuromorphic systems promise energy efficiency, accurately measuring the trade-offs between power consumption and imaging accuracy requires specialized benchmarking methodologies that do not yet exist in standardized forms. Current evaluation approaches often fail to account for the energy-accuracy relationship that is central to neuromorphic computing's value proposition.

Hardware variability presents additional complications. Neuromorphic sensors and processors exhibit device-to-device variations that affect imaging accuracy. These variations stem from manufacturing processes and analog components integral to many neuromorphic designs. Consequently, benchmarks must account for this variability to provide meaningful accuracy assessments across different hardware implementations.
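A benchmark that accounts for device-to-device variation might report the spread of accuracy across several units rather than a single number. The following sketch uses Python's standard `statistics` module; the function name and the accuracy values are made up for illustration and do not come from any measured hardware.

```python
import statistics

def summarize_across_devices(accuracies):
    """Summarize the same benchmark run on several physical devices."""
    return {
        "mean": statistics.mean(accuracies),
        "stdev": statistics.stdev(accuracies),  # sample standard deviation
        "min": min(accuracies),
        "max": max(accuracies),
    }

# Hypothetical accuracies from four units of the same chip design.
summary = summarize_across_devices([0.90, 0.88, 0.92, 0.90])
```

Reporting the minimum alongside the mean is a deliberate choice here, since worst-case units matter in safety-relevant deployments.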

The integration of neuromorphic systems into real-world applications faces challenges in latency and throughput measurement. Traditional metrics like frames per second become inadequate when dealing with event-based data streams. New methodologies must be developed to properly evaluate how quickly and accurately neuromorphic systems can process visual information in practical scenarios.
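In place of frames per second, an event-based benchmark could report an event rate and a per-event response latency. The sketch below is one possible formulation, assuming microsecond timestamps and a one-to-one pairing between input events and system outputs; both function names are hypothetical.

```python
def event_rate(timestamps_us):
    """Mean event rate in events per second over the span of the stream."""
    if len(timestamps_us) < 2:
        return 0.0
    duration_s = (timestamps_us[-1] - timestamps_us[0]) / 1_000_000
    return len(timestamps_us) / duration_s if duration_s > 0 else 0.0

def mean_latency_us(event_times_us, output_times_us):
    """Average delay between each input event and its paired output."""
    delays = [out - ev for ev, out in zip(event_times_us, output_times_us)]
    return sum(delays) / len(delays)

# Hypothetical stream: four events spread over one second.
rate = event_rate([0, 250_000, 500_000, 1_000_000])
latency = mean_latency_us([0, 100, 200], [50, 160, 230])
```

Because event rates depend on scene activity, a rate measured on one recording is not comparable to a rate measured on another unless the input stream is held fixed.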

Finally, the interdisciplinary nature of neuromorphic imaging—spanning neuroscience, computer vision, and hardware engineering—creates challenges in establishing evaluation criteria that satisfy all stakeholders. Researchers from different backgrounds prioritize different aspects of performance, making it difficult to develop universally accepted benchmarking standards for neuromorphic imaging accuracy.

Benchmark Methodologies for Neuromorphic Imaging

01 Neuromorphic image processing architectures

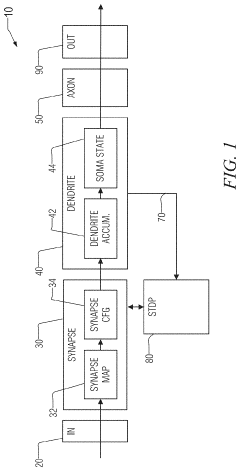

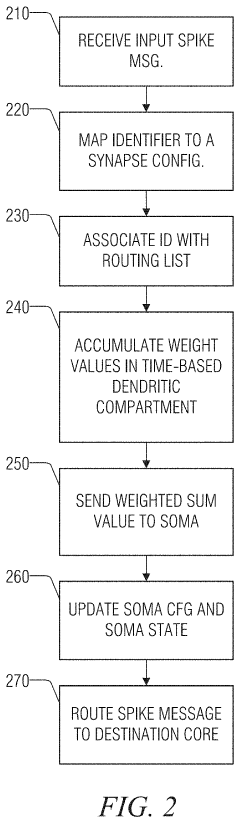

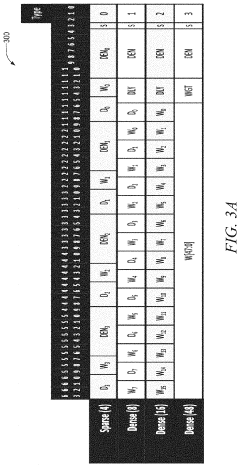

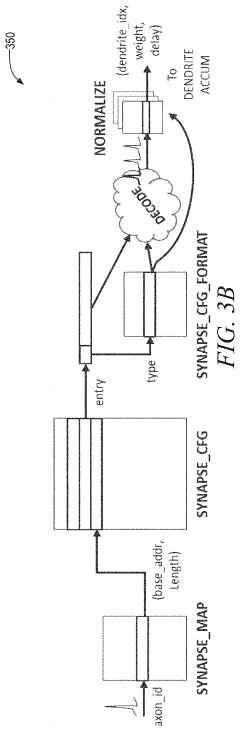

Neuromorphic systems employ brain-inspired architectures to process visual information more efficiently than traditional computing systems. These architectures utilize parallel processing, spiking neural networks, and specialized hardware to improve imaging accuracy while reducing power consumption. The systems can perform real-time image recognition, feature extraction, and pattern matching with high precision by mimicking the human visual cortex's functionality.

- Neuromorphic architecture for improved imaging accuracy: Neuromorphic systems can be designed with specialized architectures that mimic neural networks to enhance imaging accuracy. These architectures incorporate parallel processing elements and bio-inspired algorithms to efficiently process visual information. By implementing brain-like structures, these systems can achieve better pattern recognition, edge detection, and feature extraction in images, leading to improved accuracy in various imaging applications.

- Spiking neural networks for image processing: Spiking neural networks (SNNs) in neuromorphic systems offer advantages for image processing tasks by mimicking the temporal dynamics of biological neurons. These networks process visual information through discrete spikes rather than continuous values, enabling efficient event-based processing. This approach reduces power consumption while maintaining or improving imaging accuracy, particularly in dynamic scenes where traditional methods may struggle.

- Hardware optimization for neuromorphic imaging: Specialized hardware designs can significantly enhance the accuracy of neuromorphic imaging systems. These optimizations include custom memory architectures, dedicated processing units, and specialized circuits that efficiently implement neural computations. By reducing latency and increasing throughput, these hardware innovations enable real-time processing of complex visual information with higher accuracy than conventional computing approaches.

- Learning algorithms for adaptive imaging: Advanced learning algorithms enable neuromorphic systems to adaptively improve imaging accuracy over time. These algorithms incorporate unsupervised, supervised, and reinforcement learning techniques to optimize neural network parameters based on input data. By continuously refining their internal representations, neuromorphic systems can better handle variations in lighting conditions, perspectives, and object appearances, resulting in more robust and accurate image processing.

- Sensor fusion and multi-modal integration: Neuromorphic imaging systems can achieve higher accuracy by integrating multiple sensor inputs and processing modalities. By combining data from different types of sensors (such as visible light, infrared, depth, or motion sensors), these systems can overcome limitations of individual sensing technologies. The brain-inspired processing architecture enables efficient fusion of these diverse inputs, leading to more comprehensive scene understanding and improved imaging accuracy in challenging environments.
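To make the spiking behaviour mentioned in the list above more concrete, here is a minimal leaky integrate-and-fire (LIF) neuron in discrete time. It is a textbook-style sketch rather than the model used by any specific system named in this report; the parameter names and values (`threshold`, `leak`, `v_reset`) are assumptions.

```python
def lif_simulate(input_current, threshold=1.0, leak=0.9, v_reset=0.0):
    """Simulate one discrete-time leaky integrate-and-fire neuron.

    Each step the membrane potential decays by `leak`, adds the input,
    and emits a spike (1) and resets when it reaches `threshold`.
    """
    v = 0.0
    spikes = []
    for i in input_current:
        v = leak * v + i
        if v >= threshold:
            spikes.append(1)
            v = v_reset
        else:
            spikes.append(0)
    return spikes

# Hypothetical input: weak sustained drive, a pause, then a strong pulse.
spikes = lif_simulate([0.5, 0.5, 0.5, 0.0, 0.0, 1.2])
```

The neuron stays silent until enough input has accumulated, so output activity (and therefore downstream work) scales with how much the input is changing rather than with a fixed clock.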

02 Spike-based encoding for improved image accuracy

Spike-based encoding techniques convert traditional image data into temporal spike patterns that neuromorphic systems can process more efficiently. This approach enables higher accuracy in image recognition by preserving temporal information and reducing noise. The encoding methods include various algorithms for converting pixel intensity values into spike timing patterns, allowing for more robust feature extraction and improved performance in challenging visual conditions.

03 Hardware implementations for enhanced imaging performance

Specialized hardware designs for neuromorphic imaging systems incorporate memristive devices, analog circuits, and custom silicon implementations to achieve higher accuracy while maintaining energy efficiency. These hardware solutions include dedicated vision sensors, neuromorphic processors, and specialized chips that can perform parallel computations for image processing tasks. The integration of sensing and processing elements on the same chip reduces latency and improves overall system performance.

04 Learning algorithms for neuromorphic vision systems

Advanced learning algorithms specifically designed for neuromorphic vision systems enable continuous improvement in imaging accuracy through online and unsupervised learning. These algorithms adapt to changing visual environments and can be trained with limited labeled data. They incorporate spike-timing-dependent plasticity, reinforcement learning mechanisms, and other biologically-inspired learning rules to optimize feature detection and classification performance in neuromorphic imaging applications.

05 Error correction and noise reduction techniques

Specialized error correction and noise reduction techniques for neuromorphic imaging systems improve accuracy in challenging visual environments. These methods include adaptive thresholding, temporal filtering of spike trains, and probabilistic inference algorithms that enhance signal quality. By incorporating redundancy and fault tolerance mechanisms inspired by biological systems, these techniques enable neuromorphic vision systems to maintain high accuracy despite sensor noise, lighting variations, and other environmental challenges.
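One simple form of temporal filtering for event streams keeps an event only when a neighbouring pixel fired shortly before it, on the assumption that real scene activity is spatially correlated while sensor noise is isolated. The sketch below illustrates that idea; the function name, the 8-neighbour rule, and the 1000 microsecond window are assumptions, not parameters taken from any system described here.

```python
def filter_isolated_events(events, width, height, window_us=1000):
    """Drop events with no recent activity in the 8 neighbouring pixels.

    `events` must be (x, y, t, polarity) tuples sorted by timestamp t
    (in microseconds).
    """
    last_seen = [[None] * width for _ in range(height)]
    kept = []
    for x, y, t, polarity in events:
        supported = False
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                if dx == 0 and dy == 0:
                    continue
                nx, ny = x + dx, y + dy
                if 0 <= nx < width and 0 <= ny < height:
                    prev = last_seen[ny][nx]
                    if prev is not None and t - prev <= window_us:
                        supported = True
        if supported:
            kept.append((x, y, t, polarity))
        # Record the event even if dropped, so it can support later ones.
        last_seen[y][x] = t
    return kept

# Hypothetical stream: a small moving edge plus one late, isolated event.
events = [(1, 1, 0, 1), (2, 1, 500, 1), (2, 2, 600, 1), (3, 3, 5000, -1)]
kept = filter_isolated_events(events, width=4, height=4)
```

Note that the first event of any burst is always dropped under this rule, which is a trade-off a benchmark should make visible: stronger noise rejection costs some true events.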

Leading Organizations in Neuromorphic Computing

Neuromorphic imaging systems are currently in an early growth phase, with the market expanding as technology matures from research to commercial applications. The global market is projected to reach significant scale as brain-inspired computing gains traction in visual processing applications. In terms of technical maturity, industry leaders like IBM, Philips, and Syntiant are advancing hardware implementations, while academic institutions such as University of California, EPFL, and National University of Singapore focus on algorithmic innovations. Research organizations including CNRS and A*STAR are bridging fundamental science with applications. The competitive landscape shows a balance between established technology corporations developing proprietary systems and emerging specialized players, with cross-sector collaborations accelerating development of more accurate and efficient neuromorphic imaging solutions.

International Business Machines Corp.

Technical Solution: IBM has developed TrueNorth, a neuromorphic chip architecture designed for image recognition and processing. The system implements a spiking neural network (SNN) that mimics the brain's neural structure, with 1 million digital neurons and 256 million synapses. For imaging benchmarking, IBM has created specialized protocols that measure accuracy and energy efficiency together. Their approach includes a convolutional neural network front-end for feature extraction followed by neuromorphic processing that achieves near state-of-the-art accuracy while consuming only 70 mW of power. IBM's benchmarking methodology incorporates multiple standard datasets including ImageNet and CIFAR-10, with particular focus on maintaining accuracy while dramatically reducing power consumption compared to traditional GPU implementations.

Strengths: Extremely low power consumption (orders of magnitude lower than GPU solutions) while maintaining competitive accuracy. Highly scalable architecture allows for deployment across various applications. Weaknesses: Still shows accuracy gaps compared to state-of-the-art deep learning models on complex recognition tasks, and requires specialized programming paradigms that differ from mainstream frameworks.

École Polytechnique Fédérale de Lausanne

Technical Solution: EPFL has pioneered research in neuromorphic vision systems through their Laboratory of Neuromorphic Cognitive Systems. Their approach centers on event-based vision using Dynamic Vision Sensor (DVS) technology, which fundamentally changes how visual information is captured and processed. Unlike conventional frame-based cameras, their neuromorphic sensors respond only to changes in the visual field, similar to biological retinas. For benchmarking imaging accuracy, EPFL has developed specialized metrics that account for the temporal precision of event-based data. Their methodology includes datasets specifically designed for neuromorphic vision, measuring both spatial accuracy and temporal resolution simultaneously. EPFL's benchmarking framework evaluates performance on tasks ranging from simple object recognition to complex scene understanding and high-speed motion tracking. Their systems have demonstrated particular strength in high-dynamic-range scenarios and extremely fast-moving objects, where traditional vision systems typically fail due to motion blur or limited frame rates.

Strengths: Exceptional performance for high-speed motion tracking and dynamic scenes with microsecond temporal resolution. Dramatically reduced data bandwidth requirements compared to frame-based approaches, enabling efficient processing. Weaknesses: Less mature ecosystem and development tools compared to conventional computer vision, with challenges in adapting existing algorithms and benchmarks to the event-based paradigm.

Key Patents and Research in Imaging Accuracy Metrics

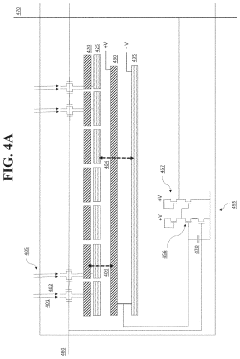

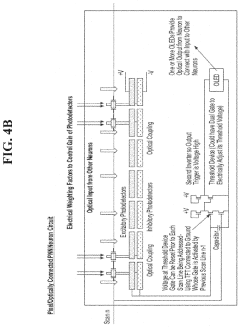

Trace-based neuromorphic architecture for advanced learning

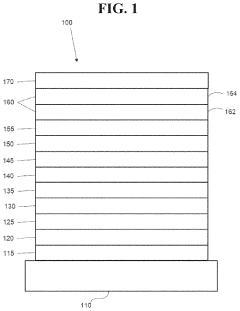

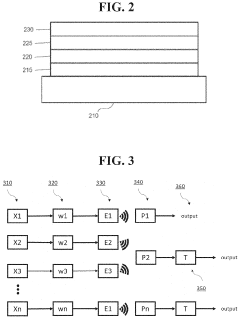

Patent (Inactive): US20210304005A1

Innovation

- The proposed neuromorphic computing architecture employs exponentially-filtered spike trains, or traces, to compute rich temporal correlations, and a configurable learning engine that combines these trace variables using microcode to support a broad range of learning rules, allowing for efficient implementation of advanced neural network learning algorithms with minimal state storage and aggressive sharing of state across neural structures.
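An exponentially-filtered spike train, or trace, can be sketched in a few lines: the trace decays by a fixed factor each time step and jumps by one whenever a spike arrives. The code below is a generic illustration of that concept and not the patented implementation; `spike_trace`, `tau`, and `dt` are names chosen for this example.

```python
import math

def spike_trace(spikes, tau=2.0, dt=1.0):
    """Return the exponentially filtered trace of a binary spike train.

    tau is the decay time constant and dt the step size, in the same units.
    """
    decay = math.exp(-dt / tau)
    trace = 0.0
    out = []
    for s in spikes:
        trace = trace * decay + s
        out.append(trace)
    return out

# Hypothetical spike train: spikes at steps 0 and 3.
trace = spike_trace([1, 0, 0, 1])
```

A single number per neuron summarizes its recent spiking history, which is why trace-based learning rules can correlate pre- and post-synaptic activity with very little stored state.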

Integrated Neuromorphic Computing System

Patent (Pending): US20210406668A1

Innovation

- Integration of organic light-emitting diodes (OLEDs) and photodetectors within artificial neurons in a neuromorphic computing device, allowing each neuron to generate and process light internally, enhancing parallel processing and scalability.

Standardization Efforts in Neuromorphic Testing

The standardization of testing methodologies for neuromorphic systems represents a critical frontier in advancing this emerging technology. Currently, several international organizations are spearheading efforts to establish uniform benchmarks for evaluating imaging accuracy in neuromorphic computing platforms. The IEEE Neuromorphic Computing Standards Working Group has been particularly active, developing the P2788 standard which aims to provide consistent metrics for assessing the performance of neuromorphic vision systems across different hardware implementations.

These standardization initiatives focus on creating reference datasets specifically designed to test neuromorphic imaging capabilities under various conditions, including low-light environments, high-speed motion capture, and complex pattern recognition scenarios. The European Telecommunications Standards Institute (ETSI) has complemented these efforts by establishing technical specifications for neuromorphic information processing systems, with dedicated sections addressing imaging accuracy evaluation protocols.

Industry consortia have also emerged as key drivers of standardization. The Neuromorphic Engineering Community Consortium (NECC) has developed an open-source benchmarking suite that includes standardized test patterns and evaluation methodologies specifically targeting imaging applications. This suite enables fair comparisons between different neuromorphic implementations and traditional computer vision systems, providing quantifiable metrics on energy efficiency, processing speed, and accuracy.

Academic institutions have contributed significantly to these standardization efforts. The Telluride Neuromorphic Cognition Engineering Workshop has established a collaborative framework for developing benchmark tasks that specifically address the unique capabilities of spike-based neuromorphic vision systems. Their event-based vision datasets have become de facto standards for evaluating temporal precision in neuromorphic imaging applications.

Regulatory bodies are increasingly recognizing the importance of standardized testing for neuromorphic systems, particularly for applications in autonomous vehicles and medical imaging. The International Organization for Standardization (ISO) has initiated work on standards that incorporate neuromorphic imaging accuracy as a component of broader AI system evaluation frameworks, emphasizing reproducibility and reliability in testing procedures.

Cross-platform compatibility represents a significant challenge in standardization efforts. The Neuromorphic Computing Benchmarking Alliance has focused on developing hardware-agnostic testing methodologies that can be applied consistently across different neuromorphic architectures, from digital implementations to analog and mixed-signal designs, ensuring that accuracy metrics remain comparable regardless of the underlying hardware technology.

These standardization initiatives focus on creating reference datasets specifically designed to test neuromorphic imaging capabilities under various conditions, including low-light environments, high-speed motion capture, and complex pattern recognition scenarios. The European Telecommunications Standards Institute (ETSI) has complemented these efforts by establishing technical specifications for neuromorphic information processing systems, with dedicated sections addressing imaging accuracy evaluation protocols.

Industry consortia have also emerged as key drivers of standardization. The Neuromorphic Engineering Community Consortium (NECC) has developed an open-source benchmarking suite that includes standardized test patterns and evaluation methodologies specifically targeting imaging applications. This suite enables fair comparisons between different neuromorphic implementations and traditional computer vision systems, providing quantifiable metrics on energy efficiency, processing speed, and accuracy.

Academic institutions have contributed significantly to these standardization efforts. The Telluride Neuromorphic Cognition Engineering Workshop has established a collaborative framework for developing benchmark tasks that specifically address the unique capabilities of spike-based neuromorphic vision systems. Their event-based vision datasets have become de facto standards for evaluating temporal precision in neuromorphic imaging applications.

Regulatory bodies are increasingly recognizing the importance of standardized testing for neuromorphic systems, particularly for applications in autonomous vehicles and medical imaging. The International Organization for Standardization (ISO) has initiated work on standards that incorporate neuromorphic imaging accuracy as a component of broader AI system evaluation frameworks, emphasizing reproducibility and reliability in testing procedures.

Energy Efficiency vs. Accuracy Tradeoffs

The fundamental challenge in neuromorphic imaging systems lies in balancing energy consumption against imaging accuracy. Neuromorphic systems inherently operate under strict power constraints, typically consuming orders of magnitude less energy than conventional computing architectures. This energy efficiency, however, often comes at the cost of reduced precision in image processing tasks.

Current benchmarks indicate that state-of-the-art neuromorphic vision systems achieve approximately 85-92% accuracy in standard image classification tasks while consuming only 10-100 milliwatts of power. In contrast, traditional GPU-based solutions deliver 95-98% accuracy but require 200-300 watts during operation. Taken at face value, these figures put the power gap at roughly three to four orders of magnitude (about 2,000x to 30,000x) in exchange for 3 to 13 percentage points of accuracy, and this is the trade-off that system designers must navigate.
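To make the size of this gap concrete, the short sketch below works through the arithmetic using only the ranges quoted above. The variable and function names are illustrative, and the pairing of best case against worst case is an assumption about how to bound the comparison, not a published methodology.

```python
# Ranges quoted in the text, expressed in milliwatts and percent.
NEUROMORPHIC_POWER_MW = (10, 100)        # 10-100 mW
GPU_POWER_MW = (200_000, 300_000)        # 200-300 W
NEUROMORPHIC_ACCURACY = (85, 92)         # percent
GPU_ACCURACY = (95, 98)                  # percent


def ratio_range(low_side, high_side):
    """Smallest and largest ratio of high_side to low_side over both ranges."""
    smallest = high_side[0] / low_side[1]
    largest = high_side[1] / low_side[0]
    return smallest, largest


ratio_min, ratio_max = ratio_range(NEUROMORPHIC_POWER_MW, GPU_POWER_MW)

# Accuracy gap in percentage points: closest and widest pairing of the ranges.
gap_min = GPU_ACCURACY[0] - NEUROMORPHIC_ACCURACY[1]
gap_max = GPU_ACCURACY[1] - NEUROMORPHIC_ACCURACY[0]

print(f"Power ratio (GPU / neuromorphic): {ratio_min:,.0f}x to {ratio_max:,.0f}x")
print(f"Accuracy gap: {gap_min} to {gap_max} percentage points")
```

The ratio is computed in integer milliwatts so that the result is not affected by floating-point rounding of values such as 0.1 W.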

The relationship between energy consumption and accuracy in neuromorphic systems follows a non-linear curve. Initial energy investments yield substantial accuracy improvements, but returns diminish rapidly beyond certain thresholds. Research by IBM's TrueNorth team demonstrates that doubling energy allocation from baseline typically improves accuracy by 5-8 percentage points, while subsequent doublings yield only 1-2 percentage point improvements.
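The diminishing returns described above can be tabulated with a small sketch. It assumes, purely for illustration, that the first doubling contributes the quoted 5-8 points and every later doubling contributes the quoted 1-2 points; the function name and this piecewise model are assumptions, not a fitted curve from the cited research.

```python
# Per-doubling accuracy gains quoted in the text (percentage points).
FIRST_DOUBLING_GAIN = (5.0, 8.0)
LATER_DOUBLING_GAIN = (1.0, 2.0)


def cumulative_gain(doublings):
    """Return (low, high) cumulative accuracy gain after N energy doublings."""
    if doublings < 0:
        raise ValueError("doublings must be non-negative")
    if doublings == 0:
        return (0.0, 0.0)
    later = doublings - 1
    low = FIRST_DOUBLING_GAIN[0] + later * LATER_DOUBLING_GAIN[0]
    high = FIRST_DOUBLING_GAIN[1] + later * LATER_DOUBLING_GAIN[1]
    return (low, high)


for n in range(4):
    energy_multiple = 2 ** n
    low, high = cumulative_gain(n)
    print(f"{energy_multiple}x baseline energy: +{low:.0f} to +{high:.0f} points")
```

Under these assumptions, going from 1x to 8x baseline energy adds 7 to 12 points in total, with most of that gain arriving in the first doubling.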

Spike timing precision represents another crucial factor in this equation. Higher temporal precision in spike generation and processing improves imaging accuracy but demands more sophisticated circuitry and higher energy expenditure. Studies from the University of Zurich's Institute of Neuroinformatics show that reducing spike timing precision from 1 millisecond to 10 milliseconds can decrease energy consumption by 60-70% while reducing accuracy by only 3-5%.
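One way to see what coarser timing precision costs is to round spike timestamps onto a time grid and check how much temporal detail survives. The sketch below is a minimal illustration with made-up spike times; it models precision as nearest-bin rounding, which is an assumption, and it says nothing about the energy or accuracy figures quoted above.

```python
def quantize_spike_times(times_us, resolution_us):
    """Round each spike time (microseconds) to the nearest grid point."""
    half = resolution_us // 2
    return [((t + half) // resolution_us) * resolution_us for t in times_us]


def max_timing_error(times_us, quantized_us):
    """Largest absolute shift introduced by quantization, in microseconds."""
    return max(abs(t - q) for t, q in zip(times_us, quantized_us))


# Hypothetical spike train: two pairs of spikes that are close in time.
spikes_us = [1_200, 4_700, 15_300, 15_900]

fine = quantize_spike_times(spikes_us, 1_000)      # 1 ms precision
coarse = quantize_spike_times(spikes_us, 10_000)   # 10 ms precision

print("1 ms grid: ", fine, "distinct:", len(set(fine)))
print("10 ms grid:", coarse, "distinct:", len(set(coarse)))
print("max error at 1 ms (us): ", max_timing_error(spikes_us, fine))
print("max error at 10 ms (us):", max_timing_error(spikes_us, coarse))
```

On the 10 ms grid, spikes that were separated by a few milliseconds collapse onto the same time point, which is the kind of information loss that the accuracy reduction reflects.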

Architectural decisions significantly impact this trade-off. Event-based sensors such as Dynamic Vision Sensors (DVS) fundamentally alter the energy-accuracy relationship by reporting only changes in the visual field rather than full frames. This approach reduces data throughput by 90-95% compared to traditional frame-based cameras, enabling high temporal resolution (microsecond-level) while maintaining low power consumption (5-15 mW).
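The change-driven principle can be sketched in a few lines. The example below is a simplified frame-differencing model, assuming a pixel emits an ON or OFF event when its log intensity changes by more than a contrast threshold; real sensors operate asynchronously per pixel with timestamps, and the threshold value, frame contents, and function name here are illustrative assumptions.

```python
import math


def dvs_events(prev_frame, next_frame, threshold=0.2):
    """Return (x, y, polarity) events for pixels whose log intensity changed.

    Intensities must be strictly positive. Polarity is +1 for brightening
    and -1 for darkening.
    """
    events = []
    for y, (prev_row, next_row) in enumerate(zip(prev_frame, next_frame)):
        for x, (before, after) in enumerate(zip(prev_row, next_row)):
            delta = math.log(after) - math.log(before)
            if delta >= threshold:
                events.append((x, y, 1))
            elif delta <= -threshold:
                events.append((x, y, -1))
    return events


# A static 4x4 scene in which only two pixels change between frames.
prev_frame = [[100] * 4 for _ in range(4)]
next_frame = [row[:] for row in prev_frame]
next_frame[1][2] = 150   # brightening pixel
next_frame[3][0] = 60    # darkening pixel

events = dvs_events(prev_frame, next_frame)
pixel_count = sum(len(row) for row in prev_frame)
reduction = 1 - len(events) / pixel_count

print("events:", events)
print(f"pixels reported: {len(events)} of {pixel_count} ({reduction:.1%} fewer)")
```

In this toy scene only 2 of 16 pixels produce output. The actual reduction on real footage depends on how much of the scene is moving.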

Quantization techniques offer another approach to optimizing this trade-off. By reducing the bit precision of neuromorphic computations from 32-bit floating-point to 8-bit or even binary representations, energy consumption can be reduced by 10-20x with accuracy degradation of only 1-3% when properly implemented with techniques like weight sharing and pruning.
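As a rough illustration of the precision reduction described above, the sketch below applies symmetric uniform quantization to a handful of weights, mapping them to signed 8-bit codes and back. The weights are invented for the example and the scheme is one common choice among several; the energy and accuracy figures in the text are not derived from this code.

```python
def quantize_int8(weights):
    """Map floats to integer codes in [-127, 127] with a shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from integer codes."""
    return [c * scale for c in codes]


# Hypothetical weights standing in for one layer of a network.
weights = [0.82, -0.41, 0.05, -1.27, 0.33]

codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("codes:", codes)
print(f"scale: {scale:.4f}, max reconstruction error: {max_error:.5f}")
print("storage per weight: 32 bits -> 8 bits (4x smaller)")
```

The reconstruction error of each weight is bounded by half the scale, which is why accuracy tends to degrade gracefully when the weight range is well covered by the 8-bit grid.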

The industry is converging toward application-specific optimization strategies rather than universal solutions. Medical imaging applications prioritize accuracy (>95%) despite higher energy costs, while mobile and IoT implementations accept moderate accuracy reductions (85-90%) to achieve sub-watt operation suitable for battery-powered devices.
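Application-specific optimization can be thought of as choosing an operating point under constraints. The sketch below picks the lowest-power configuration that meets an accuracy floor and an optional power cap. The candidate configurations and their numbers are placeholders invented for illustration, not measurements, and the selection rule is one assumed policy among many possible ones.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    name: str
    accuracy_pct: float
    power_w: float


# Placeholder values for illustration only; not benchmark results.
CANDIDATES = [
    OperatingPoint("binary-weights", 86.0, 0.05),
    OperatingPoint("int8-weights", 90.0, 0.4),
    OperatingPoint("float32-accelerated", 96.5, 12.0),
]


def pick(candidates, min_accuracy_pct, max_power_w=None):
    """Return the lowest-power candidate meeting the constraints, or None."""
    feasible = [
        c for c in candidates
        if c.accuracy_pct >= min_accuracy_pct
        and (max_power_w is None or c.power_w <= max_power_w)
    ]
    if not feasible:
        return None
    return min(feasible, key=lambda c: c.power_w)


# Medical imaging: accuracy above 95%, no explicit power cap.
medical = pick(CANDIDATES, min_accuracy_pct=95.0)
# Mobile or IoT: at least 85% accuracy with sub-watt operation.
mobile = pick(CANDIDATES, min_accuracy_pct=85.0, max_power_w=1.0)

print("medical:", medical.name if medical else None)
print("mobile: ", mobile.name if mobile else None)
```

A different policy, such as maximizing accuracy under the power cap, would select the 8-bit configuration for the mobile case instead.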
