How Neuromorphic Chips Enhance Computational Simplicity

SEP 5, 2025 · 9 MIN READ

Neuromorphic Computing Background and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of the human brain. This approach emerged in the late 1980s when Carver Mead introduced the concept of using electronic analog circuits to mimic neuro-biological architectures. Since then, the field has evolved significantly, transitioning from theoretical frameworks to practical implementations in hardware.

The evolution of neuromorphic computing has been driven by the limitations of traditional von Neumann architectures, particularly in terms of energy efficiency and processing capabilities for certain types of workloads. Traditional computing systems face the "von Neumann bottleneck," where the separation between processing and memory components creates inefficiencies, especially for parallel processing tasks that neural networks excel at.

Recent technological advancements have accelerated development in this field, with significant breakthroughs in materials science, integrated circuit design, and neural network algorithms. The convergence of these disciplines has enabled the creation of more sophisticated neuromorphic chips that more closely emulate the brain's neural networks, synapses, and learning mechanisms.

The primary objective of neuromorphic computing is to achieve computational simplicity through brain-inspired design principles. This includes implementing massively parallel processing, co-locating memory and computation, and enabling event-driven computation. These features allow neuromorphic systems to process sensory data and perform pattern recognition tasks with remarkable efficiency compared to conventional computing systems.

Another critical goal is to dramatically reduce power consumption while maintaining or improving computational capabilities. The human brain operates on approximately 20 watts of power while performing complex cognitive tasks that would require supercomputers consuming megawatts of electricity. Neuromorphic chips aim to close this efficiency gap by leveraging similar architectural principles.

Looking forward, the field is trending toward more sophisticated integration of learning capabilities directly into hardware. This includes implementing spike-timing-dependent plasticity (STDP) and other biologically plausible learning rules that allow systems to adapt and learn from their environments without explicit programming.
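
As a rough illustration of what a local learning rule such as STDP involves, the following Python sketch implements a common pair-based form: a synapse is strengthened when the presynaptic spike precedes the postsynaptic spike and weakened otherwise, with an exponential dependence on the time difference. The constants (`A_PLUS`, `A_MINUS`, `TAU_MS`) and the function names are illustrative assumptions, not values taken from any particular chip.

```python
import math

# Illustrative constants (assumptions, not taken from any specific hardware).
A_PLUS = 0.01    # maximum potentiation step
A_MINUS = 0.012  # maximum depression step
TAU_MS = 20.0    # decay time constant in milliseconds


def stdp_delta(t_pre_ms: float, t_post_ms: float) -> float:
    """Weight change for one pre/post spike pair (pair-based STDP)."""
    dt = t_post_ms - t_pre_ms
    if dt > 0:
        # Pre fired before post: potentiate, more strongly for small dt.
        return A_PLUS * math.exp(-dt / TAU_MS)
    if dt < 0:
        # Post fired before pre: depress.
        return -A_MINUS * math.exp(dt / TAU_MS)
    return 0.0


def apply_stdp(weight: float, t_pre_ms: float, t_post_ms: float,
               w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Update a weight using only information local to the synapse."""
    new_weight = weight + stdp_delta(t_pre_ms, t_post_ms)
    return min(w_max, max(w_min, new_weight))


if __name__ == "__main__":
    w = 0.5
    w = apply_stdp(w, t_pre_ms=10.0, t_post_ms=15.0)  # causal pair: weight increases
    print(f"after causal pair: {w:.4f}")
    w = apply_stdp(w, t_pre_ms=30.0, t_post_ms=22.0)  # anti-causal pair: weight decreases
    print(f"after anti-causal pair: {w:.4f}")
```

Note that the update uses only the two spike times and the current weight, which is what makes rules of this kind attractive for on-chip learning.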

The ultimate vision for neuromorphic computing extends beyond mere efficiency improvements to enabling entirely new computing paradigms. These systems could potentially support advanced artificial intelligence applications, real-time sensory processing for robotics, and ultra-low-power edge computing solutions that can operate continuously on limited energy resources.

Market Demand Analysis for Brain-Inspired Computing

The brain-inspired computing market is experiencing significant growth driven by the increasing demand for more efficient and intelligent computing solutions. Current projections indicate the neuromorphic computing market will reach approximately $8.9 billion by 2028, with a compound annual growth rate of 23.7% from 2023. This remarkable growth reflects the expanding applications across multiple industries seeking computational solutions that mimic human brain functionality.

The primary market demand stems from artificial intelligence and machine learning applications requiring real-time processing capabilities with lower power consumption. Traditional von Neumann architecture faces fundamental limitations in handling complex AI workloads efficiently, creating a substantial market gap that neuromorphic chips aim to fill. Industries including autonomous vehicles, robotics, and IoT devices particularly benefit from the computational simplicity offered by these brain-inspired architectures.

Healthcare represents another significant market segment, with neuromorphic computing showing promise in medical imaging analysis, patient monitoring systems, and drug discovery processes. The ability to process complex patterns efficiently while consuming minimal power makes these chips ideal for portable medical devices and point-of-care diagnostics, a market expected to grow at 19.2% annually through 2027.

The telecommunications sector demonstrates increasing interest in neuromorphic solutions for network optimization, signal processing, and real-time data analysis. As 5G and eventually 6G networks expand, the demand for edge computing solutions capable of processing massive data volumes with minimal latency continues to rise, creating additional market opportunities for brain-inspired computing architectures.

Financial services and cybersecurity applications represent emerging markets with substantial growth potential. Neuromorphic systems excel at anomaly detection and pattern recognition, making them valuable for fraud detection, algorithmic trading, and threat identification. Market analysis indicates financial institutions are increasing investments in these technologies by approximately 27% annually.

Geographically, North America currently leads market demand, accounting for roughly 42% of global neuromorphic computing investments. However, the Asia-Pacific region shows the fastest growth trajectory, with China, Japan, and South Korea making substantial investments in research and commercialization efforts. European markets focus primarily on industrial applications and automotive implementations of brain-inspired computing technologies.

Consumer electronics manufacturers increasingly explore neuromorphic solutions for next-generation devices requiring on-device intelligence with minimal power requirements. This segment represents a potentially massive market opportunity as these chips transition from specialized applications to mainstream consumer products over the next five years.

Current Neuromorphic Chip Technology Landscape

The neuromorphic computing landscape has evolved significantly over the past decade, with major technological advancements from both academic institutions and industry leaders. Currently, several prominent neuromorphic chip architectures dominate the market, each with distinct approaches to brain-inspired computing. IBM's TrueNorth represents one of the pioneering commercial neuromorphic systems, featuring 1 million digital neurons and 256 million synapses organized across 4,096 neurosynaptic cores, optimized for pattern recognition tasks while consuming minimal power.
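
The rounded TrueNorth figures above can be cross-checked with simple arithmetic. The sketch below assumes the commonly reported per-core organization of TrueNorth (256 neurons per core, each core holding a 256 × 256 synaptic crossbar); those per-core values are not stated in this article and are treated here as an assumption.

```python
# Per-core figures are an assumption based on commonly reported TrueNorth specs.
CORES = 4096
NEURONS_PER_CORE = 256
SYNAPSES_PER_CORE = 256 * 256  # one crossbar of 256 inputs x 256 neurons

total_neurons = CORES * NEURONS_PER_CORE
total_synapses = CORES * SYNAPSES_PER_CORE

print(f"neurons:  {total_neurons:,}")   # 1,048,576 (about 1 million)
print(f"synapses: {total_synapses:,}")  # 268,435,456 (256 x 2**20)
```

Under this assumption, "1 million" and "256 million" are rounded powers of two rather than exact decimal counts.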

Intel's Loihi chip series has demonstrated remarkable progress, with the second-generation Loihi 2 offering 1 million neurons and implementing a more sophisticated neuromorphic architecture that supports various learning paradigms. The chip excels in sparse data processing and demonstrates up to 1,000 times better energy efficiency than conventional computing systems for certain workloads.

BrainChip's Akida neuromorphic processor focuses on edge computing applications, providing ultra-low power consumption for AI inference tasks. Its event-based processing architecture enables efficient operation in resource-constrained environments, making it suitable for IoT devices and autonomous systems.

SynSense (formerly aiCTX) has developed the DynapCNN chip, which combines neuromorphic principles with convolutional neural network architectures, achieving exceptional energy efficiency for visual processing tasks. The chip processes information asynchronously, similar to biological neural systems.

Academic research continues to drive innovation, with notable projects including the University of Manchester's SpiNNaker system, which utilizes a massive parallel architecture of ARM processors to simulate large-scale neural networks. The European Human Brain Project has also contributed significantly to neuromorphic hardware development through initiatives like the BrainScaleS system.

The current technological landscape reveals several key trends. First, there is a growing convergence between traditional deep learning accelerators and neuromorphic architectures, with hybrid approaches emerging to leverage the strengths of both paradigms. Second, the focus on edge computing applications is intensifying, with neuromorphic chips increasingly optimized for deployment in resource-constrained environments. Third, advancements in materials science are enabling more efficient analog computing elements that better mimic biological neural functions.

Manufacturing processes for neuromorphic chips have also evolved. Existing designs span a range of process nodes, from 28nm (IBM's TrueNorth) and 14nm (Intel's first-generation Loihi) to the Intel 4 process used for Loihi 2. Emerging 3D integration techniques and novel devices such as memristors and phase-change memory are beginning to influence next-generation designs, promising even greater efficiency and computational density.

Current Neuromorphic Architecture Solutions

01 Spike-based processing for computational efficiency

Neuromorphic chips employ spike-based processing to achieve computational simplicity by mimicking the brain's event-driven communication. This approach significantly reduces power consumption compared to traditional computing architectures by only processing information when necessary. The sparse temporal coding of information through discrete spikes allows for efficient parallel processing and reduces computational overhead, making complex pattern recognition tasks more energy-efficient.

- Energy-efficient neuromorphic architectures: Neuromorphic chips achieve computational simplicity through energy-efficient architectures that mimic the brain's neural networks. These designs significantly reduce power consumption compared to traditional computing systems by implementing event-driven processing and sparse activations. The architecture allows for parallel processing of information with minimal energy expenditure, making them suitable for edge computing applications where power constraints are critical.

- Spike-based processing mechanisms: Neuromorphic chips utilize spike-based processing mechanisms that simplify computation by transmitting information only when necessary. This approach reduces computational complexity by encoding information in the timing and frequency of spikes rather than continuous signals. The spike-based model enables efficient processing of temporal data patterns and facilitates unsupervised learning algorithms that can adapt to new information without extensive retraining.

- Memristive device integration: The integration of memristive devices in neuromorphic chips simplifies computational processes by enabling in-memory computing. These devices can simultaneously store and process information, eliminating the traditional von Neumann bottleneck between memory and processing units. Memristors provide analog-like behavior that closely resembles biological synapses, allowing for more efficient implementation of neural network operations and reducing the complexity of hardware required for machine learning tasks.

- Simplified learning algorithms: Neuromorphic chips implement simplified learning algorithms that reduce computational complexity compared to traditional deep learning approaches. These algorithms often utilize local learning rules such as spike-timing-dependent plasticity (STDP) that require only information available at the synapse level. This localized learning eliminates the need for complex backpropagation calculations and global weight updates, resulting in more efficient training processes and enabling on-chip learning capabilities.

- Hybrid neuromorphic computing systems: Hybrid neuromorphic computing systems combine traditional digital processing with neuromorphic elements to achieve computational simplicity for specific applications. These systems leverage the strengths of both approaches, using neuromorphic components for pattern recognition and sensory processing while utilizing conventional processors for precise calculations. The hybrid architecture simplifies complex tasks by distributing computation across specialized components, resulting in more efficient overall system performance for applications like computer vision and sensor fusion.
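
The event-driven, spike-based processing described in this section can be sketched with a leaky integrate-and-fire (LIF) neuron, a widely used simplified neuron model. This is a minimal illustration under stated assumptions: the class name, the parameter values, and the choice to update state only when an input spike arrives are illustrative and do not describe any particular chip.

```python
import math
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron updated only when input events arrive."""
    tau_ms: float = 20.0        # membrane leak time constant (assumed value)
    threshold: float = 1.0      # firing threshold (assumed value)
    potential: float = 0.0      # membrane potential
    last_update_ms: float = 0.0

    def receive(self, t_ms: float, weight: float) -> bool:
        """Process one input spike at time t_ms; return True if the neuron fires."""
        # Apply the leak lazily for the time elapsed since the last event,
        # so no work is done while the input is silent.
        elapsed = t_ms - self.last_update_ms
        self.potential *= math.exp(-elapsed / self.tau_ms)
        self.last_update_ms = t_ms

        self.potential += weight
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after emitting a spike
            return True
        return False


def run(events):
    """events: iterable of (time_ms, weight) sorted by time; returns output spike times."""
    neuron = LIFNeuron()
    return [t for t, w in events if neuron.receive(t, w)]


if __name__ == "__main__":
    # Three closely spaced inputs push the neuron over threshold; a late one does not.
    print(run([(1.0, 0.4), (2.0, 0.4), (3.0, 0.4), (200.0, 0.4)]))
```

Because the leak is applied only when an event arrives, the amount of computation tracks input activity rather than wall-clock time, which is the property the section attributes to spike-based designs.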

02 Memory-processing integration for reduced complexity

Neuromorphic architectures achieve computational simplicity by integrating memory and processing elements, eliminating the traditional von Neumann bottleneck. This co-location of memory and computation reduces data movement, which is a major source of energy consumption in conventional computing systems. The integration enables in-memory computing where calculations occur directly within memory arrays, significantly simplifying the computational architecture and reducing latency for neural network operations.

03 Analog computing elements for simplified neural operations

Neuromorphic chips utilize analog computing elements to perform neural operations in a more direct and simplified manner than digital systems. These analog components naturally implement complex mathematical functions required for neural processing, such as multiplication and integration, with fewer transistors than their digital counterparts. This approach allows for more compact implementation of neural networks and reduces the complexity of performing operations like weight multiplication and summation.

04 Adaptive learning mechanisms for simplified training

Neuromorphic chips incorporate adaptive learning mechanisms that simplify the training process through local learning rules like spike-timing-dependent plasticity (STDP). These biologically-inspired learning approaches enable on-chip learning without complex backpropagation algorithms, reducing computational requirements for neural network training. The self-organizing nature of these systems allows them to adapt to input patterns with minimal external supervision, simplifying deployment in real-world applications.

05 Specialized hardware accelerators for neural computations

Neuromorphic chips employ specialized hardware accelerators designed specifically for neural computations, simplifying the execution of common neural network operations. These dedicated circuits optimize operations like convolution, pooling, and activation functions at the hardware level, reducing the computational complexity compared to general-purpose processors. The specialized architecture enables efficient parallel processing of neural network layers, significantly reducing the computational steps required for inference tasks.
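
To make the memory-processing integration and analog weight multiplication described in the solutions above more concrete, the following sketch models an idealized resistive crossbar: weights are stored as conductances, input voltages are applied to rows, and each column current is the sum of voltage times conductance (Ohm's law plus Kirchhoff's current law), which yields a matrix-vector product in place. The function name and the example values are hypothetical, and real devices add noise, nonlinearity, and limited precision that are ignored here.

```python
from typing import List


def crossbar_mvm(conductances: List[List[float]], voltages: List[float]) -> List[float]:
    """Column currents of an ideal crossbar: I_j = sum_i V_i * G[i][j]."""
    if len(conductances) != len(voltages):
        raise ValueError("one input voltage is required per crossbar row")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for v, row in zip(voltages, conductances):
        if v == 0.0:
            continue  # a silent input row contributes no current
        for j, g in enumerate(row):
            currents[j] += v * g
    return currents


if __name__ == "__main__":
    # 3 input rows x 2 output columns; conductances in siemens (illustrative values).
    G = [[1e-6, 2e-6],
         [0.0, 1e-6],
         [3e-6, 0.0]]
    V = [0.5, 0.0, 1.0]  # volts
    print(crossbar_mvm(G, V))
```

In this idealized picture the weights never leave the array, so the multiply-accumulate happens where the data is stored instead of being shuttled to a separate processor.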

Key Industry Players in Neuromorphic Chip Development

Neuromorphic computing is currently in an early growth phase, with the market expected to expand significantly due to increasing demand for AI applications requiring efficient processing. The global market size is projected to reach several billion dollars by 2030, driven by applications in edge computing, IoT, and autonomous systems. Leading players like IBM, Samsung, and Syntiant are advancing the technology through different approaches, with IBM focusing on cognitive computing architectures, Samsung developing neuromorphic memory solutions, and Syntiant creating ultra-low-power neural processors. Academic institutions including KAIST, Tsinghua University, and research labs from companies like Huawei and SK hynix are contributing significant innovations, particularly in energy efficiency and novel materials for neuromorphic systems.

International Business Machines Corp.

Technical Solution: IBM's TrueNorth neuromorphic chip architecture takes a brain-inspired approach to computational simplicity. The chip contains one million digital neurons and 256 million synapses organized into 4,096 neurosynaptic cores[1]. Unlike traditional von Neumann architectures that separate memory and processing, TrueNorth integrates these functions, enabling parallel processing with significantly reduced power consumption, operating at just 70mW during real-time operation[2]. For comparison, SpiNNaker (Spiking Neural Network Architecture), which was developed at the University of Manchester rather than by IBM, targets the simulation of up to a billion neurons in real time, representing approximately 1% of the human brain's neural network[3]. TrueNorth's architecture employs event-driven computation where neurons only communicate when necessary through "spikes," eliminating the need for constant clock-based operations and dramatically reducing power requirements while maintaining computational efficiency.

Strengths: Extremely low power consumption (20mW/cm²) compared to traditional chips; highly parallel architecture enabling simultaneous processing across thousands of cores; event-driven design eliminates power waste during inactive periods. Weaknesses: Programming complexity requires specialized knowledge of spiking neural networks; limited to specific applications optimized for neural processing; challenges in scaling to commercial production volumes.

Syntiant Corp.

Technical Solution: Syntiant has developed the Neural Decision Processor (NDP) series, specifically designed for edge AI applications requiring ultra-low power consumption. Their NDP200 chip implements a neuromorphic architecture that processes information in a manner similar to the human brain, with particular focus on always-on audio and sensor applications[1]. The chip achieves sub-milliwatt power consumption while performing complex deep learning inference tasks, representing orders of magnitude improvement over conventional digital signal processors. Syntiant's architecture employs analog computation in memory, eliminating the energy-intensive data movement between memory and processing units that plagues traditional computing architectures[2]. This approach enables the chip to perform neural network operations directly within memory arrays, dramatically reducing both computational complexity and energy requirements. The NDP architecture supports both convolutional neural networks and recurrent neural networks optimized for temporal pattern recognition, making it particularly effective for speech recognition and sensor data processing applications.

Strengths: Extremely low power consumption (under 1mW) enables always-on applications in battery-powered devices; specialized for audio and sensor processing with high accuracy; compact form factor suitable for integration in small consumer devices. Weaknesses: Limited application scope primarily focused on audio/sensor processing; less flexible than general-purpose processors for diverse computational tasks; proprietary development environment creates ecosystem dependencies.

Core Innovations in Spiking Neural Networks

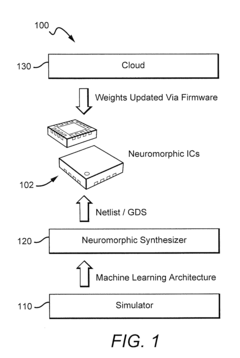

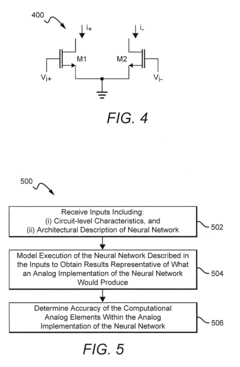

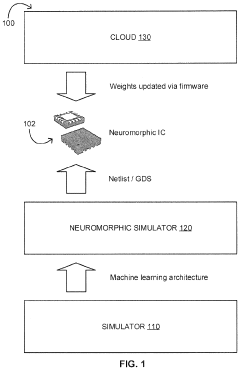

Systems And Methods For Determining Circuit-Level Effects On Classifier Accuracy

Patent · Active · US20190065962A1

Innovation

- The development of neuromorphic chips that simulate 'silicon' neurons, processing information in parallel with bursts of electric current at non-uniform intervals, and the use of systems and methods to model the effects of circuit-level characteristics on neural networks, such as thermal noise and weight inaccuracies, to optimize their performance.
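
One way to picture modeling circuit-level effects on classifier accuracy is to perturb a trained model's weights with random noise and measure how accuracy changes. The sketch below does this for a toy linear classifier; it is not the patented method, and the Gaussian noise model, the data, and all names are assumptions chosen for illustration.

```python
import random
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], int]  # (features, label in {0, 1})


def predict(weights: Sequence[float], x: Sequence[float]) -> int:
    """Linear threshold classifier: 1 if w.x > 0, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) > 0 else 0


def accuracy(weights: Sequence[float], data: List[Sample]) -> float:
    """Fraction of samples classified correctly."""
    correct = sum(predict(weights, x) == y for x, y in data)
    return correct / len(data)


def noisy_accuracy(weights: Sequence[float], data: List[Sample],
                   sigma: float, trials: int = 200, seed: int = 0) -> float:
    """Mean accuracy when each weight is perturbed by Gaussian noise N(0, sigma^2).

    The Gaussian model stands in for effects such as thermal noise or
    weight inaccuracy; real circuits may call for other models.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        perturbed = [w + rng.gauss(0.0, sigma) for w in weights]
        total += accuracy(perturbed, data)
    return total / trials


if __name__ == "__main__":
    # Toy data: label is 1 when the first feature exceeds the second.
    data = [((1.0, 0.2), 1), ((0.9, 0.1), 1), ((0.2, 1.0), 0), ((0.1, 0.8), 0)]
    weights = [1.0, -1.0]
    for sigma in (0.0, 0.5, 2.0):
        print(sigma, noisy_accuracy(weights, data, sigma))
```

Sweeping `sigma` in this way gives a simple accuracy-versus-noise curve, which is the kind of relationship such modeling is meant to expose before hardware is built.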

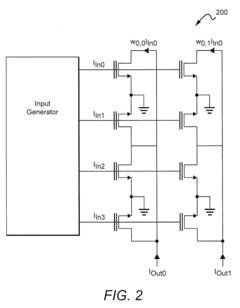

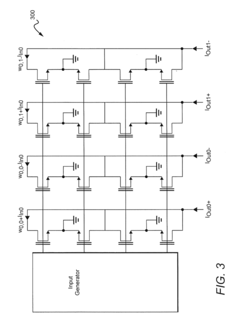

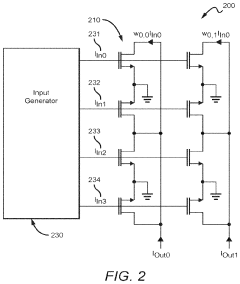

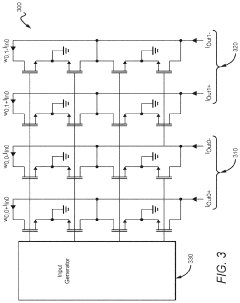

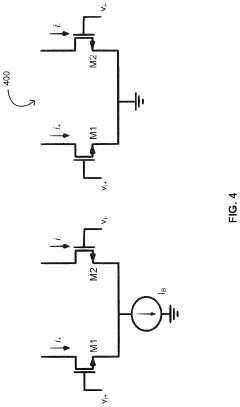

Systems and Methods of Sparsity Exploiting

Patent · Inactive · US20240095510A1

Innovation

- A neuromorphic integrated circuit with a multi-layered neural network in an analog multiplier array, where two-quadrant multipliers are wired to ground and draw negligible current when input signal or weight values are zero, promoting sparsity and minimizing power consumption, and a method to train the network to drive weight values toward zero using a training algorithm.
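
The idea of training a network so that weight values are driven toward zero can be illustrated with L1 regularization, one common sparsity-promoting technique. The patent summary above does not say which training algorithm is used, so the proximal (soft-thresholding) update below is a swapped-in stand-in, and all names and values are hypothetical.

```python
from typing import List


def soft_threshold(w: float, amount: float) -> float:
    """Shrink w toward zero by `amount`, snapping to exactly 0.0 when |w| <= amount."""
    if w > amount:
        return w - amount
    if w < -amount:
        return w + amount
    return 0.0


def sparse_step(weights: List[float], grads: List[float],
                lr: float = 0.1, l1: float = 0.05) -> List[float]:
    """One proximal gradient step: gradient descent followed by L1 shrinkage."""
    return [soft_threshold(w - lr * g, lr * l1) for w, g in zip(weights, grads)]


def sparsity(weights: List[float]) -> float:
    """Fraction of weights that are exactly zero."""
    return sum(1 for w in weights if w == 0.0) / len(weights)


if __name__ == "__main__":
    weights = [0.8, -0.003, 0.004, -0.5]
    grads = [0.0, 0.0, 0.0, 0.0]  # zero task gradient isolates the shrinkage effect
    updated = sparse_step(weights, grads)
    print(updated, sparsity(updated))
```

Weights that land exactly on zero are the ones that, in the multiplier array described above, would draw negligible current.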

Energy Efficiency Benchmarks and Metrics

Neuromorphic chips demonstrate remarkable energy efficiency compared to traditional computing architectures, establishing new benchmarks in computational performance per watt. Current measurements indicate that neuromorphic systems can achieve energy savings of 100-1000x over conventional von Neumann architectures when performing equivalent neural processing tasks. This efficiency stems from their event-driven processing paradigm, which activates computational resources only when necessary, eliminating the constant power drain associated with clock-driven systems.

Standard metrics for evaluating neuromorphic chip energy efficiency include synaptic operations per second per watt (SOPS/W), which measures computational throughput relative to power consumption. Leading neuromorphic implementations such as Intel's Loihi and IBM's TrueNorth have demonstrated 2-3 orders of magnitude improvement in this metric compared to GPU implementations of similar neural networks. Additionally, the energy per spike metric provides insight into the fundamental energy cost of information processing in these systems.
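
The SOPS/W metric reduces to simple arithmetic: it is throughput divided by power, and since a watt is a joule per second, its reciprocal is the average energy per synaptic operation, a quantity closely related to the energy-per-spike metric. The sketch below works through this with made-up numbers; the function names and the example figures are hypothetical and are not measurements of any chip.

```python
def sops_per_watt(synaptic_ops_per_second: float, power_watts: float) -> float:
    """Synaptic operations per second per watt (equivalently, operations per joule)."""
    if power_watts <= 0:
        raise ValueError("power must be positive")
    return synaptic_ops_per_second / power_watts


def energy_per_op_joules(synaptic_ops_per_second: float, power_watts: float) -> float:
    """Average energy per synaptic operation; the reciprocal of SOPS/W."""
    return 1.0 / sops_per_watt(synaptic_ops_per_second, power_watts)


if __name__ == "__main__":
    # Hypothetical workload: 2e9 synaptic operations per second at 0.1 W.
    ops, watts = 2e9, 0.1
    print(f"{sops_per_watt(ops, watts):.3e} SOPS/W")
    print(f"{energy_per_op_joules(ops, watts) * 1e12:.1f} pJ per synaptic operation")
```

Comparisons between platforms are only meaningful when both figures are measured on the same network and workload, which is one reason the choice of benchmark matters as much as the metric.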

Power density measurements reveal another advantage of neuromorphic architectures. While traditional high-performance computing systems often operate at 100-300 W/cm², creating significant cooling challenges, neuromorphic chips function at far lower densities, on the order of tens of mW/cm² (the 20mW/cm² figure quoted above for IBM's TrueNorth is one example), substantially reducing thermal management requirements and associated infrastructure costs.

Benchmark testing across various application domains shows particularly impressive efficiency gains in continuous sensory processing tasks. For instance, in always-on vision applications, neuromorphic solutions consume merely 1-5% of the energy required by conventional digital signal processors. This efficiency differential becomes even more pronounced in sparse data environments where traditional architectures waste significant energy processing null or redundant information.

The energy scaling characteristics of neuromorphic systems also merit attention. Unlike traditional architectures where performance and energy consumption scale linearly or super-linearly with problem size, neuromorphic systems demonstrate sub-linear energy scaling for many workloads. This property becomes increasingly valuable as applications grow in complexity and data volume.

Standardization efforts are currently underway to establish industry-wide energy efficiency benchmarks specifically tailored to neuromorphic computing. These initiatives aim to create fair comparison frameworks that account for the fundamental architectural differences between neuromorphic and conventional systems, enabling more accurate assessment of their relative advantages across diverse application scenarios.

Hardware-Software Co-design Strategies

Neuromorphic computing represents a paradigm shift in hardware architecture that demands equally innovative approaches to software development. The hardware-software co-design strategy for neuromorphic chips focuses on creating integrated solutions where both components evolve symbiotically, maximizing computational simplicity and efficiency.

Traditional computing architectures have long maintained a clear separation between hardware and software development cycles, often resulting in suboptimal performance when one must adapt to the limitations of the other. Neuromorphic systems, however, require a fundamentally different approach where hardware capabilities directly inform software design and vice versa.

Effective co-design strategies begin with neuromorphic-specific programming models that leverage the inherent parallelism and event-driven nature of these chips. Spiking Neural Network (SNN) toolchains such as IBM's Corelet programming environment for TrueNorth and Intel's Lava framework for Loihi exemplify this approach, providing programming abstractions that map naturally to the underlying neuromorphic hardware.
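To make the spiking abstraction concrete, here is a minimal, framework-independent sketch of a discrete-time leaky integrate-and-fire (LIF) neuron in Python. It does not use any vendor API; the function name and the `weight`, `leak`, and `threshold` values are assumptions chosen for illustration.

```python
from typing import List


def simulate_lif(
    input_spikes: List[int],
    weight: float = 0.6,
    leak: float = 0.9,
    threshold: float = 1.0,
) -> List[int]:
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    At each step the membrane potential decays by `leak`, is increased by
    `weight` if an input spike arrives, and is reset to zero after firing.
    """
    potential = 0.0
    output = []
    for spike in input_spikes:
        potential = potential * leak + weight * spike
        if potential >= threshold:
            output.append(1)
            potential = 0.0  # reset after emitting a spike
        else:
            output.append(0)
    return output


inputs = [1, 1, 0, 0, 1, 1, 1]
outputs = simulate_lif(inputs)
print(outputs)
```

The neuron only fires when input spikes arrive close together in time, which is the kind of temporal behavior that SNN programming models expose directly.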

Memory management represents another critical co-design consideration. Unlike conventional von Neumann architectures, neuromorphic systems often feature distributed memory closely integrated with processing elements. Software must be designed to exploit this characteristic through locality-aware algorithms that minimize data movement and maximize computational efficiency.
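One simple way to reason about locality is to count how many synapses cross core boundaries under a given neuron placement, since those are the connections that require data movement. The sketch below assumes a hypothetical six-neuron network and two hypothetical placements; real mapping tools use far richer cost models.

```python
from typing import Dict, List, Tuple

Synapse = Tuple[int, int]  # (presynaptic neuron id, postsynaptic neuron id)


def cross_core_synapses(synapses: List[Synapse], placement: Dict[int, int]) -> int:
    """Count synapses whose endpoints are placed on different cores."""
    return sum(1 for pre, post in synapses if placement[pre] != placement[post])


# Two tightly connected clusters (0-2 and 3-5) joined by a single link.
synapses = [(0, 1), (1, 2), (2, 0), (3, 4), (4, 5), (5, 3), (2, 3)]

# Locality-aware placement: each cluster shares one core.
clustered = {0: 0, 1: 0, 2: 0, 3: 1, 4: 1, 5: 1}
# Naive round-robin placement: neighbors are scattered across cores.
round_robin = {0: 0, 1: 1, 2: 0, 3: 1, 4: 0, 5: 1}

clustered_cost = cross_core_synapses(synapses, clustered)
round_robin_cost = cross_core_synapses(synapses, round_robin)
print(clustered_cost, round_robin_cost)
```

Both placements use the same two cores, yet the clustered one sends far fewer spikes over the on-chip network.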

Power management co-design strategies are particularly important for neuromorphic systems, which derive much of their efficiency from event-driven computation. Software frameworks must incorporate power-aware scheduling and resource allocation mechanisms that activate only the necessary neural circuits for specific tasks, maintaining the power efficiency advantages inherent to neuromorphic hardware.
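The idea of activating only the circuits that have work to do can be sketched as a toy scheduler that wakes a core only when events are queued for it. Everything here is hypothetical: the function names, the per-core power figures, and the event queues are assumptions, not a description of any real chip's power management.

```python
from typing import Dict, List, Set


def cores_to_activate(pending_events: Dict[int, List[str]]) -> Set[int]:
    """Return the ids of cores that have at least one queued event."""
    return {core for core, events in pending_events.items() if events}


def estimated_power_mw(
    pending_events: Dict[int, List[str]], active_mw: float, idle_mw: float
) -> float:
    """Estimate total power when only cores with pending events are woken."""
    active = len(cores_to_activate(pending_events))
    idle = len(pending_events) - active
    return active * active_mw + idle * idle_mw


# Hypothetical snapshot: four cores, two of which have spikes waiting.
pending = {0: ["spike", "spike"], 1: [], 2: [], 3: ["spike"]}

awake = cores_to_activate(pending)
gated_power = estimated_power_mw(pending, active_mw=2.0, idle_mw=0.1)
always_on_power = len(pending) * 2.0
print(sorted(awake), gated_power, always_on_power)
```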

Compiler technologies for neuromorphic systems represent perhaps the most sophisticated aspect of co-design. These specialized compilers must translate high-level neural network descriptions into optimized spike-based implementations, considering factors such as timing constraints, network topology, and synaptic plasticity mechanisms supported by the target hardware.
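Two of the simpler steps such a compiler might perform are reducing weights to the integer precision of the hardware and splitting neurons into groups that fit on one core. The Python sketch below illustrates both under stated assumptions: the 8-bit signed weight format, the core capacity, and the function names are hypothetical and do not describe any particular toolchain.

```python
from typing import List, Tuple


def quantize_weights(weights: List[float], bits: int = 8) -> Tuple[List[int], float]:
    """Map float weights to signed integers of the given width.

    Returns the integer weights and the scale needed to recover
    approximate float values (float is roughly integer * scale).
    """
    max_level = 2 ** (bits - 1) - 1
    max_abs = max((abs(w) for w in weights), default=0.0)
    if max_abs == 0.0:
        return [0] * len(weights), 1.0
    scale = max_abs / max_level
    return [round(w / scale) for w in weights], scale


def assign_to_cores(neuron_count: int, neurons_per_core: int) -> List[List[int]]:
    """Split neuron ids into consecutive groups that each fit on one core."""
    return [
        list(range(start, min(start + neurons_per_core, neuron_count)))
        for start in range(0, neuron_count, neurons_per_core)
    ]


quantized, scale = quantize_weights([1.27, -0.64, 0.0])
cores = assign_to_cores(neuron_count=10, neurons_per_core=4)
print(quantized, cores)
```

A production compiler would additionally account for timing, routing, and any plasticity rules the target hardware supports, as the paragraph above notes.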

Testing and verification methodologies must also evolve within this co-design paradigm. Traditional software testing approaches prove inadequate for neuromorphic systems, necessitating new frameworks that can verify both deterministic and probabilistic behaviors across varying temporal dynamics and network configurations.
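A minimal sketch of what such verification could look like: a deterministic check confirms that a fixed random seed reproduces the same spike count, and a statistical check confirms that the average firing rate across many seeds falls within a tolerance of the expected value. The stochastic neuron model, the function names, and the tolerance are assumptions made for illustration.

```python
import random
from typing import Iterable


def stochastic_spike_count(probability: float, steps: int, seed: int) -> int:
    """Count spikes from a neuron that fires independently with a fixed probability."""
    rng = random.Random(seed)
    return sum(1 for _ in range(steps) if rng.random() < probability)


def rate_within_tolerance(
    probability: float, steps: int, seeds: Iterable[int], tolerance: float
) -> bool:
    """Statistical check: mean firing rate over several seeds is close to the target."""
    rates = [stochastic_spike_count(probability, steps, seed) / steps for seed in seeds]
    mean_rate = sum(rates) / len(rates)
    return abs(mean_rate - probability) <= tolerance


# Deterministic check: the same seed must reproduce the same behavior.
reproducible = (
    stochastic_spike_count(0.2, 1000, seed=7) == stochastic_spike_count(0.2, 1000, seed=7)
)

# Probabilistic check: behavior is verified in aggregate, not spike by spike.
statistically_ok = rate_within_tolerance(0.2, 10_000, seeds=range(10), tolerance=0.02)
print(reproducible, statistically_ok)
```

The tolerance here is deliberately loose relative to the sampling noise; a real test suite would derive it from the number of samples and an acceptable false-failure rate.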

The ultimate goal of hardware-software co-design for neuromorphic systems is to create a seamless development environment where algorithm designers can focus on solving problems rather than managing hardware complexities, while still leveraging the full computational simplicity advantages that neuromorphic architectures offer.
