Neuromorphic Circuit Efficiency: Testing Power Use

SEP 5, 2025 · 9 MIN READ

Neuromorphic Computing Background and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. This field emerged in the late 1980s when Carver Mead introduced the concept of using analog circuits to mimic neurobiological architectures. Since then, neuromorphic computing has evolved significantly, transitioning from theoretical frameworks to practical implementations in specialized hardware.

The evolution of neuromorphic computing has been driven by the limitations of traditional von Neumann architectures, particularly in terms of energy efficiency and parallel processing capabilities. Conventional computing systems face significant challenges in processing the massive amounts of data required for modern AI applications, while the human brain accomplishes complex cognitive tasks using only about 20 watts of power. This stark contrast has motivated researchers to develop computing systems that emulate the brain's efficiency.

Recent technological advancements have accelerated progress in neuromorphic computing, including developments in materials science, nanotechnology, and integrated circuit design. These innovations have enabled the creation of more sophisticated artificial neural networks and spiking neural networks (SNNs) that more closely approximate biological neural systems. The field has seen exponential growth in research interest, with annual publications increasing by approximately 35% over the past decade.

The primary objective of neuromorphic circuit efficiency research is to develop computing systems that approach the energy efficiency of biological brains while maintaining or exceeding the computational capabilities of traditional architectures. Specifically, researchers aim to reduce power consumption by several orders of magnitude compared to conventional AI accelerators, with targets often set at sub-milliwatt operation for complex cognitive tasks.

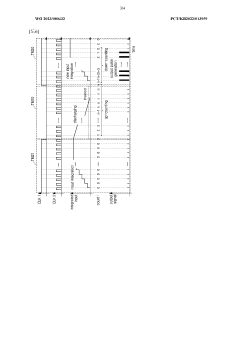

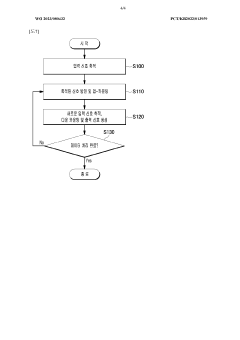

Testing power usage in neuromorphic circuits presents unique challenges due to the event-driven nature of these systems and their distributed processing architecture. Unlike traditional processors with predictable power profiles, neuromorphic systems exhibit dynamic power consumption patterns that depend on input data characteristics and network activity. This necessitates specialized testing methodologies that can accurately measure instantaneous power fluctuations and average consumption under various operational scenarios.
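As a minimal sketch of the two quantities mentioned above, instantaneous power and average consumption, the following assumes a supply rail sampled at a fixed period by some external instrument. The function name, the dictionary keys, and the sample values are illustrative assumptions, not part of any specific test setup.

```python
from typing import Sequence


def power_trace_summary(
    voltage_v: Sequence[float],
    current_a: Sequence[float],
    sample_period_s: float,
) -> dict:
    """Summarize a sampled supply trace (hypothetical helper)."""
    if len(voltage_v) != len(current_a) or len(voltage_v) == 0:
        raise ValueError("traces must be non-empty and of equal length")
    # Instantaneous power at each sample: p[k] = v[k] * i[k]
    power_w = [v * i for v, i in zip(voltage_v, current_a)]
    # Rectangle-rule integration: each sample stands for one sample period
    energy_j = sum(power_w) * sample_period_s
    duration_s = len(power_w) * sample_period_s
    return {
        "avg_power_w": energy_j / duration_s,
        "peak_power_w": max(power_w),
        "energy_j": energy_j,
    }


# Made-up trace: a 1 V rail with one short current burst (a spike event)
summary = power_trace_summary(
    voltage_v=[1.0, 1.0, 1.0, 1.0],
    current_a=[0.001, 0.005, 0.001, 0.001],
    sample_period_s=1e-3,
)
print(summary)
```

Reporting peak and average together matters for event-driven hardware, because a short burst of spikes can dominate the peak while barely moving the average.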

The field is progressing toward several key technical objectives: achieving sub-picojoule energy consumption per synaptic operation, developing scalable architectures that maintain efficiency as system size increases, creating adaptive power management techniques that optimize energy use based on computational demands, and establishing standardized benchmarking protocols for fair comparison across different neuromorphic implementations.
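To make the sub-picojoule target concrete, here is a rough sketch of how energy per synaptic operation might be derived from a measurement run. Subtracting an idle baseline is one possible convention and is an assumption here (some reports divide total energy by operation count instead); the function name and the numbers are hypothetical.

```python
def energy_per_synaptic_op_j(
    avg_power_w: float,
    synaptic_ops: float,
    duration_s: float,
    idle_power_w: float = 0.0,
) -> float:
    """Energy attributed to each synaptic operation, in joules.

    With idle_power_w left at 0.0 this is total energy per operation;
    passing a measured idle baseline attributes only the activity-dependent
    energy to the operations (an assumed convention, not a standard).
    """
    if synaptic_ops <= 0 or duration_s <= 0:
        raise ValueError("synaptic_ops and duration_s must be positive")
    dynamic_energy_j = (avg_power_w - idle_power_w) * duration_s
    return dynamic_energy_j / synaptic_ops


# Hypothetical run: 1.5 mW average, 0.5 mW idle, 2 s, 4e9 synaptic events
e_op = energy_per_synaptic_op_j(1.5e-3, 4e9, 2.0, idle_power_w=0.5e-3)
print(f"{e_op * 1e12:.2f} pJ per synaptic operation")
```

Whichever convention is used, it should be stated alongside the result, since the two can differ substantially when leakage is a large share of total power.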

Market Analysis for Energy-Efficient AI Hardware

The energy-efficient AI hardware market is experiencing unprecedented growth, driven by the increasing computational demands of artificial intelligence applications and the corresponding energy consumption challenges. Current projections indicate the global market for energy-efficient AI processors will reach $25 billion by 2025, with a compound annual growth rate of approximately 38% from 2020. This rapid expansion reflects the urgent need for hardware solutions that can deliver high computational performance while minimizing power consumption.

Neuromorphic computing represents a significant segment within this market, with particular relevance to edge computing applications where power constraints are most severe. Market research indicates that neuromorphic chips could capture up to 15% of the AI hardware market by 2027, primarily due to their potential for ultra-low power consumption while maintaining computational capabilities for specific AI workloads.

The demand for energy-efficient AI hardware spans multiple sectors. The automotive industry is increasingly adopting AI for advanced driver assistance systems and autonomous driving capabilities, creating a substantial market for low-power, high-performance computing solutions. Similarly, consumer electronics manufacturers are seeking neuromorphic solutions to enable AI features in battery-powered devices without compromising operational longevity.

Healthcare applications represent another significant market opportunity, with medical devices requiring efficient AI processing for real-time diagnostics and monitoring. The industrial IoT sector also demonstrates strong demand growth, as companies deploy AI-enabled sensors and edge devices throughout manufacturing facilities and supply chains.

Regional analysis reveals that North America currently leads in market share for energy-efficient AI hardware, accounting for approximately 42% of global revenue. However, Asia-Pacific is experiencing the fastest growth rate at 45% annually, driven by substantial investments in AI infrastructure in China, South Korea, and Taiwan.

Key market drivers include the proliferation of edge AI applications, increasing concerns about data center energy consumption, and regulatory pressures to reduce carbon footprints across industries. The total addressable market for neuromorphic computing solutions specifically focused on power efficiency is estimated at $8 billion by 2026.

Customer requirements analysis indicates that power efficiency metrics are becoming primary purchasing considerations, with many enterprise customers now specifying a maximum energy budget per inference operation in their procurement specifications. This represents a significant shift from previous generations of AI hardware where raw performance was the dominant selection criterion.

Current Challenges in Neuromorphic Circuit Power Efficiency

Despite significant advancements in neuromorphic computing, power efficiency remains a critical bottleneck that impedes widespread adoption. Current neuromorphic circuits face substantial challenges in balancing computational capabilities with energy consumption. Traditional von Neumann architectures consume approximately 10^-7 joules per operation, while biological neural systems operate at remarkably lower energy levels of about 10^-16 joules per operation—a difference of nine orders of magnitude. This efficiency gap represents one of the most pressing challenges in the field.

The dynamic power consumption in neuromorphic circuits stems primarily from frequent spike events and associated signal processing. Unlike conventional digital systems where power optimization strategies are well-established, neuromorphic systems require novel approaches due to their event-driven nature and parallel processing architecture. Current implementations struggle to maintain efficiency when scaling to complex tasks that demand higher neuron counts and connection densities.

Leakage current presents another significant challenge, particularly in sub-threshold designs that aim to mimic biological neural behavior. As process technologies advance to smaller nodes, leakage power becomes increasingly dominant, sometimes accounting for over 40% of total power consumption in advanced neuromorphic implementations. This phenomenon creates a paradoxical situation where scaling down for higher integration density can actually worsen energy efficiency.
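The leakage share can be illustrated with the usual first-order CMOS power model, in which dynamic power is activity factor times switched capacitance times supply voltage squared times frequency, and leakage power is supply voltage times leakage current. This is a simplified sketch; all parameter values below are invented for illustration and do not describe any particular chip.

```python
def leakage_fraction(
    activity_factor: float,
    switched_capacitance_f: float,
    supply_v: float,
    frequency_hz: float,
    leakage_current_a: float,
) -> float:
    """Share of total power lost to leakage under a first-order model."""
    # Dynamic (switching) power: alpha * C * V^2 * f
    dynamic_w = activity_factor * switched_capacitance_f * supply_v**2 * frequency_hz
    # Static (leakage) power: V * I_leak
    leakage_w = supply_v * leakage_current_a
    return leakage_w / (dynamic_w + leakage_w)


# Hypothetical sparse, low-voltage design point
share = leakage_fraction(
    activity_factor=0.05,
    switched_capacitance_f=1e-9,
    supply_v=0.5,
    frequency_hz=1e6,
    leakage_current_a=20e-6,
)
print(f"leakage share of total power: {share:.1%}")
```

The model also shows why sparse activity makes the problem worse: lowering the activity factor shrinks only the dynamic term, so the leakage share rises even though total power falls.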

Memory access operations constitute a major power drain in current neuromorphic systems. The frequent weight updates and synaptic operations require substantial data movement between processing and memory units, creating what researchers term the "memory wall" problem. Even in designs that integrate memory and processing more closely than traditional architectures, the energy cost of accessing synaptic weights remains disproportionately high.

Testing methodologies for power consumption in neuromorphic circuits present their own set of challenges. Unlike digital circuits with standardized power measurement protocols, neuromorphic systems exhibit highly variable power profiles depending on input patterns and internal states. This variability makes consistent benchmarking difficult and complicates the development of standardized efficiency metrics. Current testing approaches often fail to capture real-world power consumption patterns, leading to discrepancies between laboratory measurements and practical deployment scenarios.
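One simple way to quantify this variability is to measure the same circuit under several input patterns and report the spread rather than a single number. The sketch below assumes average power per workload has already been measured; the workload names and values are placeholders.

```python
import statistics


def power_variability(avg_power_mw_by_workload: dict) -> dict:
    """Spread of average power across workloads (hypothetical helper)."""
    values = list(avg_power_mw_by_workload.values())
    if len(values) < 2:
        raise ValueError("need at least two workloads to describe variability")
    mean_mw = statistics.mean(values)
    # Population standard deviation: the workloads are the full test set here
    stdev_mw = statistics.pstdev(values)
    return {
        "mean_mw": mean_mw,
        "stdev_mw": stdev_mw,
        "coeff_of_variation": stdev_mw / mean_mw,
        "min_mw": min(values),
        "max_mw": max(values),
    }


# Placeholder measurements for three input patterns
report = power_variability(
    {"sparse_input": 1.0, "typical_input": 2.0, "dense_input": 3.0}
)
print(report)
```

A high coefficient of variation is a signal that a single headline power figure would misrepresent the device, and that the input statistics need to be published with the measurement.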

The thermal management of neuromorphic circuits introduces additional complexity. As these circuits scale to incorporate millions of neurons, localized heating from active components can create hotspots that affect both performance and reliability. Current cooling solutions designed for conventional processors may prove inadequate for the distributed processing nature of large-scale neuromorphic systems, necessitating novel thermal management approaches.

Existing Power Testing Methodologies for Neural Circuits

01 Low-power neuromorphic circuit designs

Neuromorphic circuits can be designed with specific architectures to minimize power consumption while maintaining computational efficiency. These designs often incorporate novel transistor configurations, reduced voltage operations, and optimized signal pathways that mimic neural processing with minimal energy requirements. Such low-power designs are crucial for portable and edge computing applications where energy constraints are significant.

- Low-power neuromorphic circuit designs: Neuromorphic circuits can be designed with specific architectures to minimize power consumption while maintaining computational efficiency. These designs often incorporate novel transistor configurations, specialized memory elements, and optimized signal processing pathways that reduce energy requirements. By mimicking the brain's efficient information processing mechanisms, these circuits can perform complex neural computations with significantly lower power consumption compared to traditional computing architectures.

- Memristor-based energy-efficient neural networks: Memristor technology enables highly energy-efficient neuromorphic computing by combining memory and processing functions in a single device. These non-volatile memory elements can maintain their state without continuous power supply, significantly reducing standby power consumption. When implemented in neural network architectures, memristor-based systems can achieve substantial power savings while performing complex pattern recognition and machine learning tasks, making them ideal for edge computing applications where energy constraints are critical.

- Spike-based processing for power efficiency: Spike-based or event-driven processing mimics the brain's communication method, where information is transmitted only when necessary through discrete spikes rather than continuous signals. This approach significantly reduces power consumption by eliminating unnecessary computations and data transfers. Neuromorphic circuits implementing spike-based processing can achieve orders of magnitude improvement in energy efficiency compared to conventional computing systems, particularly for applications involving sensor data processing and real-time pattern recognition.

- Power-efficient learning algorithms for neuromorphic hardware: Specialized learning algorithms designed specifically for neuromorphic hardware can substantially improve power efficiency. These algorithms optimize weight updates and neural activations to minimize computational overhead and energy consumption during both training and inference phases. By incorporating local learning rules, sparse activations, and reduced precision computations, these algorithms enable neuromorphic circuits to learn efficiently while consuming minimal power, making them suitable for battery-powered and mobile applications.

- 3D integration for improved power efficiency: Three-dimensional integration of neuromorphic circuits offers significant advantages for power efficiency by reducing interconnect distances and enabling higher density computing elements. This approach minimizes signal transmission energy and allows for more efficient heat dissipation. By stacking multiple layers of neural processing elements with optimized vertical connections, 3D neuromorphic architectures can achieve higher computational throughput per watt compared to traditional 2D implementations, while also reducing overall system footprint.
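The saving from spike-based processing described in the list above can be sketched by counting synaptic operations for the same spike raster under two schemes: a clocked scheme that evaluates every synapse at every time step, and an event-driven scheme that touches a neuron's outgoing synapses only when it spikes. This is a toy operation count, not an energy model, and the raster and fan-out are made up.

```python
def count_synaptic_ops(spike_raster, fan_out):
    """Return (dense_ops, event_ops) for a binary spike raster.

    spike_raster: list of time steps, each a list of 0/1 flags per neuron.
    fan_out: outgoing synapses per neuron (assumed uniform for simplicity).
    """
    if not spike_raster or not spike_raster[0]:
        raise ValueError("spike_raster must contain at least one neuron and step")
    n_steps = len(spike_raster)
    n_neurons = len(spike_raster[0])
    # Clocked scheme: every synapse is evaluated at every time step
    dense_ops = n_steps * n_neurons * fan_out
    # Event-driven scheme: only synapses of neurons that spiked are evaluated
    total_spikes = sum(sum(step) for step in spike_raster)
    event_ops = total_spikes * fan_out
    return dense_ops, event_ops


raster = [
    [0, 1, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 1, 0],
    [0, 0, 0, 0, 0],
]
dense, event = count_synaptic_ops(raster, fan_out=10)
print(dense, event)  # prints: 200 20
```

The ratio between the two counts tracks input sparsity, which is why measured efficiency gains depend so strongly on the workload.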

02 Memristor-based neuromorphic computing

Memristors offer significant power efficiency advantages in neuromorphic circuits by combining memory and processing functions in a single device. These components can maintain their state without continuous power, drastically reducing energy consumption compared to traditional CMOS implementations. Memristor-based neuromorphic systems enable efficient implementation of synaptic weights and neural activation functions while requiring minimal power for state transitions.

03 Spike-based processing for energy efficiency

Spike-based or event-driven processing in neuromorphic circuits significantly reduces power consumption by only activating components when necessary. Unlike traditional computing systems that operate continuously, these circuits process information only when input signals exceed certain thresholds, similar to biological neurons. This approach minimizes idle power consumption and enables efficient processing of sparse temporal data patterns.

04 Analog and mixed-signal implementations

Analog and mixed-signal implementations of neuromorphic circuits offer substantial power efficiency advantages over purely digital approaches. By processing information in the analog domain, these circuits can perform complex neural computations with minimal energy expenditure. The continuous-time operation of analog circuits naturally aligns with the parallel processing nature of neural networks, enabling efficient implementation of learning algorithms with reduced power requirements.

05 Dynamic power management techniques

Advanced power management techniques in neuromorphic circuits can dynamically adjust power consumption based on computational demands. These techniques include adaptive voltage scaling, selective activation of neural elements, clock gating, and power gating of inactive circuit blocks. By intelligently managing power distribution across the neuromorphic architecture, these systems can achieve optimal energy efficiency while maintaining computational performance for various workloads.
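The effect of power gating inactive blocks can be sketched with a small bookkeeping model. It idealizes a gated block as drawing zero power and ignores wake-up cost and retention circuitry, both of which matter in practice; the block names and figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CircuitBlock:
    name: str
    switching_power_w: float  # drawn only while the block is active
    leakage_power_w: float    # drawn whenever the block is powered
    active: bool


def total_power_w(blocks, power_gating: bool) -> float:
    """Total power for a set of blocks, with or without power gating."""
    total = 0.0
    for block in blocks:
        if block.active:
            total += block.switching_power_w + block.leakage_power_w
        elif not power_gating:
            # Idle but still powered: the block keeps leaking
            total += block.leakage_power_w
        # Idle and gated off: idealized as zero power
    return total


blocks = [
    CircuitBlock("core_0", 2e-3, 1e-3, active=True),
    CircuitBlock("core_1", 2e-3, 1e-3, active=False),
    CircuitBlock("core_2", 2e-3, 1e-3, active=False),
]
print(total_power_w(blocks, power_gating=False))
print(total_power_w(blocks, power_gating=True))
```

In this toy case gating removes the leakage of the two idle cores, so the benefit grows with the fraction of the array that is idle at any moment.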

Leading Organizations in Neuromorphic Computing Research

Neuromorphic circuit efficiency testing is currently in a transitional phase between research and early commercialization, with the market expected to grow significantly as energy-efficient AI applications expand. The global market remains modest but is projected to reach several billion dollars by 2030. Leading players demonstrate varying levels of technical maturity: IBM, Intel, and Texas Instruments have established advanced neuromorphic architectures, while Syntiant and Socionext focus on edge AI implementations. Research institutions like CEA and CNRS continue fundamental innovation, with semiconductor manufacturers including TSMC and Micron developing specialized memory solutions critical for neuromorphic computing. Commercial applications are emerging primarily in low-power edge computing, autonomous systems, and specialized AI hardware.

Texas Instruments Incorporated

Technical Solution: Texas Instruments has developed neuromorphic circuit solutions focused on ultra-low power implementation for edge computing applications. Their approach combines analog and digital circuit elements to create mixed-signal neuromorphic systems that maximize energy efficiency. TI's neuromorphic architecture employs sub-threshold analog circuits for core neural computations while utilizing digital circuits for control and communication, achieving significant power savings. Their testing methodology for power efficiency involves comprehensive measurement across various operational states, including active computation, standby, and sleep modes. TI has implemented specialized power gating techniques that can selectively deactivate portions of the neuromorphic circuit when not in use, further reducing power consumption. Their neuromorphic solutions incorporate adaptive voltage scaling that dynamically adjusts supply voltage based on computational workload, optimizing the power-performance tradeoff. Recent test results have shown that TI's neuromorphic implementations can achieve up to 10x improvement in energy efficiency compared to conventional digital signal processors for pattern recognition tasks common in IoT applications.
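Measurements taken separately in active, standby, and sleep modes are typically combined into one figure by weighting each mode by the fraction of time spent in it. The sketch below shows that weighting in general terms; it is not Texas Instruments' procedure, and the mode powers and time fractions are invented.

```python
def duty_cycled_power_w(mode_power_w: dict, mode_fraction: dict) -> float:
    """Time-weighted average power across operating modes."""
    if set(mode_power_w) != set(mode_fraction):
        raise ValueError("both dictionaries must list the same modes")
    if abs(sum(mode_fraction.values()) - 1.0) > 1e-9:
        raise ValueError("time fractions must sum to 1")
    return sum(mode_power_w[m] * mode_fraction[m] for m in mode_power_w)


# Hypothetical edge sensor node: mostly asleep, briefly active
avg_w = duty_cycled_power_w(
    mode_power_w={"active": 1e-3, "standby": 1e-4, "sleep": 1e-6},
    mode_fraction={"active": 0.1, "standby": 0.2, "sleep": 0.7},
)
print(f"{avg_w * 1e6:.1f} microwatts average")
```

With a low duty cycle the active mode can still dominate the average, which is why per-mode numbers and the assumed duty cycle are both worth reporting.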

Strengths: Exceptional power efficiency for edge computing applications; strong integration with existing microcontroller and sensor ecosystems; practical implementation focus that balances theoretical advantages with real-world constraints.

Weaknesses: More limited in scale compared to research-focused neuromorphic systems; primarily targeted at specific application domains rather than general-purpose neural computing; requires specialized design expertise to fully leverage the architecture's capabilities.

Syntiant Corp.

Technical Solution: Syntiant has developed a specialized neuromorphic architecture focused specifically on ultra-low-power neural processing for edge applications, particularly in always-on audio and vision systems. Their Neural Decision Processors (NDPs) implement a hardware architecture optimized for deep learning inference with extreme power efficiency. Syntiant's approach uses a dataflow architecture that minimizes data movement, which is typically the dominant source of energy consumption in neural network processing. Their neuromorphic circuits feature specialized memory structures co-located with processing elements to reduce the energy cost of memory access operations. Power efficiency testing has demonstrated that Syntiant's solutions can perform neural network inference at under 200 microwatts for many applications, representing orders of magnitude improvement over conventional processors. Their testing methodology includes comprehensive power profiling across various operational modes and workloads, with particular attention to always-on scenarios where devices must continuously process sensor data while consuming minimal power. Syntiant has implemented advanced power gating techniques that can maintain wake-word detection capabilities while consuming less than 100 microwatts.
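To put a sub-200-microwatt figure in context, a back-of-the-envelope battery-life estimate can help. The calculation below assumes a small coin cell of roughly 225 mAh at a nominal 3 V and ignores self-discharge, regulator losses, and every other load in the device; those cell parameters are assumptions for illustration, not Syntiant data.

```python
def battery_life_hours(capacity_mah: float, nominal_v: float, load_w: float) -> float:
    """Idealized runtime of a battery under a constant load."""
    if load_w <= 0:
        raise ValueError("load_w must be positive")
    # Stored energy in watt-hours: (mAh / 1000) * V
    energy_wh = capacity_mah / 1000.0 * nominal_v
    return energy_wh / load_w


# Assumed coin cell (about 225 mAh, 3 V) feeding a constant 200 microwatt load
hours = battery_life_hours(capacity_mah=225.0, nominal_v=3.0, load_w=200e-6)
print(f"{hours:.0f} hours, about {hours / 24:.0f} days")
```

Real devices will fall short of this idealized figure, but the estimate shows why always-on workloads in the microwatt range are compatible with small batteries.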

Strengths: Industry-leading power efficiency for specific edge AI applications; highly optimized for always-on scenarios in battery-powered devices; production-ready solution with proven deployment in commercial products.

Weaknesses: More specialized and less flexible than general-purpose neuromorphic architectures; optimized primarily for specific application domains like audio processing; limited to inference rather than training or more complex neural processing.

Key Innovations in Low-Power Neuromorphic Design

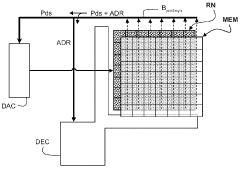

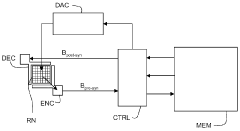

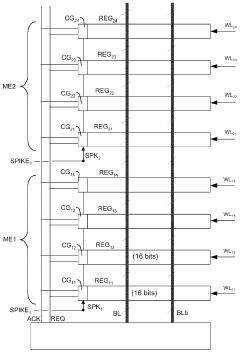

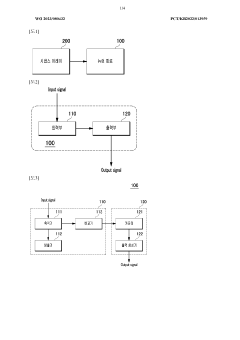

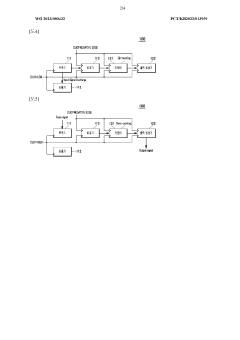

Electronic circuit with neuromorphic architecture

Patent WO2012076366A1

Innovation

- A neuromorphic circuit design that eliminates the need for address encoders and controllers by establishing a direct connection between neurons and their associated memories, allowing for a programmable memory system with management circuits to handle data extraction and conflict prevention on a post-synaptic bus, thereby reducing the complexity and energy consumption.

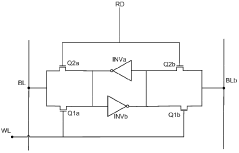

Neuron circuit and operation method thereof, and neuromorphic device including neuron circuit

Patent WO2023080432A1

Innovation

- A neuromorphic device with a digital circuit-based neuron circuit that includes an input unit and an output unit, utilizing a synaptic array to process signals, where the input unit accumulates and discharges signals until a threshold is reached, and the output unit performs up-counting and down-counting to accurately measure and transmit information, controlled by clock signals to separate signal processing and counting operations.

Benchmarking Standards for Neuromorphic Power Consumption

The evaluation of power efficiency in neuromorphic computing systems requires standardized benchmarking methodologies that enable fair comparisons across different architectures. Currently, the field lacks universally accepted benchmarking standards, creating significant challenges for researchers and industry stakeholders attempting to assess relative performance advantages.

Existing power measurement approaches vary widely, with some research groups focusing on static power consumption while others emphasize dynamic power metrics during specific computational tasks. This inconsistency makes cross-platform comparisons nearly impossible and hinders technological progress in the field. The International Neuromorphic Systems Association has recently proposed a three-tiered benchmarking framework that categorizes power measurements at the device, circuit, and system levels.

At the device level, metrics such as energy per spike and standby leakage current provide fundamental insights into the efficiency of basic neuromorphic building blocks. Circuit-level benchmarks focus on energy consumption per synaptic operation and computational density (operations per watt per square millimeter). System-level evaluations examine task-specific energy requirements for standard workloads such as image classification, speech recognition, and reinforcement learning scenarios.
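Two of the metrics named above reduce to short formulas, sketched here under stated assumptions: computational density is read as throughput in operations per second divided by power and by die area, and task-level energy is taken as average power multiplied by the time to complete one inference. The function names and numbers are hypothetical.

```python
def computational_density(ops_per_s: float, power_w: float, area_mm2: float) -> float:
    """Circuit-level metric: operations per second, per watt, per mm^2."""
    if power_w <= 0 or area_mm2 <= 0:
        raise ValueError("power_w and area_mm2 must be positive")
    return ops_per_s / power_w / area_mm2


def energy_per_inference_j(avg_power_w: float, latency_s: float) -> float:
    """System-level metric: energy spent to complete one inference."""
    if latency_s <= 0:
        raise ValueError("latency_s must be positive")
    return avg_power_w * latency_s


# Hypothetical chip: 1e9 synaptic ops/s at 10 mW on a 4 mm^2 die,
# taking 5 ms per image classification
density = computational_density(ops_per_s=1e9, power_w=10e-3, area_mm2=4.0)
e_inf = energy_per_inference_j(avg_power_w=10e-3, latency_s=5e-3)
print(f"{density:.3g} ops/s/W/mm^2, {e_inf * 1e6:.1f} microjoules per inference")
```

Keeping the levels separate avoids a common pitfall: a design can look excellent on a circuit-level density metric and still spend more energy per task if it needs more operations or more time to reach the same accuracy.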

Leading research institutions including IBM, Intel, and the University of Manchester have begun implementing these standardized benchmarks in their neuromorphic hardware evaluations. IBM's TrueNorth architecture reports approximately 20 milliwatts per million neurons, while Intel's Loihi demonstrates 23-30 milliwatts under similar testing conditions, representing a significant improvement over traditional von Neumann architectures for certain workloads.

The emergence of application-specific benchmarks represents another important development in the field. These include SNN-specific datasets and task profiles that better reflect the unique computational advantages of neuromorphic systems. For instance, the N-MNIST dataset specifically designed for spiking neural networks provides a more relevant benchmark than traditional MNIST for neuromorphic implementations.

Future standardization efforts must address temporal aspects of power consumption, as neuromorphic systems often exhibit variable energy profiles depending on input data characteristics and network activity. Additionally, benchmarks should incorporate metrics for energy scalability as systems grow from small prototypes to large-scale implementations. The development of automated benchmarking tools that can apply consistent measurement methodologies across diverse hardware platforms represents a critical need for advancing the field.

The evaluation of power efficiency in neuromorphic computing systems requires standardized benchmarking methodologies that enable fair comparisons across different architectures. Currently, the field lacks universally accepted benchmarking standards, creating significant challenges for researchers and industry stakeholders attempting to assess relative performance advantages.

Existing power measurement approaches vary widely, with some research groups focusing on static power consumption while others emphasize dynamic power metrics during specific computational tasks. This inconsistency makes cross-platform comparisons nearly impossible and hinders technological progress in the field. The International Neuromorphic Systems Association has recently proposed a three-tiered benchmarking framework that categorizes power measurements at the device, circuit, and system levels.

At the device level, metrics such as energy per spike and standby leakage current provide fundamental insights into the efficiency of basic neuromorphic building blocks. Circuit-level benchmarks focus on energy consumption per synaptic operation and computational density (operations per watt per square millimeter). System-level evaluations examine task-specific energy requirements for standard workloads such as image classification, speech recognition, and reinforcement learning scenarios.

Leading research organizations including IBM, Intel, and the University of Manchester have begun implementing these standardized benchmarks in their neuromorphic hardware evaluations. IBM's TrueNorth chip, which implements about one million neurons, has been reported to draw roughly 65-70 milliwatts in real-time operation, corresponding to a power density of about 20 milliwatts per square centimeter, while Intel's Loihi is reported at 23-30 milliwatts under comparable testing conditions. For certain workloads, these figures represent a significant improvement over traditional von Neumann architectures.

The emergence of application-specific benchmarks represents another important development in the field. These include SNN-specific datasets and task profiles that better reflect the unique computational advantages of neuromorphic systems. For instance, the N-MNIST dataset, an event-based version of MNIST recorded with a neuromorphic vision sensor, provides a more relevant benchmark for spiking neural networks than the original static images.

Future standardization efforts must address temporal aspects of power consumption, as neuromorphic systems often exhibit variable energy profiles depending on input data characteristics and network activity. Additionally, benchmarks should incorporate metrics for energy scalability as systems grow from small prototypes to large-scale implementations. The development of automated benchmarking tools that can apply consistent measurement methodologies across diverse hardware platforms represents a critical need for advancing the field.
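Because power draw varies with input data and network activity, a single instantaneous reading says little. One common way to handle this is to sample power over the whole run and integrate it into energy. The sketch below assumes a hypothetical trace of timestamped power samples and a separately measured idle (static) power. It uses the trapezoidal rule and is an illustration of the idea, not any particular vendor's measurement API.

```python
from typing import Sequence


def trapezoid_energy(times_s: Sequence[float], power_w: Sequence[float]) -> float:
    """Integrate sampled power (watts) over time (seconds); returns joules."""
    if len(times_s) != len(power_w) or len(times_s) < 2:
        raise ValueError("need at least two samples, with equal-length inputs")
    energy = 0.0
    for i in range(1, len(times_s)):
        dt = times_s[i] - times_s[i - 1]
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        # Area of the trapezoid between two consecutive samples.
        energy += 0.5 * (power_w[i] + power_w[i - 1]) * dt
    return energy


def dynamic_energy(
    times_s: Sequence[float],
    power_w: Sequence[float],
    static_power_w: float,
) -> float:
    """Energy above the idle baseline: total energy minus static power times duration."""
    total = trapezoid_energy(times_s, power_w)
    duration = times_s[-1] - times_s[0]
    return total - static_power_w * duration
```

Reporting total and dynamic energy separately keeps activity-dependent consumption distinct from leakage, so results from sparse and dense input streams can be compared on the same footing.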

Environmental Impact of Neuromorphic Computing Systems

The environmental impact of neuromorphic computing systems represents a critical dimension in evaluating their sustainability and long-term viability. As these brain-inspired computing architectures gain prominence, their ecological footprint deserves thorough examination. Neuromorphic systems demonstrate significant advantages in power efficiency compared to traditional von Neumann architectures, potentially reducing energy consumption by orders of magnitude.

Current testing data indicates that neuromorphic circuits can operate at power densities of 10-100 milliwatts per square centimeter, compared to conventional processors, whose power densities are typically cited at 50-100 watts per square centimeter. Lower power draw reduces the operational carbon emissions of deployed systems. For instance, large-scale implementation of neuromorphic computing in data centers could potentially reduce their carbon footprint by 30-40% based on current efficiency metrics.
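A power comparison is only meaningful when both sides use the same unit. Power density is total power divided by die area, so it can be computed for any chip whose power and area are known. The snippet below is a small sketch of that calculation. The function names and the example chip values are hypothetical and are not measurements of any real device.

```python
def power_density_w_per_cm2(power_w: float, die_area_cm2: float) -> float:
    """Total power divided by die area, in watts per square centimeter."""
    if die_area_cm2 <= 0:
        raise ValueError("die area must be positive")
    return power_w / die_area_cm2


def density_ratio(conventional_w_per_cm2: float, neuromorphic_w_per_cm2: float) -> float:
    """How many times denser the conventional chip's power draw is."""
    if neuromorphic_w_per_cm2 <= 0:
        raise ValueError("neuromorphic power density must be positive")
    return conventional_w_per_cm2 / neuromorphic_w_per_cm2


# Hypothetical example values, chosen only to illustrate the arithmetic.
neuromorphic = power_density_w_per_cm2(power_w=0.08, die_area_cm2=4.0)
conventional = power_density_w_per_cm2(power_w=150.0, die_area_cm2=2.0)
ratio = density_ratio(conventional, neuromorphic)
```

With these illustrative inputs the neuromorphic chip sits at 20 milliwatts per square centimeter and the conventional one at 75 watts per square centimeter, a gap of more than three orders of magnitude.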

The manufacturing processes for neuromorphic hardware present both challenges and opportunities from an environmental perspective. While specialized materials like phase-change memory elements may involve scarce elements such as tellurium and antimony with significant extraction impacts, the overall material requirements are typically lower than in conventional semiconductor manufacturing. Additionally, the extended operational lifespan of these systems, due to their inherent fault tolerance and adaptability, reduces electronic waste generation over time.

Water usage represents another important environmental consideration. Neuromorphic chip fabrication currently requires approximately 5-7 liters of ultra-pure water per square centimeter of silicon, which is marginally better than traditional CMOS processes. However, as manufacturing techniques evolve specifically for neuromorphic architectures, this figure is expected to improve substantially.

Heat generation and dissipation requirements also factor into environmental assessment. The significantly lower power consumption of neuromorphic systems translates to reduced cooling needs in deployment environments. This cascading effect can decrease facility energy requirements by up to 25% in large computing installations, according to recent industry analyses.

Looking forward, the environmental benefits of neuromorphic computing may extend beyond direct energy savings. These systems enable more efficient edge computing deployments, potentially reducing data transmission volumes and associated network infrastructure demands. This distributed intelligence approach could fundamentally alter the energy profile of the global computing ecosystem, offering substantial sustainability improvements as implementation scales.
