Neuromorphic Computing for IoT: Boosting Efficiency & Speed

SEP 5, 2025 · 9 MIN READ

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of the human brain. This bio-inspired approach emerged in the late 1980s when Carver Mead first introduced the concept, proposing electronic systems that mimic neuro-biological architectures. Since then, neuromorphic computing has evolved through several distinct phases, each marked by significant technological breakthroughs and expanding application domains.

The initial phase focused primarily on analog VLSI implementations that directly emulated neural functions. By the early 2000s, the field witnessed a transition toward hybrid analog-digital systems, offering improved scalability while maintaining energy efficiency. The current generation of neuromorphic systems incorporates advanced materials science innovations, including memristive devices and spintronic components, which enable more faithful replication of synaptic plasticity and neural dynamics.

In the context of the Internet of Things (IoT), neuromorphic computing addresses critical limitations of conventional computing architectures. Traditional von Neumann architectures suffer from the "von Neumann bottleneck," closely related to the "memory wall": data must constantly move between separate processing and memory units, and that movement often costs more time and energy than the computation itself. This limitation becomes particularly problematic for IoT applications, where energy constraints and real-time processing requirements are paramount. Neuromorphic systems mitigate these challenges through inherently parallel processing, co-located memory and computation, and event-driven computation models.

The primary objectives of neuromorphic computing for IoT applications center on three key dimensions: ultra-low power consumption, real-time processing capabilities, and adaptive learning. Power efficiency is arguably the most critical goal, with neuromorphic research targeting energy costs on the order of femtojoules per operation, orders of magnitude below conventional architectures. This efficiency derives largely from the event-driven nature of neuromorphic computation, where dynamic energy is consumed mainly when information is actually being processed.
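
To make the event-driven argument concrete, the sketch below counts synaptic operations for one layer processed frame by frame versus event by event. Operation count serves only as a rough proxy for dynamic energy; the layer sizes and the 2% spiking activity are hypothetical, and static leakage, which real chips still pay, is ignored.

```python
import random

def dense_ops(num_inputs: int, num_outputs: int, timesteps: int) -> int:
    """Frame-based layer: every input contributes a multiply-accumulate at every step."""
    return num_inputs * num_outputs * timesteps

def event_driven_ops(spike_trains: list[list[int]], num_outputs: int) -> int:
    """Event-driven layer: work happens only when an input emits a spike.

    spike_trains[i][t] is 1 if input i spiked at timestep t, else 0.
    Each spike triggers one synaptic update per output neuron (its fan-out).
    """
    total_spikes = sum(sum(train) for train in spike_trains)
    return total_spikes * num_outputs

random.seed(0)
N_IN, N_OUT, T = 256, 64, 100
ACTIVITY = 0.02  # hypothetical: 2% chance that an input spikes at any timestep
trains = [[1 if random.random() < ACTIVITY else 0 for _ in range(T)] for _ in range(N_IN)]

d = dense_ops(N_IN, N_OUT, T)
e = event_driven_ops(trains, N_OUT)
print(f"dense ops: {d}, event-driven ops: {e}, ratio: {d / max(e, 1):.1f}x")
```

The ratio is roughly the inverse of the activity level, which is why sparse sensor streams benefit most and dense, constantly changing inputs benefit least.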

Real-time processing capabilities constitute another fundamental objective, particularly for edge computing scenarios where latency constraints are stringent. By processing information in a parallel, distributed manner similar to biological neural networks, neuromorphic systems can deliver rapid responses to environmental stimuli without requiring cloud connectivity or substantial computational resources.

The third major objective involves implementing on-device learning and adaptation. Unlike traditional systems requiring pre-trained models, advanced neuromorphic architectures aim to incorporate unsupervised and reinforcement learning mechanisms that enable continuous adaptation to changing environments—a critical feature for autonomous IoT devices operating in dynamic settings.
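
One widely studied unsupervised rule for on-device adaptation in spiking networks is spike-timing-dependent plasticity (STDP). The following is a minimal pair-based STDP sketch with all-to-all spike pairing; the amplitudes and time constants are illustrative assumptions, not values from any particular chip.

```python
import math

# Hypothetical parameters for a pair-based STDP rule (illustrative values only).
A_PLUS = 0.01     # potentiation amplitude
A_MINUS = 0.012   # depression amplitude (slightly larger to keep weights from running away)
TAU_PLUS = 20.0   # ms, potentiation time constant
TAU_MINUS = 20.0  # ms, depression time constant
W_MIN, W_MAX = 0.0, 1.0

def stdp_delta(t_pre: float, t_post: float) -> float:
    """Weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:   # pre fired before post: strengthen (causal pairing)
        return A_PLUS * math.exp(-dt / TAU_PLUS)
    if dt < 0:   # post fired before pre: weaken (anti-causal pairing)
        return -A_MINUS * math.exp(dt / TAU_MINUS)
    return 0.0

def apply_stdp(w: float, pre_spikes: list[float], post_spikes: list[float]) -> float:
    """Sum contributions from all spike pairs, then clip to the allowed range."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            w += stdp_delta(t_pre, t_post)
    return min(W_MAX, max(W_MIN, w))

w0 = 0.5
print(apply_stdp(w0, pre_spikes=[10.0, 50.0], post_spikes=[15.0, 55.0]))  # causal: w grows
print(apply_stdp(w0, pre_spikes=[15.0, 55.0], post_spikes=[10.0, 50.0]))  # anti-causal: w shrinks
```

Because the update uses only locally available spike times, it needs no labeled data or backpropagated gradients.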

IoT Market Demand for Energy-Efficient Computing

The Internet of Things (IoT) ecosystem is experiencing unprecedented growth, with projections placing the number of connected devices above 25 billion by 2025. This explosive expansion is driving a fundamental shift in computing requirements, particularly emphasizing the need for energy-efficient solutions. Traditional computing architectures are increasingly proving inadequate for IoT applications due to their high power consumption, creating a substantial market demand for alternative computing paradigms like neuromorphic systems.

Energy constraints represent the most significant challenge for IoT deployments. Battery-powered devices in remote locations, industrial settings, or medical implants require computing solutions that can operate for extended periods without recharging or replacement. Market research indicates that energy efficiency has become the primary consideration for 78% of IoT device manufacturers, surpassing even cost considerations in many applications.

The demand for real-time data processing at the edge is another critical market driver. With the volume of IoT-generated data growing exponentially, transmitting all information to centralized cloud servers has become impractical due to bandwidth limitations, latency issues, and energy costs. This has created a robust market for edge computing solutions that can process data locally while consuming minimal power.
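
One way to cut transmitted data at the sensor is to send changes rather than raw samples. The sketch below uses delta (send-on-delta) encoding, similar in spirit to event-based vision sensors, to turn a sampled trace into sparse ON/OFF events. The threshold and the temperature trace are hypothetical.

```python
def delta_encode(samples: list[float], threshold: float) -> list[tuple[int, int]]:
    """Convert a sampled signal into sparse (index, polarity) events.

    An ON event (+1) is emitted each time the signal rises `threshold` above the
    last reference level, an OFF event (-1) each time it falls `threshold` below.
    Flat or slowly varying segments produce no events at all.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    if not samples:
        return []
    events = []
    reference = samples[0]
    for i, x in enumerate(samples[1:], start=1):
        while x - reference >= threshold:
            reference += threshold
            events.append((i, +1))
        while reference - x >= threshold:
            reference -= threshold
            events.append((i, -1))
    return events

# Hypothetical temperature trace: mostly flat, a tiny wobble, then one step change.
trace = [20.0] * 50 + [20.05] * 20 + [21.0] * 30
events = delta_encode(trace, threshold=0.25)
print(f"{len(trace)} samples -> {len(events)} events: {events}")
```

Here 100 samples collapse to a handful of events, all at the step change; the threshold trades signal fidelity against the volume of data leaving the device.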

Security requirements further amplify the need for efficient computing in IoT. As devices become more integrated into critical infrastructure and personal environments, implementing robust security measures becomes essential. However, conventional encryption and security protocols often demand significant computational resources, creating tension between security requirements and energy constraints.

Market segmentation reveals varying demands across industries. Healthcare IoT applications prioritize reliability and longevity, with devices often needing to function for years without maintenance. Industrial IoT emphasizes robustness in harsh environments while maintaining low power profiles. Consumer IoT focuses on cost-effectiveness while balancing performance needs.

The economic implications of energy-efficient IoT computing are substantial. Studies indicate that reducing power consumption by just 30% could save billions in operational costs across global IoT deployments. This economic incentive has created a competitive market landscape where vendors are racing to develop more efficient computing solutions.

Neuromorphic computing aligns closely with these market demands by mimicking the brain's energy-efficient information processing. The technology promises orders-of-magnitude improvements in energy efficiency while maintaining or enhancing computational capabilities for pattern recognition, sensor data processing, and decision-making, all critical functions in IoT applications.

Current Neuromorphic Technologies and Barriers

The neuromorphic computing landscape is currently dominated by several key technologies, each with distinct approaches to mimicking brain-like processing. Spiking Neural Networks (SNNs) represent the most prominent implementation, utilizing discrete spikes for information transmission rather than continuous values found in traditional neural networks. This event-driven processing enables significant power efficiency advantages, with systems like Intel's Loihi and IBM's TrueNorth demonstrating energy consumption reductions of up to 1000x compared to conventional computing architectures when handling sensor data processing tasks.
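
The leaky integrate-and-fire (LIF) neuron is a common building block for SNNs. The sketch below integrates one LIF neuron with forward Euler in arbitrary illustrative units. For simplicity it steps through every timestep, whereas event-driven hardware updates neuron state only when spikes arrive.

```python
def simulate_lif(input_current: list[float], dt: float = 1.0, tau_m: float = 20.0,
                 v_rest: float = 0.0, v_thresh: float = 1.0, v_reset: float = 0.0,
                 r_m: float = 1.0) -> tuple[list[float], list[int]]:
    """Leaky integrate-and-fire neuron, forward-Euler integration.

    tau_m * dV/dt = -(V - v_rest) + r_m * I(t). When V reaches v_thresh a spike
    is recorded and V is reset to v_reset. Parameter values are illustrative.
    """
    v = v_rest
    trace, spikes = [], []
    for step, i_in in enumerate(input_current):
        v += (dt / tau_m) * (-(v - v_rest) + r_m * i_in)
        if v >= v_thresh:
            spikes.append(step)  # emit a discrete spike event
            v = v_reset
        trace.append(v)
    return trace, spikes

# No input gives no spikes; a constant suprathreshold input gives regular spiking.
_, quiet = simulate_lif([0.0] * 200)
_, active = simulate_lif([1.5] * 200)
print("spikes with no input:", len(quiet))
print("first spike times with constant input:", active[:5])
```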

Memristive devices constitute another critical technology, functioning as artificial synapses that can maintain state without continuous power. These non-volatile memory elements enable in-memory computing paradigms that drastically reduce the energy costs associated with data movement between processing and memory units. Recent research has demonstrated memristor-based neuromorphic systems achieving 10-100x improvements in energy efficiency for pattern recognition tasks relevant to IoT applications.
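
The in-memory computing idea can be illustrated with an idealized crossbar: weights are stored as device conductances, and the physics of the array performs the multiply-accumulate. This sketch ignores wire resistance, sneak paths, and the converters needed at the array edges, and the conductance values are hypothetical. Signed weights are usually handled with a pair of devices per weight, which is omitted here.

```python
def crossbar_mvm(conductances: list[list[float]], voltages: list[float]) -> list[float]:
    """Ideal memristor crossbar read-out.

    conductances[i][j] is the conductance (siemens) of the device joining row i
    to column j. Driving row i with voltages[i] makes each device pass current
    G_ij * V_i (Ohm's law); currents sharing a column add (Kirchhoff's current
    law), so column j outputs I_j = sum_i G_ij * V_i: a matrix-vector product
    computed where the weights are stored.
    """
    rows, cols = len(conductances), len(conductances[0])
    if len(voltages) != rows:
        raise ValueError("need one input voltage per row")
    return [sum(conductances[i][j] * voltages[i] for i in range(rows)) for j in range(cols)]

G = [[1e-6, 2e-6],
     [3e-6, 4e-6],
     [5e-6, 6e-6]]    # hypothetical conductances, 3 rows x 2 columns
V = [0.1, 0.2, 0.0]   # read voltages in volts
I = crossbar_mvm(G, V)
print(I)              # column currents in amperes
```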

Despite these advances, significant barriers impede widespread adoption of neuromorphic computing in IoT environments. The fabrication complexity of neuromorphic hardware presents a major challenge, as manufacturing processes for memristive materials and 3D integration of neuromorphic components remain inconsistent and costly. This results in limited scalability and higher production expenses compared to traditional semiconductor technologies.

Software development frameworks for neuromorphic systems remain immature, creating substantial programming barriers. The event-driven paradigm requires fundamentally different approaches to algorithm design, and the lack of standardized programming models forces developers to work with hardware-specific tools, limiting portability and increasing development costs.

Device reliability and variability issues persist across neuromorphic implementations. Memristive devices suffer from cycle-to-cycle variations and limited endurance, while analog computing elements exhibit sensitivity to environmental factors like temperature fluctuations—particularly problematic for IoT deployments in diverse environments.
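
A quick Monte Carlo sketch shows how cycle-to-cycle variation propagates to a crossbar-style dot product. Variation is modeled as independent relative Gaussian noise on each stored weight; that is an assumption, since real devices also show drift, nonlinearity, and temperature dependence. The weights and inputs are hypothetical.

```python
import random
import statistics

def noisy_dot(weights: list[float], inputs: list[float], sigma: float,
              rng: random.Random) -> float:
    """Dot product where each stored weight is perturbed by relative Gaussian noise.

    Models programming variation as G_actual = G_target * (1 + eps), eps ~ N(0, sigma).
    """
    return sum(w * (1.0 + rng.gauss(0.0, sigma)) * x for w, x in zip(weights, inputs))

def relative_error_spread(sigma: float, trials: int = 2000, seed: int = 1) -> float:
    """Standard deviation of the relative output error over many noisy reads."""
    rng = random.Random(seed)
    weights = [0.5] * 64   # hypothetical target weights
    inputs = [1.0] * 64
    ideal = sum(w * x for w, x in zip(weights, inputs))
    errors = [(noisy_dot(weights, inputs, sigma, rng) - ideal) / ideal for _ in range(trials)]
    return statistics.stdev(errors)

for sigma in (0.01, 0.05, 0.10):
    print(f"device sigma={sigma:.2f} -> output error std ~ {relative_error_spread(sigma):.4f}")
```

With 64 equal weights, independent device errors partly average out, giving an output spread near sigma/8 (sigma divided by the square root of 64). Correlated shifts, such as a temperature change affecting every device at once, do not average out this way.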

The integration challenge with existing IoT infrastructure represents another significant barrier. Current neuromorphic systems typically operate as specialized accelerators rather than complete computing solutions, requiring complex system architectures to interface with conventional digital systems. This integration overhead can negate efficiency gains in smaller IoT devices with strict power and size constraints.

Finally, the lack of standardization across the neuromorphic ecosystem hampers interoperability and slows industry-wide adoption. Without established benchmarks and performance metrics specifically designed for neuromorphic computing, meaningful comparisons between different approaches remain difficult, creating uncertainty for potential adopters in the IoT space.

Current Neuromorphic Solutions for IoT Applications

01 Memristor-based neuromorphic architectures

Memristor-based neuromorphic computing systems offer significant improvements in processing efficiency and speed by mimicking the brain's neural structure. These architectures utilize memristive devices to implement synaptic functions, enabling parallel processing and reducing power consumption compared to traditional computing systems. The non-volatile nature of memristors allows for persistent storage without continuous power, further enhancing energy efficiency while maintaining high computational speeds for AI applications.

- Memristor-based neuromorphic architectures: Memristor-based neuromorphic computing systems offer significant improvements in efficiency and speed by mimicking the brain's neural structure. These architectures utilize memristive devices to implement synaptic functions with lower power consumption compared to traditional CMOS implementations. The non-volatile nature of memristors enables persistent storage without continuous power, while their analog behavior allows for efficient parallel processing of neural network operations, resulting in faster computation for AI workloads.

- Spiking neural networks for energy efficiency: Spiking Neural Networks (SNNs) significantly enhance neuromorphic computing efficiency by transmitting information through discrete spikes rather than continuous signals. This event-driven processing approach substantially reduces power consumption as computations occur only when necessary. SNNs more closely mimic biological neural systems, enabling efficient temporal information processing and improved performance in real-time applications while maintaining low energy requirements, making them ideal for edge computing and mobile devices.

- Hardware acceleration techniques: Specialized hardware accelerators designed specifically for neuromorphic computing can dramatically improve processing speed and energy efficiency. These include custom ASIC designs, FPGA implementations, and specialized neuromorphic chips that optimize parallel processing of neural network operations. By implementing neural network functions directly in hardware rather than simulating them in software on general-purpose processors, these accelerators achieve orders of magnitude improvements in both speed and energy efficiency for AI workloads.

- Novel materials and device physics: Advanced materials and novel device physics are being explored to enhance neuromorphic computing performance. These include phase-change materials, ferroelectric devices, and spintronic components that can implement synaptic and neuronal functions with higher efficiency than conventional electronics. These materials enable multi-state memory capabilities, lower switching energies, and faster state transitions, contributing to neuromorphic systems with improved speed, energy efficiency, and computational density.

- Optimized neural network architectures: Specialized neural network architectures designed specifically for neuromorphic hardware can significantly improve computational efficiency and speed. These include sparse connectivity patterns, reduced precision representations, and hierarchical structures that minimize unnecessary computations. By co-designing the neural network architecture with the neuromorphic hardware implementation, these approaches achieve better utilization of hardware resources, reduced memory access requirements, and improved throughput for AI applications.
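
Reduced-precision representations can be illustrated with uniform symmetric quantization, one common choice among several: each weight is stored as a small signed integer plus a shared scale factor. The weights below are hypothetical, and no specific chip's number format is implied.

```python
def quantize_symmetric(weights: list[float], bits: int) -> tuple[list[int], float]:
    """Uniform symmetric quantization of weights to signed `bits`-bit integers.

    Returns the integer codes and the scale such that w ~= code * scale.
    """
    if bits < 2:
        raise ValueError("need at least 2 bits for a signed range")
    q_max = 2 ** (bits - 1) - 1               # e.g. 7 for 4-bit, 127 for 8-bit
    max_abs = max(abs(w) for w in weights) or 1.0
    scale = max_abs / q_max
    codes = [max(-q_max, min(q_max, round(w / scale))) for w in weights]
    return codes, scale

def dequantize(codes: list[int], scale: float) -> list[float]:
    return [c * scale for c in codes]

w = [0.82, -0.41, 0.05, -0.97, 0.33]   # hypothetical trained weights
for b in (8, 4, 2):
    codes, s = quantize_symmetric(w, b)
    err = max(abs(a - r) for a, r in zip(w, dequantize(codes, s)))
    print(f"{b}-bit codes={codes} max abs error={err:.4f}")
```

Fewer bits shrink memory and per-operation energy at the cost of larger rounding error, which hardware-aware training tries to absorb.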

02 Spiking neural networks optimization

Spiking neural networks (SNNs) enhance neuromorphic computing efficiency through event-driven processing that activates only when neurons receive sufficient stimulation. This approach significantly reduces power consumption compared to traditional neural networks that operate continuously. Optimizations in spike timing, encoding methods, and network topology allow for faster information processing while maintaining energy efficiency. These improvements enable SNNs to handle complex computational tasks with lower latency and power requirements.

03 Hardware-software co-design for neuromorphic systems

Hardware-software co-design approaches optimize neuromorphic computing by developing specialized hardware architectures alongside tailored algorithms. This integrated design methodology ensures that software can fully leverage hardware capabilities while hardware is optimized for specific neural network operations. The co-design strategy reduces communication overhead, minimizes memory access bottlenecks, and enables more efficient parallel processing. These optimizations result in significant improvements in both computational speed and energy efficiency for neuromorphic applications.

04 Novel materials and fabrication techniques

Advanced materials and fabrication techniques are revolutionizing neuromorphic computing efficiency. Phase-change materials, ferroelectric compounds, and 2D materials enable the creation of more compact and energy-efficient synaptic elements. These materials exhibit properties that allow for faster switching speeds, lower power consumption, and higher integration density. Novel fabrication techniques, including 3D stacking and monolithic integration, further enhance performance by reducing interconnect delays and enabling more complex neuromorphic architectures with improved computational capabilities.

05 In-memory computing for neuromorphic applications

In-memory computing architectures overcome the von Neumann bottleneck by performing computations directly within memory units, dramatically improving neuromorphic system efficiency. This approach eliminates the need to constantly transfer data between separate processing and memory components, reducing energy consumption and latency. By enabling massively parallel operations where memory elements simultaneously function as computational units, in-memory computing achieves significant speed improvements for neural network operations while maintaining low power requirements, making it ideal for edge AI applications.

Leading Companies in Neuromorphic Computing for IoT

Neuromorphic computing for IoT is currently in an early growth phase, with the market expected to expand significantly due to increasing demand for energy-efficient edge computing solutions. The global market is projected to reach several billion dollars by 2030, driven by applications in wearables, smart homes, and industrial IoT. Leading players include specialized startups like Polyn Technology and Syntiant, which focus on ultra-low-power neuromorphic chips, alongside established tech giants such as IBM and Intel that leverage their extensive R&D capabilities. Academic institutions including Tsinghua University, KAIST, and EPFL are advancing fundamental research, while companies like Huawei and SK hynix are integrating neuromorphic principles into their semiconductor roadmaps. The technology is approaching commercial viability for specific IoT applications, with early adopters already deploying solutions that demonstrate significant improvements in power efficiency and real-time processing capabilities.

Polyn Technology Ltd.

Technical Solution: Polyn Technology has pioneered Neuromorphic Analog Signal Processing (NASP) technology specifically designed for IoT edge computing. Their approach combines the principles of neuromorphic computing with analog signal processing to create ultra-efficient AI solutions for sensor data analysis. Polyn's NASP chips directly process analog signals from sensors without the need for traditional analog-to-digital conversion, dramatically reducing power consumption and latency. This is particularly valuable for IoT applications where sensors continuously generate analog data. The company's technology implements neural networks directly in analog hardware, allowing for extremely efficient pattern recognition and signal analysis. Their neuromorphic processors can perform complex AI tasks while consuming only microwatts of power, enabling always-on intelligence in severely power-constrained IoT devices. Polyn's architecture features in-memory computing elements that eliminate the energy-intensive data movement between memory and processing units found in conventional systems. For IoT deployments, their technology enables sophisticated sensor fusion, anomaly detection, and pattern recognition directly at the sensor node without requiring cloud connectivity. The company has demonstrated their technology in applications including wearable health monitors, industrial sensors, and smart home devices, showing 10-100x improvements in energy efficiency compared to digital solutions.

Strengths: Direct analog signal processing eliminates power-hungry ADC components; extremely low power consumption suitable for energy harvesting applications; specialized for sensor data analysis common in IoT deployments. Weaknesses: Less flexible than general-purpose digital solutions; limited to specific types of neural network architectures; relatively new technology with smaller ecosystem compared to established players.

International Business Machines Corp.

Technical Solution: IBM's neuromorphic computing approach for IoT centers on its TrueNorth chip and subsequent neuromorphic architectures. TrueNorth mimics the brain's neural structure with one million programmable neurons and 256 million synapses, consuming about 70 mW in typical operation while delivering performance that would require far more energy on conventional systems. IBM's neuromorphic systems implement spiking neural networks (SNNs) that process information in an event-driven manner, activating only when input spikes arrive. This approach significantly reduces power consumption compared to traditional computing architectures that continuously process data regardless of changes. For IoT applications, IBM has developed specialized neuromorphic processors that can perform complex AI tasks at the edge with minimal energy requirements. TrueNorth itself performs inference only, with networks trained offline, so adapting to new data patterns on the device without cloud connectivity requires learning mechanisms beyond that chip. IBM's neuromorphic technology also incorporates advanced memory-compute integration, placing computational elements directly alongside memory to eliminate the energy-intensive data movement that plagues conventional architectures.

Strengths: Extremely low power consumption suitable for battery-powered IoT devices; highly scalable architecture allowing deployment across various IoT applications; proven technology with multiple generations of development. Weaknesses: Higher initial implementation complexity compared to conventional solutions; requires specialized programming approaches different from mainstream AI frameworks; limited ecosystem of development tools compared to traditional computing platforms.

Key Patents and Research in Brain-Inspired Computing

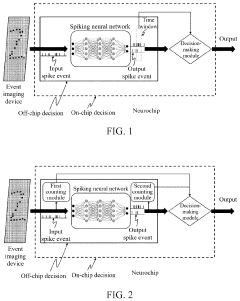

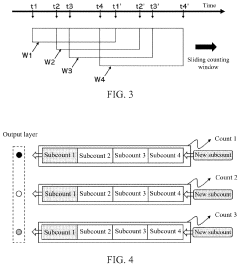

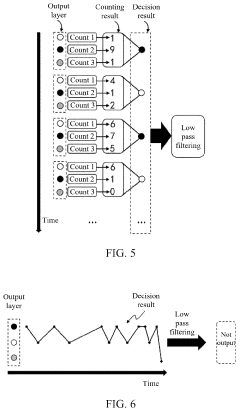

Spike event decision-making device, method, chip and electronic device

Patent pending: US20240086690A1

Innovation

- A spike event decision-making device and method that utilizes counting modules to determine decision-making results based on the number of spike events fired by neurons in a spiking neural network, allowing for adaptive decision-making without fixed time windows, and incorporating sub-counters to improve reliability and accuracy by considering transition rates and occurrence ratios.
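
The general idea of deciding from spike counts without a fixed time window can be sketched as follows. This is a hypothetical illustration, not the patented method: it commits to a decision once the leading output neuron has both a minimum number of spikes and a minimum ratio over the runner-up, and it does not model the patent's sub-counters or transition-rate logic.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple

def decide_from_spikes(events: Iterable[int], min_count: int = 5,
                       min_ratio: float = 2.0) -> Optional[Tuple[int, int]]:
    """Return (winning_neuron, events_consumed) as soon as a decision is justified.

    `events` yields the index of the output neuron that fired each spike, in
    arrival order. No fixed time window is used: evidence accumulates until the
    leader has >= min_count spikes and >= min_ratio times the runner-up's count.
    Returns None if the stream ends without a decision.
    """
    counts = Counter()
    for n, neuron in enumerate(events, start=1):
        counts[neuron] += 1
        ranked = counts.most_common(2)
        leader, top = ranked[0]
        runner_up = ranked[1][1] if len(ranked) > 1 else 0
        if top >= min_count and top >= min_ratio * runner_up:
            return leader, n
    return None

print(decide_from_spikes([0, 1, 0, 0, 2, 0, 0]))   # clear input: decides early
print(decide_from_spikes([0, 1] * 10))             # ambiguous input: no decision
```

Clear inputs are resolved after only a few spikes, while ambiguous ones keep accumulating evidence, which is the practical appeal of dropping the fixed window.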

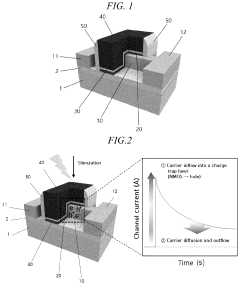

Synaptic device, neuromorphic device including synaptic device, and operating methods thereof

Patent pending: US20230419089A1

Innovation

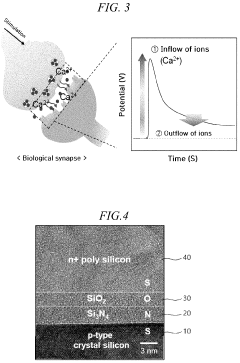

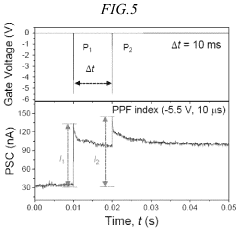

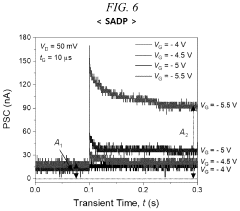

- A synaptic device with a SONS (silicon/oxide/nitride/silicon) structure: a doped polysilicon gate electrode, a blocking oxide insulating layer, a charge-trap nitride layer, and a silicon channel. Gate voltage pulses control the post-synaptic current and synaptic plasticity, and the device exhibits biological-synapse-like behavior, including spike-amplitude-, spike-duration-, spike-frequency-, spike-number-, and spike-timing-dependent plasticity.
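
Gate-pulse-driven plasticity of this kind is often summarized with a simple phenomenological model in which each pulse moves the conductance part of the way toward an upper or lower bound. The sketch below is such a model with hypothetical parameters; it is not derived from the patent. The step fraction `alpha` could be made a function of pulse amplitude or duration to mimic the dependencies listed above.

```python
def apply_pulses(g: float, pulses: list[int], g_min: float = 1e-6, g_max: float = 1e-5,
                 alpha: float = 0.1) -> list[float]:
    """Phenomenological synaptic-device model driven by gate pulses.

    +1 = potentiating pulse, -1 = depressing pulse, 0 = no pulse. Each pulse
    moves the conductance (siemens) a fraction `alpha` of the remaining distance
    to its bound, giving the saturating, nonlinear updates commonly reported for
    charge-trap and memristive synapses. All parameter values are hypothetical.
    """
    history = []
    for p in pulses:
        if p > 0:
            g += alpha * (g_max - g)   # potentiation: approach g_max
        elif p < 0:
            g -= alpha * (g - g_min)   # depression: approach g_min
        history.append(g)
    return history

trace = apply_pulses(1e-6, [+1] * 20 + [-1] * 20)
print(f"after potentiation: {trace[19]:.3e} S, after depression: {trace[-1]:.3e} S")
```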

Edge AI Implementation Strategies

Implementing neuromorphic computing at the edge requires strategic approaches that balance computational power with energy constraints. Edge AI deployment for neuromorphic systems can follow several implementation pathways, each offering distinct advantages for IoT environments. The hardware-software co-design approach represents a primary strategy, where neuromorphic chips are integrated with specialized software frameworks that optimize spiking neural network operations. This integration enables efficient processing of sensory data directly on IoT devices without constant cloud connectivity.

Tiered deployment strategies offer another viable approach, where neuromorphic processing is distributed across a hierarchy of edge devices. Simple pattern recognition and signal processing occur on ultra-low-power endpoint devices, while more complex inference tasks are handled by gateway devices with greater computational capacity. This architecture minimizes data transmission while maintaining responsive performance for time-critical applications.
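
A tiered pipeline can be sketched as a cheap endpoint test that decides whether a sensor window is worth sending to the gateway. Both the endpoint score and the gateway classifier below are hypothetical placeholders; on real hardware the endpoint stage might be a small spiking network and the gateway stage a larger model.

```python
from dataclasses import dataclass

@dataclass
class TierStats:
    handled_locally: int = 0
    escalated: int = 0

def endpoint_score(window: list[float]) -> float:
    """Cheap endpoint check: peak deviation from the window mean."""
    mean = sum(window) / len(window)
    return max(abs(x - mean) for x in window)

def gateway_classify(window: list[float]) -> str:
    """Hypothetical stand-in for the heavier model running on the gateway."""
    return "anomaly" if max(window) > 5.0 else "benign-transient"

def run_tiers(windows: list[list[float]], escalate_above: float) -> tuple[TierStats, list[str]]:
    stats, verdicts = TierStats(), []
    for w in windows:
        if endpoint_score(w) <= escalate_above:
            stats.handled_locally += 1      # nothing is sent upstream
        else:
            stats.escalated += 1            # only this window leaves the device
            verdicts.append(gateway_classify(w))
    return stats, verdicts

quiet = [[1.0, 1.1, 0.9, 1.0]] * 98
spiky = [[1.0, 6.0, 1.0, 1.0], [1.0, 2.5, 1.0, 1.0]]
stats, verdicts = run_tiers(quiet + spiky, escalate_above=1.0)
print(stats, verdicts)
```

Only 2 of the 100 windows leave the endpoint here; the escalation threshold trades gateway load against the risk of missing subtle events.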

Hybrid computing models combine traditional digital processing with neuromorphic elements to leverage the strengths of both paradigms. These systems use conventional processors for precise numerical calculations and control functions, while neuromorphic components handle pattern recognition, anomaly detection, and other brain-inspired computing tasks. Routing each task to the domain best suited to it enables efficient resource utilization across diverse IoT workloads.

Adaptive power management represents a critical implementation strategy for neuromorphic edge systems. By dynamically adjusting computational resources based on task requirements and available energy, these systems can extend operational lifetimes in battery-powered IoT deployments. Event-driven processing further enhances efficiency by activating neuromorphic circuits only when relevant input signals are detected, maintaining minimal power consumption during idle periods.
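
A minimal energy-aware policy combines both ideas from this paragraph: the operating mode follows the available energy, and a detected event always wakes the pipeline. The modes, thresholds, and the every-10th-tick background check in this sketch are hypothetical.

```python
from enum import Enum

class Mode(Enum):
    FULL = "full"          # process every tick
    REDUCED = "reduced"    # occasional background checks only
    SENTINEL = "sentinel"  # only the wake-on-event path stays active

def select_mode(battery_frac: float, harvest_mw: float, load_full_mw: float) -> Mode:
    """Pick an operating mode from available energy (hypothetical policy).

    battery_frac: state of charge in [0, 1]; harvest_mw: current harvested power;
    load_full_mw: power drawn in FULL mode. Thresholds are illustrative.
    """
    if harvest_mw >= load_full_mw or battery_frac > 0.6:
        return Mode.FULL
    if battery_frac > 0.2:
        return Mode.REDUCED
    return Mode.SENTINEL

def should_process(mode: Mode, event_detected: bool, tick: int) -> bool:
    """Event-driven gating: in every mode, a detected event wakes the pipeline."""
    if event_detected:
        return True
    if mode is Mode.FULL:
        return True
    if mode is Mode.REDUCED:
        return tick % 10 == 0   # hypothetical background check every 10th tick
    return False

for soc in (0.9, 0.4, 0.1):
    print(soc, select_mode(soc, harvest_mw=0.0, load_full_mw=5.0))
```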

Federated learning approaches enable neuromorphic edge devices to collectively improve their performance while preserving data privacy. Rather than transmitting raw sensor data to centralized servers, devices share model updates derived from local learning experiences. This distributed intelligence paradigm is particularly valuable for IoT applications in sensitive environments such as healthcare, industrial monitoring, and smart cities, where data sovereignty concerns are paramount.
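
The aggregation step at the heart of many federated schemes is Federated Averaging (FedAvg): a server combines client models weighted by their local sample counts. The sketch below shows only that step on flat weight vectors; client training and communication are out of scope, and the numbers are hypothetical.

```python
def federated_average(client_updates: list[tuple[list[float], int]]) -> list[float]:
    """FedAvg-style aggregation.

    Each entry is (weights, num_local_samples). The result is the per-parameter
    average of client weights, weighted by sample count. Only weights are
    exchanged; raw sensor data stays on the devices.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(client_updates[0][0])
    avg = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for k, w in enumerate(weights):
            avg[k] += w * n / total
    return avg

updates = [([0.2, 1.0], 100), ([0.4, 0.0], 300)]   # hypothetical (weights, sample count)
print(federated_average(updates))
```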

Standardized development frameworks are emerging to simplify neuromorphic implementation at the edge. These toolchains provide abstraction layers that shield developers from the complexities of neuromorphic hardware while enabling efficient deployment of spiking neural networks. As these frameworks mature, they are likely to accelerate adoption across diverse IoT application domains by reducing implementation barriers and fostering ecosystem growth.

Tiered deployment strategies offer another viable approach, where neuromorphic processing is distributed across a hierarchy of edge devices. Simple pattern recognition and signal processing occur on ultra-low-power endpoint devices, while more complex inference tasks are handled by gateway devices with greater computational capacity. This architecture minimizes data transmission while maintaining responsive performance for time-critical applications.

Power Consumption Benchmarks and Optimization

Power consumption is a critical benchmark for neuromorphic computing systems in IoT applications, where energy efficiency directly determines device longevity and deployment feasibility. Published benchmarks indicate that neuromorphic chips hold significant power advantages over traditional computing architectures. IBM's TrueNorth, for example, consumes roughly 70 mW while processing sensory data in real time, a figure cited as approximately 1/1000th of the power conventional processors need for similar tasks. SpiNNaker systems have been reported to draw about 1 W per node while simulating neural networks comparable to those requiring 10-15 W in GPU implementations.

Energy optimization techniques for neuromorphic systems fall into several categories. At the hardware level, asynchronous circuit design removes the global clock, whose distribution network switches and draws power on every cycle even when there is no work to do; components instead activate only when they have spikes to process. Recent implementations report 20-30% power reductions from this approach. Novel memory architectures based on resistive RAM (ReRAM) and phase-change memory (PCM) reduce energy consumption further by co-locating memory and processing elements, which cuts the cost of data movement, often estimated at 60-70% of total system energy.
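
The 60-70% figure also bounds what co-location alone can achieve. A simple worked model, assuming total energy splits into a compute part and a data-movement part with movement fraction f, and that in-memory computing divides data-movement energy by a hypothetical factor k:

```latex
E_{\text{total}} = E_{\text{compute}} + E_{\text{move}}, \qquad f = \frac{E_{\text{move}}}{E_{\text{total}}}

E'_{\text{total}} = E_{\text{compute}} + \frac{E_{\text{move}}}{k}
                  = E_{\text{total}}\left[(1 - f) + \frac{f}{k}\right]

\text{savings} = 1 - \frac{E'_{\text{total}}}{E_{\text{total}}} = f\left(1 - \frac{1}{k}\right) \le f
```

With f = 0.65 (the midpoint of the quoted range) and an assumed k = 4, the savings come to 0.65 × 0.75 ≈ 49%. Even eliminating data movement entirely (k → ∞) cannot exceed f, so further gains must come from the compute side, for example through asynchronous design.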

Algorithmic optimizations provide another avenue for power reduction. Sparse coding techniques reduce neural activity by encoding information in minimal spike patterns, decreasing dynamic power consumption by up to 40% in experimental setups. Adaptive threshold mechanisms dynamically adjust neuron firing thresholds based on recent activity patterns, preventing unnecessary spike generation and propagation during periods of low information content.
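
The adaptive-threshold mechanism can be illustrated with a discrete-time leaky integrate-and-fire (LIF) neuron whose threshold jumps after each spike and then relaxes back to its baseline. This is a minimal sketch: the neuron model, the function name, and all parameter values (`tau_m`, `v_th0`, `th_jump`, `tau_th`) are assumptions chosen for illustration, not taken from any particular chip.

```python
from typing import List


def lif_spike_count(inputs: List[float], adaptive: bool,
                    tau_m: float = 10.0, v_th0: float = 1.0,
                    th_jump: float = 0.5, tau_th: float = 50.0) -> int:
    """Discrete-time leaky integrate-and-fire neuron; returns the spike count.
    With adaptive=True the threshold rises after each spike and decays back."""
    v = 0.0       # membrane potential
    th = v_th0    # current firing threshold
    spikes = 0
    for i_t in inputs:
        v += -v / tau_m + i_t           # leak toward 0, then integrate the input
        if v >= th:
            spikes += 1
            v = 0.0                      # reset after firing
            if adaptive:
                th += th_jump            # spike-triggered threshold increase
        if adaptive:
            th += (v_th0 - th) / tau_th  # relax toward the baseline threshold
    return spikes


if __name__ == "__main__":
    drive = [0.3] * 100
    print(lif_spike_count(drive, adaptive=False))  # 25
    print(lif_spike_count(drive, adaptive=True))   # fewer than 25
```

Driven by a constant input of 0.3 for 100 steps, the fixed-threshold neuron fires every fourth step (25 spikes), while the adaptive neuron fires considerably fewer times for the same input. Because each spike triggers downstream synaptic events, fewer spikes translate into less dynamic energy on event-driven hardware.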

System-level power management strategies include selectively activating neuromorphic cores according to computational demand and applying dynamic voltage-frequency scaling tailored to spike-processing requirements. Intel's Loihi chip, for instance, implements fine-grained power gating that can deactivate unused neural cores, with reported savings of up to 50% under partial workloads without compromising computational integrity.
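
The effect of power gating under partial load can be estimated with a toy per-core model: active cores draw leakage plus dynamic power, idle cores draw only leakage, and gated idle cores draw (ideally) nothing. The function and all numbers below are hypothetical; real savings depend on the leakage share of a given process and on how much of the chip a workload occupies.

```python
def chip_power_mw(n_cores: int, n_active: int, p_leak_mw: float,
                  p_dyn_mw: float, power_gating: bool) -> float:
    """Toy model: active cores draw leakage + dynamic power; idle cores draw
    leakage only, or nothing if they are power-gated (idealized)."""
    if not 0 <= n_active <= n_cores:
        raise ValueError("n_active must be between 0 and n_cores")
    n_idle = n_cores - n_active
    active_power = n_active * (p_leak_mw + p_dyn_mw)
    idle_power = 0.0 if power_gating else n_idle * p_leak_mw
    return active_power + idle_power


if __name__ == "__main__":
    ungated = chip_power_mw(128, 32, 0.25, 0.75, power_gating=False)  # 56.0
    gated = chip_power_mw(128, 32, 0.25, 0.75, power_gating=True)     # 32.0
    print(f"{ungated} mW -> {gated} mW ({1 - gated / ungated:.0%} saved)")
```

For a hypothetical 128-core chip with 32 active cores, 0.25 mW leakage and 0.75 mW dynamic power per core, the model gives 56 mW without gating and 32 mW with gating, roughly a 43% reduction. The saving grows with the idle fraction and the leakage share, which is why "up to" figures are workload-dependent.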

Benchmark comparisons across application domains show that neuromorphic systems excel particularly in always-on sensing. Gesture recognition implementations on neuromorphic hardware, for instance, have been reported to consume 10-100x less energy than conventional microcontroller solutions. Similarly, keyword spotting on neuromorphic platforms draws 1-5 mW, compared with 50-200 mW for traditional DSP implementations, enough to extend continuous operation of battery-powered IoT devices from days to months.
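
The days-versus-months contrast follows from simple arithmetic. The sketch assumes an idealized 7,500 mWh energy budget (a hypothetical round figure, roughly two AA alkaline cells at 3 V under light load) and ignores self-discharge, converter losses, temperature, and the power drawn by the sensor and radio.

```python
def battery_life_days(capacity_mwh: float, avg_power_mw: float) -> float:
    """Idealized runtime in days: ignores self-discharge, converter losses,
    temperature effects, and sensor/radio power."""
    if avg_power_mw <= 0:
        raise ValueError("average power must be positive")
    return capacity_mwh / avg_power_mw / 24.0  # mWh / mW = hours; / 24 = days


if __name__ == "__main__":
    BUDGET_MWH = 7500.0  # hypothetical: ~2 AA alkaline cells (2,500 mAh at 3 V)
    for label, p in [("neuromorphic, 1 mW", 1.0), ("neuromorphic, 5 mW", 5.0),
                     ("DSP, 50 mW", 50.0), ("DSP, 200 mW", 200.0)]:
        print(f"{label}: {battery_life_days(BUDGET_MWH, p):.1f} days")
```

Under these assumptions the budget lasts 312.5 and 62.5 days at 1 mW and 5 mW, but only about 6 and 1.6 days at 50 mW and 200 mW, consistent with the months-versus-days claim.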
