Neuromorphic vs Conventional Systems: Energy Use Metrics

SEP 8, 2025 | 9 MIN READ

Neuromorphic Computing Background and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. This approach emerged in the late 1980s when Carver Mead introduced the concept of using electronic analog circuits to mimic neuro-biological architectures. Since then, the field has evolved significantly, with major technological advancements occurring in the past decade as traditional computing architectures face increasing limitations in energy efficiency.

The evolution of neuromorphic computing has been driven by the von Neumann bottleneck in conventional computing systems, where the separation between processing and memory units creates significant energy inefficiencies. Because moving data between these units consumes substantially more energy than the computation itself, neuromorphic systems offer a promising alternative: they integrate memory and processing, much as biological neural networks do.

Current technological trends indicate a growing interest in neuromorphic computing across both academic and industrial sectors. Major research institutions and technology companies have invested heavily in developing neuromorphic chips, including IBM's TrueNorth, Intel's Loihi, and BrainChip's Akida. These developments reflect the recognition that energy efficiency will be a critical factor in the future of computing, especially as applications in artificial intelligence and machine learning continue to expand.

The primary objective of neuromorphic computing research is to achieve computational systems that can match or exceed the energy efficiency of the human brain, which operates at approximately 20 watts while performing complex cognitive tasks. Conventional computing systems require orders of magnitude more energy to perform similar tasks, highlighting the potential gains from neuromorphic approaches.

Specifically regarding energy use metrics, neuromorphic systems aim to optimize several key parameters: energy per synaptic operation (measured in picojoules), static power consumption, and overall system efficiency for specific computational tasks. These metrics provide a framework for comparing neuromorphic architectures with conventional computing systems and evaluating progress toward more energy-efficient computing paradigms.
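As a rough illustration of how these parameters combine, the sketch below models the energy of a workload as static power multiplied by runtime, plus a fixed dynamic cost per synaptic operation. The function names and all example values are assumptions chosen for illustration; they are not measurements from any particular chip.

```python
def workload_energy_joules(static_power_w, runtime_s, synaptic_ops, energy_per_op_pj):
    """Total energy = static (always-on) energy + dynamic energy of the operations."""
    static_energy = static_power_w * runtime_s
    dynamic_energy = synaptic_ops * energy_per_op_pj * 1e-12  # pJ -> J
    return static_energy + dynamic_energy


def effective_energy_per_op_pj(static_power_w, runtime_s, synaptic_ops, energy_per_op_pj):
    """Energy per synaptic operation once static power is charged to the workload."""
    total = workload_energy_joules(static_power_w, runtime_s, synaptic_ops, energy_per_op_pj)
    return total / synaptic_ops * 1e12  # J -> pJ


# Hypothetical workload: 10 mW static power, 1 s runtime, 1e9 operations at 20 pJ each.
total_j = workload_energy_joules(0.010, 1.0, 1e9, 20.0)
effective_pj = effective_energy_per_op_pj(0.010, 1.0, 1e9, 20.0)
print(f"total energy: {total_j:.3f} J, effective cost: {effective_pj:.1f} pJ/op")
```

The point of the second function is that a low nominal energy per operation can be misleading when the system is lightly loaded, because static power is then spread over fewer operations.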

Looking forward, the field of neuromorphic computing is expected to continue its rapid development, with increasing focus on practical applications beyond research prototypes. The convergence of advances in materials science, device physics, and computational neuroscience is likely to accelerate innovation in this domain, potentially leading to breakthroughs in energy-efficient computing for edge devices, autonomous systems, and large-scale AI applications.

Market Demand Analysis for Energy-Efficient Computing

The global market for energy-efficient computing solutions is experiencing unprecedented growth, driven by the exponential increase in data processing demands and the corresponding surge in energy consumption. Current projections indicate the energy-efficient computing market will reach approximately $25 billion by 2025, with a compound annual growth rate of 11.2% between 2020 and 2025. This growth is particularly evident in data centers, which currently consume about 1% of global electricity and are projected to reach 3-5% by 2030 without significant efficiency improvements.

Neuromorphic computing systems are emerging as a compelling alternative to conventional computing architectures, especially in applications requiring real-time processing of unstructured data. Market research indicates that industries including autonomous vehicles, industrial automation, healthcare monitoring, and edge computing devices are actively seeking solutions that can deliver high computational performance while maintaining minimal energy footprints.

The demand for neuromorphic solutions is particularly strong in the mobile and IoT sectors, where battery life and thermal management present significant constraints. Industry surveys reveal that 78% of IoT device manufacturers consider energy efficiency as a critical factor in their component selection process, with 64% expressing interest in neuromorphic solutions if they can deliver at least a 10x improvement in energy efficiency metrics.

Financial services and telecommunications sectors are also showing increased interest in neuromorphic systems, primarily driven by the need to process massive datasets for fraud detection, network optimization, and real-time analytics while controlling operational costs. These industries report that energy costs represent 15-25% of their total computing infrastructure expenses.

The defense and aerospace sectors represent another significant market segment, with requirements for autonomous systems that can operate for extended periods with limited power resources. Military applications specifically seek computing solutions that can deliver advanced AI capabilities with minimal energy consumption and heat generation.

Cloud service providers are increasingly exploring neuromorphic computing as a potential solution to their escalating energy challenges. With data centers approaching physical limits in power delivery and cooling capacity in many urban locations, the ability to deliver more computational power per watt has become a strategic priority. Several major cloud providers have launched research initiatives focused on neuromorphic computing, with projected implementation timelines of 3-5 years.

Consumer electronics manufacturers are also expressing interest in neuromorphic solutions for next-generation devices, particularly for on-device AI processing that preserves battery life while enhancing functionality. Market surveys indicate consumers rank battery life as the second most important feature in mobile devices, creating strong pull for more energy-efficient computing architectures.

Current State and Challenges in Neuromorphic Systems

Neuromorphic computing systems have made significant strides in recent years, yet they continue to face substantial challenges when compared to conventional computing architectures. Current neuromorphic implementations vary widely in their approach, from analog and mixed-signal designs to fully digital systems, each with a distinct energy efficiency profile. Leading designs, including IBM's TrueNorth, Intel's Loihi, and BrainChip's Akida, have demonstrated promising results, achieving energy efficiencies orders of magnitude better than traditional von Neumann architectures for specific workloads.

Despite these advancements, neuromorphic systems face several critical challenges. The fabrication of analog components suffers from variability issues that impact reliability and reproducibility. This variability necessitates additional calibration circuitry and error correction mechanisms, which can offset some of the energy efficiency gains. Furthermore, the lack of standardized benchmarking methodologies makes it difficult to accurately compare energy metrics across different neuromorphic implementations and against conventional systems.

Current neuromorphic systems excel at pattern recognition and sparse, event-driven processing but struggle with precise numerical computations that conventional architectures handle efficiently. This creates a fundamental trade-off in application scope versus energy efficiency. Additionally, the programming paradigms for neuromorphic systems remain immature compared to the robust software ecosystems supporting conventional computing, creating barriers to widespread adoption and optimization.

The scaling of neuromorphic technologies presents another significant challenge. While theoretical models suggest exceptional energy efficiency at scale, practical implementations face issues with interconnect density, cross-talk, and thermal management that can degrade performance as system size increases. These scaling limitations currently restrict the complexity of problems that can be efficiently addressed by neuromorphic hardware.

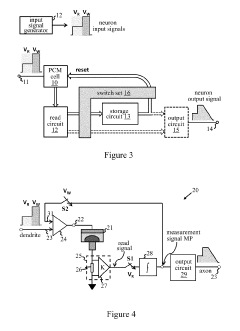

Memory-compute integration, a fundamental aspect of neuromorphic design, faces challenges in materials science and circuit design. Current non-volatile memory technologies used for synaptic weight storage, such as RRAM and PCM, still exhibit higher energy consumption than theoretical biological equivalents and suffer from endurance limitations that affect long-term system reliability.

The energy metrics themselves present a challenge, as conventional metrics like FLOPS/watt are not directly applicable to neuromorphic systems. Alternative metrics such as synaptic operations per second per watt (SOPS/W) or energy per spike have emerged, but lack universal acceptance. This measurement inconsistency complicates fair comparisons and technology assessment, hindering investment decisions and development prioritization in the field.
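To make the relationship between these metrics concrete, the following sketch derives SOPS/W and energy per spike from the same three measurements: operation or spike count, runtime, and average power. It also shows that the reciprocal of SOPS/W is simply joules per synaptic operation. The helper names and the sample numbers are hypothetical.

```python
def sops_per_watt(synaptic_ops, runtime_s, avg_power_w):
    """Synaptic operations per second, divided by average power in watts."""
    return (synaptic_ops / runtime_s) / avg_power_w


def joules_per_synaptic_op(sops_w):
    """SOPS/W is operations per joule, so its reciprocal is joules per operation."""
    return 1.0 / sops_w


def energy_per_spike_joules(avg_power_w, runtime_s, spike_count):
    """Total energy consumed over the run, divided by the number of spikes emitted."""
    return avg_power_w * runtime_s / spike_count


# Hypothetical measurement: 2e9 synaptic operations in 2 s at 50 mW average power.
efficiency = sops_per_watt(2e9, 2.0, 0.050)
print(f"{efficiency:.2e} SOPS/W")
print(f"{joules_per_synaptic_op(efficiency) * 1e12:.1f} pJ per synaptic operation")
```

Because one spike usually triggers many synaptic operations, energy per spike and energy per synaptic operation differ by roughly the average fan-out, which is one reason figures quoted in different metrics are hard to compare directly.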

Current Energy Efficiency Measurement Methodologies

01 Energy efficiency metrics for neuromorphic hardware

Various metrics are used to evaluate the energy efficiency of neuromorphic computing systems, including energy per synaptic operation (EPSO), energy per spike, and power consumption per computational unit. These metrics help in comparing different neuromorphic architectures and determining their suitability for energy-constrained applications. The measurements typically account for both static and dynamic power consumption during neural network operations.

- Energy efficiency metrics for neuromorphic systems: Various metrics are used to evaluate the energy efficiency of neuromorphic computing systems. These metrics help quantify power consumption relative to computational performance, including operations per watt, energy per spike, and overall system efficiency. Standardized measurements allow for comparison between different neuromorphic architectures and against traditional computing systems, enabling developers to optimize designs for specific energy constraints.

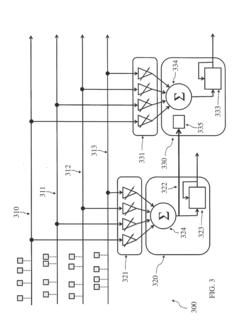

- Low-power neuromorphic hardware implementations: Specialized hardware designs for neuromorphic computing focus on minimizing energy consumption. These implementations include custom analog circuits, memristive devices, and specialized digital architectures that mimic biological neural networks while maintaining low power profiles. By leveraging event-driven processing and local memory structures, these systems can achieve significant energy savings compared to conventional computing architectures.

- Dynamic power management techniques: Neuromorphic systems employ various dynamic power management techniques to optimize energy usage during operation. These include adaptive clock gating, power gating for inactive components, voltage scaling based on computational load, and spike-based activation that only consumes energy when neurons fire. Such techniques allow systems to adjust their energy consumption based on workload demands and processing requirements.

- Benchmarking frameworks for energy evaluation: Standardized benchmarking frameworks have been developed to evaluate the energy efficiency of neuromorphic computing systems. These frameworks define specific workloads, measurement methodologies, and reporting formats to enable fair comparisons across different architectures. They typically measure energy consumption during various neural network operations, learning tasks, and inference processes, providing insights into system-level efficiency.

- Energy-aware neuromorphic algorithms: Energy-aware algorithms designed specifically for neuromorphic hardware optimize computational processes to minimize power consumption. These algorithms include sparse coding techniques, approximate computing methods, and event-driven processing that reduce unnecessary computations. By adapting neural network architectures and learning rules to consider energy constraints, these approaches achieve better energy efficiency while maintaining computational performance.
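The event-driven idea that runs through the points above can be sketched with a simple operation count. The model below compares a clock-driven system, which updates every synapse at every timestep, with an event-driven one, which only does work when a neuron spikes. It assumes, purely for illustration, that both systems spend the same energy per synaptic update; real hardware will not match this exactly, and all names and numbers are hypothetical.

```python
def clocked_updates(num_neurons, fan_out, timesteps):
    """Clock-driven: every neuron's outgoing synapses are evaluated each timestep."""
    return num_neurons * fan_out * timesteps


def event_driven_updates(spikes_per_step, fan_out):
    """Event-driven: only the outgoing synapses of neurons that spiked are evaluated."""
    return sum(spikes_per_step) * fan_out


# Hypothetical network: 1000 neurons, 100 outgoing synapses each, 10 timesteps,
# with 10 neurons (1% of the population) spiking in each timestep.
dense = clocked_updates(1000, 100, 10)
sparse = event_driven_updates([10] * 10, 100)
print(f"clocked: {dense} updates, event-driven: {sparse} updates, ratio: {dense / sparse:.0f}x")
```

Under this simplified model the saving equals the inverse of the activity level, so the benefit shrinks as spiking becomes denser.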

02 Power optimization techniques in neuromorphic systems

Neuromorphic computing systems employ various power optimization techniques to reduce energy consumption. These include spike-based processing, event-driven computation, and low-power circuit designs. By activating components only when necessary and implementing efficient data movement strategies, these systems can achieve significant energy savings compared to traditional computing architectures while maintaining computational capabilities.

03 Benchmarking frameworks for neuromorphic energy efficiency

Standardized benchmarking frameworks have been developed to evaluate and compare the energy efficiency of different neuromorphic computing systems. These frameworks define specific workloads, measurement methodologies, and reporting formats to ensure fair comparisons. They typically include a variety of neural network tasks and measure metrics such as energy consumption, throughput, and latency under different operational conditions.

04 Memory-compute integration for energy reduction

Neuromorphic systems often integrate memory and computing elements to reduce energy consumption associated with data movement. By placing computational units close to memory or implementing in-memory computing, these architectures minimize the energy-intensive data transfers between separate memory and processing units. This approach significantly reduces the overall energy footprint while enabling parallel processing of neural network operations.

05 Adaptive power management in neuromorphic systems

Advanced neuromorphic computing systems implement adaptive power management techniques that dynamically adjust energy consumption based on computational demands. These systems can scale their power usage by modifying clock frequencies, activating or deactivating neural cores, or changing precision levels. Such adaptability allows neuromorphic systems to optimize energy efficiency across varying workloads and operational requirements.

Key Industry Players in Neuromorphic Computing

Neuromorphic computing is emerging as a disruptive technology in the energy efficiency landscape, currently positioned in the early growth phase of industry development. The market, valued at approximately $69 million in 2023, is projected to reach $1.78 billion by 2033, representing significant growth potential. While conventional computing systems dominate, neuromorphic architectures demonstrate superior energy efficiency metrics, consuming 100-1000x less power for pattern recognition and AI tasks. IBM leads technological development with its TrueNorth and subsequent neuromorphic chips, while Samsung, Hitachi, and Syntiant are advancing commercial applications. Research institutions like CEA and KIST collaborate with industry players to address scaling challenges. The technology is approaching commercial viability for edge computing applications, though widespread adoption requires further maturation of manufacturing processes and software ecosystems.
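For readers who want to translate the market figures above into an annual growth rate, the short sketch below applies the standard compound annual growth rate formula to the two quoted endpoints. The function name is an assumption, and the result depends entirely on the quoted 2023 and 2033 values.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by a start value, end value, and span in years."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Endpoints quoted in the text: about $69 million in 2023 and $1.78 billion in 2033.
implied_rate = cagr(69e6, 1.78e9, 2033 - 2023)
print(f"implied CAGR: {implied_rate:.1%}")  # roughly 38% per year
```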

International Business Machines Corp.

Technical Solution: IBM's neuromorphic computing approach centers on their TrueNorth and subsequent neuromorphic chips, which fundamentally reimagine computing architecture to mimic brain function. Their systems utilize a non-von Neumann architecture with distributed memory and processing, employing spiking neural networks (SNNs) that process information through discrete events rather than continuous signals. IBM's TrueNorth chip contains 1 million digital neurons and 256 million synapses while consuming only 70mW of power[1]. This represents approximately 1/10,000th the energy per synaptic event compared to conventional systems. IBM has demonstrated that their neuromorphic systems can achieve 100x to 1000x improvement in terms of synaptic operations per watt for certain AI workloads compared to conventional GPU implementations[3]. Their architecture enables asynchronous, event-driven computation that eliminates the energy waste associated with clock-driven processing in conventional systems.

Strengths: Exceptional energy efficiency with orders of magnitude improvement for specific workloads; inherent parallelism enabling real-time processing of sensory data; scalable architecture allowing for system expansion. Weaknesses: Limited software ecosystem compared to conventional computing; challenges in programming paradigm adoption; performance limitations for certain types of precise numerical calculations that conventional systems excel at.
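The TrueNorth figures quoted above (1 million neurons, 256 million synapses, 70 mW) can be turned into per-unit numbers with simple arithmetic, as in the sketch below. The power-per-neuron and power-per-synapse values follow directly from the quoted figures. The energy-per-event estimate additionally needs a mean firing rate, which the text does not give; the 20 Hz value is a placeholder assumption, so that result should not be read as a TrueNorth specification.

```python
CHIP_POWER_W = 0.070        # 70 mW, as quoted in the text
NEURONS = 1_000_000         # as quoted in the text
SYNAPSES = 256_000_000      # as quoted in the text
ASSUMED_MEAN_RATE_HZ = 20.0 # assumption for illustration only, not from the source


def power_per_unit_w(total_power_w, unit_count):
    """Average power budget per neuron or per synapse."""
    return total_power_w / unit_count


def energy_per_synaptic_event_j(total_power_w, synapses, mean_rate_hz):
    """Chip power divided by synaptic events per second, if every synapse fires at mean_rate_hz."""
    events_per_second = synapses * mean_rate_hz
    return total_power_w / events_per_second


print(f"per neuron:  {power_per_unit_w(CHIP_POWER_W, NEURONS) * 1e9:.0f} nW")
print(f"per synapse: {power_per_unit_w(CHIP_POWER_W, SYNAPSES) * 1e9:.3f} nW")
estimate = energy_per_synaptic_event_j(CHIP_POWER_W, SYNAPSES, ASSUMED_MEAN_RATE_HZ)
print(f"per synaptic event at the assumed rate: {estimate * 1e12:.1f} pJ")
```

The estimate scales inversely with the assumed firing rate, which illustrates why energy-per-event figures are only meaningful when the workload and activity level are reported alongside them.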

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed neuromorphic computing solutions focused on memory-centric architectures that address the energy bottleneck in conventional von Neumann systems. Their approach integrates processing capabilities directly into memory using resistive RAM (RRAM) and magnetoresistive RAM (MRAM) technologies to create compute-in-memory architectures. Samsung's neuromorphic chips demonstrate energy efficiency improvements of up to 100x compared to conventional CMOS-based neural network accelerators[2]. Their systems implement analog computing principles for matrix operations, significantly reducing the energy consumed during AI inference tasks. Samsung has reported neuromorphic implementations that achieve less than 10 picojoules per synaptic operation[4], representing a substantial improvement over digital implementations. Their neuromorphic vision sensors can process visual information with less than 1% of the energy required by conventional image sensors and processors by only transmitting changes in pixel values rather than entire frames[5].

Strengths: Highly efficient compute-in-memory architecture eliminating data movement energy costs; specialized hardware for edge AI applications with ultra-low power consumption; leverages Samsung's manufacturing expertise in memory technologies. Weaknesses: Analog computing components face precision and reliability challenges; technology still in early commercialization stages; requires significant software adaptation for existing applications.
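The change-based transmission used by neuromorphic vision sensors, described above, can be approximated in software by comparing two frames and emitting an event only where a pixel changed by more than a threshold. The sketch below is a frame-based simplification for illustration; it is not Samsung's design, and real event sensors detect changes asynchronously in each pixel. The function name, event format, and threshold are assumptions.

```python
def frame_to_events(prev_frame, curr_frame, threshold):
    """Return (x, y, polarity) events for pixels whose value changed by at least threshold."""
    events = []
    for y, (prev_row, curr_row) in enumerate(zip(prev_frame, curr_frame)):
        for x, (prev_px, curr_px) in enumerate(zip(prev_row, curr_row)):
            delta = curr_px - prev_px
            if abs(delta) >= threshold:
                polarity = 1 if delta > 0 else -1  # brighter or darker
                events.append((x, y, polarity))
    return events


# Two hypothetical 2x3 grayscale frames in which only two pixels change.
previous = [[10, 10, 10],
            [10, 10, 10]]
current = [[10, 30, 10],
           [10, 10, 0]]
events = frame_to_events(previous, current, threshold=5)
print(events)  # [(1, 0, 1), (2, 1, -1)]
print(f"{len(events)} events transmitted instead of 6 pixel values")
```

In a mostly static scene the event list stays short, which is the source of the data and energy reduction claimed for this class of sensor.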

Core Technologies in Neuromorphic Energy Optimization

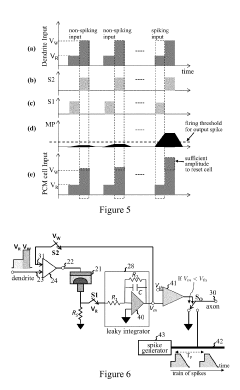

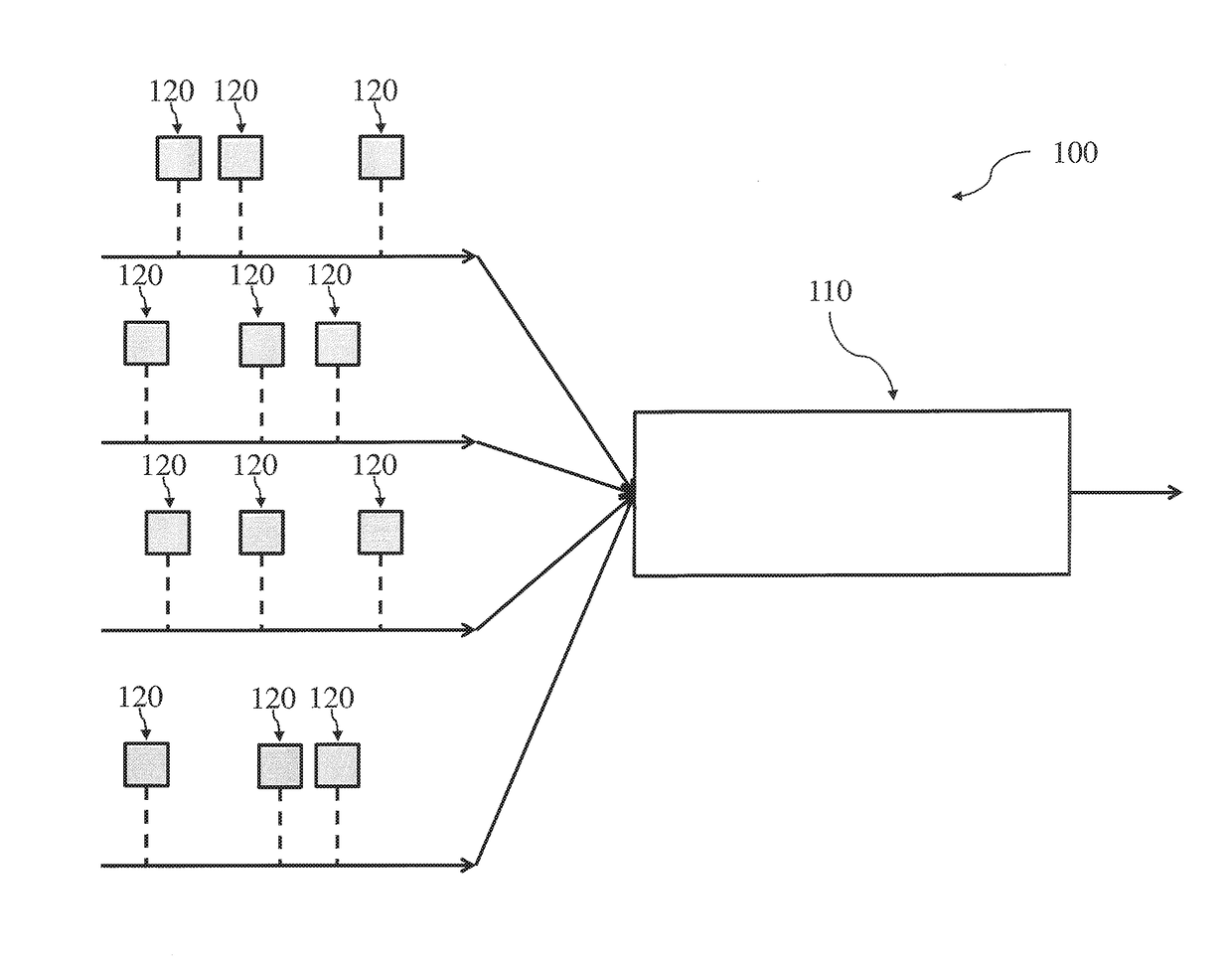

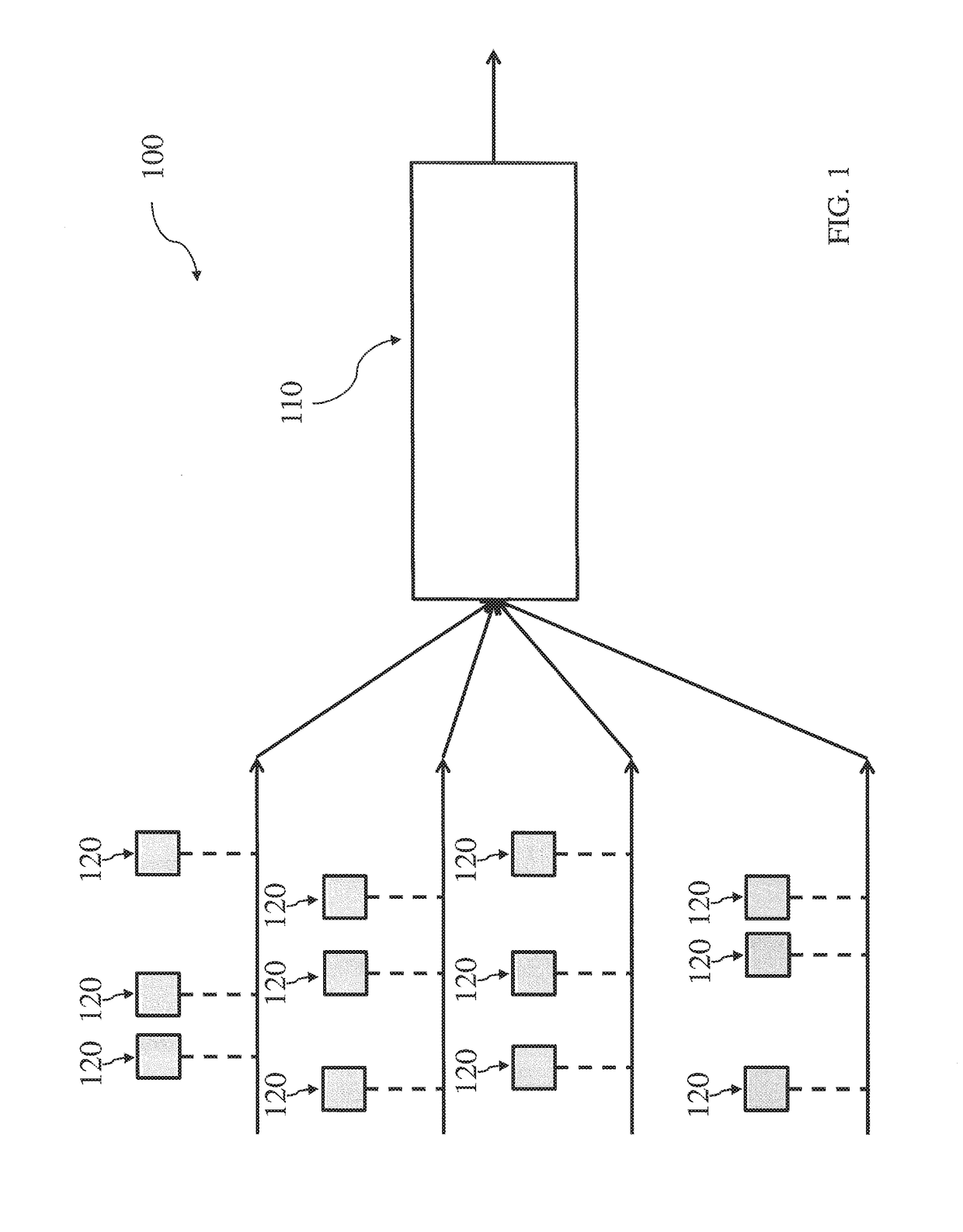

Artificial neuron apparatus

Patent (Active): US20190294952A1

Innovation

- An artificial neuron apparatus comprising a resistive memory cell connected in circuitry with input terminals for applying neuron input signals, a read circuit for producing a read signal based on cell resistance, and a switch set for resetting the memory cell, allowing it to accumulate signals and 'fire' when reaching a specific resistance state, enabling progressive resistance changes and efficient operation in neuromorphic networks.
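The accumulate, fire, and reset behaviour described above can be illustrated with a toy software model. In the sketch below, a single number stands in for the memory cell's progressively changing resistance state; each input pulse moves it by a fixed step, and crossing a threshold produces a spike followed by a reset. This is a behavioural simplification under assumed parameters, not a model of the patented circuit, and the class and attribute names are hypothetical.

```python
class ResistiveNeuron:
    """Toy accumulate-and-fire neuron whose state stands in for a cell's resistance state."""

    def __init__(self, threshold, step):
        self.threshold = threshold  # state level at which the neuron fires
        self.step = step            # state change per unit of input amplitude
        self.state = 0.0            # accumulated state since the last reset

    def apply_input(self, amplitude):
        """Accumulate one input pulse; return True if the neuron fires."""
        self.state += self.step * amplitude
        if self.state >= self.threshold:
            self.state = 0.0  # reset the cell after firing
            return True
        return False


neuron = ResistiveNeuron(threshold=1.0, step=0.25)
fired = [neuron.apply_input(1.0) for _ in range(6)]
print(fired)  # [False, False, False, True, False, False]
```

Because the state persists between pulses, the cell itself acts as the neuron's memory, which is what removes the need to fetch and store a membrane value elsewhere.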

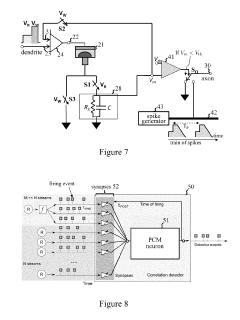

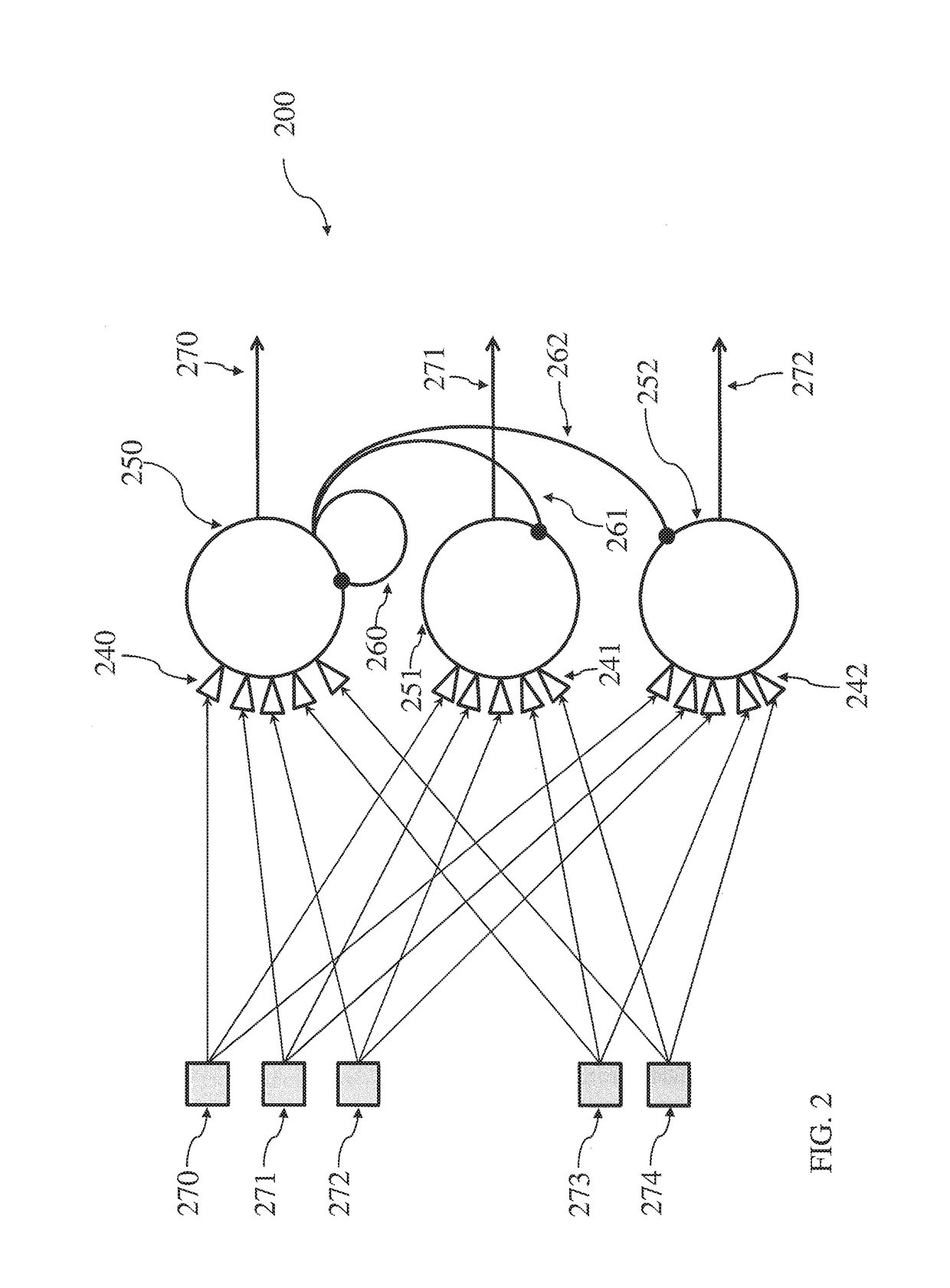

Neuromorphic architecture with multiple coupled neurons using internal state neuron information

Patent (Active): US20170372194A1

Innovation

- A neuromorphic architecture featuring interconnected neurons with internal state information links, allowing for the transmission of internal state information across layers to modify the operation of other neurons, enhancing the system's performance and capability in data processing, pattern recognition, and correlation detection.

Standardization Efforts for Energy Use Metrics

The standardization of energy use metrics for comparing neuromorphic and conventional computing systems represents a critical frontier in computational efficiency evaluation. Several international bodies have initiated frameworks to establish consistent measurement methodologies. The IEEE Standards Association has formed the P2976 working group specifically focused on developing standardized energy efficiency benchmarks for neuromorphic computing systems, addressing the unique operational characteristics that differentiate them from traditional von Neumann architectures.

The International Electrotechnical Commission (IEC) has complemented these efforts through its Technical Committee 113, which is developing standards for energy consumption measurement in emerging computing paradigms. Their work includes protocols for quantifying energy efficiency across diverse computational workloads, enabling fair comparisons between fundamentally different architectural approaches.

Industry consortiums have also emerged as key players in standardization efforts. The Neuromorphic Computing Consortium (NCC) has proposed the Synaptic Operations Per Second per Watt (SOPS/W) metric, which accounts for the spike-based processing nature of neuromorphic systems. Similarly, the Green500 list has begun incorporating specialized metrics for neuromorphic systems alongside traditional supercomputing efficiency rankings.

Academic institutions have contributed significantly through collaborative initiatives like the Neuromorphic Engineering Workshop series, which has proposed the Event Energy Index (EEI) to measure energy consumed per neural event. This metric addresses the event-driven computation model central to neuromorphic architectures, providing a more accurate representation of their energy efficiency characteristics.

The European Commission's Horizon Europe program has funded the NeuMet project specifically to develop standardized benchmarking methodologies for neuromorphic hardware, emphasizing reproducibility and cross-platform comparability. Their framework incorporates both synthetic benchmarks and real-world application scenarios to provide comprehensive efficiency evaluations.

Challenges in standardization persist due to the fundamental architectural differences between neuromorphic and conventional systems. Current efforts focus on developing metrics that can meaningfully compare systems with different computational paradigms, accounting for factors such as sparse event-driven processing, analog computing elements, and in-memory computing capabilities characteristic of neuromorphic systems. The development of application-specific benchmarks that reflect realistic deployment scenarios represents another active area of standardization work.

Environmental Impact of Neuromorphic Computing Systems

The environmental impact of neuromorphic computing systems is a critical dimension in evaluating their potential advantages over conventional computing architectures. Global data centers consume increasing amounts of electricity (currently estimated at 1-2% of worldwide electricity production), and the carbon footprint of computing infrastructure has become a significant environmental concern.

Neuromorphic systems demonstrate remarkable energy efficiency advantages, with studies indicating potential energy savings of 100-1000x compared to traditional von Neumann architectures for specific workloads, typically sparse, event-based ones. This efficiency stems from their event-driven processing paradigm, which activates computational resources only when an input event arrives, whereas clocked conventional systems process every element at every step and keep drawing power even when little useful work is being done.
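The dependence of these savings on workload sparsity can be made concrete with a simple first-order model. The sketch below assumes a dense system spends a fixed energy per synapse per timestep, while an event-driven system spends energy only on the fraction of synapses that receive a spike, plus an optional static (leakage) term. All names and per-operation energies are hypothetical placeholders chosen for illustration, not measured values for any chip.

```python
def dense_energy_j(n_synapses, timesteps, e_mac_j):
    """Energy when every synapse is evaluated at every timestep."""
    return n_synapses * timesteps * e_mac_j


def event_driven_energy_j(n_synapses, timesteps, activity, e_sop_j,
                          static_power_w=0.0, dt_s=0.0):
    """Energy when only the active fraction of synapses is evaluated.

    activity is the fraction of synapses receiving a spike per timestep (0..1).
    static_power_w * timesteps * dt_s models leakage that is paid regardless.
    """
    if not 0.0 <= activity <= 1.0:
        raise ValueError("activity must be between 0 and 1")
    dynamic = n_synapses * timesteps * activity * e_sop_j
    static = static_power_w * timesteps * dt_s
    return dynamic + static


# Hypothetical workload: 1e6 synapses, 1000 timesteps, 1% activity,
# and the same assumed 10 pJ per operation for both systems.
dense = dense_energy_j(1e6, 1000, 1e-11)
sparse_ideal = event_driven_energy_j(1e6, 1000, 0.01, 1e-11)
sparse_leaky = event_driven_energy_j(1e6, 1000, 0.01, 1e-11,
                                     static_power_w=1e-3, dt_s=1e-3)

ratio_ideal = dense / sparse_ideal   # savings from sparsity alone
ratio_leaky = dense / sparse_leaky   # savings once static power is included
print(f"ideal: {ratio_ideal:.1f}x, with static power: {ratio_leaky:.1f}x")
```

Under these assumptions the saving from sparsity alone equals the inverse of the activity level, and any static power draw reduces it, which is one reason reported gains vary so widely between workloads.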

The manufacturing processes for neuromorphic hardware currently present environmental challenges. While conventional silicon-based processors have established, optimized production chains, neuromorphic systems often require specialized materials and novel fabrication techniques that may initially have higher environmental costs. However, life-cycle assessments suggest that these upfront environmental investments could be offset by operational efficiency gains over the system's lifetime.
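The offsetting argument amounts to a break-even calculation: the extra embodied emissions from manufacturing are divided by the emissions avoided each year in operation. The sketch below shows that arithmetic with hypothetical inputs; the function name, parameters, and figures are assumptions for illustration and do not come from any specific life-cycle assessment.

```python
def breakeven_years(extra_embodied_kgco2e, annual_energy_saving_kwh,
                    grid_kgco2e_per_kwh):
    """Years of operation needed to repay extra manufacturing emissions.

    Returns infinity when there is no annual saving to repay the debt.
    """
    annual_saving_kgco2e = annual_energy_saving_kwh * grid_kgco2e_per_kwh
    if annual_saving_kgco2e <= 0:
        return float("inf")
    return extra_embodied_kgco2e / annual_saving_kgco2e


# Hypothetical device: 200 kg CO2e of additional embodied emissions,
# saving 1000 kWh per year on a grid emitting 0.4 kg CO2e per kWh.
years = breakeven_years(200.0, 1000.0, 0.4)
print(f"break-even after {years:.2f} years")
```

The result is sensitive to grid carbon intensity: on a low-carbon grid the annual saving shrinks and the break-even point moves further out.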

Water usage is another significant environmental factor, both in semiconductor manufacturing, which requires substantial water for cooling and cleaning processes, and in data center cooling. Early research indicates that neuromorphic systems, because of their lower operational power requirements, may reduce cooling needs in data centers, potentially decreasing cooling-related water consumption by 30-40% compared to conventional systems of equivalent computational capability.

Electronic waste (e-waste) considerations may also favor neuromorphic systems. Their potentially longer operational lifespans, resulting from reduced thermal stress and more graceful performance degradation, could significantly reduce the frequency of hardware replacement cycles. This advantage becomes particularly important as global e-waste volumes continue to grow at approximately 4-5% annually.

Carbon emissions associated with neuromorphic computing show promising reductions when systems are compared on the energy needed to complete the same workload. Initial deployments suggest that large-scale neuromorphic systems could reduce carbon emissions by 40-60% compared to traditional computing infrastructures handling similar AI and machine learning workloads.
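A facility-level reduction can be much smaller than a chip-level efficiency gain when only part of the workload is suited to neuromorphic hardware. The sketch below is one simplified way to relate the two, assuming both systems draw from the same grid (so the percentage reduction in emissions equals the percentage reduction in energy) and that the remaining workload stays on conventional hardware. The function and its inputs are hypothetical and are not a reconstruction of how the figures above were obtained.

```python
def emission_reduction_fraction(offloaded_share, efficiency_gain):
    """Fraction of operational emissions avoided at facility level.

    offloaded_share: share of baseline energy attributable to workloads
                     moved to neuromorphic hardware (0..1).
    efficiency_gain: factor by which those workloads use less energy (> 0).
    """
    if not 0.0 <= offloaded_share <= 1.0:
        raise ValueError("offloaded_share must be between 0 and 1")
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    # The offloaded share shrinks to share / gain; the rest is unchanged.
    return offloaded_share * (1.0 - 1.0 / efficiency_gain)


# Hypothetical case: half of the energy goes to workloads that run 100x
# more efficiently once moved to neuromorphic hardware.
reduction = emission_reduction_fraction(0.5, 100.0)
print(f"{reduction:.1%} lower operational emissions")
```

In this model the reduction can never exceed the offloaded share, however large the per-workload gain becomes.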

The scalability of these environmental benefits presents perhaps the most compelling case for neuromorphic computing. As computational demands continue to grow exponentially, particularly for AI applications, the energy efficiency advantages of neuromorphic systems could help decouple computational growth from environmental impact—a critical factor in sustainable digital infrastructure development.
