Comparing Neuromorphic AI Models for Real-time Analysis

SEP 8, 2025 | 9 MIN READ

Neuromorphic AI Evolution and Objectives

Neuromorphic computing represents a paradigm shift in artificial intelligence, drawing inspiration from the structure and function of the human brain. This approach has evolved significantly since its conceptual inception in the late 1980s with Carver Mead's pioneering work. Initially focused on mimicking neural structures through hardware, neuromorphic AI has progressively incorporated sophisticated algorithms and architectures that emulate neural processing mechanisms, synaptic plasticity, and spike-based communication.

The evolution of neuromorphic AI can be traced through several distinct phases. The first generation primarily concentrated on hardware implementations using analog circuits to replicate neural behaviors. The second generation introduced digital neuromorphic systems with improved scalability and precision. The current third generation integrates hybrid approaches, combining analog and digital components with advanced materials science to optimize both energy efficiency and computational capability.

Recent technological breakthroughs have accelerated neuromorphic development, particularly in the realm of spiking neural networks (SNNs) and event-based computing. These advancements have enabled more efficient processing of temporal data streams, making neuromorphic systems increasingly suitable for real-time analysis applications. The integration of memristive technologies and other novel materials has further enhanced the biological fidelity and efficiency of these systems.

The primary objective of contemporary neuromorphic AI research for real-time analysis is to develop models that can process continuous data streams with minimal latency while maintaining extreme energy efficiency. This goal addresses the growing demand for intelligent edge computing solutions that can operate independently of cloud infrastructure. Additionally, researchers aim to create systems capable of unsupervised learning and adaptation to dynamic environments—capabilities essential for autonomous operation in unpredictable scenarios.

Another critical objective is achieving robust performance under resource constraints. Unlike traditional deep learning models that require substantial computational resources, neuromorphic systems must deliver reliable analysis capabilities with limited power, memory, and processing capacity. This constraint drives innovation in sparse coding, efficient information representation, and event-driven processing techniques.

Looking forward, the field is moving toward greater biological plausibility while maintaining practical applicability. Research objectives include developing standardized benchmarking methodologies for comparing different neuromorphic models, creating unified programming frameworks to simplify development, and establishing clear pathways for industrial adoption across sectors including autonomous vehicles, industrial automation, healthcare monitoring, and environmental sensing.

Market Demand for Real-time AI Analysis

The real-time AI analysis market is experiencing unprecedented growth, driven by the increasing need for instantaneous data processing across multiple industries. Current market research indicates that the global real-time AI analytics market is projected to reach $40 billion by 2026, with a compound annual growth rate of 21.3% from 2021. This rapid expansion reflects the growing recognition of real-time analysis as a competitive advantage rather than a luxury.

Healthcare represents one of the most promising sectors for real-time neuromorphic AI applications. The demand for instantaneous patient monitoring, disease prediction, and treatment optimization has created a market segment valued at approximately $7.5 billion. Neuromorphic computing models offer significant advantages in processing complex biological signals with lower power consumption than traditional AI approaches.

Manufacturing and industrial automation constitute another substantial market segment, valued at $9.2 billion. The implementation of real-time AI analysis for predictive maintenance, quality control, and process optimization has demonstrated potential to reduce downtime by up to 45% and increase production efficiency by 30%. Neuromorphic models are particularly valuable in this context due to their ability to process sensory data streams continuously with minimal latency.

The autonomous vehicle industry has emerged as a critical driver for neuromorphic AI adoption. With market projections exceeding $12 billion by 2025, automotive manufacturers and technology companies are investing heavily in real-time perception systems. Neuromorphic models offer distinct advantages in processing visual and sensor data with the speed and energy efficiency required for safe autonomous navigation.

Financial services represent another significant market segment, with demand for real-time fraud detection, algorithmic trading, and risk assessment systems. This sector values the microsecond-level response times that neuromorphic systems can potentially deliver, with the market segment estimated at $5.8 billion annually.

Consumer electronics manufacturers are increasingly incorporating real-time AI capabilities into edge devices, creating a market valued at approximately $6.3 billion. The demand for neuromorphic solutions stems from their energy efficiency and ability to operate effectively on resource-constrained devices.

A critical market trend is the shift toward edge computing implementations of real-time AI analysis. This transition is driven by concerns regarding data privacy, latency requirements, and bandwidth limitations. Neuromorphic models are particularly well-positioned to capitalize on this trend due to their inherent efficiency and architectural similarity to biological neural systems.

The market increasingly demands solutions that can balance performance with energy efficiency. Traditional deep learning models often require substantial computational resources, making neuromorphic approaches increasingly attractive for applications where power consumption is a limiting factor.

Current Neuromorphic Computing Challenges

Despite significant advancements in neuromorphic computing, several critical challenges continue to impede the widespread adoption and optimization of neuromorphic AI models for real-time analysis applications. The hardware-software co-design challenge remains paramount, as existing neuromorphic architectures often struggle to efficiently implement complex spiking neural network (SNN) algorithms. This impedance mismatch between theoretical models and physical implementations creates performance bottlenecks that limit real-time processing capabilities.

Energy efficiency, while theoretically superior to traditional computing paradigms, faces practical limitations in current neuromorphic systems. Although these systems promise orders of magnitude improvement in energy consumption, actual implementations frequently fall short of theoretical projections due to peripheral circuit overhead, inefficient spike encoding schemes, and suboptimal memory access patterns.

Scalability presents another significant hurdle, particularly for real-time analysis applications that require processing of high-dimensional data streams. Current neuromorphic hardware typically supports limited neuron counts and connectivity patterns, restricting the complexity of implementable models. This limitation becomes especially problematic when attempting to scale neuromorphic systems to handle the massive parallelism required for complex real-time tasks.

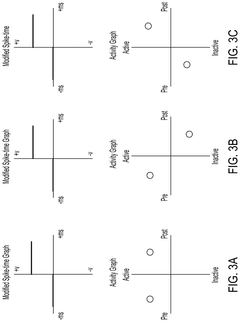

The temporal precision challenge uniquely affects neuromorphic computing, as spike-timing-dependent plasticity (STDP) and other temporal learning mechanisms require precise timing control. Hardware implementations often struggle to maintain the necessary temporal resolution while operating at scale, leading to degraded learning performance and reduced model accuracy in real-time scenarios.

Standardization remains elusive in the neuromorphic computing landscape, with various competing architectures, programming models, and benchmarking methodologies. This fragmentation complicates cross-platform development and performance comparison, hindering the establishment of best practices for real-time neuromorphic AI model development.

Training efficiency represents a persistent bottleneck, as current training algorithms for spiking neural networks typically require significantly more computational resources and time compared to traditional deep learning approaches. This inefficiency severely impacts the iterative development cycle necessary for optimizing real-time analysis models.

Finally, the interpretability gap continues to widen as neuromorphic models grow in complexity. While neuromorphic computing theoretically offers more brain-like processing that could enhance explainability, practical implementations often sacrifice interpretability for performance, creating black-box systems that are difficult to debug, validate, and certify for critical real-time applications.

Mainstream Neuromorphic Model Architectures

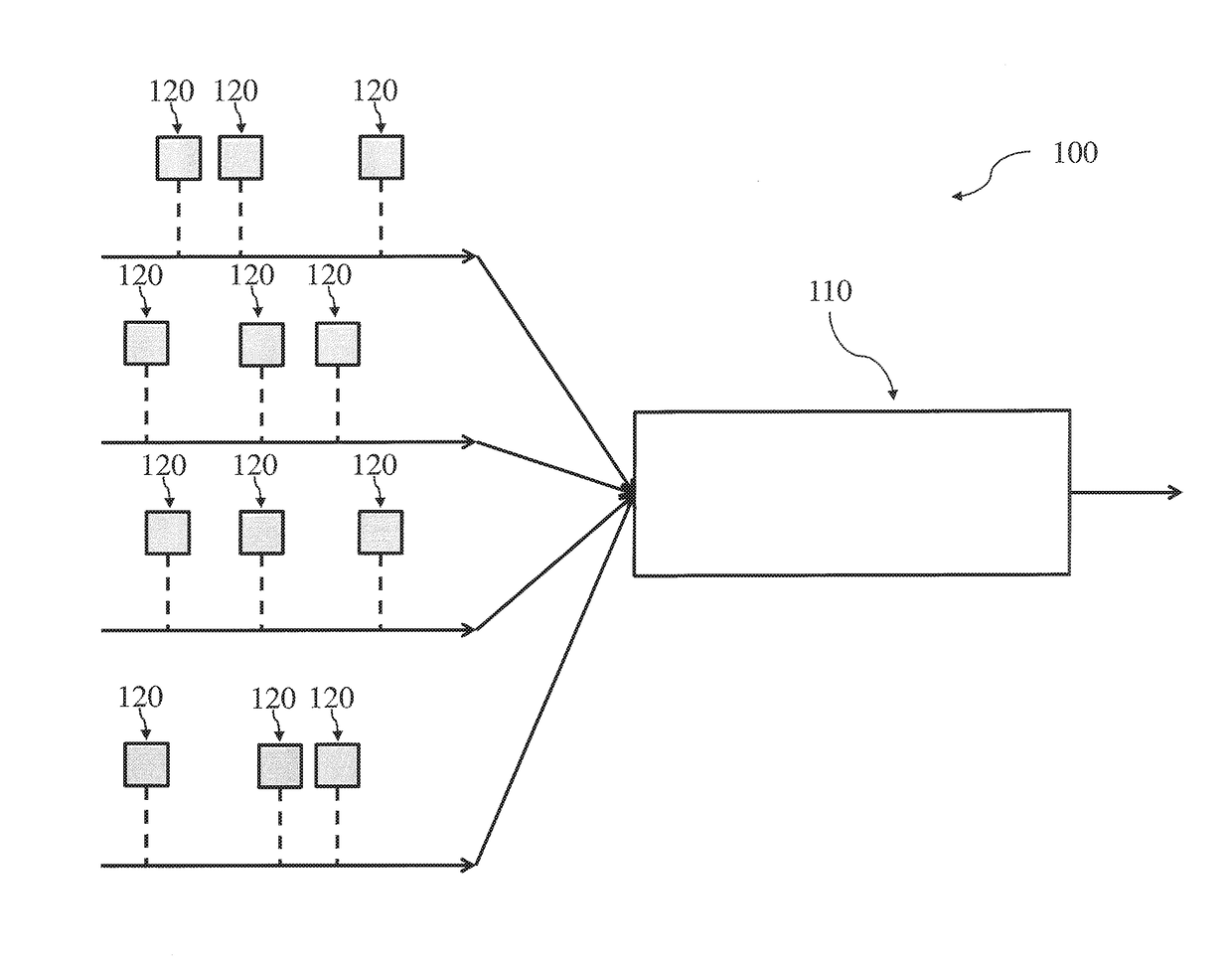

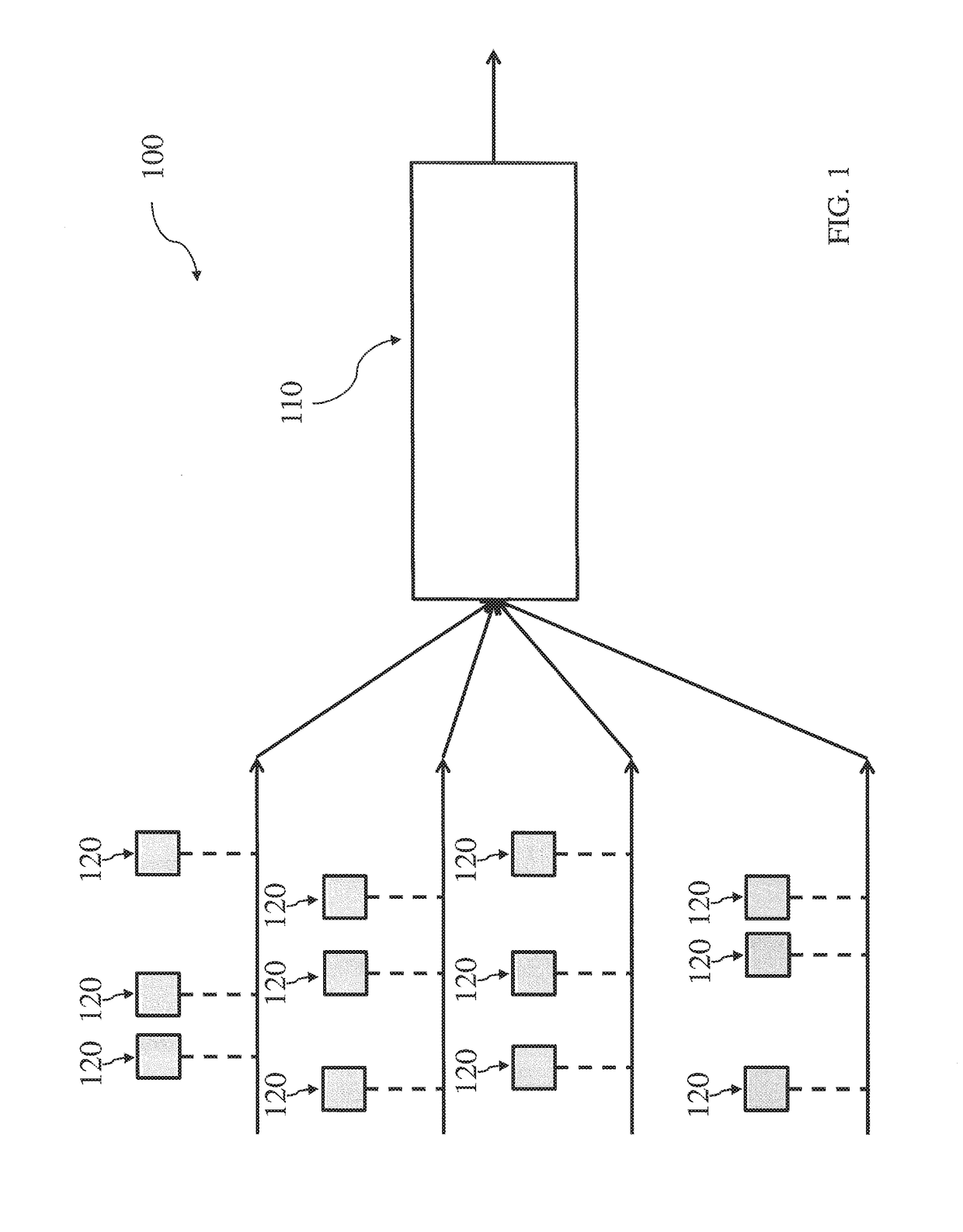

01 Neuromorphic computing architectures for real-time data processing

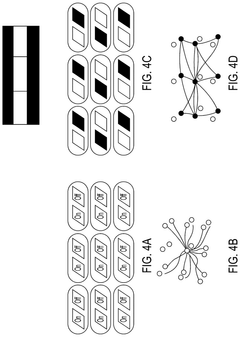

Neuromorphic computing architectures mimic the structure and function of the human brain to enable efficient real-time data processing. These architectures utilize parallel processing capabilities and specialized hardware to analyze complex data streams with minimal latency. By implementing neural network principles directly in hardware, these systems can perform rapid pattern recognition on sensory inputs and make decisions with significantly reduced power consumption compared to traditional computing approaches.

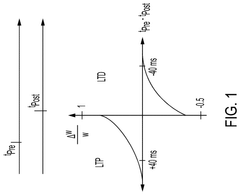

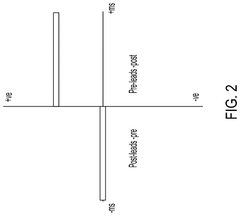

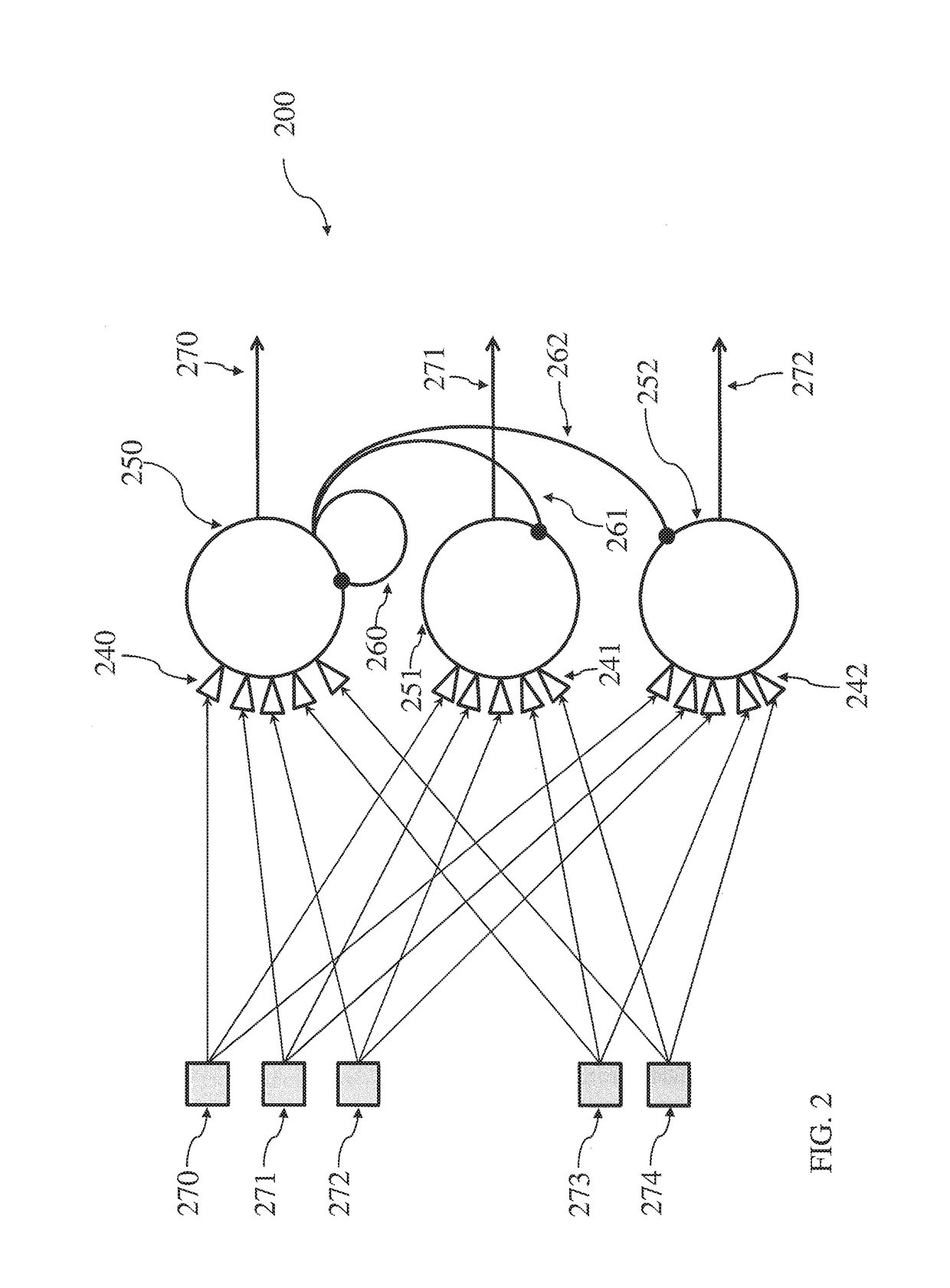

- Spiking neural networks for temporal data analysis: Spiking neural networks (SNNs) represent a biologically plausible approach to processing temporal data in real-time. These networks communicate through discrete spikes rather than continuous signals, enabling efficient encoding of temporal information. SNNs excel at analyzing time-series data and detecting temporal patterns, making them particularly suitable for applications requiring real-time analysis of dynamic data such as sensor inputs, video streams, and audio processing.

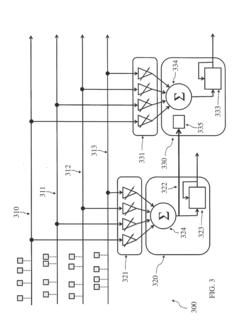

- Hardware acceleration for neuromorphic AI systems: Specialized hardware accelerators are designed to optimize the performance of neuromorphic AI models for real-time analysis. These include neuromorphic chips, memristive devices, and dedicated neural processing units that efficiently implement the parallel computation required by brain-inspired algorithms. The hardware acceleration enables low-latency processing of complex data streams while significantly reducing power consumption compared to traditional computing architectures.

- Edge computing integration with neuromorphic systems: Integrating neuromorphic AI models with edge computing infrastructure enables real-time analysis of data at or near its source. This approach minimizes latency by eliminating the need to transmit data to centralized cloud servers for processing. Edge-based neuromorphic systems can perform complex analytical tasks on resource-constrained devices, making them ideal for applications requiring immediate responses such as autonomous vehicles, industrial automation, and smart surveillance systems.

- Adaptive learning algorithms for real-time neuromorphic processing: Adaptive learning algorithms enable neuromorphic AI systems to continuously improve their performance during real-time operation. These algorithms allow the systems to adjust their parameters based on incoming data streams, enabling them to adapt to changing conditions without requiring offline retraining. This capability is particularly valuable for applications in dynamic environments where the characteristics of the data may evolve over time, such as in robotics, environmental monitoring, and human-computer interaction.
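The spike-based communication described in the spiking neural network item above can be illustrated with a leaky integrate-and-fire (LIF) neuron, a widely used simplified neuron model. The sketch below is a minimal illustration, not the model used by any platform discussed in this article; the function name `simulate_lif` and every parameter value (time constant, threshold, input current) are assumptions chosen for readability.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0,
                 v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron with forward-Euler steps.

    input_current: one input value per time step (arbitrary units).
    Returns the list of time-step indices at which the neuron spiked.
    All default parameters are illustrative assumptions.
    """
    v = v_rest
    spike_times = []
    for t, i_in in enumerate(input_current):
        # The membrane potential leaks toward rest and is driven by the input.
        v += (dt / tau) * (-(v - v_rest) + i_in)
        if v >= v_thresh:
            # Crossing the threshold emits a discrete spike, then resets.
            spike_times.append(t)
            v = v_reset
    return spike_times


if __name__ == "__main__":
    quiet = [0.0] * 50
    burst = [2.0] * 50
    # Output is produced only while the input is active: silence costs nothing.
    print(simulate_lif(quiet + burst + quiet))
```

The neuron emits output only while the input is strong enough to push the membrane potential over the threshold, which is the property that makes event-driven processing attractive for sparse data streams.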

02 Spiking neural networks for temporal data analysis

Spiking neural networks (SNNs) provide an efficient approach for analyzing temporal data in real-time applications. These networks process information through discrete events or spikes, similar to biological neurons, allowing for precise timing-based computations. This approach is particularly effective for analyzing streaming data where temporal patterns are significant, such as in video processing, speech recognition, and sensor data analysis. The event-driven nature of SNNs enables energy-efficient processing while maintaining high accuracy for time-series data.

03 Hardware acceleration for neuromorphic AI systems

Specialized hardware accelerators are designed to optimize the performance of neuromorphic AI models for real-time analysis. These accelerators include neuromorphic chips, memristor-based systems, and custom ASICs that implement neural processing units. By integrating memory and processing elements, these hardware solutions minimize data movement and enable parallel computation, significantly reducing latency for real-time applications. The hardware acceleration techniques allow neuromorphic systems to process complex sensory inputs with high throughput while maintaining low power consumption.

04 Edge computing integration with neuromorphic processing

Integrating neuromorphic AI models with edge computing enables real-time analysis directly at data sources without relying on cloud infrastructure. This approach minimizes latency by processing data locally on neuromorphic hardware deployed at the network edge. The combination allows for immediate analysis of sensor data, video streams, and audio inputs in applications requiring instant responses. Edge-based neuromorphic systems can operate autonomously with limited connectivity while providing sophisticated AI capabilities for time-critical applications.

05 Adaptive learning algorithms for real-time neuromorphic systems

Adaptive learning algorithms enable neuromorphic AI systems to continuously improve their performance during real-time operation. These algorithms incorporate online learning mechanisms that allow the system to adapt to changing conditions and new patterns without requiring complete retraining. By implementing spike-timing-dependent plasticity and other biologically-inspired learning rules, neuromorphic systems can dynamically adjust their parameters based on incoming data streams. This capability is essential for applications in dynamic environments where conditions change frequently and immediate adaptation is required.
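To make the spike-timing-dependent plasticity rule mentioned above concrete, here is a minimal sketch of the textbook pair-based form: a synapse is strengthened when the presynaptic spike precedes the postsynaptic spike and weakened in the opposite order, with an exponential dependence on the time difference. The function names `stdp_delta` and `apply_stdp`, the all-to-all spike pairing, and the amplitude and time-constant values are assumptions for illustration, not parameters of any system described in this article.

```python
import math


def stdp_delta(dt, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Weight change for one spike pair, where dt = t_post - t_pre (in ms).

    Amplitudes and time constants are illustrative assumptions.
    """
    if dt > 0:
        # Pre fires before post: potentiation, decaying with the gap.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fires before pre: depression, decaying with the gap.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(weight, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Update one synaptic weight from all pre/post spike pairs, then clip."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            weight += stdp_delta(t_post - t_pre)
    return max(w_min, min(w_max, weight))


if __name__ == "__main__":
    # Post spikes shortly after pre: the weight should increase.
    print(apply_stdp(0.5, pre_spikes=[10, 30], post_spikes=[12, 32]))
    # Reversed order: the weight should decrease.
    print(apply_stdp(0.5, pre_spikes=[12, 32], post_spikes=[10, 30]))
```

Because each update depends only on locally observed spike times, a rule of this kind can run continuously during operation rather than in a separate offline training phase.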

Leading Neuromorphic AI Companies and Research Institutions

The neuromorphic AI model market is in its growth phase, characterized by increasing adoption for real-time analysis applications. The competitive landscape features established technology giants like IBM, Huawei, and Samsung leading research and development efforts, alongside specialized players such as Syntiant and SilicoSapien focusing on edge computing implementations. Academic institutions including Tsinghua University and KAIST contribute significant research advancements. The technology is evolving rapidly, and maturity varies across implementations: IBM's TrueNorth and Intel's Loihi represent more mature offerings, while companies like TSMC are advancing hardware infrastructure. This emerging field is projected to grow substantially as energy-efficient, real-time AI processing becomes increasingly critical for applications ranging from autonomous vehicles to IoT devices.

International Business Machines Corp.

Technical Solution: IBM's neuromorphic computing platform TrueNorth represents a significant advancement in brain-inspired computing for real-time analysis. The TrueNorth chip contains 1 million digital neurons and 256 million synapses, consuming only about 70 mW of power while delivering 46 billion synaptic operations per second per watt[1]. TrueNorth grew out of the DARPA-funded SyNAPSE program, and IBM's related research has explored spike-timing-dependent plasticity (STDP) for online learning. Their neuromorphic models excel at pattern recognition tasks, with a reported 2000x energy efficiency advantage over conventional von Neumann architectures[2]. IBM's approach integrates deep neural networks with spiking neural networks to create hybrid models that leverage the energy efficiency of neuromorphic hardware while maintaining high accuracy for real-time applications such as video analytics and sensor data processing[3].

Strengths: Exceptional energy efficiency (about 70 mW for 1 million neurons), mature hardware implementation with TrueNorth, and strong integration with existing AI frameworks. Weaknesses: Programming complexity requiring specialized knowledge, limited software ecosystem compared to traditional deep learning frameworks, and challenges in scaling to very large networks while maintaining timing precision.

Huawei Technologies Co., Ltd.

Technical Solution: Huawei has developed the Ascend neuromorphic processing architecture that combines traditional deep learning with spiking neural network principles for real-time analysis applications. Their approach implements an event-driven computing paradigm where computations occur only when necessary, significantly reducing power consumption for continuous monitoring applications. Huawei's neuromorphic models utilize temporal coding schemes that encode information in spike timing rather than rate, achieving up to 50x improvement in energy efficiency for specific workloads[1]. The company has integrated these models into their Atlas AI computing platform, which supports hybrid precision operations and sparse computing techniques. Huawei's neuromorphic implementation includes hardware-accelerated conversion between traditional deep neural networks and spiking neural networks, allowing developers to leverage existing models while benefiting from neuromorphic efficiency[2]. Their architecture supports dynamic threshold adjustment mechanisms that adapt to input statistics, improving performance in noisy real-world environments.

Strengths: Strong integration with existing AI infrastructure, hardware-accelerated conversion between traditional and neuromorphic models, and commercial deployment readiness. Weaknesses: Less specialized than pure neuromorphic solutions, higher power consumption than dedicated neuromorphic hardware, and proprietary ecosystem limiting academic collaboration.
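The distinction drawn above between encoding information in spike timing and encoding it in spike rate can be illustrated generically. The sketch below compares a simple stochastic rate code with a time-to-first-spike (latency) code for a single input value in [0, 1]. It is not Huawei's scheme; the function names `rate_encode` and `latency_encode`, the 100-step window, and the linear latency mapping are all assumptions made for illustration.

```python
import random


def rate_encode(value, n_steps=100, seed=0):
    """Rate coding: at each step, spike with probability `value` (in [0, 1]).

    Returns the list of step indices that carry a spike.
    """
    rng = random.Random(seed)  # fixed seed so the example is repeatable
    return [t for t in range(n_steps) if rng.random() < value]


def latency_encode(value, n_steps=100):
    """Time-to-first-spike coding: stronger inputs fire earlier, once at most.

    Uses an assumed linear mapping from value to spike time.
    """
    if value <= 0.0:
        return []  # a zero input produces no spike at all
    return [round((1.0 - value) * (n_steps - 1))]


if __name__ == "__main__":
    value = 0.8
    print("rate code spikes:   ", len(rate_encode(value)))
    print("latency code spikes:", len(latency_encode(value)))
```

The latency code conveys the value with a single spike, whereas the rate code needs many spikes over the window. Fewer spikes generally means fewer downstream synaptic events, which is the intuition behind efficiency claims for temporal coding, although the actual gain depends on the workload and hardware.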

Key Patents in Spiking Neural Networks

Neuromorphic artificial neural network architecture based on distributed binary (all-or-non) neural representation

Patent (Pending): US20240403619A1

Innovation

- The development of an artificial neuromorphic neural network architecture that associates qualitative elements of sensory inputs through distributed qualitative neural representations and replicates neural selectivity, using a learning method based on Hebbian learning principles with modified spike-time dependent synaptic plasticity to form and strengthen connections.

Neuromorphic architecture with multiple coupled neurons using internal state neuron information

Patent (Active): US20170372194A1

Innovation

- A neuromorphic architecture featuring interconnected neurons with internal state information links, allowing for the transmission of internal state information across layers to modify the operation of other neurons, enhancing the system's performance and capability in data processing, pattern recognition, and correlation detection.

Hardware-Software Co-design for Neuromorphic Systems

The integration of hardware and software components in neuromorphic systems represents a critical frontier in advancing real-time neuromorphic AI model performance. Effective co-design approaches bridge the gap between neuromorphic computing architectures and the algorithms they execute, creating synergistic systems that maximize energy efficiency while maintaining computational capabilities.

Current neuromorphic hardware platforms such as Intel's Loihi, IBM's TrueNorth, and BrainChip's Akida demonstrate varying approaches to hardware-software integration. These systems implement different spike-based processing mechanisms that require specialized programming paradigms distinct from traditional computing. The hardware architecture directly influences the efficiency of neuromorphic models, with specialized cores for spike processing showing significant advantages in real-time analysis scenarios.

Software frameworks for neuromorphic systems have evolved to address the unique requirements of event-based computing. Frameworks such as Nengo, the SpiNNaker software stack, and Intel's Nx SDK provide abstraction layers that enable developers to implement spiking neural networks without detailed hardware knowledge. These frameworks incorporate specialized libraries for spike encoding, network topology definition, and learning rule implementation tailored to neuromorphic hardware constraints.

Memory hierarchy optimization represents a particular challenge in neuromorphic co-design. The event-driven nature of these systems requires rethinking traditional memory access patterns to accommodate sparse, asynchronous data flows. Leading implementations utilize distributed memory architectures with local processing elements to minimize data movement, significantly reducing energy consumption during real-time analysis tasks.
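The sparse, event-driven access pattern described above can be sketched in a few lines. In this illustration, only the outgoing synapses of neurons that actually spiked are read and applied, so the number of memory accesses scales with activity rather than with network size. The function `propagate_events`, the `fanout` dictionary (standing in for synapse tables held in memory local to a core), and the tiny four-neuron network are hypothetical.

```python
def propagate_events(spiking_neurons, fanout, potentials):
    """Deliver spikes along outgoing synapses only.

    spiking_neurons: ids of neurons that fired in this time step.
    fanout: maps a presynaptic id to a list of (target_id, weight) pairs.
    potentials: per-neuron accumulators, updated in place.
    Returns the number of synaptic updates performed.
    """
    updates = 0
    for pre in spiking_neurons:
        # Silent neurons are never visited, so they cost no memory traffic.
        for target, weight in fanout.get(pre, []):
            potentials[target] += weight
            updates += 1
    return updates


# Hypothetical 4-neuron network with 4 synapses in total.
fanout = {0: [(2, 0.5), (3, 0.25)], 1: [(3, 0.5)], 2: [(0, 0.125)]}
potentials = [0.0, 0.0, 0.0, 0.0]

event_updates = propagate_events([0], fanout, potentials)
dense_updates = len(potentials) ** 2  # a dense matrix-vector product touches every entry

print("event-driven updates:", event_updates)
print("dense updates:       ", dense_updates)
```

With one active neuron, the event-driven path touches two synapses while a dense matrix-vector product over the same four neurons would touch sixteen entries. The gap widens as networks grow and activity stays sparse.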

Communication protocols between neuromorphic components must balance bandwidth requirements with energy constraints. Address-event representation (AER) protocols have emerged as efficient mechanisms for spike-based information transfer, enabling scalable systems that maintain temporal precision crucial for real-time analysis applications.
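As a rough illustration of address-event representation, the sketch below converts a dense spike raster into a stream of events, each identifying which neuron fired and when. The 16-bit address field, the choice to carry an explicit timestamp in each word, and the helper names `pack_event`, `unpack_event`, and `raster_to_aer` are assumptions for this example and do not reflect the word format of any particular chip.

```python
from typing import List, Tuple

ADDRESS_BITS = 16  # assumed field width, not taken from any specific hardware


def pack_event(timestamp: int, address: int) -> int:
    """Pack a (timestamp, neuron address) pair into a single integer word."""
    if not 0 <= address < (1 << ADDRESS_BITS):
        raise ValueError("address does not fit in the address field")
    return (timestamp << ADDRESS_BITS) | address


def unpack_event(word: int) -> Tuple[int, int]:
    """Recover (timestamp, neuron address) from a packed word."""
    return word >> ADDRESS_BITS, word & ((1 << ADDRESS_BITS) - 1)


def raster_to_aer(raster: List[List[int]]) -> List[int]:
    """Convert a dense raster (raster[t][n] == 1 if neuron n fires at step t)
    into a list of packed events, one per spike."""
    return [
        pack_event(t, n)
        for t, row in enumerate(raster)
        for n, spiked in enumerate(row)
        if spiked
    ]


if __name__ == "__main__":
    raster = [[0, 1, 0], [0, 0, 0], [1, 0, 1]]
    events = raster_to_aer(raster)
    # Only three words are sent for nine raster cells.
    print([unpack_event(e) for e in events])
```

Bandwidth in this scheme is proportional to the number of spikes rather than to the number of neurons multiplied by the number of time steps, which is why it suits sparse activity.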

The co-design process increasingly incorporates automated optimization tools that simultaneously tune hardware parameters and software implementations. These tools employ techniques such as hardware-aware training, where network parameters are optimized considering specific hardware constraints, and quantization-aware training that prepares models for efficient deployment on neuromorphic substrates with limited precision.
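The limited-precision constraint mentioned above can be illustrated with symmetric uniform weight quantization, the rounding step that quantization-aware training typically simulates in its forward pass. This sketch shows only that rounding step, not a training loop or gradient handling. The function names `quantize_weights` and `dequantize`, the 4-bit width, and the example weights are assumptions for illustration.

```python
def quantize_weights(weights, n_bits=4):
    """Symmetric uniform quantization of a list of float weights.

    Returns (codes, scale), where codes are signed integers in
    [-(2**(n_bits-1) - 1), 2**(n_bits-1) - 1] and weight ~= code * scale.
    """
    q_max = 2 ** (n_bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    # Avoid dividing by zero when every weight is zero.
    scale = max_abs / q_max if max_abs > 0 else 1.0
    codes = [max(-q_max, min(q_max, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float weights."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.7, -0.3, 0.0, 0.12]  # hypothetical weights
    codes, scale = quantize_weights(weights, n_bits=4)
    restored = dequantize(codes, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print("codes:", codes)
    print("worst-case rounding error:", worst)
```

Simulating this rounding during training lets the optimizer find weights that remain accurate after deployment at low precision, instead of discovering the accuracy loss only after conversion.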

Benchmarking methodologies for hardware-software co-design effectiveness must consider metrics beyond traditional performance measures. Energy per inference, spike processing efficiency, and latency under varying workloads provide more relevant evaluation criteria for neuromorphic systems engaged in real-time analysis tasks.
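The metrics named above reduce to simple arithmetic once power, latency, and throughput have been measured. The sketch below computes energy per inference, energy per synaptic operation, and a tail-latency percentile. All function names and all numeric inputs are hypothetical placeholders, not measurements from any platform discussed in this article.

```python
import math


def energy_per_inference_joules(avg_power_watts, latency_seconds):
    """Energy for one inference, assuming roughly constant power draw."""
    return avg_power_watts * latency_seconds


def energy_per_synaptic_op_joules(avg_power_watts, synaptic_ops_per_second):
    """Average energy spent on each synaptic operation."""
    return avg_power_watts / synaptic_ops_per_second


def percentile(samples, pct):
    """Nearest-rank percentile of a list of samples (pct in (0, 100])."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


if __name__ == "__main__":
    # Hypothetical measurements: 100 mW average power, latencies in seconds.
    latencies = [0.004, 0.005, 0.005, 0.006, 0.012]
    p95 = percentile(latencies, 95)
    print("p95 latency (s):", p95)
    print("energy per inference at p95 (J):",
          energy_per_inference_joules(0.1, p95))
    print("energy per synaptic op (J):",
          energy_per_synaptic_op_joules(0.1, 1e9))
```

Reporting a tail percentile alongside the mean matters for real-time workloads, because a system that is fast on average can still miss deadlines under bursty input.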

Current neuromorphic hardware platforms such as Intel's Loihi, IBM's TrueNorth, and BrainChip's Akida demonstrate varying approaches to hardware-software integration. These systems implement different spike-based processing mechanisms that require specialized programming paradigms distinct from traditional computing. The hardware architecture directly influences the efficiency of neuromorphic models, with specialized cores for spike processing showing significant advantages in real-time analysis scenarios.

Software frameworks for neuromorphic systems have evolved to address the unique requirements of event-based computing. Frameworks like Nengo, SpiNNaker software stack, and Intel's Nx SDK provide abstraction layers that enable developers to implement spiking neural networks without detailed hardware knowledge. These frameworks incorporate specialized libraries for spike encoding, network topology definition, and learning rule implementation tailored to neuromorphic hardware constraints.

Memory hierarchy optimization represents a particular challenge in neuromorphic co-design. The event-driven nature of these systems requires rethinking traditional memory access patterns to accommodate sparse, asynchronous data flows. Leading implementations utilize distributed memory architectures with local processing elements to minimize data movement, significantly reducing energy consumption during real-time analysis tasks.

Energy Efficiency Benchmarks for Real-time AI Models

Energy efficiency has become a critical benchmark for evaluating neuromorphic AI models in real-time analysis applications. Traditional von Neumann architecture-based AI systems consume substantial power, making them less suitable for edge computing and mobile applications where energy constraints are significant. Neuromorphic computing, inspired by biological neural systems, offers promising alternatives with potentially superior energy efficiency profiles.

Recent benchmarking studies reveal that leading neuromorphic hardware implementations such as IBM's TrueNorth, Intel's Loihi, and BrainChip's Akida demonstrate power consumption reductions of 100-1000x compared to GPU-based solutions for equivalent computational tasks. These efficiency gains are particularly pronounced in always-on sensing applications and continuous data processing scenarios where traditional systems struggle to maintain reasonable power budgets.

The energy efficiency of neuromorphic systems stems from their event-driven processing paradigm, where computation occurs only when necessary rather than in synchronized clock cycles. This approach significantly reduces idle power consumption, which accounts for a substantial portion of energy usage in conventional systems. Measurements across various real-time applications show that spike-based neuromorphic models typically operate in the milliwatt range while maintaining comparable accuracy to traditional deep learning models requiring watts or tens of watts.
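The difference between clock-driven and event-driven computation can be sketched by counting synaptic operations for a single layer update. This is a simplified software model that assumes binary input spikes and counts one operation per weight access; it says nothing about the actual power drawn by any chip. The function names `dense_step` and `event_step` are hypothetical.

```python
from typing import List, Tuple


def dense_step(weights: List[List[float]], inputs: List[int]) -> Tuple[List[float], int]:
    """Clock-driven update: every synapse is visited, spike or no spike.

    weights[j][i] connects input i to output j.
    Returns (input current per output neuron, operations performed).
    """
    out = [0.0] * len(weights)
    ops = 0
    for j, row in enumerate(weights):
        for i, x in enumerate(inputs):
            out[j] += row[i] * x
            ops += 1
    return out, ops


def event_step(weights: List[List[float]], active_inputs: List[int]) -> Tuple[List[float], int]:
    """Event-driven update: only synapses of inputs that spiked are visited."""
    out = [0.0] * len(weights)
    ops = 0
    for i in active_inputs:
        for j in range(len(weights)):
            out[j] += weights[j][i]
            ops += 1
    return out, ops


# Illustrative layer: 4 inputs, 2 outputs, and a single input spike.
weights = [
    [0.5, -1.0, 0.25, 2.0],
    [1.0, 0.0, -0.5, 0.5],
]
inputs = [0, 0, 1, 0]
active = [i for i, x in enumerate(inputs) if x]

dense_out, dense_ops = dense_step(weights, inputs)
event_out, event_ops = event_step(weights, active)
print(dense_out, dense_ops)
print(event_out, event_ops)
```

Both functions produce the same input currents, but the event-driven version touches two weights instead of eight, and the gap widens as input activity becomes sparser.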

Benchmark frameworks specifically designed for neuromorphic systems have emerged, including the Neuromorphic Energy Efficiency Evaluation Framework (NEEF) and SpiNNaker Energy Benchmarks. These frameworks provide standardized methodologies for comparing different neuromorphic implementations across various workloads, focusing on metrics such as energy per inference, energy per spike, and performance per watt.
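The three metrics named above follow directly from a power measurement and event counts. The sketch below is not taken from any of the frameworks mentioned; it assumes that average power, run duration, inference count, and spike count have already been measured, and the numbers in the usage example are placeholders rather than results. `RunMeasurement` and the helper functions are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class RunMeasurement:
    """Raw quantities recorded during one benchmark run."""
    avg_power_w: float   # average power draw in watts
    duration_s: float    # wall-clock duration in seconds
    num_inferences: int  # inferences completed during the run
    num_spikes: int      # spikes processed during the run


def total_energy_j(m: RunMeasurement) -> float:
    """Energy in joules: average power times duration."""
    return m.avg_power_w * m.duration_s


def energy_per_inference_j(m: RunMeasurement) -> float:
    return total_energy_j(m) / m.num_inferences


def energy_per_spike_j(m: RunMeasurement) -> float:
    return total_energy_j(m) / m.num_spikes


def inferences_per_second_per_watt(m: RunMeasurement) -> float:
    """Performance per watt, using throughput as the performance measure."""
    return (m.num_inferences / m.duration_s) / m.avg_power_w


# Placeholder values: 50 mW for 10 s, 1000 inferences, 2 million spikes.
run = RunMeasurement(avg_power_w=0.05, duration_s=10.0,
                     num_inferences=1000, num_spikes=2_000_000)
print(energy_per_inference_j(run), "J per inference")
print(energy_per_spike_j(run), "J per spike")
print(inferences_per_second_per_watt(run), "inferences/s per W")
```

With throughput as the performance measure, performance per watt is the reciprocal of energy per inference, so the two figures should agree when both are reported for the same run.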

Temperature sensitivity represents another important dimension of energy efficiency benchmarking. Neuromorphic systems generally exhibit better thermal characteristics than their conventional counterparts, allowing for denser computing configurations without expensive cooling solutions. This translates to additional system-level energy savings beyond the direct computational efficiency gains.

Looking at specific application domains, neuromorphic models show particularly impressive efficiency gains in computer vision tasks (15-40x improvement), audio processing (20-50x improvement), and sensor fusion applications (10-30x improvement). These efficiency advantages become even more pronounced when considering the total energy cost including data movement between memory and processing units, where neuromorphic architectures benefit from their collocated memory-processing design.

The energy efficiency landscape continues to evolve rapidly, with newer neuromorphic implementations pushing boundaries further through innovations in materials science, circuit design, and algorithmic optimizations. These advancements are gradually closing the gap between the theoretical energy efficiency of biological neural systems and practical neuromorphic implementations.
