Optimizing Neuromorphic Systems for Machine Learning

SEP 5, 2025 · 9 MIN READ

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. Since its conceptual inception in the late 1980s by Carver Mead, this field has evolved from theoretical frameworks to practical implementations that aim to replicate the brain's efficiency in processing information. The trajectory of neuromorphic computing has been characterized by progressive advancements in hardware design, neural network modeling, and integration with conventional computing systems.

The evolution of neuromorphic systems has traversed several distinct phases. Initially, research focused on developing analog VLSI circuits that could mimic neural behavior. This was followed by the emergence of digital neuromorphic architectures that offered greater programmability while maintaining brain-inspired processing principles. Recent years have witnessed the development of hybrid systems that combine analog and digital components to optimize both energy efficiency and computational flexibility.

Current neuromorphic computing objectives center on addressing the limitations of traditional von Neumann architectures, particularly for machine learning applications. These objectives include achieving significant reductions in power consumption, enhancing parallel processing capabilities, and enabling real-time learning and adaptation. The field aims to develop systems that can process sensory data with the same efficiency and robustness as biological neural networks, while consuming orders of magnitude less energy than conventional computing platforms.

A critical objective in optimizing neuromorphic systems for machine learning is bridging the gap between neuroscience and engineering. This involves translating neurobiological principles into practical computing paradigms that can be implemented in hardware. Researchers are working to incorporate features such as spike-timing-dependent plasticity, homeostatic mechanisms, and hierarchical information processing into neuromorphic architectures to enhance their learning capabilities.
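
Spike-timing-dependent plasticity is usually modeled as a weight change that depends on the interval between a presynaptic and a postsynaptic spike. The sketch below implements the classic pair-based exponential window, assuming illustrative amplitudes and time constants (`a_plus`, `a_minus`, `tau_plus_ms`, `tau_minus_ms`). These are hypothetical defaults, not values taken from any particular chip or study.

```python
import math
from typing import List


def stdp_delta_w(dt_ms: float,
                 a_plus: float = 0.01,
                 a_minus: float = 0.012,
                 tau_plus_ms: float = 20.0,
                 tau_minus_ms: float = 20.0) -> float:
    """Pair-based STDP weight change for one pre/post spike pair.

    dt_ms = t_post - t_pre. Pre-before-post (dt > 0) potentiates and
    post-before-pre (dt < 0) depresses; both effects decay exponentially
    with |dt|. Default parameters are illustrative assumptions only.
    """
    if dt_ms > 0:
        return a_plus * math.exp(-dt_ms / tau_plus_ms)
    if dt_ms < 0:
        return -a_minus * math.exp(dt_ms / tau_minus_ms)
    return 0.0


def apply_stdp(w: float, pre_spikes: List[float], post_spikes: List[float],
               w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Sum the contributions of all pre/post pairs (all-to-all pairing) and clip."""
    dw = sum(stdp_delta_w(t_post - t_pre)
             for t_pre in pre_spikes for t_post in post_spikes)
    return min(w_max, max(w_min, w + dw))
```

For example, a presynaptic spike at 10 ms followed by a postsynaptic spike at 15 ms strengthens the synapse slightly, while the reverse order weakens it.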

The technological trajectory points toward increasingly sophisticated neuromorphic systems capable of handling complex machine learning tasks with unprecedented energy efficiency. Future developments are expected to focus on scaling these systems to accommodate larger neural networks, improving their integration with existing computing infrastructure, and expanding their application domains beyond current limitations.

The ultimate goal of neuromorphic computing research is to create systems that not only match but potentially surpass biological neural networks in specific computational tasks, while maintaining their fundamental advantages in energy efficiency, adaptability, and robustness. This represents a convergence of neuroscience, computer engineering, and artificial intelligence that promises to revolutionize how machines learn and interact with the world.

Market Analysis for Brain-Inspired Computing Solutions

The brain-inspired computing market is experiencing significant growth, driven by increasing demand for efficient machine learning solutions. One market projection puts the global neuromorphic computing market at $8.9 billion by 2025, a compound annual growth rate (CAGR) of 49.1% from 2020, although published estimates differ considerably between research firms. The growth is attributed to the limitations of traditional computing architectures in handling complex AI workloads and to the need for more energy-efficient computing.

Several key market segments are emerging within the brain-inspired computing landscape. The edge computing segment represents the fastest-growing application area, as neuromorphic systems offer substantial advantages in power efficiency and real-time processing capabilities. This is particularly valuable for IoT devices, autonomous vehicles, and mobile applications where energy constraints are significant barriers to AI deployment.

Healthcare and scientific research constitute another major market segment, with neuromorphic systems being deployed for medical imaging analysis, drug discovery, and neural signal processing. The financial sector is also adopting these technologies for fraud detection and algorithmic trading, leveraging their ability to process temporal patterns efficiently.

From a geographic perspective, North America currently leads the market with approximately 40% share, driven by substantial investments from technology giants and defense agencies. Asia-Pacific represents the fastest-growing region, with China, Japan, and South Korea making strategic investments in neuromorphic research and development to establish technological sovereignty in advanced computing.

Customer demand increasingly focuses on three value propositions: energy efficiency, with reported power reductions of 100-1000x relative to conventional architectures for certain workloads; real-time processing for time-critical applications; and the ability to learn from limited training data, as biological systems do.

Market barriers include the lack of standardized programming frameworks for neuromorphic hardware, limited software ecosystem maturity, and the high initial investment required for custom hardware development. Additionally, the interdisciplinary nature of neuromorphic computing necessitates collaboration between neuroscientists, computer architects, and machine learning specialists, creating organizational challenges for market entrants.

The competitive landscape features established technology companies like Intel, IBM, and Qualcomm alongside specialized startups such as BrainChip, SynSense, and Rain Neuromorphics. Academic research institutions also play a crucial role in technology transfer and innovation within this ecosystem, often serving as incubators for commercial ventures.

Current Neuromorphic Architectures and Limitations

Current neuromorphic computing architectures can be broadly categorized into digital, analog, and hybrid implementations, each with distinct advantages and limitations. Digital neuromorphic systems, exemplified by IBM's TrueNorth and Intel's Loihi, utilize digital circuits to emulate neural behavior. While these systems offer precision and programmability, they struggle with power efficiency when scaling to complex neural networks, typically consuming orders of magnitude more energy than their biological counterparts.
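
Digital neuromorphic chips typically emulate neurons by updating state variables at discrete time steps. A minimal sketch of that idea is a discrete-time leaky integrate-and-fire (LIF) neuron. The parameter values below are illustrative assumptions, and production chips such as Loihi use their own fixed-point neuron models that this sketch does not reproduce.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LIFNeuron:
    """Discrete-time leaky integrate-and-fire neuron (illustrative parameters)."""
    decay: float = 0.9       # fraction of membrane potential retained per step
    threshold: float = 1.0   # firing threshold
    v_reset: float = 0.0     # membrane potential after a spike
    v: float = 0.0           # current membrane potential

    def step(self, input_current: float) -> bool:
        # Leak first, then integrate this step's input.
        self.v = self.decay * self.v + input_current
        if self.v >= self.threshold:
            self.v = self.v_reset  # reset after firing
            return True
        return False


def run(neuron: LIFNeuron, inputs: List[float]) -> List[int]:
    """Drive the neuron with one input value per step; return spike time steps."""
    spikes = []
    for t, current in enumerate(inputs):
        if neuron.step(current):
            spikes.append(t)
    return spikes
```

With the defaults, a constant input of 0.3 produces a spike every fourth step (steps 3, 7, 11, ...), while an input of 0.05 never fires because the potential settles at 0.5, below threshold.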

Analog neuromorphic architectures, such as memristor-based systems developed by HP Labs and various academic institutions, more closely mimic the continuous-valued nature of biological neurons. These systems excel in power efficiency and parallel processing but face significant challenges in manufacturing consistency, device variability, and noise susceptibility, limiting their practical deployment in commercial applications.
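
The power-efficiency argument for memristor crossbars comes from computing a vector-matrix product in place. Input voltages drive the rows, each device contributes a current proportional to its conductance (Ohm's law), and the currents sum along each column (Kirchhoff's current law). The sketch below models that ideal behavior plus a simple multiplicative Gaussian model of device variability. The variability model and the `sigma` value are assumptions chosen for illustration.

```python
import random
from typing import List

Matrix = List[List[float]]


def crossbar_vmm(voltages: List[float], conductances: Matrix) -> List[float]:
    """Ideal crossbar: column current I_j = sum_i V_i * G_ij."""
    n_cols = len(conductances[0])
    return [sum(v * row[j] for v, row in zip(voltages, conductances))
            for j in range(n_cols)]


def program_with_variability(target: Matrix, sigma: float,
                             rng: random.Random) -> Matrix:
    """Model device-to-device variability as multiplicative Gaussian error.

    sigma is a hypothetical relative spread. Real devices also show drift,
    nonlinearity, and read noise, which this sketch ignores. Conductances
    are clipped at zero because a device cannot have negative conductance.
    """
    return [[max(0.0, g * (1.0 + rng.gauss(0.0, sigma))) for g in row]
            for row in target]


G = [[1e-6, 2e-6], [3e-6, 4e-6]]   # siemens
V = [0.1, 0.2]                      # volts
ideal = crossbar_vmm(V, G)          # amps, approximately [7e-7, 1e-6]
noisy = crossbar_vmm(V, program_with_variability(G, 0.05, random.Random(0)))
```

Because conductances cannot be negative, signed weights are commonly represented as the difference between two devices; this sketch omits that detail.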

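Hybrid approaches attempt to leverage the strengths of both paradigms, combining digital control logic and communication with analog computing elements. A notable example is BrainScaleS from the University of Heidelberg, which pairs analog neuron and synapse circuits with digital spike routing. SpiNNaker from the University of Manchester is often discussed alongside it, but it is a fully digital, many-core ARM-based system rather than a mixed-signal one. While promising, hybrid systems often encounter integration challenges and complex programming models.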

A fundamental limitation across all current architectures is the gap between neuromorphic hardware capabilities and the requirements of modern machine learning algorithms. Most contemporary ML frameworks are optimized for traditional von Neumann architectures, creating a significant translation barrier when implementing these algorithms on neuromorphic hardware. This mismatch necessitates either substantial algorithm adaptation or hardware compromises.
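
One common form of algorithm adaptation is rate-based ANN-to-SNN conversion: a network is trained with conventional tools, and each ReLU unit is replaced by an integrate-and-fire neuron whose firing rate approximates the original activation. A minimal sketch, assuming a non-leaky neuron with reset-by-subtraction and a threshold of 1, so that the rate tracks a ReLU clipped at one spike per step:

```python
def if_firing_rate(activation: float, steps: int = 1000,
                   threshold: float = 1.0) -> float:
    """Firing rate of a non-leaky integrate-and-fire neuron under constant input.

    Reset-by-subtraction keeps the residual charge after each spike, so the
    spike count after n steps is about floor(n * activation) for inputs in [0, 1].
    """
    v = 0.0
    spikes = 0
    for _ in range(steps):
        v += activation
        if v >= threshold:
            v -= threshold  # subtract instead of resetting to zero
            spikes += 1
    return spikes / steps


def clipped_relu(x: float) -> float:
    """The ANN activation the spiking rate approximates."""
    return min(max(x, 0.0), 1.0)
```

The error is bounded by roughly one spike over the simulation window, which is the accuracy-versus-latency trade-off of rate coding: finer resolution requires more time steps.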

Scalability presents another critical challenge. Individual neuromorphic chips typically implement thousands to about a million neurons (TrueNorth, for example, has one million), whereas the human brain contains approximately 86 billion neurons connected by trillions of synapses. The density, connectivity, and energy efficiency required to approach biological scale remain elusive in silicon implementations.

Learning mechanisms represent a further limitation. While biological systems continuously adapt through various plasticity mechanisms, implementing efficient on-chip learning in hardware remains challenging. Most current systems either require offline training or implement simplified learning rules that capture only a fraction of biological learning complexity.
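
On-chip learning rules are attractive when they need only local state. A standard way to implement pair-based STDP online is to give each side of a synapse a decaying trace: a postsynaptic spike potentiates in proportion to the presynaptic trace, and a presynaptic spike depresses in proportion to the postsynaptic trace. The sketch below assumes discrete 1 ms steps and illustrative parameters; it is not the learning engine of any specific chip.

```python
import math
from typing import List


def online_stdp(pre: List[bool], post: List[bool], w: float = 0.5,
                a_plus: float = 0.01, a_minus: float = 0.012,
                tau: float = 20.0, dt: float = 1.0,
                w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Trace-based STDP using only per-synapse state updated once per step.

    Reproduces the all-to-all pair-based exponential rule. Coincident pre and
    post spikes in the same step are treated as pre-before-post here.
    """
    decay = math.exp(-dt / tau)
    x_pre = 0.0   # presynaptic trace
    y_post = 0.0  # postsynaptic trace
    for pre_spike, post_spike in zip(pre, post):
        x_pre *= decay
        y_post *= decay
        if pre_spike:
            w -= a_minus * y_post  # pre after earlier post: depression
            x_pre += 1.0
        if post_spike:
            w += a_plus * x_pre    # post after earlier pre: potentiation
            y_post += 1.0
        w = min(w_max, max(w_min, w))
    return w
```

Because each update touches only two traces and one weight, this style of rule maps naturally to per-synapse or per-core learning circuits.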

Finally, the lack of standardized benchmarks, programming interfaces, and development tools significantly impedes broader adoption of neuromorphic computing for machine learning applications. Unlike traditional computing platforms with established ecosystems, neuromorphic systems often require specialized expertise and custom software stacks, creating substantial barriers to entry for potential users and developers.

Existing Neuromorphic Implementations for ML Workloads

01 Hardware optimization for neuromorphic systems

Optimization of hardware components in neuromorphic systems to improve performance and efficiency. This includes specialized circuit designs, memristive devices, and novel architectures that mimic neural structures. These hardware optimizations enable better power efficiency, faster processing, and more accurate modeling of biological neural networks, which are crucial for applications requiring real-time processing and low power consumption.

- Hardware optimization for neuromorphic systems: Hardware optimization techniques for neuromorphic systems focus on improving the physical architecture to enhance performance and efficiency. These techniques include specialized circuit designs, memristor-based implementations, and optimized chip layouts that mimic neural structures. By integrating these hardware optimizations, neuromorphic systems can achieve better power efficiency, faster processing speeds, and more accurate neural simulations.

- Energy efficiency in neuromorphic computing: Energy efficiency optimization in neuromorphic systems involves developing algorithms and architectures that minimize power consumption while maintaining computational performance. These approaches include low-power neural network implementations, spike-based processing techniques, and event-driven computing models. By focusing on energy efficiency, neuromorphic systems can operate with significantly reduced power requirements compared to traditional computing architectures, making them suitable for edge devices and battery-powered applications.

- Learning algorithms for neuromorphic architectures: Specialized learning algorithms designed for neuromorphic architectures enable these systems to adapt and improve over time. These include spike-timing-dependent plasticity (STDP), reinforcement learning adaptations, and unsupervised learning techniques optimized for spiking neural networks. By implementing these algorithms, neuromorphic systems can efficiently process temporal data patterns and learn from unstructured inputs with minimal supervision.

- Neuromorphic system integration with conventional computing: Integration techniques for combining neuromorphic systems with conventional computing architectures allow for hybrid approaches that leverage the strengths of both paradigms. These methods include interface protocols, data conversion mechanisms, and co-processing strategies that enable seamless communication between neuromorphic components and traditional processors. This integration facilitates the adoption of neuromorphic computing in existing systems and applications while maintaining compatibility with established software ecosystems.

- Application-specific neuromorphic optimization: Optimization techniques tailored for specific applications of neuromorphic computing focus on customizing system parameters to meet particular use case requirements. These include specialized network topologies for computer vision, optimized memory structures for natural language processing, and dedicated signal processing pathways for sensor fusion. By fine-tuning neuromorphic systems for specific applications, performance can be significantly enhanced while reducing computational overhead and improving accuracy for targeted tasks.
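
The energy-efficiency bullet above refers to event-driven computing. The difference from clock-driven evaluation can be sketched by counting synaptic operations for a single layer update. This sketch assumes that operation count is a reasonable proxy for energy, which ignores static power and memory-access costs.

```python
from typing import List, Tuple


def dense_step(weights: List[List[float]],
               inputs: List[int]) -> Tuple[List[float], int]:
    """Clock-driven update: every synapse is evaluated each step, spiking or not."""
    n_out = len(weights[0])
    out = [0.0] * n_out
    ops = 0
    for i, x in enumerate(inputs):
        for j in range(n_out):
            out[j] += weights[i][j] * x
            ops += 1
    return out, ops


def event_driven_step(weights: List[List[float]],
                      spike_indices: List[int]) -> Tuple[List[float], int]:
    """Event-driven update: only the weight rows of neurons that spiked are read."""
    n_out = len(weights[0])
    out = [0.0] * n_out
    ops = 0
    for i in spike_indices:
        for j in range(n_out):
            out[j] += weights[i][j]
            ops += 1
    return out, ops
```

Both functions produce the same outputs; the event-driven version performs work proportional to the number of spikes, so savings grow as activity becomes sparser.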

02 Neural network training algorithms for neuromorphic computing

Advanced training algorithms specifically designed for neuromorphic systems that optimize learning processes and improve model accuracy. These algorithms adapt traditional neural network training methods to the unique constraints and capabilities of neuromorphic hardware, including spike-based processing and event-driven computation. They enable more efficient training, better generalization, and improved performance in pattern recognition and classification tasks.

03 Energy efficiency optimization in neuromorphic systems

Methods and techniques to reduce power consumption in neuromorphic computing systems while maintaining computational performance. These approaches include optimized spike encoding schemes, efficient neuron models, and power-aware learning algorithms. Energy efficiency is particularly important for edge computing applications and mobile devices where battery life is a critical constraint.

04 Spike-based processing optimization

Optimization techniques specifically for spike-based neuromorphic computing, focusing on efficient encoding, transmission, and processing of neural spikes. These methods improve the temporal precision of information processing, reduce latency, and enhance the system's ability to process time-varying data. Spike-based optimization is essential for applications requiring real-time sensory processing and dynamic pattern recognition.

05 Application-specific neuromorphic system optimization

Tailoring neuromorphic systems for specific applications such as computer vision, natural language processing, and autonomous systems. This includes optimizing network architectures, learning rules, and hardware configurations to meet the unique requirements of each application domain. Application-specific optimization enables better performance, reduced resource utilization, and more effective deployment in real-world scenarios.
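
Items 03 and 04 both mention spike encoding. Two widely used schemes are rate coding, where a value sets the probability of spiking at each step, and latency (time-to-first-spike) coding, where larger values fire earlier. A minimal sketch of both, assuming inputs normalized to [0, 1]. It illustrates the general techniques, not the encoders of any specific product.

```python
import random
from typing import List, Optional


def rate_encode(value: float, steps: int, rng: random.Random) -> List[int]:
    """Rate coding: spike with probability `value` at each step.

    A discrete-time (Bernoulli) approximation of a Poisson spike train.
    """
    p = min(max(value, 0.0), 1.0)
    return [1 if rng.random() < p else 0 for _ in range(steps)]


def latency_encode(value: float, steps: int) -> Optional[int]:
    """Latency coding: one spike, earlier for larger values.

    Returns the spike's time step, or None when the value is zero or negative.
    """
    if value <= 0.0:
        return None
    v = min(value, 1.0)
    return round((1.0 - v) * (steps - 1))
```

Latency coding conveys a value with a single spike, so it typically needs far fewer spikes than rate coding, which must accumulate many steps to estimate a probability.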

Leading Organizations in Neuromorphic Computing Research

The neuromorphic computing market for machine learning is in an early growth phase, characterized by significant research activity but limited commercial deployment. Market-size projections vary by source; some reach approximately $8-10 billion by 2028, driven by demand for energy-efficient AI solutions. Technologically, the field is still developing, and maturity varies widely across players. IBM is a leader with TrueNorth and its successor architectures, while Intel's Loihi platform demonstrates promising capabilities. Specialized startups like Syntiant and Innatera are advancing application-specific neuromorphic chips. Samsung, Google, and Bosch are investing heavily in neuromorphic R&D, while academic institutions like GIST and Peking University contribute fundamental research. The competitive landscape features both established technology giants and innovative startups racing to overcome implementation challenges.

International Business Machines Corp.

Technical Solution: IBM's neuromorphic computing approach centers on TrueNorth and its successor systems, which mimic brain-like architectures. TrueNorth implements spiking neural networks (SNNs) at very low power, approximately 70 mW for a chip with 1 million neurons and 256 million synapses. IBM's neuromorphic systems use event-driven computation, in which circuits draw significant power only when neurons spike, dramatically reducing energy requirements compared to traditional architectures. The company has developed specialized programming frameworks and algorithms for this hardware, including a backpropagation-based training method adapted for SNNs that achieves accuracy comparable to conventional deep learning on several benchmark datasets while remaining energy efficient. IBM has also researched phase-change memory (PCM) extensively as a way to store synaptic weights in hardware, enabling analog in-memory computation, although PCM conductance drift and noise limit its precision. For suitable pattern-recognition workloads, these systems consume orders of magnitude less power than GPU-based solutions.

Strengths: Extremely low power consumption (milliwatts vs. watts for conventional systems); highly scalable architecture; mature development ecosystem with programming tools. Weaknesses: Limited application scope compared to general-purpose ML systems; requires specialized programming approaches; challenges in training complex models directly on neuromorphic hardware.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed neuromorphic processing units (NPUs) that integrate directly with its memory technologies. Its approach uses resistive RAM (RRAM) and magnetoresistive RAM (MRAM) to implement synaptic functions in memory, significantly reducing the energy cost of moving data between processing and memory units. Samsung's systems employ a hierarchical architecture that combines conventional CMOS logic with emerging non-volatile memory to enable in-memory computing. The NPUs include specialized circuits for spiking neural networks with configurable neuron models and learning rules. Samsung reports 20x energy-efficiency improvements over conventional deep learning accelerators for tasks such as image recognition and natural language processing. The company has also developed software frameworks that let developers deploy conventional deep learning models onto this hardware with minimal modification, bridging traditional machine learning and neuromorphic computing.

Strengths: Integration with memory technologies reduces data movement bottlenecks; compatibility with existing ML frameworks; strong manufacturing capabilities for scaled production. Weaknesses: Still emerging technology with limited deployment in commercial products; requires specialized hardware-software co-design; performance on complex ML tasks still lags behind conventional approaches.

Key Innovations in Spiking Neural Networks

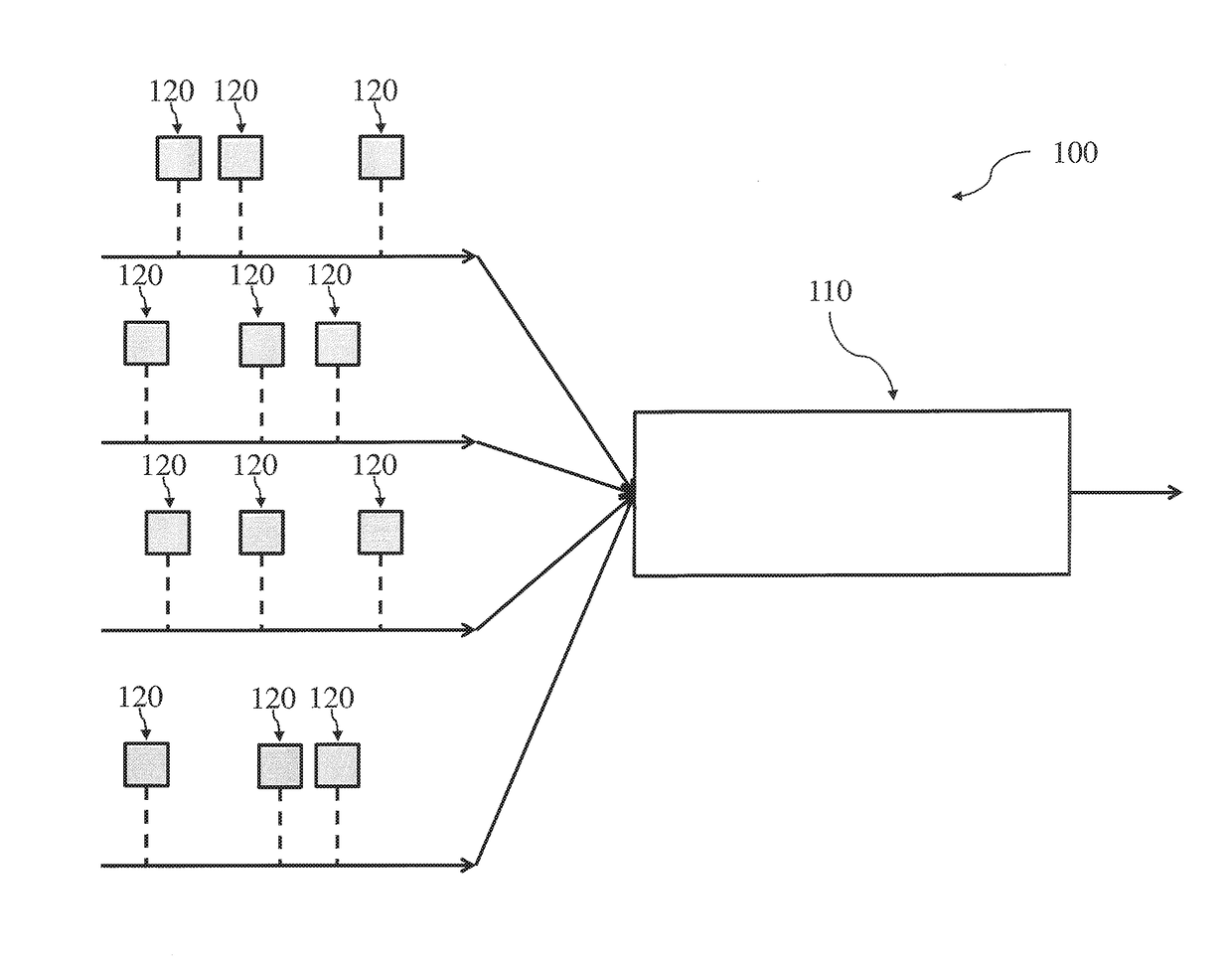

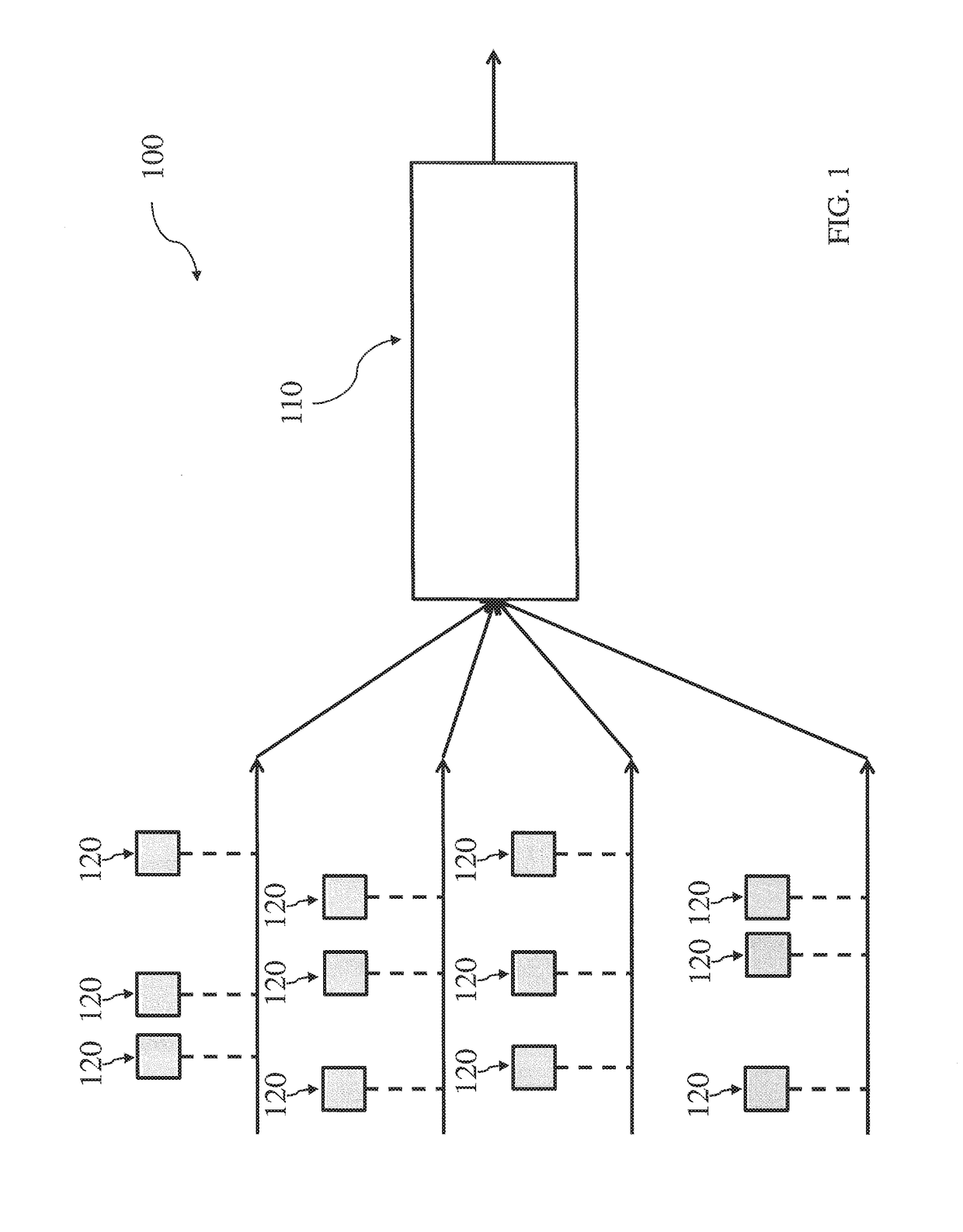

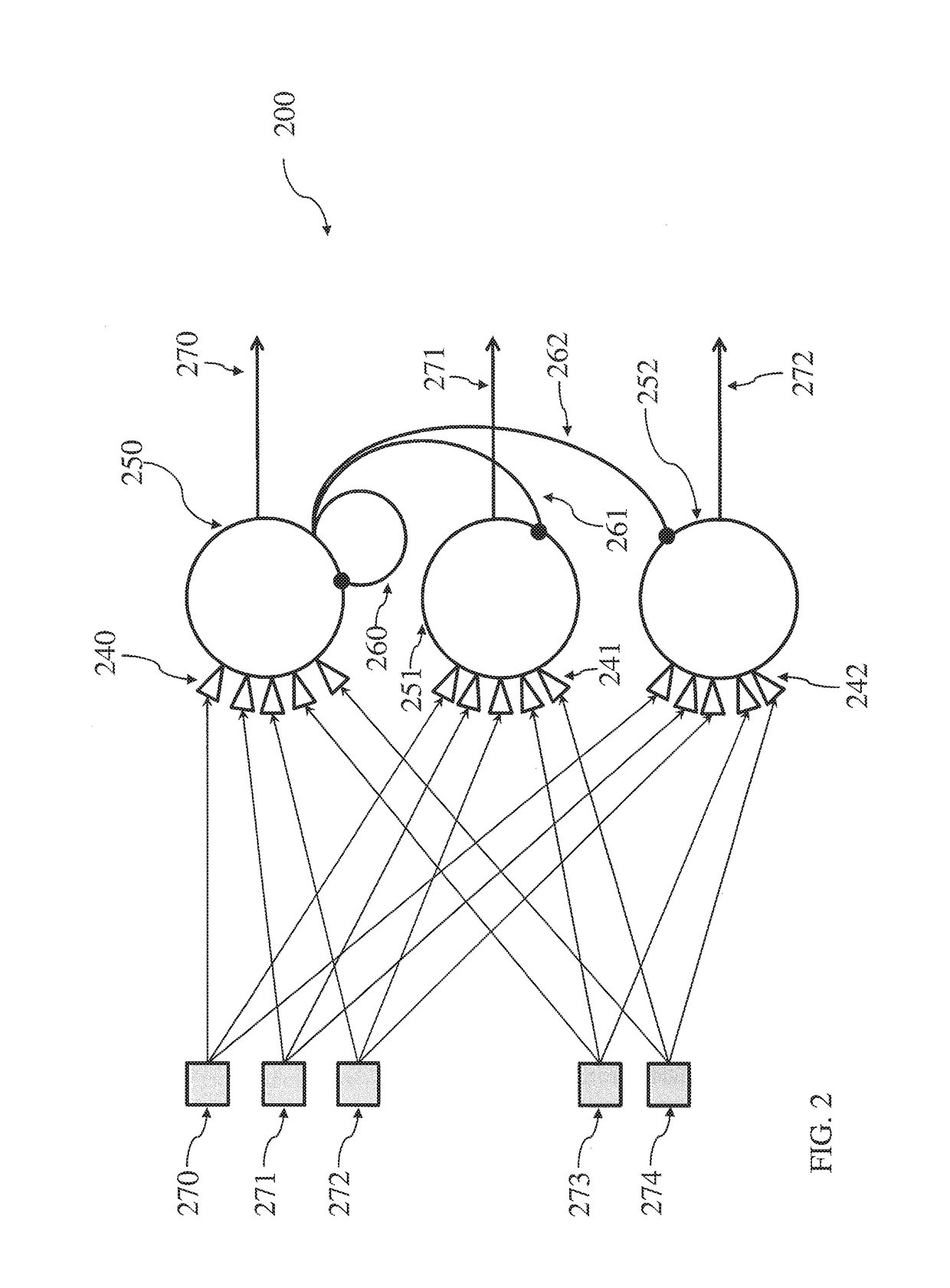

Neuromorphic architecture with multiple coupled neurons using internal state neuron information

Patent · Active · US20170372194A1

Innovation

- A neuromorphic architecture featuring interconnected neurons with internal state information links, allowing for the transmission of internal state information across layers to modify the operation of other neurons, enhancing the system's performance and capability in data processing, pattern recognition, and correlation detection.

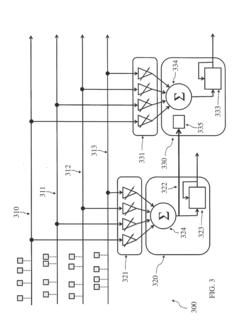

Neural network optimization using knowledge representations

Patent · Pending · US20230297835A1

Innovation

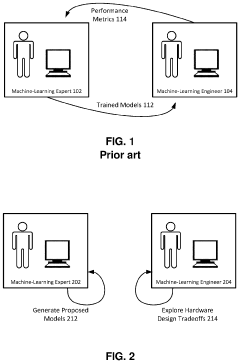

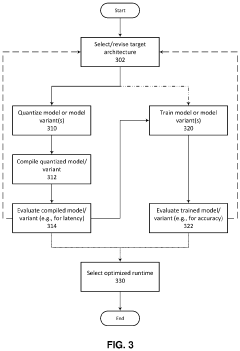

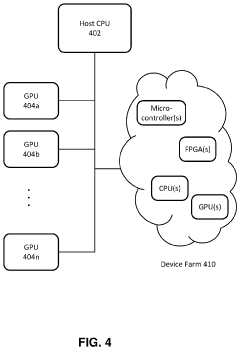

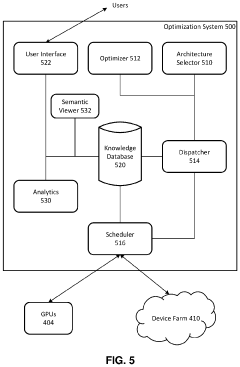

- The optimization process is bifurcated into independent workflows, allowing machine-learning engineers to evaluate hardware constraints and experts to focus on accuracy, enabling parallel processing and dynamic architecture evolution based on evaluation results, with multiple model variants being tested and optimized simultaneously for latency and accuracy.
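
The patent summary says that multiple model variants are evaluated for both latency and accuracy but does not specify how candidates are compared. One conventional approach, shown here purely as an illustration and not as the patented method, is to keep only the Pareto-optimal variants. The `Variant` type and the sample numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Variant:
    name: str
    latency_ms: float  # lower is better
    accuracy: float    # higher is better


def dominates(a: Variant, b: Variant) -> bool:
    """a dominates b if it is no worse on both objectives and strictly better on one."""
    no_worse = a.latency_ms <= b.latency_ms and a.accuracy >= b.accuracy
    better = a.latency_ms < b.latency_ms or a.accuracy > b.accuracy
    return no_worse and better


def pareto_front(variants: List[Variant]) -> List[Variant]:
    """Keep variants that no other variant dominates (O(n^2), fine for small sweeps)."""
    return [v for v in variants
            if not any(dominates(u, v) for u in variants if u is not v)]


# Hypothetical sweep results.
candidates = [Variant("A", 10.0, 0.90), Variant("B", 5.0, 0.85),
              Variant("C", 12.0, 0.88), Variant("D", 5.0, 0.80)]
front = pareto_front(candidates)  # A and B survive; C and D are dominated
```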

Hardware-Software Co-design Strategies

Hardware-Software Co-design Strategies for neuromorphic systems represent a critical approach to optimizing these brain-inspired architectures for machine learning applications. The traditional separation between hardware and software development cycles has proven inadequate for neuromorphic computing, where the unique computational paradigm demands integrated design thinking. Effective co-design methodologies synchronize hardware capabilities with software requirements, creating systems that maximize energy efficiency while maintaining computational performance.

Current co-design approaches focus on several key areas of integration. Algorithm-hardware mapping techniques ensure that neural network models are optimally translated to physical neuromorphic substrates, accounting for specific hardware constraints such as limited precision, connectivity patterns, and timing dependencies. This mapping process often requires specialized compilers and toolchains that understand both the mathematical properties of neural algorithms and the physical limitations of neuromorphic hardware.
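
Limited weight precision is one of the hardware constraints a mapping toolchain must handle. A minimal sketch of one generic technique, symmetric uniform quantization with a single scale per layer, assuming the target stores signed `bits`-bit integer weights (an illustrative assumption; real devices may use other formats):

```python
from typing import List, Tuple


def quantize_symmetric(weights: List[float], bits: int) -> Tuple[List[int], float]:
    """Map float weights to signed `bits`-bit integers sharing one scale.

    Returns (codes, scale) such that w is approximately code * scale. This is a
    generic post-training scheme; real toolchains may use per-channel scales,
    calibration data, or hardware-specific number formats.
    """
    q_max = 2 ** (bits - 1) - 1  # e.g. 7 for 4 bits, 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / q_max if max_abs > 0 else 1.0
    codes = [max(-q_max, min(q_max, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Recover the approximate float weights the hardware will effectively use."""
    return [c * scale for c in codes]
```

Comparing `dequantize(codes, scale)` against the original weights gives a quick estimate of the rounding error a given bit width introduces, at most half a quantization step per weight.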

Memory hierarchy optimization represents another crucial co-design strategy. Neuromorphic systems typically employ distributed memory architectures that differ significantly from conventional von Neumann designs. Co-design approaches carefully balance on-chip memory, synaptic weight storage, and external memory access patterns to minimize energy-intensive data movement while maintaining computational throughput for different machine learning workloads.
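
The balance between on-chip and external memory can be reasoned about with a first-order cost model: weights that fit on chip are cheap to read, and the rest incur an external access every time they are used. The sketch below is such a model. The per-access energies are caller-supplied placeholders, and the values in the example are hypothetical, not measurements of any device.

```python
def weight_access_energy(n_weights: int, on_chip_capacity: int,
                         accesses_per_weight: int,
                         e_on_chip_pj: float, e_off_chip_pj: float) -> float:
    """First-order energy (pJ) for reading synaptic weights on every use.

    Weights that fit in on-chip memory are read locally; the remainder must be
    fetched from external memory each time. Energy figures are inputs, not
    measured values: supply numbers for the technology being modeled.
    """
    on_chip = min(n_weights, on_chip_capacity)
    off_chip = n_weights - on_chip
    return accesses_per_weight * (on_chip * e_on_chip_pj + off_chip * e_off_chip_pj)
```

With 1,000 words of on-chip capacity, ten uses per weight, and an assumed 100x cost ratio, 1,000 weights cost 10,000 pJ while 2,000 weights cost 1,010,000 pJ, almost all of it from the 1,000 weights that spill off chip.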

Timing and synchronization mechanisms must be jointly developed across hardware and software layers. Unlike traditional computing systems, neuromorphic architectures often employ event-driven processing with complex temporal dynamics. Co-design strategies include developing programming models that express timing dependencies efficiently while designing hardware timing circuits that support these models with minimal overhead.
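
Event-driven timing can be modeled in software with a time-ordered event queue in which each synapse carries an integer delay. A minimal sketch, assuming non-leaky integrate-and-fire neurons and integer time steps; hardware implementations realize timing with their own circuits, which this does not model:

```python
import heapq
import itertools
from typing import Dict, List, Optional, Tuple

# source neuron -> list of (target neuron, weight, delay in steps)
Synapses = Dict[int, List[Tuple[int, float, int]]]


def simulate(synapses: Synapses, input_spikes: List[Tuple[int, int]],
             threshold: float = 1.0, t_max: int = 100) -> List[Tuple[int, int]]:
    """Event-driven simulation; returns (time, neuron) spikes in time order.

    Work happens only when an event is popped: either a neuron spikes, or a
    delayed synaptic input arrives at its target.
    """
    seq = itertools.count()  # tie-breaker so the heap never compares payloads
    queue: List[Tuple[int, int, int, Optional[float]]] = []
    for t, n in input_spikes:
        heapq.heappush(queue, (t, next(seq), n, None))  # None marks a spike event
    potential: Dict[int, float] = {}
    spikes: List[Tuple[int, int]] = []
    while queue:
        t, _, neuron, weight = heapq.heappop(queue)
        if t > t_max:
            break
        if weight is None:  # the neuron fires now
            spikes.append((t, neuron))
            for target, w, delay in synapses.get(neuron, []):
                heapq.heappush(queue, (t + delay, next(seq), target, w))
        else:  # a synaptic input arrives
            v = potential.get(neuron, 0.0) + weight
            if v >= threshold:
                potential[neuron] = 0.0  # reset after firing
                heapq.heappush(queue, (t, next(seq), neuron, None))
            else:
                potential[neuron] = v
    return spikes
```

For example, with `{0: [(1, 0.6, 2)], 1: [(2, 1.0, 3)]}` and input spikes from neuron 0 at t = 0 and t = 1, neuron 1 needs both delayed inputs before it fires at t = 3, and its spike reaches neuron 2 three steps later.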

Power management co-design has emerged as particularly important for neuromorphic systems, which promise significant energy efficiency advantages. Adaptive power schemes that dynamically adjust circuit behavior based on computational demands require tight integration between hardware power controllers and software workload management. These strategies often implement activity-dependent power gating and voltage scaling techniques controlled through software-accessible registers.
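
The software side of activity-dependent power gating can be as simple as a policy that watches per-core event counts. The sketch below is a hypothetical policy: core IDs, the window scheme, and `idle_windows` are assumptions, and writing the decision to actual power-control registers is hardware specific and not shown.

```python
from typing import Dict, List


def gating_decisions(event_counts: Dict[str, List[int]],
                     idle_windows: int = 3) -> Dict[str, bool]:
    """Decide which cores to power-gate (True) from recent activity.

    event_counts maps a core ID to its per-window spike-event counts, most
    recent last. A core is gated only if it saw no events in each of its last
    `idle_windows` windows; cores with too little history stay powered.
    """
    if idle_windows < 1:
        raise ValueError("idle_windows must be at least 1")
    decisions = {}
    for core, counts in event_counts.items():
        recent = counts[-idle_windows:]
        decisions[core] = len(recent) == idle_windows and all(c == 0 for c in recent)
    return decisions
```

Requiring several consecutive idle windows is a simple form of hysteresis: it avoids repeatedly gating and waking a core whose activity is merely bursty.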

Programming abstractions represent perhaps the most challenging aspect of neuromorphic co-design. Creating intuitive interfaces that shield machine learning practitioners from hardware complexities while still enabling efficient resource utilization remains an active research area. Successful approaches include domain-specific languages that express neural computations at multiple levels of abstraction, with compilation tools that progressively map these abstractions to specific hardware implementations.
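
A multi-level programming abstraction can be sketched as a high-level network description that a compiler lowers step by step. The example below shows only the first lowering step, from populations and projection rules to a flat synapse table with global neuron IDs. The `Population`, `Projection`, and `lower` names are hypothetical and do not correspond to any specific framework's API.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Population:
    name: str
    size: int


@dataclass
class Projection:
    pre: str
    post: str
    rule: str       # "all_to_all" or "one_to_one"
    weight: float
    delay: int = 1


def lower(populations: List[Population],
          projections: List[Projection]) -> List[Tuple[int, int, float, int]]:
    """Lower a population-level description to (source_id, target_id, weight, delay).

    A later, hardware-specific pass could place this flat table onto cores.
    """
    offsets: Dict[str, int] = {}
    sizes: Dict[str, int] = {}
    next_id = 0
    for p in populations:  # assign contiguous global IDs per population
        offsets[p.name], sizes[p.name] = next_id, p.size
        next_id += p.size
    synapses: List[Tuple[int, int, float, int]] = []
    for proj in projections:
        pre0, post0 = offsets[proj.pre], offsets[proj.post]
        n_pre, n_post = sizes[proj.pre], sizes[proj.post]
        if proj.rule == "all_to_all":
            pairs = [(i, j) for i in range(n_pre) for j in range(n_post)]
        elif proj.rule == "one_to_one":
            if n_pre != n_post:
                raise ValueError("one_to_one requires equal population sizes")
            pairs = [(i, i) for i in range(n_pre)]
        else:
            raise ValueError(f"unknown connection rule: {proj.rule}")
        synapses += [(pre0 + i, post0 + j, proj.weight, proj.delay) for i, j in pairs]
    return synapses
```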

Current co-design approaches focus on several key areas of integration. Algorithm-hardware mapping techniques ensure that neural network models are optimally translated to physical neuromorphic substrates, accounting for specific hardware constraints such as limited precision, connectivity patterns, and timing dependencies. This mapping process often requires specialized compilers and toolchains that understand both the mathematical properties of neural algorithms and the physical limitations of neuromorphic hardware.

Memory hierarchy optimization represents another crucial co-design strategy. Neuromorphic systems typically employ distributed memory architectures that differ significantly from conventional von Neumann designs. Co-design approaches carefully balance on-chip memory, synaptic weight storage, and external memory access patterns to minimize energy-intensive data movement while maintaining computational throughput for different machine learning workloads.

Timing and synchronization mechanisms must be jointly developed across hardware and software layers. Unlike traditional computing systems, neuromorphic architectures often employ event-driven processing with complex temporal dynamics. Co-design strategies include developing programming models that express timing dependencies efficiently while designing hardware timing circuits that support these models with minimal overhead.

Power management co-design has emerged as particularly important for neuromorphic systems, which promise significant energy efficiency advantages. Adaptive power schemes that dynamically adjust circuit behavior based on computational demands require tight integration between hardware power controllers and software workload management. These strategies often implement activity-dependent power gating and voltage scaling techniques controlled through software-accessible registers.

Benchmarking Frameworks for Neuromorphic Systems

Benchmarking frameworks for neuromorphic systems have become increasingly critical as the field matures and diversifies. These frameworks provide standardized methods to evaluate, compare, and optimize neuromorphic hardware and algorithms across different platforms and applications. The development of robust benchmarking tools addresses the fundamental challenge of establishing common metrics for systems that often employ vastly different architectural approaches and computational paradigms.

Widely used benchmarking tools include SNN-TB (the SNN Toolbox), Nengo Benchmark, and N2D2 (Neural Network Design & Deployment). These frameworks offer varying levels of abstraction and focus on different aspects of neuromorphic system performance. SNN-TB converts trained conventional neural networks into spiking neural networks and reports their accuracy, along with operation counts that can serve as a proxy for energy efficiency. Nengo Benchmark provides assessment tools for cognitive computing tasks. N2D2 offers end-to-end evaluation capabilities, from network design to hardware deployment.

Performance metrics commonly employed in these frameworks include energy efficiency (typically measured in operations per joule, or inversely as energy per synaptic operation), throughput (operations per second), latency, and accuracy. More specialized metrics address neuromorphic-specific characteristics such as spike timing precision, dynamic range adaptation, and fault tolerance. The multi-dimensional nature of these metrics reflects the complex trade-offs inherent in neuromorphic computing.
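
The headline metrics are simple ratios over a measured run, as the sketch below shows. The `RunMeasurement` fields and the example numbers are hypothetical. The latency figure assumes that samples are processed one at a time. The code also reports energy per synaptic operation in picojoules, which is the reciprocal of operations per joule.

```python
from dataclasses import dataclass

@dataclass
class RunMeasurement:
    synaptic_ops: int     # synaptic operations counted during the run
    energy_joules: float  # energy measured over the run
    wall_time_s: float    # duration of the run in seconds
    n_samples: int        # inputs processed, one at a time
    n_correct: int        # correctly classified inputs

def summarize(m: RunMeasurement) -> dict:
    return {
        "ops_per_joule": m.synaptic_ops / m.energy_joules,
        "pj_per_op": m.energy_joules / m.synaptic_ops * 1e12,
        "ops_per_second": m.synaptic_ops / m.wall_time_s,
        "mean_latency_s": m.wall_time_s / m.n_samples,  # valid only for sequential processing
        "accuracy": m.n_correct / m.n_samples,
    }

# Illustrative numbers only.
run = RunMeasurement(synaptic_ops=2_000_000_000, energy_joules=0.01,
                     wall_time_s=2.0, n_samples=1000, n_correct=950)
for name, value in summarize(run).items():
    print(f"{name}: {value:.3g}")
```

Operations per joule equals throughput divided by average power, so the two views agree. In this example, 1e9 operations per second at an average of 5 mW gives 2e11 operations per joule.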

Standardization efforts have been led by organizations such as the IEEE Neuromorphic Computing Standards Committee and the Neuromorphic Computing Benchmark consortium. These initiatives aim to establish industry-wide benchmarks that can fairly compare diverse neuromorphic implementations, from digital ASIC designs to analog memristor-based systems and optical computing platforms.

Recent advances in benchmarking methodology include application-specific test suites that evaluate neuromorphic systems on real-world machine learning tasks rather than synthetic workloads. These suites cover standard ML datasets such as MNIST and CIFAR, either converted into spike trains or re-recorded with event-based sensors (as in N-MNIST). They also include specialized datasets for temporal pattern recognition, anomaly detection, and continuous online learning.
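
When a static dataset such as MNIST is evaluated on spiking hardware, pixel intensities are commonly converted into spike trains by rate coding. The sketch below uses a Bernoulli approximation of Poisson rate coding: at each time step, a pixel spikes with probability proportional to its normalized intensity. The function name, the `max_rate` per-step probability, and the fixed seed are illustrative choices.

```python
import random
from typing import List

def rate_encode(pixels: List[float], n_steps: int,
                max_rate: float = 1.0, seed: int = 0) -> List[List[int]]:
    """Return an n_steps x len(pixels) binary spike raster.

    Assumes pixels are normalized to [0, 1]; pixel i spikes at each step with
    probability max_rate * pixels[i].
    """
    if not 0.0 <= max_rate <= 1.0:
        raise ValueError("max_rate is a per-step probability and must lie in [0, 1]")
    rng = random.Random(seed)
    return [[1 if rng.random() < max_rate * p else 0 for p in pixels]
            for _ in range(n_steps)]

raster = rate_encode([0.0, 0.5, 1.0], n_steps=1000)
counts = [sum(step[i] for step in raster) for i in range(3)]
print(counts)  # first entry is always 0 and last always 1000; the middle is close to 500
```

Longer encoding windows reduce sampling noise in the represented intensity but increase latency and energy. Benchmarks are meant to expose exactly this kind of trade-off.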

The integration of these benchmarking frameworks with mainstream machine learning ecosystems remains challenging. Current efforts focus on developing interface layers between neuromorphic simulators and popular frameworks like TensorFlow and PyTorch, enabling more direct comparisons between conventional deep learning approaches and neuromorphic implementations. This convergence will be crucial for broader adoption of neuromorphic computing in mainstream machine learning applications.
