How Neuromorphic Computing Reduces Power Consumption

SEP 5, 2025 · 9 MIN READ

Neuromorphic Computing Evolution and Power Efficiency Goals

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. This approach has evolved significantly since its conceptual inception in the late 1980s by Carver Mead, who first proposed using analog circuits to mimic neurobiological architectures. The evolution trajectory has moved from theoretical frameworks to practical implementations, with each generation addressing fundamental limitations in power efficiency.

The first generation of neuromorphic systems in the 1990s focused primarily on mimicking basic neural functions through analog VLSI (Very Large Scale Integration) circuits. These early systems demonstrated the potential for brain-inspired computing but were limited by manufacturing technologies and design methodologies of the era. Power efficiency was not yet a primary concern, as researchers concentrated on establishing foundational principles.

By the early 2000s, the second generation emerged with hybrid analog-digital designs that improved functionality while beginning to address energy consumption. This period saw the development of address-event representation (AER) protocols, allowing for efficient communication between neuromorphic components. The goal shifted toward creating systems that could process information with greater energy efficiency than conventional computing architectures.
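As a rough illustration of the idea behind AER, each spike can be sent as a small packet that carries only the address of the neuron that fired, so silent neurons use no bandwidth. The sketch below is a minimal Python model under stated assumptions: the `Event` type, its field names, and the microsecond timestamps are hypothetical choices for illustration and do not follow any particular AER specification.

```python
from typing import List, NamedTuple


class Event(NamedTuple):
    """One address-event (hypothetical layout): when and which neuron fired."""
    timestamp_us: int  # spike time in microseconds
    address: int       # index of the neuron that fired


def encode_aer(spike_trains: List[List[int]]) -> List[Event]:
    """Merge per-neuron spike-time lists into one time-ordered event stream."""
    events = [
        Event(t, addr)
        for addr, times in enumerate(spike_trains)
        for t in times
    ]
    return sorted(events)  # tuples sort by timestamp first, then address


def decode_aer(events: List[Event], n_neurons: int) -> List[List[int]]:
    """Rebuild per-neuron spike-time lists from the shared event stream."""
    trains: List[List[int]] = [[] for _ in range(n_neurons)]
    for ev in events:
        trains[ev.address].append(ev.timestamp_us)
    return trains


# Four neurons; neurons 1 and 3 stay silent and generate no traffic.
spikes = [[10, 250], [], [40], []]
stream = encode_aer(spikes)
```

Only three events cross the link in this example, regardless of how many neurons share it, which is the property that makes the scheme attractive for sparse activity.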

The third generation, spanning from 2010 to present, has placed power efficiency at the forefront of design objectives. This shift coincides with the exponential growth in data processing requirements and the physical limitations of traditional computing architectures. Modern neuromorphic systems aim to achieve energy efficiencies orders of magnitude better than conventional computers for specific tasks, particularly those involving pattern recognition, sensory processing, and real-time adaptation.

Current power efficiency goals for neuromorphic computing are ambitious and multifaceted. The primary objective is to approach the efficiency of the human brain, which performs complex cognitive functions on a power budget of approximately 20 watts. Specific targets include reducing energy consumption to picojoules per synaptic operation, enabling edge computing applications where power constraints are critical.

Another key goal is developing neuromorphic systems capable of autonomous operation in energy-limited environments, such as remote sensors, implantable medical devices, and space exploration equipment. These applications demand not only low absolute power consumption but also adaptive power management that can respond to changing computational demands and available energy resources.

The evolution continues toward neuromorphic architectures that combine ultra-low power operation with capabilities for online learning and adaptation, potentially revolutionizing how computing systems interact with complex, dynamic environments while maintaining minimal energy footprints.

Market Demand for Energy-Efficient Computing Solutions

The global demand for energy-efficient computing solutions has witnessed unprecedented growth in recent years, driven by the convergence of several market forces. Data centers, which form the backbone of our digital infrastructure, now consume approximately 1% of global electricity, with projections indicating this figure could rise to 3-8% by 2030 if current trends continue. This escalating energy consumption presents both environmental challenges and significant operational costs for organizations.

The proliferation of edge computing devices, expected to reach 75 billion connected devices by 2025, further amplifies the need for power-efficient computing architectures. Traditional von Neumann computing architectures face fundamental limitations in energy efficiency, creating a substantial market gap that neuromorphic computing aims to address.

Financial implications are equally compelling, with data center operators spending between 20-40% of their operational budgets on energy costs. Major cloud service providers like Amazon Web Services, Microsoft Azure, and Google Cloud have publicly committed to reducing their carbon footprints, creating market pull for technologies that can deliver computational power with significantly reduced energy requirements.

The automotive and aerospace industries represent rapidly expanding markets for energy-efficient computing solutions. Advanced driver-assistance systems and autonomous vehicles require substantial computational capabilities while operating within strict power constraints. Similarly, satellite systems and drones need to maximize computational performance within limited power budgets.

Mobile device manufacturers face persistent consumer demands for longer battery life alongside increased functionality, creating another significant market segment. The average smartphone user now expects devices to last at least a full day under heavy usage, while simultaneously demanding more computationally intensive applications like augmented reality and AI-powered features.

Healthcare applications present another growth area, with wearable medical devices and implantable technologies requiring ultra-low power consumption for extended operation. The market for these devices is projected to grow at a CAGR of 26% through 2026, creating substantial demand for neuromorphic solutions.

Regulatory pressures are also shaping market dynamics, with governments worldwide implementing increasingly stringent energy efficiency standards for electronic devices and data centers. The European Union's Ecodesign Directive and similar regulations in North America and Asia create regulatory incentives for adopting more energy-efficient computing technologies.

Investment trends reflect this growing market demand, with venture capital funding for energy-efficient computing startups reaching record levels. Between 2018 and 2022, investments in this sector grew by 215%, signaling strong market confidence in the commercial potential of technologies like neuromorphic computing that promise order-of-magnitude improvements in computational energy efficiency.

Current State and Challenges in Neuromorphic Power Reduction

Neuromorphic computing has made significant strides in power efficiency, yet the current landscape reveals both promising achievements and substantial challenges. Leading organizations, including IBM, Intel, and several academic groups, have developed neuromorphic chips that demonstrate power consumption reductions of 100-1000x compared to traditional von Neumann architectures when performing specific neural network tasks. IBM's TrueNorth and Intel's Loihi represent the current state of the art, achieving milliwatt-level power consumption while performing complex cognitive tasks that would require watts of power on conventional systems.

Despite these advances, several fundamental challenges persist in neuromorphic power reduction. The primary obstacle remains the development of truly efficient memory-processing integration that mimics biological neural systems. Current neuromorphic designs still suffer from bottlenecks in the memory hierarchy, with energy losses occurring during data movement between processing and storage components. While biological neurons operate at femtojoule energy levels per spike, even the most advanced neuromorphic implementations typically operate at picojoule levels—still orders of magnitude higher than their biological counterparts.

Material science limitations present another significant challenge. The search for ideal materials for implementing synaptic elements with ultra-low power consumption continues, with memristive technologies showing promise but facing reliability and scalability issues. Current memristor-based implementations demonstrate inconsistent behavior across large arrays, limiting their practical deployment in commercial-scale neuromorphic systems.
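To make the array-variation concern concrete, the sketch below models an idealized memristive crossbar read, where each output current is the sum of conductance times input voltage (Ohm's and Kirchhoff's laws), and then perturbs every device by a random relative error. The Gaussian noise model, the conductance values, and the function names are assumptions chosen for illustration; real devices show more complex non-idealities.

```python
import random
from typing import List

Matrix = List[List[float]]


def crossbar_currents(conductances: Matrix, voltages: List[float]) -> List[float]:
    """Ideal crossbar read: output current i is sum_j G[i][j] * V[j]."""
    return [sum(g * v for g, v in zip(row, voltages)) for row in conductances]


def with_variation(conductances: Matrix, sigma: float, seed: int = 0) -> Matrix:
    """Apply a Gaussian relative error to each device (assumed noise model)."""
    rng = random.Random(seed)
    return [
        [g * (1.0 + rng.gauss(0.0, sigma)) for g in row]
        for row in conductances
    ]


# Hypothetical 2x2 array: conductances in siemens, read voltages in volts.
G = [[1e-6, 2e-6], [3e-6, 4e-6]]
V = [0.1, 0.2]

ideal = crossbar_currents(G, V)
noisy = crossbar_currents(with_variation(G, sigma=0.1), V)
# Relative error of each output caused by device-to-device variation.
rel_error = [abs(n - i) / i for n, i in zip(noisy, ideal)]
```

Because every weight is stored as a physical conductance, any spread in device behavior appears directly as an error in the computed sums, which is why consistency across large arrays matters.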

Algorithmic challenges further complicate power reduction efforts. The translation of conventional deep learning algorithms to spike-based neuromorphic implementations introduces inefficiencies, as many algorithms were not originally designed for the temporal and sparse computational paradigm of neuromorphic systems. This mismatch often results in suboptimal power efficiency when deploying mainstream AI workloads on neuromorphic hardware.

Fabrication technology presents additional hurdles, as most neuromorphic chips are currently manufactured using conventional CMOS processes that were not specifically optimized for neuromorphic architectures. The industry lacks standardized fabrication techniques tailored to the unique requirements of neuromorphic circuits, resulting in compromises that impact power efficiency.

The geographic distribution of neuromorphic computing research shows concentration in North America, Europe, and East Asia, with the United States and China leading in terms of research output and patent filings. This uneven distribution creates challenges in global standardization and adoption of power-efficient neuromorphic technologies. Additionally, the fragmentation of research efforts across different architectural approaches—digital, analog, and hybrid implementations—has slowed convergence toward optimal power-efficient designs.

Current Power Optimization Techniques in Neuromorphic Systems

01 Low-power neuromorphic computing architectures

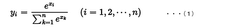

Neuromorphic computing systems can be designed with specialized architectures that significantly reduce power consumption compared to traditional computing paradigms. These architectures mimic the brain's neural networks and synaptic connections, enabling efficient processing with minimal energy requirements. By implementing event-driven processing and sparse activation patterns, these systems can achieve substantial power savings while maintaining computational capabilities for AI and machine learning tasks.

- Low-power neuromorphic computing architectures: Neuromorphic computing systems are designed with specialized architectures that mimic the brain's neural networks to achieve significant power efficiency. These architectures incorporate novel circuit designs, spike-based processing, and event-driven computation to minimize energy consumption while maintaining computational capabilities. By optimizing the hardware specifically for neural network operations, these systems can achieve orders of magnitude better energy efficiency compared to traditional computing paradigms.

- Power management techniques in neuromorphic systems: Various power management strategies are employed in neuromorphic computing to reduce energy consumption. These include dynamic voltage and frequency scaling, selective activation of neural components, power gating unused circuits, and adaptive power states based on computational load. Advanced power controllers monitor system activity and adjust power delivery to different components, ensuring optimal energy usage while maintaining performance requirements for neural network processing tasks.

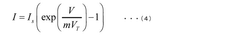

- Memory-centric neuromorphic computing for energy efficiency: Memory-centric approaches to neuromorphic computing focus on reducing the energy costs associated with data movement between processing and memory units. By integrating computation directly with memory elements through technologies like memristors, resistive RAM, or phase-change memory, these systems minimize the power-intensive data transfers that dominate energy consumption in conventional architectures. This in-memory computing paradigm significantly reduces power consumption while accelerating neural network operations.

- Spike-based processing for power optimization: Spike-based neuromorphic computing systems leverage the brain's sparse communication model to achieve substantial power savings. By encoding information in the timing and frequency of discrete spikes rather than continuous values, these systems can operate with extremely low power consumption during periods of inactivity. This event-driven approach ensures that energy is only consumed when information processing is necessary, leading to significant power efficiency advantages over traditional computing methods.

- Hardware-software co-design for energy-efficient neuromorphic systems: Energy efficiency in neuromorphic computing is maximized through the coordinated design of hardware and software components. This approach involves developing specialized neural network algorithms that exploit the unique characteristics of neuromorphic hardware, optimizing network topologies to reduce computational requirements, and implementing intelligent resource allocation strategies. The co-design methodology ensures that both hardware capabilities and software implementations work together to minimize power consumption while maintaining computational performance.
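The spike-based, event-driven behavior summarized in the list above can be illustrated with a leaky integrate-and-fire (LIF) neuron, a common simplified neuron model. This is a minimal discrete-time sketch, not the model used by any specific chip; the `leak` and `threshold` defaults are arbitrary assumptions.

```python
from typing import List


def lif_spike_times(inputs: List[float], leak: float = 0.9,
                    threshold: float = 1.0) -> List[int]:
    """Return the time steps at which a leaky integrate-and-fire neuron spikes."""
    v = 0.0  # membrane potential
    spikes: List[int] = []
    for step, current in enumerate(inputs):
        v = leak * v + current  # decay the old potential, then integrate input
        if v >= threshold:
            spikes.append(step)  # emit a spike event
            v = 0.0              # reset after firing
    return spikes


# Mostly silent input with a short burst at steps 5 and 6.
drive = [0.0] * 5 + [0.6, 0.6] + [0.0] * 5
spike_steps = lif_spike_times(drive)
```

Over twelve time steps the neuron emits a single spike, so downstream circuits would only need to do work once. That sparsity is what event-driven hardware aims to exploit.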

02 Power management techniques for neuromorphic systems

Various power management techniques can be implemented in neuromorphic computing systems to optimize energy efficiency. These include dynamic voltage and frequency scaling, selective activation of neural components, and power gating of inactive circuits. Advanced power management controllers can monitor system activity and adjust power states accordingly, enabling significant reductions in overall energy consumption while maintaining computational performance for neural processing tasks.

03 Memristor-based neuromorphic computing for energy efficiency

Memristor-based neuromorphic computing offers substantial power efficiency advantages by integrating memory and processing functions. These non-volatile memory elements can maintain their state without continuous power, significantly reducing energy consumption. When implemented in neuromorphic architectures, memristors enable efficient synaptic weight storage and updates with minimal energy requirements, making them ideal for power-constrained applications like edge computing and mobile devices.

04 Spike-based processing for power optimization

Spike-based neuromorphic computing systems utilize sparse, event-driven communication similar to biological neural networks, substantially reducing power consumption. By processing information only when necessary through discrete spikes rather than continuous signals, these systems minimize energy usage. This approach enables efficient implementation of neural networks that can operate at extremely low power levels while maintaining computational capabilities for pattern recognition, classification, and other AI tasks.

05 Hardware-software co-design for energy-efficient neuromorphic computing

Hardware-software co-design approaches optimize neuromorphic computing systems for minimal power consumption. By developing specialized hardware architectures alongside optimized algorithms and programming models, these systems can achieve significant energy efficiency. Techniques include algorithm-hardware mapping optimization, workload-aware power management, and specialized instruction sets for neural processing. This integrated approach enables neuromorphic systems to deliver high computational performance for AI applications while maintaining extremely low power profiles.

Key Industry Players in Neuromorphic Computing

Neuromorphic computing is currently in an early growth phase, with the market expected to expand significantly due to its potential for drastically reducing power consumption in AI applications. The global market size is projected to reach several billion dollars by 2030, driven by increasing demand for energy-efficient computing solutions. From a technological maturity perspective, the field is transitioning from research to commercial applications, with key players at different development stages. IBM leads with its TrueNorth and subsequent neuromorphic architectures, while Samsung, Huawei, and SK hynix are investing heavily in hardware implementations. Specialized companies like Syntiant and Grai Matter Labs are developing application-specific neuromorphic chips, while academic institutions including Tsinghua University, Zhejiang University, and KAIST collaborate with industry to advance fundamental research and practical implementations.

International Business Machines Corp.

Technical Solution: IBM's neuromorphic computing approach focuses on TrueNorth architecture, which mimics the brain's neural structure with 1 million programmable neurons and 256 million synapses on a single chip. This architecture operates on an event-driven basis rather than traditional clock-based processing, activating only when needed. IBM's TrueNorth consumes merely 70mW during real-time operation, achieving 46 billion synaptic operations per second per watt, representing a 1,000x improvement in energy efficiency compared to conventional computing architectures[1]. The company has further advanced this technology with their second-generation neuromorphic chip design that incorporates phase-change memory (PCM) for synaptic connections, enabling more efficient on-chip learning capabilities while maintaining ultra-low power consumption. IBM's neuromorphic systems are designed to excel at pattern recognition tasks while consuming orders of magnitude less power than traditional von Neumann architectures.
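The two TrueNorth figures quoted above can be turned into an energy per synaptic operation with simple arithmetic, since one watt is one joule per second. The short calculation below uses only the numbers from the text; treating both figures as describing the same operating point is an assumption made for illustration.

```python
# Figures quoted in the text for TrueNorth.
SYNAPTIC_OPS_PER_SECOND_PER_WATT = 46e9
CHIP_POWER_W = 70e-3

# 1 W = 1 J/s, so "operations per second per watt" equals operations per joule.
energy_per_op_j = 1.0 / SYNAPTIC_OPS_PER_SECOND_PER_WATT
energy_per_op_pj = energy_per_op_j * 1e12

# Throughput implied if the chip sustained that efficiency while drawing 70 mW
# (assumes both quoted figures apply to the same workload).
ops_per_second = SYNAPTIC_OPS_PER_SECOND_PER_WATT * CHIP_POWER_W

print(f"{energy_per_op_pj:.1f} pJ per synaptic operation")
print(f"{ops_per_second:.2e} synaptic operations per second")
```

The result is roughly 22 pJ per synaptic operation, which sits in the picojoule range that the stated efficiency targets refer to.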

Strengths: Exceptional energy efficiency (1,000x improvement over conventional architectures), scalable architecture allowing multiple chips to be tiled together, and mature development ecosystem with programming tools. Weaknesses: Limited application scope primarily focused on pattern recognition tasks, requires specialized programming approaches different from conventional computing paradigms, and faces challenges in general-purpose computing applications.

Syntiant Corp.

Technical Solution: Syntiant has developed the Neural Decision Processor (NDP), a specialized neuromorphic chip designed specifically for edge AI applications with extreme power efficiency. The NDP architecture processes information in a fundamentally different way than traditional CPUs or GPUs, using a data-flow approach that activates only the necessary neural pathways for a given input. Syntiant's NDP100 and NDP120 chips can run deep learning algorithms while consuming less than 1mW of power, enabling always-on voice and sensor processing in battery-powered devices[2]. The company's technology achieves this remarkable efficiency through a combination of analog computing elements, memory-compute integration, and specialized neural network optimization. Syntiant's chips can perform wake word detection, speaker identification, and other audio classification tasks while consuming 100-1000x less energy than conventional digital solutions. Their architecture also incorporates on-chip memory to minimize data movement, which is typically the most energy-intensive operation in computing systems.

Strengths: Ultra-low power consumption (sub-milliwatt operation for many tasks), optimized for always-on edge applications, and production-ready solutions already deployed in commercial products. Weaknesses: Specialized for audio and sensor processing applications with limited flexibility for other computing tasks, and requires specific neural network architectures optimized for their hardware.

Core Innovations in Low-Power Neuromorphic Architecture

Analog computing element and neuromorphic device

Patent WO2024203769A1

Innovation

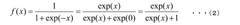

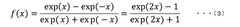

- An analog computing unit that converts activation function computation into analog processing using a physical device, incorporating voltage-current conversion, current addition/subtraction, and current-voltage conversion circuits to perform operations such as softmax, sigmoid, and hyperbolic tangent functions in parallel, reducing the computational load and power consumption.

Electronic circuit with neuromorphic architecture

Patent WO2012076366A1

Innovation

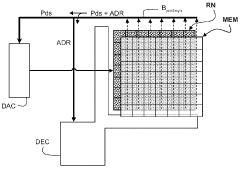

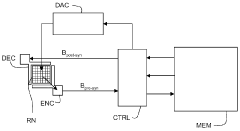

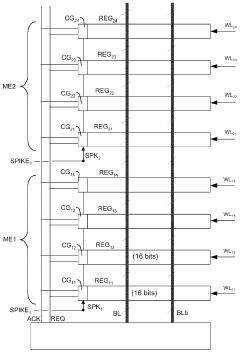

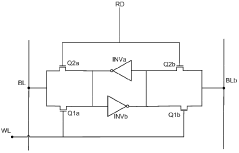

- A neuromorphic circuit design that eliminates the need for address encoders and controllers by establishing a direct connection between neurons and their associated memories, allowing for a programmable memory system with management circuits to handle data extraction and conflict prevention on a post-synaptic bus, thereby reducing the complexity and energy consumption.

Hardware-Software Co-design for Power Optimization

Neuromorphic computing systems require a holistic approach to power optimization that integrates hardware and software design considerations. The co-design methodology addresses power consumption challenges by ensuring that hardware architectures and software frameworks work in harmony to maximize energy efficiency. This approach has proven particularly effective as traditional von Neumann architectures reach their physical limits in terms of power efficiency.

Hardware components in neuromorphic systems can be specifically tailored to support event-driven processing, which inherently consumes less power by activating circuits only when necessary. Specialized memory hierarchies that minimize data movement between processing units and memory banks significantly reduce energy consumption, as data transfer operations typically account for a substantial portion of power usage in computing systems.
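One way to see why event-driven processing saves work is to count synaptic operations. The sketch below compares a dense update, which touches every synapse on every time step, with an event-driven update that only accumulates the weights of inputs that actually spiked. Operation counts are used here as a rough proxy for energy, which is an assumption; the function names and weight values are illustrative.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def dense_update_ops(weights: Matrix) -> int:
    """A clocked, dense update touches every synapse each time step."""
    return sum(len(row) for row in weights)


def event_driven_update(weights: Matrix,
                        active_inputs: List[int]) -> Tuple[List[float], int]:
    """Accumulate only the weight columns whose input spiked.

    Returns the post-synaptic sums and the number of synaptic operations.
    """
    sums = [0.0] * len(weights)
    ops = 0
    for j in active_inputs:            # one pass per incoming spike event
        for i in range(len(weights)):  # fan-out of that input
            sums[i] += weights[i][j]
            ops += 1
    return sums, ops


# 3 output neurons, 4 inputs; only input 1 spikes in this time step.
W = [[0.1, 0.2, 0.3, 0.4],
     [0.5, 0.6, 0.7, 0.8],
     [0.9, 1.0, 1.1, 1.2]]
sums, event_ops = event_driven_update(W, active_inputs=[1])
dense_ops = dense_update_ops(W)
```

With one active input out of four, the event-driven path performs 3 operations where the dense path performs 12, and the gap widens as activity becomes sparser.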

Software frameworks designed for neuromorphic hardware must implement sparse coding techniques and event-based algorithms that leverage the asynchronous nature of these systems. By encoding information in the timing of spikes rather than continuous signal values, these algorithms naturally align with the power-saving mechanisms built into neuromorphic hardware.
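Encoding information in spike timing can take several forms. The sketch below shows one simple scheme, often called latency or time-to-first-spike coding, in which stronger inputs spike earlier and zero inputs do not spike at all. The linear mapping, the `t_max` window, and the function names are assumptions for illustration rather than the encoding of any particular framework.

```python
from typing import List, Optional


def latency_encode(values: List[float], t_max: int = 100) -> List[Optional[int]]:
    """Map intensities in [0, 1] to spike times; stronger inputs fire earlier.

    A value of 0 produces no spike (None), so it costs no event at all.
    """
    times: List[Optional[int]] = []
    for x in values:
        if not 0.0 <= x <= 1.0:
            raise ValueError("intensities must lie in [0, 1]")
        times.append(None if x == 0.0 else round((1.0 - x) * t_max))
    return times


def latency_decode(times: List[Optional[int]], t_max: int = 100) -> List[float]:
    """Invert the linear mapping; a missing spike decodes to 0."""
    return [0.0 if t is None else 1.0 - t / t_max for t in times]


spike_times = latency_encode([1.0, 0.5, 0.0, 0.25])
```

Each input produces at most one spike per window, so the number of events, and with it the amount of downstream work, is bounded by the number of non-zero inputs.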

Dynamic power management strategies represent another crucial aspect of hardware-software co-design. These strategies include adaptive clock gating, voltage scaling, and selective activation of neural circuits based on computational demands. Software can intelligently control these hardware features by analyzing workload patterns and adjusting system parameters accordingly.
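Voltage and frequency scaling can be sketched with the standard first-order estimate of CMOS switching power, P = a · C · V² · f, where a is the activity factor, C the switched capacitance, V the supply voltage, and f the clock frequency. The operating points, capacitance, and activity factor below are hypothetical values chosen only to show the selection logic a software governor might apply.

```python
from typing import List, Tuple

# Hypothetical (voltage in V, frequency in Hz) operating points,
# assumed to be sorted from lowest to highest power.
OPERATING_POINTS: List[Tuple[float, float]] = [
    (0.6, 100e6),
    (0.8, 200e6),
    (1.0, 400e6),
]


def dynamic_power(voltage: float, frequency: float,
                  capacitance: float = 1e-9, activity: float = 0.1) -> float:
    """First-order CMOS switching power: activity * C * V^2 * f (watts)."""
    return activity * capacitance * voltage ** 2 * frequency


def pick_operating_point(required_hz: float,
                         points: List[Tuple[float, float]] = OPERATING_POINTS
                         ) -> Tuple[float, float]:
    """Choose the first (lowest-power) point that meets the required rate."""
    for voltage, frequency in points:
        if frequency >= required_hz:
            return voltage, frequency
    return points[-1]  # saturate at the fastest available point


v, f = pick_operating_point(150e6)
scaled_power = dynamic_power(v, f)
peak_power = dynamic_power(*OPERATING_POINTS[-1])
```

Because power depends on the square of the voltage, dropping to the middle operating point in this example cuts the estimated switching power to roughly a third of the peak value while still meeting the workload's rate.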

Compiler optimizations specifically designed for neuromorphic architectures play a vital role in translating high-level neural network descriptions into efficient spike-based implementations. These compilers must understand both the mathematical properties of neural networks and the physical constraints of the target hardware to generate code that minimizes unnecessary computations and memory accesses.

Runtime systems that dynamically balance workloads across neuromorphic cores further enhance power efficiency. By distributing computational tasks based on real-time power consumption metrics and processing requirements, these systems ensure optimal utilization of available resources while maintaining minimal energy footprint.
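A runtime of this kind could use many policies. The sketch below shows one simple option, a greedy scheduler that hands each task, largest first, to the core with the lowest accumulated load. The per-task costs stand in for whatever power or activity estimate a real runtime would measure; they, and the `balance` function itself, are hypothetical.

```python
import heapq
from typing import Dict, List


def balance(task_costs: List[float], n_cores: int) -> Dict[int, List[int]]:
    """Greedy assignment: give each task (largest first) to the least-loaded core."""
    heap = [(0.0, core) for core in range(n_cores)]  # (current load, core id)
    heapq.heapify(heap)
    assignment: Dict[int, List[int]] = {core: [] for core in range(n_cores)}
    # Visit task indices in order of decreasing cost.
    order = sorted(range(len(task_costs)),
                   key=lambda i: task_costs[i], reverse=True)
    for task in order:
        load, core = heapq.heappop(heap)  # least-loaded core so far
        assignment[core].append(task)
        heapq.heappush(heap, (load + task_costs[task], core))
    return assignment


# Hypothetical per-task cost estimates (arbitrary units) for two cores.
costs = [5.0, 3.0, 2.0, 2.0]
plan = balance(costs, n_cores=2)
loads = {core: sum(costs[t] for t in tasks) for core, tasks in plan.items()}
```

A greedy heuristic like this does not guarantee an optimal split, but it is cheap enough to run repeatedly as measured costs change.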

Case studies from leading neuromorphic computing projects demonstrate the effectiveness of co-design approaches. For instance, IBM's TrueNorth architecture achieved remarkable power efficiency through tight integration of hardware design choices and programming models specifically crafted for neuromorphic computation. Similarly, Intel's Loihi research chip incorporates hardware features that directly support software-level sparse coding and event-driven processing paradigms.

Hardware components in neuromorphic systems can be specifically tailored to support event-driven processing, which inherently consumes less power by activating circuits only when necessary. Specialized memory hierarchies that minimize data movement between processing units and memory banks significantly reduce energy consumption, as data transfer operations typically account for a substantial portion of power usage in computing systems.

Software frameworks designed for neuromorphic hardware must implement sparse coding techniques and event-based algorithms that leverage the asynchronous nature of these systems. By encoding information in the timing of spikes rather than continuous signal values, these algorithms naturally align with the power-saving mechanisms built into neuromorphic hardware.

Dynamic power management strategies represent another crucial aspect of hardware-software co-design. These strategies include adaptive clock gating, voltage scaling, and selective activation of neural circuits based on computational demands. Software can intelligently control these hardware features by analyzing workload patterns and adjusting system parameters accordingly.
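A software-side policy of this kind can be sketched as a small governor that picks an operating point per core from its recent spike count. The thresholds and the relative voltage and clock values in `LEVELS` are hypothetical, not taken from any real chip; the power estimate uses the standard approximation that dynamic CMOS power scales with voltage squared times frequency.

```python
# Hypothetical operating points:
# (max spikes per window, relative voltage, relative clock)
LEVELS = [
    (0, 0.0, 0.0),      # no activity: core gated off
    (100, 0.6, 0.25),   # light load: low voltage, slow clock
    (1000, 0.8, 0.5),   # moderate load
]
FULL = (1.0, 1.0)       # heavy load: nominal voltage and clock


def choose_operating_point(spikes_in_window):
    """Return (voltage, clock) for the lowest level that covers the load."""
    for max_spikes, voltage, clock in LEVELS:
        if spikes_in_window <= max_spikes:
            return voltage, clock
    return FULL


def relative_dynamic_power(voltage, clock):
    """Dynamic power relative to nominal, using P proportional to V^2 * f."""
    return voltage ** 2 * clock


for load in (0, 50, 500, 5000):
    v, f = choose_operating_point(load)
    print(load, (v, f), round(relative_dynamic_power(v, f), 3))
```

Because voltage enters quadratically, even a modest voltage reduction during quiet periods lowers dynamic power noticeably, which is why workload-aware control of these hardware features matters.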

Environmental Impact and Sustainability Benefits

Neuromorphic computing systems offer significant environmental benefits through their drastically reduced power consumption compared to conventional computing architectures. The brain-inspired design fundamentally changes how computational tasks are approached, resulting in energy efficiency improvements of up to 1000x for certain applications. This translates directly into reduced carbon emissions from data centers and computing infrastructure. These facilities currently account for approximately 2% of global electricity consumption, a share that some projections put at 8% by 2030 without intervention.

The sustainability advantages extend beyond direct energy savings. By enabling edge computing with minimal power requirements, neuromorphic systems reduce the need for data transmission to centralized servers, further decreasing network infrastructure energy demands. This distributed computing approach minimizes the carbon footprint associated with data movement, which can represent up to 60% of total energy costs in traditional computing paradigms.

Material resource conservation represents another critical environmental benefit. Neuromorphic chips typically require less silicon and rare earth elements per computational unit compared to conventional processors. Their longer operational lifespan due to lower heat generation further reduces electronic waste, addressing a growing environmental concern as global e-waste exceeds 50 million metric tons annually.

Water conservation is an often-overlooked sustainability advantage. Traditional semiconductor manufacturing and data center cooling systems consume vast quantities of water; a single conventional chip fabrication facility can use 2-4 million gallons daily. Neuromorphic computing's reduced thermal output and potentially simplified manufacturing processes could significantly reduce water usage throughout the technology lifecycle.

The environmental benefits multiply with widespread adoption across IoT devices, since each per-device saving is repeated across the whole installed base. With some projections indicating over 75 billion connected devices by 2025, implementing neuromorphic solutions could prevent megatons of carbon emissions while extending battery life and reducing the frequency of device replacement and the associated manufacturing impacts.

From a circular economy perspective, neuromorphic computing aligns with sustainability principles by enabling more efficient use of existing resources. The technology's ability to perform complex computations with minimal energy input represents a paradigm shift toward computing systems that respect planetary boundaries while meeting growing computational demands.
