Neuromorphic Computing: Signal Processing vs Traditional

SEP 5, 2025 · 9 MIN READ

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. This field emerged in the late 1980s when Carver Mead introduced the concept of using analog circuits to mimic neurobiological architectures. Since then, neuromorphic computing has evolved through several distinct phases, each marked by significant technological breakthroughs and shifting objectives.

The initial phase (1980s-1990s) focused primarily on creating electronic circuits that could emulate basic neural functions. These early systems demonstrated the potential for implementing brain-inspired computation but were limited by the available fabrication technologies and theoretical understanding of neural processes. The primary objective during this period was proof-of-concept: demonstrating that silicon-based systems could indeed implement neural-like computation.

The second phase (2000s-early 2010s) witnessed the emergence of more sophisticated neuromorphic architectures as advances in VLSI technology enabled the integration of more complex neural models. During this period, research objectives expanded beyond basic emulation to include energy efficiency, as conventional computing architectures began facing significant power constraints. Projects like IBM's TrueNorth and the European Human Brain Project established ambitious goals for large-scale neural simulation.

The current phase (mid-2010s-present) has been characterized by a convergence of neuromorphic computing with machine learning and artificial intelligence. The field has increasingly focused on practical applications, particularly in signal processing domains where traditional computing architectures face efficiency challenges. Modern neuromorphic systems aim to leverage the inherent advantages of brain-inspired computation—parallelism, event-driven processing, and co-located memory and computation—to overcome the von Neumann bottleneck that plagues conventional architectures.

Looking forward, the evolution of neuromorphic computing is likely to be driven by three key objectives. The first is achieving greater energy efficiency for edge computing applications, where power constraints are particularly stringent. The second is developing learning algorithms that fully exploit the unique characteristics of neuromorphic hardware. The third is creating scalable architectures that approach the complexity of biological neural systems while keeping power requirements manageable.

The ultimate goal of neuromorphic computing is not merely to replicate biological neural systems but to extract the computational principles that make the brain so remarkably efficient at certain tasks—particularly signal processing—and implement these principles in ways that complement traditional computing approaches. This represents a fundamental shift from the deterministic, sequential processing model that has dominated computing for decades toward a more parallel, adaptive, and energy-efficient paradigm.

Market Demand for Brain-Inspired Computing Solutions

The neuromorphic computing market is growing, driven by increasing demand for brain-inspired computing solutions across multiple sectors. One market projection put the global neuromorphic computing market at $8.9 billion by 2025, a compound annual growth rate of approximately 49% from 2020, although published estimates vary considerably. This growth is fueled by the need for more efficient computing architectures that can handle complex signal processing tasks while consuming minimal power.

The primary market demand stems from applications requiring real-time signal processing capabilities, particularly in edge computing environments where traditional computing architectures face significant limitations. Industries such as autonomous vehicles, robotics, and advanced surveillance systems are actively seeking neuromorphic solutions that can process sensory data with the efficiency and adaptability characteristic of biological neural systems.

Healthcare represents another substantial market segment, with growing demand for neuromorphic systems capable of processing complex biological signals for applications ranging from brain-computer interfaces to early disease detection systems. The ability of neuromorphic architectures to handle noisy, incomplete data makes them particularly valuable for medical diagnostic tools and personalized medicine applications.

Telecommunications and consumer electronics industries are increasingly investing in neuromorphic technologies to enhance signal processing in next-generation devices. The demand is particularly strong for solutions that can efficiently handle natural language processing, computer vision, and other AI-intensive tasks directly on mobile and IoT devices without relying on cloud computing resources.

Financial services and cybersecurity sectors represent emerging markets for neuromorphic computing, with growing interest in systems capable of real-time anomaly detection and pattern recognition in complex data streams. These applications benefit significantly from the parallel processing capabilities and energy efficiency of neuromorphic architectures compared to traditional computing approaches.

Defense and aerospace industries are making substantial investments in neuromorphic technologies for applications ranging from autonomous drones to advanced radar systems. The demand in these sectors is driven by the need for computing systems that can operate in resource-constrained environments while processing multiple sensory inputs simultaneously.

Despite this growing demand, market research indicates several barriers to widespread adoption, including the need for specialized programming paradigms, limited software ecosystems, and challenges in integrating neuromorphic components with existing systems. These factors have created a significant market opportunity for companies that can deliver comprehensive neuromorphic solutions addressing these integration challenges.

Signal Processing Challenges in Neuromorphic Systems

Neuromorphic computing systems face signal processing challenges that differentiate them from traditional computing architectures. The fundamental difference lies in how these systems process information: they mimic the brain's neural networks rather than following the sequential von Neumann model. This paradigm shift introduces several significant signal processing hurdles.

The primary challenge is converting conventional digital or analog signals into spike-based representations that neuromorphic hardware can use. Traditional signal processing operates on precise numerical values, whereas neuromorphic systems encode information in spike timing and firing rates. This conversion requires specialized encoding schemes such as temporal coding, rate coding, or population coding. Each scheme makes different trade-offs between information density, energy efficiency, and computational complexity.
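The following minimal sketch illustrates two of the encoding schemes named above. It converts a value normalized to [0, 1] into a spike train using rate coding, approximated here by a Bernoulli process per time step. It also converts the same value into a single spike time using latency (time-to-first-spike) coding. The function names, the normalization and the linear latency mapping are illustrative assumptions and do not correspond to any particular chip's interface.

```python
import random

def rate_encode(x, n_steps, seed=0):
    """Rate code: emit a spike at each time step with probability x.

    Assumes x is already normalized to [0, 1]; a per-step Bernoulli process
    is a common discrete-time stand-in for Poisson firing.
    """
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must be normalized to [0, 1]")
    rng = random.Random(seed)
    return [1 if rng.random() < x else 0 for _ in range(n_steps)]

def rate_decode(spikes):
    """Estimate x as the fraction of time steps that contain a spike."""
    return sum(spikes) / len(spikes)

def latency_encode(x, t_max):
    """Latency code: larger values fire earlier; x == 0 never fires (None)."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must be normalized to [0, 1]")
    return None if x == 0.0 else (1.0 - x) * t_max

def latency_decode(t, t_max):
    """Invert the linear latency mapping; a missing spike decodes to 0."""
    return 0.0 if t is None else 1.0 - t / t_max

if __name__ == "__main__":
    spikes = rate_encode(0.7, n_steps=1000)
    print("rate code:", sum(spikes), "spikes ->", rate_decode(spikes))
    t = latency_encode(0.7, t_max=100.0)
    print("latency code: 1 spike at t =", t, "->", latency_decode(t, 100.0))
```

The rate code needs hundreds of spikes to estimate the value to a precision that the latency code reaches with one spike. However, the single latency spike carries the whole value, so any error in its timing translates directly into a value error.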

Temporal precision presents another critical challenge. Neuromorphic systems must maintain precise timing relationships between spikes to preserve information integrity. Environmental factors, component variations, and noise can disrupt these timing relationships, potentially leading to computational errors. Unlike traditional systems with discrete clock cycles, neuromorphic computing relies on asynchronous event-based processing, making timing management considerably more complex.
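The following sketch makes this timing sensitivity concrete. It uses a simple linear latency code, which is an assumption chosen for illustration, and adds Gaussian jitter to each spike time. Because the value is carried by when a single spike occurs, the decoded error grows in proportion to the timing noise. For a coding window t_max, jitter with standard deviation σ produces a value error of roughly σ / t_max. The window length, value range and trial count are hypothetical.

```python
import math
import random

def latency_encode(x, t_max):
    """Linear time-to-first-spike code: x in [0, 1] maps to a time in [0, t_max]."""
    return (1.0 - x) * t_max

def latency_decode(t, t_max):
    """Invert the code, clamping spike times that jitter outside the window."""
    t = min(max(t, 0.0), t_max)
    return 1.0 - t / t_max

def rms_decode_error(jitter_std, t_max=10.0, n_trials=2000, seed=1):
    """Monte Carlo estimate of the RMS value error caused by Gaussian spike-time jitter."""
    rng = random.Random(seed)
    total_sq = 0.0
    for _ in range(n_trials):
        x = rng.uniform(0.05, 0.95)
        t = latency_encode(x, t_max) + rng.gauss(0.0, jitter_std)
        err = latency_decode(t, t_max) - x
        total_sq += err * err
    return math.sqrt(total_sq / n_trials)

if __name__ == "__main__":
    for jitter in (0.0, 0.05, 0.1, 0.5):
        print(f"jitter std {jitter:>4} -> RMS value error {rms_decode_error(jitter):.4f}")
```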

Power consumption optimization remains a persistent challenge despite neuromorphic computing's inherent energy efficiency advantages. Signal processing operations must be redesigned to leverage sparse, event-driven computation while maintaining functional accuracy. This requires fundamentally rethinking traditional DSP algorithms that assume continuous availability of complete data sets.
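One common way to obtain sparse, event-driven input from a conventionally sampled signal is send-on-delta (level-crossing) encoding. An event is emitted only when the signal has moved by at least a fixed step since the last event. In the illustrative sketch below, the step size and test signal are arbitrary assumptions. A mostly quiet signal produces far fewer events than samples, and the reconstruction error stays below one step.

```python
import math

def send_on_delta(samples, delta):
    """Return (sample_index, +1 or -1) events each time the signal crosses
    another level spaced `delta` apart from the last transmitted level."""
    if delta <= 0:
        raise ValueError("delta must be positive")
    events = []
    ref = samples[0]  # the receiver is assumed to know the initial level
    for i, x in enumerate(samples[1:], start=1):
        while x - ref >= delta:
            ref += delta
            events.append((i, +1))
        while ref - x >= delta:
            ref -= delta
            events.append((i, -1))
    return events

def reconstruct(events, x0, delta, n_samples):
    """Rebuild a staircase approximation of the signal from the events."""
    out, level, j = [], x0, 0
    for i in range(n_samples):
        while j < len(events) and events[j][0] == i:
            level += events[j][1] * delta
            j += 1
        out.append(level)
    return out

if __name__ == "__main__":
    # Quiet signal with a short oscillating burst in the middle.
    signal = [0.0] * 400 + [math.sin(2 * math.pi * k / 50) for k in range(100)] + [0.0] * 500
    events = send_on_delta(signal, delta=0.1)
    approx = reconstruct(events, signal[0], 0.1, len(signal))
    print(len(signal), "samples ->", len(events), "events")
    print("max error:", max(abs(a - b) for a, b in zip(signal, approx)))
```

Downstream processing then runs only when events arrive, which is the sparse, event-driven behavior that redesigned DSP algorithms aim to exploit.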

Noise management in neuromorphic systems differs significantly from traditional approaches. While biological neural networks demonstrate remarkable resilience to noise, engineered neuromorphic systems must explicitly incorporate noise-tolerance mechanisms. This includes implementing adaptive thresholds, redundant pathways, and stochastic computing elements that can function effectively despite signal variability.
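The following sketch illustrates the adaptive-threshold mechanism mentioned above using a leaky integrate-and-fire (LIF) neuron. The neuron raises its firing threshold after every spike and lets it relax back toward a baseline. All parameter values are hypothetical and in arbitrary units, and forward-Euler integration is a simplifying assumption. Under the same sustained, noisy drive, the adaptive neuron fires less often than a fixed-threshold neuron, so a persistent input does not produce an unbounded stream of events.

```python
import random

def lif_spike_times(inputs, dt=1.0, tau_m=20.0, theta0=1.0,
                    theta_jump=0.5, tau_theta=100.0):
    """Simulate a LIF neuron with an adaptive threshold (forward Euler).

    The membrane potential v leaks toward 0 and integrates the input. After a
    spike, v resets to 0 and the threshold theta jumps by theta_jump, then
    decays back toward theta0 with time constant tau_theta. Setting
    theta_jump=0 gives a conventional fixed-threshold LIF neuron.
    """
    v, theta = 0.0, theta0
    spike_times = []
    for step, i_in in enumerate(inputs):
        v += dt * (-v / tau_m + i_in)
        theta += dt * (theta0 - theta) / tau_theta
        if v >= theta:
            spike_times.append(step)
            v = 0.0
            theta += theta_jump
    return spike_times

if __name__ == "__main__":
    rng = random.Random(0)
    drive = [0.1 + rng.gauss(0.0, 0.05) for _ in range(1000)]  # noisy constant input
    fixed = lif_spike_times(drive, theta_jump=0.0)
    adaptive = lif_spike_times(drive)
    print("fixed threshold:", len(fixed), "spikes; adaptive:", len(adaptive), "spikes")
```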

Learning and adaptation mechanisms present unique signal processing challenges. Neuromorphic systems typically implement on-chip learning through mechanisms like spike-timing-dependent plasticity (STDP), which requires continuous monitoring and modification of synaptic weights based on temporal correlations between input and output spikes. These processes demand specialized circuitry for weight updates while maintaining system stability.
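The STDP weight update itself can be written compactly. Let Δt = t_post − t_pre. For Δt > 0 (pre before post), the weight grows by A+ · exp(−Δt / τ+). For Δt < 0 (post before pre), it shrinks by A− · exp(Δt / τ−). The sketch below uses this classic pair-based exponential rule with all-to-all spike pairing and hard weight bounds. The amplitudes and time constants are hypothetical. Hardware implementations use many variants, such as nearest-neighbor pairing, trace-based updates and reduced-precision weights.

```python
import math

def stdp_dw(delta_t, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for delta_t = t_post - t_pre (ms).

    Pre-before-post (delta_t > 0) potentiates; post-before-pre depresses.
    Both effects decay exponentially with the spike-time difference.
    """
    if delta_t > 0:
        return a_plus * math.exp(-delta_t / tau_plus)
    if delta_t < 0:
        return -a_minus * math.exp(delta_t / tau_minus)
    return 0.0

def apply_stdp(w, pre_times, post_times, w_min=0.0, w_max=1.0, **rule_params):
    """Sum the contribution of every pre/post spike pair, then clip the weight."""
    dw = sum(stdp_dw(t_post - t_pre, **rule_params)
             for t_pre in pre_times for t_post in post_times)
    return min(max(w + dw, w_min), w_max)

if __name__ == "__main__":
    print(apply_stdp(0.5, pre_times=[10.0], post_times=[15.0]))  # causal pair: weight grows
    print(apply_stdp(0.5, pre_times=[15.0], post_times=[10.0]))  # anti-causal: weight shrinks
```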

Hardware constraints further complicate signal processing in neuromorphic systems. Limited precision in synaptic weights, finite neuron counts, and restricted connectivity patterns constrain implementation options. Engineers must develop signal processing techniques that remain effective within these hardware limitations while preserving computational capabilities.
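The following sketch illustrates the effect of limited synaptic precision. It quantizes floating-point weights to signed n-bit levels using a uniform, symmetric scheme and reports the worst-case rounding error. The quantization scheme and clipping range are assumptions chosen for illustration; individual chips define their own weight formats.

```python
def quantize_weights(weights, n_bits, w_max):
    """Uniformly quantize weights to signed n_bits levels spanning [-w_max, w_max].

    With n_bits bits there are 2**(n_bits - 1) - 1 positive levels, the same
    number of negative levels, and zero. Out-of-range weights are clipped.
    """
    if n_bits < 2:
        raise ValueError("need at least 2 bits for signed weights")
    levels = 2 ** (n_bits - 1) - 1
    step = w_max / levels
    return [round(min(max(w, -w_max), w_max) / step) * step for w in weights]

def max_abs_error(original, quantized):
    """Largest absolute difference between original and quantized weights."""
    return max(abs(a - b) for a, b in zip(original, quantized))

if __name__ == "__main__":
    weights = [i / 100 - 1.0 for i in range(201)]  # evenly spaced in [-1, 1]
    for bits in (2, 4, 8):
        q = quantize_weights(weights, bits, w_max=1.0)
        print(f"{bits}-bit: {len(set(q))} distinct values, "
              f"max error {max_abs_error(weights, q):.4f}")
```

The worst-case error is half a quantization step, so each additional bit roughly halves it. Algorithms that rely on fine weight differences therefore need to tolerate errors of this size.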

Current Neuromorphic Signal Processing Implementations

01 Neuromorphic hardware architectures for signal processing

Neuromorphic computing systems implement hardware architectures that mimic the structure and function of biological neural networks for efficient signal processing. These architectures include specialized circuits, memristive devices, and spiking neural networks that enable parallel processing and low power consumption. By applying brain-inspired computational principles, these designs handle complex data patterns efficiently. They also process complex signals in real time while maintaining energy efficiency, which suits them to applications that require fast response times or must operate in resource-constrained environments. Related approaches include:

- Spiking neural networks for temporal signal processing: Spiking neural networks (SNNs) are employed in neuromorphic computing for processing time-varying signals. These networks use discrete spikes to transmit information, similar to biological neurons, making them particularly effective for temporal signal processing tasks. SNNs can efficiently encode and process temporal patterns in data streams, enabling applications in speech recognition, motion detection, and other time-series analysis tasks with significantly reduced computational requirements compared to traditional approaches.

- Memristive devices for neuromorphic signal processing: Memristive devices serve as key components in neuromorphic computing systems for signal processing applications. These devices can emulate synaptic behavior by changing their resistance based on the history of applied voltage or current, enabling efficient implementation of neural network weights. The non-volatile nature of memristors allows for persistent storage of learned patterns, while their analog operation facilitates continuous signal processing with reduced power consumption compared to digital implementations.

- On-chip learning and adaptation for signal processing: Neuromorphic computing systems incorporate on-chip learning capabilities that allow them to adapt to changing signal characteristics in real-time. These systems implement various learning algorithms such as spike-timing-dependent plasticity (STDP) and backpropagation to continuously update their internal parameters based on input signals. The ability to learn and adapt on-chip enables these systems to process signals in dynamic environments, making them suitable for applications like adaptive filtering, pattern recognition, and anomaly detection in sensor networks.

- Event-based sensing and processing techniques: Event-based sensing and processing is a fundamental approach in neuromorphic computing that focuses on detecting and processing significant changes in signals rather than sampling at fixed intervals. This approach mimics the asynchronous nature of biological sensory systems, resulting in sparse data representation and efficient processing. Event-based techniques are particularly effective for processing visual and auditory signals, enabling applications such as high-speed object tracking, gesture recognition, and acoustic event detection with minimal computational resources and power consumption.
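The in-memory computing principle behind memristive crossbars can be sketched numerically. Suppose each weight is stored as a conductance G and each input is applied as a voltage V. Ohm's law and Kirchhoff's current law then make each column current the dot product I_j = Σ_i V_i · G_ij. Conductances cannot be negative, so signed weights are commonly mapped onto a differential pair of devices. The sketch below is idealized: it ignores wire resistance, device variability and nonlinearity, and its conductance range is hypothetical.

```python
def weights_to_conductance_pairs(W, g_min, g_max):
    """Map signed weights (rows = inputs, cols = outputs) to differential
    conductance pairs (G_pos, G_neg), both within [g_min, g_max]."""
    w_abs_max = max(abs(w) for row in W for w in row) or 1.0
    scale = (g_max - g_min) / w_abs_max
    G_pos = [[g_min + scale * max(w, 0.0) for w in row] for row in W]
    G_neg = [[g_min + scale * max(-w, 0.0) for w in row] for row in W]
    return G_pos, G_neg, scale

def crossbar_mvm(V, G):
    """Ideal column currents: I_j = sum_i V_i * G_ij (Ohm + Kirchhoff)."""
    return [sum(V[i] * G[i][j] for i in range(len(V))) for j in range(len(G[0]))]

def differential_readout(V, G_pos, G_neg, scale):
    """Subtract paired column currents (g_min cancels) and undo the scaling."""
    I_pos = crossbar_mvm(V, G_pos)
    I_neg = crossbar_mvm(V, G_neg)
    return [(ip - ineg) / scale for ip, ineg in zip(I_pos, I_neg)]

if __name__ == "__main__":
    W = [[0.5, -1.0], [2.0, 0.25]]  # rows = inputs, columns = outputs
    V = [1.0, 0.5]                  # input voltages (arbitrary units)
    G_pos, G_neg, scale = weights_to_conductance_pairs(W, g_min=1e-6, g_max=1e-4)
    print(differential_readout(V, G_pos, G_neg, scale))  # approximately [1.5, -0.875]
```

The multiply-accumulate happens in the array itself, so the weights never travel to a separate processor. This is the data-movement saving described above.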

02 Spiking neural networks for temporal signal processing

Spiking neural networks (SNNs) are employed in neuromorphic computing for processing time-dependent signals. These networks use discrete spikes to transmit information, similar to biological neurons, making them particularly effective for temporal signal processing tasks. SNNs can efficiently encode and process time-varying data, enabling applications in speech recognition, motion detection, and other dynamic signal processing domains while maintaining energy efficiency through event-driven computation.

03 Memristive devices for neuromorphic signal processing

Memristive devices serve as key components in neuromorphic computing systems for signal processing applications. These devices can emulate synaptic behavior by changing their resistance based on the history of applied voltage or current, enabling efficient implementation of neural network weights. Memristors allow for in-memory computing, reducing the energy costs associated with data movement between processing and memory units, which is particularly beneficial for complex signal processing tasks requiring substantial computational resources.

04 On-chip learning algorithms for adaptive signal processing

Neuromorphic computing systems implement on-chip learning algorithms that enable adaptive signal processing capabilities. These algorithms allow the system to modify its parameters in response to input signals, similar to how biological neural systems learn from experience. On-chip learning facilitates real-time adaptation to changing signal characteristics, making these systems particularly effective for applications requiring continuous learning and adaptation such as sensor fusion, pattern recognition in noisy environments, and autonomous signal classification.

05 Energy-efficient signal processing through neuromorphic computing

Neuromorphic computing offers significant energy efficiency advantages for signal processing applications compared to conventional computing approaches. By mimicking the brain's sparse and event-driven processing, neuromorphic systems can perform complex signal processing tasks while consuming minimal power. This energy efficiency is achieved through specialized circuit designs, asynchronous processing, and optimized data representation methods that minimize unnecessary computations, making neuromorphic computing particularly suitable for edge devices and other power-constrained signal processing applications.
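Counting operations gives a rough view of why sparse, event-driven processing saves work. In the hypothetical layer below, a clock-driven implementation performs a multiply-accumulate for every input-output pair at every time step. An event-driven implementation performs one synaptic update per spike for each of the spike's fan-out targets. Operation counts are only a proxy for energy, because the cost per operation differs between architectures. The layer size and the 2% activity level are assumptions.

```python
import random

def dense_macs(n_inputs, n_outputs, n_steps):
    """Clock-driven: every input contributes to every output at every step."""
    return n_inputs * n_outputs * n_steps

def event_driven_synops(spike_trains, n_outputs):
    """Event-driven: each input spike triggers one update per fan-out target."""
    return sum(sum(train) for train in spike_trains) * n_outputs

def random_spike_trains(n_inputs, n_steps, activity, seed=0):
    """Independent Bernoulli spike trains with the given per-step firing probability."""
    rng = random.Random(seed)
    return [[1 if rng.random() < activity else 0 for _ in range(n_steps)]
            for _ in range(n_inputs)]

if __name__ == "__main__":
    n_in, n_out, n_steps = 100, 50, 1000
    trains = random_spike_trains(n_in, n_steps, activity=0.02)
    dense = dense_macs(n_in, n_out, n_steps)
    sparse = event_driven_synops(trains, n_out)
    print(f"dense MACs: {dense}, event-driven synaptic ops: {sparse}, "
          f"ratio {dense / sparse:.1f}x")
```

The ratio is roughly the inverse of the activity level, so the advantage shrinks as inputs become busier.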

Leading Organizations in Neuromorphic Computing Research

Neuromorphic computing is currently in an early growth phase, and the market is expected to expand as signal processing applications demonstrate advantages over traditional computing. By some estimates, the global market will reach $8-10 billion by 2028, growing at a CAGR of approximately 25%. Technologically, the field is transitioning from research to commercial applications, with maturity varying across companies. Industry leaders like IBM, Intel, and Samsung are developing comprehensive neuromorphic platforms, while specialized players such as Syntiant, Polyn Technology, and Lingxi Technology focus on edge AI applications. University collaborations with Tsinghua, Peking, and Tianjin universities are accelerating innovation, particularly in signal processing applications where neuromorphic approaches offer significant power efficiency and real-time processing advantages over traditional computing architectures.

International Business Machines Corp.

Technical Solution: IBM's neuromorphic computing approach is embodied in its TrueNorth architecture, a radical departure from conventional computing paradigms for signal processing. TrueNorth implements a non-von Neumann architecture with co-located memory and processing in neurosynaptic cores. Each chip contains 4,096 cores with 1 million digital neurons and 256 million synapses[3]. For signal processing applications, the chip operates at very low power (about 70 mW) while delivering 46 giga-synaptic operations per second per watt, making it approximately 1,000 times more energy-efficient than traditional architectures for certain workloads[4]. IBM has demonstrated TrueNorth in real-time video analysis, audio processing, and complex pattern recognition tasks. The architecture's event-driven processing model is particularly suited to sensor data because computation, and most dynamic power consumption, occurs only when spikes arrive. Traditional systems, by contrast, continuously sample at fixed intervals. IBM has also developed programming frameworks such as Corelet to abstract the complexity of neuromorphic programming.
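The headline TrueNorth figures are consistent with the usual description of each core as 256 neurons with a 256 × 256 synaptic crossbar. The back-of-the-envelope check below assumes that the 46 GSOPS/W efficiency and the roughly 70 mW power figure refer to the same workload, which the text does not state.

```latex
\begin{aligned}
N_{\text{neurons}}  &= 4096 \times 256 = 1{,}048{,}576 \approx 1\ \text{million} \\
N_{\text{synapses}} &= 4096 \times (256 \times 256) = 268{,}435{,}456 = 2^{28} = 256 \times 2^{20} \\
\text{throughput}   &\approx 46\ \tfrac{\text{GSOPS}}{\text{W}} \times 0.070\ \text{W} \approx 3.2\ \text{GSOPS}
\end{aligned}
```

The synapse count is 256 × 2^20 rather than 256 × 10^6, which explains why it is quoted as "256 million".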

Strengths: Exceptional energy efficiency (>1000x improvement for certain tasks); Highly scalable architecture; Fault-tolerant design with redundant components. Weaknesses: Limited precision compared to traditional floating-point architectures; Requires rethinking algorithms to fit the neuromorphic paradigm; Higher initial development complexity for applications traditionally designed for von Neumann architectures.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung's neuromorphic computing initiative focuses on developing brain-inspired hardware for efficient signal processing in mobile and IoT devices. Their approach centers on neuromorphic processing units (NPUs) that implement spiking neural networks using analog/digital hybrid circuits. Samsung's architecture features memristor-based synaptic elements that enable massively parallel, low-power computation[7]. For signal processing applications, Samsung has demonstrated systems that achieve up to 100x improvement in energy efficiency compared to traditional digital signal processors when handling continuous sensor data streams[8]. Their neuromorphic solution excels at processing temporal data patterns, making it particularly effective for applications like voice recognition, gesture detection, and health monitoring. Samsung has integrated their neuromorphic technology with their advanced semiconductor manufacturing capabilities, allowing for highly miniaturized implementations suitable for wearable and mobile devices. Their research also explores 3D stacking of neuromorphic elements to increase neural density while maintaining thermal efficiency, critical for complex signal processing tasks in compact form factors.

Strengths: Excellent integration potential with existing mobile and IoT ecosystems; Strong manufacturing capabilities for scaled production; Highly optimized for power-constrained environments. Weaknesses: Less established research presence in neuromorphic computing compared to some competitors; Currently more focused on specific application domains rather than general-purpose computing; Relatively early in commercialization journey for this technology.

Breakthrough Technologies in Spiking Neural Networks

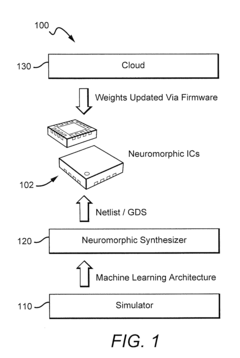

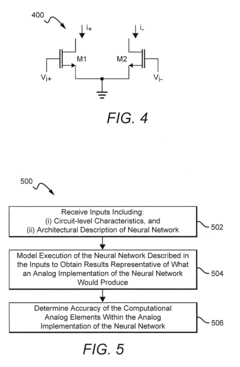

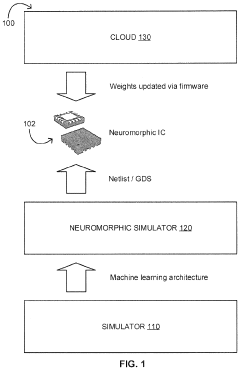

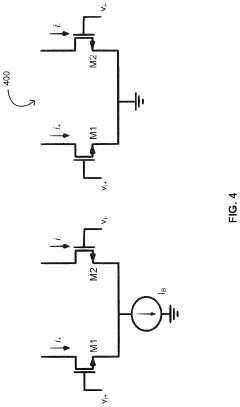

Systems And Methods For Determining Circuit-Level Effects On Classifier Accuracy

Patent US20190065962A1 (Active)

Innovation

- Neuromorphic chips that simulate 'silicon' neurons, which process information in parallel through bursts of electric current at non-uniform intervals. The patent also covers systems and methods that model how circuit-level characteristics such as thermal noise and weight inaccuracies affect a neural network, so that its performance can be optimized.
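The patent's specific modeling method is not described here. The general idea of estimating how circuit-level weight inaccuracies affect classifier accuracy can, however, be sketched with a Monte Carlo experiment. In this hypothetical illustration, a fixed linear classifier is applied to synthetic two-class data. Each weight is perturbed by multiplicative Gaussian noise that stands in for device mismatch. The dataset, noise model and parameters are all assumptions.

```python
import random

def make_dataset(n, seed=0):
    """Two Gaussian classes centred at (-1, -1) and (1, 1) (synthetic, for illustration)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.choice((0, 1))
        c = 1.0 if label == 1 else -1.0
        data.append(((c + rng.gauss(0.0, 0.7), c + rng.gauss(0.0, 0.7)), label))
    return data

def accuracy(w, b, data):
    """Fraction of points classified correctly by the rule w . x + b > 0."""
    correct = sum(1 for (x1, x2), label in data
                  if (w[0] * x1 + w[1] * x2 + b > 0) == (label == 1))
    return correct / len(data)

def mean_accuracy_under_weight_noise(w, b, data, rel_std, n_trials=200, seed=1):
    """Average accuracy when each weight is scaled by (1 + N(0, rel_std))."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_trials):
        w_noisy = [wi * (1.0 + rng.gauss(0.0, rel_std)) for wi in w]
        total += accuracy(w_noisy, b, data)
    return total / n_trials

if __name__ == "__main__":
    data = make_dataset(500)
    w, b = [1.0, 1.0], 0.0  # ideal weights for this symmetric toy problem
    for rel_std in (0.0, 0.1, 0.3, 1.0):
        acc = mean_accuracy_under_weight_noise(w, b, data, rel_std)
        print(f"weight noise {rel_std:.1f}: mean accuracy {acc:.3f}")
```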

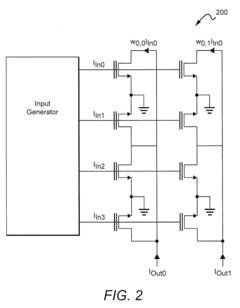

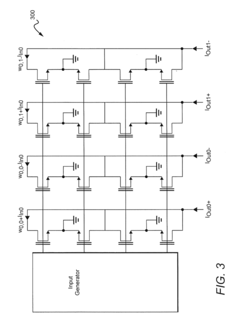

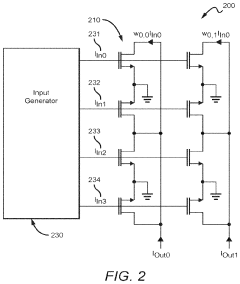

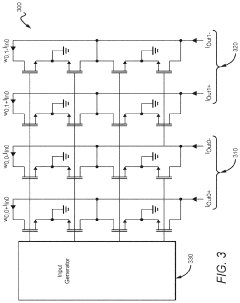

Systems and Methods of Sparsity Exploiting

Patent US20240095510A1 (Inactive)

Innovation

- A neuromorphic integrated circuit that implements a multi-layer neural network in an analog multiplier array. Its two-quadrant multipliers are wired to ground and draw negligible current when the input signal or the weight value is zero, so sparsity directly reduces power consumption. A training method drives weight values toward zero to increase that sparsity.
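The patent summary does not specify its training algorithm. As an illustrative stand-in, the sketch below uses L1-regularized least squares trained with ISTA, which takes a gradient step and then applies soft-thresholding. This standard technique drives many weights to exactly zero. The synthetic data, the regularization strength `lam` and the hypothetical `count_active_multipliers` helper are assumptions. Following the patent summary, the helper treats a multiplier as drawing current only when both its input and its weight are nonzero.

```python
import random

def soft_threshold(x, thr):
    """Proximal operator of the L1 penalty: shrink toward zero, clamp small values to 0."""
    if x > thr:
        return x - thr
    if x < -thr:
        return x + thr
    return 0.0

def train_sparse_linear(X, y, lam, lr=0.05, n_iters=500):
    """ISTA for min_w (1/2n)||Xw - y||^2 + lam * ||w||_1."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(n_iters):
        residuals = [sum(wj * xj for wj, xj in zip(w, xi)) - yi for xi, yi in zip(X, y)]
        grad = [sum(r * xi[j] for r, xi in zip(residuals, X)) / n for j in range(d)]
        w = [soft_threshold(wj - lr * gj, lr * lam) for wj, gj in zip(w, grad)]
    return w

def count_active_multipliers(w, x):
    """Hypothetical proxy: a multiplier draws current only if input and weight are both nonzero."""
    return sum(1 for wj, xj in zip(w, x) if wj != 0.0 and xj != 0.0)

def make_data(n=200, d=10, seed=0):
    """Synthetic regression data in which only the first two inputs matter."""
    rng = random.Random(seed)
    w_true = [2.0, -1.5] + [0.0] * (d - 2)
    X = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n)]
    y = [sum(a * b for a, b in zip(w_true, xi)) + rng.gauss(0.0, 0.1) for xi in X]
    return X, y

if __name__ == "__main__":
    X, y = make_data()
    w_dense = train_sparse_linear(X, y, lam=0.0)
    w_sparse = train_sparse_linear(X, y, lam=0.1)
    print("active multipliers without L1:", count_active_multipliers(w_dense, X[0]))
    print("active multipliers with L1:   ", count_active_multipliers(w_sparse, X[0]))
```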

Energy Efficiency Comparison with Traditional Computing

Neuromorphic computing systems show notable energy efficiency advantages over traditional computing architectures, particularly in signal processing applications. Neuromorphic chips typically operate in the milliwatt range while performing pattern recognition tasks that can require several watts on conventional processors. For instance, Intel has reported that its Loihi neuromorphic research chip solves certain sparse coding (LASSO) problems relevant to signal processing with more than 1,000x better energy-delay product than a conventional CPU-based solver.

This efficiency stems from the fundamental architectural differences between neuromorphic and von Neumann computing paradigms. Traditional computing architectures suffer from the "von Neumann bottleneck," where energy is predominantly consumed shuttling data between separate memory and processing units. In contrast, neuromorphic systems employ distributed memory-processing units that mimic biological neural networks, significantly reducing energy requirements for data movement operations.

Event-driven computation is another important energy-saving feature of neuromorphic systems. Traditional processors operate on fixed clock cycles, whereas neuromorphic circuits process information only when relevant signals arrive, much as biological neurons fire only when necessary. This largely avoids the continuous switching power of clock-driven architectures and yields substantial energy savings during periods of low computational activity.
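A simple first-order energy model makes the dependence on activity explicit. In the sketch below, a clock-driven processor pays a fixed energy per frame regardless of content. An event-driven processor pays a small static (leakage) power plus an energy cost per event. Every number is a hypothetical placeholder rather than a measured value for any chip. The point is the shape of the comparison: savings are largest at low activity and shrink, or even reverse, as the event rate rises.

```python
def clocked_energy_j(duration_s, frame_rate_hz, energy_per_frame_j):
    """Clock-driven: every frame is processed whether or not anything changed."""
    return duration_s * frame_rate_hz * energy_per_frame_j

def event_driven_energy_j(duration_s, static_power_w, n_events, energy_per_event_j):
    """Event-driven: static (leakage) power plus a cost for each event processed."""
    return duration_s * static_power_w + n_events * energy_per_event_j

if __name__ == "__main__":
    hour = 3600.0
    clocked = clocked_energy_j(hour, frame_rate_hz=100.0, energy_per_frame_j=1e-4)
    for events_per_s in (10, 1_000, 100_000):
        evd = event_driven_energy_j(hour, static_power_w=1e-4,
                                    n_events=events_per_s * hour,
                                    energy_per_event_j=1e-6)
        print(f"{events_per_s:>7} events/s: clocked {clocked:.2f} J, "
              f"event-driven {evd:.2f} J, ratio {clocked / evd:.1f}x")
```

With these placeholder values, the event-driven design wins by roughly 90x at 10 events/s but uses more energy than the clocked design at 100,000 events/s. The comparison therefore depends on the workload.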

Some published benchmarks report that, for specific signal processing tasks such as audio filtering and feature extraction, neuromorphic implementations consume 10-100x less energy than digital signal processor (DSP) counterparts. The advantage is most pronounced in applications that combine continuous monitoring with intermittent processing, such as always-on audio recognition systems.

The energy advantages extend to hardware implementation as well. Analog neuromorphic circuits use the physical properties of electronic components to perform computation, avoiding the energy cost of the analog-to-digital conversion that traditional systems require. Analog sensor inputs can therefore be processed directly, without energy-intensive conversion steps, further improving efficiency in signal processing applications.

However, these efficiency gains must be contextualized within specific application domains. While neuromorphic systems excel at pattern recognition and sensory processing tasks, they may not offer significant energy advantages for applications requiring precise numerical calculations or highly sequential processing. The energy efficiency comparison therefore remains highly dependent on the specific signal processing workload and deployment environment.

Hardware-Software Co-design Considerations

Neuromorphic computing systems require a fundamentally different approach to hardware-software co-design compared to traditional computing architectures. The integration of specialized neuromorphic hardware with appropriate software frameworks creates unique challenges and opportunities. When designing neuromorphic systems for signal processing applications, hardware considerations must account for the inherent parallelism, event-driven processing, and analog computation capabilities that mimic neural functions.

The hardware layer in neuromorphic systems typically consists of specialized circuits such as silicon neurons, synaptic elements, and memory structures that enable spike-based computation. These components must be designed to efficiently process temporal signals while maintaining low power consumption—a key advantage over traditional computing approaches. For signal processing applications, hardware designers must carefully balance analog precision with energy efficiency, as many neuromorphic implementations leverage analog computing principles to achieve performance gains.
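
The precision side of that trade-off can be illustrated by limiting how many distinct values a synapse can hold. The sketch below assumes uniform symmetric quantization as a stand-in for a synapse with a few distinguishable analog states; fewer levels would mean cheaper synapses in hardware, at the cost of a larger error in the weighted sum a neuron computes. The function names and parameters are hypothetical.

```python
import random


def quantize(weights: list[float], bits: int, w_max: float) -> list[float]:
    """Uniform symmetric quantization to 2**bits - 1 levels in [-w_max, w_max],
    a stand-in for a synapse that can hold only a few distinguishable states."""
    if bits < 2:
        raise ValueError("need at least 2 bits for a symmetric grid that includes 0")
    levels = 2 ** bits - 1
    step = 2 * w_max / (levels - 1)
    out = []
    for w in weights:
        w = max(-w_max, min(w_max, w))  # clip to the representable range
        out.append(round((w + w_max) / step) * step - w_max)
    return out


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


if __name__ == "__main__":
    rng = random.Random(0)
    w = [rng.uniform(-1.0, 1.0) for _ in range(64)]
    x = [rng.uniform(0.0, 1.0) for _ in range(64)]
    exact = dot(w, x)
    for bits in (2, 4, 8):
        approx = dot(quantize(w, bits, 1.0), x)
        print(f"{bits}-bit synapses: |error| = {abs(approx - exact):.4f}")
```

Each weight is off by at most half a quantization step, so the designer's question becomes how coarse a step the target signal processing task can tolerate.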

Software frameworks for neuromorphic computing require significant adaptation from traditional programming paradigms. Rather than sequential instruction execution, neuromorphic software must support event-driven processing models and spiking neural network representations. Languages and tools that facilitate the mapping of signal processing algorithms to spiking neural networks are essential for effective implementation. This includes specialized compilers that can translate conventional signal processing operations into spike-based equivalents optimized for the underlying neuromorphic hardware.
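
As one illustration of translating a conventional operation into a spike-based equivalent, the sketch below computes a weighted sum (the core of an FIR filter tap or a dense layer) using rate coding. This is an assumed technique chosen for clarity, not the method of any particular compiler; temporal and latency codes are common alternatives. Each input in [0, 1] becomes a deterministic spike train, each spike adds its synaptic weight to an accumulator, and dividing by the window length decodes the result.

```python
def rate_encode(x: float, timesteps: int) -> list[int]:
    """Deterministic integrate-and-fire rate encoder: a value in [0, 1] becomes a
    0/1 spike train whose spike count is within 1 of x * timesteps."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must be in [0, 1]")
    acc, train = 0.0, []
    for _ in range(timesteps):
        acc += x
        if acc >= 1.0:
            acc -= 1.0
            train.append(1)
        else:
            train.append(0)
    return train


def spiking_weighted_sum(weights: list[float], inputs: list[float], timesteps: int) -> float:
    """Event-driven weighted sum: each input spike adds its synaptic weight to the
    output accumulator; dividing by the window length decodes the rate."""
    trains = [rate_encode(x, timesteps) for x in inputs]
    acc = 0.0
    for t in range(timesteps):
        for w, train in zip(weights, trains):
            if train[t]:  # work happens only when a spike arrives
                acc += w
    return acc / timesteps


if __name__ == "__main__":
    w = [0.25, -0.5, 0.75, 0.1]
    x = [0.2, 0.9, 0.4, 0.6]
    exact = sum(a * b for a, b in zip(w, x))
    for T in (10, 100, 1000):
        print(T, spiking_weighted_sum(w, x, T), exact)
```

The error shrinks roughly as 1/T, which exposes the usual rate-coding trade-off: more precision costs a longer window and more spikes.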

The co-design process necessitates close collaboration between hardware and software teams to ensure optimal system performance. Interface standards between hardware and software layers must be carefully defined to allow efficient data transfer while preserving the temporal characteristics of signals. Additionally, simulation environments that accurately model both hardware behavior and software execution are critical for validating designs before physical implementation.
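
A widely used convention for hardware-software spike interfaces is the Address-Event Representation (AER), in which each spike is transmitted as the address of the neuron that fired, with timing carried either implicitly or as an explicit timestamp. The sketch below assumes a hypothetical 8-byte wire format (32-bit timestamp in microseconds plus 32-bit address); real AER links and chips define their own layouts. Carrying the timestamp with each event is what preserves the signal's temporal structure across the interface.

```python
import struct
from dataclasses import dataclass


@dataclass(frozen=True)
class AddressEvent:
    timestamp_us: int  # when the spike occurred, preserving temporal information
    address: int       # which neuron (or sensor pixel) emitted it


# Hypothetical wire format: little-endian unsigned 32-bit timestamp followed by an
# unsigned 32-bit address, 8 bytes per event.
_EVENT = struct.Struct("<II")


def pack_events(events: list[AddressEvent]) -> bytes:
    """Serialize events in order; struct.error is raised for out-of-range fields."""
    return b"".join(_EVENT.pack(e.timestamp_us, e.address) for e in events)


def unpack_events(payload: bytes) -> list[AddressEvent]:
    """Inverse of pack_events."""
    if len(payload) % _EVENT.size:
        raise ValueError("payload is not a whole number of events")
    return [AddressEvent(t, a) for t, a in _EVENT.iter_unpack(payload)]


if __name__ == "__main__":
    stream = [AddressEvent(10, 3), AddressEvent(12, 70000)]
    data = pack_events(stream)
    print(len(data), unpack_events(data))
```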

Performance evaluation metrics for neuromorphic systems differ substantially from traditional computing benchmarks. Whereas traditional benchmarks emphasize throughput and latency, neuromorphic designs must also be evaluated on energy per operation (often reported per synaptic operation), spike efficiency (how few spikes are needed to reach a result), and temporal precision. Co-design methodologies must incorporate these specialized metrics throughout the development process to ensure the resulting system meets application requirements.
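
The sketch below shows how such metrics might be derived from a single benchmark run. The `RunLog` fields are hypothetical, and the interpretations are assumptions: energy per operation is taken per synaptic operation, spike efficiency is taken as spikes per inference (lower is better), and temporal precision is taken as the standard deviation of spike-timing error.

```python
import statistics
from dataclasses import dataclass


@dataclass
class RunLog:
    """Hypothetical summary of one benchmark run; field names are illustrative."""
    energy_j: float                     # measured energy for the whole run
    synaptic_ops: int                   # synaptic events processed
    total_spikes: int
    inferences: int
    spike_time_errors_us: list[float]   # observed minus expected spike times


def metrics(log: RunLog) -> dict[str, float]:
    """Normalize raw totals into per-operation and per-inference figures."""
    return {
        "energy_per_synop_pj": log.energy_j / log.synaptic_ops * 1e12,
        "energy_per_inference_uj": log.energy_j / log.inferences * 1e6,
        "spikes_per_inference": log.total_spikes / log.inferences,
        "timing_jitter_us": statistics.pstdev(log.spike_time_errors_us),
    }


if __name__ == "__main__":
    # Made-up numbers purely to exercise the function.
    run = RunLog(2e-3, 10**9, 50_000, 100, [1.0, -1.0, 1.0, -1.0])
    for name, value in metrics(run).items():
        print(f"{name}: {value:.3g}")
```

Normalizing per inference as well as per operation matters because a design can lower energy per synaptic operation while needing many more operations to reach the same answer.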

Debugging and optimization tools represent another critical aspect of the co-design process. Traditional software debugging approaches are often inadequate for neuromorphic systems due to their event-driven nature and distributed processing model. Specialized visualization and analysis tools that can represent temporal spike patterns and network activity are essential for effective development and troubleshooting.
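
The most basic visualization in such tools is the spike raster, with one row per neuron and time running left to right. The sketch below renders one as plain text; the input format (a mapping from neuron id to integer spike times) and the function name are assumptions for illustration, and real tools typically draw graphical rasters.

```python
def raster(spikes: dict[int, list[int]], duration: int, bin_width: int = 1) -> str:
    """Render spike times (neuron id -> list of integer timesteps) as a text raster:
    one row per neuron, '|' where the neuron fired in that time bin, '.' otherwise.
    Spikes outside [0, duration) are ignored."""
    n_bins = (duration + bin_width - 1) // bin_width
    rows = []
    for neuron in sorted(spikes):
        cells = ["."] * n_bins
        for t in spikes[neuron]:
            if 0 <= t < duration:
                cells[t // bin_width] = "|"
        rows.append(f"n{neuron:<3}" + "".join(cells))
    return "\n".join(rows)


if __name__ == "__main__":
    print(raster({0: [1, 4, 7], 1: [2], 2: []}, duration=10))
```

Even this minimal view makes silent neurons and regular firing patterns visible at a glance, which is hard to see from a conventional step-through debugger.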
