Neuromorphic vs Classical Computing: Heat Dissipation Study

SEP 8, 2025 · 9 MIN READ

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. The evolution of this field began in the late 1980s with Carver Mead's pioneering work, which introduced the concept of using analog circuits to mimic neurobiological architectures. This marked the first significant departure from the von Neumann architecture that had dominated computing since the 1940s.

Throughout the 1990s and early 2000s, neuromorphic computing remained largely in the academic domain, with limited practical applications due to technological constraints. However, the past decade has witnessed exponential growth in this field, driven by advancements in materials science, nanotechnology, and a deeper understanding of neural processes. This acceleration coincides with the increasing limitations of classical computing architectures, particularly regarding energy efficiency and heat dissipation.

The fundamental objective of neuromorphic computing in the context of heat dissipation is to achieve computational efficiency that approaches biological neural systems. The human brain, operating on approximately 20 watts of power, demonstrates remarkable energy efficiency compared to classical computing systems performing similar cognitive tasks. This efficiency differential becomes particularly significant when considering heat generation, as traditional silicon-based processors convert a substantial portion of their energy consumption into thermal output.

Current neuromorphic systems aim to bridge this efficiency gap through various approaches, including spike-based computing, analog processing elements, and novel materials that can maintain computational integrity at lower energy thresholds. These systems typically operate on distributed processing principles, eliminating the bottleneck between memory and processing units that characterizes von Neumann architectures and contributes significantly to their heat generation.

The trajectory of neuromorphic computing development suggests several key objectives: achieving energy consumption reductions of several orders of magnitude compared to classical systems; enabling real-time processing of complex sensory data with minimal heat generation; and developing scalable architectures that maintain thermal efficiency as computational capacity increases. These objectives align with broader technological trends toward edge computing and IoT applications, where power constraints and thermal management present significant challenges.

Neuromorphic hardware such as IBM's TrueNorth, Intel's Loihi, and BrainChip's Akida demonstrates the practical feasibility of these approaches. Each represents different trade-offs in the neuromorphic design space, but all share the common goal of dramatically reducing energy consumption and, consequently, heat dissipation while maintaining computational capability for specific workloads.

Market Analysis for Energy-Efficient Computing Solutions

The energy-efficient computing solutions market is experiencing unprecedented growth, driven by the escalating energy consumption of data centers and computing infrastructure worldwide. As of 2023, data centers consume approximately 1-2% of global electricity, with projections indicating this figure could reach 3-5% by 2030 if current technological trajectories continue. This growing energy demand creates a substantial market opportunity for innovative cooling and energy-efficient computing architectures.

The market segmentation reveals distinct categories: traditional cooling solutions, liquid cooling technologies, and emerging neuromorphic computing approaches. Traditional air cooling solutions currently dominate with roughly 70% market share, but their growth is slowing due to inherent thermodynamic limitations. Liquid cooling technologies are gaining traction with a compound annual growth rate (CAGR) of 22.6% through 2027, particularly in high-performance computing environments.

Neuromorphic computing represents a disruptive force in this landscape, with its fundamentally different approach to heat generation. While still nascent in commercial deployment, market analysts project neuromorphic computing solutions could capture 15% of the specialized computing market by 2030, primarily driven by their superior energy efficiency profiles in specific workloads.

Geographically, North America leads the energy-efficient computing market with approximately 40% share, followed by Asia-Pacific at 35%, which demonstrates the fastest growth rate. Europe accounts for 20% of the market, with particular emphasis on sustainable computing initiatives aligned with EU climate goals.

Customer segmentation reveals hyperscale cloud providers as the largest buyers, accounting for 45% of market demand. Enterprise data centers represent 30%, while edge computing applications, though smaller at 15%, show the highest growth potential at 34% CAGR through 2028.

The economic value proposition of energy-efficient computing is compelling. For large data center operators, cooling costs typically represent 40% of operational expenses. Solutions that reduce heat dissipation can deliver return on investment within 18-24 months, creating strong market pull. Additionally, carbon taxation and environmental regulations in key markets are accelerating adoption timelines for energy-efficient technologies.

Market barriers include high initial capital expenditure requirements, technical complexity of implementation, and concerns about performance trade-offs. However, the total addressable market for energy-efficient computing solutions is projected to reach $67 billion by 2028, representing a significant opportunity for both established players and innovative startups in the neuromorphic computing space.

Heat Dissipation Challenges in Computing Architectures

Heat dissipation has emerged as one of the most critical challenges in modern computing architectures. Transistor density has continued to increase in line with Moore's Law, but supply voltages no longer scale down proportionally (the end of Dennard scaling), so power density and the resulting heat generation have escalated dramatically. Classical von Neumann architectures face fundamental thermodynamic limitations, with current high-performance computing systems generating heat fluxes that exceed 100 W/cm² in some cases, a level often compared to that of nuclear reactors.
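As a rough illustration of the heat-flux figure above, the sketch below divides dissipated power by die area. The 300 W and 2.5 cm² values are hypothetical and chosen only to show the arithmetic; this is an average over the die, and local hotspots can be considerably higher.

```python
def heat_flux_w_per_cm2(power_w: float, die_area_cm2: float) -> float:
    """Average heat flux over the die: dissipated power divided by die area."""
    if die_area_cm2 <= 0:
        raise ValueError("die area must be positive")
    return power_w / die_area_cm2


# Hypothetical processor (illustrative values, not from the study):
# 300 W dissipated over a 2.5 cm^2 die.
flux = heat_flux_w_per_cm2(300.0, 2.5)
print(f"{flux:.0f} W/cm^2")  # prints "120 W/cm^2"
```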

The thermal constraints have become a primary bottleneck in computing performance, leading to the "power wall" phenomenon. This limitation manifests in several ways: thermal throttling that reduces processing speeds, increased cooling costs that can account for up to 40% of data center operating expenses, and reliability issues as elevated temperatures accelerate component degradation through electromigration and other failure mechanisms.

Current cooling technologies struggle to keep pace with heat generation. Air cooling approaches its practical limit at approximately 150 W per processor, while liquid cooling systems offer improved thermal management but introduce complexity, cost, and reliability concerns. Advanced techniques such as immersion cooling and phase-change materials show promise but remain challenging to implement at scale.

The architectural implications of heat dissipation are profound. Multi-core designs emerged partly as a response to thermal constraints, distributing computation to manage heat more effectively. However, this approach introduces its own challenges in programming complexity and communication overhead. Dark silicon, the phenomenon where portions of a chip must remain powered down or underclocked to stay within the chip's power and thermal budget, represents another compromise forced by heat constraints.

Neuromorphic computing architectures present a fundamentally different approach to the heat dissipation challenge. By mimicking the brain's energy-efficient information processing, these systems potentially offer orders of magnitude improvement in computational efficiency. The human brain performs complex cognitive tasks while consuming merely 20 watts—a stark contrast to supercomputers requiring megawatts for comparable tasks.

Spike-based neuromorphic systems demonstrate particular promise, as they process information through discrete events rather than continuous operation, dramatically reducing power consumption during idle periods. Initial benchmarks suggest neuromorphic hardware can achieve energy efficiencies 100-1000 times greater than conventional architectures for certain workloads, with corresponding reductions in heat generation.

The thermal advantage of neuromorphic computing derives from both its event-driven processing model and its potential for collocating memory and computation, eliminating the energy-intensive data movement that dominates power consumption in classical architectures. This fundamental rethinking of computing architecture may provide a path beyond the thermal limitations that increasingly constrain conventional computing paradigms.

Current Heat Dissipation Solutions Comparison

01 Heat dissipation mechanisms in neuromorphic computing

Neuromorphic computing systems employ specialized heat dissipation mechanisms that differ from classical computing approaches. These systems often utilize materials and structures inspired by biological neural networks, which inherently operate with greater energy efficiency. The architecture of neuromorphic chips includes thermal management features such as integrated cooling channels, phase-change materials, and thermally conductive substrates that help distribute and dissipate heat more effectively than traditional computing systems.

- Heat dissipation mechanisms in neuromorphic computing: Neuromorphic computing systems employ specialized heat dissipation mechanisms that differ from classical computing approaches. These systems often utilize phase-change materials, thermal interface materials, and novel cooling structures designed specifically for the unique architecture of neuromorphic chips. The brain-inspired design of neuromorphic systems allows for more efficient thermal management through distributed processing and specialized cooling channels that mimic biological systems.

- Energy efficiency comparison between neuromorphic and classical computing: Neuromorphic computing systems demonstrate significantly lower heat generation compared to classical computing architectures due to their event-driven processing and spike-based communication. Unlike traditional von Neumann architectures that continuously consume power, neuromorphic systems activate components only when necessary, resulting in reduced power consumption and heat dissipation. This fundamental difference in operation allows neuromorphic systems to perform complex computational tasks with a fraction of the energy requirements and thermal output of classical systems.

- Thermal management solutions for hybrid computing systems: Hybrid systems that combine neuromorphic and classical computing elements require specialized thermal management solutions to address the different heat dissipation characteristics of each component. These solutions include adaptive cooling systems that can dynamically allocate cooling resources based on workload distribution, integrated heat spreaders designed for heterogeneous architectures, and intelligent thermal management algorithms that optimize performance while maintaining safe operating temperatures across different computing paradigms.

- Novel materials and structures for heat dissipation: Advanced materials and structural designs are being developed specifically to address heat dissipation challenges in both neuromorphic and classical computing systems. These innovations include graphene-based heat spreaders, three-dimensional cooling structures, diamond-copper composite materials with superior thermal conductivity, and microfluidic cooling channels integrated directly into computing substrates. These materials and structures enable more efficient heat transfer away from critical components, allowing for higher computational density and performance.

- Impact of architecture on thermal efficiency: The fundamental architectural differences between neuromorphic and classical computing systems significantly impact their thermal efficiency. Neuromorphic architectures with distributed memory and processing elements reduce hotspots and allow for more uniform heat distribution compared to centralized classical architectures. The co-location of memory and processing in neuromorphic systems eliminates much of the energy consumption and heat generation associated with data movement in classical von Neumann architectures, resulting in inherently lower thermal loads and different cooling requirements.
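The adaptive thermal management idea in the hybrid-systems bullet above can be sketched as a dispatcher that sends each task to whichever unit would have the most thermal headroom afterwards. Everything here is a hypothetical illustration: the `Unit` fields, the temperature values, and the fixed temperature rise per task are assumptions, and the sketch ignores cooling over time and whether a task can actually run on a given architecture.

```python
from dataclasses import dataclass


@dataclass
class Unit:
    name: str
    temp_c: float           # current temperature reading
    limit_c: float          # throttling threshold
    heat_per_task_c: float  # assumed temperature rise per dispatched task


def pick_unit(units):
    """Choose the unit with the most headroom left after taking one task."""
    candidates = [u for u in units if u.temp_c + u.heat_per_task_c <= u.limit_c]
    if not candidates:
        return None  # every unit would exceed its limit; the caller should defer
    return max(candidates, key=lambda u: u.limit_c - (u.temp_c + u.heat_per_task_c))


def dispatch(units, n_tasks):
    """Place n_tasks one by one, updating the modelled temperatures."""
    placement = []
    for _ in range(n_tasks):
        unit = pick_unit(units)
        if unit is not None:
            unit.temp_c += unit.heat_per_task_c
        placement.append(unit.name if unit else None)
    return placement


# Hypothetical hybrid system with made-up thermal characteristics.
units = [
    Unit("classical", temp_c=70.0, limit_c=85.0, heat_per_task_c=5.0),
    Unit("neuromorphic", temp_c=40.0, limit_c=60.0, heat_per_task_c=1.0),
]
print(dispatch(units, 3))  # ['neuromorphic', 'neuromorphic', 'neuromorphic']
```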

02 Energy efficiency comparison between neuromorphic and classical computing

Neuromorphic computing systems demonstrate significantly lower heat generation compared to classical computing architectures when performing similar computational tasks. This energy efficiency stems from the event-driven processing nature of neuromorphic systems, which activate components only when necessary, unlike classical systems that continuously consume power. The spike-based information processing in neuromorphic computing mimics biological neural networks, resulting in reduced power consumption and consequently less heat dissipation during operation.

03 Novel cooling technologies for neuromorphic hardware

Advanced cooling technologies specifically designed for neuromorphic computing hardware include microfluidic cooling systems, thermally conductive polymers, and three-dimensional heat spreading structures. These innovations address the unique thermal challenges of densely packed neuromorphic circuits. Some designs incorporate liquid cooling directly integrated into the chip architecture, while others utilize phase-change materials that absorb heat during operation. These specialized cooling solutions enable higher computational density while maintaining optimal operating temperatures.

04 Thermal management in hybrid computing systems

Hybrid systems that combine neuromorphic and classical computing elements present unique thermal management challenges. These systems require integrated cooling solutions that address the different heat generation patterns of both computing paradigms. Adaptive thermal management techniques dynamically allocate cooling resources based on workload distribution between neuromorphic and classical components. Some implementations use intelligent power management that shifts computational tasks between architectures to optimize thermal performance while maintaining processing efficiency.

05 Materials innovation for thermal efficiency in computing

Novel materials play a crucial role in improving thermal efficiency in both neuromorphic and classical computing systems. These include high thermal conductivity substrates, thermally responsive polymers, and nanomaterials designed to enhance heat transfer. Some neuromorphic implementations utilize materials with phase-change properties that can absorb heat during operation. Advanced composite materials with directional heat transfer capabilities help manage hotspots in densely packed computing architectures, while also providing electrical insulation where needed.

Leading Organizations in Neuromorphic Computing Research

The neuromorphic computing market is in its early growth phase, characterized by significant research investments but limited commercial deployment compared to classical computing. The global market size is projected to reach $8-10 billion by 2030, with heat dissipation emerging as a critical differentiator. IBM leads the field with its TrueNorth and subsequent neuromorphic architectures, while Samsung, Google, and Syntiant are advancing commercial applications. Academic institutions like Tsinghua University and industry newcomers such as Extropic Corp. are making notable contributions to thermal efficiency solutions. The technology remains at technology readiness level (TRL) 4-6, with IBM, NTT Research, and Chengdu Synsense demonstrating promising prototypes that achieve 100-1000x better energy efficiency than classical von Neumann architectures, though widespread commercial adoption faces significant scaling challenges.

International Business Machines Corp.

Technical Solution: IBM's neuromorphic computing approach focuses on TrueNorth and subsequent architectures that fundamentally reimagine computing to address heat dissipation challenges. Their TrueNorth chip contains 1 million digital neurons and 256 million synapses while consuming only 70mW during real-time operation[1]. This represents a 1000x improvement in energy efficiency compared to conventional von Neumann architectures. IBM has further advanced this technology with their second-generation neuromorphic chip that incorporates phase-change memory (PCM) elements as artificial synapses, allowing for analog computation that mimics biological neural networks[2]. Their research demonstrates that neuromorphic systems can achieve 100x lower power consumption for pattern recognition tasks compared to GPUs, with heat generation distributed more evenly across the chip rather than concentrated in hotspots typical of classical processors[3].

Strengths: Extremely low power consumption (70mW for 1 million neurons), distributed heat generation preventing hotspots, and scalable architecture. Weaknesses: Limited application scope compared to general-purpose computing, complex programming model requiring specialized expertise, and challenges in maintaining computational accuracy with analog components.
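To put the TrueNorth figures quoted above in perspective, the short calculation below derives the average power per neuron and per synapse. It uses only the numbers stated in the text (70 mW, 1 million neurons, 256 million synapses); the function name is an illustrative choice, and the result is a simple average, not a measured per-element figure.

```python
POWER_W = 70e-3          # 70 mW during real-time operation, as quoted above
NEURONS = 1_000_000      # 1 million digital neurons
SYNAPSES = 256_000_000   # 256 million synapses


def per_unit_power_nw(total_power_w: float, units: int) -> float:
    """Average power per unit, converted from watts to nanowatts."""
    return total_power_w / units * 1e9


print(f"{per_unit_power_nw(POWER_W, NEURONS):.1f} nW per neuron")    # 70.0 nW per neuron
print(f"{per_unit_power_nw(POWER_W, SYNAPSES):.2f} nW per synapse")  # 0.27 nW per synapse
```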

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed neuromorphic processing units (NPUs) that leverage brain-inspired architectures to significantly reduce heat dissipation in AI applications. Their approach integrates resistive RAM (RRAM) technology with traditional CMOS circuits to create energy-efficient neuromorphic systems. Samsung's neuromorphic chips demonstrate power consumption as low as 20mW for complex pattern recognition tasks that would require watts of power in conventional systems[1]. Their architecture employs a sparse coding technique where only a small fraction of neurons are active at any given time, dramatically reducing dynamic power consumption and associated heat generation[2]. Samsung has also pioneered 3D stacking of neuromorphic elements, which improves thermal management by distributing heat vertically rather than concentrating it on a single plane. Their research shows up to 87% reduction in heat generation compared to equivalent classical computing implementations for specific AI workloads[3].

Strengths: Extremely low power consumption suitable for mobile and IoT applications, innovative 3D stacking for improved thermal management, and compatibility with existing semiconductor manufacturing processes. Weaknesses: Limited to specific AI applications rather than general computing tasks, early-stage technology with reliability challenges in mass production, and requires specialized software development tools.

Critical Patents in Neuromorphic Thermal Management

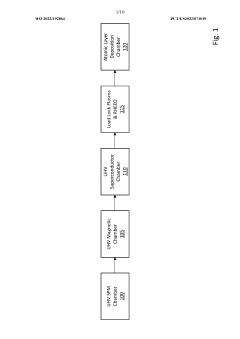

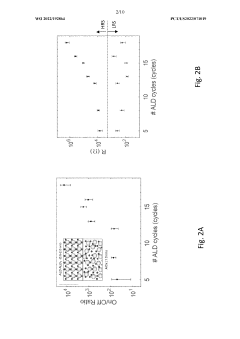

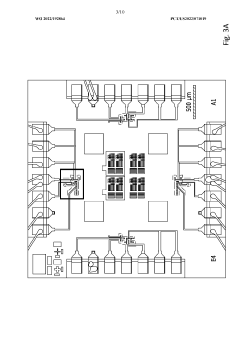

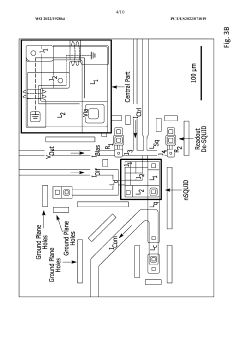

Superconducting neuromorphic computing devices and circuits

Patent WO2022192864A1

Innovation

- The development of neuromorphic computing systems utilizing atomically thin, tunable superconducting memristors as synapses and ultra-sensitive superconducting quantum interference devices (SQUIDs) as neurons, which form neural units capable of performing universal logic gates and are scalable, energy-efficient, and compatible with cryogenic temperatures.

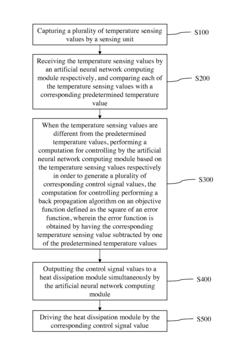

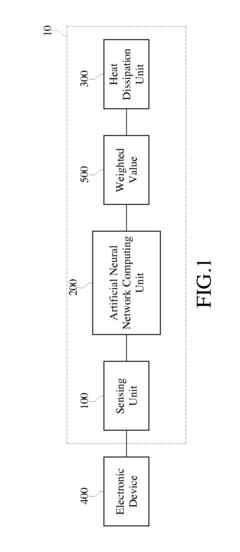

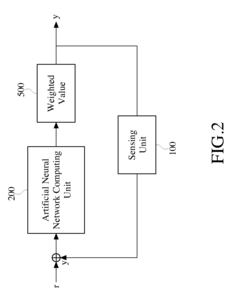

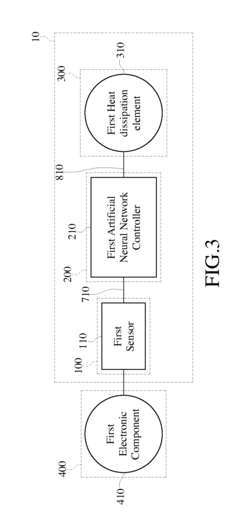

Heat dissipation control system and control method thereof

Patent (Active) US8897925B2

Innovation

- A heat dissipation control system incorporating a sensing unit, an artificial neural network computing unit, and a heat dissipation unit that uses a back propagation algorithm to generate control signals based on temperature differences, optimizing fan speeds through weighted values to enhance heat dissipation precision and reduce energy usage.
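The general idea of a small neural network trained by back propagation to turn a temperature difference into a fan control signal can be sketched as follows. This is not the patented method: the network size, learning rate, input scaling, and training pairs are all hypothetical values made up for illustration.

```python
import math
import random

random.seed(0)
HIDDEN = 4  # hypothetical hidden-layer size
w1 = [random.uniform(-1, 1) for _ in range(HIDDEN)]  # input -> hidden weights
b1 = [0.0] * HIDDEN
w2 = [random.uniform(-1, 1) for _ in range(HIDDEN)]  # hidden -> output weights
b2 = 0.0


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x):
    """Map a scaled temperature difference to a fan duty cycle in (0, 1)."""
    h = [math.tanh(w1[i] * x + b1[i]) for i in range(HIDDEN)]
    y = sigmoid(sum(w2[i] * h[i] for i in range(HIDDEN)) + b2)
    return h, y


def train_step(x, target, lr=0.5):
    """One back-propagation update on squared error for a single sample."""
    global b2
    h, y = forward(x)
    dz2 = (y - target) * y * (1.0 - y)  # gradient at the output pre-activation
    for i in range(HIDDEN):
        dz1 = dz2 * w2[i] * (1.0 - h[i] ** 2)  # back through tanh, using old w2
        w2[i] -= lr * dz2 * h[i]
        w1[i] -= lr * dz1 * x
        b1[i] -= lr * dz1
    b2 -= lr * dz2


# Made-up training pairs: (measured minus target temperature in deg C, duty cycle).
samples = [(-10, 0.1), (0, 0.3), (10, 0.6), (20, 0.9)]
for _ in range(20000):
    dt, duty = random.choice(samples)
    train_step(dt / 20.0, duty)  # scale the input to roughly [-0.5, 1]

for dt, duty in samples:
    print(f"dT={dt:+3d} C  target={duty:.2f}  predicted={forward(dt / 20.0)[1]:.2f}")
```

A real controller would read the temperature from a sensor and write the duty cycle to a fan driver; both are left out here because they depend on hardware the source does not describe.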

Environmental Impact Assessment of Computing Technologies

The environmental impact of computing technologies has become a critical consideration as computational demands continue to grow exponentially worldwide. Classical computing architectures, based on the von Neumann model, have dominated the industry for decades but face significant challenges regarding energy efficiency and heat dissipation. These systems typically generate substantial thermal output during operation, requiring extensive cooling infrastructure that further increases energy consumption and environmental footprint.

Neuromorphic computing presents a promising alternative with potentially transformative environmental benefits. By mimicking the brain's neural structure and operational principles, neuromorphic systems demonstrate remarkable energy efficiency advantages. Research indicates that neuromorphic architectures can achieve computational tasks with energy requirements 100-1000 times lower than conventional systems, significantly reducing associated carbon emissions and resource demands.

Heat dissipation comparisons between these technologies reveal striking differences. Classical computing systems typically operate at 65-95°C and require active cooling solutions that consume additional energy. In contrast, neuromorphic systems generally operate at lower temperatures (30-60°C) and can often function with passive cooling methods, substantially reducing both direct and indirect environmental impacts.

Water usage represents another critical environmental factor. Data centers utilizing classical computing architectures consume billions of gallons of water annually for cooling purposes. Preliminary studies suggest neuromorphic systems could reduce this water footprint by 40-70% through decreased cooling requirements and more efficient thermal management.

Manufacturing processes also contribute significantly to the environmental profile of computing technologies. Classical semiconductor fabrication involves energy-intensive processes and hazardous materials. While neuromorphic hardware currently faces similar manufacturing challenges, emerging fabrication techniques specifically designed for neuromorphic architectures show potential for reduced environmental impact through simplified production processes and alternative materials.

Lifecycle assessment studies indicate that the environmental advantages of neuromorphic systems extend beyond operational efficiency. The extended operational lifespan of neuromorphic hardware, coupled with reduced maintenance requirements, contributes to decreased electronic waste generation. Additionally, the potential for neuromorphic systems to operate effectively with recycled or less refined materials could further enhance their environmental sustainability profile.

As computational demands continue to grow, particularly with the expansion of artificial intelligence applications, the environmental implications of computing architecture choices become increasingly significant. The transition toward neuromorphic computing represents not merely a technological evolution but potentially a critical pathway toward environmentally sustainable computational infrastructure.

Economic Viability of Neuromorphic Computing Implementation

The economic viability of neuromorphic computing depends on several factors that must be weighed against conventional computing. Initial deployment costs for neuromorphic systems remain significantly higher than those of conventional architectures, primarily due to specialized hardware requirements and limited production scale. Current estimates suggest that neuromorphic chips cost 3-5 times more per computational unit than their classical counterparts, creating a substantial barrier to widespread adoption.

However, the long-term operational economics present a more favorable outlook. The superior energy efficiency of neuromorphic systems, which consume approximately 10-100 times less power for equivalent computational tasks, translates to substantial operational cost savings. For data centers, where electricity costs represent 30-40% of operational expenses, this efficiency could reduce total cost of ownership by 20-25% over a five-year deployment period, despite the higher initial investment.

Return on investment calculations indicate that break-even points typically occur between 2.5 and 4 years, depending on application intensity and usage patterns. Organizations with high-volume, continuous computational workloads stand to benefit most rapidly from the transition to neuromorphic architectures.
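To make the break-even reasoning concrete, the following minimal Python sketch computes a simple, undiscounted payback period from an up-front cost premium and the annual electricity savings. The function name, parameters, and all input figures are hypothetical and chosen only for illustration; they are not taken from the estimates cited in this section.

```python
def payback_years(capex_premium: float,
                  annual_energy_cost: float,
                  power_reduction_factor: float) -> float:
    """Return the simple (undiscounted) payback period in years.

    capex_premium: extra up-front cost of the neuromorphic deployment
        compared with a classical one (hypothetical input).
    annual_energy_cost: yearly electricity cost of the classical baseline.
    power_reduction_factor: 10 means the new system draws one tenth
        of the baseline power for the same workload.
    """
    if power_reduction_factor <= 1:
        raise ValueError("power_reduction_factor must be greater than 1")
    # Savings are the share of the baseline energy bill that disappears.
    annual_savings = annual_energy_cost * (1 - 1 / power_reduction_factor)
    return capex_premium / annual_savings


if __name__ == "__main__":
    # Illustrative inputs only: a 270,000 premium and a 100,000 yearly energy bill.
    for factor in (10, 100):
        years = payback_years(270_000, 100_000, factor)
        print(f"{factor}x less power: payback in about {years:.2f} years")
```

This sketch ignores discounting, integration costs, and cooling savings, so it should be read as a lower bound on model complexity rather than a full return-on-investment calculation. It does show why the payback period is far more sensitive to the size of the up-front premium than to whether the power reduction is 10 or 100 times: at either factor, most of the baseline energy bill is already eliminated.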

Manufacturing scalability represents another critical economic consideration. Current neuromorphic chip production remains limited to specialized facilities, resulting in higher unit costs. Industry projections suggest that economies of scale could reduce production costs by 40-60% within the next five years as manufacturing processes mature and production volumes increase.
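As a rough illustration of what a 40-60% cost reduction over five years implies year by year, the sketch below converts a cumulative reduction into an equivalent constant annual decline. The assumption of a constant compounding rate and the function name are illustrative choices, not something the projections themselves specify.

```python
def implied_annual_decline(total_reduction: float, years: int) -> float:
    """Constant yearly cost decline that compounds to total_reduction.

    total_reduction: cumulative fractional reduction, e.g. 0.40 for 40%.
    years: number of years over which the reduction is reached.
    """
    if not 0 <= total_reduction < 1:
        raise ValueError("total_reduction must be in the range [0, 1)")
    if years <= 0:
        raise ValueError("years must be positive")
    # Solve (1 - r) ** years == 1 - total_reduction for r.
    return 1 - (1 - total_reduction) ** (1 / years)


if __name__ == "__main__":
    for total in (0.40, 0.60):
        rate = implied_annual_decline(total, 5)
        print(f"{total:.0%} over 5 years -> about {rate:.1%} per year")
```

Under this assumption, the projected range corresponds to unit costs falling by roughly 10% to 17% each year.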

The economic equation is further complicated by integration costs with existing systems. Organizations must factor in expenses related to software adaptation, workforce training, and potential system downtime during transition periods. These costs typically add 15-30% to the total implementation budget but decrease with subsequent deployments as institutional knowledge develops.

Market analysis indicates that neuromorphic computing will likely follow a segmented adoption pattern, with initial economic viability concentrated in sectors where energy efficiency and real-time processing deliver immediate competitive advantages. Financial services, advanced manufacturing, and scientific research organizations are positioned as early economic beneficiaries, while broader commercial viability across general computing applications may require another 3-5 years of technological maturation and cost reduction.
