Neuromorphic Design Benefits: Lower Energy Use vs Speed

SEP 5, 2025 · 9 MIN READ

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. This approach emerged in the late 1980s when Carver Mead first proposed using analog circuits to mimic neurobiological architectures. Since then, the field has evolved through several distinct phases, each marked by significant technological breakthroughs and shifting objectives.

The initial phase (1980s-1990s) focused primarily on theoretical foundations and proof-of-concept implementations. Researchers concentrated on understanding how neural processes could be translated into electronic equivalents, with energy efficiency as a secondary consideration to basic functionality. During this period, the primary goal was to demonstrate that brain-inspired computing was technically feasible.

The second phase (2000s-early 2010s) saw increased attention to practical applications and scalability challenges. As the transistor scaling described by Moore's Law began to run into physical limits, neuromorphic designs gained attention for their potential to sidestep these barriers. This period witnessed the emergence of several landmark projects, including IBM's TrueNorth and the European Human Brain Project, which established more concrete objectives around energy efficiency.

The current phase (mid-2010s-present) has been characterized by a deliberate focus on optimizing the energy-performance trade-off. Modern neuromorphic systems explicitly aim to approach brain-like energy efficiency (the human brain runs on roughly 20 watts) while maintaining acceptable computational speed. This represents a fundamental shift from traditional computing paradigms that prioritize raw performance over energy consumption.

Today's neuromorphic computing objectives have evolved to address specific limitations in conventional computing architectures, particularly for applications involving pattern recognition, sensory processing, and adaptive learning. The primary technical goals now include: achieving ultra-low power consumption (typically measured in milliwatts or even microwatts), enabling real-time processing of sensory data, supporting online learning capabilities, and maintaining fault tolerance through distributed processing.

The tension between energy efficiency and computational speed represents a central challenge in the field. While neuromorphic designs excel at reducing energy requirements—often achieving improvements of several orders of magnitude compared to conventional architectures—they frequently make trade-offs in terms of raw computational speed for certain types of operations. This trade-off is increasingly viewed not as a limitation but as an appropriate design choice for specific application domains where energy constraints are paramount, such as edge computing, autonomous systems, and implantable medical devices.
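One common way to reason about this trade-off is the energy-delay product (EDP), which multiplies energy per task by time per task. The sketch below is a minimal illustration under stated assumptions: the `DesignPoint` class, the `pick_design` helper, and all numbers are hypothetical and are not measurements of any real chip.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DesignPoint:
    """One hypothetical hardware option for a fixed inference task."""
    name: str
    energy_joules: float    # energy consumed per inference
    latency_seconds: float  # time taken per inference

    @property
    def energy_delay_product(self) -> float:
        # EDP = energy x delay; lower is better on both axes at once.
        return self.energy_joules * self.latency_seconds


def pick_design(options, energy_budget_joules, latency_budget_seconds):
    """Return the lowest-EDP option that fits both budgets, or None."""
    feasible = [
        d for d in options
        if d.energy_joules <= energy_budget_joules
        and d.latency_seconds <= latency_budget_seconds
    ]
    return min(feasible, key=lambda d: d.energy_delay_product, default=None)


# Illustrative numbers only (assumed, not taken from any datasheet).
conventional = DesignPoint("conventional", energy_joules=1e-1, latency_seconds=2e-3)
neuromorphic = DesignPoint("neuromorphic", energy_joules=1e-4, latency_seconds=2e-2)

# An implant-style budget: tight on energy, relaxed on latency.
choice = pick_design(
    [conventional, neuromorphic],
    energy_budget_joules=1e-3,
    latency_budget_seconds=5e-2,
)
print(choice.name if choice else "no feasible design")
```

With a tight energy budget the slower but far more frugal option is the only feasible one; tightening the latency budget instead flips the outcome, which is the sense in which the trade-off is an application-level design choice.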

Market Demand Analysis for Energy-Efficient Computing

The energy-efficient computing market is experiencing unprecedented growth driven by the convergence of data explosion, environmental concerns, and economic pressures. Current estimates value this market at approximately $25 billion, with projections indicating a compound annual growth rate of 18-20% through 2030. This acceleration stems from escalating data center power consumption, which currently accounts for 1-2% of global electricity usage and is expected to reach 3-5% by 2030 if traditional computing paradigms persist.

Neuromorphic computing represents a revolutionary approach to addressing these energy challenges. Unlike conventional von Neumann architectures that separate processing and memory, neuromorphic designs mimic the brain's neural structure, potentially reducing energy consumption by 100-1000x for specific workloads. This dramatic efficiency improvement has captured significant attention from both enterprise and hyperscale data center operators seeking to manage operational costs and carbon footprints.

The demand for energy-efficient computing solutions spans multiple sectors. Cloud service providers face mounting pressure as their infrastructure energy costs often exceed 40% of operational expenses. Edge computing deployments, with connected devices projected to exceed 75 billion by 2025, require ultra-low-power processing capabilities that neuromorphic designs can deliver. Additionally, mobile device manufacturers are actively seeking solutions to extend battery life while supporting increasingly complex AI workloads.

Financial services, healthcare, and scientific research organizations represent key vertical markets with substantial demand for energy-efficient high-performance computing. These sectors process massive datasets requiring intensive computational resources while facing increasing regulatory pressure to reduce carbon emissions. Neuromorphic computing offers a compelling value proposition by potentially delivering equivalent or superior performance at a fraction of the energy cost.

Market research indicates that 78% of enterprise IT decision-makers now consider energy efficiency a "critical" or "very important" factor in computing infrastructure investments, up from 45% five years ago. This shift reflects both corporate environmental responsibility initiatives and the economic reality that power and cooling costs now represent 30-50% of data center operational expenses.

The trade-off between energy efficiency and processing speed remains a key consideration for potential adopters. While neuromorphic designs excel at specific workloads like pattern recognition and sensory processing, they may underperform in traditional computational tasks. Market analysis suggests a hybrid approach will likely dominate in the near term, with neuromorphic accelerators complementing conventional processors in specialized applications where their energy advantages outweigh potential speed limitations.

Current Neuromorphic Design Landscape and Challenges

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the human brain's neural networks to create more efficient processing systems. Currently, the landscape of neuromorphic design is characterized by a diverse range of approaches, from analog and digital implementations to hybrid systems that combine elements of both. Major research institutions and technology companies including IBM, Intel, BrainChip, and SynSense have made significant strides in developing neuromorphic chips and systems, each with unique architectural approaches and performance characteristics.

The field faces several critical challenges that impede widespread adoption. Power efficiency, while improved compared to traditional computing architectures, still falls short of the human brain's remarkable energy efficiency. Current neuromorphic systems consume orders of magnitude more energy per operation than biological neural systems, limiting their application in power-constrained environments such as edge devices and autonomous systems.

Scalability presents another significant hurdle. Many existing neuromorphic designs struggle to scale effectively while maintaining their performance advantages. As these systems grow in size and complexity, issues related to interconnect density, signal integrity, and thermal management become increasingly problematic, creating bottlenecks that limit practical implementation at scale.

The speed-efficiency tradeoff remains a central challenge in the field. While neuromorphic systems excel at certain tasks with lower energy consumption compared to traditional computing architectures, they often sacrifice processing speed. This tradeoff becomes particularly evident in applications requiring real-time processing or high throughput, where the inherent parallelism of neuromorphic systems may not fully compensate for lower clock speeds.

Manufacturing complexity also poses significant barriers. Current fabrication processes optimized for conventional CMOS technology are not ideally suited for neuromorphic designs, particularly those incorporating novel materials or devices such as memristors, phase-change memory, or spintronic elements. This manufacturing gap increases production costs and slows commercialization efforts.

The software ecosystem supporting neuromorphic computing remains underdeveloped compared to traditional computing platforms. Programming models, development tools, and algorithms specifically optimized for neuromorphic architectures are still evolving, creating a significant barrier to entry for developers and limiting application development.

Standardization across the industry is lacking, with different research groups and companies pursuing divergent approaches to neuromorphic design. This fragmentation complicates interoperability and slows the establishment of benchmarks for fair comparison between different neuromorphic solutions, hindering industry-wide progress and adoption.

Energy-Speed Tradeoff Solutions in Current Neuromorphic Systems

01 Energy-efficient neuromorphic computing architectures

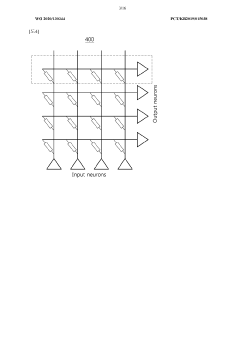

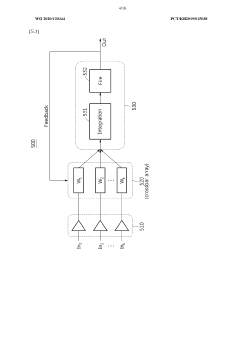

Neuromorphic designs that focus on reducing energy consumption through specialized hardware architectures. These designs implement brain-inspired computing principles to minimize power usage while maintaining computational capabilities. Techniques include optimized circuit designs, low-power memory integration, and energy-efficient signal processing that mimic neural functions with minimal energy overhead.

- Energy-efficient neuromorphic computing architectures: Neuromorphic designs that focus on reducing energy consumption through specialized hardware architectures. These designs implement brain-inspired computing principles to achieve significant power efficiency compared to traditional computing systems. Key approaches include optimized circuit designs, low-power memory integration, and energy-aware processing algorithms that minimize computational overhead while maintaining performance.

- Speed optimization in neuromorphic systems: Techniques for enhancing processing speed in neuromorphic designs through parallel processing architectures and optimized signal pathways. These approaches enable faster neural network operations by implementing efficient spike processing, reduced latency connections, and specialized hardware accelerators. The designs focus on maintaining biological plausibility while achieving computational speeds suitable for real-time applications.

- Balanced energy-speed tradeoffs in neuromorphic design: Neuromorphic architectures that specifically address the balance between energy consumption and processing speed. These designs implement adaptive power management, dynamic voltage scaling, and workload-dependent resource allocation to optimize performance based on application requirements. The approaches include configurable neural elements that can prioritize either energy efficiency or speed depending on the computational context.

- Novel materials and fabrication techniques for neuromorphic efficiency: Implementation of advanced materials and fabrication methods to enhance both energy efficiency and speed in neuromorphic systems. These approaches include memristive devices, phase-change materials, and 3D integration techniques that enable more efficient neural processing. The designs leverage material properties to create compact, low-power synaptic elements while maintaining high-speed signal transmission capabilities.

- Application-specific neuromorphic optimizations: Neuromorphic designs tailored for specific applications with customized energy and speed profiles. These implementations include specialized neural network architectures optimized for particular tasks such as image recognition, natural language processing, or autonomous control systems. The designs incorporate application-specific constraints to achieve optimal performance metrics while minimizing energy consumption for their target use cases.
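The memristive synaptic elements mentioned in the list above are commonly arranged as a crossbar, where each device's conductance acts as a synaptic weight. Applying input voltages to the rows yields column currents that amount to a vector-matrix product (Ohm's law per device, Kirchhoff's current law per column). The following is a minimal, idealized sketch; the function name and values are hypothetical, and real devices add wire resistance, noise, and nonlinearity that this ignores.

```python
def crossbar_currents(conductances, voltages):
    """Idealized memristor crossbar read-out.

    conductances[i][j]: conductance in siemens of the device joining
                        input row i to output column j (the "weight").
    voltages[i]:        voltage in volts applied to input row i.
    Returns the current in amperes collected on each output column.
    """
    n_rows = len(conductances)
    if len(voltages) != n_rows:
        raise ValueError("need one input voltage per crossbar row")
    n_cols = len(conductances[0])
    # Each device contributes I = G * V (Ohm's law); currents on a shared
    # column wire simply add up (Kirchhoff's current law).
    return [
        sum(voltages[i] * conductances[i][j] for i in range(n_rows))
        for j in range(n_cols)
    ]


# Assumed example values: a 2x2 array with microsiemens-scale devices.
weights = [
    [1e-6, 2e-6],
    [3e-6, 4e-6],
]
inputs = [0.1, 0.2]
column_currents = crossbar_currents(weights, inputs)
print(column_currents)
```

The multiply-accumulate happens in the analog domain in a single read step, which is why such arrays are attractive for low-energy synaptic computation.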

02 Speed optimization in neuromorphic systems

Approaches to enhance processing speed in neuromorphic designs through parallel computing architectures and optimized data pathways. These systems implement specialized hardware accelerators and efficient neural network implementations to reduce latency and increase throughput. The designs focus on balancing computational speed with energy constraints through innovative circuit designs and processing techniques.

03 Memristor-based neuromorphic computing

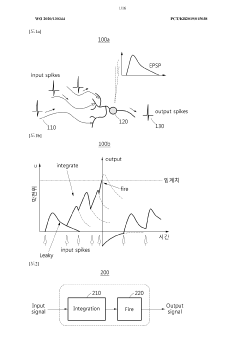

Neuromorphic designs utilizing memristor technology to achieve both energy efficiency and high-speed operation. Memristors enable efficient implementation of synaptic functions in hardware, allowing for reduced power consumption while maintaining fast signal processing. These designs leverage the analog nature of memristors to perform neural computations with significantly lower energy requirements compared to traditional digital approaches.

04 Spiking neural networks for energy-efficient computing

Implementation of spiking neural networks (SNNs) in neuromorphic hardware to achieve superior energy efficiency. SNNs process information through discrete spikes rather than continuous signals, mimicking biological neural systems and significantly reducing energy consumption. These designs optimize the timing and frequency of neural spikes to balance computational performance with power requirements.

05 Hardware-software co-design for neuromorphic systems

Integrated approaches that optimize both hardware and software components of neuromorphic systems to balance energy use and processing speed. These designs implement specialized algorithms tailored to neuromorphic hardware, enabling efficient resource utilization and power management. The co-design methodology includes adaptive power scaling, optimized neural network mapping, and hardware-aware training techniques to maximize performance while minimizing energy consumption.
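To make the spiking neural network approach (item 04 above) more concrete, the sketch below simulates a single leaky integrate-and-fire (LIF) neuron, a widely used simplified spiking model. It is an illustration under assumptions: the parameter values are arbitrary, the forward-Euler update is a simplification, and using spike count as a stand-in for activity-dependent energy is only a rough proxy.

```python
def simulate_lif(input_current, dt=1e-3, tau=20e-3, v_rest=0.0,
                 v_threshold=1.0, v_reset=0.0, resistance=1.0):
    """Simulate one leaky integrate-and-fire neuron.

    input_current: sequence of input values, one per time step.
    Returns the list of spike times in seconds.
    """
    v = v_rest
    spike_times = []
    for step, current in enumerate(input_current):
        # Forward-Euler step of dv/dt = (-(v - v_rest) + R * I) / tau.
        v += dt * (-(v - v_rest) + resistance * current) / tau
        if v >= v_threshold:
            # Emit a discrete spike and reset the membrane potential.
            spike_times.append(step * dt)
            v = v_reset
    return spike_times


# Assumed stimuli: 200 steps (0.2 s) of constant input at three levels.
silent = simulate_lif([0.0] * 200)
subthreshold = simulate_lif([0.5] * 200)   # settles below threshold
weak = simulate_lif([2.0] * 200)
strong = simulate_lif([4.0] * 200)

# Fewer spikes means fewer events to route and process downstream.
print(len(silent), len(subthreshold), len(weak), len(strong))
```

The neuron stays silent for absent or weak input and fires more often as the drive increases, which illustrates how spike timing and frequency can be tuned to trade responsiveness against activity.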

Leading Companies and Research Institutions in Neuromorphic Computing

Neuromorphic computing is currently in a transitional phase from research to early commercialization, with the market expected to grow significantly from approximately $69 million in 2024 to over $1.78 billion by 2033. The technology's primary advantage lies in its energy efficiency, offering up to 1000x lower power consumption compared to traditional computing architectures, though often at the expense of processing speed. Major players like IBM, Intel, and Samsung are advancing commercial applications, while research institutions such as Zhejiang University and Brown University focus on fundamental innovations. Emerging companies like Polyn Technology and Syntiant are developing specialized neuromorphic chips for edge AI applications. The technology is approaching maturity for specific use cases in IoT, edge computing, and sensor processing, where energy efficiency is prioritized over raw computational speed.
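The growth figures quoted above imply a compound annual growth rate that can be checked directly. This is a small arithmetic sketch; the helper name `implied_cagr` is hypothetical, and the calculation assumes the two figures are comparable year-end values for 2024 and 2033.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate implied by two values `years` apart."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures from the text: about $69 million (2024) to $1.78 billion (2033).
cagr = implied_cagr(69e6, 1.78e9, 2033 - 2024)
print(f"implied CAGR: {cagr:.1%}")
```

Under these assumptions the implied rate works out to roughly 43 to 44 percent per year.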

International Business Machines Corp.

Technical Solution: IBM's TrueNorth neuromorphic chip represents a groundbreaking approach to brain-inspired computing, featuring 1 million programmable neurons and 256 million synapses arranged in 4,096 neurosynaptic cores. The architecture operates on an event-driven model that only activates neurons when necessary, consuming just 70mW while running at real-time speed - approximately 1/10,000th the power consumption of conventional chips with comparable capabilities. IBM has demonstrated TrueNorth's efficiency in applications like real-time video analysis, where it can classify objects at 2,600 frames per second while using only 25-275mW of power. The chip's architecture fundamentally breaks from the traditional von Neumann bottleneck by co-locating memory and processing, enabling massively parallel operations while maintaining extremely low power requirements.

Strengths: Exceptional energy efficiency (a power density of about 20mW per cm², compared to 50-100W per cm² for conventional processors); scalable architecture; inherent parallelism enabling real-time processing. Weaknesses: Limited to spiking neural network paradigms; programming complexity requiring specialized knowledge; potential challenges in integration with conventional computing systems.
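The headline TrueNorth figures can be cross-checked with simple arithmetic. The sketch below assumes 256 neurons and 256 input axons per core (a per-core breakdown not stated in the text above) and derives an energy-per-frame range from the quoted frame rate and power range, assuming those figures can be paired.

```python
CORES = 4096             # from the text
NEURONS_PER_CORE = 256   # assumption: not stated in the text above
AXONS_PER_CORE = 256     # assumption: each core is a 256 x 256 crossbar

total_neurons = CORES * NEURONS_PER_CORE
total_synapses = CORES * NEURONS_PER_CORE * AXONS_PER_CORE


def energy_per_frame_joules(power_watts, frames_per_second):
    """Average energy spent on each classified frame."""
    return power_watts / frames_per_second


# Quoted video-analysis figures: 2,600 frames/s at 25-275 mW.
low = energy_per_frame_joules(0.025, 2600)
high = energy_per_frame_joules(0.275, 2600)

print(f"{total_neurons:,} neurons, {total_synapses:,} synapses")
print(f"{low * 1e6:.1f} to {high * 1e6:.1f} microjoules per frame")
```

Under these assumptions the totals come to 1,048,576 neurons and 268,435,456 synapses, consistent with the rounded "1 million" and "256 million" figures.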

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed neuromorphic processing technology that integrates memory and computing elements to mimic brain functionality, focusing on their proprietary PIM (Processing-In-Memory) architecture. Their approach embeds computational elements directly within DRAM structures, eliminating the energy-intensive data movement between separate memory and processing units that dominates power consumption in conventional computing. Samsung's HBM-PIM (High Bandwidth Memory with Processing-In-Memory) technology demonstrates up to 2x performance improvement while reducing energy consumption by approximately 70% compared to conventional HBM implementations. The company has also explored true neuromorphic designs using emerging memory technologies like MRAM and RRAM to implement synaptic functions directly in hardware. These designs enable massively parallel, event-driven computation similar to biological neural systems, with energy efficiency improvements of up to 100x for specific AI workloads. Samsung has demonstrated applications ranging from image recognition to natural language processing that operate at significantly reduced power budgets while maintaining comparable accuracy to traditional implementations.

Strengths: Integration with existing memory manufacturing capabilities; practical hybrid approach combining conventional and neuromorphic elements; strong potential for commercial deployment in mobile and edge devices. Weaknesses: Less specialized than pure neuromorphic designs; still partially reliant on conventional computing paradigms; requires software ecosystem development to fully leverage hardware capabilities.

Key Innovations in Neuromorphic Circuit Design

Neuron, and neuromorphic system comprising same

Patent WO2020130344A1

Innovation

- Incorporating a two-terminal spin device with negative differential resistance (NDR) that performs integration and firing, eliminating the need for capacitors and reducing power consumption to 0.06mW, while enabling efficient integration and learning capabilities in neuromorphic systems.

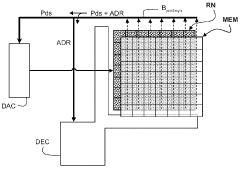

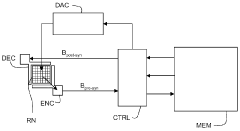

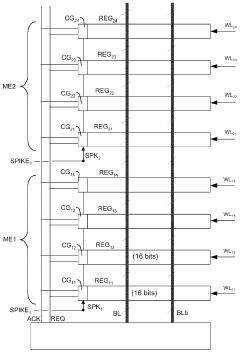

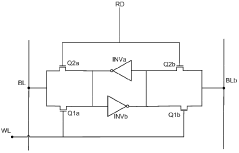

Electronic circuit with neuromorphic architecture

Patent WO2012076366A1

Innovation

- A neuromorphic circuit design that eliminates the need for address encoders and controllers by establishing a direct connection between neurons and their associated memories, allowing for a programmable memory system with management circuits to handle data extraction and conflict prevention on a post-synaptic bus, thereby reducing the complexity and energy consumption.

Benchmarking Methodologies for Neuromorphic Performance

Benchmarking methodologies for neuromorphic systems require specialized approaches that differ significantly from traditional computing performance metrics. The unique architecture of neuromorphic designs, which mimic biological neural networks, demands evaluation frameworks that can accurately capture their energy efficiency advantages while acknowledging speed trade-offs.

Standard benchmarking suites like SPEC and PARSEC, commonly used for conventional processors, fail to adequately measure neuromorphic performance characteristics. Instead, specialized benchmarks such as N-MNIST, SNN-MNIST, and CIFAR-10-DVS have emerged specifically for spiking neural networks and neuromorphic hardware. These datasets incorporate temporal dynamics essential for evaluating event-based processing capabilities.

Energy efficiency metrics for neuromorphic systems typically include Joules per inference, synaptic operations per second per watt (SOPS/W), and total power consumption under various workloads. These measurements reveal the significant advantage neuromorphic designs hold in scenarios where power constraints are critical, often demonstrating 100-1000x improvements over conventional architectures for certain tasks.
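The metrics named above reduce to a few ratios. The helpers below are a minimal sketch with hypothetical function names and made-up measurements, intended only to show how joules per inference and SOPS/W relate to measured power and throughput.

```python
def joules_per_inference(average_power_watts, inferences_per_second):
    """Energy per inference = average power / inference rate."""
    if inferences_per_second <= 0:
        raise ValueError("inference rate must be positive")
    return average_power_watts / inferences_per_second


def sops_per_watt(synaptic_ops_per_second, average_power_watts):
    """Synaptic operations per second delivered per watt consumed."""
    if average_power_watts <= 0:
        raise ValueError("power must be positive")
    return synaptic_ops_per_second / average_power_watts


def efficiency_gain(baseline_joules, candidate_joules):
    """How many times less energy the candidate uses per inference."""
    return baseline_joules / candidate_joules


# Assumed measurements for illustration only.
baseline = joules_per_inference(average_power_watts=50.0, inferences_per_second=1000.0)
candidate = joules_per_inference(average_power_watts=0.1, inferences_per_second=200.0)

print(f"baseline:  {baseline:.2e} J/inference")
print(f"candidate: {candidate:.2e} J/inference")
print(f"gain:      {efficiency_gain(baseline, candidate):.0f}x")
print(f"SOPS/W:    {sops_per_watt(4.0e9, 0.1):.2e}")
```

Note that the candidate in this example is five times slower in throughput yet still uses far less energy per inference, which is why energy and speed need to be reported side by side.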

Speed evaluation presents greater complexity, as neuromorphic systems process information fundamentally differently than von Neumann architectures. Metrics such as time-to-first-spike, classification latency, and throughput under continuous operation provide more relevant performance indicators than traditional clock speed or FLOPS measurements. Real-time processing capabilities for event-based data streams represent another crucial benchmark dimension.
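Latency metrics of this kind can be computed directly from recorded output spike times. The sketch below is illustrative: the function names, the "first class to reach N spikes wins" decision rule, and the sample spike trains are all assumptions rather than a standard definition.

```python
def time_to_first_spike(spike_times, stimulus_onset=0.0):
    """Delay from stimulus onset to the first spike, or None if silent."""
    later = [t for t in spike_times if t >= stimulus_onset]
    return min(later) - stimulus_onset if later else None


def classification_latency(output_spikes, stimulus_onset=0.0, spikes_needed=3):
    """Latency until some output neuron has fired `spikes_needed` times.

    output_spikes maps a class label to that output neuron's spike times.
    Returns (label, latency_seconds), or None if no class ever qualifies.
    """
    best = None
    for label, times in output_spikes.items():
        after = sorted(t - stimulus_onset for t in times if t >= stimulus_onset)
        if len(after) >= spikes_needed:
            decision_time = after[spikes_needed - 1]
            if best is None or decision_time < best[1]:
                best = (label, decision_time)
    return best


# Assumed recording: spike times in seconds for two output neurons.
recording = {
    "cat": [0.004, 0.009, 0.015],
    "dog": [0.002, 0.020, 0.030],
}

first = time_to_first_spike(recording["dog"])
decision = classification_latency(recording)
print(first, decision)
```

In this example the "dog" neuron fires first, but "cat" reaches the decision criterion sooner, showing why time-to-first-spike and classification latency are reported as separate metrics.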

Cross-platform comparison methodologies have evolved to enable fair assessment between neuromorphic and conventional systems. These include task-oriented benchmarks that evaluate both architectures on identical problems while measuring multiple performance dimensions simultaneously. The SNN Performance Evaluation Platform (SNPE) and Nengo Benchmarks offer standardized frameworks for such comparisons.

Application-specific benchmarking has proven particularly valuable, with edge computing, sensor processing, and autonomous systems serving as representative workloads. These real-world scenarios highlight neuromorphic advantages in continuous, low-power operation while revealing limitations in computational density for certain complex calculations.

Industry-academia collaborations have recently established the Neuromorphic Computing Benchmark (NCB) initiative, which aims to standardize evaluation methodologies across the field. This framework incorporates both synthetic benchmarks for isolating specific performance characteristics and application-derived workloads that reflect deployment scenarios, providing a comprehensive assessment of the energy-speed tradeoff inherent to neuromorphic designs.

Integration Pathways with Conventional Computing Systems

Integrating neuromorphic systems with conventional computing architectures lets organizations capture the energy efficiency of brain-inspired designs while retaining the computational speed of existing infrastructure and compatibility with it. This hybrid approach allows gradual adoption of neuromorphic technology without wholesale replacement of current systems.

One promising integration strategy involves using neuromorphic processors as specialized co-processors alongside traditional CPUs and GPUs. In this configuration, computationally intensive tasks that benefit from parallel processing and pattern recognition—such as image classification, natural language processing, and anomaly detection—can be offloaded to the neuromorphic component. Meanwhile, sequential processing tasks remain with conventional computing systems, creating an efficient division of labor.
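The division of labor described above can be sketched as a simple routing layer. Everything here is hypothetical: the `Task` type, the set of task kinds sent to the neuromorphic side, and the backend callables stand in for whatever driver or SDK a real deployment would use.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class Task:
    name: str
    kind: str  # e.g. "image_classification" or "sequential"


# Assumption for this sketch: these task kinds suit the neuromorphic co-processor.
NEUROMORPHIC_KINDS = frozenset({
    "image_classification",
    "natural_language_processing",
    "anomaly_detection",
})


def route(task: Task) -> str:
    """Pick a backend name based on the kind of work the task represents."""
    return "neuromorphic" if task.kind in NEUROMORPHIC_KINDS else "conventional"


def dispatch(task: Task, backends: Dict[str, Callable[[Task], str]]) -> str:
    """Run the task on whichever backend `route` selects."""
    return backends[route(task)](task)


if __name__ == "__main__":
    # Placeholder backends; a real system would call hardware-specific APIs here.
    backends = {
        "neuromorphic": lambda t: f"{t.name} ran on neuromorphic co-processor",
        "conventional": lambda t: f"{t.name} ran on CPU/GPU",
    }
    print(dispatch(Task("detect-faults", "anomaly_detection"), backends))
    print(dispatch(Task("update-ledger", "sequential"), backends))
```

Keeping the routing rule separate from the backends means the host application does not need to change when a neuromorphic device is added or removed.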

Hardware-level integration requires development of standardized interfaces and communication protocols between neuromorphic and conventional components. Industry leaders like Intel (with its Loihi chip) and IBM (with TrueNorth) have made significant progress in creating neuromorphic systems that can interface with standard computing architectures through PCIe connections and specialized APIs.

Software frameworks play an equally crucial role in successful integration. Middleware solutions that abstract the complexity of neuromorphic hardware enable developers to use these systems without specialized knowledge of their underlying architecture. Notable examples include Intel's open-source Lava framework, the Nengo framework from Applied Brain Research, and IBM's Corelet programming environment for TrueNorth, which provide programming models that bridge conventional and neuromorphic paradigms.

Edge computing is a particularly promising application domain for integrated neuromorphic systems. By deploying energy-efficient neuromorphic components at the network edge, organizations can run AI tasks locally with minimal power consumption while staying connected to cloud-based conventional systems for heavier processing when necessary.

The heterogeneous computing model—combining different processor types optimized for specific workloads—offers a practical transition path. This approach allows organizations to incrementally introduce neuromorphic components into their computing infrastructure, targeting specific applications where the energy efficiency advantages outweigh potential speed limitations.
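One way to make "energy efficiency advantages outweigh potential speed limitations" concrete is a selection rule: among the processors that meet an application's latency budget, choose the one that uses the least energy. The sketch below assumes per-backend latency and energy figures have already been measured; the `BackendProfile` type and its fields are illustrative, not taken from any real scheduler.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class BackendProfile:
    name: str
    latency_ms: float   # measured latency per request on this backend
    energy_mj: float    # measured energy per request, in millijoules


def pick_backend(profiles: Iterable[BackendProfile],
                 latency_budget_ms: float) -> Optional[BackendProfile]:
    """Return the lowest-energy backend that meets the latency budget.

    Returns None when no backend is fast enough.
    """
    eligible = [p for p in profiles if p.latency_ms <= latency_budget_ms]
    return min(eligible, key=lambda p: p.energy_mj) if eligible else None
```

Under this rule a slower neuromorphic component is selected only for workloads whose latency budget it can still meet, which matches the incremental, application-by-application adoption described above.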

Looking forward, the development of unified programming models that seamlessly span conventional and neuromorphic architectures will be essential for widespread adoption. These models must abstract hardware differences while enabling developers to exploit the unique capabilities of each computing paradigm, ultimately creating systems that deliver both energy efficiency and computational speed.
