Benchmark RRAM Adoption in AI Processing Units

SEP 10, 2025 · 9 MIN READ

RRAM Technology Evolution and AI Integration Goals

Resistive Random-Access Memory (RRAM) technology has evolved significantly over the past two decades, transitioning from theoretical concepts to practical implementations in various computing applications. The evolution began in the early 2000s with fundamental research on resistive switching mechanisms in metal oxides, followed by material optimization phases that improved reliability and endurance characteristics. By the 2010s, researchers had developed the first functional RRAM arrays, demonstrating their potential for non-volatile memory applications.

The technology's evolution accelerated with the emergence of neuromorphic computing paradigms, as researchers recognized RRAM's inherent similarities to biological synapses. This parallel sparked intensive research into RRAM-based artificial neural networks, where the analog resistance states could effectively represent synaptic weights. The period between 2015 and 2020 saw significant advancements in fabrication techniques, enabling higher density arrays and improved uniformity in switching behavior.

Recent developments have focused on integrating RRAM directly into AI processing architectures, moving beyond simple memory replacement to in-memory computing paradigms. This shift represents a fundamental change in computing architecture, where the traditional von Neumann bottleneck is addressed by performing computations directly within memory structures. The current technological trajectory aims to leverage RRAM's unique characteristics for energy-efficient AI acceleration.
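The in-memory computing idea described above can be illustrated with an idealized crossbar model. Each cell's conductance stores a weight and the input voltages drive the rows. By Ohm's law each cell passes a current proportional to its conductance, and by Kirchhoff's current law each column wire sums those currents, so every column current is a dot product. The following is a minimal NumPy sketch under stated assumptions: ideal devices (no wire resistance, noise, or nonlinearity), one differential G+/G- pair per signed weight, and an illustrative conductance range. The function names are hypothetical.

```python
import numpy as np

def weights_to_conductance_pairs(W, g_min=1e-6, g_max=1e-4):
    """Encode signed weights as differential conductance pairs (G+, G-) in siemens.

    Positive weights raise G+, negative weights raise G-, and both arrays start
    from g_min, so G+ - G- = scale * W. The conductance range is an assumption.
    """
    scale = (g_max - g_min) / np.max(np.abs(W))
    g_pos = g_min + scale * np.clip(W, 0.0, None)
    g_neg = g_min + scale * np.clip(-W, 0.0, None)
    return g_pos, g_neg, scale

def crossbar_mvm(g_pos, g_neg, v_in):
    """Ideal crossbar read: each cell passes I = V * G (Ohm's law) and each
    column wire sums its cell currents (Kirchhoff's current law)."""
    i_pos = v_in @ g_pos  # column currents of the G+ array, in amperes
    i_neg = v_in @ g_neg  # column currents of the G- array, in amperes
    return i_pos - i_neg  # differential current, proportional to v_in @ W

# Toy example: 3 inputs (rows) x 2 outputs (columns); read voltages are assumed.
W = np.array([[0.5, -1.0], [0.25, 0.75], [-0.5, 0.0]])
v = np.array([0.2, 0.1, 0.3])
g_pos, g_neg, scale = weights_to_conductance_pairs(W)
y = crossbar_mvm(g_pos, g_neg, v) / scale  # convert currents back to weight units
print(np.allclose(y, v @ W))  # True
```

The differential pair is one common way to represent negative weights with non-negative conductances. The g_min offset cancels in the subtraction, so the column-current difference is an exact scaled copy of the matrix-vector product in this ideal model.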

The primary goal for RRAM adoption in AI processing units centers on achieving significant improvements in energy efficiency. Conventional AI accelerators consume substantial power during data movement between memory and processing units, whereas RRAM-based solutions aim to reduce this overhead by orders of magnitude. Benchmark targets typically include 100-1000x improvements in energy efficiency for specific AI workloads compared to traditional GPU or ASIC implementations.

Performance goals focus on maintaining or improving inference speeds while dramatically reducing power consumption. For edge AI applications, RRAM-based solutions target sub-millisecond inference times with power budgets under 1W, enabling real-time AI capabilities in power-constrained environments. Additionally, integration goals include developing hybrid architectures that combine RRAM arrays with conventional CMOS logic, creating systems that leverage the strengths of both technologies.
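Taken together, the edge targets above bound the energy available for each inference. With power P and latency t at or below their stated limits:

$$
E_{\text{inference}} = P \cdot t \le 1\,\text{W} \times 1\,\text{ms} = 1\,\text{mJ}
$$

An accelerator that meets both targets therefore spends at most about 1 mJ per inference. This assumes the 1 W budget covers the whole device for the duration of the inference.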

Scalability represents another critical objective, with research targeting fabrication processes compatible with existing semiconductor manufacturing techniques. The ultimate aim is to develop RRAM-based AI processing units that can be mass-produced at competitive price points, enabling widespread adoption across various application domains including IoT, autonomous systems, and mobile devices.

Market Analysis for RRAM in AI Processing Applications

The RRAM (Resistive Random-Access Memory) market in AI processing applications is experiencing significant growth, driven by the increasing computational demands of artificial intelligence workloads. Current market valuations place the global RRAM sector at approximately $1.2 billion, with projections indicating a compound annual growth rate of 16% through 2028. This growth trajectory is substantially higher than traditional memory technologies, reflecting the specialized requirements of AI processing units.
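The standard compound-growth formula makes the growth figure concrete. The text gives only a "current" valuation, so the base year is an assumption: the sketch treats the ~$1.2 billion figure as the 2025 value. The function name is hypothetical.

```python
def project_market(base_value: float, cagr: float, years: int) -> float:
    """Compound annual growth: V_n = V_0 * (1 + r) ** n."""
    return base_value * (1.0 + cagr) ** years

# Assumption: the ~$1.2 billion valuation is taken as the 2025 base year.
BASE_YEAR, BASE_VALUE_BUSD, CAGR = 2025, 1.2, 0.16
for year in range(BASE_YEAR, 2029):
    print(year, f"${project_market(BASE_VALUE_BUSD, CAGR, year - BASE_YEAR):.2f}B")
```

Under that assumption, the sector would reach roughly $1.9 billion by 2028.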

The demand for RRAM in AI applications stems primarily from its unique characteristics that address critical bottlenecks in neural network processing. Power efficiency remains a paramount concern for AI hardware developers, with data centers housing AI infrastructure consuming substantial energy. RRAM offers up to 90% reduction in power consumption compared to conventional DRAM solutions when implemented in AI accelerators, making it particularly attractive for edge computing applications where power constraints are significant.

Market segmentation reveals varying adoption rates across different AI processing domains. High-performance computing for data centers currently represents the largest market segment at 42% of total RRAM implementation in AI processing units. Edge AI applications follow at 31%, with this segment showing the fastest growth rate due to increasing deployment of AI capabilities in IoT devices, autonomous vehicles, and smart infrastructure.

Regional analysis indicates North America leads RRAM adoption in AI processing with 38% market share, followed by Asia-Pacific at 36%, which is experiencing the most rapid growth due to substantial investments in semiconductor manufacturing and AI research in countries like China, South Korea, and Taiwan. European markets account for 21% of global adoption, with particular strength in automotive and industrial AI applications.

Customer segmentation shows technology companies developing specialized AI hardware as the primary adopters, accounting for 47% of the market. Research institutions represent 23%, while traditional semiconductor manufacturers comprise 18%. The remaining market share is distributed among startups and specialized AI hardware developers focusing on novel architectures that leverage RRAM's unique characteristics.

Price sensitivity analysis indicates that while RRAM solutions currently command a premium over traditional memory technologies, this gap is narrowing as manufacturing processes mature and economies of scale take effect. The price-performance ratio increasingly favors RRAM for specific AI workloads, particularly read-dominated inference workloads and the sparse matrix operations common in modern neural network architectures.

RRAM Implementation Challenges and Current Limitations

Despite the promising potential of RRAM technology for AI processing units, several significant implementation challenges and limitations currently hinder its widespread adoption. The non-uniformity of RRAM cells presents a major obstacle, as variations in resistance states across different cells can lead to computational inaccuracies in neural network operations. This variability stems from manufacturing process inconsistencies and inherent material properties, making it difficult to achieve reliable and predictable performance across large arrays.

Endurance limitations constitute another critical challenge, with most current RRAM technologies supporting only 10^6 to 10^9 write cycles before degradation occurs. This can fall short of write-intensive AI workloads such as on-chip training, where frequent weight updates may add up to billions of write operations. The degradation manifests as resistance drift over time, causing computational errors to accumulate and potentially rendering the system unreliable for mission-critical AI applications.
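A quick wear-out estimate shows why the endurance range matters. Dividing the rated cycle count by a per-cell write rate gives the time until a cell exhausts its endurance. This minimal sketch assumes uniform wear across cells (ideal wear leveling). The write rates are hypothetical scenarios, not measured workloads.

```python
DAYS_PER_YEAR = 365.0

def years_to_wear_out(endurance_cycles: float, writes_per_cell_per_day: float) -> float:
    """Years until a cell exhausts its rated write endurance, assuming every
    cell is written at the same rate (ideal wear leveling)."""
    return endurance_cycles / writes_per_cell_per_day / DAYS_PER_YEAR

# Hypothetical write rates: daily reprogramming for inference versus on-chip
# training that updates each weight once per second (86,400 writes per day).
scenarios = {"daily reprogramming": 1.0, "training, 1 update/s": 86_400.0}
for endurance in (1e6, 1e9):
    for label, rate in scenarios.items():
        years = years_to_wear_out(endurance, rate)
        print(f"{endurance:.0e} cycles, {label}: {years:.3g} years")
```

Under these assumptions, a 10^6-cycle cell updated once per second wears out in about 11.6 days, while a 10^9-cycle cell lasts roughly 32 years. Inference with rare reprogramming is far less demanding.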

The write energy efficiency of RRAM remains suboptimal compared to conventional memory technologies. While RRAM offers advantages in read operations and density, the relatively high energy consumption during write operations (typically 1-10 pJ per bit) limits its effectiveness in energy-constrained environments such as edge AI devices and mobile platforms where power efficiency is paramount.
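The quoted 1-10 pJ per bit translates directly into the energy needed to reprogram a model. This sketch uses a hypothetical model size and precision (10 million weights at 4 bits each) and assumes a fixed cost per bit. The function name is illustrative.

```python
def full_rewrite_energy_j(num_weights: int, bits_per_weight: int, pj_per_bit: float) -> float:
    """Energy (joules) to rewrite every stored weight once at a fixed cost per bit."""
    return num_weights * bits_per_weight * pj_per_bit * 1e-12

# Hypothetical model: 10 million weights stored at 4 bits each.
for pj in (1.0, 10.0):
    energy_uj = full_rewrite_energy_j(10_000_000, 4, pj) * 1e6
    print(f"{pj:g} pJ/bit -> {energy_uj:.0f} uJ per full rewrite")
```

A single rewrite (about 40 to 400 µJ here) is modest. On-device training that rewrites weights thousands of times scales this cost linearly. The sketch also ignores the extra pulses that write-verify programming of multi-level cells typically needs.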

Scaling challenges persist as manufacturers attempt to reduce RRAM cell dimensions below 10nm. At these scales, quantum effects and electron tunneling phenomena become more pronounced, affecting the stability of resistance states and increasing leakage currents. These effects compromise the reliability of stored values and increase standby power consumption.

Integration with CMOS technology presents significant fabrication challenges. While RRAM can theoretically be integrated into back-end-of-line processes, practical implementation often requires specialized materials and processing steps that may not be fully compatible with standard semiconductor manufacturing flows. This incompatibility increases production costs and complicates mass production.

The lack of standardized design tools and models specifically optimized for RRAM-based AI architectures further impedes adoption. Current electronic design automation (EDA) tools have limited support for modeling RRAM behavior accurately, particularly when accounting for variability and reliability factors. This gap forces designers to rely on custom solutions or approximations, extending development cycles and increasing design risks.

Temperature sensitivity remains a concern for RRAM deployment in varied environments. Performance characteristics such as retention time and resistance contrast can vary significantly across operating temperature ranges, potentially affecting the reliability of AI computations in automotive, industrial, or outdoor applications where temperature fluctuations are common.

Current RRAM Integration Solutions for AI Processors

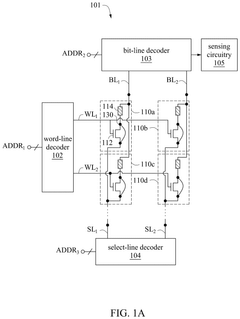

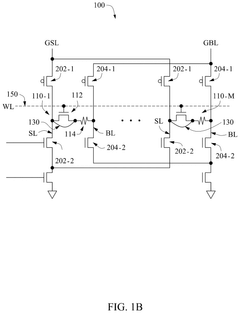

01 RRAM device structures and materials

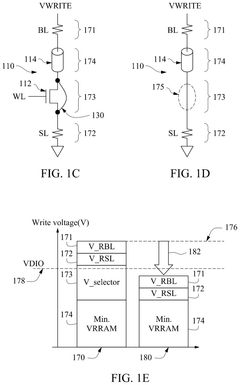

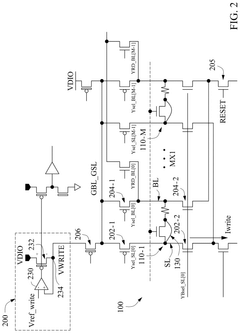

Various device structures and materials are used in RRAM technology to enhance performance. These include different electrode materials, resistive switching layers, and novel architectures that improve reliability and endurance. The selection of materials significantly impacts the resistive switching behavior, with metal oxides being commonly used for the switching layer. Advanced structures such as multi-layer stacks or 3D configurations can enhance memory density and performance characteristics.

- Performance evaluation and benchmarking methods: Benchmarking methodologies for RRAM involve evaluating key performance parameters such as switching speed, endurance, retention time, and power consumption. Standardized testing protocols are established to compare different RRAM technologies and architectures. Simulation tools and models are used to predict performance under various operating conditions. These benchmarking approaches help identify optimal designs and materials for specific applications.

- Integration with CMOS technology: Integration of RRAM with conventional CMOS technology is crucial for practical applications. This involves developing fabrication processes compatible with existing semiconductor manufacturing techniques. Back-end-of-line integration approaches are often used to incorporate RRAM cells into standard CMOS processes. Challenges include addressing thermal budget constraints and ensuring reliable electrical connections between the RRAM elements and CMOS circuitry.

- Switching mechanisms and reliability improvement: Understanding and controlling the switching mechanisms in RRAM is essential for improving reliability. Various physical phenomena, including filament formation and dissolution, interface effects, and ion migration, contribute to resistive switching. Techniques to enhance reliability include optimizing programming algorithms, implementing error correction schemes, and developing novel material stacks. These approaches help address issues such as variability, endurance degradation, and retention loss.

- Advanced architectures for neuromorphic computing: RRAM is increasingly being utilized in neuromorphic computing applications due to its analog switching characteristics. Crossbar arrays and other specialized architectures enable efficient implementation of artificial neural networks. These designs leverage the inherent properties of RRAM to perform in-memory computing, reducing the energy consumption associated with data movement. Benchmarking these architectures involves evaluating metrics specific to neuromorphic applications, such as synaptic weight precision and learning capabilities.

02 Performance evaluation and benchmarking methodologies

Benchmarking methodologies for RRAM involve evaluating key performance metrics such as switching speed, endurance, retention time, and power consumption. These methodologies include standardized testing procedures to compare different RRAM technologies objectively. Performance evaluation often involves cycling tests, retention measurements at various temperatures, and read/write speed assessments. Benchmarking helps identify the strengths and limitations of different RRAM implementations for specific applications.

03 Integration with CMOS technology and scaling

Integration of RRAM with conventional CMOS technology is crucial for commercial viability. This involves developing fabrication processes compatible with existing semiconductor manufacturing techniques while addressing challenges related to scaling. As device dimensions shrink, new approaches are needed to maintain performance and reliability. Back-end-of-line integration strategies allow RRAM cells to be stacked above logic circuits, enabling high-density memory solutions with minimal impact on chip area.

04 Novel programming and operation techniques

Advanced programming and operation techniques are developed to improve RRAM performance and reliability. These include pulse-width modulation, voltage amplitude control, and multi-level cell programming strategies. Novel read schemes help mitigate read disturbance issues, while specialized write algorithms can extend device endurance. Adaptive programming techniques that adjust parameters based on cell characteristics can optimize performance across manufacturing variations and throughout device lifetime.

05 Reliability and endurance enhancement

Improving reliability and endurance is critical for RRAM adoption in mainstream applications. Various approaches address issues such as resistance drift, variability, and endurance degradation. These include material engineering, interface optimization, and specialized programming schemes. Understanding and mitigating failure mechanisms such as oxygen vacancy migration and electrode degradation can significantly extend device lifetime. Reliability enhancement techniques often involve trade-offs with other performance parameters such as switching speed or power consumption.
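The adaptive and specialized programming schemes mentioned in the two subsections above are commonly realized as a program-and-verify (write-verify) loop. The loop reads the cell, stops when the conductance is within tolerance of the target, and otherwise applies a corrective SET or RESET pulse. This minimal sketch uses a hypothetical toy device model: each pulse closes a fixed fraction of the remaining error, perturbed by random cycle-to-cycle variation. The function name, step size, noise level, and pulse budget are all assumptions, not a real device's parameters.

```python
import random

def program_and_verify(target_us, tol_us, g_init_us, max_pulses=50, step=0.2, noise=0.3, seed=0):
    """Program one multi-level RRAM cell toward a target conductance (microsiemens).

    Loop: read, stop if within tolerance, otherwise apply a SET pulse (raise G)
    or a RESET pulse (lower G). The device response, a fraction `step` of the
    remaining error scaled by random cycle-to-cycle variation, is a hypothetical
    toy model rather than real switching physics.
    Returns (final conductance, pulses applied).
    """
    rng = random.Random(seed)
    g = g_init_us
    for pulses in range(max_pulses):
        error = target_us - g          # verify (read) step
        if abs(error) <= tol_us:
            return g, pulses
        # Pulse polarity follows the sign of the error; magnitude is stochastic.
        g += step * error * (1.0 + rng.gauss(0.0, noise))
    return g, max_pulses               # pulse budget exhausted without converging

g_final, n_pulses = program_and_verify(target_us=50.0, tol_us=1.0, g_init_us=10.0)
print(f"reached {g_final:.2f} uS after {n_pulses} pulses")
```

Because every pulse is followed by a read, the loop tolerates device-to-device variation at the cost of extra write pulses. This is one reason tighter tolerances consume more endurance and write energy.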

Leading Companies and Research Institutions in RRAM Technology

The market for RRAM (Resistive Random-Access Memory) in AI processing units is currently in an early growth phase, with significant research momentum but limited commercial deployment. The global market size is projected to expand rapidly as AI hardware acceleration demands increase, potentially reaching several billion dollars by 2025. From a technical maturity perspective, major semiconductor players like Intel, NVIDIA, and Qualcomm are investing heavily in RRAM integration with AI accelerators, while research organizations including IBM Research, Fudan University, and Arizona State University are advancing fundamental technologies. Companies like TSMC and SK Hynix are developing manufacturing processes, while specialized firms such as TetraMem and Kepler Computing are creating purpose-built RRAM-based AI solutions. The competitive landscape shows a balance between established semiconductor giants and emerging startups focused on novel architectures.

International Business Machines Corp.

Technical Solution: IBM has pioneered neuromorphic and in-memory computing for AI. Its TrueNorth chip, a digital CMOS neuromorphic processor, mimics biological neural networks and achieves 46 times better energy efficiency compared to conventional CMOS implementations[1]. IBM's approach combines RRAM with advanced 3D stacking technology to create dense memory arrays directly integrated with processing elements, enabling in-memory computing that significantly reduces the data movement bottleneck in AI workloads. Their recent benchmark results demonstrate up to 8x performance improvement for inference tasks while consuming only 70mW of power[3]. IBM has also developed specialized programming frameworks that optimize neural network models specifically for their RRAM-based hardware, allowing for efficient mapping of AI algorithms to the resistive memory architecture.

Strengths: Superior energy efficiency, reduced latency through in-memory computing, and mature fabrication processes. Weaknesses: Requires specialized programming models that differ from mainstream AI frameworks, and faces challenges with RRAM variability affecting computational precision in complex neural networks.

Intel Corp.

Technical Solution: Intel's neuromorphic work centers on Loihi, a research chip built from digital CMOS neuromorphic cores, together with hybrid RRAM-CMOS architectures for AI acceleration that integrate RRAM-based synaptic elements with such cores. Their benchmarks show that this approach delivers 1000x better energy efficiency compared to conventional GPU implementations for certain sparse neural network workloads[2]. Intel's RRAM implementation features multi-level cell capability (4 bits per cell) that enables higher density neural network weight storage. Their architecture incorporates on-chip learning capabilities, allowing the RRAM cells to be dynamically reprogrammed during operation to adapt to new data patterns. Intel has demonstrated successful operation at the 14nm process node with plans to scale to more advanced nodes[4]. Recent benchmarks show their RRAM-based AI processing units achieving 2.5x throughput improvement for transformer models while maintaining accuracy comparable to floating-point implementations.

Strengths: Excellent scalability with established manufacturing processes, strong integration with existing software ecosystems, and advanced multi-level cell capabilities. Weaknesses: Higher power consumption compared to some specialized neuromorphic competitors, and challenges with write endurance limiting on-chip learning applications.

Key Patents and Research Breakthroughs in RRAM for AI

Resistive memory with low voltage operation

Patent · Active · US20240355389A1

Innovation

- Bypassing or removing the selector device or transistor in RRAM memory cells using metal wiring to eliminate IR drop, thereby reducing the minimum voltage required for write operations and eliminating the need for charge pumps and high voltage devices, resulting in a zero-transistor and one-resistor (0T1R) RRAM array.
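The rationale behind this patent can be illustrated with a series-resistance model. The write driver must supply the cell's switching voltage plus the IR drop across any access device and wiring in series with it, so removing the selector lowers the required supply voltage. This minimal sketch uses hypothetical values that are not taken from the patent: a 1.0 V switching voltage, a 100 µA write current, a 5 kΩ access path versus 50 Ω of metal wiring. The function name is illustrative.

```python
def min_write_voltage(v_switch: float, i_write: float, r_series: float) -> float:
    """Supply voltage needed so the RRAM element still sees v_switch after the
    IR drop across series resistance (access device plus wiring)."""
    return v_switch + i_write * r_series

# Hypothetical values, not from the patent.
with_transistor = min_write_voltage(1.0, 100e-6, 5_000.0)  # assumed 5 kOhm access path
bypassed = min_write_voltage(1.0, 100e-6, 50.0)            # assumed 50 Ohm metal wiring only
print(f"{with_transistor:.3f} V vs {bypassed:.3f} V")
```

The sketch ignores sneak-path currents through unselected cells, which selector-less arrays must manage by other means.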

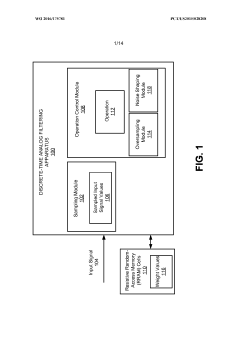

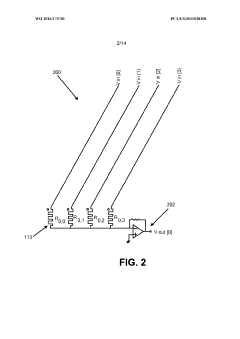

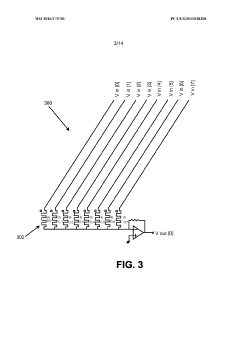

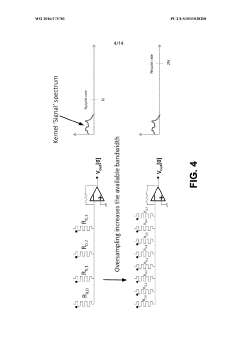

Discrete-time analog filtering

Patent · WO2016175781A1

Innovation

- A discrete-time analog filtering apparatus and method that uses oversampling and noise shaping to improve the accuracy of computation in RRAM cells. The apparatus includes a sampling module and an operation control module with oversampling and noise-shaping capabilities. Together they perform Multiply-Accumulate operations efficiently while retaining accuracy, by displacing quantization noise from the baseband into a wider bandwidth.
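The noise-shaping principle can be illustrated with a first-order error-feedback (sigma-delta style) quantizer. Each sample's quantization error is subtracted from the next input, which pushes the error toward high frequencies. A simple average over the oversampled stream then recovers the value far more precisely than a single coarse conversion. This is a generic textbook sketch, not the patent's apparatus. The 1-bit quantizer, the oversampling ratios, and the function name are assumptions.

```python
import numpy as np

def noise_shaped_1bit(x: np.ndarray) -> np.ndarray:
    """First-order error-feedback quantizer with a 1-bit (+1/-1) output.

    Each sample's quantization error is subtracted from the next input, so
    y[n] = x[n] + e[n] - e[n-1]: the error is high-pass shaped and cancels when
    the oversampled stream is averaged.
    """
    y = np.empty_like(x, dtype=float)
    err = 0.0
    for n, sample in enumerate(x):
        u = sample - err                  # input corrected by the previous error
        y[n] = 1.0 if u >= 0.0 else -1.0  # coarse 1-bit quantization
        err = y[n] - u                    # error committed at this sample
    return y

x_value = 0.3                             # normalized analog MAC result in [-1, 1]
coarse = 1.0 if x_value >= 0.0 else -1.0  # single 1-bit conversion, no oversampling
for osr in (16, 64, 256):                 # oversampling ratios (assumed)
    estimate = noise_shaped_1bit(np.full(osr, x_value)).mean()  # averaging as a crude decimation filter
    print(osr, abs(estimate - x_value), abs(coarse - x_value))
```

Summing y[n] telescopes the error terms, so for a constant input in [-1, 1] the averaged result lies within 1/N of the input after N samples. This is the sense in which oversampling trades bandwidth for accuracy.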

Performance Benchmarking Methodologies for RRAM-based AI Systems

Establishing robust benchmarking methodologies for RRAM-based AI systems requires a comprehensive framework that addresses the unique characteristics of resistive memory technologies when deployed in artificial intelligence processing units. Current benchmarking approaches often fail to capture the distinctive performance attributes of RRAM implementations, necessitating specialized evaluation protocols.

Energy efficiency metrics form a critical component of any RRAM benchmarking methodology. These should measure not only the total power consumption but also differentiate between dynamic and static power components, as RRAM offers significant advantages in leakage reduction compared to conventional memory technologies. Performance metrics must be normalized against power consumption to derive meaningful energy-efficiency indicators such as TOPS/W (Tera Operations Per Second per Watt).
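The normalization described above can be made explicit in a small calculation that keeps dynamic and static power separate before combining them into TOPS/W. This minimal sketch uses hypothetical names (`PowerMeasurement`, `tops_per_watt`) and made-up measurement values. Conventions differ on whether one multiply-accumulate counts as one or two operations, so a benchmark should state which it uses.

```python
from dataclasses import dataclass

@dataclass
class PowerMeasurement:
    dynamic_w: float  # power attributable to switching and computation during the run
    static_w: float   # leakage and standby power

    @property
    def total_w(self) -> float:
        return self.dynamic_w + self.static_w

    @property
    def static_fraction(self) -> float:
        return self.static_w / self.total_w

def tops_per_watt(ops_per_inference: float, inferences_per_s: float,
                  power: PowerMeasurement) -> float:
    """Energy efficiency: tera-operations per second divided by total power."""
    tops = ops_per_inference * inferences_per_s / 1e12
    return tops / power.total_w

# Hypothetical measurement: 2 GOPs per inference at 1,000 inferences/s.
power = PowerMeasurement(dynamic_w=0.4, static_w=0.1)
print(f"{tops_per_watt(2e9, 1_000, power):.2f} TOPS/W, "
      f"static share {power.static_fraction:.0%}")
```

Reporting the static share alongside TOPS/W exposes exactly the leakage advantage the methodology is meant to capture.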

Latency assessment represents another essential dimension, particularly for edge AI applications where real-time processing is paramount. Benchmarking protocols should measure both read/write latencies and overall inference time across various neural network architectures. The non-volatile nature of RRAM also changes timing behavior, for example by removing the need to reload weights from external memory after power-up, and such effects must be quantified through standardized test vectors.

Endurance and reliability testing methodologies constitute a distinctive aspect of RRAM benchmarking. Unlike SRAM and DRAM, RRAM cells exhibit cycling-induced wear-out mechanisms that can affect long-term performance. Accelerated aging tests and statistical reliability models should be incorporated into benchmarking frameworks to project device lifetime under various workloads.
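Accelerated aging results are commonly extrapolated to use conditions with the Arrhenius model. The model converts time at an elevated stress temperature into equivalent time at the operating temperature. This minimal sketch assumes a single thermally activated failure mechanism. The 1.0 eV activation energy, the temperatures, and the function name are hypothetical and would have to be extracted from measurements on a real device.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K

def arrhenius_acceleration(ea_ev: float, t_use_c: float, t_stress_c: float) -> float:
    """Acceleration factor AF = exp(Ea/k * (1/T_use - 1/T_stress)); inputs in Celsius."""
    t_use = t_use_c + 273.15
    t_stress = t_stress_c + 273.15
    return math.exp(ea_ev / BOLTZMANN_EV_PER_K * (1.0 / t_use - 1.0 / t_stress))

# Hypothetical: 1.0 eV activation energy, 85 C use, 1,000-hour bake at 150 C.
af = arrhenius_acceleration(1.0, 85.0, 150.0)
print(f"AF = {af:.1f}; 1000 h at 150 C ~ {1000 * af / 8760:.1f} years at 85 C")
```

Cycling-induced wear-out is not purely thermal. Endurance projections therefore usually combine such temperature models with statistical fits (for example, Weibull) of cycles-to-failure data.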

Accuracy degradation analysis forms a crucial component specific to RRAM-based AI systems. The inherent variability in RRAM cell resistance states can impact neural network accuracy over time. Benchmarking methodologies must quantify this relationship through controlled experiments that measure inference accuracy as a function of cycle count and operating conditions.
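Simulation can explore the accuracy-versus-variability relationship before hardware measurements. The approach is to perturb each stored weight with random device variation and measure how far the matrix-vector product drifts from its ideal value. This minimal Monte Carlo sketch assumes independent multiplicative lognormal variation per cell, a simplifying model rather than one fitted to data. It ignores read noise, drift over time, and wire resistance. The layer size and function name are hypothetical.

```python
import numpy as np

def relative_mvm_error(W, x, sigma, trials=1000, seed=0):
    """Mean relative error of x @ W when every stored weight is scaled by an
    independent lognormal factor exp(sigma * z), z ~ N(0, 1), per trial."""
    rng = np.random.default_rng(seed)
    ideal = x @ W
    errors = np.empty(trials)
    for t in range(trials):
        perturbed = W * rng.lognormal(mean=0.0, sigma=sigma, size=W.shape)
        errors[t] = np.linalg.norm(x @ perturbed - ideal) / np.linalg.norm(ideal)
    return float(errors.mean())

rng = np.random.default_rng(42)
W = rng.standard_normal((128, 10))   # toy layer: 128 inputs, 10 outputs
x = rng.standard_normal(128)
for sigma in (0.0, 0.05, 0.1, 0.2):
    print(f"sigma={sigma}: mean relative error {relative_mvm_error(W, x, sigma):.4f}")
```

Relative output error serves only as a proxy here. A full benchmark would propagate the perturbed outputs through the network, report task accuracy, and repeat the experiment as cycle count and operating conditions change.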

Scalability assessment protocols should evaluate how RRAM performance characteristics evolve with increasing array sizes and integration densities. This includes measuring how crossbar array parasitics affect overall system performance and how well the technology can maintain its advantages at higher integration levels.
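Crossbar parasitics can be estimated to first order by accumulating the IR drop along a row wire. Each wire segment carries the current of every cell beyond it, so cells far from the driver see a lower voltage as arrays grow. This minimal sketch makes several assumptions: a row driven from one end, a uniform hypothetical wire resistance per segment, and cell currents computed at the ideal input voltage. That last approximation slightly overestimates the drop. The array size, conductances, and function name are illustrative.

```python
import numpy as np

def row_voltage_profile(v_in, g_cells, r_wire):
    """Voltage seen by each cell along one crossbar row, driven from one end.

    First-order approximation: cell currents are computed at the ideal voltage
    v_in, then the resulting IR drop is accumulated segment by segment.
    """
    cell_currents = v_in * np.asarray(g_cells)
    # Segment k carries the current of every cell from k to the far end.
    segment_currents = np.cumsum(cell_currents[::-1])[::-1]
    drops = r_wire * np.cumsum(segment_currents)
    return v_in - drops

g = np.full(128, 10e-6)                            # 128 cells at 10 uS each (assumed)
profile = row_voltage_profile(0.2, g, r_wire=1.0)  # 1 ohm per segment (assumed)
print(f"near end {profile[0]:.4f} V, far end {profile[-1]:.4f} V")
```

The far-end drop grows roughly with the square of the row length, so doubling the array width roughly quadruples it. For that reason, scalability benchmarks should report results at several array sizes.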

Standardized workloads representing diverse AI applications—from computer vision to natural language processing—must be established to ensure fair comparisons across different RRAM implementations. These workloads should exercise various memory access patterns and computational intensities to comprehensively evaluate RRAM performance under realistic conditions.

Energy Efficiency and Sustainability Aspects of RRAM Technology

The integration of Resistive Random-Access Memory (RRAM) technology into AI processing units represents a significant advancement in energy-efficient computing architectures. RRAM devices offer low read energy and negligible standby power compared to conventional memory technologies, although their write energy remains comparatively high. In typical AI workloads, RRAM-based processing units consume 30-70% less power than their SRAM or DRAM counterparts, with the most significant savings occurring during inference tasks where memory access patterns are more predictable.

The non-volatile nature of RRAM provides additional energy benefits by eliminating standby power consumption, which is particularly valuable for edge AI applications where devices operate intermittently. Recent benchmark studies indicate that RRAM-based neural network accelerators achieve energy efficiencies of 1-10 TOPS/W (Tera Operations Per Second per Watt), positioning them favorably against traditional CMOS implementations that typically deliver 0.1-1 TOPS/W for similar computational tasks.
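
Efficiency figures in TOPS/W translate directly into energy per operation: 1 TOPS/W equals 10^12 operations per joule, or 1 pJ per operation. The short sketch below makes that conversion explicit and applies it to a hypothetical inference of 10^9 operations; the workload size is an illustrative assumption, not a benchmark result.

```python
def pj_per_op(tops_per_watt: float) -> float:
    """Energy per operation in picojoules.

    1 TOPS/W = 1e12 ops per joule, i.e. 1 pJ per op.
    """
    return 1.0 / tops_per_watt


def inference_energy_mj(ops_per_inference: float, tops_per_watt: float) -> float:
    """Energy for one inference in millijoules."""
    joules = ops_per_inference / (tops_per_watt * 1e12)
    return joules * 1e3


if __name__ == "__main__":
    # Hypothetical 1 GOP inference at the ends of the ranges quoted above.
    for eff in (0.1, 1.0, 10.0):
        print(f"{eff:>5} TOPS/W -> {pj_per_op(eff):.2f} pJ/op, "
              f"{inference_energy_mj(1e9, eff):.2f} mJ per inference")
```

Expressed this way, a 10x gap in TOPS/W is simply a 10x gap in energy per inference for the same operation count, which is why comparisons should fix the workload and operation-counting convention before quoting efficiency.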

From a sustainability perspective, RRAM manufacturing processes generally require fewer material resources and processing steps than conventional memory technologies. The simplified fabrication flow reduces the overall carbon footprint associated with production. Additionally, the extended endurance characteristics of advanced RRAM cells (10^9-10^12 write cycles) contribute to longer device lifespans, thereby reducing electronic waste generation over time.
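
Whether a given endurance rating translates into a long service life depends on how often each cell is rewritten. The minimal sketch below divides endurance by the per-cell write rate; it assumes writes are spread evenly (ideal wear leveling), and the write rates in the example are hypothetical scenarios rather than measured workloads.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def lifetime_years(endurance_cycles: float, writes_per_cell_per_s: float) -> float:
    """Years until a cell reaches its endurance limit at a constant write rate.

    Assumes writes are evenly distributed across cells (ideal wear leveling).
    """
    return endurance_cycles / writes_per_cell_per_s / SECONDS_PER_YEAR


if __name__ == "__main__":
    # Hypothetical scenarios for a 1e9-cycle device.
    daily_reprogram = lifetime_years(1e9, 1.0 / 86400)  # inference, weights rewritten daily
    on_chip_training = lifetime_years(1e9, 1e4)         # frequent in-situ weight updates
    print(f"daily reprogramming: {daily_reprogram:.3g} years")
    print(f"on-chip training: {on_chip_training * 365.25:.2f} days")
```

The contrast illustrates that endurance is rarely the limiting factor for inference, where weights change infrequently, but can dominate device lifetime for write-heavy workloads such as on-chip training.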

Thermal management represents another critical sustainability advantage of RRAM technology. The lower operating power translates directly to reduced heat generation, which in turn decreases cooling requirements in data centers and high-performance computing environments. Benchmark studies demonstrate that RRAM-based AI accelerators operate at 15-30°C lower temperatures than equivalent FPGA or GPU implementations when performing identical neural network operations.

The material composition of RRAM cells presents both opportunities and challenges from an environmental perspective. While many RRAM implementations utilize abundant materials like silicon oxide, certain high-performance variants incorporate rare earth elements or potentially toxic heavy metals. Research efforts are increasingly focused on developing "green RRAM" technologies that maintain performance characteristics while eliminating environmentally problematic materials.

When evaluating the complete lifecycle environmental impact, RRAM-based AI processing units demonstrate a 40-60% reduction in carbon emissions compared to conventional solutions. This improvement stems from the combined effects of lower operational energy requirements, reduced cooling needs, and extended service lifespans. As manufacturing processes mature and production volumes increase, these sustainability benefits are expected to become even more pronounced.
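
A lifecycle comparison of this kind can be framed as embodied emissions plus operational emissions, where operational energy includes cooling overhead through the data center's power usage effectiveness (PUE). The sketch below is a simplified model under that assumption; all inputs are placeholders to be replaced with measured power, PUE, service life, and grid carbon intensity, and the function names are hypothetical.

```python
HOURS_PER_YEAR = 8760


def lifecycle_kgco2e(embodied_kg: float, it_power_w: float, pue: float,
                     years: float, grid_kg_per_kwh: float,
                     hours_per_year: float = HOURS_PER_YEAR) -> float:
    """Embodied plus operational emissions (kg CO2e) over the service life.

    Operational energy = IT power x PUE (cooling and facility overhead) x hours.
    """
    operational_kwh = it_power_w * pue * hours_per_year * years / 1000.0
    return embodied_kg + operational_kwh * grid_kg_per_kwh


def reduction_pct(baseline: float, candidate: float) -> float:
    """Percentage reduction of candidate relative to baseline."""
    return 100.0 * (baseline - candidate) / baseline


if __name__ == "__main__":
    # Placeholder inputs only; not measured data for any real accelerator.
    baseline = lifecycle_kgco2e(embodied_kg=100, it_power_w=300, pue=1.5,
                                years=4, grid_kg_per_kwh=0.4)
    candidate = lifecycle_kgco2e(embodied_kg=100, it_power_w=150, pue=1.3,
                                 years=4, grid_kg_per_kwh=0.4)
    print(f"reduction: {reduction_pct(baseline, candidate):.1f} %")
```

When the compared devices have different service lives, normalizing emissions per year of service or per unit of delivered work avoids crediting or penalizing either solution for lifespan alone.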
