Benchmarking Logical Qubit Fidelity: Metrics And Test Protocols

SEP 2, 2025 | 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

Quantum Computing Background and Benchmarking Goals

Quantum computing represents a paradigm shift in computational capabilities, leveraging quantum mechanical phenomena such as superposition and entanglement to process information. Since its theoretical conception in the 1980s, quantum computing has evolved from abstract mathematical models to physical implementations using qubit technologies such as superconducting circuits, trapped ions, and photonics, while topological qubits remain at an earlier experimental stage. Milestones along the way include Shor's factoring algorithm (1994) and Google's 2019 claim of quantum supremacy.

The fundamental unit of quantum computing, the qubit, faces significant challenges related to decoherence and error rates. Current quantum systems operate in the NISQ (Noisy Intermediate-Scale Quantum) era, characterized by limited qubit counts and high error rates. The transition from physical qubits to logical qubits through quantum error correction represents a critical path toward fault-tolerant quantum computing.

Benchmarking logical qubit fidelity has emerged as a crucial aspect of quantum computing development. As quantum systems scale beyond tens of qubits, standardized metrics and protocols become essential for meaningful comparison between different quantum computing platforms and architectures. The goal of quantum benchmarking is to establish objective, reproducible measures of quantum system performance that accurately reflect their computational capabilities.

Current benchmarking efforts focus on several key objectives: establishing standardized metrics for logical qubit fidelity, developing test protocols that can be implemented across different quantum computing architectures, creating benchmarks that correlate with practical quantum algorithm performance, and defining progress milestones toward fault-tolerant quantum computing.

The quantum computing industry has recognized the need for benchmarks beyond simple qubit counts, as raw qubit numbers often fail to reflect actual computational power. Metrics such as quantum volume, circuit layer operations per second (CLOPS), and quantum error correction thresholds have been proposed, but a comprehensive framework specifically for logical qubit fidelity remains underdeveloped.
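Quantum volume, the first metric named above, can be illustrated with a minimal Python sketch of its heavy-output test. The sketch assumes the ideal output probabilities of each random square model circuit (width equal to depth, n) have already been computed by classical simulation, and it uses a simplified pass rule (mean heavy-output probability minus two standard errors must exceed 2/3). The function names are hypothetical, and the published protocol's statistical treatment is more involved.

```python
from math import sqrt
from statistics import fmean, median, stdev


def heavy_outputs(ideal_probs: dict[str, float]) -> set[str]:
    """Bitstrings whose ideal probability lies above the median of the ideal distribution."""
    cutoff = median(ideal_probs.values())
    return {bits for bits, p in ideal_probs.items() if p > cutoff}


def heavy_output_probability(ideal_probs: dict[str, float], counts: dict[str, int]) -> float:
    """Fraction of measured shots that landed on heavy outputs for one model circuit."""
    heavy = heavy_outputs(ideal_probs)
    total = sum(counts.values())
    return sum(c for bits, c in counts.items() if bits in heavy) / total


def passes_width(hops: list[float]) -> bool:
    """Simplified pass rule (assumption): mean HOP minus two standard errors must exceed 2/3."""
    stderr = stdev(hops) / sqrt(len(hops)) if len(hops) > 1 else 0.0
    return fmean(hops) - 2 * stderr > 2 / 3


def quantum_volume(hops_by_width: dict[int, list[float]]) -> int:
    """QV = 2**n for the largest square-circuit width n that passes (1 if none pass)."""
    passing = [n for n, hops in hops_by_width.items() if passes_width(hops)]
    return 2 ** max(passing) if passing else 1


if __name__ == "__main__":
    ideal = {"00": 0.4, "01": 0.3, "10": 0.2, "11": 0.1}
    measured = {"00": 40, "01": 30, "10": 20, "11": 10}
    print(heavy_output_probability(ideal, measured))
    print(quantum_volume({2: [0.80] * 10, 3: [0.75] * 10, 4: [0.60] * 10}))
```

An ideal device lands on heavy outputs well over half the time, while a fully depolarized device lands on them only about half the time, which is why a fixed threshold such as 2/3 can separate useful execution from noise.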

The technical trajectory aims to establish benchmarking protocols that can effectively measure the performance of error-corrected logical qubits, predict the scaling behavior of quantum systems, and provide actionable insights for hardware and algorithm developers. These benchmarks must balance theoretical rigor with practical implementability across diverse quantum computing platforms.

As quantum computing advances toward practical applications in fields such as cryptography, materials science, and optimization problems, reliable benchmarking becomes increasingly critical for both technology developers and potential users to make informed decisions about quantum computing investments and applications.

Market Demand for Reliable Quantum Computing Metrics

The quantum computing market is growing rapidly, with some market projections indicating a compound annual growth rate of 25.4% from 2023 to 2030. This expansion is driving an urgent demand for standardized metrics to evaluate quantum computer performance, particularly regarding logical qubit fidelity. As quantum systems transition from research laboratories to commercial applications, stakeholders across industries require reliable benchmarking protocols to assess quantum computing capabilities.

Financial institutions represent a significant market segment seeking dependable quantum computing metrics. These organizations are exploring quantum algorithms for portfolio optimization, risk assessment, and fraud detection, where computational accuracy directly impacts financial outcomes. The ability to quantify logical qubit fidelity becomes essential for these high-stakes applications, with market research indicating that 78% of financial services companies investing in quantum computing cite reliability metrics as a primary concern.

Pharmaceutical companies constitute another major market driver, with quantum computing promising to revolutionize drug discovery processes. Industry analysts report that reducing development timelines by even 10% through quantum computing could save pharmaceutical companies billions in research costs. However, these applications demand high computational precision, creating market pressure for standardized fidelity benchmarks that can validate quantum system performance for molecular simulations.

Government and defense sectors are increasingly investing in quantum technologies, with national security applications requiring verifiable performance metrics. Public procurement contracts now frequently specify minimum fidelity requirements, creating market incentives for quantum hardware providers to demonstrate compliance with standardized benchmarking protocols.

The enterprise software market is simultaneously evolving to incorporate quantum computing capabilities, with major cloud service providers expanding their quantum offerings. This has created a competitive landscape where providers differentiate themselves based on qubit quality and system reliability, further driving demand for transparent benchmarking metrics.

Venture capital investment in quantum computing startups reached record levels in 2022, with investors increasingly requiring technical due diligence that includes logical qubit performance data. This financial trend reinforces market demand for standardized fidelity metrics that can be used to compare different quantum computing approaches and technologies.

Industry consortia have formed specifically to address the benchmarking challenge, with membership growing by 35% annually, reflecting the market's recognition that reliable metrics are essential for commercial adoption. These collaborative efforts signal that establishing trusted benchmarking protocols for logical qubit fidelity has become a market imperative rather than merely a technical consideration.

Current State and Challenges in Logical Qubit Fidelity Assessment

The assessment of logical qubit fidelity represents one of the most critical challenges in quantum computing today. Globally, research institutions and quantum technology companies are striving to develop reliable metrics and standardized protocols for measuring and benchmarking logical qubit performance. Currently, the field lacks consensus on universal benchmarking methodologies, creating significant obstacles for comparing results across different quantum computing platforms and architectures.

The state of the art in logical qubit fidelity assessment relies primarily on randomized benchmarking protocols and quantum process tomography. These methods, while valuable for physical qubits, face substantial limitations when applied to logical qubits encoded with error correction codes. For process tomography in particular, the number of parameters to estimate grows exponentially with the number of qubits (16^n - 4^n for a trace-preserving process on n qubits), so characterizing a logical qubit through its constituent physical qubits quickly becomes computationally intensive and impractical beyond a small scale.
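The exponential cost of full process tomography follows from simple counting. A trace-preserving process on n qubits (dimension d = 2^n) has d^4 - d^2 = 16^n - 4^n free real parameters, and a common informationally complete scheme, assumed here, prepares four input states per qubit and measures in three Pauli bases per qubit, giving 12^n configurations. The sketch below tabulates both; it counts configurations only, not the shots each one needs, and the function name is hypothetical.

```python
def process_tomography_cost(n_qubits: int) -> tuple[int, int]:
    """Return (free real parameters, measurement configurations) for n-qubit process tomography.

    Parameters: a trace-preserving map on dimension d = 2**n has d**4 - d**2 real parameters.
    Configurations: assumes 4 input states and 3 Pauli measurement bases per qubit.
    """
    d = 2 ** n_qubits
    free_parameters = d**4 - d**2
    configurations = (4 * 3) ** n_qubits
    return free_parameters, configurations


if __name__ == "__main__":
    # 17 qubits is the size of one distance-3 rotated surface-code patch (9 data + 8 measure).
    for n in (1, 2, 5, 17):
        params, configs = process_tomography_cost(n)
        print(f"{n:>2} qubits: {params:.3e} parameters, {configs:.3e} configurations")
```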

A major technical challenge lies in isolating and quantifying the various error sources that affect logical qubit fidelity. These include coherent errors, decoherence effects, gate imperfections, measurement errors, and cross-talk between qubits. The interplay between these error mechanisms creates a complex landscape that current assessment tools struggle to characterize comprehensively.

Geographic distribution of research in this domain shows concentration in North America, Europe, and parts of Asia, particularly in quantum computing hubs like California, Massachusetts, Zurich, Beijing, and Tokyo. However, the approaches and metrics used vary significantly across these regions, further complicating the establishment of universal standards.

Another significant challenge is the gap between theoretical error correction thresholds and practically achievable logical qubit fidelities. Theory suggests that once physical error rates fall below a code-dependent threshold, quantum error correction can suppress logical errors arbitrarily by increasing the code distance, but experimental implementations often fall short of these predictions because of practical constraints and error mechanisms that idealized noise models do not capture.
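The threshold behavior can be made concrete with the heuristic scaling law often used for the surface code, p_L ≈ A (p/p_th)^((d+1)/2), where p is the physical error rate, d the code distance, and p_th the threshold. The sketch below assumes an illustrative threshold of 1% and prefactor A = 0.1; these are assumptions, not measured values, and the function name is hypothetical. It shows logical errors shrinking with distance below threshold and growing with distance above it.

```python
def logical_error_rate(p: float, d: int, p_th: float = 0.01, prefactor: float = 0.1) -> float:
    """Heuristic surface-code scaling p_L ~ A * (p / p_th) ** ((d + 1) / 2).

    p_th (threshold) and prefactor A are illustrative assumptions, not measured values.
    """
    if d < 3 or d % 2 == 0:
        raise ValueError("code distance must be an odd integer >= 3")
    return prefactor * (p / p_th) ** ((d + 1) / 2)


if __name__ == "__main__":
    # Two physical error rates below the assumed 1% threshold and one above it.
    for p in (0.001, 0.005, 0.02):
        rates = ", ".join(f"d={d}: {logical_error_rate(p, d):.1e}" for d in (3, 5, 7))
        print(f"p={p}: {rates}")
```

In this model, each increase of d by 2 divides p_L by roughly Λ = p_th/p; one way the experimental shortfall can appear is as a measured suppression factor smaller than the model predicts.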

The time and resource requirements for comprehensive fidelity assessment present additional obstacles. Current protocols often require long gate sequences and extensive measurement time: qubit states decay over long sequences, and device parameters can drift over long acquisitions. This creates a fundamental tension between assessment accuracy and efficiency that remains unresolved.
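The accuracy-versus-time tension can be quantified with basic sampling statistics. If each shot is treated as an independent pass/fail trial with success probability F (an assumption that ignores drift between shots), the standard error after N shots is sqrt(F(1 - F)/N), so the shot count grows as the inverse square of the target precision. The helper below is a hypothetical sketch of that calculation.

```python
import math


def shots_for_precision(fidelity: float, target_stderr: float) -> int:
    """Shots N such that the binomial standard error sqrt(F(1-F)/N) is at most target_stderr.

    Assumes independent, identically distributed pass/fail shots (no drift between shots).
    """
    if not 0.0 <= fidelity <= 1.0 or target_stderr <= 0.0:
        raise ValueError("fidelity must be in [0, 1] and target_stderr must be positive")
    return math.ceil(fidelity * (1.0 - fidelity) / target_stderr**2)


if __name__ == "__main__":
    # Each halving of the target standard error roughly quadruples the required shots.
    for eps in (1e-2, 5e-3, 2.5e-3):
        print(eps, shots_for_precision(0.99, eps))
```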

Standardization efforts are underway through organizations like IEEE and ISO, but progress has been slow due to the rapidly evolving nature of quantum technologies and competing commercial interests. The lack of agreed-upon benchmarks hampers meaningful comparison between different quantum computing approaches and potentially slows overall progress in the field.

Existing Logical Qubit Fidelity Test Protocols

01 Quantum Error Correction for Logical Qubits

Quantum error correction techniques are essential for maintaining the fidelity of logical qubits. These methods encode quantum information across multiple physical qubits to protect it against decoherence and operational errors. Error correction codes such as surface codes and other topological codes can significantly improve logical qubit fidelity by detecting and correcting errors through stabilizer measurements that do not reveal, and therefore do not collapse, the encoded logical state, thereby enhancing the overall reliability of quantum computations.

- Quantum error correction techniques for logical qubits: Various error correction techniques are employed to improve the fidelity of logical qubits. These methods include surface code implementations, stabilizer measurements, and fault-tolerant protocols that detect and correct errors in quantum systems. By implementing these techniques, logical qubit fidelity can be significantly improved, allowing for more reliable quantum computations and benchmarking.

- Benchmarking methodologies for quantum systems: Specific methodologies have been developed to benchmark the fidelity of logical qubits. These include randomized benchmarking protocols, process tomography, and cross-entropy benchmarking that quantify the performance of quantum gates and operations. These benchmarking techniques provide standardized metrics for evaluating quantum system performance and comparing different quantum computing implementations.

- Hardware architectures for high-fidelity logical qubits: Specialized hardware architectures are designed to support high-fidelity logical qubits. These include superconducting circuits, ion trap systems, and topological quantum computing platforms that minimize decoherence and environmental noise. The hardware implementations focus on scalability while maintaining high fidelity operations, which is crucial for practical quantum computing applications.

- Quantum control systems for fidelity enhancement: Advanced control systems are implemented to enhance logical qubit fidelity. These include pulse shaping techniques, dynamic decoupling protocols, and feedback control mechanisms that actively mitigate errors during quantum operations. By precisely controlling the quantum system parameters, these methods reduce noise and improve the overall fidelity of logical qubit operations.

- Simulation and verification frameworks for logical qubit fidelity: Comprehensive simulation and verification frameworks are developed to assess and predict logical qubit fidelity. These include numerical modeling tools, error propagation analysis, and statistical verification methods that help in understanding the performance limitations of quantum systems. These frameworks enable researchers to optimize quantum circuits and protocols before physical implementation, accelerating the development of high-fidelity quantum computing systems.
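The simulation frameworks in the last item can be illustrated at toy scale. The sketch below estimates the logical error rate of a distance-d repetition code under independent bit-flip noise, decoded by majority vote with noiseless readout assumed, and checks the Monte Carlo estimate against the exact binomial formula. The repetition code protects only against bit flips, so this is a teaching model rather than a surface-code simulator, and the function names are hypothetical.

```python
import math
import random


def analytic_logical_error(p: float, d: int) -> float:
    """Probability that majority vote fails, i.e. more than d // 2 of the d bits flip."""
    if d < 1 or d % 2 == 0:
        raise ValueError("use an odd number of bits so majority vote has no ties")
    return sum(math.comb(d, k) * p**k * (1 - p) ** (d - k) for k in range(d // 2 + 1, d + 1))


def simulated_logical_error(p: float, d: int, trials: int, seed: int = 0) -> float:
    """Monte Carlo estimate of the same quantity with independent bit flips."""
    if d < 1 or d % 2 == 0:
        raise ValueError("use an odd number of bits so majority vote has no ties")
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(d))
        if flips > d // 2:  # majority vote decodes to the wrong logical value
            failures += 1
    return failures / trials


if __name__ == "__main__":
    for d in (1, 3, 5, 7):
        print(d, analytic_logical_error(0.1, d), simulated_logical_error(0.1, d, 20_000))
```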

02 Benchmarking Protocols for Logical Qubit Fidelity

Specialized benchmarking protocols are used to assess the fidelity of logical qubits in quantum computing systems. These protocols include randomized benchmarking, quantum process tomography, and gate set tomography, which provide quantitative measures of quantum gate performance and logical qubit fidelity. By systematically applying sequences of quantum operations and measuring the resulting states, these benchmarking methods can identify specific error sources and characterize overall system performance.

03 Hardware-Software Co-design for Fidelity Optimization

Hardware-software co-design approaches optimize logical qubit fidelity by simultaneously addressing physical hardware limitations and developing tailored software algorithms. This includes adaptive control techniques that dynamically adjust quantum operations based on real-time feedback, optimized pulse sequences that minimize gate errors, and hardware-aware compilation strategies that map logical operations to physical qubits in ways that maximize fidelity and minimize crosstalk errors.

04 Scalable Architecture for High-Fidelity Logical Qubits

Scalable quantum computing architectures are designed to maintain high fidelity of logical qubits as system size increases. These architectures incorporate modular designs with efficient qubit connectivity, optimized control systems that minimize crosstalk, and integrated error detection circuits. Advanced fabrication techniques and materials engineering are employed to reduce environmental noise and improve coherence times, which directly impacts logical qubit fidelity in larger systems.

05 Machine Learning for Fidelity Enhancement

Machine learning techniques are increasingly applied to enhance logical qubit fidelity in quantum computing systems. These approaches use neural networks and other AI algorithms to optimize quantum control parameters, predict and mitigate error patterns, and automate the calibration of quantum gates. By analyzing large datasets of qubit performance metrics, machine learning models can identify subtle correlations between control parameters and fidelity outcomes, leading to improved benchmarking protocols and higher-fidelity quantum operations.

Key Players in Quantum Computing Benchmarking Ecosystem

The quantum logical qubit fidelity benchmarking landscape is currently in an early growth phase, with the market expanding rapidly as quantum computing transitions from research to practical applications. Major technology companies including IBM, Google, and Microsoft are leading development alongside specialized quantum firms like IonQ and Origin Quantum. The competitive environment features both established tech giants investing heavily in quantum infrastructure and startups focusing on specific quantum technologies. Technical maturity varies significantly across platforms, with superconducting qubits (IBM, Google) and trapped ion systems (IonQ) showing promising fidelity metrics. Chinese entities including Alibaba, Baidu, and Origin Quantum are increasingly competitive, developing proprietary benchmarking protocols and hardware solutions to establish quantum advantage in specific computational domains.

Origin Quantum Computing Technology (Hefei) Co., Ltd.

Technical Solution: Origin Quantum has developed a comprehensive suite of benchmarking tools designed for evaluating logical qubit fidelity in superconducting quantum processors. Their approach includes a layered benchmarking methodology that separates physical qubit performance from logical qubit operations, allowing more precise identification of error sources. Origin's test protocols incorporate randomized benchmarking techniques adapted for the Chinese quantum computing ecosystem, with modifications that address the specific characteristics of their hardware platform. They have implemented specialized metrics for measuring the performance of error correction codes, particularly surface codes and small demonstration codes such as the [[5,1,3]] code. Origin Quantum's benchmarking framework includes automated test sequences that can be deployed across different quantum processors to provide standardized comparisons. Their recent work has focused on benchmarks that can predict how logical qubit fidelity scales as system sizes increase, providing insights for the development of larger quantum processors with improved error correction capabilities.
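In the [[n,k,d]] notation, the [[5,1,3]] code encodes k = 1 logical qubit into n = 5 physical qubits with distance d = 3, so it can correct any single-qubit error. As a minimal sketch of the kind of consistency check a benchmarking framework might run before measuring such a code (not Origin Quantum's actual tooling), the Python below takes the textbook stabilizer generators XZZXI and its cyclic shifts and verifies that they commute with each other and with the logical operators, and that every single-qubit error produces a distinct nonzero syndrome. The helper names are hypothetical.

```python
STABILIZERS = ["XZZXI", "IXZZX", "XIXZZ", "ZXIXZ"]  # textbook generators of the [[5,1,3]] code
LOGICAL_X, LOGICAL_Z = "XXXXX", "ZZZZZ"


def commutes(a: str, b: str) -> bool:
    """Two Pauli strings commute iff they clash (both non-identity, different) on an even number of qubits."""
    clashes = sum(1 for p, q in zip(a, b) if p != "I" and q != "I" and p != q)
    return clashes % 2 == 0


def syndrome(error: str) -> tuple[int, ...]:
    """Bit i is 1 when the error anticommutes with stabilizer generator i."""
    return tuple(0 if commutes(error, s) else 1 for s in STABILIZERS)


def single_qubit_errors(n: int = 5) -> list[str]:
    """All X, Y and Z errors acting on exactly one of n qubits."""
    return ["I" * i + p + "I" * (n - i - 1) for i in range(n) for p in "XYZ"]


if __name__ == "__main__":
    print("stabilizers commute:", all(commutes(a, b) for a in STABILIZERS for b in STABILIZERS))
    print("logicals commute with stabilizers:",
          all(commutes(LOGICAL_X, s) and commutes(LOGICAL_Z, s) for s in STABILIZERS))
    syndromes = {e: syndrome(e) for e in single_qubit_errors()}
    print(len(set(syndromes.values())), "distinct syndromes for", len(syndromes), "errors")
```

Because 1 + 3 × 5 = 16 = 2^4, the four stabilizer bits have exactly enough patterns to label "no error" plus all fifteen single-qubit errors, which is why this code is called perfect.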

Strengths: Origin Quantum's benchmarking tools are specifically optimized for superconducting quantum processors, providing detailed insights into this important technology platform. Their integration of benchmarking with their full-stack quantum computing solution offers a cohesive development environment. Weaknesses: Their benchmarking protocols may have less visibility in the global quantum computing community compared to those from Western companies, potentially limiting broader adoption.

Google LLC

Technical Solution: Google's 2019 quantum supremacy experiment depended on precise fidelity estimates for circuits running on physical, not error-corrected, qubits. Its benchmarking centers on the cross-entropy benchmarking (XEB) protocol, which estimates how faithfully a processor samples from the output distribution of a target quantum circuit. Google's Quantum AI team has developed specialized test protocols for measuring the fidelity of logical operations in surface code implementations, which are particularly relevant for error correction schemes. Their benchmarking methodology includes randomized circuit sampling techniques that can detect small variations in qubit performance. Related protocols such as cycle benchmarking (introduced by Erhard et al. in 2019) characterize entire layers of operations in the presence of noise, allowing more accurate assessment of quantum error correction performance. Google's recent work includes metrics that account for both physical and logical error rates to provide a more comprehensive view of quantum processor capabilities.
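The linear XEB estimate used in such experiments is F_XEB = 2^n ⟨P(x_i)⟩ - 1, where the x_i are the measured bitstrings and P is the ideal output probability obtained by classically simulating the circuit. Sampling faithfully from a typical random circuit gives a value near 1, while uniformly random noise gives a value near 0. The minimal sketch below assumes the ideal probabilities are supplied as a dictionary, which is precisely the step whose classical cost grows exponentially with n and underlies the weakness noted below; the function name is hypothetical.

```python
def linear_xeb_fidelity(ideal_probs: dict[str, float], samples: list[str], n_qubits: int) -> float:
    """Linear XEB estimate F = 2**n * mean(P_ideal(x_i)) - 1 over measured bitstrings x_i.

    Bitstrings missing from ideal_probs are treated as having ideal probability 0.
    """
    if not samples:
        raise ValueError("need at least one sample")
    mean_p = sum(ideal_probs.get(bits, 0.0) for bits in samples) / len(samples)
    return 2**n_qubits * mean_p - 1


if __name__ == "__main__":
    ideal = {"0": 0.75, "1": 0.25}  # toy single-qubit distribution
    print(linear_xeb_fidelity(ideal, ["0", "0", "0", "1"], n_qubits=1))
    print(linear_xeb_fidelity(ideal, ["0", "1"], n_qubits=1))
```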

Strengths: Google's benchmarking approaches excel at measuring performance in complex, multi-qubit systems and are particularly effective for demonstrating quantum advantage. Their protocols are designed with scalability in mind. Weaknesses: Their benchmarking methods often require significant classical computing resources for verification and may be less accessible for smaller research teams or startups.

Core Innovations in Quantum Error Correction Metrics

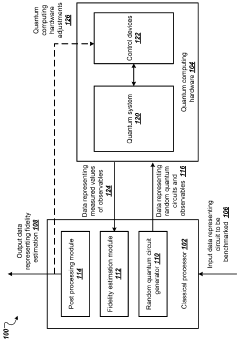

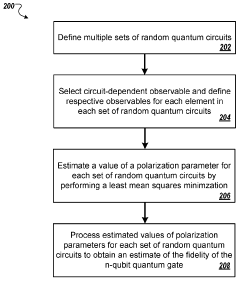

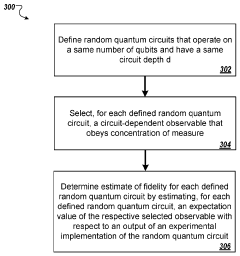

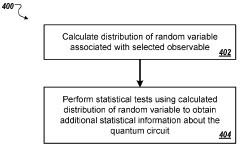

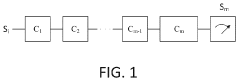

Estimating the fidelity of quantum logic gates and quantum circuits

Patent (Active): AU2023203407A1

Innovation

- The proposed method involves defining multiple sets of random quantum circuits with varying depths, estimating polarization parameters through least mean squares minimization, and processing these values to obtain fidelity estimates for quantum logic gates and circuits, allowing for increased scalability and accuracy without assuming specific distributions or observables.
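Estimating a decay (polarization) parameter by least squares over random circuits of several depths can be sketched generically. The Python below fits f(m) = A p^m by linear least squares on ln f(m), then converts p to an average gate fidelity with the depolarizing-channel relation F = 1 - (D - 1)(1 - p)/D, where D = 2^n. This is a common textbook-style fit, not the patented procedure, whose abstract stresses that it avoids assuming specific distributions or observables; it assumes the data are positive, asymptote-subtracted decays, and the function names are hypothetical.

```python
import math


def fit_polarization(depths: list[int], values: list[float]) -> tuple[float, float]:
    """Least-squares fit of ln f(m) = ln A + m ln p; returns (A, p). Requires all values > 0."""
    xs = [float(m) for m in depths]
    ys = [math.log(v) for v in values]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), math.exp(slope)


def average_gate_fidelity(p: float, n_qubits: int) -> float:
    """Average gate fidelity of a depolarizing channel with polarization p on n qubits."""
    dim = 2**n_qubits
    return 1 - (dim - 1) * (1 - p) / dim


if __name__ == "__main__":
    depths = [1, 2, 4, 8, 16]
    synthetic = [0.9 * 0.98**m for m in depths]  # noiseless synthetic data, for illustration only
    amplitude, p = fit_polarization(depths, synthetic)
    print(amplitude, p, average_gate_fidelity(p, n_qubits=1))
```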

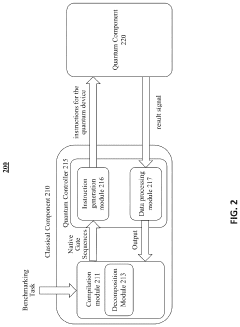

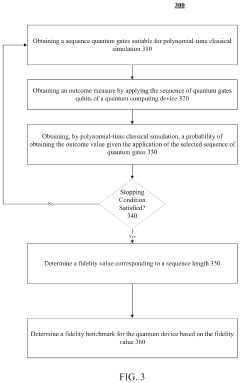

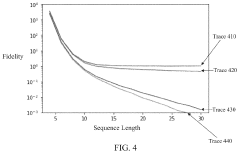

Polynomial-time linear cross-entropy benchmarking

Patent (Pending): US20230385679A1

Innovation

- Polynomial-time linear cross-entropy benchmarking (PXEB) applies sequences of quantum gates that can be simulated classically in polynomial time, such as Clifford gates, so that the ideal output probabilities needed for a linear cross-entropy fidelity benchmark can be computed efficiently on a classical computer.

Quantum Computing Standardization Initiatives

The quantum computing landscape is evolving rapidly yet still lacks standardized methods for evaluating quantum system performance. Several international organizations have recognized this gap and launched initiatives to establish common standards for benchmarking logical qubit fidelity. Within IEEE, the P7131 project is developing a standard for quantum computing performance metrics and performance benchmarking, intended to cover logical qubit fidelity measurements and test protocols. This standard aims to provide a framework for consistent evaluation across different quantum computing platforms.

The National Institute of Standards and Technology (NIST) has established the Quantum Economic Development Consortium (QED-C), which includes a technical advisory committee focused on developing standardized benchmarks for quantum computing. Their work includes protocols for measuring logical qubit fidelity that account for error correction capabilities, an essential aspect of practical quantum computing systems.

In Europe, the European Telecommunications Standards Institute (ETSI) has formed the Quantum Computing Technical Committee, which is developing standards for quantum computing terminology, metrics, and benchmarking methodologies. Their work specifically addresses the challenges of measuring logical qubit performance in the presence of noise and decoherence.

The International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC) have also entered this space through their joint working group on quantum computing, ISO/IEC JTC 1/WG 14. This group is developing international standards for quantum computing, including metrics and test protocols for evaluating logical qubit fidelity across different quantum computing architectures and implementations.

Industry consortia such as the European Quantum Industry Consortium (QuIC) complement these formal standardization efforts by bringing together industry stakeholders to develop practical benchmarking tools and protocols. These initiatives focus on real-world applications and use cases, ensuring that standardized metrics reflect the requirements of commercial quantum computing applications.

Academic institutions are also contributing significantly to standardization efforts through research collaborations such as the Quantum Performance Laboratory and the Quantum Benchmark Consortium. These collaborations are developing rigorous mathematical frameworks for quantifying logical qubit fidelity and establishing statistical methods for analyzing benchmark results.

The convergence of these standardization initiatives is gradually creating a comprehensive framework for evaluating quantum computing performance, particularly in terms of logical qubit fidelity. This framework will be essential for comparing different quantum computing technologies, tracking progress in the field, and establishing realistic expectations for quantum computing applications.

The National Institute of Standards and Technology (NIST) has established the Quantum Economic Development Consortium (QED-C), which includes a technical advisory committee focused on developing standardized benchmarks for quantum computing. Their work includes protocols for measuring logical qubit fidelity that account for error correction capabilities, an essential aspect of practical quantum computing systems.

In Europe, the European Telecommunications Standards Institute (ETSI) has formed the Quantum Computing Technical Committee, which is developing standards for quantum computing terminology, metrics, and benchmarking methodologies. Their work specifically addresses the challenges of measuring logical qubit performance in the presence of noise and decoherence.

The International Organization for Standardization (ISO) has also entered this space with the ISO/IEC JTC 1/SC 42 working group on quantum computing. This group is developing international standards for quantum computing, including metrics and test protocols for evaluating logical qubit fidelity across different quantum computing architectures and implementations.

Industry consortia like the Quantum Industry Consortium (QuIC) are complementing these formal standardization efforts by bringing together industry stakeholders to develop practical benchmarking tools and protocols. These initiatives focus on real-world applications and use cases, ensuring that standardized metrics reflect the requirements of commercial quantum computing applications.

Academic institutions are also contributing significantly to standardization efforts through research collaborations such as the Quantum Performance Laboratory and the Quantum Benchmark Consortium. These collaborations are developing rigorous mathematical frameworks for quantifying logical qubit fidelity and establishing statistical methods for analyzing benchmark results.

Scalability Challenges in Logical Qubit Implementation

As quantum computing systems scale up, implementing high-fidelity logical qubits faces significant challenges. The transition from physical to logical qubits requires overcoming several scalability hurdles that currently limit practical quantum error correction. The primary challenge lies in maintaining coherence across the growing number of physical qubits needed to encode a single logical qubit. Current error correction codes typically require between 7 and more than 100 physical qubits per logical qubit, and the larger code distances needed for stronger error suppression demand even more.
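To make these overhead figures concrete, here is a rough sizing sketch for the rotated surface code, which uses d*d data qubits plus d*d - 1 measurement qubits at code distance d. The logical error model p_L ≈ A * (p / p_th)^((d + 1) / 2) is a widely used heuristic; the prefactor A = 0.1 and threshold p_th = 1% are illustrative assumptions, not measured values, and the function names are hypothetical.

```python
def physical_qubits_rotated_surface_code(d: int) -> int:
    """Data qubits (d*d) plus syndrome-measurement qubits (d*d - 1)."""
    if d < 3 or d % 2 == 0:
        raise ValueError("use an odd code distance d >= 3")
    return 2 * d * d - 1


def logical_error_estimate(p: float, d: int, p_th: float = 1e-2, prefactor: float = 0.1) -> float:
    """Heuristic p_L ~ A * (p / p_th) ** ((d + 1) / 2); A and p_th are assumed."""
    return prefactor * (p / p_th) ** ((d + 1) / 2)


def min_distance(p: float, target: float, p_th: float = 1e-2,
                 prefactor: float = 0.1, d_max: int = 101) -> int:
    """Smallest odd distance whose estimated logical error rate meets the target."""
    if p >= p_th:
        raise ValueError("the estimate only suppresses errors below threshold (p < p_th)")
    for d in range(3, d_max + 1, 2):
        if logical_error_estimate(p, d, p_th, prefactor) <= target:
            return d
    raise ValueError("target not reachable with d <= d_max")


if __name__ == "__main__":
    d = min_distance(p=1e-3, target=5e-10)
    print(d, physical_qubits_rotated_surface_code(d))
```

Under these assumptions, a physical error rate of 0.1% and a target logical error rate of 5e-10 call for d = 17, or 577 physical qubits per logical qubit.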

The connectivity requirements between physical qubits present another major obstacle. The surface code needs only nearest-neighbor couplings on a two-dimensional lattice, but many higher-rate schemes, such as quantum low-density parity-check (LDPC) codes, require interactions between non-adjacent qubits, which become increasingly difficult to implement as system size grows. This challenge is particularly acute in solid-state architectures, where physical constraints limit qubit arrangement and connectivity options.
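On hardware with only nearest-neighbor couplings on a square grid, a gate between distant qubits is typically implemented by SWAP routing, and the cost grows with distance. The sketch below assumes each SWAP decomposes into 3 CNOTs, every CNOT fails independently with probability p_cnot, and no operations run in parallel; these are simplifying assumptions, and the helper names are hypothetical.

```python
def manhattan_distance(a: tuple[int, int], b: tuple[int, int]) -> int:
    """Grid distance between two qubit sites."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def swaps_needed(a: tuple[int, int], b: tuple[int, int]) -> int:
    """SWAPs to move qubit a along a shortest grid path until it neighbors b."""
    return max(manhattan_distance(a, b) - 1, 0)


def routed_cnot_fidelity(a: tuple[int, int], b: tuple[int, int], p_cnot: float) -> float:
    """Success probability of one CNOT between a and b after SWAP routing.

    Counts 3 CNOTs per SWAP plus the target CNOT itself, with independent errors.
    """
    n_cnots = 3 * swaps_needed(a, b) + 1
    return (1.0 - p_cnot) ** n_cnots


if __name__ == "__main__":
    print(routed_cnot_fidelity((0, 0), (0, 1), 0.005))   # adjacent qubits
    print(routed_cnot_fidelity((0, 0), (0, 10), 0.005))  # ten sites apart
```

With a 0.5% CNOT error rate, qubits ten sites apart need 9 SWAPs (28 CNOTs in total), dropping the effective gate fidelity to about 0.87.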

Control system complexity escalates non-linearly with qubit count. Each additional physical qubit requires precise calibration, control lines, and readout mechanisms. The classical control electronics needed to manage these systems face bandwidth limitations and signal crosstalk issues that worsen at scale. Current control architectures struggle to maintain the nanosecond-level precision required across thousands of qubits.
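A back-of-envelope count shows how different control costs scale: physical wiring grows roughly linearly with qubit count, while an exhaustive pairwise crosstalk characterization grows quadratically. The wiring model below (two dedicated lines per qubit, readout frequency-multiplexed eight qubits per feedline with two lines each) and the all-pairs characterization are hypothetical simplifications; practical systems usually characterize only neighboring pairs.

```python
import math


def coax_line_count(n_qubits: int, lines_per_qubit: int = 2,
                    qubits_per_feedline: int = 8, lines_per_feedline: int = 2) -> int:
    """Dedicated per-qubit lines plus shared readout feedlines.

    All default parameters are hypothetical placeholders, not hardware specs.
    """
    feedlines = math.ceil(n_qubits / qubits_per_feedline)
    return n_qubits * lines_per_qubit + feedlines * lines_per_feedline


def pairwise_crosstalk_terms(n_qubits: int) -> int:
    """Number of qubit pairs covered by an exhaustive pairwise crosstalk scan."""
    return n_qubits * (n_qubits - 1) // 2


if __name__ == "__main__":
    for n in (100, 1_000, 10_000):
        print(n, coax_line_count(n), pairwise_crosstalk_terms(n))
```

For 1,000 qubits this model gives 2,250 lines but 499,500 qubit pairs, which is why calibration strategies that avoid all-pairs characterization matter at scale.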

Heat dissipation emerges as a critical concern in scaled logical qubit implementations. Superconducting and spin-qubit systems typically operate at millikelvin temperatures in dilution refrigerators, where cooling capacity is extremely limited. As more control electronics are integrated to support larger qubit arrays, managing thermal loads becomes increasingly challenging, potentially destabilizing the quantum system.

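The resource requirements for classical processing also grow substantially. Real-time decoding of error syndromes demands significant classical computing power, and the decoder must keep pace with the syndrome-extraction cycle, which lasts on the order of a microsecond on superconducting hardware. If decoding falls behind, unprocessed syndromes accumulate in a backlog that delays every logical operation depending on decoded results. Current decoders struggle to process syndrome information fast enough to implement fault-tolerant operations on logical qubits in large systems.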
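The backlog effect can be seen in a toy queue: syndrome rounds arrive every t_cycle, a single hypothetical decoder processes them first-in, first-out, and each round takes t_decode to decode. Whenever t_decode exceeds t_cycle, the latency of each new round grows by their difference, so the delay is unbounded. The single-decoder FIFO model is an assumption made for illustration; practical designs reduce latency by decoding in parallel, for example over overlapping windows.

```python
def decode_latencies(n_rounds: int, t_cycle: float, t_decode: float) -> list[float]:
    """Latency (arrival to decoded) of each syndrome round in a FIFO single-decoder queue."""
    latencies = []
    decoder_done = 0.0  # time at which the decoder finishes its previous round
    for k in range(n_rounds):
        arrival = k * t_cycle
        # A round starts decoding when it has arrived and the decoder is free.
        decoder_done = max(decoder_done, arrival) + t_decode
        latencies.append(decoder_done - arrival)
    return latencies


if __name__ == "__main__":
    # Units are microseconds; both timings are illustrative assumptions.
    print(decode_latencies(5, t_cycle=1.0, t_decode=0.5))  # decoder keeps up
    print(decode_latencies(5, t_cycle=1.0, t_decode=1.5))  # backlog grows each round
```

A decoder that is only 50% slower than the cycle time adds half a cycle of delay per round, so after a million rounds (about a second of operation at a 1 µs cycle) the lag would reach roughly half a second.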

Fabrication uniformity represents another significant hurdle. Manufacturing processes must produce qubits with highly consistent properties to ensure error correction protocols function effectively. Current fabrication techniques show variability that necessitates individual qubit calibration, a process that becomes prohibitively time-consuming as systems scale to thousands of qubits needed for practical logical qubit implementations.
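The effect of fabrication variability can be illustrated with a simplified frequency-collision Monte Carlo for fixed-frequency qubits. Design frequencies alternate along a chain, fabrication adds Gaussian scatter, and a chip counts as usable only if every neighboring pair stays more than a collision window apart. The chain layout, the single collision condition, and all parameter values are hypothetical simplifications; published collision models for fixed-frequency transmons consider several distinct collision types.

```python
import random


def collision_free_yield(n_qubits: int, target_detuning_ghz: float, sigma_ghz: float,
                         window_ghz: float, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of simulated chips with no neighbor-pair frequency collision.

    Only frequency differences matter, so the base frequency is set to 0 GHz.
    """
    rng = random.Random(seed)  # fixed seed for reproducible estimates
    usable = 0
    for _ in range(trials):
        # Design frequencies alternate 0, detuning, 0, ... plus Gaussian fab scatter.
        freqs = [(i % 2) * target_detuning_ghz + rng.gauss(0.0, sigma_ghz)
                 for i in range(n_qubits)]
        if all(abs(freqs[i] - freqs[i + 1]) > window_ghz for i in range(n_qubits - 1)):
            usable += 1
    return usable / trials


if __name__ == "__main__":
    for sigma in (0.01, 0.03, 0.05):
        print(sigma, collision_free_yield(50, 0.1, sigma, 0.02))
```

In this toy model, with a 100 MHz design detuning and a 20 MHz collision window, raising the fabrication spread from 10 MHz to 50 MHz takes a 50-qubit chain from essentially full yield to a few percent or less, which is why post-fabrication tuning and per-qubit calibration become necessary.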
