Hardware-Efficient Quantum Error Correction: Reducing Physical Qubit Overhead

Quantum Error Correction Background and Objectives

Quantum Error Correction (QEC) has emerged as a critical technology for the realization of fault-tolerant quantum computing. Since the inception of quantum computing theory in the 1980s, researchers have recognized that quantum systems are inherently fragile due to decoherence and environmental noise. This vulnerability necessitated the development of error correction techniques specifically designed for quantum information.

The evolution of QEC began with Peter Shor's groundbreaking work in 1995, introducing the first quantum error correction code. This was followed by significant contributions from researchers like Andrew Steane, Raymond Laflamme, and others who established the theoretical foundations of QEC. The field has since progressed through various generations of codes, from simple three-qubit codes to more sophisticated topological codes like surface codes.

Current QEC approaches face a fundamental challenge: the substantial physical qubit overhead required for implementation. Traditional surface codes, while promising for their high error thresholds, demand hundreds or thousands of physical qubits to encode a single logical qubit with sufficient protection. This overhead presents a significant barrier to scaling quantum computers beyond the NISQ (Noisy Intermediate-Scale Quantum) era.

The primary objective of hardware-efficient QEC research is to develop techniques that substantially reduce the physical qubit requirements while maintaining robust error correction capabilities. This involves exploring novel code architectures, optimizing encoding schemes, and leveraging hardware-specific advantages to achieve higher logical qubit densities.

Recent technological trends indicate growing interest in tailored QEC approaches that consider the specific noise characteristics and connectivity constraints of different quantum hardware platforms. This represents a shift from generic, hardware-agnostic codes toward more specialized solutions that maximize efficiency for particular quantum technologies.

The field aims to achieve several key technical goals: reducing the physical-to-logical qubit ratio below 100:1 (current estimates often exceed 1000:1), developing codes with higher error thresholds that can operate reliably with fewer physical qubits, and creating hardware-aware QEC protocols that exploit the unique capabilities of specific quantum platforms.

Success in hardware-efficient QEC would dramatically accelerate the timeline for practical quantum advantage by enabling the construction of logical qubits with fewer physical resources. This would bridge the gap between current experimental systems with dozens to hundreds of qubits and the fault-tolerant quantum computers with thousands of logical qubits needed for transformative applications in chemistry, materials science, and cryptography.

Market Analysis for Hardware-Efficient Quantum Computing

The quantum computing market is experiencing significant growth, with projections indicating a compound annual growth rate of 25-30% over the next decade. This expansion is driven by increasing investments from both private and public sectors, recognizing the transformative potential of quantum technologies across various industries. However, the market currently faces a critical bottleneck: the high physical qubit overhead required for error correction, which substantially increases hardware complexity and manufacturing costs.

Hardware-efficient quantum error correction represents a crucial market opportunity, as reducing physical qubit overhead directly addresses the scalability challenges that currently prevent widespread commercial adoption. Organizations that can develop more efficient error correction techniques stand to capture significant market share in the emerging quantum computing ecosystem.

Market segmentation reveals distinct customer profiles with varying needs regarding hardware efficiency. Research institutions and academic laboratories prioritize qubit quality and coherence times, while enterprise clients focus on total cost of ownership and practical implementation timelines. Government agencies, particularly in defense and intelligence sectors, emphasize security features alongside hardware efficiency.

Geographically, North America leads the market for quantum computing hardware development, with significant activities concentrated in the United States and Canada. The European market shows strong growth in quantum error correction research, particularly in Germany, the Netherlands, and the UK. The Asia-Pacific region is rapidly expanding its quantum capabilities, with substantial investments from China, Japan, and South Korea focused on developing indigenous hardware-efficient quantum technologies.

Market adoption barriers include the high technical complexity of implementing error correction schemes, substantial capital requirements for quantum hardware development, and the specialized expertise needed for system integration. Early adopters are primarily found in financial services, pharmaceuticals, and advanced materials sectors, where computational advantages can justify premium investments.

The competitive landscape features established technology corporations with dedicated quantum divisions, specialized quantum startups focusing on novel error correction approaches, and research-oriented entities developing foundational technologies. Strategic partnerships between hardware developers and algorithm specialists are becoming increasingly common, creating integrated solution ecosystems.

Pricing models are evolving from the current research-oriented grant funding toward commercial structures, including quantum computing as a service (QCaaS), hardware licensing arrangements, and consulting services for implementation. The market increasingly values solutions that can demonstrate practical quantum advantage with minimal physical resource requirements.

Current Challenges in Qubit Overhead Reduction

Despite significant advancements in quantum computing, reducing physical qubit overhead remains one of the most formidable challenges in developing practical quantum error correction (QEC) systems. Current estimates for state-of-the-art hardware typically call for thousands of physical qubits per logical qubit with sufficient error protection, presenting a substantial barrier to scaling quantum systems beyond proof-of-concept demonstrations.

The surface code, while promising for its high error threshold and local operations, is estimated to demand approximately 1,000-10,000 physical qubits per logical qubit to achieve fault tolerance. This overhead arises because physical error rates sit only modestly below the code threshold, so a large code distance, and with it a large patch of data and measurement qubits, is needed to suppress the logical errors caused by decoherence and gate errors. The physical-to-logical qubit ratio directly impacts the feasibility of constructing large-scale quantum computers capable of solving commercially relevant problems.

Hardware limitations further exacerbate the overhead challenge. Current superconducting qubit platforms struggle with connectivity constraints, where each qubit can only interact with a limited number of neighboring qubits. This restriction complicates the implementation of efficient error correction codes that often require non-local interactions. Additionally, the physical layout of qubits on chips introduces routing complexities that can increase the required number of physical qubits beyond theoretical minimums.

Crosstalk and noise correlation between adjacent qubits represent another significant hurdle. As qubit densities increase to accommodate error correction requirements, unwanted interactions between qubits become more pronounced, potentially introducing correlated errors that are particularly challenging for conventional QEC codes to address. This necessitates additional physical qubits for isolation or more complex error correction schemes.

The trade-off between code distance and resource requirements presents a fundamental dilemma. Higher code distances provide better error protection, but for the surface code the physical qubit count grows roughly quadratically with the distance. Current approaches struggle to optimize this balance, particularly for algorithms requiring deep circuits where error accumulation becomes critical.
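The distance trade-off can be made concrete with a small sketch. It assumes a rotated surface code patch (d² data qubits plus d² − 1 measurement qubits) and the commonly used heuristic that the logical error rate scales as A·(p/p_th)^((d+1)/2). The threshold `p_th = 1e-2`, the prefactor `A = 0.1`, and all function names are illustrative assumptions, not values taken from this report.

```python
def rotated_surface_code_qubits(d: int) -> int:
    """Physical qubits in one distance-d rotated surface code patch.

    d*d data qubits plus d*d - 1 measurement (ancilla) qubits.
    """
    if d < 3 or d % 2 == 0:
        raise ValueError("distance must be an odd integer >= 3")
    return 2 * d * d - 1


def logical_error_rate(p: float, d: int, p_th: float = 1e-2,
                       prefactor: float = 0.1) -> float:
    """Heuristic logical error rate: prefactor * (p / p_th) ** ((d + 1) / 2).

    p_th and prefactor are ASSUMED illustrative constants.
    """
    return prefactor * (p / p_th) ** ((d + 1) // 2)


def min_distance(p: float, target: float, p_th: float = 1e-2,
                 prefactor: float = 0.1, d_max: int = 201) -> int:
    """Smallest odd distance whose heuristic logical error rate meets the target."""
    if p >= p_th:
        raise ValueError("physical error rate must be below threshold")
    for d in range(3, d_max + 1, 2):
        if logical_error_rate(p, d, p_th, prefactor) <= target:
            return d
    raise ValueError("target not reachable within d_max")


if __name__ == "__main__":
    for p in (2e-3, 5e-4):
        d = min_distance(p, target=1e-12)
        n = rotated_surface_code_qubits(d)
        print(f"p={p:g}: distance {d}, {n} physical qubits per logical qubit")
```

Under these assumed constants, a physical error rate of 2e-3 and a target of 1e-12 give distance 31 and 1,921 physical qubits per logical qubit, which falls inside the 1,000-10,000 range quoted above. Lowering the physical error rate shrinks the required distance, which is why better qubits reduce overhead as directly as better codes do.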

Fabrication yield and variability in qubit performance add practical dimensions to the overhead problem. Manufacturing processes for quantum processors inevitably produce qubits with varying quality and performance characteristics. Error correction schemes must account for these variations, often by incorporating additional redundancy, further increasing the physical qubit count.

Time and space overhead are interrelated challenges. Some proposals suggest trading spatial overhead (number of physical qubits) for temporal overhead (longer computation times), but this approach faces limitations due to finite coherence times of physical qubits. Finding the optimal balance between these resources remains an open research question with significant implications for practical quantum computing implementations.

Existing Approaches to Hardware-Efficient QEC

01 Surface code architectures for quantum error correction

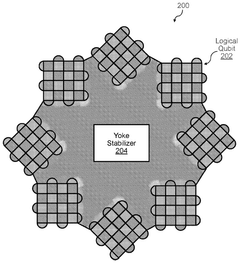

Surface codes represent a leading approach for quantum error correction, offering a balance between error correction capability and physical qubit overhead. These architectures arrange physical qubits in two-dimensional lattice structures where each physical qubit interacts only with its neighbors. The overhead ratio of physical to logical qubits can be substantial, often requiring hundreds or thousands of physical qubits to encode a single logical qubit with sufficient error protection. Various surface code implementations have been developed to optimize this overhead while maintaining fault tolerance.

- Surface code implementations for quantum error correction: Each logical qubit is encoded using multiple physical qubits arranged in a two-dimensional lattice. The overhead ratio depends on the desired logical error rate and the physical error rate of the underlying hardware. Surface codes typically require fewer physical qubits per logical qubit than concatenated codes for comparable protection while maintaining good error correction properties.

- Topological quantum error correction techniques: Topological quantum error correction codes protect quantum information by encoding it in the topological properties of a system, which are inherently resistant to local noise. These codes, including variants of surface codes and color codes, offer improved logical error rates with more efficient physical qubit utilization. The topological nature of these codes allows for fault-tolerant quantum computation with a reduced overhead compared to concatenated codes, though they still require a significant number of physical qubits per logical qubit to achieve practical fault tolerance.

- Hardware-efficient quantum error correction codes: Hardware-efficient quantum error correction codes are designed to minimize the physical qubit overhead while maintaining error correction capabilities. These approaches include tailored codes that match specific hardware architectures, optimized encoding schemes, and hybrid approaches that combine different code families. By considering the specific noise characteristics and connectivity constraints of the underlying quantum hardware, these codes can achieve better performance with fewer physical qubits, reducing the overall overhead required for fault-tolerant quantum computation.

- Overhead reduction through code concatenation and optimization: Techniques for reducing physical qubit overhead include optimized code concatenation, where different quantum error correction codes are combined in a hierarchical structure. This approach allows for customized error correction strategies that balance overhead with performance. Additionally, optimization methods such as code deformation, dynamic decoding algorithms, and adaptive error correction can significantly reduce the number of physical qubits required while maintaining or improving the logical error rate. These techniques are crucial for making fault-tolerant quantum computing more practical with limited qubit resources.

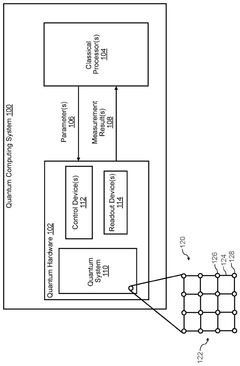

- Scalable architectures for fault-tolerant quantum computing: Scalable architectures for fault-tolerant quantum computing address the challenge of physical qubit overhead through innovative system designs. These architectures incorporate modular approaches, distributed quantum processing, and optimized qubit connectivity to efficiently implement error correction codes. By considering the entire quantum computing stack, from physical qubit properties to logical operations, these architectures aim to minimize the overhead ratio while enabling practical quantum error correction. Some approaches include networked quantum processors, 3D integrated systems, and hybrid quantum-classical architectures that optimize resource allocation.
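The cost of the code concatenation mentioned in the list above can be sketched numerically. The example assumes a distance-3 base code on n physical qubits (the 7-qubit Steane code is used for illustration), for which L levels of concatenation use n^L physical qubits and give a logical error rate of roughly p_th·(p/p_th)^(2^L). The threshold value and the function names are assumptions made for this sketch.

```python
def concatenated_qubits(n: int, levels: int) -> int:
    """Physical qubits per logical qubit after `levels` rounds of concatenation."""
    return n ** levels


def concatenated_error(p: float, p_th: float, levels: int) -> float:
    """Approximate logical error rate for a distance-3 base code.

    Each level squares the ratio p / p_th, so suppression is doubly
    exponential in the number of levels.
    """
    return p_th * (p / p_th) ** (2 ** levels)


def levels_needed(p: float, target: float, p_th: float, max_levels: int = 10) -> int:
    """Fewest concatenation levels that reach the target logical error rate."""
    if p >= p_th:
        raise ValueError("physical error rate must be below threshold")
    for levels in range(1, max_levels + 1):
        if concatenated_error(p, p_th, levels) <= target:
            return levels
    raise ValueError("target not reachable within max_levels")


if __name__ == "__main__":
    p, p_th = 1e-5, 1e-4  # ASSUMED illustrative values
    levels = levels_needed(p, target=1e-10, p_th=p_th)
    print(levels, concatenated_qubits(7, levels))
```

With these assumed numbers, three levels are needed and the 7-qubit base code grows to 343 physical qubits per logical qubit. Each extra level multiplies the qubit count by n, which is the exponential overhead growth that motivates mixing code families rather than concatenating a single code many times.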

02 Topological quantum error correction codes

Topological quantum error correction codes leverage the spatial arrangement of qubits to provide protection against errors. These codes encode quantum information in non-local degrees of freedom, making them resistant to local noise and errors. The physical qubit overhead in topological codes depends on the desired code distance, which determines the number of errors that can be corrected. While these codes require significant physical resources, they offer advantages in terms of error threshold and implementation feasibility in near-term quantum hardware.

03 Hardware-efficient quantum error correction techniques

Hardware-efficient quantum error correction techniques aim to reduce the physical qubit overhead while maintaining adequate error protection. These approaches include tailored error correction codes designed for specific quantum computing architectures, optimized syndrome measurement circuits, and resource-efficient decoding algorithms. By considering the physical constraints and error characteristics of the underlying hardware, these techniques can achieve better logical error rates with fewer physical qubits, thereby reducing the overall overhead required for fault-tolerant quantum computation.

04 Concatenated quantum error correction codes

Concatenated quantum error correction codes involve nesting one quantum error correction code inside another to achieve higher levels of protection. This approach allows for systematic improvement of the logical error rate at the cost of exponentially increasing physical qubit overhead. The concatenation level can be adjusted based on the desired error suppression and available physical resources. While these codes typically require more physical qubits than surface codes for comparable error protection, they can offer advantages in certain architectures and error models.

05 Optimization methods for reducing physical qubit overhead

Various optimization methods have been developed to reduce the physical qubit overhead in quantum error correction. These include code deformation techniques, flag-based error detection, and resource state distillation protocols with reduced overhead. Advanced decoding algorithms can also improve the effective code performance without increasing the physical qubit count. Additionally, hybrid approaches that combine different error correction strategies can be tailored to specific hardware constraints and error models, potentially reducing the overall physical resources required for fault-tolerant quantum computation.

Leading Organizations in Quantum Computing Research

The quantum error correction landscape is evolving rapidly in its early commercialization phase, with a market expected to grow significantly as quantum computing matures. Hardware-efficient error correction addresses a critical bottleneck in scaling quantum computers, which currently require substantial physical qubit overhead. Major players demonstrate varying technical approaches and maturity levels. Google and IBM lead with superconducting qubit architectures and surface code implementations, while Microsoft pursues topological qubits for inherent error protection. Emerging companies like Quantinuum, IQM, and Alice & Bob are developing specialized hardware-efficient error correction techniques. Academic institutions including MIT, University of Chicago, and University of Tokyo contribute fundamental research, while organizations such as Origin Quantum in China and NTT in Japan focus on country-specific quantum ecosystems. The technology remains pre-commercial but is advancing through collaborative industry-academia partnerships.

Google LLC

Microsoft Technology Licensing LLC

Key Innovations in Physical Qubit Reduction Methods

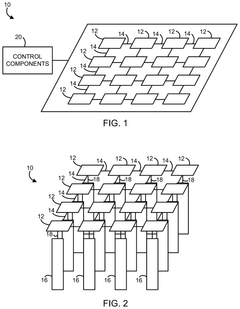

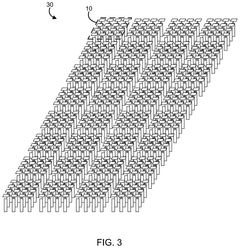

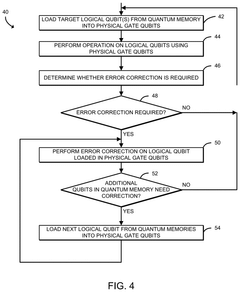

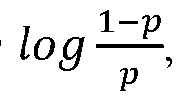

- A system for resource-efficient quantum error correction is proposed, utilizing a combination of physical gate qubits and quantum memory, where logical qubits are transferred between these components to minimize error rates, with control components managing operations to perform error correction efficiently.

- The implementation of nested quantum error correction (QEC) codes, which consist of an inner surface code and an outer high-rate parity check code, reduces qubit overhead and improves error correction capabilities.
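The qubit accounting behind the nested-code idea in the last item can be sketched as follows. The sketch assumes each qubit of an outer [[n, k]] code is itself a rotated surface code patch of 2d² − 1 physical qubits, so the cost per final logical qubit is n·(2d² − 1)/k. The outer parameters used below are hypothetical and chosen only for illustration, and no error-rate analysis is attempted.

```python
from fractions import Fraction


def surface_patch_qubits(d: int) -> int:
    """Physical qubits in a distance-d rotated surface code patch (assumed inner code)."""
    return 2 * d * d - 1


def nested_qubits_per_logical(inner_distance: int, outer_n: int, outer_k: int) -> Fraction:
    """Physical qubits per final logical qubit for an inner surface code
    wrapped in an outer [[outer_n, outer_k]] code."""
    if not 0 < outer_k <= outer_n:
        raise ValueError("outer code must satisfy 0 < k <= n")
    return Fraction(outer_n * surface_patch_qubits(inner_distance), outer_k)


if __name__ == "__main__":
    # HYPOTHETICAL outer code: 8 inner patches encoding 6 logical qubits.
    cost = nested_qubits_per_logical(inner_distance=5, outer_n=8, outer_k=6)
    print(float(cost), surface_patch_qubits(5))
```

The outer code multiplies the inner patch cost by n/k, so a high-rate outer code (k close to n) adds protection for a small multiplicative factor. Whether that beats simply increasing the inner distance depends on the resulting logical error rates, which this sketch does not model.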

Quantum Hardware Implementation Constraints

The implementation of quantum error correction (QEC) faces significant hardware constraints that directly impact the feasibility and efficiency of error-corrected quantum computers. Current quantum hardware platforms—including superconducting circuits, trapped ions, photonic systems, and spin qubits—each present unique physical limitations affecting QEC deployment. Superconducting systems, while offering fast gate operations, struggle with limited coherence times typically ranging from 100-300 microseconds, necessitating rapid error correction cycles. Trapped ion systems provide superior coherence but face challenges in scaling beyond tens of qubits due to ion chain management complexities.

Physical connectivity constraints represent another critical limitation. Most hardware architectures support only nearest-neighbor interactions, severely restricting the implementation of QEC codes that require long-range qubit interactions. This constraint necessitates additional SWAP operations that introduce more noise into the system, which is precisely what QEC aims to mitigate. The surface code is attractive partly because its checks need only nearest-neighbor interactions on a square lattice, but when a code's checks do not match the native connectivity, routing can require approximately 2-3 times more physical operations than the theoretical minimum.

Measurement and feedback latency constitutes a significant bottleneck in QEC implementations. The time required to measure syndrome qubits, process classical information, and apply corrective operations must be substantially shorter than qubit coherence times. Current systems exhibit measurement times of 100-500 nanoseconds and classical processing delays of 1-10 microseconds, which collectively consume a substantial fraction of available coherence time.
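A rough budget shows what these figures imply. The sketch below assumes measurement and classical processing happen strictly one after the other and ignores the gate time of syndrome extraction, both simplifying assumptions. The latency and coherence ranges are the ones quoted in this section, and the function name is illustrative.

```python
def feedback_fraction(measure_ns: float, classical_us: float, coherence_us: float) -> float:
    """Fraction of the coherence time used by one measure-and-process step.

    Assumes the two delays add serially (no pipelining).
    """
    if coherence_us <= 0:
        raise ValueError("coherence time must be positive")
    step_us = measure_ns / 1000.0 + classical_us
    return step_us / coherence_us


if __name__ == "__main__":
    best = feedback_fraction(measure_ns=100, classical_us=1, coherence_us=300)
    worst = feedback_fraction(measure_ns=500, classical_us=10, coherence_us=100)
    print(f"best case:  {best:.2%} of coherence time per feedback step")
    print(f"worst case: {worst:.2%} of coherence time per feedback step")
```

At the slow end of the quoted ranges, a single feedback step takes 10.5 microseconds against 100 microseconds of coherence, roughly a tenth of the budget, and it is dominated by classical processing rather than measurement. That is why faster decoders and pipelined feedback receive as much attention as faster readout.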

Control electronics scalability presents another formidable challenge. Each physical qubit requires dedicated control lines, measurement apparatus, and signal processing capabilities. As systems scale toward the millions of physical qubits needed for fault-tolerant computation, the associated classical control infrastructure grows at least in proportion to the qubit count and quickly becomes a wiring and integration problem in its own right. Current estimates suggest that controlling 1,000 physical qubits requires approximately 10,000 control lines and several racks of room-temperature electronics.

Fabrication yield and variability significantly impact large-scale QEC implementation. Manufacturing processes for quantum devices typically achieve 80-95% yield rates, with qubit parameters varying by 5-15% across devices. This variability necessitates individual qubit calibration and characterization, substantially increasing system complexity as qubit counts scale. These hardware constraints collectively drive the search for more hardware-efficient QEC approaches that can operate effectively within these physical limitations.
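The effect of yield on a code patch can be estimated with a simple model. The sketch assumes the quoted yield applies per qubit and that failures are independent, which is an assumption since the text does not say whether the figure is per qubit or per device. It then asks how likely it is that every qubit in a rotated surface code patch of 2d² − 1 qubits works.

```python
def patch_qubits(d: int) -> int:
    """Physical qubits in a distance-d rotated surface code patch."""
    return 2 * d * d - 1


def defect_free_probability(per_qubit_yield: float, d: int) -> float:
    """Probability that all qubits in the patch are functional.

    ASSUMES independent per-qubit failures.
    """
    if not 0.0 <= per_qubit_yield <= 1.0:
        raise ValueError("yield must be between 0 and 1")
    return per_qubit_yield ** patch_qubits(d)


if __name__ == "__main__":
    for d in (3, 5, 7):
        prob = defect_free_probability(0.95, d)
        print(f"d={d}: {patch_qubits(d)} qubits, P(no defects) = {prob:.3f}")
```

Because the patch size grows with d², the chance of a defect-free patch falls quickly even at the upper end of the quoted yield range. In this model, large patches almost always contain bad qubits, so practical schemes need either spare qubits or codes that can be adapted around defects.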

Scalability and Fault-Tolerance Trade-offs

The fundamental challenge in quantum computing lies in the tension between scalability and fault-tolerance. As quantum systems grow in size, maintaining coherence becomes increasingly difficult, necessitating more sophisticated error correction mechanisms. However, these very mechanisms demand substantial physical qubit overhead, creating a circular problem that threatens practical quantum advantage.

Current quantum error correction codes, particularly surface codes, require approximately 1,000 physical qubits to create a single logical qubit with sufficient error protection. This overhead presents a significant barrier to scaling quantum computers beyond a few hundred logical qubits, which would require hundreds of thousands of physical qubits—far beyond current technological capabilities.

Recent research has explored several promising approaches to reduce this overhead while maintaining fault-tolerance. Tailored error correction codes that exploit specific hardware characteristics have demonstrated potential for up to 50% reduction in physical qubit requirements. For instance, bias-preserving gates in superconducting architectures allow for asymmetric error correction that focuses resources on the most likely error channels.

Hardware-software co-design represents another frontier, where error correction schemes are specifically optimized for the native connectivity and error profiles of particular quantum processors. This approach has shown 30-40% improvements in resource efficiency in simulation studies, though experimental validation remains limited.

The trade-off between code distance and resource requirements presents another optimization opportunity. Adaptive protocols that dynamically adjust code distance based on computational requirements and observed error rates could significantly reduce average overhead while maintaining fault-tolerance thresholds for critical operations.

Importantly, the relationship between scalability and fault tolerance is not strictly linear. Research indicates the existence of "sweet spots" where modest increases in physical resources yield disproportionate improvements in logical error rates. Identifying and exploiting these inflection points could dramatically improve the efficiency frontier.

Looking forward, topological quantum computing approaches promise intrinsic error protection with potentially lower overhead, though they remain largely theoretical. Meanwhile, hybrid approaches combining different error correction strategies for different computational stages show promise for optimizing the resource-protection balance throughout quantum algorithms.