Implementing Parity Check Circuits With Minimal Control Overhead

SEP 2, 2025 · 9 MIN READ

Parity Check Fundamentals and Objectives

Parity check circuits represent a fundamental component in error detection and correction systems, serving as a cornerstone technology in digital communication, data storage, and quantum computing. The concept of parity checking dates back to the early days of computing in the 1940s, when researchers first recognized the need for reliable data transmission and storage mechanisms. Over subsequent decades, this technology has evolved from simple single-bit error detection to sophisticated error correction codes capable of handling multiple bit errors.

The evolution of parity check implementations has followed a trajectory closely aligned with advances in integrated circuit technology. Early implementations required significant hardware resources and control logic, limiting their application in resource-constrained environments. As digital systems have become increasingly complex and miniaturized, the demand for efficient parity check circuits with minimal overhead has grown steadily.

Current technological trends point toward ultra-low-power computing, quantum information processing, and edge computing applications, all of which require highly efficient error detection mechanisms. The challenge lies in maintaining reliability while minimizing both the circuit footprint and control complexity. This represents a critical balance between performance, power consumption, and silicon area utilization.

The primary objective of modern parity check circuit implementation is to achieve optimal error detection capabilities while minimizing control overhead. This involves developing architectures that can perform parity calculations with minimal gate count, reduced control signals, and lower power consumption. Specifically, the goals include reducing the number of control lines required for operation, minimizing state machine complexity, and decreasing the overall circuit latency.
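
To make the basic operation concrete, here is a minimal sketch of single-bit even parity in Python. The function names (`parity_bit`, `encode_even`, `check_even`) and the 7-bit test word are illustrative assumptions, not taken from any particular design. It shows both what the check catches (any odd number of bit flips) and what it misses (an even number of flips).

```python
def parity_bit(word: int) -> int:
    """Return the XOR of all bits in `word` (1 if the number of 1-bits is odd)."""
    return bin(word).count("1") & 1


def encode_even(word: int, width: int) -> int:
    """Append an even-parity bit as the new least-significant bit."""
    assert 0 <= word < (1 << width)
    return (word << 1) | parity_bit(word)


def check_even(codeword: int) -> bool:
    """A codeword passes when its total number of 1-bits is even."""
    return parity_bit(codeword) == 0


codeword = encode_even(0b1011001, width=7)     # four 1-bits, so the parity bit is 0
single_error = codeword ^ (1 << 3)             # flip one bit
double_error = codeword ^ (1 << 3) ^ (1 << 5)  # flip two bits

print(check_even(codeword))      # True
print(check_even(single_error))  # False: the error is detected
print(check_even(double_error))  # True: two flips cancel and go undetected
```

The only "control" this scheme needs is knowing when a full word is available, which is why single-bit parity is the usual baseline for low-overhead designs.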

Another key objective is to develop parity check circuits that can be easily integrated into existing digital design workflows and fabrication processes. This requires compatibility with standard cell libraries and design methodologies, allowing for seamless incorporation into complex system-on-chip (SoC) designs without requiring specialized manufacturing processes.

The advancement of parity check circuits also aims to address emerging requirements in quantum computing systems, where quantum error correction codes rely heavily on efficient parity check operations. In this domain, the objective extends to implementing circuits that can operate at cryogenic temperatures with minimal heat dissipation while maintaining quantum coherence.

Looking forward, the field is moving toward adaptive parity check systems that can dynamically adjust their operation based on channel conditions or application requirements. This represents a significant departure from traditional static implementations and offers promising avenues for further efficiency improvements in diverse application scenarios.

Market Applications for Efficient Parity Check Implementations

Efficient parity check implementations with minimal control overhead have significant market applications across various industries where data integrity and error detection are critical. In telecommunications, these implementations are essential for ensuring reliable data transmission over noisy channels. The global telecommunications market, valued at $1.7 trillion, increasingly demands low-latency error detection capabilities that can operate at high frequencies without compromising power efficiency.

The data storage industry represents another substantial market opportunity. As storage densities continue to increase and physical dimensions decrease, the probability of bit errors rises significantly. Efficient parity check circuits enable storage systems to maintain data integrity while minimizing the performance impact of error detection processes. This is particularly valuable in enterprise storage systems where both reliability and performance are paramount concerns.

Cloud computing infrastructure providers have emerged as major potential adopters of advanced parity check implementations. With the exponential growth in data center operations, even marginal improvements in control overhead can translate to significant energy savings and performance gains when implemented at scale. The reduced latency offered by optimized parity check circuits directly contributes to improved service level agreements and customer satisfaction.

The automotive sector, especially with the rise of autonomous vehicles, presents a rapidly expanding market for efficient error detection mechanisms. Vehicle systems require real-time data verification with minimal processing delays, making optimized parity check circuits ideal components in safety-critical automotive electronics. Industry analysts project that the automotive electronics market segment specifically focused on data integrity solutions will grow at a compound annual rate of 14% through 2028.

Financial technology applications represent another high-value market segment. High-frequency trading systems and blockchain implementations require extremely efficient data verification mechanisms that can operate with minimal latency overhead. The microsecond advantages provided by optimized parity check implementations can translate to significant competitive advantages in these time-sensitive applications.

The Internet of Things (IoT) ecosystem presents perhaps the largest potential market by device volume. With billions of connected devices operating with constrained power and processing resources, efficient parity check implementations that minimize control overhead are essential for maintaining data integrity without depleting limited battery life or processing capabilities. The industrial IoT segment particularly values solutions that can operate reliably in harsh environments while maintaining strict power budgets.

Aerospace and defense applications constitute a specialized but high-value market segment where the reliability requirements are exceptionally stringent. Mission-critical systems demand error detection capabilities that can function with absolute reliability while minimizing the computational resources required for implementation.

Current Limitations in Parity Check Circuit Design

Despite significant advancements in parity check circuit implementation, several critical limitations continue to impede optimal design with minimal control overhead. Current parity check circuits often suffer from excessive gate count requirements, particularly when handling large data widths. This excess logic not only increases silicon area but also contributes to higher power consumption and reduced operational efficiency. Traditional implementations frequently rely on cascaded XOR gate structures that create deep logic paths, resulting in timing bottlenecks that constrain maximum operating frequencies.
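
The depth problem with cascaded XOR structures can be illustrated with a small Python model. This is a sketch under simple assumptions: every gate is a two-input XOR with unit delay, and the function names `parity_chain` and `parity_tree` are hypothetical. Both structures use the same number of gates (n − 1 for n inputs); only the logic depth differs.

```python
from functools import reduce


def parity_chain(bits):
    """Cascaded XOR: each gate waits on the previous one. Returns (parity, depth)."""
    result = reduce(lambda a, b: a ^ b, bits)
    return result, len(bits) - 1


def parity_tree(bits):
    """Balanced XOR tree: pairs are combined level by level. Returns (parity, depth)."""
    level, depth = list(bits), 0
    while len(level) > 1:
        nxt = [level[i] ^ level[i + 1] for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:  # an odd leftover bit passes through to the next level
            nxt.append(level[-1])
        level, depth = nxt, depth + 1
    return level[0], depth


bits = [1, 0, 1, 1, 0, 0, 1, 0] * 8  # 64-bit word, arbitrary test pattern
print(parity_chain(bits))  # (0, 63)
print(parity_tree(bits))   # (0, 6)
```

For a 64-bit word the chain has 63 gate delays on its critical path while the balanced tree has 6, which is why tree structures are the usual starting point when timing matters.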

Control overhead remains a persistent challenge, with many designs requiring complex control logic that scales poorly with increasing data widths. This control complexity manifests in additional signal routing, synchronization mechanisms, and state management circuitry that can dominate the overall circuit footprint. The situation worsens in applications requiring dynamic parity checking configurations or multiple simultaneous parity calculations.

Existing solutions often make suboptimal tradeoffs between parallelism and resource utilization. Highly parallel implementations offer speed advantages but at the cost of significant area overhead, while serialized approaches reduce area requirements but introduce latency penalties that may be unacceptable for high-performance applications. This fundamental tension has not been adequately resolved in current design methodologies.
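
The two ends of this tradeoff can be modeled in a few lines of Python. This is an illustrative sketch rather than a hardware description: `SerialParity` stands in for a single XOR gate feeding a one-bit register, and `parallel_parity` stands in for a log-depth XOR tree evaluated in one cycle. Both names are assumptions, and `parallel_parity` assumes the input fits in `width` bits.

```python
class SerialParity:
    """Bit-serial parity: one XOR gate plus a one-bit register, one input bit per clock."""

    def __init__(self):
        self.state = 0   # models the single flip-flop
        self.cycles = 0  # clock cycles consumed so far

    def clock(self, bit: int) -> None:
        self.state ^= bit & 1
        self.cycles += 1


def parallel_parity(word: int, width: int) -> int:
    """Word-parallel parity by XOR folding (assumes 0 <= word < 2**width)."""
    shift = 1
    while shift < width:  # find the smallest power of two >= width
        shift <<= 1
    shift >>= 1
    while shift:          # fold the upper half onto the lower half each step
        word ^= word >> shift
        shift >>= 1
    return word & 1


word, width = 0b11010110, 8
serial = SerialParity()
for i in range(width):
    serial.clock((word >> i) & 1)

print(serial.state, serial.cycles)   # 1 8
print(parallel_parity(word, width))  # 1
```

The serial version needs almost no logic but takes one clock per bit and requires a reset and a "word complete" signal, while the parallel version needs no sequencing at all but spends area on the full XOR network.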

Integration challenges further complicate parity check circuit implementation. When embedded within larger systems, these circuits must interface with various bus widths, clock domains, and control protocols. Current designs frequently require custom interface logic that adds to the control overhead rather than leveraging standardized approaches that could minimize such overhead.

Testing and verification of parity check circuits present another limitation. Conventional designs often lack built-in self-test capabilities, necessitating external test infrastructure that adds to the overall control complexity. This deficiency becomes particularly problematic in safety-critical applications where comprehensive fault coverage is essential.

Power management represents a growing concern, especially in battery-operated or energy-efficient systems. Current parity check implementations typically lack sophisticated power-gating or clock-gating mechanisms, resulting in unnecessary dynamic power consumption during periods of inactivity. The absence of fine-grained power control strategies contributes significantly to the overall energy footprint.

Scalability limitations also plague existing designs, with performance and overhead characteristics degrading non-linearly as data widths increase. This scaling inefficiency creates implementation barriers for applications handling wide data paths or requiring multiple parallel parity checks across different data segments.

Existing Low-Overhead Parity Check Solutions

01 Low-Density Parity Check (LDPC) Code Optimization

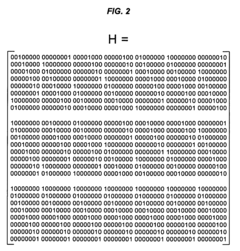

LDPC codes can be optimized to reduce control overhead while maintaining error correction capabilities. These optimizations include structured LDPC codes, efficient encoding algorithms, and specialized matrix designs that minimize the computational complexity and memory requirements. By carefully designing the parity check matrix, the overhead associated with encoding and decoding operations can be significantly reduced while maintaining robust error correction performance.

- Low-Density Parity Check (LDPC) Code Optimization: LDPC codes can be optimized to reduce control overhead while maintaining error correction capabilities. These optimizations include structured designs that minimize the complexity of encoding and decoding operations, thereby reducing the computational overhead. Various techniques focus on optimizing the parity check matrix structure to achieve better performance with lower overhead requirements.

- Error Correction with Reduced Overhead: Advanced error correction techniques aim to minimize control overhead while maintaining robust error detection and correction capabilities. These methods include optimized parity check algorithms that require fewer redundant bits, efficient encoding schemes that reduce the amount of overhead data needed, and adaptive approaches that adjust the level of error protection based on channel conditions.

- Hardware Implementation of Parity Check Circuits: Efficient hardware designs for parity check circuits can significantly reduce control overhead. These implementations include specialized architectures that minimize gate count and power consumption, parallel processing structures that increase throughput without proportional increases in overhead, and configurable designs that can adapt to different error correction requirements.

- Dynamic Parity Check Mechanisms: Dynamic parity check mechanisms adjust their operation based on system conditions to optimize the balance between error protection and overhead. These systems can modify their parity check matrices on-the-fly, adapt to changing error patterns, or selectively apply different levels of error protection to different data segments, thereby reducing the average overhead while maintaining adequate protection.

- Hybrid Error Detection and Correction Schemes: Hybrid schemes combine multiple error detection and correction techniques to optimize the trade-off between protection and overhead. These approaches may integrate simple parity checks with more complex error correction codes, use hierarchical error protection strategies, or combine hardware and software error handling mechanisms to achieve efficient error management with minimal control overhead.
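
All of the schemes listed above rest on the same core operation: multiplying a received word by a parity check matrix over GF(2) to obtain a syndrome. The sketch below uses the small (7,4) Hamming parity check matrix purely as a toy stand-in. It is not an LDPC code, since real LDPC matrices are far larger and sparse, but the syndrome computation has the same form. The names `H` and `syndrome` are illustrative.

```python
# Parity check matrix of the (7,4) Hamming code; column j (1-indexed) is j in binary,
# least-significant bit in the first row.
H = [
    [1, 0, 1, 0, 1, 0, 1],
    [0, 1, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]


def syndrome(H, word):
    """Compute H * word over GF(2): one parity check (XOR of selected bits) per row."""
    return [sum(h & x for h, x in zip(row, word)) % 2 for row in H]


codeword = [1, 1, 1, 0, 0, 0, 0]  # satisfies all three checks
received = codeword.copy()
received[4] ^= 1                  # flip the bit at position 5 (1-indexed)

print(syndrome(H, codeword))      # [0, 0, 0]
s = syndrome(H, received)
print(s)                          # [1, 0, 1]
print(s[0] + 2 * s[1] + 4 * s[2]) # 5, the position of the flipped bit
```

An all-zero syndrome means every parity check is satisfied. For this particular matrix a nonzero syndrome directly names the position of a single flipped bit; LDPC decoders instead feed the unsatisfied checks into an iterative decoding procedure.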

02 Error Correction Circuit Architectures

Specialized circuit architectures for parity check operations can reduce control overhead in error correction systems. These architectures include parallel processing units, pipeline structures, and dedicated hardware accelerators that efficiently implement parity check operations. By optimizing the circuit design specifically for parity check calculations, the control overhead can be minimized while maintaining high throughput and low latency in error detection and correction processes.

03 Dynamic Parity Check Adaptation

Dynamic adaptation of parity check operations based on channel conditions or system requirements can optimize control overhead. These systems can adjust the number of parity bits, the complexity of the check matrix, or the decoding algorithm based on real-time error rates or available processing resources. This adaptive approach ensures that only the necessary overhead is used for each specific communication scenario, improving overall system efficiency.

04 Memory-Efficient Parity Check Implementations

Memory-efficient implementations of parity check circuits reduce storage requirements and associated control overhead. These implementations use techniques such as compressed representation of parity check matrices, efficient storage structures, and optimized memory access patterns. By reducing the memory footprint required for parity check operations, these approaches minimize the control overhead while maintaining error correction capabilities.

05 Hardware-Software Co-design for Parity Check Systems

Hardware-software co-design approaches optimize the implementation of parity check operations across both hardware and software components. These systems distribute parity check operations between dedicated hardware accelerators and software processing based on complexity, performance requirements, and available resources. This balanced approach minimizes control overhead by leveraging the strengths of both hardware and software implementations while addressing their respective limitations.
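
As a sketch of the compressed parity check matrix representation mentioned under memory-efficient implementations, one common approach is to store, for each check row, only the column indices of its nonzero entries. The 4 × 8 matrix below is a made-up example, and `to_sparse` and `syndrome_sparse` are hypothetical helper names; production decoders typically use more structured formats (for example, quasi-cyclic shift tables), which this sketch does not attempt to model.

```python
def to_sparse(H):
    """Keep only the column indices of the 1-entries in each row."""
    return [[j for j, h in enumerate(row) if h] for row in H]


def syndrome_sparse(rows, word):
    """Evaluate each parity check by XOR-ing only the bits that participate in it."""
    out = []
    for cols in rows:
        bit = 0
        for j in cols:
            bit ^= word[j]
        out.append(bit)
    return out


# Made-up sparse parity check matrix: 4 checks over 8 bits, 3 ones per row.
H_dense = [
    [1, 1, 0, 0, 1, 0, 0, 0],
    [0, 1, 1, 0, 0, 1, 0, 0],
    [0, 0, 1, 1, 0, 0, 1, 0],
    [1, 0, 0, 1, 0, 0, 0, 1],
]
H_sparse = to_sparse(H_dense)
print(H_sparse)  # [[0, 1, 4], [1, 2, 5], [2, 3, 6], [0, 3, 7]]

word = [1, 0, 0, 0, 1, 0, 0, 1]           # satisfies all four checks
print(syndrome_sparse(H_sparse, word))    # [0, 0, 0, 0]

word[2] ^= 1                              # flip bit 2, which appears in checks 1 and 2
print(syndrome_sparse(H_sparse, word))    # [0, 1, 1, 0]
```

Here 12 stored indices replace 32 matrix entries, and the work per check scales with the row weight rather than the codeword length. The saving grows with matrix size as long as the row weight stays small.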

Leading Organizations in Parity Check Circuit Development

The quantum parity check circuit implementation landscape is currently in an early growth phase, characterized by increasing market interest but limited commercial deployment. The market is expected to expand significantly as quantum computing advances, with projections suggesting a compound annual growth rate of 25-30% over the next five years. Leading technology conglomerates like IBM, Samsung Electronics, and Siemens AG are investing heavily in quantum circuit optimization research, while specialized players such as Infineon Technologies and Synopsys are developing hardware-efficient implementations. Academic institutions including KAIST and CNRS are contributing fundamental research, creating a competitive ecosystem where minimizing control overhead represents a critical differentiator for practical quantum computing applications.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed innovative parity check implementations for their memory products, particularly in DRAM and NAND flash technologies. Their approach focuses on integrating parity generation and checking directly into memory arrays with minimal additional control circuitry. For their high-performance memory products, Samsung has implemented on-die parity checking that operates in parallel with normal read operations, effectively eliminating control overhead for data integrity verification. Their enterprise SSDs incorporate advanced parity-based error detection schemes that operate at multiple levels of the storage hierarchy, from individual memory cells to complete data blocks. These implementations leverage specialized hardware accelerators that perform parity calculations with minimal control overhead, enabling high-throughput error detection without impacting normal I/O operations. Samsung's research has also explored novel circuit techniques for implementing parity checks in emerging memory technologies such as MRAM and PRAM, where traditional approaches may not be directly applicable due to different error mechanisms and reliability characteristics.

Strengths: Deeply integrated with memory architectures; highly optimized for specific memory technologies; excellent scalability across different capacity points. Weaknesses: Solutions are primarily focused on memory subsystems; may require adaptation for general logic applications.

Infineon Technologies AG

Technical Solution: Infineon has developed specialized parity check implementations for their automotive and industrial microcontroller platforms, focusing on functional safety applications where reliability is paramount. Their AURIX and XMC microcontroller families incorporate hardware-accelerated parity check mechanisms that operate with minimal CPU intervention, reducing control overhead while maintaining ISO 26262 compliance for safety-critical systems. Infineon's approach leverages dedicated peripheral modules that perform continuous memory integrity checks using optimized parity algorithms that require minimal control signals. For power-sensitive applications, Infineon has developed ultra-low-power parity check implementations that utilize clock gating and power domain isolation to minimize both dynamic and static power consumption while maintaining error detection capabilities. Their security controllers feature specialized parity check circuits designed to detect fault injection attacks with minimal additional control logic, providing an efficient defense mechanism against certain classes of hardware attacks.

Strengths: Highly optimized for safety-critical applications; excellent power efficiency; robust performance in harsh environments. Weaknesses: Solutions are primarily targeted at specific application domains; may require adaptation for general-purpose computing environments.

Key Innovations in Control Overhead Reduction

Circuits for implementing parity computation in a parallel architecture LDPC decoder

Patent US8347167B2 (Active)

Innovation

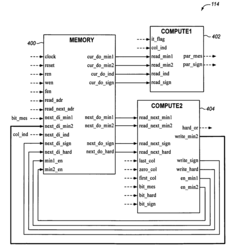

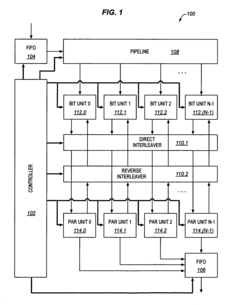

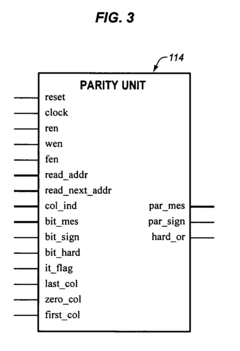

- A parity unit circuit is introduced, comprising three logic blocks: a memory logic block for data storage, a first compute logic block for computing parity messages and their signs, and a second compute logic block for updating data for the next iteration, allowing for high performance while minimizing area utilization on the integrated circuit.

Power Efficiency Considerations in Parity Check Circuits

Power efficiency has emerged as a critical consideration in the design and implementation of parity check circuits, particularly when aiming for minimal control overhead. The energy consumption of these circuits directly impacts the overall system performance, operational costs, and environmental footprint. Modern parity check implementations must balance computational accuracy with power constraints, especially in battery-operated devices and large-scale computing environments.

The power consumption in parity check circuits can be categorized into static and dynamic components. Static power dissipation occurs due to leakage currents when transistors are in the off state, while dynamic power consumption results from the charging and discharging of capacitive loads during switching activity. In traditional implementations, dynamic power often dominates the total energy profile, accounting for approximately 70-80% of power consumption in typical CMOS-based parity check circuits.

Recent advancements in low-power design techniques have introduced several approaches to minimize energy requirements. Clock gating has proven effective in reducing dynamic power by selectively disabling clock signals to inactive circuit portions. This technique can achieve power savings of 15-30% in parity check implementations without compromising functional integrity. Similarly, power gating strategies disconnect idle circuit blocks from the power supply, significantly reducing static power dissipation.

Voltage scaling represents another promising approach, where operating voltage is dynamically adjusted based on performance requirements. For parity check circuits with variable throughput demands, adaptive voltage scaling can optimize energy efficiency while maintaining necessary computational capabilities. Studies indicate potential power reductions of 25-40% through intelligent voltage management systems.
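
The reason voltage scaling is so effective follows from the standard first-order model of CMOS dynamic power. The symbols here are introduced for illustration and do not appear in the original text: $\alpha$ is the switching activity factor, $C_L$ the switched capacitance, $V_{DD}$ the supply voltage, and $f$ the clock frequency.

```latex
P_{\text{dyn}} = \alpha \, C_L \, V_{DD}^{2} \, f
\qquad\Longrightarrow\qquad
\frac{P_{\text{dyn}}(0.8\,V_{DD})}{P_{\text{dyn}}(V_{DD})} = 0.8^{2} = 0.64
```

Under this model, lowering the supply by 20% at a fixed frequency and activity factor cuts dynamic power by 36%, which falls inside the 25-40% range quoted above. In practice a lower supply also reduces the maximum achievable clock frequency, so the usable saving depends on how much timing slack the parity logic has.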

The choice of implementation technology substantially influences power characteristics. While conventional planar bulk CMOS offers reliability and manufacturing maturity, newer transistor architectures such as FinFET and FD-SOI provide superior power efficiency at nanoscale dimensions. These technologies demonstrate 30-50% lower power consumption compared to conventional planar CMOS implementations for equivalent parity check functionality.

Circuit topology optimization presents additional opportunities for power reduction. Restructuring parity check circuits to minimize signal transitions and critical path delays can significantly reduce switching activity and associated dynamic power consumption. Algorithmic transformations that preserve mathematical equivalence while reducing computational complexity have demonstrated power savings of 20-35% in practical implementations.

For applications requiring ultimate power efficiency, asynchronous circuit design methodologies offer compelling advantages. By eliminating the global clock and operating based on local handshaking protocols, these implementations can achieve power reductions of 40-60% compared to synchronous counterparts, particularly in scenarios with irregular computational patterns typical in advanced error correction systems.

Scalability Challenges for Complex Error Detection Systems

As quantum computing systems scale up, error detection mechanisms face significant challenges that threaten their effectiveness and efficiency. Parity check circuits designed for minimal control overhead see their control complexity grow rapidly when deployed across larger qubit arrays. Traditional error detection architectures that perform adequately in small-scale systems often break down when confronted with the interconnection requirements of complex quantum processors. The communication bandwidth between classical control systems and quantum hardware becomes a critical bottleneck, particularly when real-time error correction is necessary.

The resource overhead for implementing comprehensive parity checks grows non-linearly with system size. For every additional qubit incorporated into the error detection framework, the number of potential error correlations increases combinatorially, demanding more sophisticated parity check circuits. This scaling challenge is further compounded by the physical constraints of quantum hardware, where routing limitations and cross-talk effects become more pronounced in larger systems.
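The combinatorial growth can be illustrated by counting Pauli error patterns. On n qubits there are C(n, w) ways to choose which w qubits are affected and 3 possible non-identity Pauli errors (X, Y, Z) per affected qubit. The sketch below uses this count as one possible reading of "potential error correlations"; the function name is illustrative.

```python
from math import comb


def pauli_errors_up_to_weight(n_qubits, max_weight):
    """Count Pauli error patterns on n_qubits with weight <= max_weight.

    Weight w contributes C(n, w) * 3**w patterns; w = 0 is the no-error case.
    """
    return sum(comb(n_qubits, w) * 3 ** w for w in range(max_weight + 1))


for n in (5, 10, 20, 50):
    print(n, pauli_errors_up_to_weight(n, 1), pauli_errors_up_to_weight(n, 2))
```

The weight-1 count grows linearly with the number of qubits, while the weight-2 count already grows quadratically, and each additional weight adds another polynomial degree.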

Time complexity presents another significant scalability hurdle. As quantum circuits grow in depth and breadth, the time required to execute comprehensive error detection protocols increases substantially. This temporal overhead directly impacts computational throughput and can potentially exceed the coherence time of the quantum system itself, rendering the error detection mechanism ineffective.
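A rough timing budget makes this constraint tangible. The sketch below estimates the duration of one syndrome extraction round as a number of two-qubit gate layers plus measurement and reset, and then asks how many rounds fit into a given coherence time. All durations are hypothetical placeholders in integer nanoseconds, not figures for any particular hardware platform.

```python
def syndrome_round_time_ns(gate_layers, t_gate_ns, t_measure_ns, t_reset_ns):
    """Duration of one parity check round: gate layers, then measure and reset."""
    return gate_layers * t_gate_ns + t_measure_ns + t_reset_ns


def rounds_within_coherence(t_coherence_ns, round_time_ns):
    """Whole number of rounds that complete before the coherence time elapses."""
    return t_coherence_ns // round_time_ns


# Assumed values for illustration only.
round_ns = syndrome_round_time_ns(gate_layers=4, t_gate_ns=40,
                                  t_measure_ns=500, t_reset_ns=200)
rounds = rounds_within_coherence(t_coherence_ns=100_000, round_time_ns=round_ns)
print(f"round time: {round_ns} ns, rounds per coherence window: {rounds}")
```

In this simple model the measurement and reset terms dominate the round time, so deeper parity check circuits or slower readout directly shrink the number of rounds available.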

The control electronics required for managing complex parity check operations also face scaling limitations. Current FPGA and ASIC solutions struggle to maintain the necessary parallelism for simultaneous parity check operations across large qubit arrays. The heat dissipation and power requirements for such control systems create practical engineering constraints that must be addressed through novel architectural approaches.

Data processing and analysis of error syndromes become increasingly challenging at scale. The classical computing resources needed to process the error information in real time grow dramatically with system size, creating a secondary bottleneck in the error detection pipeline. Machine learning approaches show promise but themselves require significant computational resources to train and deploy effectively.
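The classical side of this pipeline can be sketched with the smallest useful example: a syndrome is the product of a parity check matrix and an error vector modulo 2, and a lookup table maps each syndrome to a likely error location. The sketch below uses the 3-bit repetition code as an assumed stand-in for a real code, with illustrative function names, and handles single-bit errors only.

```python
# Parity check matrix of the 3-bit repetition code (rows compare neighbouring bits).
H = [
    [1, 1, 0],
    [0, 1, 1],
]


def syndrome(check_matrix, error):
    """Compute H * e mod 2 as a tuple, one bit per parity check."""
    return tuple(
        sum(h * e for h, e in zip(row, error)) % 2 for row in check_matrix
    )


def build_lookup(check_matrix):
    """Map each syndrome to the index of the single flipped bit causing it.

    The all-zero syndrome maps to None (no correction needed).
    """
    n = len(check_matrix[0])
    table = {syndrome(check_matrix, [0] * n): None}
    for i in range(n):
        e = [0] * n
        e[i] = 1
        table[syndrome(check_matrix, e)] = i
    return table


lookup = build_lookup(H)
observed = syndrome(H, [0, 1, 0])   # a flip on the middle bit
print("syndrome:", observed, "-> flip bit", lookup[observed])
```

A table-based decoder of this kind needs up to 2^m entries for m parity checks, which is one reason exhaustive lookup stops being practical as the number of checks grows.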

Finally, the integration of minimal control overhead parity checks with other quantum operations presents scheduling conflicts that become more pronounced in larger systems. The time-sharing of control resources between computational operations and error detection functions requires increasingly sophisticated orchestration as system complexity grows.
