Hardware Co-Design For Low-Latency QEC Feedback Loops

SEP 2, 2025 · 9 MIN READ

QEC Hardware Co-Design Background and Objectives

Quantum Error Correction (QEC) represents a critical frontier in quantum computing, addressing the fundamental challenge of quantum decoherence. The evolution of QEC has progressed from theoretical concepts in the 1990s to practical implementations in recent years, with significant milestones including the development of surface codes, color codes, and more recently, low-density parity check (LDPC) codes. The trajectory of this field demonstrates an increasing focus on hardware-software co-design approaches to overcome the inherent limitations of quantum systems.

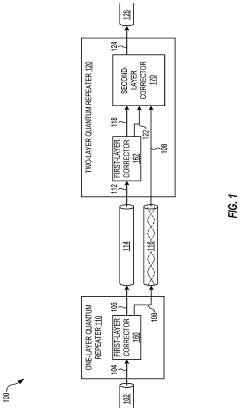

The primary objective of hardware co-design for low-latency QEC feedback loops is to develop integrated systems that can detect and correct quantum errors before they propagate and corrupt quantum information. This requires tight coordination between quantum processing units, classical control electronics, and specialized firmware so that error correction cycles complete well within the coherence time of the qubits, typically tens to hundreds of microseconds for superconducting devices.

Current technological trends indicate a convergence of specialized hardware accelerators, reconfigurable computing architectures, and optimized algorithms designed specifically for QEC operations. The field is moving toward more tightly coupled quantum-classical interfaces that minimize communication overhead and processing delays in the error correction cycle.

The technical goals for low-latency QEC feedback systems include reducing the end-to-end latency of error detection and correction to sub-microsecond levels, scaling the system to support thousands of physical qubits, and maintaining high fidelity throughout the error correction process. Additionally, these systems must be energy-efficient and physically compact to integrate with cryogenic quantum processors.

Historical analysis reveals that early QEC implementations focused primarily on algorithmic improvements, with hardware considerations often secondary. However, the recognition that quantum advantage requires both algorithmic sophistication and hardware optimization has driven the shift toward co-design methodologies. This approach treats the quantum processor, control electronics, and error correction algorithms as a unified system to be optimized holistically.

The anticipated evolution of this technology suggests movement toward specialized QEC processors that implement error syndrome extraction and decoding directly in hardware, potentially using application-specific integrated circuits (ASICs) or field-programmable gate arrays (FPGAs) operating at cryogenic temperatures. These developments aim to meet the timing constraint, described here as a "quantum speed limit", that error information must be processed faster than decoherence occurs.

Achieving these objectives would represent a significant milestone in quantum computing, potentially enabling the first fault-tolerant quantum computers capable of executing complex quantum algorithms without being overwhelmed by errors.

Market Analysis for Low-Latency Quantum Computing Solutions

The market for low-latency quantum computing solutions, particularly those focused on Quantum Error Correction (QEC) feedback loops, is experiencing significant growth as quantum computing transitions from theoretical research to practical applications. Market estimates cited for this segment projected that quantum computing technologies would reach approximately $1.3 billion by 2023, with hardware solutions specifically designed for error correction representing a crucial segment of this emerging market.

The demand for hardware co-design solutions addressing QEC feedback loops is primarily driven by research institutions, national laboratories, and technology corporations investing in quantum computing infrastructure. Organizations like IBM, Google, Microsoft, and Rigetti are making substantial investments in quantum hardware that can support efficient error correction mechanisms, recognizing that uncorrected errors remain one of the most significant barriers to quantum advantage.

Market analysis indicates that the financial sector shows particular interest in low-latency quantum solutions, with potential applications in high-frequency trading, risk modeling, and portfolio optimization. These applications require computational results within microseconds to milliseconds, making low-latency QEC feedback essential for practical implementation.

Healthcare and pharmaceutical industries represent another significant market segment, with an estimated 18% annual growth rate in quantum computing adoption. These sectors require quantum simulations for drug discovery and molecular modeling that maintain coherence long enough to produce meaningful results, directly benefiting from advances in QEC hardware co-design.

The defense and aerospace sectors are investing heavily in quantum-resistant cryptography and secure communications, creating demand for quantum computers with reliable error correction capabilities. Government funding in this area has increased by approximately 27% annually over the past three years, indicating strong market support for continued development.

From a geographical perspective, North America currently leads the market for quantum computing hardware solutions, accounting for roughly 45% of global investments. However, China, the European Union, and Japan are rapidly expanding their quantum initiatives with significant government backing, suggesting a more distributed market in the coming years.

Market forecasts predict that as quantum computers scale beyond 100 qubits toward the 1,000+ qubit systems currently in development, the demand for specialized hardware supporting low-latency QEC will grow exponentially. Industry analysts project that by 2026, hardware solutions specifically designed for error correction could represent up to 30% of the quantum computing hardware market.

The current market landscape features a mix of established technology companies and specialized quantum startups competing to develop the most effective hardware architectures for QEC implementation. This competitive environment is driving innovation and attracting venture capital, with quantum computing startups focused on hardware solutions raising over $800 million in 2022 alone.

Current QEC Hardware Challenges and Limitations

Quantum Error Correction (QEC) hardware implementations currently face significant challenges that impede the development of effective low-latency feedback loops. The primary limitation stems from the inherent tension between quantum coherence times and error detection/correction cycles. Current superconducting qubit systems typically demonstrate coherence times in the range of 100-300 microseconds, while the complete error syndrome measurement, classical processing, and feedback application cycle often requires comparable or longer timeframes.
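The tension between cycle time and coherence time can be made concrete with a simple latency budget. The sketch below is illustrative only: the `CycleBudget` class and all of its numbers are assumptions chosen to contrast a hardware-decoded loop with a slower software-in-the-loop path. Only the 100 microsecond coherence time and the 500 nanosecond readout are taken from the ranges quoted in this section.

```python
from dataclasses import dataclass


@dataclass
class CycleBudget:
    """Timing of one QEC feedback cycle; all fields are in nanoseconds."""
    gates_ns: float     # two-qubit gates that map errors onto ancillas
    readout_ns: float   # ancilla measurement
    decode_ns: float    # classical decoding of the syndrome
    feedback_ns: float  # routing and applying the correction pulse

    def total_ns(self) -> float:
        return self.gates_ns + self.readout_ns + self.decode_ns + self.feedback_ns

    def fraction_of_coherence(self, coherence_us: float) -> float:
        """Share of the coherence time consumed by one cycle."""
        return self.total_ns() / (coherence_us * 1_000.0)


# Hypothetical figures, chosen only to contrast two control stacks.
hardware_loop = CycleBudget(gates_ns=200.0, readout_ns=500.0,
                            decode_ns=1_000.0, feedback_ns=300.0)
software_loop = CycleBudget(gates_ns=200.0, readout_ns=500.0,
                            decode_ns=150_000.0, feedback_ns=50_000.0)

for name, budget in [("hardware", hardware_loop), ("software", software_loop)]:
    share = budget.fraction_of_coherence(coherence_us=100.0)
    print(f"{name}: {budget.total_ns():.0f} ns per cycle, "
          f"{share:.1%} of a 100 us coherence time")
```

With these assumed values the hardware path uses a small fraction of the coherence window, while the software path exceeds it, which is the situation the paragraph above describes.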

The physical implementation of syndrome extraction circuits presents a substantial bottleneck. These circuits require multiple two-qubit gates between data and ancilla qubits, each introducing potential error channels. Current two-qubit gate fidelities range from 99-99.9%, which remains insufficient for large-scale error correction without significant overhead. Additionally, measurement operations typically require 200-500 nanoseconds, with readout fidelities around 98-99%, creating another critical limitation point.
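A rough way to see why these fidelities matter is to estimate how often a single stabilizer check completes without any fault. The sketch below assumes four two-qubit gates per check (as for a weight-four surface code stabilizer), treats each fidelity as an independent success probability, and ignores idle and single-qubit errors. These are simplifying assumptions, not a model taken from the text.

```python
def clean_check_probability(gate_fidelity: float,
                            readout_fidelity: float,
                            gates_per_check: int = 4) -> float:
    """Probability that every gate and the readout of one check succeed.

    Assumes independent faults; gates_per_check=4 is an assumption that
    matches a weight-four stabilizer.
    """
    return gate_fidelity ** gates_per_check * readout_fidelity


# Lower and upper ends of the fidelity ranges quoted above.
for gate_f, readout_f in [(0.99, 0.98), (0.999, 0.99)]:
    p = clean_check_probability(gate_f, readout_f)
    print(f"gate={gate_f}, readout={readout_f}: clean check probability {p:.3f}")
```

Under these assumptions the result is roughly 0.941 at the low end and 0.986 at the high end, so even at the best quoted fidelities more than one check in a hundred is faulty before any data-qubit error is considered.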

Classical processing infrastructure faces its own set of challenges. The translation of analog measurement signals to digital syndrome information, followed by decoding and decision-making, introduces significant latency. Current FPGA-based decoders struggle to process complex surface codes in real-time, particularly as code distances increase. The physical separation between quantum processors and classical control systems further exacerbates this issue, with signal propagation delays becoming non-negligible.
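The simplest decoder that maps naturally onto FPGA logic is a lookup table from syndrome to correction. The toy example below uses a three-bit repetition code with classical bit flips only. It is a sketch of the lookup idea, not a surface code decoder, and all names in it are hypothetical. The table size doubles with every added syndrome bit, which is one reason this approach does not extend to large code distances.

```python
from typing import Dict, List, Optional, Tuple

# Syndrome bits: s0 = q0 XOR q1, s1 = q1 XOR q2.
# Each syndrome maps to the index of the single bit to flip, or None.
LOOKUP_TABLE: Dict[Tuple[int, int], Optional[int]] = {
    (0, 0): None,  # no error detected
    (1, 0): 0,     # flip on bit 0
    (1, 1): 1,     # flip on bit 1
    (0, 1): 2,     # flip on bit 2
}


def measure_syndrome(bits: List[int]) -> Tuple[int, int]:
    """Compute the two parity checks of the 3-bit repetition code."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


def correct(bits: List[int]) -> List[int]:
    """Apply the single-flip correction selected by the lookup table."""
    target = LOOKUP_TABLE[measure_syndrome(bits)]
    fixed = list(bits)
    if target is not None:
        fixed[target] ^= 1
    return fixed


print(correct([0, 1, 0]))  # single flip on bit 1 is undone
print(correct([1, 1, 0]))  # single flip on bit 2 of codeword 111 is undone
```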

Feedback application mechanisms represent another critical limitation. The application of correction operations must be precisely timed and calibrated to avoid introducing additional errors. Current systems often rely on software-defined pulse sequences that lack the flexibility and speed required for true real-time feedback. The control electronics bandwidth and timing resolution frequently become limiting factors in high-fidelity correction operations.

Integration challenges across the quantum-classical interface create additional complications. Most current systems were designed with experimental flexibility prioritized over latency optimization, resulting in architectures ill-suited for rapid feedback loops. The lack of standardized interfaces between quantum hardware and classical control systems further impedes progress toward unified co-design approaches.

Scalability presents perhaps the most daunting challenge. As quantum systems grow in size, the number of syndrome measurements per cycle grows quadratically with code distance for surface codes, placing enormous pressure on both the quantum and classical components of the system. Current architectures struggle to maintain consistent performance as system size increases, with error rates and latencies often degrading non-linearly with qubit count.

Current Hardware Co-Design Approaches for QEC

01 Hardware-software co-design for low-latency systems

Hardware-software co-design approaches integrate hardware and software development processes to optimize system performance and reduce latency. This methodology involves simultaneous design of hardware architecture and software algorithms to ensure efficient execution and minimal processing delays. By considering both hardware constraints and software requirements during the design phase, engineers can create systems with significantly reduced latency for time-critical applications.

- FPGA-based acceleration for low-latency processing: Field-Programmable Gate Arrays (FPGAs) provide a platform for implementing custom hardware accelerators that can significantly reduce processing latency. By offloading computationally intensive tasks from general-purpose processors to specialized FPGA circuits, systems can achieve lower latency and higher throughput. This approach enables parallel processing and custom datapath optimization that is particularly beneficial for applications requiring real-time performance.

- Power-efficient hardware design for low-latency applications: Power-efficient hardware architectures are crucial for low-latency systems, especially in mobile and embedded applications. These designs incorporate techniques such as dynamic voltage and frequency scaling, selective power gating, and optimized clock distribution networks to reduce energy consumption while maintaining low-latency performance. By balancing power efficiency with performance requirements, these approaches enable sustainable operation of low-latency systems in power-constrained environments.

- Hardware acceleration for machine learning with low latency: Specialized hardware accelerators for machine learning algorithms can dramatically reduce inference and training latency. These designs include custom ASICs, neural processing units, and optimized memory architectures specifically tailored for AI workloads. By implementing machine learning operations directly in hardware, these systems can achieve orders of magnitude improvement in processing speed and latency compared to software implementations on general-purpose processors.

- System-on-Chip (SoC) design for low-latency communication: System-on-Chip designs integrate multiple components including processors, memory, and specialized hardware accelerators on a single chip to minimize communication latency. These integrated architectures feature optimized on-chip interconnects, memory hierarchies, and dedicated communication channels that reduce data transfer delays. By eliminating off-chip communication bottlenecks, SoC designs can achieve ultra-low latency for data-intensive applications requiring rapid processing and response times.

02 FPGA-based acceleration for low-latency processing

Field-Programmable Gate Arrays (FPGAs) provide reconfigurable hardware platforms that can be optimized for specific computational tasks to achieve low latency. By implementing critical processing functions directly in hardware logic, FPGAs eliminate the overhead associated with general-purpose processors. This approach enables parallel processing capabilities and custom data paths that significantly reduce processing time for computationally intensive operations.

03 Hardware acceleration for neural networks and AI applications

Specialized hardware architectures designed specifically for neural network processing and AI workloads can dramatically reduce latency compared to general-purpose computing platforms. These designs incorporate parallel processing elements, optimized memory hierarchies, and dedicated circuitry for common AI operations such as matrix multiplication and activation functions. The co-design approach ensures that both hardware and software are optimized together to minimize processing delays for AI inference and training tasks.

04 Simulation and verification techniques for low-latency designs

Advanced simulation and verification methodologies are essential for developing and validating low-latency hardware-software systems. These techniques enable designers to identify performance bottlenecks, verify timing constraints, and optimize system behavior before physical implementation. Hardware-in-the-loop testing, emulation platforms, and cycle-accurate simulators help ensure that the co-designed system meets strict latency requirements under various operating conditions.

05 Power-efficient low-latency hardware architectures

Energy-efficient hardware architectures that maintain low-latency performance are critical for many applications, especially in mobile and embedded systems. These designs incorporate techniques such as dynamic voltage and frequency scaling, power gating, and specialized low-power processing elements. The co-design approach balances power consumption with latency requirements, creating systems that deliver fast response times while minimizing energy usage.

Key Industry Players in Quantum Error Correction

The quantum error correction (QEC) feedback loop hardware co-design landscape is currently in an early development stage, with market size expanding as quantum computing advances. The technology maturity varies significantly among key players. Research institutions like CEA, Xidian University, and Tsinghua University are establishing fundamental frameworks, while tech giants including Intel, Huawei, and NEC are developing integrated hardware solutions. Specialized quantum companies such as Rigetti are creating purpose-built architectures. The competitive landscape shows a blend of academic research pushing theoretical boundaries and corporate R&D focusing on practical implementations, with significant collaboration between sectors to overcome the substantial technical challenges of achieving low-latency QEC systems.

Commissariat à l'énergie atomique et aux énergies alternatives (CEA)

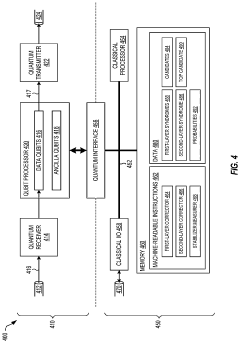

Technical Solution: CEA's hardware co-design approach for QEC feedback loops centers on their integrated quantum-classical architecture that minimizes latency through specialized hardware accelerators. Their system implements a distributed error processing framework where dedicated FPGAs handle real-time syndrome extraction and decoding while maintaining quantum coherence. CEA has developed custom analog-to-digital converters with sub-nanosecond sampling intervals specifically optimized for qubit state readout, enabling faster error detection. Their architecture includes a hierarchical memory system where frequently used error correction patterns are cached in ultra-fast SRAM located physically close to the quantum processing unit. The system employs proprietary signal processing algorithms implemented directly in hardware that can identify and classify error patterns with minimal computational overhead. CEA's recent advancements include the development of radiation-hardened control electronics that can operate reliably in close proximity to quantum systems without introducing additional noise or errors.

Strengths: CEA's system achieves exceptionally low latency through hardware-accelerated error syndrome processing and optimized signal paths. Their integrated approach provides excellent scalability for larger quantum systems. Weaknesses: The specialized hardware components require significant development resources and are difficult to update once deployed. The system also demands precise calibration procedures that must be regularly maintained for optimal performance.

Intel Corp.

Technical Solution: Intel's hardware co-design for QEC feedback loops leverages their expertise in classical computing architecture to create specialized control systems for quantum processors. Their approach centers on Horse Ridge, a cryogenic control chip manufactured using Intel's 22nm FinFET technology, specifically designed to operate at temperatures around 4 Kelvin. This chip integrates directly with quantum systems to minimize latency in error correction feedback loops. Intel's architecture implements a hierarchical error processing system where low-level error syndromes are processed locally by dedicated hardware before being passed to higher-level correction algorithms. The system includes specialized instruction sets optimized for quantum error correction operations, allowing for rapid syndrome decoding and correction decision-making. Intel has also developed custom interconnect technologies that maintain signal integrity between the quantum processing unit and classical control electronics while minimizing thermal load on the cryogenic system.

Strengths: Intel's manufacturing expertise enables highly integrated control systems with exceptional reliability and performance consistency. Their cryogenic control chips significantly reduce signal path lengths, decreasing latency in QEC feedback loops. Weaknesses: The approach is heavily optimized for silicon-based quantum computing architectures, potentially limiting applicability to other quantum technologies. The system also requires complex thermal management to balance computational performance with cryogenic cooling requirements.

Critical Technologies for Low-Latency QEC Implementation

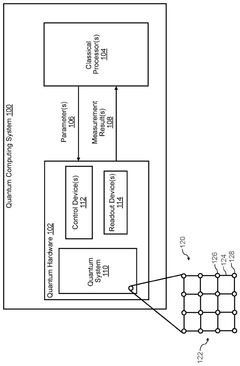

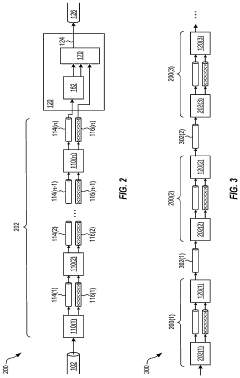

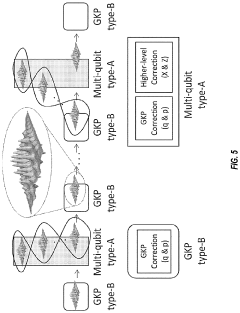

Nested quantum error correction codes for fault-tolerant quantum computation

Patent WO2025096766A1

Innovation

- The implementation of nested quantum error correction (QEC) codes, which consist of an inner surface code and an outer high-rate parity check code, reduces qubit overhead and improves error correction capabilities.

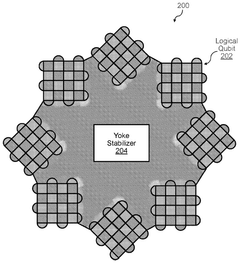

Quantum repeaters for concatenated quantum error correction, and associated methods

Patent (Active) US20230206110A1

Innovation

- The implementation of quantum repeaters using concatenated error correction codes, where a second-layer logical qubit is block-encoded by a plurality of physical qubits according to a second-layer code concatenated with a first-layer code. Errors are detected and corrected through first-layer and second-layer stabilizer measurements, which reduces both the resources required and the noise introduced.

Quantum Computing Standards and Benchmarking

The development of quantum computing standards and benchmarking frameworks is critical for evaluating hardware co-design approaches in low-latency QEC (Quantum Error Correction) feedback loops. Currently, the quantum computing industry lacks unified standards for measuring the performance of QEC implementations, particularly regarding latency metrics that are crucial for effective error correction.

Several organizations, including IEEE, NIST, and the Quantum Economic Development Consortium (QED-C), have initiated efforts to establish standardized benchmarks specifically addressing QEC feedback loop performance. These emerging standards aim to quantify key parameters such as detection-to-correction latency, error syndrome processing time, and overall system reliability under various noise conditions.

The Quantum Volume metric, while useful for general quantum computing capabilities, proves insufficient for evaluating QEC-specific hardware co-design approaches. More specialized benchmarks like Quantum Error Correction Overhead (QECO) and Feedback Loop Latency Index (FLLI) have been proposed to specifically measure the efficiency of error correction implementations and their associated feedback mechanisms.

Hardware-specific benchmarking suites have emerged to evaluate co-designed systems, focusing on the integration between classical control electronics and quantum processing units. These benchmarks measure signal propagation delays, classical processing overhead, and the fidelity of error correction operations under realistic operating conditions.

Cross-platform comparison remains challenging due to the diversity of hardware implementations. Recent efforts have focused on developing technology-agnostic benchmarks that can fairly evaluate superconducting, trapped-ion, and photonic systems implementing QEC protocols with varying latency requirements.

The time-to-correction metric has gained prominence as a critical standard for evaluating QEC feedback loops. This end-to-end measurement captures the total elapsed time from error occurrence to the application of the corresponding correction, providing a holistic view of system performance that encompasses both quantum and classical components of the co-designed architecture.
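As a sketch of how a time-to-correction figure might be computed from logged timestamps, the example below takes pairs of (detection time, correction time) and reports the median and worst case. The event format and function names are hypothetical. Note also that a real system can only timestamp the syndrome measurement that reveals an error, not the error itself, so the measured interval is a lower bound on the metric as defined above.

```python
import statistics
from typing import Dict, List, Sequence, Tuple


def time_to_correction_ns(events: Sequence[Tuple[int, int]]) -> List[int]:
    """Latency of each event from (detected_ns, corrected_ns) timestamp pairs."""
    return [corrected - detected for detected, corrected in events]


def summarize(latencies_ns: Sequence[int]) -> Dict[str, float]:
    """Median and worst-case latency; the worst case bounds the feedback loop."""
    return {
        "median_ns": float(statistics.median(latencies_ns)),
        "max_ns": float(max(latencies_ns)),
    }


# Hypothetical log of three correction events, timestamps in nanoseconds.
log = [(0, 900), (1_000, 2_100), (2_000, 2_800)]
print(summarize(time_to_correction_ns(log)))
```

Reporting the worst case alongside the median matters here because a single late correction can be enough to let an error propagate.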

Standardized test circuits, such as the Surface Code Benchmark Suite and the Bacon-Shor Verification Tests, offer reproducible workloads for evaluating hardware co-design approaches across different technological platforms. These test suites simulate realistic error correction scenarios while measuring the system's ability to maintain quantum information under various noise models.

Several organizations, including IEEE, NIST, and the Quantum Economic Development Consortium (QED-C), have initiated efforts to establish standardized benchmarks specifically addressing QEC feedback loop performance. These emerging standards aim to quantify key parameters such as detection-to-correction latency, error syndrome processing time, and overall system reliability under various noise conditions.

The Quantum Volume metric, while useful for general quantum computing capabilities, proves insufficient for evaluating QEC-specific hardware co-design approaches. More specialized benchmarks like Quantum Error Correction Overhead (QECO) and Feedback Loop Latency Index (FLLI) have been proposed to specifically measure the efficiency of error correction implementations and their associated feedback mechanisms.

Hardware-specific benchmarking suites have emerged to evaluate co-designed systems, focusing on the integration between classical control electronics and quantum processing units. These benchmarks measure signal propagation delays, classical processing overhead, and the fidelity of error correction operations under realistic operating conditions.

Cross-platform comparison remains challenging due to the diversity of hardware implementations. Recent efforts have focused on developing technology-agnostic benchmarks that can fairly evaluate superconducting, trapped-ion, and photonic systems implementing QEC protocols with varying latency requirements.

The time-to-correction metric has gained prominence as a critical standard for evaluating QEC feedback loops. This end-to-end measurement captures the total elapsed time from error occurrence to the application of the corresponding correction, providing a holistic view of system performance that encompasses both quantum and classical components of the co-designed architecture.

Resource Requirements and Scalability Considerations

Implementing quantum error correction (QEC) feedback loops with low latency requires careful consideration of hardware resources and scalability challenges. Current quantum computing systems implementing QEC demand substantial classical computing resources for syndrome extraction, decoding, and feedback operations. These requirements scale non-linearly with qubit count, creating significant bottlenecks as systems grow. For small-scale demonstrations (50-100 qubits), dedicated FPGA-based decoders can manage the computational load with latencies under 10 microseconds, but these solutions face diminishing returns at larger scales.

Resource utilization analysis shows that memory bandwidth becomes a critical constraint in QEC implementations. High-performance systems require 10-100 GB/s of memory throughput to handle syndrome measurement data in real time. Power consumption is another significant challenge: current decoder implementations consume 50-200 W per module, so large-scale error correction systems could require kilowatts of power.
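A rough back-of-the-envelope sketch of where such figures can come from is given below. It assumes a rotated surface code (d² - 1 stabilizer measurements per patch per round), one bit per stabilizer, and illustrative values for the code distance, number of logical qubits, round time, and module count. The function names are hypothetical, and the raw syndrome rate is only a lower bound on memory traffic, since a decoder may read and write the data several times.

```python
def syndrome_rate_bytes_per_s(distance: int, logical_qubits: int,
                              round_time_ns: float) -> float:
    """Raw syndrome data rate, assuming one bit per stabilizer per round."""
    stabilizers_per_patch = distance * distance - 1  # rotated surface code
    bits_per_round = stabilizers_per_patch * logical_qubits
    rounds_per_s = 1e9 / round_time_ns
    return bits_per_round * rounds_per_s / 8


def decoder_power_w(modules: int, watts_per_module: float) -> float:
    """Total decoder power for a given number of modules."""
    return modules * watts_per_module


# Illustrative assumptions: distance 11, 1000 logical qubits, 1 microsecond rounds.
rate = syndrome_rate_bytes_per_s(distance=11, logical_qubits=1000, round_time_ns=1000)
print(f"{rate / 1e9:.1f} GB/s")  # prints "15.0 GB/s"

# Assumed 20 modules at the 50-200 W per-module range quoted above.
print(decoder_power_w(20, 50), decoder_power_w(20, 200))
```

Under these assumptions the raw stream alone reaches the low end of the 10-100 GB/s range, and twenty modules draw between 1 and 4 kW.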

The scalability trajectory indicates that hardware resources must grow approximately as O(n²) for surface codes, where n is the code distance. This quadratic scaling calls for architectural innovations to keep implementations practical. Custom ASICs offer promising efficiency improvements, potentially reducing power requirements by 5-10x compared to FPGA implementations, but development costs remain prohibitively high for many research groups.
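The quadratic relationship can be made concrete with a short sketch. It assumes the rotated surface code layout, in which a patch of distance d uses d² data qubits and d² - 1 measurement qubits. The code distance is written `distance` in the code, and the helper names are illustrative.

```python
def physical_qubits(distance: int) -> int:
    """Physical qubits in one rotated surface code patch (assumed layout)."""
    data = distance * distance
    ancilla = distance * distance - 1
    return data + ancilla


def growth_factor(d_small: int, d_large: int) -> float:
    """How much the qubit count grows when the distance increases."""
    return physical_qubits(d_large) / physical_qubits(d_small)


for d in (3, 5, 7, 11, 21):
    print(d, physical_qubits(d))

# Doubling the distance roughly quadruples the qubit count.
print(round(growth_factor(10, 20), 2))
```

Because the number of syndrome bits per round grows with the same d² term, the classical decoding and bandwidth burden follows the same curve.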

Distributed computing approaches present a viable path forward, with preliminary experiments demonstrating effective parallelization of decoding workloads across multiple processing nodes. These systems have achieved near-linear scaling efficiency up to 16 nodes, though communication overhead eventually dominates at higher node counts. Hybrid CPU-GPU architectures have shown particular promise, using GPU acceleration for syndrome decoding while CPUs handle control flow and feedback coordination.
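The shape of this trade-off can be illustrated with a toy model in which the decoding work divides evenly across nodes while communication cost grows linearly with node count. The model and its constants are assumptions chosen only to reproduce the qualitative behavior described above. They are not fitted to any experiment.

```python
def decode_time_us(nodes: int, work_us: float = 1000.0,
                   comm_us_per_node: float = 0.25) -> float:
    """Toy model: evenly divided work plus linearly growing communication cost."""
    return work_us / nodes + comm_us_per_node * (nodes - 1)


def scaling_efficiency(nodes: int) -> float:
    """Speedup over a single node, divided by the node count (1.0 is ideal)."""
    speedup = decode_time_us(1) / decode_time_us(nodes)
    return speedup / nodes


for n in (1, 4, 16, 64, 128):
    print(n, round(scaling_efficiency(n), 2))
```

With these placeholder constants, efficiency stays above 0.9 through 16 nodes and drops below 0.5 by 128 nodes, because the communication term overtakes the shrinking per-node work.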

Looking toward future implementations, resource-efficient QEC will likely require co-designed quantum-classical interfaces where decoder hardware is physically integrated with qubit control systems. This integration can potentially reduce communication latencies by an order of magnitude. Additionally, approximate decoding algorithms that trade marginal error correction performance for substantial resource savings show promise for practical large-scale systems, with recent implementations demonstrating comparable logical error rates while reducing computational requirements by up to 70%.
