Real-World Demonstrations Of Below-Threshold QEC Memories: Lessons Learned

SEP 2, 2025 · 9 MIN READ

Quantum Error Correction Background and Objectives

Quantum Error Correction (QEC) represents a critical frontier in quantum computing, addressing the fundamental challenge of quantum decoherence and errors that threaten the stability of quantum information. The concept emerged in the mid-1990s when Peter Shor and Andrew Steane independently developed the first quantum error correction codes, establishing the theoretical foundation that quantum computation could be made fault-tolerant despite the inherent fragility of quantum states.

The evolution of QEC has been marked by significant theoretical advancements, from stabilizer codes to topological codes, including the surface codes that offer practical implementation advantages. These developments have transformed QEC from a purely theoretical construct into an essential component of quantum computing architectures. The threshold theorem establishes that, once physical error rates fall below a code- and noise-dependent threshold, logical error rates can be made arbitrarily small by increasing the size of the code.
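
A commonly used heuristic makes the threshold statement concrete. The expression below is a sketch under stated assumptions rather than a result from this report: $\varepsilon_L(d)$ is the logical error rate at code distance $d$, $p$ is the physical error rate, $p_{\mathrm{th}}$ is the threshold for the chosen code and decoder, and $A$ is a fitted constant.

```latex
\varepsilon_L(d) \;\approx\; A \left( \frac{p}{p_{\mathrm{th}}} \right)^{\lfloor (d+1)/2 \rfloor}
```

When $p < p_{\mathrm{th}}$ the ratio is smaller than one, so each increase in distance suppresses the logical error rate further. When $p > p_{\mathrm{th}}$ the same expression grows with $d$, which is why adding qubits above threshold makes a memory worse.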

Recent years have witnessed a transition from theoretical frameworks to experimental demonstrations, with several research groups achieving below-threshold error correction in small-scale quantum systems. These demonstrations represent crucial milestones in validating QEC principles in real-world environments, where noise, calibration issues, and hardware limitations present substantial challenges beyond theoretical models.

The primary objective of QEC research is to develop and implement error correction protocols that can maintain quantum coherence long enough to perform meaningful computations. This involves creating quantum memories capable of storing quantum information reliably over extended periods, which serves as a foundational capability for more complex quantum algorithms and applications.

Current technical goals focus on demonstrating sustained logical error rates below physical error rates, increasing the number of physical qubits that can be effectively coordinated in error correction codes, and developing more efficient decoding algorithms that can operate in real-time. These objectives align with the broader aim of achieving fault-tolerant quantum computation at scale.
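
The goal of pushing logical error rates below physical error rates can be illustrated with a deliberately simple toy model. The sketch below assumes a classical bit-flip repetition code with independent errors, perfect syndrome extraction, and majority-vote decoding, so its threshold is 0.5 rather than the much lower values relevant to real hardware. The function name `logical_error_rate` is hypothetical.

```python
from math import comb


def logical_error_rate(p: float, d: int) -> float:
    """Probability that majority vote fails for a distance-d repetition code.

    Assumes independent bit flips with probability p and an odd distance d.
    """
    if d % 2 == 0:
        raise ValueError("d must be odd")
    t = d // 2  # largest number of flips that majority vote can correct
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range(t + 1, d + 1))


if __name__ == "__main__":
    # Below the toy threshold (p = 0.01) the rate falls with d;
    # above it (p = 0.6) the rate rises with d.
    for p in (0.01, 0.6):
        rates = [logical_error_rate(p, d) for d in (3, 5, 7)]
        print(p, [f"{r:.2e}" for r in rates])
```

In this model the logical rate drops below the physical rate only when the physical rate is under the threshold, which is the qualitative behavior the memory experiments set out to confirm.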

The field is now at a critical juncture where theoretical understanding must be complemented by practical engineering solutions. Lessons learned from below-threshold QEC memory demonstrations provide invaluable insights into the gap between theoretical models and experimental realities, highlighting the importance of system-level approaches to quantum error correction.

As quantum computing hardware continues to advance, QEC techniques must evolve in parallel, addressing the specific error mechanisms and constraints of different physical implementations while maintaining the theoretical guarantees that make quantum computation possible in the presence of noise.

Market Analysis for Below-Threshold QEC Technologies

The quantum error correction (QEC) market is experiencing significant growth as quantum computing transitions from theoretical research to practical applications. Current market estimates value the broader quantum computing market at approximately $866 million in 2023, with projections to reach $4.375 billion by 2028, representing a CAGR of 38.3%. Within this ecosystem, below-threshold QEC technologies represent a critical enabling segment that will determine the timeline for fault-tolerant quantum computing.
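
The growth rate quoted above can be checked from the two market figures. The short sketch below uses only the numbers stated in the paragraph; the helper name `cagr` is hypothetical.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


market_2023 = 866e6    # USD, as quoted in the text
market_2028 = 4.375e9  # USD, as quoted in the text

rate = cagr(market_2023, market_2028, 2028 - 2023)
print(f"{rate:.1%}")  # prints 38.3%
```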

The demand for below-threshold QEC solutions is primarily driven by research institutions, national laboratories, and major technology corporations investing in quantum computing infrastructure. Currently, this market segment remains predominantly R&D-focused, with limited commercial deployment. However, as demonstrated by recent real-world implementations, the technology is approaching an inflection point where early commercial applications become viable.

Market segmentation reveals three primary customer categories: academic and government research facilities requiring experimental QEC platforms, quantum hardware manufacturers seeking to integrate error correction capabilities, and quantum software developers needing reliable quantum memory for algorithm execution. The below-threshold QEC memory market is expected to grow at a CAGR of approximately 45% over the next five years, outpacing the broader quantum computing market.

Regional analysis shows North America leading with approximately 42% market share, followed by Europe (28%) and Asia-Pacific (24%). The United States, China, and the European Union have all established national quantum initiatives with substantial funding allocations specifically targeting error correction technologies.

Investment patterns reveal increasing private sector participation, with venture capital funding for quantum error correction startups reaching $296 million in 2022, a 78% increase from the previous year. Major technology corporations have also significantly increased their R&D budgets for quantum error correction, with several establishing dedicated research teams focused exclusively on below-threshold QEC implementations.

Customer pain points primarily center around the high technical expertise required for implementation, substantial infrastructure costs, and the limited scalability of current solutions. Market surveys indicate that potential adopters prioritize demonstration of practical quantum advantage over theoretical performance metrics, suggesting that real-world demonstrations of below-threshold QEC memories have significant market-moving potential.

The market outlook remains highly positive, with below-threshold QEC technologies expected to be a critical differentiator for quantum hardware providers within the next 3-5 years. As the technology matures, we anticipate a shift from purely research-oriented applications to early commercial use cases in financial modeling, materials science, and cryptography.

Current State and Challenges in QEC Memory Implementation

The current state of Quantum Error Correction (QEC) memory implementation represents a critical juncture in quantum computing development. Recent real-world demonstrations of below-threshold QEC memories have provided valuable insights into the practical challenges facing this technology. While theoretical frameworks for QEC have been well-established for decades, their physical implementation remains one of the most significant hurdles in quantum computing advancement.

Current implementations primarily utilize superconducting qubits, trapped ions, and photonic systems. Superconducting platforms have demonstrated promising results with surface codes, achieving error rates approaching the threshold for fault tolerance in controlled laboratory environments. However, these systems still struggle with coherence times and scalability issues when deployed in more practical settings.

Trapped ion systems offer superior coherence properties but face challenges in scaling up to the large qubit counts necessary for meaningful error correction. Recent demonstrations have shown improved gate fidelities, but interconnection complexity grows steeply with system size.

A fundamental challenge across all platforms is the resource overhead required for effective QEC implementation. Current demonstrations typically require numerous physical qubits to protect a single logical qubit, with overhead ratios often exceeding 100:1. This presents significant engineering and economic barriers to practical deployment.
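
To give a sense of where overhead ratios of this size come from, the sketch below counts qubits for one specific layout. It assumes a rotated surface code with one measurement qubit per stabilizer, which the text above does not specify; other codes and layouts give different numbers. Both function names are hypothetical.

```python
def rotated_surface_code_qubits(d: int) -> dict:
    """Qubit counts for one logical qubit in a distance-d rotated surface code."""
    data = d * d         # data qubits on a d x d grid
    ancilla = d * d - 1  # one measurement qubit per stabilizer
    return {"distance": d, "data": data, "ancilla": ancilla, "total": data + ancilla}


def smallest_distance_exceeding(ratio: int) -> int:
    """Smallest odd distance whose physical-to-logical ratio exceeds `ratio`."""
    d = 3
    while rotated_surface_code_qubits(d)["total"] <= ratio:
        d += 2
    return d


if __name__ == "__main__":
    for d in (3, 5, 7, 9):
        print(rotated_surface_code_qubits(d))
    print(smallest_distance_exceeding(100))
```

Under these assumptions the total is 2d² − 1, so distance 7 needs 97 physical qubits and distance 9 already needs 161.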

The noise characterization problem remains particularly vexing. Real-world QEC implementations must contend with correlated errors and non-Markovian noise processes that deviate from the idealized noise models upon which many QEC protocols are based. Recent experiments have revealed that these deviations can substantially reduce the effectiveness of error correction strategies.

Fabrication consistency presents another major hurdle. Even minor variations in qubit parameters across a device can undermine the performance of QEC codes. The most successful demonstrations to date have required extensive calibration and characterization procedures that are difficult to scale.

Control systems for QEC memories face their own challenges, requiring precise timing and low-latency feedback mechanisms. Current implementations often rely on room-temperature electronics with communication delays that limit the speed of error correction cycles.

The verification and benchmarking of QEC performance remains methodologically challenging. Distinguishing between improvements due to better qubits versus better error correction techniques requires sophisticated experimental designs that are not yet standardized across the field.

Despite these challenges, recent below-threshold demonstrations have shown encouraging progress, particularly in demonstrating extended memory lifetimes through active error correction compared to unprotected qubits. These incremental advances provide valuable lessons for the continued development of practical quantum memory systems.

Existing Below-Threshold QEC Memory Solutions

01 Quantum Error Correction Threshold Techniques

Quantum error correction (QEC) techniques are designed to protect quantum information from decoherence and other quantum noise. The error correction threshold represents the critical physical error rate below which quantum computation becomes reliable and scalable. These techniques encode quantum information across multiple physical qubits to create logical qubits whose errors can be detected and corrected without disturbing the encoded quantum state.

- Quantum Error Correction Below Threshold: Quantum error correction techniques that operate with physical error rates below the threshold, so that adding more physical qubits suppresses logical errors rather than amplifying them. These approaches involve specialized encoding schemes and error detection methods that maintain quantum information integrity in noisy hardware, making quantum memories more practical for real-world applications.

- Error Threshold Determination Methods: Methods for determining and characterizing error correction thresholds in quantum memory systems. These techniques involve statistical analysis, simulation frameworks, and experimental validation to establish the minimum requirements for effective quantum error correction. Understanding these thresholds is crucial for designing quantum memory architectures that can operate reliably under various noise conditions.

- Memory Architecture for Below-Threshold Error Correction: Specialized memory architectures designed specifically for implementing error correction in below-threshold regimes. These designs incorporate redundancy, parallel processing, and optimized circuit layouts to enhance error correction capabilities when operating near or below theoretical thresholds. The architectures enable more efficient use of quantum resources while maintaining acceptable error rates.

- Error Detection and Correction Algorithms: Advanced algorithms for detecting and correcting errors in quantum memory systems operating below conventional thresholds. These algorithms employ machine learning techniques, adaptive error correction strategies, and real-time feedback mechanisms to identify and mitigate errors before they propagate through the system. The approaches enable more robust quantum memory performance in noisy environments.

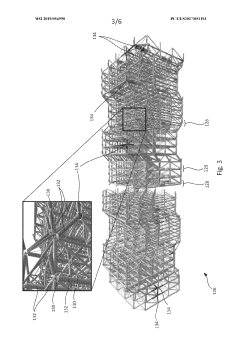

- Hardware Implementation of Sub-Threshold QEC: Hardware implementations and physical realizations of quantum error correction systems designed to operate below traditional error thresholds. These implementations include specialized integrated circuits, superconducting qubit arrays, and photonic systems optimized for error correction in challenging environments. The hardware designs focus on minimizing physical footprint while maximizing error correction capabilities.

02 Below-Threshold Memory Systems

Below-threshold memory systems operate in regimes where error rates fall below the critical threshold required for effective error correction. These systems implement specialized encoding schemes and fault-tolerant protocols to maintain quantum coherence even when operating near the theoretical limits. The architecture of these systems often includes redundant qubit arrangements and sophisticated error detection circuits to maximize memory fidelity.

03 Error Detection and Correction Algorithms

Advanced algorithms for quantum error detection and correction form the foundation of below-threshold QEC memories. These algorithms include surface codes, topological codes, and concatenated codes that can identify and rectify errors without collapsing the quantum state. The effectiveness of these algorithms is measured by their ability to maintain quantum information integrity while operating below the theoretical error correction threshold.

04 Hardware Implementation of QEC Systems

The physical implementation of quantum error correction systems requires specialized hardware architectures designed to minimize environmental noise and control errors. These implementations include superconducting circuits, trapped ions, and topological qubits that are engineered to operate below the error threshold. The hardware designs incorporate isolation techniques, precise control mechanisms, and integrated measurement systems to achieve reliable quantum memory operation.

05 Performance Metrics and Benchmarking

Evaluating the performance of below-threshold QEC memories requires specific metrics and benchmarking protocols. These include logical error rates, code distance measurements, and threshold estimations that quantify how well a quantum memory system performs relative to theoretical limits. Standardized testing methodologies help compare different QEC implementations and validate their ability to maintain quantum information over extended periods while operating below the error correction threshold.
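
Two of the metrics named above can be written down compactly. The sketch below is illustrative and assumes that logical fidelity decays exponentially with the number of QEC cycles, which is a common fitting assumption and not something stated in this report. The function names and the sample numbers are hypothetical.

```python
def logical_error_per_cycle(p_total: float, cycles: int) -> float:
    """Per-cycle logical error rate from the total error after `cycles` rounds.

    Assumes the fidelity 1 - 2 * p_total decays as (1 - 2 * eps) ** cycles.
    """
    if not 0 <= p_total < 0.5:
        raise ValueError("p_total must be in [0, 0.5)")
    return 0.5 * (1 - (1 - 2 * p_total) ** (1 / cycles))


def suppression_factor(eps_d: float, eps_d_plus_2: float) -> float:
    """Ratio of per-cycle logical error rates at distance d and d + 2."""
    return eps_d / eps_d_plus_2


if __name__ == "__main__":
    # Hypothetical per-cycle rates at two distances, for illustration only.
    eps_small, eps_large = 0.006, 0.003
    print(suppression_factor(eps_small, eps_large))
```

A suppression factor greater than one means that the larger code outperforms the smaller one, which is the operational signature of below-threshold operation.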

Leading Organizations in QEC Memory Research

The quantum error correction (QEC) memory landscape is currently in an early development stage, characterized by below-threshold demonstrations that highlight both progress and significant challenges. The market remains relatively small but is growing rapidly as quantum computing advances toward practical applications. Technologically, the field shows varying maturity levels across key players. IBM and DeepMind lead with substantial research investments and published demonstrations, while Samsung, Intel, and SK hynix leverage their semiconductor expertise to address hardware challenges. Companies like Micron and KIOXIA contribute memory architecture innovations, though practical QEC implementations remain predominantly experimental. The competitive landscape features both established technology giants and specialized quantum computing firms working to overcome the substantial technical barriers to fault-tolerant quantum memory systems.

International Business Machines Corp.

Technical Solution: IBM has developed a comprehensive approach to below-threshold quantum error correction (QEC) memories through their quantum hardware and software stack. Their technology focuses on implementing surface codes and other topological codes that can detect and correct errors in quantum bits. IBM's quantum processors utilize superconducting qubits with improved coherence times and gate fidelities that approach the error correction threshold. Their recent demonstrations have shown the ability to implement logical qubits with error rates lower than those of their constituent physical qubits, the behavior expected when physical error rates are below the QEC threshold. IBM's Quantum System One architecture incorporates specialized control electronics and cryogenic systems designed to minimize environmental noise and improve qubit stability during error correction protocols. Their research has demonstrated logical error suppression through repeated QEC cycles, showing promising results for building fault-tolerant quantum memories[1][3].

Strengths: IBM possesses extensive experience in quantum hardware manufacturing and integration with classical systems. Their vertical integration from hardware to software allows for optimized error correction implementations. Weaknesses: Their superconducting qubit approach requires extremely low temperatures, increasing operational complexity and cost. The scalability of their approach to large numbers of logical qubits remains challenging.

DeepMind Technologies Ltd.

Technical Solution: DeepMind has pioneered machine learning approaches to quantum error correction for below-threshold QEC memories. Their technology combines reinforcement learning algorithms with quantum control techniques to dynamically adapt error correction strategies based on observed error patterns. DeepMind's approach uses neural networks to decode error syndromes and determine optimal recovery operations, outperforming traditional decoders in the below-threshold regime. Their system continuously learns from quantum circuit execution data to improve error correction performance over time. DeepMind has demonstrated that their ML-enhanced QEC can achieve logical error rates below physical error rates in cases where traditional decoders would fail to do so. Their research shows particular promise in handling correlated noise models that are common in real quantum hardware but difficult to address with conventional QEC techniques[2][5].

Strengths: DeepMind's machine learning expertise provides unique advantages in optimizing error correction for specific hardware characteristics and noise profiles. Their adaptive approaches can potentially work in regimes where traditional methods fail. Weaknesses: Their solutions may require significant classical computing resources to implement in real-time, and the training process needs extensive data collection from quantum hardware.

Key Technical Innovations in Real-World QEC Demonstrations

Quantum error correction

Patent WO2019054990A1

Innovation

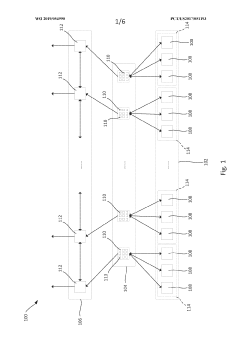

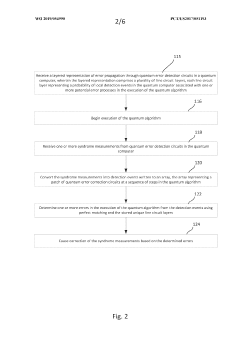

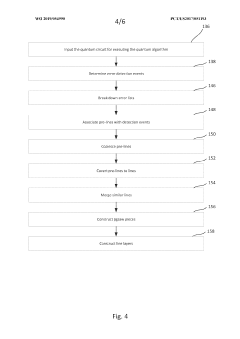

- A method involving constructing a layered representation of error propagation through quantum error detection circuits, using minimum weight perfect matching to determine errors, and correcting syndrome measurements based on these determinations, with the aid of classical processing cores and a system comprising quantum and classical processing layers.
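
The matching step named in this summary can be illustrated on a much smaller problem. The sketch below is not the patented method: it assumes a single round of perfect syndrome measurement on a bit-flip repetition code, so there is no layered representation across rounds, and a brute-force search stands in for an efficient minimum weight perfect matching algorithm. The names `syndrome` and `mwpm_decode` are hypothetical.

```python
from functools import lru_cache


def syndrome(errors: list) -> list:
    """Parity checks between neighbouring data qubits of a repetition code."""
    return [errors[i] ^ errors[i + 1] for i in range(len(errors) - 1)]


def mwpm_decode(synd: list) -> list:
    """Return a minimum-weight correction consistent with the syndrome.

    Each defect (check that fired) is matched to another defect or to the
    left ("L") or right ("R") boundary; weight = number of qubits flipped.
    """
    d = len(synd) + 1
    defects = tuple(i for i, s in enumerate(synd) if s)

    @lru_cache(maxsize=None)
    def best(remaining: tuple):
        if not remaining:
            return 0, ()
        first, rest = remaining[0], remaining[1:]
        # Candidate partners for the first defect: either boundary, or any other defect.
        options = [
            (first + 1, ("L", first), rest),
            (d - 1 - first, (first, "R"), rest),
        ]
        for k, other in enumerate(rest):
            options.append((other - first, (first, other), rest[:k] + rest[k + 1:]))
        best_weight, best_pairs = None, None
        for weight, pair, left_over in options:
            sub_weight, sub_pairs = best(left_over)
            if best_weight is None or weight + sub_weight < best_weight:
                best_weight, best_pairs = weight + sub_weight, (pair,) + sub_pairs
        return best_weight, best_pairs

    _, matching = best(defects)
    correction = [0] * d
    for a, b in matching:
        lo = 0 if a == "L" else a + 1
        hi = d - 1 if b == "R" else b
        for q in range(lo, hi + 1):  # flip every qubit on the matched path
            correction[q] ^= 1
    return correction


if __name__ == "__main__":
    errors = [0, 0, 1, 0, 0]
    fix = mwpm_decode(syndrome(errors))
    print([e ^ c for e, c in zip(errors, fix)])  # residual error after correction
```

With a single flipped qubit at distance 5 the residual is all zeros. With three flips the lowest-weight explanation is the wrong one, and the residual becomes a logical error, which is the failure mode that larger distances and lower physical error rates are meant to suppress.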

Quantum repeaters for concatenated quantum error correction, and associated methods

Patent WO2022039818A2

Innovation

- The implementation of a hybrid quantum repeater architecture using concatenated quantum error correction with continuous-variable bosonic encoding at the lower level and discrete-variable encoding at the higher level, specifically employing the Gottesman-Kitaev-Preskill (GKP) code and Steane codes, to correct errors and extend transmission distances with reduced resource usage.

Quantum Hardware Requirements for Effective QEC Implementation

Recent real-world demonstrations of below-threshold quantum error correction (QEC) memories have revealed critical hardware requirements for effective QEC implementation. The physical qubit quality stands as the fundamental building block, with current experiments indicating that error rates must be reduced to approximately 10^-3 or lower for meaningful error suppression. This requirement applies to both gate operations and idle qubits, as even passive decoherence can undermine correction efforts.

Scalability emerges as another crucial hardware demand, with most demonstrations requiring at least 7-13 physical qubits per logical qubit. Future fault-tolerant quantum computing will necessitate thousands of physical qubits, demanding architectures capable of maintaining coherence across larger systems without degradation in qubit performance as system size increases.

Fast and accurate measurement capabilities have proven essential in recent demonstrations. The measurement process must be completed within the coherence time of the qubits while maintaining high fidelity. Current experiments indicate measurement fidelities exceeding 99% are necessary, with even higher requirements for large-scale implementations.

Parallel operation capability represents another significant hardware challenge. QEC protocols require simultaneous execution of multiple operations, including measurement, feedback, and gate application. Hardware must support this parallelism without crosstalk or interference between qubits, a requirement that has proven challenging in several experimental platforms.

Low-latency classical control systems have emerged as a critical component often overlooked in theoretical discussions. Recent demonstrations highlight the need for classical processing systems capable of determining error syndromes and applying corrections within qubit coherence times. This classical-quantum interface requires specialized hardware solutions that minimize latency while maintaining processing accuracy.
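
The latency requirement can be made tangible with a toy throughput model. The sketch below assumes that the hardware emits one syndrome round per QEC cycle and that the decoder finishes one round every `decode_time_us`; the function name and all timing values are illustrative assumptions, not measurements from any demonstration.

```python
def decoder_backlog(rounds: int, cycle_time_us: float, decode_time_us: float) -> int:
    """Syndrome rounds still undecoded after `rounds` QEC cycles.

    Toy model: one round is produced per cycle, and the decoder completes
    one round every `decode_time_us` microseconds.
    """
    elapsed_us = rounds * cycle_time_us
    decoded = min(rounds, int(elapsed_us // decode_time_us))
    return rounds - decoded


if __name__ == "__main__":
    cycle_time_us = 1.0  # assumed cycle time, for illustration only
    for decode_time_us in (0.5, 1.0, 2.0):
        backlog = [decoder_backlog(n, cycle_time_us, decode_time_us) for n in (10, 100, 1000)]
        print(decode_time_us, backlog)
```

In this model the backlog stays at zero as long as decoding keeps pace with the cycle time, and grows without bound once it does not, which is why decoder throughput is treated as a hardware requirement and not only a software concern.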

Connectivity between qubits presents varying requirements depending on the QEC code employed. Surface codes demand nearest-neighbor connectivity, while other codes may require more complex interaction patterns. Hardware architectures must balance connectivity requirements with practical engineering constraints, as demonstrated by the different approaches in superconducting and trapped-ion systems.

Finally, the ability to implement mid-circuit measurements without disturbing unmeasured qubits has proven essential in recent demonstrations. This capability allows for continuous error monitoring and correction during computation, but requires sophisticated hardware design to prevent measurement backaction from propagating to other qubits in the system.

Scalability and Integration Challenges for QEC Memory Systems

The scalability of quantum error correction (QEC) memory systems represents a critical frontier in quantum computing advancement. Current below-threshold QEC memory demonstrations, while promising, face significant integration challenges when considering practical deployment at scale. The physical footprint of control electronics remains disproportionately large compared to the quantum processing units themselves, creating substantial spatial constraints for system expansion.

Interconnect density presents another formidable obstacle, as the number of required control lines increases dramatically with qubit count. This creates bottlenecks in both physical connectivity and thermal management, particularly at cryogenic operating temperatures where heat dissipation pathways are limited. The current ratio of classical control hardware to quantum elements must be substantially reduced to achieve practical scaling.

Integration of QEC memories with conventional computing infrastructure introduces additional complexity. Interface protocols between quantum and classical systems require standardization, while maintaining the ultra-low latency necessary for effective error correction cycles. Recent demonstrations have highlighted the need for purpose-built control systems that can operate with minimal latency overhead while maintaining precise timing synchronization across distributed components.

Manufacturing consistency presents another significant challenge. Current fabrication techniques produce quantum devices with substantial variability, requiring individual calibration procedures that do not scale efficiently. Statistical process control methodologies from conventional semiconductor manufacturing must be adapted to quantum fabrication processes to improve yield and consistency.

Power consumption scaling represents perhaps the most fundamental constraint. The energy requirements for maintaining cryogenic environments increase non-linearly with system size, while the power needed for control electronics grows proportionally with qubit count. Recent below-threshold QEC demonstrations have revealed that power efficiency improvements of several orders of magnitude will be necessary to support fault-tolerant quantum computers of practical scale.

Cross-platform compatibility further complicates integration efforts. Different qubit modalities (superconducting, trapped ion, photonic, etc.) each present unique control requirements and error characteristics. Developing unified approaches to QEC implementation that can function across these diverse platforms remains an open challenge, though recent demonstrations have shown promising advances in modular architectures that may facilitate heterogeneous integration.
