Real-Time Decoders: Architectures And Latency Considerations For QEC

QEC Decoder Evolution and Objectives

Quantum Error Correction (QEC) has evolved significantly since its theoretical inception in the mid-1990s. The development trajectory began with Peter Shor's groundbreaking quantum error correction code in 1995, followed by the formulation of the stabilizer formalism by Daniel Gottesman in 1997. These foundational works established the theoretical possibility of protecting quantum information against decoherence and operational errors, which had previously been considered insurmountable obstacles to practical quantum computing.

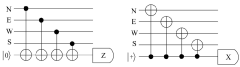

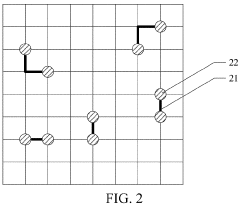

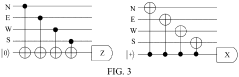

The evolution of QEC decoders has been characterized by increasing sophistication in error detection and correction mechanisms. Early decoders employed simple lookup tables and basic syndrome decoding techniques, which were effective for small-scale systems but lacked scalability. As quantum hardware advanced, more complex decoding algorithms emerged, including belief propagation, minimum-weight perfect matching (MWPM), and tensor network-based approaches.
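To make the lookup-table idea concrete, here is a minimal sketch for the 3-qubit bit-flip repetition code. The code choice, the function names, and the tie-breaking rule (prefer the lowest-weight error) are illustrative assumptions, not taken from any specific decoder described here.

```python
from itertools import product

# Parity checks of the 3-qubit bit-flip repetition code: Z1Z2 and Z2Z3.
CHECKS = [(0, 1), (1, 2)]


def syndrome(error):
    """Return the syndrome bits for a bit-flip pattern (tuple of 0/1)."""
    return tuple((error[a] + error[b]) % 2 for a, b in CHECKS)


def build_lookup_table(n_qubits=3):
    """Map each syndrome to the lowest-weight error that produces it."""
    table = {}
    # Visiting errors in order of increasing weight means the first error
    # stored for a syndrome is also a minimum-weight one.
    for error in sorted(product((0, 1), repeat=n_qubits), key=sum):
        table.setdefault(syndrome(error), error)
    return table


def decode(table, measured_syndrome):
    """Look up the correction for a measured syndrome."""
    return table[measured_syndrome]


if __name__ == "__main__":
    table = build_lookup_table()
    for s, correction in sorted(table.items()):
        print(s, "->", correction)
```

Decoding is a single dictionary lookup, which is why this approach is fast for small codes. The table needs one entry per possible syndrome, and that count doubles with every added parity check, which is the scalability limit mentioned above.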

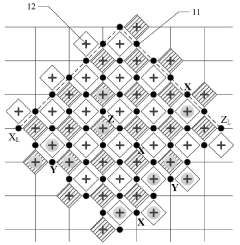

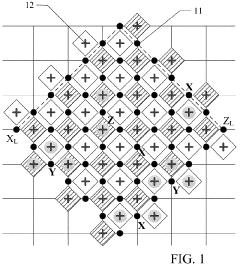

A significant milestone was reached with the development of surface codes, which grew out of Kitaev's toric code in the late 1990s and were analyzed as a practical quantum memory in the early 2000s. Surface codes offer an architecture for implementing QEC with high error thresholds using only nearest-neighbor interactions. This advancement shifted focus toward decoders that could efficiently process surface code syndromes while maintaining low latency, a critical requirement for fault-tolerant quantum computation.

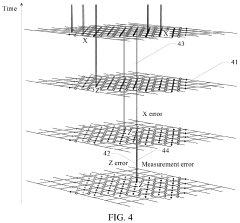

The primary objective of modern QEC decoders is to achieve real-time error correction that can keep pace with quantum operations. This requires decoders that process error syndromes at least as fast as they are generated and return corrections well within the coherence time of the qubits, typically on the order of microseconds to milliseconds depending on the physical implementation. Meeting this constraint requires architectures that balance decoding accuracy against processing speed.

Another crucial objective is scalability. As quantum processors grow in size, decoders must efficiently handle increasing numbers of physical qubits without exponential growth in computational resources or latency. This has driven research toward parallelizable decoding algorithms and specialized hardware implementations, including FPGA-based solutions and application-specific integrated circuits (ASICs).

Reducing the resource overhead of QEC represents another key goal. Current error correction schemes often require numerous physical qubits to encode a single logical qubit, significantly limiting the practical utility of quantum computers. Developing more efficient decoders that can achieve high fidelity with lower qubit overhead would substantially advance the field toward practical quantum advantage.
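As a rough illustration of this overhead, the sketch below counts physical qubits per logical qubit. It assumes a rotated surface code with d² data qubits and d² − 1 measurement qubits; other codes and layouts have different counts, and the function name is hypothetical.

```python
def physical_qubits_per_logical(d):
    """Physical qubits for one logical qubit in a rotated surface code.

    Assumption: d*d data qubits plus d*d - 1 measurement (ancilla) qubits.
    """
    data = d * d
    ancilla = d * d - 1
    return data + ancilla


if __name__ == "__main__":
    for d in (3, 5, 11, 25):
        print(f"d={d:2d}: {physical_qubits_per_logical(d)} physical qubits")
```

Under this assumption, overhead grows quadratically with code distance, so any decoder improvement that reaches a target logical error rate at a smaller distance translates directly into fewer physical qubits.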

The convergence of these objectives—real-time operation, scalability, and resource efficiency—defines the current frontier of QEC decoder research, with significant implications for the realization of fault-tolerant quantum computing systems capable of executing complex quantum algorithms.

Market Demand for Low-Latency QEC Solutions

The quantum computing industry is witnessing a significant surge in demand for low-latency Quantum Error Correction (QEC) solutions. As quantum systems scale beyond the NISQ (Noisy Intermediate-Scale Quantum) era toward fault-tolerant quantum computing, the need for real-time error correction becomes increasingly critical. Market research indicates that organizations developing quantum hardware are prioritizing QEC capabilities that can operate within strict latency constraints to maintain quantum coherence.

Financial institutions represent one of the most promising market segments, with quantum computing potentially offering speedups for portfolio optimization, risk assessment, and fraud detection algorithms. These applications require computational results within milliseconds to seconds, creating demand for QEC decoders that can process error syndromes with minimal overhead. According to industry analyses, financial services firms have increased their quantum computing investments by 35% annually since 2020, with QEC solutions being a primary focus area.

Pharmaceutical and materials science companies form another significant market segment seeking low-latency QEC solutions. These organizations require quantum simulations that maintain coherence long enough to model complex molecular interactions. The ability to correct errors in real-time directly impacts the scale and accuracy of these simulations, translating to potential breakthroughs worth billions in drug discovery and materials development.

National security and defense sectors have emerged as major drivers for advanced QEC technologies. Government agencies worldwide are investing heavily in quantum technologies that can maintain quantum states through rapid error correction, particularly for cryptographic applications. These organizations have stringent performance requirements, often demanding error correction cycles completed within microseconds.

Cloud quantum computing providers represent perhaps the most immediate commercial market for real-time decoders. As these companies scale their quantum offerings, the ability to provide reliable computation through effective QEC becomes a key differentiator. Market leaders are actively seeking hardware-software co-designed solutions that minimize decoder latency while maximizing error correction fidelity.

Industry analysts project the market for specialized QEC hardware accelerators to grow substantially over the next five years as quantum computers approach the threshold where error correction becomes practical. Venture capital funding for startups focused on QEC hardware solutions has tripled since 2021, reflecting strong market confidence in this technological direction.

The demand for low-latency QEC solutions spans multiple industries and applications, with common requirements for minimal decoding time, scalability with increasing qubit counts, and integration capabilities with existing quantum hardware platforms. Organizations are increasingly willing to invest in specialized hardware accelerators and optimized algorithms that can deliver real-time error correction performance.

Real-Time Decoder Technical Challenges

Real-time quantum error correction (QEC) decoders face several technical challenges that must be addressed before practical quantum computing systems are possible. The primary obstacle is latency: decoders must process error syndromes at least as fast as they are generated and determine corrections within the coherence time of the qubits, typically in the microsecond to millisecond range. This time constraint severely limits the computational complexity of the decoding algorithms that can be implemented.
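One way to see why the timing constraint is so strict is to track how many syndrome rounds pile up when the decoder is slower than the measurement cycle. The sketch below is a simplified throughput model that ignores start-up effects; the single-decoder assumption and the example timings are illustrative, not measured values.

```python
def backlog_after(n_rounds, t_round_ns, t_decode_ns):
    """Rounds still waiting to be decoded once n_rounds have been measured.

    Assumptions: one decoder that handles a single round at a time, a fixed
    decode time per round, and decoding that never runs ahead of measurement.
    All times are integers in nanoseconds.
    """
    elapsed_ns = n_rounds * t_round_ns
    decoded = min(n_rounds, elapsed_ns // t_decode_ns)
    return n_rounds - decoded


if __name__ == "__main__":
    # Hypothetical 1 us syndrome round with a slower and a faster decoder.
    for t_decode_ns in (1250, 800):
        for n in (1_000, 10_000):
            print(t_decode_ns, n, backlog_after(n, 1000, t_decode_ns))
```

In this model a decoder that is only 25% slower than the syndrome cycle falls behind by 200 rounds for every 1,000 measured, and the gap keeps growing. A decoder that is faster than the cycle never accumulates a backlog.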

Hardware implementation presents another major challenge. Traditional software-based decoders running on classical computers cannot meet the speed requirements for large-scale quantum systems. Application-specific integrated circuits (ASICs) and field-programmable gate arrays (FPGAs) offer promising alternatives but introduce their own complexities in terms of design, verification, and manufacturing.

The scalability of decoding solutions poses a significant hurdle as quantum computers grow in size. The number of possible error patterns grows exponentially with the number of physical qubits, so decoders must scale efficiently without compromising speed or accuracy. Current architectures struggle to maintain real-time performance beyond a few hundred qubits.

Parallelization of decoding algorithms represents both a challenge and an opportunity. While parallel processing can significantly reduce latency, designing truly parallel decoders requires fundamental rethinking of algorithms that were originally conceived as sequential processes. The communication overhead between parallel units can become a bottleneck if not carefully managed.
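One commonly discussed way to parallelize decoding is to cut the stream of syndrome rounds into overlapping windows that can be decoded independently. The sketch below only builds the window layout. The names `commit` and `buffer` and the chosen sizes are assumptions for illustration, and a real scheme also has to reconcile corrections at window boundaries.

```python
def make_windows(n_rounds, commit, buffer):
    """Split n_rounds into overlapping decode windows.

    Each window decodes its commit region plus `buffer` rounds of context
    on either side, but only the corrections inside the commit region are
    kept. Ranges are half-open: (start, end).
    """
    windows = []
    for commit_start in range(0, n_rounds, commit):
        commit_end = min(commit_start + commit, n_rounds)
        windows.append({
            "decode": (max(0, commit_start - buffer),
                       min(n_rounds, commit_end + buffer)),
            "commit": (commit_start, commit_end),
        })
    return windows


if __name__ == "__main__":
    for w in make_windows(n_rounds=10, commit=4, buffer=2):
        print(w)
```

The buffer rounds are the communication overhead in miniature: every window redoes work that its neighbors also do, so larger buffers add redundant computation while smaller ones give each window less context.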

Power consumption emerges as a critical concern, particularly for cryogenic quantum computing systems. Decoders must operate with minimal heat generation to avoid disturbing the delicate quantum state of nearby qubits. This constraint limits the computational resources available for decoding and necessitates energy-efficient design approaches.

Fault tolerance of the decoder itself cannot be overlooked. The classical hardware implementing the decoder is also susceptible to errors, which could propagate and compound quantum errors rather than correcting them. Implementing error detection and correction mechanisms within the classical decoder adds another layer of complexity.
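A standard classical technique for this kind of protection is triple modular redundancy, in which three copies of a computation vote on the result. The text above does not say which mechanism is used, so the sketch below is only an illustration of the general idea, applied to a correction word stored as an integer bitmask.

```python
def majority_vote(a, b, c):
    """Bitwise majority of three redundant decoder outputs.

    Each argument is an integer bitmask of proposed corrections. A bit is
    set in the result when at least two of the three copies agree on it.
    """
    return (a & b) | (a & c) | (b & c)


if __name__ == "__main__":
    good = 0b1010
    faulty = 0b0010  # one copy suffered a single-bit upset
    print(bin(majority_vote(good, good, faulty)))
```

The vote masks a fault in any single copy, at the cost of roughly tripling the decoder logic, which has to be weighed against the power and area limits discussed above.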

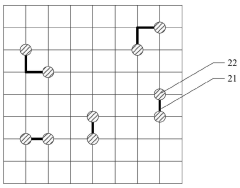

Integration with the quantum processing unit presents interface challenges. The decoder must efficiently receive syndrome measurements from the quantum system and rapidly communicate correction operations back to it. This bidirectional communication pathway must maintain high bandwidth while minimizing latency and potential sources of noise or interference.
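A back-of-the-envelope estimate shows how quickly the syndrome link fills up. The sketch assumes a rotated surface code that produces d² − 1 syndrome bits per round and a hypothetical 1 µs round time; both are illustrative, and real systems add framing and control overhead on top.

```python
def syndrome_bits_per_round(d):
    """Syndrome bits per round for one rotated surface code patch (assumed)."""
    return d * d - 1


def syndrome_rate_mbit_s(d, t_round_us, n_logical=1):
    """Raw syndrome data rate in Mbit/s (bits per microsecond)."""
    return n_logical * syndrome_bits_per_round(d) / t_round_us


if __name__ == "__main__":
    for d, n_logical in ((11, 1), (11, 100), (25, 100)):
        rate = syndrome_rate_mbit_s(d, t_round_us=1.0, n_logical=n_logical)
        print(f"d={d}, logical qubits={n_logical}: {rate:.0f} Mbit/s")
```

Under these assumptions a single distance-11 patch already produces 120 Mbit/s of raw syndrome data, and the rate scales linearly with the number of logical qubits.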

Adaptability to different QEC codes represents a final significant challenge. As quantum error correction codes continue to evolve, decoders must be flexible enough to accommodate various code structures without requiring complete redesign, balancing specialization for performance against generality for future-proofing.

Current Real-Time Decoder Implementations

01 Techniques for reducing decoder latency in real-time systems

Various techniques can be implemented to reduce decoder latency in real-time systems. These include optimizing buffer management, implementing parallel processing architectures, and utilizing hardware acceleration. By reducing the processing time required for decoding operations, these techniques help minimize the overall latency in real-time applications, ensuring smoother performance and better user experience.

- Network-based approaches for managing decoder latency: Network-based approaches focus on optimizing data transmission and reception to reduce decoder latency. These methods include implementing efficient network protocols, optimizing packet scheduling, and utilizing adaptive streaming technologies. By minimizing network-related delays, these approaches help ensure that data reaches decoders in a timely manner, reducing overall system latency in distributed real-time applications.

- Memory management strategies for low-latency decoding: Effective memory management is crucial for minimizing decoder latency in real-time systems. Strategies include implementing cache optimization techniques, utilizing memory prefetching, and designing efficient memory allocation algorithms. These approaches help reduce the time spent on memory access operations during the decoding process, thereby decreasing overall system latency and improving performance in time-sensitive applications.

- Synchronization mechanisms for real-time decoding: Synchronization mechanisms are essential for maintaining timing consistency in real-time decoding systems. These include implementing precise clock synchronization protocols, utilizing frame-level synchronization techniques, and developing adaptive timing adjustment algorithms. By ensuring that all components of a decoding system operate in a coordinated manner, these mechanisms help minimize latency variations and maintain consistent performance in real-time applications.

- Error handling and recovery techniques for low-latency decoders: Effective error handling and recovery techniques are vital for maintaining low latency in real-time decoding systems. These include implementing forward error correction, developing fast error detection algorithms, and utilizing adaptive error concealment methods. By quickly identifying and addressing errors without significant processing delays, these techniques help ensure that decoder latency remains minimal even when dealing with corrupted or incomplete data streams.

02 Network-based approaches for managing decoder latency

Network-based approaches focus on optimizing data transmission and reception to manage decoder latency in distributed systems. These approaches include implementing efficient streaming protocols, adaptive bitrate techniques, and network quality of service mechanisms. By optimizing how data is transmitted across networks before reaching decoders, these methods help maintain low latency even under varying network conditions.

03 Memory management strategies for real-time decoders

Effective memory management strategies are crucial for minimizing latency in real-time decoders. These strategies include implementing efficient cache utilization, optimizing memory allocation and deallocation, and employing memory prefetching techniques. By ensuring that data is readily available when needed by the decoder, these approaches help reduce waiting times and improve overall decoding performance.

04 Synchronization mechanisms for real-time decoding

Synchronization mechanisms are essential for maintaining timing accuracy in real-time decoders. These include implementing precise clock synchronization, frame timing management, and adaptive timing adjustment algorithms. By ensuring that decoding operations remain properly synchronized with other system components, these mechanisms help prevent timing-related latency issues in real-time applications.

05 Hardware-software co-design for low-latency decoders

Hardware-software co-design approaches focus on creating optimized solutions that leverage both hardware capabilities and software flexibility to minimize decoder latency. These approaches include designing application-specific integrated circuits, implementing field-programmable gate array solutions, and creating specialized firmware that works in tandem with hardware components. By considering both hardware and software aspects together, these solutions achieve better latency performance than either approach alone.

Leading Companies in QEC Decoder Development

Real-time decoders for QEC are evolving rapidly in a nascent but accelerating market, with significant growth potential as quantum computing advances toward practical implementation. The technology is moving from research into early commercial development, and the market is expected to expand substantially as quantum error correction becomes essential for scalable quantum systems. Leading players show varying levels of technological maturity. Google, Microsoft, and IBM are at the forefront with advanced decoder architectures, while Huawei, Tencent, and Origin Quantum are making significant investments in low-latency decoding solutions. Telecommunications companies such as Ericsson and Qualcomm are applying their expertise in real-time processing to specialized hardware accelerators. The result is a competitive landscape spanning technology giants, quantum startups, and research institutions working to overcome the latency challenges of quantum error correction.

Microsoft Technology Licensing LLC

Origin Quantum Computing Technology (Hefei) Co., Ltd.

Key Patents in Low-Latency QEC Technology

- A quantum error correction decoding system utilizing a neural network decoder with a multiply accumulate operation of unsigned fixed-point numbers, integrated into error correction chips, to quickly decode error syndrome information and correct errors in real-time, minimizing data volume and computational requirements.

- A quantum error correction decoding system and method utilizing neural network decoders with multiply accumulate operations on unsigned fixed-point numbers, integrated into an error correction chip, to quickly decode error syndrome information and determine error locations and types in quantum circuits, thereby enabling real-time error correction.
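The multiply-accumulate operation on unsigned fixed-point numbers mentioned in these summaries can be illustrated with a small sketch. The 8-bit width, the 4 fractional bits, and all function names are assumptions chosen for readability; they are not taken from the patents.

```python
def quantize(values, frac_bits=4, total_bits=8):
    """Convert non-negative floats to unsigned fixed-point integer codes."""
    max_code = (1 << total_bits) - 1
    scale = 1 << frac_bits
    # Clamp to the representable range so the codes stay unsigned.
    return [min(max_code, max(0, round(v * scale))) for v in values]


def mac(inputs_q, weights_q):
    """Multiply-accumulate over integer codes, as a hardware MAC unit would."""
    acc = 0
    for x, w in zip(inputs_q, weights_q):
        acc += x * w
    return acc


def to_float(acc, frac_bits=4):
    """Rescale an accumulator that holds products of two fixed-point codes."""
    return acc / (1 << (2 * frac_bits))


if __name__ == "__main__":
    syndrome_bits = quantize([1.0, 0.0, 1.0])  # hypothetical neuron inputs
    weights = quantize([0.5, 0.25, 0.75])      # hypothetical weights
    acc = mac(syndrome_bits, weights)
    print(acc, to_float(acc))
```

Keeping everything in small unsigned integers is what lets such a neuron map onto compact integer multipliers and adders, which is consistent with the stated goal of reducing data volume and computation.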

Quantum Hardware Integration Considerations

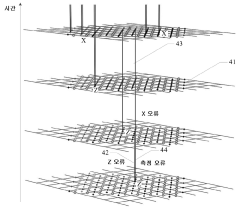

The integration of real-time decoders with quantum hardware represents a critical junction in the development of practical quantum error correction (QEC) systems. Current quantum computing architectures must accommodate specialized hardware accelerators designed specifically for error decoding operations. These accelerators need to be physically positioned in close proximity to the quantum processing units to minimize signal transmission delays, which can significantly impact the overall latency budget for QEC operations.

Physical interface considerations between classical control electronics and quantum processors present unique challenges. Signal integrity must be maintained across temperature gradients that can span from millikelvin environments (where qubits operate) to room temperature (where classical computing components function). This thermal isolation requirement necessitates careful design of interconnects that minimize heat load while maximizing data throughput.

Cryogenic compatibility emerges as another fundamental consideration. Decoder hardware components that can operate at lower temperatures (4K or below) offer substantial advantages by reducing the physical distance to the quantum processor and consequently decreasing signal propagation times. Recent advances in cryogenic CMOS technology and superconducting digital logic show promise for implementing decoder functions directly within the cryogenic environment.

Power constraints represent a significant limitation for quantum hardware integration. The cooling capacity of dilution refrigerators typically restricts power consumption to milliwatts or even microwatts at the lowest temperature stages. This power budget must be carefully allocated between the quantum processor itself and any co-located decoding hardware, driving the need for extremely energy-efficient decoder implementations.

Form factor and scalability considerations also influence integration strategies. As quantum systems scale to thousands or millions of physical qubits, the corresponding decoder hardware must scale proportionally without creating physical bottlenecks. This may require modular approaches where decoder functions are distributed across multiple temperature stages and physical locations within the quantum computing system.

Reconfigurability of the decoder hardware presents another important dimension. Quantum error correction codes and decoding algorithms continue to evolve rapidly, suggesting that hardware implementations should maintain some degree of programmability to accommodate advances in error correction techniques without requiring complete system redesigns.

The co-design of quantum processors and their associated decoding hardware represents perhaps the most promising approach to addressing these integration challenges. By considering the entire quantum error correction system holistically during the design phase, optimizations can be made that minimize latency while respecting the unique constraints of quantum computing environments.

Scalability Analysis of QEC Decoders

The scalability of quantum error correction (QEC) decoders represents a critical factor in determining the viability of large-scale quantum computing systems. As quantum processors continue to increase in qubit count, the computational resources required for decoding grow substantially, potentially creating bottlenecks in the error correction process.

Current decoder architectures show different scaling behavior across error correction codes. For surface codes, a widely studied near-term QEC approach, the size of the decoding problem is set by the number of syndrome bits: on the order of d² per round for a distance-d code, and on the order of d³ when d rounds are decoded together. Matching-based decoders such as MWPM have a worst-case cost that grows polynomially, and faster than linearly, in this problem size. Union-Find decoders scale more favorably, with a running time that is almost linear in the number of syndrome bits.
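Union-Find decoders take their name from the disjoint-set data structure they use to merge clusters of syndrome defects. The sketch below shows only that data structure, with path compression and union by size. It is not a complete decoder, and the class and method names are illustrative.

```python
class DisjointSet:
    """Disjoint-set forest with path compression and union by size."""

    def __init__(self, n):
        self.parent = list(range(n))
        self.size = [1] * n

    def find(self, x):
        """Return the representative of x's cluster, compressing the path."""
        root = x
        while self.parent[root] != root:
            root = self.parent[root]
        # Second pass: point every node on the path directly at the root.
        while self.parent[x] != root:
            self.parent[x], x = root, self.parent[x]
        return root

    def union(self, a, b):
        """Merge the clusters containing a and b; return the new root."""
        ra, rb = self.find(a), self.find(b)
        if ra == rb:
            return ra
        if self.size[ra] < self.size[rb]:
            ra, rb = rb, ra
        self.parent[rb] = ra  # attach the smaller tree under the larger one
        self.size[ra] += self.size[rb]
        return ra


if __name__ == "__main__":
    clusters = DisjointSet(5)
    clusters.union(0, 1)
    clusters.union(3, 4)
    print(clusters.find(0) == clusters.find(1))  # same cluster
    print(clusters.find(1) == clusters.find(3))  # different clusters
```

Both operations take close to constant amortized time, which is the property that makes cluster merging cheap even for large syndrome graphs.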

Hardware acceleration presents a promising avenue for improving decoder scalability. FPGA-based decoders have demonstrated throughput improvements of 10-100x compared to CPU implementations, with power efficiency gains of similar magnitude. GPU implementations offer another pathway, particularly beneficial for parallel decoding tasks across multiple code blocks.

Memory requirements pose another significant scalability challenge. High-performance decoders often require substantial memory resources that scale quadratically or cubically with code distance. This necessitates careful consideration of memory hierarchies and caching strategies to maintain performance as systems scale.

Network communication overhead becomes increasingly problematic in distributed quantum computing architectures. As quantum processors grow, the bandwidth requirements between classical control systems and quantum hardware increase proportionally, potentially creating communication bottlenecks that limit overall system performance.

Benchmarking studies indicate that current state-of-the-art decoders can handle surface codes with distances up to 25-30 with sub-millisecond latency on specialized hardware. However, scaling to the distances required for fault-tolerant universal quantum computing (d > 100) remains challenging with existing architectures.

Future scalability improvements will likely require algorithmic innovations that reduce computational complexity, such as approximate decoding methods that trade minimal error-correction performance for substantial speed improvements. Additionally, specialized hardware architectures optimized specifically for QEC workloads may provide order-of-magnitude improvements in decoder throughput and energy efficiency.