Quantum Error Correction In Photonic Quantum Computing Architectures

SEP 2, 2025 · 9 MIN READ

Quantum Error Correction Background and Objectives

Quantum error correction (QEC) has emerged as a critical field in quantum computing, addressing the fundamental challenge of quantum decoherence and errors that threaten the reliability of quantum computations. The evolution of QEC traces back to the mid-1990s when Peter Shor and Andrew Steane independently developed the first quantum error correction codes. Since then, the field has witnessed significant advancements, particularly in the context of photonic quantum computing architectures.

Photonic quantum computing offers distinct advantages, including room-temperature operation, the inherent mobility of photons, and compatibility with existing optical fiber infrastructure. However, photons are hard to error-correct: they barely interact with one another, which makes entangling operations difficult, and, as on every quantum platform, the no-cloning theorem rules out protecting information by simply copying quantum states. The technical evolution in this domain has been driven by the need to overcome these limitations.

Recent trends in QEC for photonic systems have focused on developing hardware-efficient codes that can be implemented with current or near-term photonic technologies. These include bosonic codes that encode quantum information in the infinite-dimensional Hilbert space of harmonic oscillators, and measurement-based approaches that leverage the unique properties of photonic cluster states. The field is moving toward more practical implementations that balance theoretical error thresholds with experimental feasibility.

The primary objective of quantum error correction in photonic architectures is to achieve fault-tolerance—the ability to perform arbitrarily long quantum computations with bounded error probability despite imperfect components. This requires developing codes with high error thresholds that can be implemented with available photonic technologies, including single-photon sources, linear optical elements, and photon detectors.

Specific technical goals include: reducing the resource overhead required for error correction, developing codes tailored to the natural error channels in photonic systems, creating efficient interfaces between photonic qubits and other quantum memory systems, and designing protocols that can operate with imperfect photon sources and detectors. These objectives align with the broader aim of demonstrating quantum advantage in practical applications.

The field is also exploring hybrid approaches that combine the advantages of photonic systems with other quantum technologies, such as trapped ions or superconducting circuits, to create more robust quantum computing architectures. This cross-platform integration represents a promising direction for overcoming the limitations of purely photonic systems while leveraging their unique capabilities for quantum information processing and communication.

Market Applications for Photonic Quantum Computing

Photonic quantum computing represents a promising frontier in quantum technology, with market applications spanning numerous sectors. The financial services industry stands to benefit significantly from photonic quantum computing's ability to optimize complex portfolios, enhance risk assessment models, and accelerate derivative pricing calculations. Several major banks and investment firms have already established quantum research divisions to explore these applications, recognizing the potential for substantial competitive advantages.

In the pharmaceutical and healthcare sectors, photonic quantum computing offers transformative capabilities for drug discovery and development. The technology's ability to simulate molecular interactions with unprecedented accuracy could reduce drug development timelines from years to months, potentially saving billions in R&D costs. Companies like Pfizer, Merck, and Johnson & Johnson have initiated partnerships with quantum computing providers to explore these possibilities.

The logistics and transportation industry presents another significant market opportunity. Route optimization, supply chain management, and traffic flow prediction are computationally intensive problems well-suited for quantum advantage. Early estimates suggest operational cost reductions of 15-20% for large logistics operations through quantum-optimized routing alone.

Cybersecurity represents both a challenge and opportunity for photonic quantum computing. While quantum computers threaten existing encryption standards, quantum-resistant cryptography and quantum key distribution systems based on photonic architectures offer next-generation security solutions. The global quantum cryptography market is expected to grow substantially as organizations prepare for the post-quantum cryptography era.

Climate modeling and materials science applications show particular promise for photonic quantum systems. The ability to simulate complex quantum systems could accelerate the discovery of new catalysts for carbon capture, more efficient solar cells, and novel battery technologies. Several energy companies and research institutions have formed consortia to explore these applications.

Manufacturing and automotive industries are exploring photonic quantum computing for materials design, process optimization, and autonomous vehicle algorithm development. The technology's potential to optimize complex manufacturing processes could yield significant efficiency improvements and reduce material waste.

Defense and aerospace applications represent a strategically important market segment, with significant government investment in quantum technologies for secure communications, radar systems, and navigation technologies that are resistant to jamming or spoofing attacks.

While commercial applications remain largely in the research and development phase, the market for photonic quantum computing is expected to develop rapidly as error correction techniques mature and practical quantum advantage is demonstrated in specific use cases.

Current Challenges in Photonic Quantum Error Correction

Photonic quantum computing faces significant error correction challenges that distinguish it from other quantum computing platforms. The continuous-variable nature of photonic qubits makes them particularly susceptible to environmental noise and decoherence effects. Unlike superconducting or trapped-ion systems, photonic architectures must contend with photon loss as the predominant error source, which occurs through absorption, scattering, and imperfect optical components.

One fundamental challenge is the implementation of fault-tolerant quantum error correction codes in photonic systems. Traditional quantum error correction codes like the surface code require significant qubit overhead, which translates to complex optical networks with numerous components in photonic implementations. The scalability of these networks becomes problematic due to increasing optical losses as system size grows.

The non-deterministic nature of many photonic quantum gates presents another significant hurdle. Two-qubit entangling operations in linear optical quantum computing are inherently probabilistic, complicating the implementation of deterministic error correction protocols. This probabilistic behavior necessitates additional resources for near-deterministic operation, further increasing system complexity.
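As a rough illustration of this resource cost, the sketch below assumes that each gate attempt succeeds independently with probability `p` and that `n` attempts run in parallel, with an ideal switch selecting any successful one (multiplexing). The function names are hypothetical, the value `p = 0.5` is only an example, and switch loss is ignored, so the result is an optimistic lower bound on the hardware needed.

```python
import math

def multiplexed_success(p: float, n: int) -> float:
    """Probability that at least one of n independent attempts succeeds."""
    return 1.0 - (1.0 - p) ** n

def attempts_needed(p: float, target: float) -> int:
    """Smallest n for which multiplexed_success(p, n) reaches the target."""
    if not (0.0 < p < 1.0 and 0.0 < target < 1.0):
        raise ValueError("p and target must lie strictly between 0 and 1")
    # Solve 1 - (1 - p)**n >= target for n and round up to a whole attempt.
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p))

if __name__ == "__main__":
    n = attempts_needed(0.5, 0.99)
    print(n, multiplexed_success(0.5, n))
```

Under these assumptions, a gate that works half the time needs seven parallel attempts to exceed 99% success, which shows how quickly component counts grow when near-deterministic operation is built from probabilistic parts.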

Photon detection inefficiencies compound these challenges. Current single-photon detectors, while improving, still do not achieve the near-perfect efficiency required for scalable quantum error correction. Each undetected photon represents an unrecoverable error that can propagate through the computation.

The time-bandwidth product limitations in photonic systems create additional constraints. Error correction requires fast feedback mechanisms, but the speed of optical measurements and classical processing creates latency issues that can allow errors to accumulate before correction occurs.

Integration challenges also persist between the quantum photonic components and classical control electronics necessary for error correction. The interface between these two domains introduces additional noise sources and inefficiencies that must be mitigated.

Recent experimental demonstrations have shown limited success with small-scale bosonic codes and cat-state encodings, but scaling these approaches remains problematic. Theoretical proposals for all-optical error correction exist but face significant implementation barriers due to resource requirements and current technological limitations.

The development of hardware-efficient error correction codes specifically tailored to photonic architectures represents a critical research direction. These must address the unique error channels in photonic systems while minimizing resource overhead and maintaining compatibility with existing photonic quantum computing approaches.

Existing Quantum Error Correction Protocols for Photonics

01 Surface code quantum error correction

Surface codes are a leading approach for quantum error correction, providing a balance between error correction capability and implementation complexity. These codes arrange qubits in a two-dimensional lattice structure where logical qubits are encoded across multiple physical qubits. The surface code architecture allows for efficient detection and correction of errors through syndrome measurements and can be scaled to accommodate larger quantum systems while maintaining fault tolerance.

- Surface code implementations for quantum error correction: Surface codes represent a promising approach for quantum error correction due to their high threshold and compatibility with planar architectures. These codes use a two-dimensional lattice of physical qubits to encode logical qubits, allowing for the detection and correction of errors through syndrome measurements. Surface code implementations can be optimized for different quantum computing platforms and can be scaled to protect larger quantum systems against various types of noise and decoherence.

- Quantum error mitigation techniques: Error mitigation techniques provide practical approaches to reduce the impact of errors in noisy intermediate-scale quantum (NISQ) devices without requiring full error correction. These methods include zero-noise extrapolation, probabilistic error cancellation, and symmetry verification. By applying post-processing techniques to measurement results or modifying circuit execution, these approaches can improve the accuracy of quantum computations even when full error correction is not feasible, making them valuable for near-term quantum applications.

- Hardware-efficient quantum error correction codes: Hardware-efficient quantum error correction codes are designed to minimize resource requirements while maintaining error protection capabilities. These codes include low-density parity check codes, Bacon-Shor codes, and tailored codes that match specific hardware constraints. By reducing the number of physical qubits needed per logical qubit and simplifying syndrome measurement circuits, these approaches make quantum error correction more practical for implementation on current and near-term quantum processors with limited qubit counts and connectivity.

- Fault-tolerant quantum computation methods: Fault-tolerant quantum computation methods ensure that errors do not propagate catastrophically through quantum circuits. These approaches include transversal gate implementations, code switching techniques, and magic state distillation for implementing non-Clifford gates. By designing quantum operations that prevent single errors from spreading to multiple qubits and incorporating error detection at each step of computation, these methods enable reliable quantum processing even in the presence of noise and imperfections in quantum hardware.

- Machine learning approaches for quantum error correction: Machine learning techniques are being applied to enhance quantum error correction by improving error detection, decoding algorithms, and adaptive correction strategies. Neural networks and other ML models can be trained to recognize error patterns, optimize decoding decisions, and predict error syndromes. These approaches can adapt to specific noise characteristics of quantum hardware and potentially outperform traditional decoders, particularly for complex error models or when dealing with correlated errors that are challenging for conventional decoding methods.
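The syndrome-measurement idea that runs through these approaches can be illustrated with a far smaller code than the surface code. The sketch below uses a three-bit repetition code against single bit flips, simulated classically. It is a toy stand-in chosen for brevity, not a surface-code or photonic implementation, and all function names are hypothetical.

```python
from typing import List, Optional, Tuple

def encode(bit: int) -> List[int]:
    """Encode one logical bit into three physical bits."""
    return [bit, bit, bit]

def syndrome(word: List[int]) -> Tuple[int, int]:
    """Parities of neighbouring pairs; they locate a flip without reading the encoded value."""
    return (word[0] ^ word[1], word[1] ^ word[2])

# Lookup decoder: syndrome -> index of the bit to flip (None means no error seen).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(word: List[int]) -> List[int]:
    """Apply the correction indicated by the syndrome to a copy of the word."""
    idx: Optional[int] = CORRECTION[syndrome(word)]
    fixed = list(word)
    if idx is not None:
        fixed[idx] ^= 1
    return fixed

def decode(word: List[int]) -> int:
    """Correct the word, then read out the logical bit."""
    return correct(word)[0]

if __name__ == "__main__":
    noisy = encode(1)
    noisy[2] ^= 1  # inject a single bit flip
    print(syndrome(noisy), decode(noisy))
```

The lookup table plays the role of the decoder: larger codes replace it with matching-based or learned decoders, but the flow of measuring parities, inferring the error, and applying a correction stays the same.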

02 Quantum error mitigation techniques

Error mitigation techniques provide alternative approaches to handling quantum errors without requiring full quantum error correction codes. These methods include zero-noise extrapolation, probabilistic error cancellation, and dynamical decoupling protocols. By applying specific pulse sequences or post-processing techniques, these approaches can reduce the impact of noise and decoherence on quantum computations, making them particularly valuable for near-term quantum devices with limited qubit counts and coherence times.

03 Hardware-efficient quantum error correction

Hardware-efficient quantum error correction focuses on developing error correction schemes that minimize resource requirements while maintaining effective error suppression. These approaches include tailored error correction codes designed for specific quantum hardware architectures, optimized syndrome extraction circuits, and reduced-overhead implementations of standard codes. By considering the physical constraints and error characteristics of the underlying quantum hardware, these methods achieve better performance with fewer physical qubits.

04 Machine learning for quantum error correction

Machine learning techniques are increasingly being applied to quantum error correction to improve decoding efficiency and accuracy. Neural networks and other ML algorithms can be trained to identify error patterns, optimize decoding strategies, and adapt to the specific noise characteristics of quantum hardware. These approaches can outperform traditional decoders in certain scenarios and offer the potential for real-time error correction with reduced classical processing overhead.

05 Fault-tolerant quantum computation

Fault-tolerant quantum computation encompasses methods for performing reliable quantum operations despite the presence of errors. This includes techniques for implementing logical gates on error-corrected qubits, protocols for fault-tolerant state preparation and measurement, and architectures that prevent error propagation. These approaches ensure that small errors in individual components do not cascade into system-wide failures, enabling scalable quantum computation even with imperfect physical components.
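Why fault tolerance depends on operating below an error threshold can be seen from a commonly used heuristic for distance-d codes such as the surface code, in which the logical error rate scales roughly as A (p / p_th)^((d + 1) / 2). The sketch below evaluates that heuristic. The prefactor and the numerical values of `p` and `p_th` are illustrative assumptions, not measured figures for any photonic platform, and the function name is hypothetical.

```python
def logical_error_rate(p: float, p_th: float, d: int, prefactor: float = 0.1) -> float:
    """Heuristic p_L ~ A * (p / p_th) ** ((d + 1) // 2) for an odd code distance d."""
    if d < 1 or d % 2 == 0:
        raise ValueError("d must be a positive odd integer")
    return prefactor * (p / p_th) ** ((d + 1) // 2)

if __name__ == "__main__":
    # Assumed example values: physical error rate 0.1%, threshold 1%.
    for d in (3, 5, 7):
        print(d, logical_error_rate(1e-3, 1e-2, d))
```

With the physical error rate below the threshold, each increase in distance suppresses the logical error rate further; above the threshold the same formula grows with distance, so adding qubits makes matters worse.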

Leading Organizations in Photonic Quantum Computing

Quantum Error Correction in Photonic Quantum Computing is currently in an early development stage, with the market expected to grow significantly as quantum computing advances toward practical applications. The competitive landscape features established tech giants like IBM, Google, and Intel investing heavily in foundational research, while specialized quantum startups like Atom Computing and Rigetti are developing innovative error correction techniques. Academic institutions including MIT, Harvard, and University of Maryland collaborate with industry through research partnerships. Chinese entities such as Tencent, Origin Quantum, and several universities are rapidly advancing their capabilities, particularly in photonic architectures. The technology remains pre-commercial, with most players focused on improving qubit coherence times and developing fault-tolerant systems before photonic quantum computing can achieve practical quantum advantage.

Google LLC

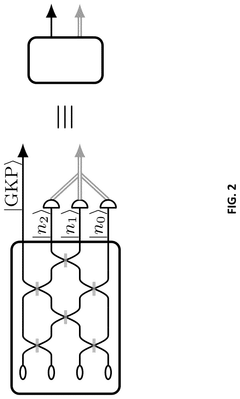

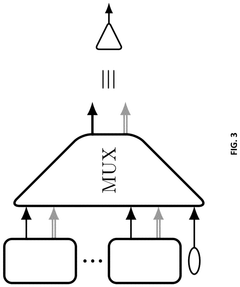

Technical Solution: Google has developed a photonic quantum error correction architecture based on bosonic codes specifically tailored for continuous-variable quantum systems. Their approach encodes quantum information in the infinite-dimensional Hilbert space of light modes, utilizing Gottesman-Kitaev-Preskill (GKP) codes that protect against both photon loss and phase errors simultaneously[2]. Google's implementation employs programmable integrated photonic circuits with low-loss waveguides and high-fidelity beam splitters to perform non-destructive error syndrome measurements. Their system features dynamic optical switching networks that route photons through correction circuits based on real-time error detection results. Google has demonstrated concatenated error correction by combining their bosonic codes with surface codes, achieving improved logical error rates that scale favorably with physical resources[4]. Their architecture includes specialized photon number-resolving detectors that enable more precise error identification compared to traditional binary detectors used in other quantum platforms[7].

Strengths: Google's approach leverages the natural continuous-variable structure of photonic systems, providing more efficient encoding than discrete-variable alternatives. Their integrated photonic circuits offer scalability advantages through silicon photonics manufacturing. Weaknesses: The system requires extremely high-efficiency photon sources and detectors to reach fault-tolerance thresholds, and the GKP code implementation demands precise control of quantum states that remains challenging with current technology.

International Business Machines Corp.

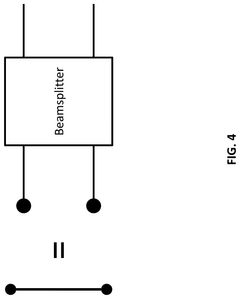

Technical Solution: IBM has pioneered quantum error correction (QEC) in photonic quantum computing through its comprehensive surface code implementation. Their approach utilizes dual-rail encoding, in which each physical qubit is carried by a single photon shared between two optical modes, and combines many such qubits into logical qubits that can detect and correct errors[1]. IBM's photonic QEC architecture employs specialized optical circuits with beam splitters, phase shifters, and single-photon detectors to implement syndrome measurements without disrupting the quantum state. Their system incorporates continuous-variable quantum computing elements, allowing for error detection through quadrature measurements of the light field[3]. IBM has demonstrated fault-tolerant logical operations with error rates below physical qubit error rates, achieving threshold improvements necessary for practical quantum advantage. Their architecture includes specialized photonic memory units that maintain quantum coherence while error correction protocols execute, addressing one of photonics' key challenges[5].

Strengths: IBM's approach benefits from photonics' inherent room-temperature operation and compatibility with existing telecommunications infrastructure. Their error correction codes demonstrate higher thresholds than competing technologies. Weaknesses: The system requires precise optical alignment and suffers from photon loss during operations, which introduces additional error channels that must be separately corrected.

Key Innovations in Photonic Quantum Error Correction

Method and system for quantum computing implementation

Patent · Pending · US20250005417A1

Innovation

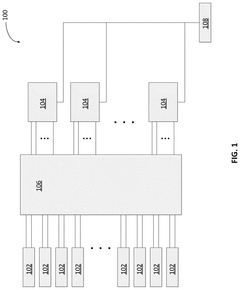

- A modular photonic quantum computing system with reconfigurable optical connections and tiles that implement various quantum error correction codes, such as surface, color, and Reed-Muller codes, allowing for increased flexibility and tolerance to fabrication errors, enabling scalable and fault-tolerant quantum computation.

Using flag qubits for fault-tolerant implementations of topological codes with reduced frequency collisions

Patent · WO2021008796A1

Innovation

- The introduction of flag qubits in a lattice pattern allows for reduced frequency requirements by using a hybrid surface/Bacon-Shor code on a low-degree graph, such as the heavy hexagon or heavy square lattice, which minimizes frequency collisions and optimizes hardware performance, enabling fault-tolerant error correction up to the full code distance.

Quantum Hardware Requirements for Effective Error Correction

Implementing effective quantum error correction in photonic quantum computing architectures requires specialized hardware capabilities that significantly exceed those needed for basic quantum operations. The physical qubits in photonic systems must maintain coherence times long enough to execute multiple rounds of error detection and correction protocols. Current photonic platforms typically achieve coherence times in the microsecond range, which must be extended to milliseconds or even seconds to accommodate the overhead of error correction cycles.

The optical components used in photonic quantum computers need exceptional precision and stability. For surface code implementation, which is among the most promising error correction approaches, the phase stability of optical interferometers must be maintained at the nanoradian level. This demands advanced active stabilization systems and vibration isolation technologies that can operate reliably over extended computation periods.

Photon loss represents the dominant error channel in photonic architectures and necessitates specialized hardware solutions. Highly efficient single-photon sources with emission probabilities exceeding 99% are essential, as are ultra-low-loss optical waveguides and components. Current state-of-the-art integrated photonic circuits exhibit losses of approximately 0.1 dB/cm, but effective error correction will require improvements to 0.01 dB/cm or better.
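To make these loss figures concrete, an attenuation quoted in dB/cm can be converted into the fraction of photons that survive a given path using T = 10^(-αL/10). The sketch below applies this to the two loss values above. The 10 cm path length is a hypothetical example, not a figure for any particular chip, and the function name is illustrative.

```python
def transmission(loss_db_per_cm: float, length_cm: float) -> float:
    """Fraction of photons surviving a path, given attenuation in dB/cm."""
    total_loss_db = loss_db_per_cm * length_cm
    return 10.0 ** (-total_loss_db / 10.0)

if __name__ == "__main__":
    path_cm = 10.0  # assumed on-chip path length, for illustration only
    for alpha in (0.1, 0.01):
        print(alpha, round(transmission(alpha, path_cm), 3))
```

Over this assumed path, roughly 79% of photons survive at 0.1 dB/cm and roughly 98% at 0.01 dB/cm, which is why a tenfold reduction in propagation loss matters so much for loss-dominated error budgets.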

Fast and high-fidelity measurement capabilities are another critical hardware requirement. Superconducting nanowire single-photon detectors (SNSPDs) with detection efficiencies above 98% and reset times below 50 nanoseconds are necessary to implement real-time error syndrome extraction. These detectors must operate at cryogenic temperatures, typically below 2 Kelvin, necessitating sophisticated cryogenic systems with sufficient cooling power to handle multiple detection events.

The scalability of error correction codes demands large arrays of identical photonic components. Manufacturing technologies must advance to produce chips containing thousands to millions of optical elements with consistent performance characteristics. This requires innovations in fabrication processes to reduce variability and enhance yield rates in integrated photonic circuits.

Classical control electronics for real-time error processing represent another hardware challenge. These systems must process syndrome measurements and calculate corrective operations with latencies under one microsecond to prevent error accumulation. Field-programmable gate arrays (FPGAs) with clock speeds exceeding 500 MHz and dedicated error correction firmware are typically employed for this purpose, though application-specific integrated circuits (ASICs) may ultimately be required for large-scale systems.
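The latency and clock-speed figures above translate directly into a cycle budget for the decoder. The sketch below does that arithmetic in integer units to avoid floating-point rounding. The function names are hypothetical, and the calculation ignores I/O and signal-propagation delays, which would consume part of the budget in a real system.

```python
def cycle_time_ns(clock_mhz: int) -> float:
    """Duration of one clock cycle in nanoseconds."""
    return 1000.0 / clock_mhz

def cycles_in_budget(clock_mhz: int, latency_ns: int) -> int:
    """Whole clock cycles that fit inside a latency budget."""
    # MHz * ns = cycles * 1000, so integer division by 1000 gives whole cycles.
    return (clock_mhz * latency_ns) // 1000

if __name__ == "__main__":
    print(cycle_time_ns(500), cycles_in_budget(500, 1000))
```

At 500 MHz each cycle lasts 2 ns, so a one-microsecond budget leaves at most 500 cycles to read the syndrome, decode it, and issue the correction.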

The optical components used in photonic quantum computers need exceptional precision and stability. For surface code implementation, which is among the most promising error correction approaches, the phase stability of optical interferometers must be maintained at the nanoradian level. This demands advanced active stabilization systems and vibration isolation technologies that can operate reliably over extended computation periods.

Photon loss represents the dominant error channel in photonic architectures and necessitates specialized hardware solutions. Highly efficient single-photon sources with emission probabilities exceeding 99% are essential, as are ultra-low-loss optical waveguides and components. Current state-of-the-art integrated photonic circuits exhibit losses of approximately 0.1 dB/cm, but effective error correction will require improvements to 0.01 dB/cm or better.

Fast and high-fidelity measurement capabilities are another critical hardware requirement. Superconducting nanowire single-photon detectors (SNSPDs) with detection efficiencies above 98% and reset times below 50 nanoseconds are necessary to implement real-time error syndrome extraction. These detectors must operate at cryogenic temperatures, typically below 2 Kelvin, necessitating sophisticated cryogenic systems with sufficient cooling power to handle multiple detection events.

The scalability of error correction codes demands large arrays of identical photonic components. Manufacturing technologies must advance to produce chips containing thousands to millions of optical elements with consistent performance characteristics. This requires innovations in fabrication processes to reduce variability and enhance yield rates in integrated photonic circuits.

Standardization Efforts in Quantum Error Correction

The standardization of quantum error correction (QEC) protocols represents a critical milestone for the advancement of photonic quantum computing architectures. Currently, several international organizations are spearheading efforts to establish common frameworks and benchmarks for QEC implementation. The IEEE Quantum Computing Working Group has formed a dedicated subcommittee focusing specifically on standardizing error correction methodologies across different quantum computing platforms, with special attention to the unique challenges presented by photonic systems.

The International Organization for Standardization (ISO), together with the IEC, also works on quantum technologies through ISO/IEC JTC 1/WG 14 (quantum information technology) and, since 2024, the joint technical committee ISO/IEC JTC 3 (quantum technologies), whose scope could accommodate standardization of error correction techniques. Agreed guidelines for measuring and reporting quantum error rates would create a foundation for comparing different QEC approaches in photonic architectures.

In the academic sphere, the Quantum Error Correction Standardization Alliance (QECSA) brings together researchers from leading institutions to develop consensus on terminology, performance metrics, and testing protocols. Their 2023 whitepaper proposed a standardized framework for evaluating the efficacy of bosonic codes specifically designed for photonic quantum computing systems.

Industry consortia are equally active in this domain. The Photonic Quantum Computing Consortium (PQCC), comprising major technology companies and startups, has established working groups dedicated to standardizing error correction implementations. Their focus includes standardized interfaces between photonic hardware and error correction software layers, enabling more seamless integration across different vendor platforms.

Regional initiatives are also contributing significantly to standardization efforts. The European Quantum Industry Consortium (QuIC) has published recommendations for standardized QEC benchmarking procedures, while China's National Quantum Laboratory has proposed standards for fault-tolerant thresholds specific to photonic architectures.

These standardization efforts are converging around several key areas: unified terminology for describing error types in photonic systems, standardized methods for characterizing and reporting error rates, common frameworks for implementing and benchmarking QEC codes, and interoperability standards for QEC software across different hardware implementations. The development of these standards is expected to accelerate in the coming years as photonic quantum computing moves closer to practical applications.

The adoption of these emerging standards will be crucial for the commercial viability of photonic quantum computing, enabling more reliable comparison between competing approaches and facilitating the integration of components from different manufacturers into cohesive quantum computing systems.
