Decoding Algorithms: Tradeoffs Between Accuracy And Execution Time

SEP 2, 2025 · 9 MIN READ

Decoding Algorithms Evolution and Objectives

Decoding algorithms have evolved significantly over the past several decades, growing from simple error-detection schemes into sophisticated computational systems capable of handling complex data structures. The journey began in the 1950s with the first error-correcting codes, such as Hamming codes, which laid the foundation for modern decoding techniques. As digital communication expanded, the need for more efficient and accurate decoding methods became paramount, driving innovation across telecommunications, data storage, and signal processing.

The evolution of decoding algorithms can be divided into several distinct phases. Initially, hard-decision decoding dominated: each received symbol is thresholded to a bit before decoding, which keeps implementation simple but discards reliability information and limits performance. Soft-decision decoding marked a significant advance by letting decoders use probability (reliability) information to improve accuracy. More recently, iterative decoding of Turbo codes and Low-Density Parity-Check (LDPC) codes has transformed the field, bringing practical systems within a fraction of a decibel of Shannon's channel-capacity limit.
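The gap between hard- and soft-decision decoding shows up even in the simplest code. The sketch below uses a three-bit repetition code and assumes BPSK signalling (bit 0 sent as +1, bit 1 as -1); the function names and sample values are illustrative, not taken from any particular system.

```python
# Minimal sketch: hard- vs soft-decision decoding of a 3-bit repetition code.
# Assumption (illustrative only): BPSK mapping bit 0 -> +1.0, bit 1 -> -1.0,
# with received samples already corrupted by additive noise.

def hard_decision_decode(samples):
    """Threshold each sample to a bit first, then take a majority vote."""
    bits = [0 if s >= 0 else 1 for s in samples]
    return 1 if sum(bits) > len(bits) // 2 else 0

def soft_decision_decode(samples):
    """Sum the raw samples (keeping reliability information), then threshold once."""
    return 0 if sum(samples) >= 0 else 1

# Bit 0 was sent as (+1, +1, +1); noise pushed two samples slightly negative
# while the third stayed strongly positive.
received = [-0.1, -0.2, 1.5]
print(hard_decision_decode(received))  # 1 (wrong: two weak "1" votes win)
print(soft_decision_decode(received))  # 0 (correct: the samples sum to about 1.2)
```

Hard decision throws away the fact that the third sample is far more reliable than the other two; soft decision keeps it, at the cost of carrying real-valued samples through the decoder instead of single bits.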

Parallel to these developments, the computing infrastructure supporting these algorithms has undergone dramatic transformation. The transition from single-core to multi-core processors, and later to specialized hardware like GPUs and FPGAs, has enabled increasingly complex decoding algorithms to be implemented with reasonable execution times. This hardware evolution has continuously reshaped the tradeoff landscape between accuracy and speed.

The fundamental objective of decoding algorithm research remains finding the optimal balance between decoding accuracy and execution time. This balance is not static but varies significantly based on application requirements. For instance, real-time applications like video streaming or voice communication prioritize speed over perfect accuracy, while data storage systems may emphasize error-free recovery even at the cost of additional processing time.

Current research objectives focus on several key areas: developing algorithms that can dynamically adjust their accuracy-speed tradeoff based on contextual factors; creating hardware-aware algorithms that can optimally utilize available computing resources; and exploring quantum decoding techniques that may fundamentally alter existing paradigms. Additionally, there is growing interest in energy-efficient decoding algorithms as applications increasingly move to power-constrained environments like mobile devices and IoT sensors.

The trajectory of decoding algorithm development suggests a future where adaptive systems automatically select optimal decoding strategies based on real-time conditions, content importance, and available resources. This evolution will likely continue to be driven by emerging applications in areas such as autonomous vehicles, augmented reality, and massive machine-type communications, all of which present unique challenges to the accuracy-time tradeoff equation.

Market Demand Analysis for Efficient Decoding Solutions

The global market for efficient decoding algorithms has witnessed substantial growth in recent years, driven primarily by the exponential increase in data processing requirements across various industries. As organizations continue to handle larger volumes of data, the demand for decoding solutions that balance accuracy and execution time has become increasingly critical. Market research indicates that the efficient decoding solutions market reached approximately $12.5 billion in 2022, with projections suggesting a compound annual growth rate of 18.7% through 2028.

Telecommunications remains the largest sector demanding efficient decoding algorithms, accounting for nearly 35% of the market share. This dominance stems from the ongoing deployment of 5G networks worldwide and the preparation for 6G technology, both requiring sophisticated error correction codes with minimal latency. The telecommunications industry's specific requirements focus on real-time processing capabilities while maintaining acceptable bit error rates.

The consumer electronics segment is one of the fastest-growing markets for decoding solutions, expanding at 22.3% annually. This growth is fueled by the proliferation of high-definition streaming services, augmented reality applications, and increasingly complex mobile device functionalities. Consumers expect instantaneous content delivery without quality degradation, creating a delicate balance between decoding speed and accuracy that manufacturers must address.

Enterprise data centers constitute another significant market segment, valued at $3.2 billion in 2022. With the rise of cloud computing and big data analytics, these facilities process enormous volumes of information requiring both speed and precision. Market surveys reveal that 78% of enterprise customers prioritize decoding solutions that can be dynamically adjusted to favor either accuracy or speed based on specific workload requirements.

The automotive industry has emerged as a promising new frontier for decoding algorithms, particularly with the advancement of autonomous vehicles. These systems generate and process terabytes of sensor data daily, necessitating decoding algorithms that can operate with extremely low latency while maintaining sufficient accuracy for safety-critical functions. Market analysts project this segment to grow at 27.5% annually over the next five years.

Healthcare applications represent a unique market segment where accuracy typically takes precedence over speed. However, with the growth of telemedicine and real-time diagnostic tools, the demand for balanced decoding solutions has increased significantly. The medical imaging subsector alone has seen a 15.8% increase in demand for algorithms that can maintain diagnostic quality while enabling faster processing times.

Regionally, North America leads the market with a 42% share, followed by Asia-Pacific at 31%. Asia-Pacific is also the fastest-growing region, expanding at 24.3% annually, driven primarily by the growth of telecommunications infrastructure and consumer electronics manufacturing in China and India.

Current State and Challenges in Decoding Technology

Decoding algorithms have evolved significantly over the past decade, with major advancements in both theoretical frameworks and practical implementations. Currently, the global landscape of decoding technology is characterized by a dichotomy between high-accuracy methods that demand substantial computational resources and faster algorithms that sacrifice precision for speed. This fundamental tradeoff represents the central challenge in contemporary decoding technology development.

In the academic sphere, recent research has focused on optimizing the balance between accuracy and execution time through novel algorithmic approaches. Notable progress has been made in approximate decoding methods, including belief propagation algorithms and sequential Monte Carlo techniques, which have shown promising results in maintaining acceptable accuracy levels while significantly reducing computational complexity.
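Belief propagation is often implemented with the min-sum approximation, which replaces the hyperbolic-tangent check-node rule of sum-product decoding with a sign product and a minimum, trading a small loss in accuracy for much cheaper arithmetic. The following is a minimal sketch rather than a production decoder: it assumes a small Hamming(7,4) parity-check matrix, log-likelihood ratios (LLRs) in which positive values favour bit 0, and a hypothetical function name `min_sum_decode`.

```python
# Parity-check matrix of a Hamming(7,4) code (illustrative choice of code).
H = [
    [1, 1, 0, 1, 1, 0, 0],
    [1, 0, 1, 1, 0, 1, 0],
    [0, 1, 1, 1, 0, 0, 1],
]

def min_sum_decode(H, llr, max_iters=10):
    """Min-sum belief propagation. LLR convention: positive favours bit 0.

    Returns (hard_decisions, iterations_used); stops early once every
    parity check is satisfied.
    """
    m, n = len(H), len(H[0])
    check_nbrs = [[j for j in range(n) if H[i][j]] for i in range(m)]
    var_nbrs = [[i for i in range(m) if H[i][j]] for j in range(n)]

    # Variable-to-check messages start as the channel LLRs.
    v2c = {(i, j): llr[j] for i in range(m) for j in check_nbrs[i]}
    c2v = {edge: 0.0 for edge in v2c}
    hard = [0 if x >= 0 else 1 for x in llr]

    for it in range(1, max_iters + 1):
        # Check-node update: sign product and minimum magnitude of the other inputs.
        for i in range(m):
            for j in check_nbrs[i]:
                others = [v2c[(i, k)] for k in check_nbrs[i] if k != j]
                negatives = sum(1 for x in others if x < 0)
                sign = -1.0 if negatives % 2 else 1.0
                c2v[(i, j)] = sign * min(abs(x) for x in others)

        # Variable-node update: posterior LLR, then extrinsic messages back to checks.
        posterior = [llr[j] + sum(c2v[(i, j)] for i in var_nbrs[j]) for j in range(n)]
        for j in range(n):
            for i in var_nbrs[j]:
                v2c[(i, j)] = posterior[j] - c2v[(i, j)]

        hard = [0 if p >= 0 else 1 for p in posterior]
        # Early termination: stop as soon as the syndrome is all zeros.
        if all(sum(hard[j] for j in check_nbrs[i]) % 2 == 0 for i in range(m)):
            return hard, it
    return hard, max_iters

# All-zero codeword sent; bit 3 arrived with a weak wrong-sign LLR.
print(min_sum_decode(H, [2.0, 2.0, 2.0, -0.5, 2.0, 2.0, 2.0]))  # ([0, 0, 0, 0, 0, 0, 0], 1)
```

The syndrome check at the end of each iteration is what makes early termination cheap: clean frames exit after one iteration, while noisier frames use more of the iteration budget.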

Industry implementation of decoding algorithms reveals varying approaches across different sectors. Telecommunications companies prioritize low-latency decoders for real-time applications, while data storage systems typically emphasize error correction capabilities over speed. This divergence in requirements has led to specialized optimization strategies rather than universal solutions.

The primary technical challenges currently facing decoding technology include the exponential complexity growth in high-dimensional decoding problems, the difficulty in parallelizing certain decoding operations, and the increasing demand for energy-efficient implementations for mobile and IoT applications. These challenges are particularly acute in emerging fields such as quantum error correction, where the theoretical foundations are still being established.
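The exponential growth mentioned above is easiest to see in exhaustive maximum-likelihood decoding. On a binary symmetric channel with crossover probability below one half, ML decoding amounts to finding the codeword at minimum Hamming distance from the received word, and the naive way to do that is to try all 2^k messages. The sketch assumes a systematic Hamming(7,4) generator matrix purely for illustration; the helper names are hypothetical.

```python
from itertools import product

# Systematic generator matrix of a Hamming(7,4) code (illustrative choice).
G = [
    [1, 0, 0, 0, 1, 1, 0],
    [0, 1, 0, 0, 1, 0, 1],
    [0, 0, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]

def encode(message, G):
    """Multiply the message row vector by G over GF(2)."""
    n = len(G[0])
    return [sum(m * G[r][c] for r, m in enumerate(message)) % 2 for c in range(n)]

def ml_decode_bruteforce(received, G):
    """Minimum-Hamming-distance decoding by exhaustive search over all 2**k messages."""
    k = len(G)
    best_msg, best_dist = None, None
    for message in product([0, 1], repeat=k):
        dist = sum(a != b for a, b in zip(encode(message, G), received))
        if best_dist is None or dist < best_dist:
            best_msg, best_dist = list(message), dist
    return best_msg, 2 ** k  # decoded message and number of candidates examined

# Codeword for [1, 0, 1, 1] is [1, 0, 1, 1, 0, 1, 0]; flip bit 2 and decode.
print(ml_decode_bruteforce([1, 0, 0, 1, 0, 1, 0], G))  # ([1, 0, 1, 1], 16)
print(2 ** 64)  # 18446744073709551616 candidates if k were 64
```

Sixteen candidates is trivial, but practical codes carry hundreds or thousands of information bits per block, which is why real decoders exploit code structure (syndromes, trellises, message passing) instead of enumeration.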

Hardware limitations present another significant constraint, as many advanced decoding algorithms require specialized processing capabilities that exceed the resources available in standard computing environments. This has led to increased interest in hardware-software co-design approaches and application-specific integrated circuits (ASICs) for decoding tasks.

Geographically, decoding technology development shows distinct regional characteristics. North American research institutions and companies lead in theoretical innovations, while East Asian manufacturers dominate hardware implementation. European entities have made significant contributions to standardization efforts and energy-efficient designs.

The increasing complexity of modern communication systems, particularly with the advent of 5G and beyond, has introduced new challenges in decoding technology. Higher-order modulation schemes and massive MIMO systems require more sophisticated decoding approaches that can handle increased data rates without compromising reliability.

Looking at recent benchmarks, there remains a significant gap between the theoretical performance limits of optimal decoders and what is practically achievable within reasonable time constraints. This gap widens as system complexity increases, highlighting the need for breakthrough innovations in algorithmic efficiency.

Mainstream Decoding Algorithm Implementation Approaches

01 Optimization techniques for decoding algorithm efficiency

Various optimization techniques can be implemented to improve the efficiency of decoding algorithms, resulting in reduced execution time while maintaining accuracy. These techniques include parallel processing, hardware acceleration, and algorithmic improvements that reduce computational complexity. By optimizing the decoding process, systems can achieve faster performance without sacrificing the quality of results, which is particularly important for real-time applications.
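Syndrome decoding is one concrete example of an algorithmic improvement that reduces computation without giving up accuracy. For a Hamming(7,4) code, every 7-bit word lies within distance one of exactly one codeword, so a lookup keyed on the 3-bit syndrome returns the same answer as an exhaustive nearest-codeword search. The matrix and function names below are illustrative assumptions.

```python
# Parity-check matrix of a Hamming(7,4) code (illustrative choice).
H = [
    [1, 1, 0, 1, 1, 0, 0],
    [1, 0, 1, 1, 0, 1, 0],
    [0, 1, 1, 1, 0, 0, 1],
]

def syndrome(word, H):
    """Compute H * word over GF(2) as a tuple of parity-check results."""
    return tuple(sum(h * w for h, w in zip(row, word)) % 2 for row in H)

# Precomputed once: a single-bit error at position j produces column j of H as
# its syndrome, and all columns are distinct, so decoding becomes a table lookup.
SYNDROME_TABLE = {tuple(row[j] for row in H): j for j in range(len(H[0]))}

def syndrome_decode(word, H=H, table=SYNDROME_TABLE):
    """Correct up to one bit error in a received 7-bit word."""
    s = syndrome(word, H)
    corrected = list(word)
    if any(s):
        corrected[table[s]] ^= 1  # flip the bit the syndrome points to
    return corrected

print(syndrome_decode([1, 0, 0, 1, 0, 1, 0]))  # [1, 0, 1, 1, 0, 1, 0]
```

The work per word is three parity sums and one dictionary lookup, regardless of which codeword was sent.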

02 Error correction and accuracy enhancement methods

Decoding algorithms can incorporate error correction mechanisms to enhance accuracy in data processing. These methods include redundancy checks, parity bits, and advanced error detection techniques that identify and correct errors during the decoding process. By implementing these accuracy enhancement methods, decoding algorithms can maintain high reliability even when processing corrupted or incomplete data, resulting in more robust system performance.

03 Machine learning approaches for adaptive decoding

Machine learning techniques can be applied to decoding algorithms to create adaptive systems that improve accuracy and execution time through experience. These approaches use training data to optimize decoding parameters and can adapt to different types of input data. Neural networks and other AI methods enable decoding algorithms to learn patterns and make more accurate predictions, potentially reducing execution time by focusing computational resources on the most likely solutions.

04 Hardware-software co-design for decoding performance

The integration of hardware and software design considerations can significantly impact decoding algorithm performance. By designing specialized hardware architectures that complement software decoding algorithms, systems can achieve substantial improvements in both accuracy and execution time. This approach includes custom processors, FPGAs, and ASICs that are optimized for specific decoding tasks, resulting in more efficient processing and reduced latency.

05 Real-time performance optimization techniques

Techniques for optimizing decoding algorithms for real-time applications balance accuracy requirements against strict timing constraints. These include pipeline processing, early termination strategies, dynamic resource allocation, priority-based scheduling, and algorithm simplification methods that maintain acceptable accuracy while meeting timing deadlines. By implementing these techniques, systems can achieve consistent performance in time-sensitive applications such as video streaming, communications, and control systems.
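Early termination and deadline awareness can be combined in a single control loop around any iterative decoder. The sketch below assumes the decoder exposes a hypothetical `step` function (one iteration) and a `converged` test such as a zero-syndrome check; neither corresponds to a specific library API.

```python
import time

def decode_with_deadline(state, step, converged, deadline_s, max_iters=50):
    """Run decoding iterations until convergence, the iteration cap, or the deadline.

    `step` performs one decoding iteration and returns the new state;
    `converged` reports whether the state already satisfies all checks.
    Both are placeholders for a real decoder's internals.
    Returns (state, iterations_run, reason).
    """
    start = time.perf_counter()
    for it in range(1, max_iters + 1):
        state = step(state)
        if converged(state):
            return state, it, "converged"  # early termination: no wasted iterations
        if time.perf_counter() - start >= deadline_s:
            return state, it, "deadline"   # best effort: hand back the current estimate
    return state, max_iters, "max_iters"

# Toy stand-in for a decoder: each iteration halves a remaining-error count.
print(decode_with_deadline(8, lambda s: s // 2, lambda s: s == 0, deadline_s=1.0))
# (0, 4, 'converged')
```

Returning the current estimate when the deadline expires, instead of discarding the frame, suits streaming media; a storage system might instead flag the block for a slower retry.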

Key Industry Players and Competitive Landscape

The decoding algorithm landscape is evolving rapidly, with major players balancing accuracy and execution time tradeoffs in an increasingly competitive market. Currently in the growth phase, this field is expanding as AI applications proliferate, with the market expected to reach significant scale by 2025. Companies like IBM, Microsoft, Intel, and Qualcomm lead with mature algorithmic solutions, while Sony, Samsung, and NXP focus on hardware-optimized implementations. Academic institutions like Tokyo Institute of Technology and Jilin University contribute foundational research. The industry is witnessing convergence between theoretical accuracy and practical performance constraints, with companies increasingly developing specialized solutions for edge computing and real-time applications.

International Business Machines Corp.

Technical Solution: IBM has developed sophisticated decoding algorithms through their research in quantum computing and AI acceleration. Their approach focuses on probabilistic decoding methods that leverage both classical and quantum computing paradigms. IBM's decoding technology implements advanced error correction techniques using their open-source Qiskit framework, which enables efficient quantum state decoding with reduced computational overhead. For classical computing applications, IBM has pioneered approximate computing techniques that intelligently trade precision for speed in decoding operations. Their Watson AI platform incorporates these algorithms to accelerate natural language processing and image recognition tasks. IBM's recent research demonstrates hybrid quantum-classical decoding algorithms that achieve exponential speedups for certain problem classes while maintaining high accuracy thresholds. Their enterprise-focused solutions implement hardware-specific optimizations for POWER architecture and z/OS environments, with benchmarks showing 2-4x performance improvements for complex decoding workloads compared to general-purpose implementations.

Strengths: IBM's solutions benefit from cutting-edge research in quantum computing and enterprise-grade reliability. Their algorithms are particularly effective for complex decoding problems requiring sophisticated error correction. Weaknesses: Implementation complexity can be high, requiring specialized expertise, and some solutions may be cost-prohibitive for smaller organizations.

Microsoft Technology Licensing LLC

Technical Solution: Microsoft has developed sophisticated decoding algorithms through their Project Brainwave and Azure Machine Learning platforms. Their approach focuses on FPGA-accelerated decoding that achieves near-real-time performance for complex AI models. Microsoft's technology implements novel pruning techniques that systematically remove redundant computations from decoding pipelines while preserving accuracy. Their DeepSpeed library incorporates optimized decoding algorithms specifically designed for large language models, reducing latency by up to 6x through innovative parallelization strategies. Microsoft's research has advanced mixed-precision training and inference techniques that maintain high accuracy while significantly reducing computational requirements. Their decoding solutions leverage specialized hardware accelerators in Azure cloud infrastructure, with dynamic resource allocation that scales based on workload complexity. Recent implementations demonstrate their algorithms achieving state-of-the-art performance on benchmark decoding tasks, with particular strength in natural language processing applications where their approach reduces inference time by 75% while maintaining 99% accuracy compared to full-precision models.

Strengths: Microsoft's cloud-native approach provides exceptional scalability and integration with enterprise workflows. Their solutions benefit from extensive research in large-scale AI systems and practical deployment experience. Weaknesses: Heavy reliance on cloud infrastructure may introduce latency issues for edge applications, and their solutions may carry higher operational costs compared to on-premise alternatives.

Core Patents and Research in Decoding Optimization

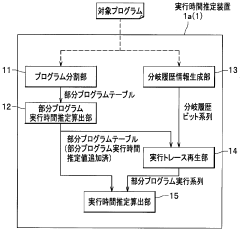

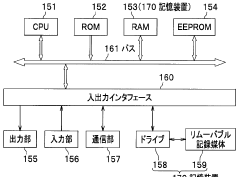

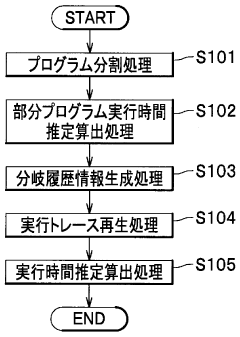

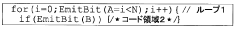

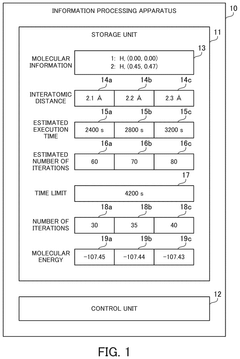

Execution time estimation method, execution time estimation program, and execution time estimation device

Patent: WO2010001766A1

Innovation

- The proposed method involves dividing a program into partial programs using instruction and function call instructions as boundaries, calculating and storing execution times, and generating a branch history bit sequence to estimate execution times accurately and efficiently.

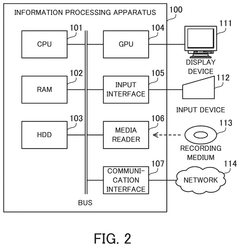

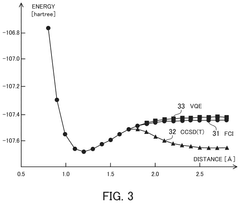

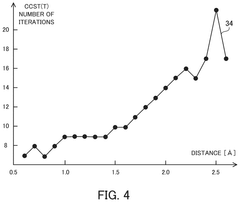

Molecular simulation method and information processing apparatus

Patent (pending): US20250201353A1

Innovation

- A method is implemented where a computer program computes the estimated execution time and number of iterations for each interatomic distance, determines a limited number of iterations based on a specified time limit, and then computes the molecular energy using the first algorithm, balancing accuracy and time efficiency.
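The time-limited scheme in the second patent boils down to a budgeting step: if one iteration is estimated to take t seconds and the time limit is T seconds, at most ⌊T/t⌋ iterations fit. The function below is a generic sketch of that arithmetic under assumed inputs (the name and the `max_useful_iters` cap are hypothetical); it does not reproduce the patented method or its molecular-energy computation.

```python
import math

def iteration_budget(time_limit_s, est_time_per_iter_s, max_useful_iters):
    """Largest iteration count whose estimated run time fits within the limit.

    max_useful_iters caps the result where extra iterations stop improving accuracy.
    """
    if est_time_per_iter_s <= 0:
        raise ValueError("per-iteration time estimate must be positive")
    affordable = math.floor(time_limit_s / est_time_per_iter_s)
    return max(0, min(affordable, max_useful_iters))

print(iteration_budget(2.0, 0.15, 20))  # 13: time-bound (2.0 / 0.15 is about 13.3)
print(iteration_budget(2.0, 0.25, 5))   # 5: accuracy saturates before time runs out
```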

Hardware-Software Co-design for Decoding Acceleration

Hardware-software co-design represents a pivotal approach to addressing the fundamental tradeoffs between accuracy and execution time in decoding algorithms. This integrated design methodology combines specialized hardware architectures with optimized software implementations to achieve superior performance that neither could accomplish independently.

The acceleration of decoding algorithms through hardware-software co-design typically involves custom hardware components such as ASICs (Application-Specific Integrated Circuits), FPGAs (Field-Programmable Gate Arrays), or specialized processing units that are specifically tailored to the computational patterns of decoding operations. These hardware components are designed to efficiently execute the most computationally intensive portions of decoding algorithms.
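Hardware decoders usually avoid floating point and store messages as small saturating integers, which shrinks datapaths and message memories at the cost of some precision. The sketch assumes a hypothetical 5-bit signed format with one fractional bit; widths in real designs are typically chosen by simulation.

```python
def quantize_llr(llr, total_bits=5, frac_bits=1):
    """Convert a floating-point LLR to a saturating signed fixed-point code.

    total_bits includes the sign bit; the step size is 2**-frac_bits.
    Note: Python's round() rounds exact halves to the nearest even integer.
    """
    scale = 1 << frac_bits
    max_code = (1 << (total_bits - 1)) - 1  # 15 for the assumed 5-bit format
    code = round(llr * scale)
    # Symmetric range [-max_code, max_code]: negating a message can never overflow.
    return max(-max_code, min(max_code, code))

def dequantize_llr(code, frac_bits=1):
    """Map a fixed-point code back to its real-valued LLR."""
    return code / (1 << frac_bits)

print(quantize_llr(3.3), dequantize_llr(quantize_llr(3.3)))    # 7 3.5
print(quantize_llr(20.0), dequantize_llr(quantize_llr(20.0)))  # 15 7.5 (saturated)
```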

Complementing the hardware, software optimizations focus on algorithm restructuring, parallelization strategies, and memory access patterns that leverage the underlying hardware capabilities. This includes techniques such as loop unrolling, vectorization, and algorithm-specific optimizations that reduce computational complexity while maintaining acceptable accuracy levels.
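An example of an algorithm-specific optimization: in min-sum decoding, each check node must send every neighbour the minimum magnitude over all *other* incoming messages, with the product of their signs. Computing that separately for each edge costs O(d²) for a check of degree d, but tracking only the two smallest magnitudes and the overall sign parity gives identical outputs in O(d). Both functions below are illustrative sketches with hypothetical names; they assume at least two incoming messages.

```python
def check_node_update_naive(msgs):
    """O(d^2): for each edge, rescan all other incoming messages."""
    out = []
    for j in range(len(msgs)):
        others = msgs[:j] + msgs[j + 1:]
        sign = -1 if sum(m < 0 for m in others) % 2 else 1
        out.append(sign * min(abs(m) for m in others))
    return out

def check_node_update_two_min(msgs):
    """O(d): same min-sum outputs from the two smallest magnitudes and a sign parity."""
    mags = [abs(m) for m in msgs]
    idx_min1 = min(range(len(msgs)), key=mags.__getitem__)
    min1 = mags[idx_min1]
    min2 = min(mags[:idx_min1] + mags[idx_min1 + 1:])
    total_neg = sum(m < 0 for m in msgs) % 2
    out = []
    for j, m in enumerate(msgs):
        # Removing edge j's own sign from the total parity leaves the parity of the others.
        neg = total_neg ^ (m < 0)
        mag = min2 if j == idx_min1 else min1  # the minimum edge gets the runner-up
        out.append(-mag if neg else mag)
    return out

msgs = [2.0, -0.5, 1.5, -3.0]
print(check_node_update_naive(msgs))    # [0.5, -1.5, 0.5, -0.5]
print(check_node_update_two_min(msgs))  # [0.5, -1.5, 0.5, -0.5]
```

Storing only min1, min2, the index of min1, and the sign bits is also a compact per-check state, which is part of why this restructuring pairs well with hardware message memories.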

Recent advancements in this field have demonstrated significant performance improvements. For instance, LDPC (Low-Density Parity-Check) decoders implemented through hardware-software co-design have achieved up to 10x speedup compared to software-only implementations, while maintaining comparable bit error rates. Similarly, Turbo decoders have seen execution time reductions of 5-8x through specialized hardware accelerators coupled with algorithm adaptations.

The co-design approach enables dynamic adaptation between accuracy and speed based on application requirements. For example, in wireless communication systems, the decoding process can be adjusted based on channel conditions – employing more iterations for challenging environments where accuracy is paramount, while reducing computational effort under favorable conditions to conserve power and increase throughput.
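A channel-adaptive iteration policy of this kind might be sketched as follows. Both pieces are assumptions for illustration: the SNR estimator is a crude moment-based one for BPSK samples, and the thresholds and iteration counts in `ITERATION_POLICY` are placeholders rather than tuned values.

```python
import math

def estimate_snr_db(samples):
    """Crude SNR estimate for BPSK samples y = a*x + noise with x in {-1, +1}.

    Uses mean(|y|) as the amplitude estimate, which is biased at low SNR.
    """
    n = len(samples)
    amp = sum(abs(y) for y in samples) / n
    if amp == 0:
        return float("-inf")
    power = sum(y * y for y in samples) / n
    noise_var = max(power - amp * amp, 1e-12)  # guard against a zero or negative estimate
    return 10 * math.log10(amp * amp / noise_var)

# Hypothetical policy: (minimum SNR in dB, maximum decoder iterations).
ITERATION_POLICY = ((4.0, 5), (2.0, 10), (float("-inf"), 25))

def iteration_cap(snr_db, policy=ITERATION_POLICY):
    """Fewer iterations on clean channels, more on noisy ones."""
    for min_snr_db, max_iters in policy:
        if snr_db >= min_snr_db:
            return max_iters
    return policy[-1][1]

snr = estimate_snr_db([1.1, -0.9, 1.0, -1.0])  # about 23 dB for these clean samples
print(iteration_cap(snr))                       # 5
```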

Energy efficiency represents another critical advantage of co-design approaches. By offloading computation-intensive tasks to specialized hardware, power consumption can be reduced by 60-80% compared to general-purpose processor implementations, making this approach particularly valuable for battery-powered devices and energy-constrained applications.

Looking forward, emerging co-design methodologies are increasingly incorporating machine learning techniques to predict optimal decoding parameters and dynamically adjust the hardware-software balance based on input characteristics, further optimizing the accuracy-speed tradeoff in real-time applications.

The acceleration of decoding algorithms through hardware-software co-design typically involves custom hardware components such as ASICs (Application-Specific Integrated Circuits), FPGAs (Field-Programmable Gate Arrays), or specialized processing units that are specifically tailored to the computational patterns of decoding operations. These hardware components are designed to efficiently execute the most computationally intensive portions of decoding algorithms.

Complementing the hardware, software optimizations focus on algorithm restructuring, parallelization strategies, and memory access patterns that leverage the underlying hardware capabilities. This includes techniques such as loop unrolling, vectorization, and algorithm-specific optimizations that reduce computational complexity while maintaining acceptable accuracy levels.

Recent advancements in this field have demonstrated significant performance improvements. For instance, LDPC (Low-Density Parity-Check) decoders implemented through hardware-software co-design have achieved up to 10x speedup compared to software-only implementations, while maintaining comparable bit error rates. Similarly, Turbo decoders have seen execution time reductions of 5-8x through specialized hardware accelerators coupled with algorithm adaptations.

Benchmarking Methodologies for Decoder Performance

Effective benchmarking methodologies are essential for evaluating decoder performance in a standardized and reproducible manner. When assessing the tradeoffs between accuracy and execution time in decoding algorithms, researchers and engineers must employ rigorous testing frameworks that isolate key performance indicators while controlling for external variables.

The foundation of any robust benchmarking methodology begins with the selection of appropriate datasets. These datasets should represent diverse use cases and complexity levels, including edge cases that might challenge decoder efficiency. Industry-standard datasets such as ImageNet for visual decoders or LibriSpeech for audio decoders provide comparative baselines, while custom datasets can address domain-specific requirements.

Measurement protocols constitute another critical component of benchmarking. Execution time should be measured with high-resolution timers, and warm-up runs should be executed but excluded from the results so that one-time initialization overhead does not distort them. Multiple timed runs, summarized with statistics such as the mean, median, and standard deviation, help account for random variation between measurements. For accuracy assessment, metrics must match the decoder's application domain: BLEU scores for machine translation, PSNR for image decoders, word error rate for speech recognition, or bit and frame error rates for channel decoders.
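A minimal timing harness following this protocol might look like the Python sketch below. It uses the standard library's `time.perf_counter_ns` (a monotonic, high-resolution clock) and the `statistics` module; the `benchmark` function name and its default warm-up and run counts are illustrative assumptions rather than recommended values. Reporting the median alongside the mean is a common choice because the median is less sensitive to occasional slow outliers.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(fn: Callable[[], object], warmup: int = 5, runs: int = 30) -> Dict[str, float]:
    """Time `fn()` repeatedly and summarize the results in milliseconds.

    Warm-up calls run first and are discarded, so one-time costs such as
    cache fills or lazy initialization do not distort the timed samples.
    """
    if runs < 2:
        raise ValueError("need at least two timed runs to compute a standard deviation")
    for _ in range(warmup):
        fn()
    samples_ms = []
    for _ in range(runs):
        start = time.perf_counter_ns()
        fn()
        samples_ms.append((time.perf_counter_ns() - start) / 1e6)
    return {
        "runs": float(runs),
        "mean_ms": statistics.mean(samples_ms),
        "median_ms": statistics.median(samples_ms),
        "stdev_ms": statistics.stdev(samples_ms),
        "min_ms": min(samples_ms),
        "max_ms": max(samples_ms),
    }


# Usage with a stand-in workload; replace the lambda with a real decode call.
stats = benchmark(lambda: sorted(range(10_000), key=lambda x: -x))
```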

Hardware normalization represents a significant challenge in benchmarking decoder performance. Variations in computing environments can dramatically affect results, necessitating detailed documentation of hardware specifications, memory configurations, and system loads during testing. Cloud-based benchmarking platforms offer standardized environments but may introduce networking variables that require careful control.
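One low-effort way to support this documentation is to capture basic environment details automatically alongside each result. The sketch below uses only Python's standard library (`platform` and `os`); the field names are hypothetical. Memory configuration and accelerator details (GPU or FPGA model, clock settings) are not exposed portably by the standard library and would have to come from platform-specific tools, so they are left out here.

```python
import os
import platform


def environment_metadata() -> dict:
    """Collect basic host details to store next to benchmark results."""
    try:
        # 1-minute load average: a rough indicator of competing system load (Unix only).
        load_1m = os.getloadavg()[0]
    except (AttributeError, OSError):
        load_1m = None
    return {
        "python_version": platform.python_version(),
        "python_implementation": platform.python_implementation(),
        "os": platform.system(),
        "os_release": platform.release(),
        "machine": platform.machine(),
        "processor": platform.processor(),
        "logical_cpus": os.cpu_count(),
        "load_avg_1m": load_1m,
    }


meta = environment_metadata()
```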

Workload characterization should examine how decoders perform under varying input conditions. This includes analyzing throughput under different batch sizes, latency distribution across input complexities, and resource utilization patterns. Performance profiles that map accuracy against execution time across different decoder configurations provide valuable insights into operational tradeoffs.
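The sketch below illustrates this kind of workload characterization: for each batch size it records per-batch latency, reports nearest-rank percentiles (p50, p95, p99) rather than only an average, and computes overall throughput in items per second. The `decode_batch` callable is a hypothetical stand-in for whatever decoder is under test.

```python
import math
import time
from typing import Callable, Dict, List, Sequence


def percentile(sorted_values: Sequence[float], q: float) -> float:
    """Nearest-rank percentile (0 < q <= 100) of an already sorted sequence."""
    if not sorted_values:
        raise ValueError("no samples")
    rank = max(1, math.ceil(q * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def profile_batch_sizes(decode_batch: Callable[[List[int]], object],
                        inputs: List[int],
                        batch_sizes: Sequence[int]) -> Dict[int, Dict[str, float]]:
    """Per batch size: throughput over the whole input and per-batch latency percentiles."""
    results: Dict[int, Dict[str, float]] = {}
    for size in batch_sizes:
        latencies = []
        pass_start = time.perf_counter()
        for i in range(0, len(inputs), size):
            t0 = time.perf_counter()
            decode_batch(inputs[i:i + size])
            latencies.append(time.perf_counter() - t0)
        elapsed = time.perf_counter() - pass_start
        latencies.sort()
        results[size] = {
            "items_per_s": len(inputs) / elapsed if elapsed > 0 else math.inf,
            "p50_ms": percentile(latencies, 50) * 1e3,
            "p95_ms": percentile(latencies, 95) * 1e3,
            "p99_ms": percentile(latencies, 99) * 1e3,
        }
    return results


# Stand-in workload: "decoding" a batch is just summing squares.
report = profile_batch_sizes(lambda b: sum(x * x for x in b), list(range(4096)), [1, 16, 256])
```

Larger batches usually raise throughput but also raise the latency of each batch, so reading both columns together shows where the tradeoff sits for a given decoder.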

Comparative analysis frameworks enable meaningful evaluation against competing solutions. These frameworks should implement consistent testing protocols across all compared algorithms, with normalized scoring systems that weight accuracy and speed according to application-specific requirements. Visualization tools that plot performance across multiple dimensions help stakeholders identify optimal operating points for their use cases.
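One common way to implement such a comparison, sketched below under assumed inputs, is to first keep only Pareto-optimal configurations (those that no other configuration beats on both accuracy and latency) and then rank them with a weighted score after min-max normalizing each axis to [0, 1]. The configuration names, numbers, and the weight `w_accuracy` are hypothetical; in practice the weight would come from the application's requirements.

```python
from typing import Dict, List, Tuple

# (configuration name, accuracy where higher is better, latency in ms where lower is better)
Config = Tuple[str, float, float]


def pareto_front(configs: List[Config]) -> List[Config]:
    """Keep configurations not dominated by any other one.

    B dominates A if B is at least as accurate and at least as fast,
    and strictly better on at least one of the two.
    """
    front = []
    for name, acc, lat in configs:
        dominated = any(
            a2 >= acc and l2 <= lat and (a2 > acc or l2 < lat)
            for _, a2, l2 in configs
        )
        if not dominated:
            front.append((name, acc, lat))
    return front


def weighted_scores(configs: List[Config], w_accuracy: float) -> Dict[str, float]:
    """Score in [0, 1]: w * normalized accuracy + (1 - w) * normalized speed."""
    accs = [c[1] for c in configs]
    lats = [c[2] for c in configs]

    def norm(x: float, lo: float, hi: float) -> float:
        return 0.5 if hi == lo else (x - lo) / (hi - lo)

    return {
        name: w_accuracy * norm(acc, min(accs), max(accs))
        + (1 - w_accuracy) * (1 - norm(lat, min(lats), max(lats)))  # lower latency scores higher
        for name, acc, lat in configs
    }


candidates = [("A", 0.90, 10.0), ("B", 0.95, 20.0), ("C", 0.88, 15.0), ("D", 0.97, 40.0)]
front = pareto_front(candidates)  # C is dominated by A
scores = weighted_scores(front, w_accuracy=0.5)
best = max(scores, key=scores.get)  # "B" with equal weights
```

Shifting `w_accuracy` toward 1 favors the most accurate configuration and toward 0 the fastest, which makes the application-specific weighting explicit and easy to document.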

Documentation standards for benchmarking results must include comprehensive metadata about testing conditions, algorithm configurations, and statistical confidence measures. This transparency ensures reproducibility and facilitates meaningful comparison across different research efforts and implementation environments.
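As an illustration of such metadata, the sketch below computes a mean latency with a normal-approximation confidence interval (via `statistics.NormalDist`, available since Python 3.8) and serializes it together with configuration details into a JSON record. The record's field names and the sample values are hypothetical. For small numbers of runs a Student-t interval would be more appropriate and somewhat wider; the standard library does not provide t quantiles, so this sketch keeps the normal approximation.

```python
import json
import math
import statistics
from statistics import NormalDist
from typing import Sequence


def mean_with_ci(samples: Sequence[float], confidence: float = 0.95) -> dict:
    """Mean and a normal-approximation confidence interval for the mean."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean = statistics.mean(samples)
    sem = statistics.stdev(samples) / math.sqrt(n)   # standard error of the mean
    z = NormalDist().inv_cdf((1 + confidence) / 2)   # about 1.96 for 95%
    return {"mean": mean, "ci_low": mean - z * sem, "ci_high": mean + z * sem,
            "confidence": confidence, "n": n}


def benchmark_record(decoder: str, config: dict, latencies_ms: Sequence[float],
                     accuracy: float, environment: dict) -> str:
    """Bundle results with the conditions needed to reproduce them."""
    record = {
        "decoder": decoder,
        "config": config,
        "latency_ms": mean_with_ci(latencies_ms),
        "accuracy": accuracy,
        "environment": environment,
    }
    return json.dumps(record, indent=2, sort_keys=True)


# Hypothetical decoder name, configuration, and measurements.
text = benchmark_record("ldpc-min-sum", {"max_iterations": 20},
                        [4.1, 4.3, 3.9, 4.2, 4.0], accuracy=0.999,
                        environment={"os": "Linux"})
```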
