Best Practices For Post-Processing And Interpretation Of Finite Element Results

AUG 28, 2025 · 9 MIN READ

FEA Post-Processing Background and Objectives

Finite Element Analysis (FEA) has evolved significantly since its inception in the 1950s, transforming from a niche mathematical technique to an essential engineering tool across multiple industries. The post-processing phase of FEA represents a critical juncture where raw computational results are translated into actionable engineering insights. This technical exploration aims to comprehensively examine the best practices for FEA post-processing and interpretation, addressing the growing complexity of simulation data management in modern engineering workflows.

The evolution of FEA post-processing capabilities has closely followed advancements in computing power and visualization technologies. Early FEA implementations in the 1970s and 1980s offered limited post-processing capabilities, often restricted to basic contour plots and numerical outputs. The 1990s witnessed significant improvements with the introduction of color-coded visualization and basic animation features. Today's post-processing environments offer sophisticated tools including real-time rendering, virtual reality integration, and automated report generation.

Current technological trends indicate a shift toward more intuitive and automated post-processing workflows. Machine learning algorithms are increasingly being deployed to identify patterns in simulation results, while cloud-based solutions enable collaborative interpretation across distributed engineering teams. The integration of post-processing with digital twin technologies represents another frontier, allowing for continuous comparison between simulation results and real-world performance data.

The primary technical objectives of this investigation include establishing standardized methodologies for result verification and validation, developing frameworks for uncertainty quantification in post-processed results, and identifying optimal approaches for communicating complex simulation outcomes to diverse stakeholders. Additionally, we aim to explore emerging technologies that can enhance the efficiency and accuracy of FEA result interpretation.

A significant challenge in modern FEA workflows is the exponential growth in simulation data volume, necessitating more sophisticated data management strategies. Engineers now routinely process terabytes of simulation results, creating bottlenecks in traditional post-processing pipelines. This investigation will examine scalable approaches to handling large-scale simulation data while maintaining interpretability and accessibility.

The convergence of FEA with other digital engineering technologies, including generative design, topology optimization, and artificial intelligence, is reshaping post-processing requirements. These integrated workflows demand more dynamic and interactive result visualization capabilities that can support iterative design exploration and optimization processes.

Industry Demand for Advanced FEA Result Interpretation

The demand for advanced Finite Element Analysis (FEA) result interpretation capabilities has grown exponentially across multiple industries as engineering challenges become increasingly complex. Manufacturing sectors, particularly aerospace and automotive, are driving significant market pull for sophisticated post-processing tools that can handle massive simulation datasets while providing actionable insights.

Aerospace manufacturers require enhanced visualization and interpretation capabilities to analyze composite materials behavior under extreme conditions. According to recent industry surveys, over 85% of aerospace companies have identified improved FEA result interpretation as critical to reducing development cycles and certification timelines. The ability to quickly identify stress concentrations, failure modes, and safety margins directly impacts design optimization and regulatory compliance.

Automotive industry demands focus on crash simulation result interpretation, with particular emphasis on automated identification of critical deformation patterns and energy absorption pathways. As vehicle designs incorporate more complex material combinations and lightweighting strategies, the need for advanced interpretation tools that can process multi-physics results has become paramount.

Energy sector stakeholders, especially in renewable energy infrastructure development, require specialized interpretation capabilities for large-scale structural analyses. Wind turbine manufacturers specifically seek tools that can correlate simulation results with field measurements to improve predictive accuracy and maintenance scheduling.

Medical device manufacturers represent a rapidly growing market segment demanding advanced FEA interpretation capabilities. The complexity of biomechanical interactions and stringent regulatory requirements drive the need for specialized visualization and reporting tools that can communicate simulation results effectively to both engineering and clinical stakeholders.

Cross-industry trends indicate growing demand for real-time collaborative interpretation platforms that enable geographically distributed teams to simultaneously analyze and discuss simulation results. This demand has accelerated with the shift toward remote work environments and globally distributed engineering teams.

The market also shows strong interest in AI-assisted interpretation tools that can automatically identify patterns, anomalies, and potential design improvements from complex simulation results. Early adopters report significant reductions in analysis time and improved detection of non-obvious failure modes when using machine learning augmented interpretation workflows.

Customer feedback consistently highlights the need for seamless integration between interpretation tools and broader product lifecycle management systems, enabling traceability between simulation results and design decisions throughout the development process.

Current Limitations and Challenges in FEA Post-Processing

Despite significant advancements in Finite Element Analysis (FEA) technology, post-processing and interpretation of results continue to face substantial challenges that limit their effectiveness in engineering applications. One primary limitation is the overwhelming volume of data generated by modern FEA simulations. High-fidelity models can produce terabytes of data, making efficient storage, retrieval, and visualization increasingly difficult. This data deluge often leads to computational bottlenecks during post-processing, particularly when handling complex multiphysics simulations.

Visualization challenges persist as a significant hurdle in FEA post-processing. Current visualization tools struggle to effectively represent multidimensional data and time-dependent phenomena simultaneously. Engineers frequently encounter difficulties when attempting to visualize coupled physical effects or when needing to identify critical regions in large-scale models. The limitations in 3D visualization techniques often result in missed insights or incomplete understanding of simulation results.

Interpretation accuracy remains problematic due to the inherent uncertainties in FEA models. Mesh quality issues, boundary condition approximations, and material property assumptions all contribute to result uncertainties that are rarely quantified adequately during post-processing. Most commercial software packages provide limited capabilities for uncertainty quantification and sensitivity analysis, leaving engineers to make critical decisions without a comprehensive understanding of result reliability.

Interoperability between different FEA platforms presents another significant challenge. The lack of standardized data formats for results exchange creates workflow inefficiencies when organizations use multiple simulation tools or when collaborating across different teams. Engineers often resort to time-consuming data conversion processes or simplified data exchange that may compromise result fidelity.

Automation deficiencies in post-processing workflows represent a persistent limitation. While simulation setup has seen significant automation advances, result interpretation remains largely manual and expertise-dependent. The absence of intelligent algorithms capable of identifying critical results or anomalies means engineers must spend considerable time manually reviewing extensive datasets, increasing the risk of overlooking important findings.

Knowledge capture and transfer challenges further complicate FEA post-processing. The interpretation of results often relies heavily on tacit knowledge and experience that is difficult to formalize or transfer to less experienced engineers. Current tools provide limited capabilities for capturing interpretation rationales or decision-making processes, creating knowledge silos within organizations and impeding the development of junior engineers.

State-of-the-Art Post-Processing Techniques

01 Visualization and interpretation of FEA results

Advanced visualization techniques are essential for interpreting finite element analysis results. These include color-coded stress/strain maps, deformation animations, and interactive 3D models that allow engineers to identify critical areas requiring attention. Modern post-processing tools provide customizable visualization options to highlight specific parameters and thresholds, enabling more intuitive understanding of complex simulation outcomes.

- Visualization techniques for FEA results: Advanced visualization techniques are essential for interpreting finite element analysis results. These include 3D rendering, color mapping of stress/strain distributions, and interactive graphical interfaces that allow engineers to examine critical areas in detail. Visualization tools help transform complex numerical data into comprehensible visual representations, enabling better understanding of structural behavior and identification of potential failure points.

- Data interpretation and analysis methods: Post-processing methods for analyzing FEA results include statistical analysis, trend identification, and comparative assessment techniques. These methods help engineers extract meaningful insights from simulation data, identify patterns, and make informed decisions. Advanced algorithms can automatically detect critical regions, calculate safety factors, and evaluate performance against design criteria, streamlining the interpretation process and improving accuracy.

- Integration with design optimization workflows: FEA post-processing can be integrated with design optimization workflows to iteratively improve designs based on analysis results. This integration enables automatic parameter adjustment, shape optimization, and topology optimization based on stress distribution, deformation patterns, and other performance metrics. The seamless connection between analysis results and design modifications accelerates product development and leads to more efficient designs.

- Automated report generation and documentation: Automated systems can generate comprehensive reports from FEA results, including summary statistics, critical findings, and visual representations. These reporting tools help engineers document analysis outcomes, communicate results to stakeholders, and maintain records for regulatory compliance. Advanced reporting features can highlight key performance indicators, compare results against acceptance criteria, and provide recommendations for design improvements.

- Error estimation and validation techniques: Post-processing includes methods for estimating numerical errors, validating results against experimental data, and assessing the reliability of FEA predictions. These techniques help engineers understand the limitations of their models and quantify the confidence level in simulation outcomes. Validation approaches may include mesh refinement studies, sensitivity analyses, and comparison with physical test results to ensure the accuracy of finite element solutions.
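The mesh refinement studies mentioned in the last item can be made quantitative. The sketch below uses Richardson extrapolation and a Grid Convergence Index (GCI), one common approach that the text above does not itself prescribe. It assumes three solutions of the same quantity on meshes with a constant refinement ratio and monotonic convergence; the function name, the default safety factor of 1.25, and the sample stress values are illustrative assumptions.

```python
import math

def richardson_gci(f_coarse, f_medium, f_fine, r, safety_factor=1.25):
    """Return (observed order, extrapolated value, fine-mesh GCI).

    Assumes a constant refinement ratio r > 1 between the three meshes
    and monotonic convergence of the quantity of interest.
    """
    e21 = f_medium - f_fine    # change from medium to fine mesh
    e32 = f_coarse - f_medium  # change from coarse to medium mesh
    if e21 == 0 or e32 / e21 <= 0:
        raise ValueError("solutions are not converging monotonically")
    # Observed order of convergence from the ratio of successive changes
    p = math.log(e32 / e21) / math.log(r)
    # Richardson extrapolation toward the zero-mesh-size value
    f_extrapolated = f_fine + (f_fine - f_medium) / (r**p - 1)
    # Relative error band on the fine-mesh result
    gci_fine = safety_factor * abs(e21 / f_fine) / (r**p - 1)
    return p, f_extrapolated, gci_fine

# Hypothetical peak stresses (MPa) on coarse, medium, and fine meshes, r = 2
order, stress_limit, gci = richardson_gci(92.0, 98.0, 99.5, r=2.0)
print(f"order={order:.2f}, extrapolated={stress_limit:.2f} MPa, GCI={100 * gci:.2f} %")
```

An observed order far from the element's theoretical order, or a failure of the monotonicity check, is itself a useful finding: it suggests the meshes are not yet in the asymptotic range or that a singularity is driving the result.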

02 Data extraction and analysis methods

Post-processing of FEA results involves extracting relevant data points and performing statistical analysis to derive meaningful conclusions. This includes methods for identifying maximum/minimum values, calculating safety factors, and performing sensitivity analyses. Advanced algorithms can automatically detect critical regions, extract time-series data, and generate comprehensive reports that summarize key findings from complex simulations.

03 Result validation and error assessment

Validation of FEA results is crucial for ensuring simulation accuracy. Post-processing tools incorporate error estimation techniques, mesh quality assessment, and convergence analysis to evaluate result reliability. Comparison with experimental data or analytical solutions helps verify simulation outcomes. Methods for quantifying numerical errors and identifying modeling deficiencies enable engineers to refine their analyses and increase confidence in the results.

04 Multi-physics and multi-scale result integration

Modern FEA post-processing involves integrating results from different physics domains and across multiple scales. Tools enable correlation between structural, thermal, electromagnetic, and fluid analyses to understand complex interactions. Techniques for mapping results between different mesh types and scales allow for comprehensive system-level assessment while maintaining detailed insights at critical locations.

05 Automated reporting and decision support

Automated post-processing systems streamline the interpretation of FEA results by generating standardized reports with key findings and recommendations. These systems incorporate decision support algorithms that flag potential design issues, suggest optimization opportunities, and provide comparative analyses against design requirements. Machine learning techniques can identify patterns in simulation results to predict potential failure modes and recommend design improvements.
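The extraction and flagging steps described in this section (locating maximum values, computing safety factors, and comparing against a requirement) can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: it assumes nodal stresses are already available as an array of six components per node, and the function names, the yield strength, and the required safety factor of 1.5 are hypothetical.

```python
import numpy as np

def von_mises(stress):
    """Von Mises stress from an (n, 6) array of
    [sxx, syy, szz, sxy, syz, szx] components per node."""
    s = np.asarray(stress, dtype=float)
    sxx, syy, szz, sxy, syz, szx = s.T
    return np.sqrt(
        0.5 * ((sxx - syy) ** 2 + (syy - szz) ** 2 + (szz - sxx) ** 2)
        + 3.0 * (sxy ** 2 + syz ** 2 + szx ** 2)
    )

def summarize(stress, yield_strength, required_sf=1.5):
    """Find the peak von Mises stress and flag nodes below the required
    safety factor against yield."""
    vm = von_mises(stress)
    # Unstressed nodes get an infinite safety factor instead of a divide-by-zero
    sf = np.full_like(vm, np.inf)
    np.divide(yield_strength, vm, out=sf, where=vm > 0)
    return {
        "max_von_mises": float(vm.max()),
        "critical_node": int(np.argmax(vm)),
        "min_safety_factor": float(sf.min()),
        "flagged_nodes": np.flatnonzero(sf < required_sf).tolist(),
    }

# Two hypothetical nodes (MPa): pure uniaxial tension and pure shear
nodal_stress = [[100.0, 0, 0, 0, 0, 0],
                [0, 0, 0, 100.0, 0, 0]]
report = summarize(nodal_stress, yield_strength=250.0)
print(report)
```

Keeping the summary as a plain dictionary makes it easy to feed into a report template or a pass/fail gate, which is the kind of decision support the section describes.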

Leading Software Vendors and Research Institutions

The finite element post-processing and interpretation landscape is currently in a mature growth phase, with an estimated market size exceeding $2 billion annually and growing at 8-10% CAGR. Industry leaders like ANSYS, Inc. dominate with comprehensive simulation ecosystems, while Siemens Industry Software maintains strong market presence through integrated PLM solutions. Major industrial players including Boeing, Mitsubishi Electric, and Lam Research are driving innovation through practical applications in aerospace, electronics, and semiconductor manufacturing. Academic institutions such as Carnegie Mellon University, Xi'an Jiaotong University, and Osaka University contribute significantly to theoretical advancements. The technology shows high maturity with standardized workflows, though emerging challenges in multi-physics simulation and AI-assisted interpretation represent frontier development areas requiring continued investment and research collaboration.

The Boeing Co.

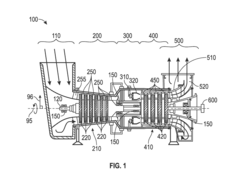

Technical Solution: Boeing has developed proprietary post-processing methodologies for aerospace applications that focus on certification-ready result interpretation. Their approach emphasizes traceability and verification of simulation results against regulatory requirements. Boeing's system incorporates automated checks against design allowables, flagging potential compliance issues during post-processing rather than requiring manual verification. The company employs specialized visualization techniques for composite structures that reveal inter-laminar stresses and potential delamination sites not immediately apparent in standard result views. Their methodology includes statistical processing of results across multiple load cases and flight conditions, identifying critical design scenarios from thousands of simulation permutations. Boeing has pioneered techniques for correlating simulation results with flight test data, creating feedback loops that continuously improve interpretation guidelines. Their approach also includes specialized fatigue analysis post-processors that account for the unique loading profiles of aerospace structures, incorporating environmental factors like temperature cycling and moisture effects into result interpretation.

Strengths: Exceptional focus on regulatory compliance and certification requirements; sophisticated handling of composite material result interpretation; robust statistical methods for managing multiple load cases. Weaknesses: Highly specialized for aerospace applications with limited applicability to other industries; requires significant domain expertise to implement effectively; proprietary nature limits broader adoption outside Boeing's ecosystem.

ANSYS, Inc.

Technical Solution: ANSYS has developed comprehensive post-processing solutions for finite element analysis (FEA) results through their ANSYS Mechanical and ANSYS Discovery platforms. Their approach integrates advanced visualization techniques with automated result interpretation algorithms. The company's methodology includes automated stress linearization for pressure vessel analysis, fatigue assessment tools that process cyclic loading results, and customizable reporting templates that streamline documentation of critical findings. ANSYS employs adaptive mesh refinement technology that intelligently identifies regions requiring higher resolution post-processing based on solution gradients. Their EnSight post-processing tool allows for comparative analysis across multiple simulation scenarios, enabling engineers to quickly identify optimal designs. The company has also pioneered techniques for uncertainty quantification in FEA results, helping engineers understand the reliability of their simulations and make data-driven decisions.

Strengths: Industry-leading visualization capabilities with extensive customization options; seamless integration across simulation workflows; robust automation tools for repetitive post-processing tasks. Weaknesses: Steep learning curve for advanced features; resource-intensive for very large models; premium pricing structure may be prohibitive for smaller organizations.

Critical Algorithms for FEA Result Validation

Post processing finite element analysis geometry

Patent: US20150227657A1 (inactive)

Innovation

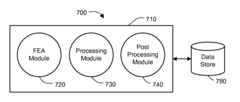

- A method and system utilizing a finite element analysis module to select seed nodes, create a node set based on a predetermined geometric pattern, and a post-processing module to aggregate and display the effects of load cycles on these nodes, significantly reducing manual effort by automating the extraction and presentation of results.

Method of finite element post-processing for a structural system involving fasteners

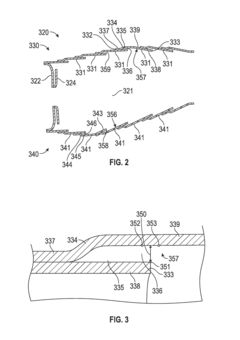

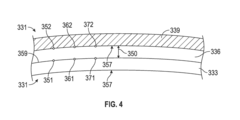

Patent: WO2013175262A1

Innovation

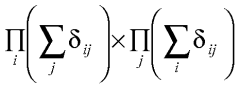

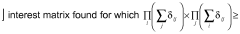

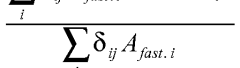

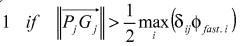

- A method for post-processing structural systems that involves reading finite element model files and fasteners pattern files, using an interest zone sizing process to associate nodes with fasteners independently of selection sequence, ensuring load conservation and moment balance, thereby simplifying and automating the calculation of junction strength.

Verification and Validation Standards

Verification and validation (V&V) standards form the cornerstone of reliable finite element analysis (FEA) post-processing. The American Society of Mechanical Engineers (ASME) V&V 10 standard provides comprehensive guidelines specifically for solid mechanics applications, establishing systematic procedures for result verification against analytical solutions and validation against experimental data. This standard emphasizes quantification of numerical errors and uncertainties, essential for establishing result credibility.

The NAFEMS (International Association for the Engineering Modelling, Analysis and Simulation Community) has developed specialized benchmarks and quality assurance procedures that have become industry standards for FEA verification. These benchmarks provide reference solutions for common engineering problems, allowing analysts to verify their post-processing methodologies against established results.

ISO/TS 16949 (superseded in 2016 by IATF 16949) and AS9100 are quality management standards for the automotive and aerospace industries respectively. While broader in scope than simulation, their requirements for documented design verification and traceable records extend to FEA results, which supports consistency in post-processing methodologies across complex projects.

For safety-critical applications, functional-safety standards such as IEC 61508 call for formal verification processes with a degree of independent assessment, which can extend to independent review of post-processed results. IEC 61508 defines Safety Integrity Levels (SILs); the target level influences the rigor expected in FEA result interpretation and the acceptable uncertainty margins.

The implementation of these standards necessitates establishing clear acceptance criteria for post-processed results. This typically involves defining allowable deviations from benchmark solutions, specifying convergence criteria, and establishing protocols for mesh sensitivity studies. Organizations must develop standardized reporting templates that document compliance with relevant V&V standards.
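An acceptance criterion of the "allowable deviation from a benchmark solution" kind can be written down very compactly. The sketch below compares a result against the closed-form tip deflection of an end-loaded cantilever; the beam properties, the 5 % tolerance, and the placeholder FE value are assumptions chosen only to show the shape of such a check.

```python
def cantilever_tip_deflection(P, L, E, I):
    """Euler-Bernoulli tip deflection of an end-loaded cantilever."""
    return P * L**3 / (3.0 * E * I)

def within_tolerance(computed, reference, rel_tol):
    """Return (relative deviation, pass/fail) against a reference value."""
    deviation = abs(computed - reference) / abs(reference)
    return deviation, deviation <= rel_tol

# Reference solution for a hypothetical steel beam (SI units)
reference = cantilever_tip_deflection(P=1000.0, L=1.0, E=210e9, I=8.0e-6)

# Placeholder for a deflection read from a results file, not a real FE output
fe_result = 1.02 * reference

deviation, accepted = within_tolerance(fe_result, reference, rel_tol=0.05)
print(f"deviation = {100 * deviation:.1f} %, accepted = {accepted}")
```

Recording the tolerance next to the result, rather than only the pass/fail flag, keeps the criterion visible in the reporting template.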

Recent developments in V&V standards have begun addressing the challenges of non-linear analyses and multi-physics simulations. The ASME V&V 40 standard specifically addresses assessing the credibility of computational models of medical devices through verification and validation, providing specialized guidance for biomechanical applications where traditional engineering standards may be insufficient.

Proper implementation of these standards requires organizations to establish formal review processes for FEA results. This typically involves technical review committees with expertise in both the physical phenomena being modeled and the numerical methods employed, ensuring comprehensive evaluation of post-processed results against established validation metrics.

Uncertainty Quantification Methods

Uncertainty quantification (UQ) in finite element analysis has become increasingly important as engineers and researchers seek to understand the reliability and robustness of their simulation results. The inherent variability in material properties, boundary conditions, and geometric parameters necessitates systematic approaches to quantify result uncertainties.

Monte Carlo simulation remains the reference method for uncertainty quantification in FEA: the analysis is repeated many times with randomly sampled input parameters. While computationally intensive, this method yields full probability distributions of the output quantities rather than only their means and variances. Stratified and low-discrepancy sampling schemes, such as Latin Hypercube sampling and quasi-Monte Carlo methods, typically reach a given accuracy with fewer runs than simple random sampling.
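As a minimal sketch of sampling-based propagation with Latin Hypercube sampling, the example below uses a closed-form cantilever tip deflection as a stand-in for a full FE solve. The input distributions, the beam dimensions, and all function names are hypothetical and chosen only for illustration.

```python
import random
from statistics import NormalDist, mean, stdev

def latin_hypercube(n, dims, rng):
    """Return n points in the unit hypercube, one per stratum in each dimension."""
    columns = []
    for _ in range(dims):
        column = [(i + rng.random()) / n for i in range(n)]  # one draw per stratum
        rng.shuffle(column)                                   # decouple the dimensions
        columns.append(column)
    return list(zip(*columns))

def tip_deflection(E, P, L=1.0, I=8.0e-7):
    """Cantilever tip deflection in metres; placeholder for an FE analysis."""
    return P * L**3 / (3.0 * E * I)

def propagate(n, seed=0):
    """Propagate uncertain Young's modulus and load through the model."""
    rng = random.Random(seed)
    E_dist = NormalDist(mu=210e9, sigma=10e9)    # assumed modulus scatter (Pa)
    P_dist = NormalDist(mu=1000.0, sigma=100.0)  # assumed load scatter (N)
    eps = 1e-12                                  # keep inv_cdf away from 0 and 1
    results = []
    for u_E, u_P in latin_hypercube(n, 2, rng):
        E = E_dist.inv_cdf(min(max(u_E, eps), 1.0 - eps))
        P = P_dist.inv_cdf(min(max(u_P, eps), 1.0 - eps))
        results.append(tip_deflection(E, P))
    return mean(results), stdev(results)

mu, sigma = propagate(500)
print(f"mean deflection {mu * 1000:.3f} mm, standard deviation {sigma * 1000:.3f} mm")
```

In practice each call to tip_deflection would be replaced by a solver run, so the sample size is usually set by the available compute budget.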

Polynomial Chaos Expansion (PCE) has emerged as a powerful alternative, representing stochastic responses as orthogonal polynomial expansions. PCE offers exceptional efficiency for problems with moderate dimensionality, providing both mean response and variance with fewer simulations than traditional Monte Carlo approaches. The non-intrusive variant (NIPCE) has gained particular traction as it allows utilization of existing deterministic solvers.
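The statement that PCE yields the mean and variance directly can be written out as follows. The notation is an assumption introduced here for illustration: ξ is the vector of d standardized random inputs, Ψ_k are orthogonal polynomials with Ψ_0 = 1, c_k are the expansion coefficients, and p is the maximum total polynomial degree.

```latex
% Truncated expansion and orthogonality of the basis
Y(\xi) \approx \sum_{k=0}^{P-1} c_k \, \Psi_k(\xi),
\qquad
\mathbb{E}[\Psi_j \Psi_k] = \delta_{jk} \, \mathbb{E}[\Psi_k^2]

% Statistics read directly from the coefficients
\mathbb{E}[Y] \approx c_0,
\qquad
\operatorname{Var}[Y] \approx \sum_{k=1}^{P-1} c_k^2 \, \mathbb{E}[\Psi_k^2]

% Number of terms for total degree p in d dimensions
P = \frac{(d+p)!}{d! \, p!}
```

The term count explains the restriction to moderate dimensionality: with d = 10 inputs and degree p = 3 the expansion already has 286 coefficients, and a non-intrusive fit needs at least that many solver runs.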

Sensitivity analysis methods complement UQ by identifying which input parameters most significantly affect output variability. Global sensitivity indices, particularly Sobol indices, quantify the contribution of each parameter to the overall output variance, enabling engineers to focus refinement efforts on the most influential factors.
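First-order Sobol indices can be estimated from two independent sample matrices with a pick-and-freeze scheme. The sketch below uses a Saltelli-type estimator on a hypothetical linear response with inputs assumed independent and uniform on [0, 1]; the function name and the test model are illustrative, and a real study would substitute solver or surrogate evaluations.

```python
import random
from statistics import mean, pvariance

def first_order_sobol(model, dims, n=20000, seed=0):
    """Estimate first-order Sobol indices for inputs uniform on [0, 1]."""
    rng = random.Random(seed)
    A = [[rng.random() for _ in range(dims)] for _ in range(n)]
    B = [[rng.random() for _ in range(dims)] for _ in range(n)]
    yA = [model(x) for x in A]
    yB = [model(x) for x in B]
    # Centering the outputs leaves the estimator unbiased and reduces its noise.
    y_mean = mean(yA + yB)
    yA = [y - y_mean for y in yA]
    yB = [y - y_mean for y in yB]
    total_variance = pvariance(yA + yB)
    indices = []
    for i in range(dims):
        # AB_i: rows of A with column i replaced by the same column of B.
        yABi = [model(a[:i] + [b[i]] + a[i + 1:]) - y_mean for a, b in zip(A, B)]
        v_i = sum(fb * (fab - fa) for fb, fab, fa in zip(yB, yABi, yA)) / n
        indices.append(v_i / total_variance)
    return indices

# Hypothetical response: the third input has no influence at all.
response = lambda x: 4.0 * x[0] + 2.0 * x[1] + 0.0 * x[2]
print([round(s, 2) for s in first_order_sobol(response, dims=3)])
```

For this linear model the analytical indices are 0.8, 0.2 and 0, so the estimates give a quick check that the sampling scheme is wired correctly before it is applied to a real model.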

Bayesian approaches to UQ have revolutionized how prior knowledge is incorporated into uncertainty assessments. These methods update probability distributions of uncertain parameters as new data becomes available, making them particularly valuable for model calibration and validation against experimental results. Markov Chain Monte Carlo (MCMC) algorithms facilitate implementation of these approaches in complex FEA applications.
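A minimal sketch of Bayesian calibration with a random-walk Metropolis sampler is shown below. It updates a prior on Young's modulus using a few deflection measurements. The measurements, prior, noise level, and beam model are all hypothetical, and a production workflow would more likely rely on an established MCMC library and a surrogate of the FE model.

```python
import math
import random
from statistics import mean, stdev

def deflection_mm(E_gpa, P=1000.0, L=1.0, I=8.0e-7):
    """Cantilever tip deflection in mm; placeholder for an FE analysis."""
    return 1000.0 * P * L**3 / (3.0 * E_gpa * 1e9 * I)

def log_posterior(E_gpa, data_mm, noise_mm=0.02, prior_mu=210.0, prior_sd=20.0):
    """Unnormalized log posterior: Gaussian prior on E, Gaussian measurement noise."""
    if E_gpa <= 0.0:
        return -math.inf
    log_prior = -0.5 * ((E_gpa - prior_mu) / prior_sd) ** 2
    predicted = deflection_mm(E_gpa)
    log_like = -0.5 * sum(((d - predicted) / noise_mm) ** 2 for d in data_mm)
    return log_prior + log_like

def metropolis(data_mm, n_steps=20000, step=2.0, start=210.0, seed=0):
    """Random-walk Metropolis; returns the chain after discarding 25 % burn-in."""
    rng = random.Random(seed)
    x, lp = start, log_posterior(start, data_mm)
    chain = []
    for _ in range(n_steps):
        candidate = x + rng.gauss(0.0, step)
        lp_candidate = log_posterior(candidate, data_mm)
        # Accept with probability min(1, posterior ratio).
        if rng.random() < math.exp(min(0.0, lp_candidate - lp)):
            x, lp = candidate, lp_candidate
        chain.append(x)
    return chain[n_steps // 4:]

measurements_mm = [2.07, 2.10, 2.08, 2.09]  # hypothetical test data
samples = metropolis(measurements_mm)
print(f"posterior E: {mean(samples):.1f} GPa, standard deviation {stdev(samples):.1f} GPa")
```

The posterior spread is much narrower than the prior here because the assumed measurement noise is small; with noisier data the prior would retain more influence.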

Meta-modeling techniques, including Kriging and Radial Basis Functions, construct surrogate models that approximate the full FEA model at reduced computational cost. These surrogates enable rapid uncertainty propagation and are increasingly integrated with machine learning algorithms to enhance predictive capabilities across the parameter space.
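The surrogate idea can be sketched with a one-dimensional Gaussian radial basis function interpolant. The "expensive" model below is a hypothetical closed-form stand-in for an FE run, the length scale is an arbitrary choice, and the small dense solver is included only to keep the example self-contained.

```python
import math

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def fit_rbf(xs, ys, length_scale):
    """Return a callable surrogate that interpolates the training data."""
    def kernel(a, b):
        return math.exp(-((a - b) / length_scale) ** 2)
    weights = solve([[kernel(xi, xj) for xj in xs] for xi in xs], ys)
    return lambda x: sum(w * kernel(x, xi) for w, xi in zip(weights, xs))

def expensive_model(thickness):
    """Hypothetical normalized deflection versus plate thickness."""
    return 1.0 / thickness**3

training_x = [1.0, 1.25, 1.5, 1.75, 2.0]
surrogate = fit_rbf(training_x, [expensive_model(t) for t in training_x], length_scale=0.5)
print(f"surrogate {surrogate(1.4):.4f} vs model {expensive_model(1.4):.4f}")
```

Once fitted, the surrogate can be sampled thousands of times at negligible cost, which is what makes it attractive for uncertainty propagation; its accuracy between training points should still be checked against held-out solver runs.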

Industry best practices now recommend multi-fidelity approaches that strategically combine high-fidelity FEA models with lower-fidelity approximations to balance accuracy and computational efficiency. This hierarchical framework allows comprehensive uncertainty quantification within practical time constraints, making UQ feasible even for complex industrial applications.

