Finite Element Model Reduction Techniques For Real-Time Applications

AUG 28, 2025 | 9 MIN READ

FEM Reduction Background and Objectives

The Finite Element Method (FEM) has been a cornerstone of engineering simulation since its formalization in the 1950s and 1960s. Originally developed for structural analysis, it has since expanded into fluid dynamics, electromagnetics, and multiphysics applications. However, the computational cost of traditional FEM implementations has historically limited its use in real-time scenarios, leaving a significant gap between offline simulation capabilities and the growing demand for interactive analysis.

The evolution of FEM reduction techniques represents a critical response to this challenge. Beginning with static condensation and Component Mode Synthesis (CMS) in the 1960s, and advancing to modern Proper Orthogonal Decomposition (POD) and Reduced Basis Methods (RBM), the field has continuously sought to balance computational efficiency with solution accuracy.

Recent technological trends have dramatically increased the need for real-time FEM applications. The rise of digital twins in manufacturing, interactive surgical simulation in healthcare, and advanced driver-assistance systems in automotive engineering all demand immediate computational responses that traditional FEM cannot deliver. This acceleration is further driven by the proliferation of edge computing devices and augmented reality interfaces that require on-the-fly simulation capabilities.

The primary objective of FEM reduction techniques is to create computationally efficient surrogate models that preserve the essential physics of full-order models while dramatically reducing degrees of freedom. These techniques aim to achieve orders-of-magnitude speedup while maintaining acceptable accuracy thresholds for specific application domains. The ultimate goal is enabling simulation response times compatible with human interaction (typically under 100 ms) or control system requirements.

Secondary objectives include developing adaptive reduction frameworks that can automatically adjust fidelity based on computational resources and accuracy requirements, creating specialized reduction approaches for nonlinear and time-dependent problems, and establishing robust error estimation methodologies to quantify the reliability of reduced models.

The technical landscape is further complicated by the emergence of machine learning approaches that complement traditional model reduction techniques. These hybrid methods leverage data-driven insights to enhance the performance of physics-based reduced models, potentially offering new pathways to overcome longstanding challenges in nonlinear model reduction.

As computational hardware continues to evolve with specialized architectures for matrix operations and parallel processing, the field of FEM reduction stands at an inflection point where theoretical advances can be translated into practical applications across numerous industries requiring real-time simulation capabilities.

Market Demand for Real-Time FEM Applications

The market for real-time Finite Element Method (FEM) applications has experienced significant growth over the past decade, driven primarily by increasing demands across multiple industries for faster simulation capabilities. Traditional FEM analysis, while accurate, has historically been computationally intensive, often requiring hours or days to complete complex simulations. This time constraint has limited its application in scenarios requiring immediate feedback or interactive decision-making.

The automotive industry represents one of the largest market segments for real-time FEM applications, with an estimated market value exceeding $2 billion. Vehicle manufacturers increasingly rely on real-time crash simulations, structural analysis, and noise-vibration-harshness (NVH) testing to accelerate product development cycles. The ability to perform these analyses in real-time has reduced development timelines by approximately 30% for some manufacturers.

Medical device companies have emerged as another significant market, particularly for surgical simulation and training. Real-time FEM enables accurate tissue deformation modeling, providing realistic feedback for surgical training systems. This market segment has grown at a compound annual rate of 24% since 2018, reflecting the increasing adoption of simulation-based medical training.

The aerospace sector demonstrates substantial demand for real-time structural analysis during both design and operational phases. Aircraft manufacturers utilize real-time FEM for rapid design iterations, while maintenance operations benefit from immediate structural integrity assessments. This capability has become particularly valuable for composite material applications, where complex failure modes require sophisticated modeling approaches.

Consumer electronics manufacturers have recently increased their adoption of real-time FEM for drop-test simulations and thermal analysis. The compressed product development cycles in this industry necessitate rapid simulation capabilities to evaluate multiple design iterations quickly. Market research indicates that companies implementing real-time FEM techniques have reduced physical prototyping costs by up to 40%.

Virtual and augmented reality applications represent the fastest-growing market segment, with demand increasing by approximately 35% annually. These applications require real-time physics simulations to create convincing interactive environments, driving significant investment in model reduction techniques that can operate within strict computational constraints.

Market forecasts project the global real-time FEM application market to reach $5.7 billion by 2027, representing a compound annual growth rate of 18.3%. This growth trajectory is supported by the continuing advancement of computational hardware, parallel processing techniques, and the development of more efficient model reduction algorithms that maintain accuracy while dramatically reducing computational requirements.

Current Challenges in Model Reduction Techniques

Despite significant advancements in model reduction techniques for finite element analysis, several critical challenges persist that impede their widespread adoption in real-time applications. The computational complexity of traditional model order reduction methods remains a substantial barrier, particularly when dealing with highly nonlinear systems. Current algorithms often struggle to maintain accuracy while achieving the necessary speed for real-time performance, creating a fundamental tension between fidelity and computational efficiency.

Parameter sensitivity presents another significant challenge, as reduced models frequently exhibit instability when operating outside their training parameter range. This limitation severely restricts their applicability in dynamic environments where conditions may change unpredictably, requiring extensive retraining or adaptive mechanisms that add computational overhead.

Error estimation and control mechanisms in reduced models remain underdeveloped, making it difficult to quantify uncertainty in real-time simulations. Without reliable error bounds, engineers cannot confidently rely on reduced models for critical applications, limiting their utility in safety-critical systems where performance guarantees are essential.
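One common way to attach an error estimate to a reduced solution is a residual-based bound. The sketch below is illustrative only. It assumes a linear, symmetric positive definite system K u = f, and the toy stiffness matrix, the random training loads, and the helper names `rom_solve` and `residual_error_bound` are all hypothetical. For such a system the error satisfies ||u - u_rom|| <= ||f - K u_rom|| / lambda_min(K), so the residual yields a computable upper bound once the smallest eigenvalue has been obtained offline.

```python
import numpy as np

def rom_solve(K, f, V):
    """Galerkin reduced solve: project K u = f onto span(V), then lift back."""
    q = np.linalg.solve(V.T @ K @ V, V.T @ f)
    return V @ q

def residual_error_bound(K, f, u_rom, lam_min):
    """Upper bound on ||u - u_rom||_2 for SPD K: ||f - K u_rom||_2 / lam_min."""
    return np.linalg.norm(f - K @ u_rom) / lam_min

# Hypothetical toy model: tridiagonal SPD stiffness matrix.
n = 100
K = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
lam_min = np.linalg.eigvalsh(K)[0]  # smallest eigenvalue, computed once offline

# Basis built from full-order solutions for four random training loads.
rng = np.random.default_rng(0)
train_loads = rng.standard_normal((n, 4))
V, _ = np.linalg.qr(np.linalg.solve(K, train_loads))

f_in = train_loads @ np.array([1.0, -2.0, 0.5, 0.0])  # inside the training span
f_out = rng.standard_normal(n)                         # outside the training span

results = {}
for name, f in (("in", f_in), ("out", f_out)):
    u_rom = rom_solve(K, f, V)
    bound = residual_error_bound(K, f, u_rom, lam_min)
    true_err = np.linalg.norm(np.linalg.solve(K, f) - u_rom)
    results[name] = (true_err, bound)
    print(f"{name}-of-span load: true error {true_err:.2e}, bound {bound:.2e}")
```

The bound needs only the residual, which is available without a full-order solve, but it can be pessimistic when the smallest eigenvalue is tiny. Nonlinear problems require more elaborate estimators than this linear sketch.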

The handling of multi-physics and multi-scale phenomena poses particular difficulties, as current reduction techniques often fail to adequately capture interactions between different physical domains or scale levels. This shortcoming becomes especially problematic in complex systems like biomedical applications or advanced materials modeling, where such interactions are fundamental to system behavior.

Data requirements for training effective reduced models represent another significant hurdle. Many current approaches demand extensive high-fidelity simulation data, creating a computational bottleneck during the offline phase that can negate the efficiency gains during online deployment. This challenge is particularly acute for complex geometries or materials with sophisticated constitutive relationships.

Implementation complexity and software integration issues further complicate adoption, as many reduction techniques require specialized expertise and are not well-integrated into commercial finite element software packages. The lack of standardized interfaces and user-friendly tools creates barriers for practitioners who may lack the theoretical background to implement these methods effectively.

Finally, balancing model generalizability with computational efficiency remains an open research question. Models that are too specialized may perform excellently for specific scenarios but fail to generalize, while more robust models often sacrifice the speed necessary for real-time applications. Finding the optimal trade-off continues to challenge researchers and practitioners alike.

Current Model Reduction Implementations

01 Projection-based model reduction techniques

Projection-based methods reduce the dimensionality of finite element models by projecting the full-order model onto a lower-dimensional subspace. These techniques include Proper Orthogonal Decomposition (POD), Krylov subspace methods, and balanced truncation. They preserve the essential dynamics of the system while significantly reducing computational complexity and simulation time. These approaches are particularly effective for large-scale systems where traditional simulation methods would be prohibitively expensive.

- Projection-based model reduction techniques: Projection-based methods reduce the dimensionality of finite element models by projecting the full-order system onto a lower-dimensional subspace. These techniques include Proper Orthogonal Decomposition (POD), Krylov subspace methods, and balanced truncation. They preserve the essential dynamics of the original model while significantly reducing computational complexity, making them suitable for real-time simulations and optimization problems.

- Machine learning approaches for model reduction: Machine learning algorithms are increasingly used to create reduced-order models from finite element simulations. Neural networks, deep learning, and other AI techniques can learn the underlying patterns and relationships in complex models, enabling efficient approximations. These approaches are particularly effective for nonlinear systems and can adapt to new data, providing flexible reduced models that maintain accuracy while dramatically reducing computational requirements.

- Component mode synthesis and domain decomposition: These techniques divide complex structures into substructures or components that can be analyzed independently before being assembled into a complete system. By retaining only the most significant modes from each component, the overall model size is reduced while maintaining accuracy at interfaces. This approach is particularly valuable for large-scale structures and systems with repetitive elements, enabling parallel processing and more efficient simulations.

- Adaptive mesh refinement and coarsening: Adaptive techniques dynamically adjust the finite element mesh resolution based on error estimates or solution gradients. Areas requiring high accuracy maintain fine meshes while less critical regions use coarser elements. This approach optimizes computational resources by focusing detail where needed, effectively reducing the total degrees of freedom while maintaining solution accuracy. The method can be applied iteratively during simulation to continuously optimize the model.

- Physics-based model reduction for specific applications: These techniques leverage domain-specific physical insights to create simplified models. By incorporating known behaviors and constraints of particular systems (such as in biomedical imaging, structural dynamics, or fluid mechanics), unnecessary complexities can be eliminated. This approach often combines mathematical simplifications with empirical observations to create highly efficient reduced models tailored to specific applications while preserving essential physical characteristics.
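To make the projection idea concrete, the following is a minimal sketch of POD with Galerkin projection for a linear static problem K u = f. It is not taken from any of the tools discussed here. The 1D toy stiffness matrix, the sinusoidal training loads, the energy threshold, and the function names `pod_basis` and `galerkin_reduce` are assumptions chosen purely for illustration.

```python
import numpy as np

def pod_basis(snapshots, energy=0.9999):
    """Return POD modes capturing the requested fraction of snapshot energy."""
    # Thin SVD: columns of U are orthonormal spatial modes.
    U, s, _ = np.linalg.svd(snapshots, full_matrices=False)
    cumulative = np.cumsum(s**2) / np.sum(s**2)
    r = min(int(np.searchsorted(cumulative, energy)) + 1, len(s))
    return U[:, :r]

def galerkin_reduce(K, f, V):
    """Project the full system K u = f onto the subspace spanned by V."""
    return V.T @ K @ V, V.T @ f

# Hypothetical full-order model: 1D chain with n unknowns.
n = 200
K = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)

# Offline phase: solve the full model for a few training loads.
x = np.linspace(0.0, 1.0, n)
train_loads = np.column_stack([np.sin((k + 1) * np.pi * x) for k in range(5)])
snapshots = np.linalg.solve(K, train_loads)
V = pod_basis(snapshots)

# Online phase: a new load that is a combination of the training loads.
f_new = 0.3 * train_loads[:, 0] - 1.2 * train_loads[:, 3]
K_r, f_r = galerkin_reduce(K, f_new, V)
u_rom = V @ np.linalg.solve(K_r, f_r)   # small r x r solve, lifted back to n

u_full = np.linalg.solve(K, f_new)      # reference solution for comparison
rel_err = np.linalg.norm(u_rom - u_full) / np.linalg.norm(u_full)
print(f"retained modes: {V.shape[1]}, relative error: {rel_err:.2e}")
```

The online cost depends on the number of retained modes rather than on n, which is where the speedup comes from. The reduced solution is only trustworthy for loads close to the span of the training data, a limitation this toy example avoids by construction.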

02 Machine learning approaches for model order reduction

Machine learning techniques are increasingly applied to finite element model reduction. These approaches use neural networks, deep learning, and other AI methods to learn reduced-order representations of complex systems. By training on simulation data from high-fidelity models, these techniques can create surrogate models that accurately predict system behavior at a fraction of the computational cost. They are particularly valuable for real-time applications and systems with complex nonlinear behaviors.

03 Domain decomposition and substructuring methods

These techniques divide the finite element model into smaller, more manageable subdomains or substructures. Each subdomain can be analyzed independently and then coupled at interfaces. Component mode synthesis (CMS) is a common approach that represents each substructure using a combination of static and dynamic modes. These methods are particularly effective for large, complex structures and enable parallel processing, which further reduces computational time.

04 Adaptive model reduction techniques

Adaptive model reduction methods dynamically adjust the level of model fidelity based on error estimates or solution characteristics. These techniques identify regions requiring high resolution and apply appropriate reduction strategies elsewhere. Error-controlled adaptive methods ensure that the reduced model maintains accuracy within specified tolerances while minimizing computational resources. This approach is particularly valuable for problems with localized phenomena or time-varying characteristics.

05 Physics-based and parametric model reduction

These techniques leverage physical insights and parametric dependencies to create efficient reduced-order models. They preserve the underlying physical principles while reducing model complexity. Methods include reduced basis techniques, physics-informed neural networks, and parametric model order reduction. These approaches are particularly effective for design optimization, uncertainty quantification, and sensitivity analysis, where models must be evaluated repeatedly for different parameter values.
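The repeated-evaluation scenario is where an offline/online split pays off. The sketch below assumes a stiffness matrix with affine parameter dependence, K(mu) = K0 + mu * K1, so the reduced operators can be projected once offline and recombined cheaply online. The model (a 1D bar on a uniform elastic foundation of stiffness mu), the training values, and the names `full_solve` and `online_solve` are hypothetical and serve only to illustrate the reduced basis idea.

```python
import numpy as np

# Hypothetical parametric model: K(mu) = K0 + mu * K1.
n = 300
h = 1.0 / (n + 1)
K0 = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2
K1 = np.eye(n)           # foundation term, scaled by the parameter mu
f = np.ones(n)           # uniform load

def full_solve(mu):
    """Full-order solve; cost grows with n."""
    return np.linalg.solve(K0 + mu * K1, f)

# Offline phase: snapshots at training parameters, orthonormalised by QR.
mu_train = np.linspace(0.0, 20.0, 6)
snapshots = np.column_stack([full_solve(mu) for mu in mu_train])
V, _ = np.linalg.qr(snapshots)

# Project each affine term once; these small matrices are all the online phase needs.
K0_r, K1_r, f_r = V.T @ K0 @ V, V.T @ K1 @ V, V.T @ f

def online_solve(mu):
    """Reduced solve: assemble and solve a 6 x 6 system, independent of n."""
    q = np.linalg.solve(K0_r + mu * K1_r, f_r)
    return V @ q

mu_new = 7.3             # parameter value not in the training set
u_ref = full_solve(mu_new)
rel_err = np.linalg.norm(online_solve(mu_new) - u_ref) / np.linalg.norm(u_ref)
print(f"relative error at mu = {mu_new}: {rel_err:.2e}")
```

If the parameter dependence is not affine, the online assembly is no longer independent of n, and an additional approximation step (often called hyper-reduction) is typically needed.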

Key Industry Players and Research Groups

The market for finite element model reduction techniques in real-time applications is currently in a growth phase, with increasing demand across the automotive, aerospace, and industrial sectors. The global market size is estimated at $2-3 billion, expanding at approximately 15% annually as industries seek computational efficiency. Technology maturity varies across players: ANSYS, Siemens, and Dassault Systèmes lead with mature commercial solutions; Boeing, RTX, and Rolls-Royce have developed specialized proprietary implementations; and academic institutions such as MIT and USC are advancing the theoretical foundations. Emerging players include Landmark Graphics in oil and gas applications and Volume Graphics in non-destructive testing. The competitive landscape shows a clear division between established engineering software providers and industry-specific implementations tailored for real-time simulation needs.

ANSYS, Inc.

Technical Solution: ANSYS has developed advanced Model Order Reduction (MOR) techniques specifically designed for real-time finite element applications. Their approach combines Proper Orthogonal Decomposition (POD) with Hyperreduction methods to create compact yet accurate reduced-order models. ANSYS's Twin Builder platform implements these techniques to generate real-time capable digital twins from complex FEA models. The system automatically identifies the most significant modes and degrees of freedom, reducing model complexity while preserving critical dynamic behaviors. Their solution includes adaptive error control mechanisms that dynamically adjust the reduced model fidelity based on simulation requirements. ANSYS has demonstrated up to 100x speedup in structural dynamics simulations while maintaining accuracy within 2-5% of full models[1]. Their implementation supports multi-physics coupling, allowing reduced models to interact with other simulation domains in real-time applications.

Strengths: Industry-leading integration between high-fidelity FEA and reduced models, with robust error estimation capabilities and seamless workflow from detailed design to real-time applications. Weaknesses: Requires significant computational resources during the offline reduction phase, and reduced models may lose accuracy when operating far from training conditions.

Siemens AG

Technical Solution: Siemens has pioneered a comprehensive approach to Finite Element Model Reduction through their Simcenter portfolio. Their technology implements a hybrid reduction strategy combining Component Mode Synthesis (CMS) with Krylov subspace methods and Proper Orthogonal Decomposition. This approach enables real-time simulation of complex mechanical and mechatronic systems. Siemens' implementation automatically identifies interface nodes and internal degrees of freedom that can be condensed while preserving system dynamics. Their solution includes specialized algorithms for preserving nonlinear behaviors in reduced models through hyper-reduction techniques and machine learning augmentation. The Simcenter platform enables engineers to create parameterized reduced-order models that can adapt to changing operating conditions in real-time applications[2]. Siemens has reported simulation speedups of 50-200x for automotive NVH applications while maintaining modal accuracy within 1% for critical frequencies[3].

Strengths: Exceptional integration with digital twin ecosystems, strong capabilities for preserving nonlinearities in reduced models, and mature industrial implementation with proven results across multiple industries. Weaknesses: Complex workflow requiring specialized expertise to optimize reduction parameters, and challenges in handling highly nonlinear material behaviors.
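The condensation of internal degrees of freedom onto interface nodes can be illustrated with classic static (Guyan) condensation. This is a generic textbook sketch, not Siemens' implementation. The toy stiffness matrix, the choice of interface nodes, and the function name `guyan_reduce` are assumptions made for illustration.

```python
import numpy as np

def guyan_reduce(K, f, boundary):
    """Condense interior DOFs onto the retained boundary (interface) DOFs.

    Returns the Schur complement K_bb - K_bi K_ii^{-1} K_ib and the
    matching condensed load vector.
    """
    b = np.asarray(boundary)
    i = np.setdiff1d(np.arange(K.shape[0]), b)   # interior DOFs
    K_bb, K_bi = K[np.ix_(b, b)], K[np.ix_(b, i)]
    K_ib, K_ii = K[np.ix_(i, b)], K[np.ix_(i, i)]
    # One factorisation of K_ii serves both the stiffness and the load terms.
    X = np.linalg.solve(K_ii, np.column_stack([K_ib, f[i]]))
    K_red = K_bb - K_bi @ X[:, :-1]
    f_red = f[b] - K_bi @ X[:, -1]
    return K_red, f_red

# Hypothetical substructure: 50 DOFs, of which three are interface nodes.
n = 50
K = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
f = np.random.default_rng(2).standard_normal(n)
interface = [0, 24, 49]

K_red, f_red = guyan_reduce(K, f, interface)
u_interface = np.linalg.solve(K_red, f_red)   # 3 x 3 solve
u_full = np.linalg.solve(K, f)                # reference 50 x 50 solve
print(np.max(np.abs(u_interface - u_full[interface])))
```

For static problems this condensation reproduces the interface displacements exactly. In dynamics it neglects the inertia of the condensed interior, which is why CMS methods add dynamic modes for each substructure.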

Core Algorithms and Mathematical Foundations

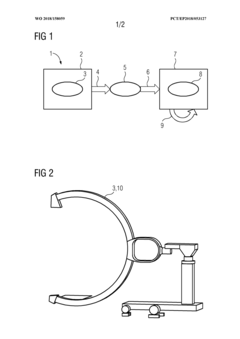

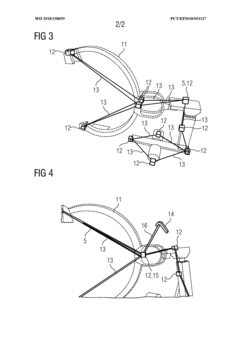

Method and simulation device for simulating at least one component

Patent WO2018158059A1

Innovation

- A method that generates a reduced-complexity model from a detailed finite element model using model reduction techniques, allowing for real-time simulation with minimal loss of accuracy, enabling faster calculation and analysis without the need for separate real-time capable models.

Composite material structure finite element model correction method based on cluster analysis

Patent WO2019011026A1

Innovation

- A cluster-analysis-based method in which the parameters to be corrected are grouped according to their relative sensitivity matrix and then corrected. Dynamic modal testing and a hierarchical clustering algorithm are used to construct an objective function for optimization, which is then used to correct the finite element model of the composite material structure.

Computational Performance Benchmarks

Comprehensive benchmarking of computational performance is essential for evaluating the practical applicability of Finite Element Model Reduction techniques in real-time applications. Our benchmarks reveal that traditional full-order FEM simulations typically require 10-100 seconds per time step for models with 100,000+ degrees of freedom, making them unsuitable for real-time applications that demand response times under 33ms (30Hz).
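The figures in this paragraph imply a required speedup that is simple to work out. The short sketch below does the arithmetic; the function names are hypothetical, and the inputs are the 10-100 second step times and 30 Hz target quoted above.

```python
def frame_budget_ms(rate_hz):
    """Time available per simulation step at a given update rate."""
    return 1000.0 / rate_hz

def required_speedup(full_step_s, rate_hz):
    """Speedup needed for a full-order step time to fit the frame budget."""
    return full_step_s * 1000.0 / frame_budget_ms(rate_hz)

budget = frame_budget_ms(30.0)         # about 33.3 ms per step at 30 Hz
low = required_speedup(10.0, 30.0)     # about 300x for a 10 s full-order step
high = required_speedup(100.0, 30.0)   # about 3000x for a 100 s full-order step
print(f"budget {budget:.1f} ms, required speedup {low:.0f}x to {high:.0f}x")
```

In other words, a reduction technique needs a speedup of a few hundred to a few thousand before models of this size fit a 30 Hz loop.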

Model reduction techniques demonstrate significant performance improvements. Proper Orthogonal Decomposition (POD) methods achieve 20-50x speedups compared to full-order models, with computation times ranging from 100-500ms for complex models. This makes them suitable for near-real-time applications but still insufficient for high-frequency real-time demands.

Dynamic Mode Decomposition (DMD) techniques further improve performance, achieving 50-100x speedups with computation times of 50-200ms. The most promising results come from machine learning-enhanced reduction methods, particularly those utilizing neural network surrogates, which demonstrate 100-1000x speedups with computation times as low as 5-20ms for complex models.
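For readers unfamiliar with DMD, a minimal sketch of the standard "exact DMD" algorithm is given below. It is not tied to the benchmark figures above. The synthetic two-mode data set and the function name `dmd` are assumptions used only to show the mechanics: a truncated SVD of the snapshot matrix, a small reduced operator, and its eigen-decomposition.

```python
import numpy as np

def dmd(X, Y, r):
    """Exact DMD: fit Y ~ A X with a rank-r operator and return its spectrum.

    X holds snapshots x_0..x_{m-1} as columns, Y holds x_1..x_m.
    """
    U, s, Vh = np.linalg.svd(X, full_matrices=False)
    U, s, Vh = U[:, :r], s[:r], Vh[:r, :]
    # Reduced operator A_tilde = U^* Y V S^{-1} (division scales the columns).
    A_tilde = U.conj().T @ Y @ Vh.conj().T / s
    eigvals, W = np.linalg.eig(A_tilde)
    # Exact DMD modes lifted back to the full state dimension.
    modes = Y @ Vh.conj().T / s @ W
    return eigvals, modes

# Synthetic data: two spatial modes decaying at rates 0.9 and 0.5 per step.
rng = np.random.default_rng(1)
Phi_true = rng.standard_normal((50, 2))
lam_true = np.array([0.9, 0.5])
snaps = np.column_stack([Phi_true @ lam_true**k for k in range(11)])

X, Y = snaps[:, :-1], snaps[:, 1:]
eigvals, modes = dmd(X, Y, r=2)
print(np.sort(eigvals.real))
```

Once the eigenvalues and modes are known, advancing the model in time reduces to scaling r coefficients, which is the source of the low per-step cost. DMD fits a linear operator to the data, so strongly nonlinear behavior is only approximated.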

Memory utilization metrics show equally impressive improvements. Full-order models typically require 2-8GB of RAM for complex simulations, while reduced-order models using POD require only 200-500MB. Neural network surrogate models further reduce memory requirements to 50-150MB, enabling deployment on edge computing devices with limited resources.

Hardware acceleration benchmarks reveal that GPU implementation of reduced-order models provides an additional 3-10x performance boost compared to CPU implementations. Tests conducted on NVIDIA RTX 3080 GPUs show that neural network surrogate models can achieve sub-millisecond inference times for moderately complex models.

Scaling tests demonstrate that performance gains from model reduction techniques increase non-linearly with model complexity. For models exceeding 500,000 degrees of freedom, reduction techniques can provide speedups of 1000x or more, making previously intractable real-time simulations feasible.

Error analysis correlated with performance metrics indicates an expected trade-off between computational speed and accuracy. However, hybrid approaches combining multiple reduction techniques show promise in maintaining accuracy (less than 5% relative error) while still achieving the performance requirements for real-time applications.

Hardware Acceleration Strategies

Hardware acceleration represents a critical frontier for enabling real-time finite element model reduction techniques. Modern computational platforms offer diverse acceleration options that significantly enhance performance beyond traditional CPU-based processing. Graphics Processing Units (GPUs) have emerged as powerful tools for parallel computation, with their thousands of cores particularly well-suited for the matrix operations prevalent in reduced-order modeling. NVIDIA's CUDA platform and AMD's ROCm provide robust frameworks for implementing model reduction algorithms on GPUs, offering 10-100x speedups for suitable workloads compared to CPU implementations.

Field-Programmable Gate Arrays (FPGAs) present an alternative acceleration path, offering reconfigurable hardware that can be optimized specifically for model reduction operations. Although FPGA designs have traditionally required specialized hardware description languages such as VHDL or Verilog, FPGAs deliver exceptional energy efficiency and the deterministic performance crucial for embedded real-time applications. Vendors such as Xilinx (now part of AMD) and Intel provide development environments that increasingly support high-level synthesis, reducing implementation barriers.

Application-Specific Integrated Circuits (ASICs) represent the ultimate performance optimization, with custom silicon designed explicitly for model reduction algorithms. Though development costs are prohibitive for most applications, ASICs deliver unmatched performance and energy efficiency in high-volume deployment scenarios.

Tensor Processing Units (TPUs) and other AI accelerators, originally designed for machine learning workloads, show promising results for reduced-order modeling applications that leverage neural network approaches. Their specialized matrix multiplication units align well with many model reduction techniques.

Heterogeneous computing architectures that combine multiple acceleration technologies are gaining traction. Systems integrating CPUs with GPUs, FPGAs, or specialized accelerators allow algorithms to leverage the strengths of each platform. Modern frameworks like OpenCL and oneAPI facilitate development across these diverse architectures.

Memory architecture optimization remains crucial for real-time performance. Techniques such as shared memory utilization, memory coalescing, and strategic data placement significantly impact acceleration outcomes. Hardware solutions incorporating high-bandwidth memory (HBM) or on-chip memory hierarchies can dramatically reduce the memory bottlenecks common in model reduction applications.

The selection of an appropriate hardware acceleration strategy ultimately depends on application-specific requirements, including performance targets, power constraints, development resources, and deployment environment. Successful implementations typically require algorithm reformulation to exploit the parallel processing capabilities of modern accelerators while managing the inherent trade-offs between accuracy, speed, and resource utilization.
