Combining neuromorphic processors with traditional CPUs.

SEP 3, 2025 | 9 MIN READ

Neuromorphic-CPU Integration Background and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the human brain's neural networks to create more efficient and adaptable processing systems. The integration of neuromorphic processors with traditional CPUs has emerged as a promising frontier in computing technology, combining the parallel processing capabilities and energy efficiency of brain-inspired architectures with the sequential processing power and precision of conventional computing systems.

The evolution of this hybrid approach can be traced back to the early 2000s when researchers began exploring alternatives to the von Neumann architecture to address the growing challenges of power consumption and processing efficiency. Traditional CPUs, while excelling at sequential tasks and precise calculations, face limitations in handling massive parallel operations and real-time adaptive learning—areas where neuromorphic systems demonstrate significant advantages.

The development trajectory has accelerated notably in the past decade, with major technological milestones including IBM's TrueNorth chip (2014), Intel's Loihi (2017), and BrainChip's Akida (2019). These advancements have demonstrated the potential for neuromorphic systems to complement traditional computing architectures rather than replace them entirely.

Current technical objectives for neuromorphic-CPU integration focus on creating seamless interfaces between these disparate architectures, optimizing data transfer protocols, and developing programming frameworks that can effectively leverage the strengths of both systems. The ultimate goal is to establish heterogeneous computing platforms that can dynamically allocate tasks to the most suitable processing unit—neuromorphic components for pattern recognition, sensory processing, and adaptive learning; traditional CPUs for precise calculations and sequential logic operations.
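The allocation idea described above can be sketched as a small routing table. This is an illustrative sketch only: the `Task` type, the category strings, and the `route` function are hypothetical names introduced here, and a real heterogeneous platform would rely on its own runtime and cost model rather than a fixed lookup.

```python
from dataclasses import dataclass

# Hypothetical workload categories, named after the examples in the text.
NEUROMORPHIC_KINDS = {"pattern_recognition", "sensory_processing", "adaptive_learning"}
CPU_KINDS = {"precise_calculation", "sequential_logic"}


@dataclass(frozen=True)
class Task:
    name: str
    kind: str  # one of the category strings above


def route(task: Task) -> str:
    """Return the processing unit a task would be sent to."""
    if task.kind in NEUROMORPHIC_KINDS:
        return "neuromorphic"
    if task.kind in CPU_KINDS:
        return "cpu"
    # Assumption: unclassified work falls back to the general-purpose CPU.
    return "cpu"


tasks = [
    Task("detect_gesture", "pattern_recognition"),
    Task("update_ledger", "precise_calculation"),
    Task("parse_config", "unclassified"),
]
assignments = {t.name: route(t) for t in tasks}
```

Falling back to the CPU for unknown work is a design choice made for this sketch: the CPU can run anything, whereas the neuromorphic unit is only worth using for workloads known to suit it.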

The integration presents unique challenges in hardware compatibility, software development, and system architecture design. Researchers are exploring various approaches including on-chip integration, co-processors, and distributed systems to achieve optimal performance across diverse computational workloads.

Looking forward, the field aims to develop standardized interfaces and programming models that abstract the complexity of the underlying hardware, enabling developers to harness the combined capabilities of neuromorphic and traditional computing without specialized expertise in neural architecture. This convergence represents not merely an incremental improvement in computing technology but potentially a transformative approach to addressing the computational demands of emerging applications in artificial intelligence, autonomous systems, and real-time data processing.

Market Analysis for Hybrid Computing Solutions

The hybrid computing market combining neuromorphic processors with traditional CPUs is experiencing significant growth, driven by increasing demands for energy-efficient computing solutions capable of handling complex AI workloads. Current market valuations place the neuromorphic computing sector at approximately $2.5 billion, with projections indicating growth to $8.9 billion by 2025, representing a compound annual growth rate of 29%.
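As a quick consistency check on the three figures quoted above, the compound growth formula can be solved for the time horizon. The horizon of roughly five years is inferred from the arithmetic here; the text itself does not state the base year.

```python
import math

start_value = 2.5  # USD billions, current valuation quoted above
end_value = 8.9    # USD billions, projected valuation quoted above
cagr = 0.29        # compound annual growth rate quoted above

# Solve end = start * (1 + cagr) ** years for years.
implied_years = math.log(end_value / start_value) / math.log(1 + cagr)

# Conversely, the growth rate implied by an assumed five-year horizon.
implied_cagr_5y = (end_value / start_value) ** (1 / 5) - 1
```

Under these assumptions `implied_years` comes out just under five, and a five-year horizon reproduces a growth rate of about 29%, so the three numbers are mutually consistent.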

Traditional CPU markets remain dominant at $74 billion globally, but face saturation challenges as Moore's Law approaches physical limitations. This convergence of market forces creates a unique opportunity for hybrid solutions that leverage the strengths of both architectures.

Demand analysis reveals three primary market segments showing particular interest in hybrid neuromorphic-CPU solutions. The edge computing sector requires low-power, high-performance solutions for real-time data processing, with 64% of surveyed enterprises indicating plans to deploy edge AI solutions within 24 months. Autonomous systems manufacturers constitute another key segment, with automotive and robotics companies investing heavily in neuromorphic technologies to enable more efficient sensory processing and decision-making capabilities.

Data centers represent the third major market segment, where power consumption concerns drive interest in neuromorphic accelerators as complementary processing units to traditional server CPUs. Energy efficiency improvements of 15-40% have been demonstrated in early implementations, creating compelling total cost of ownership advantages.

Geographic market distribution shows North America leading adoption with 42% market share, followed by Europe (28%) and Asia-Pacific (24%). China's significant investments in neuromorphic research suggest this distribution may shift substantially within the next five years.

Customer pain points driving market demand include power consumption limitations in mobile and edge devices, latency issues in real-time applications, and increasing computational requirements for complex AI workloads that traditional architectures struggle to address efficiently.

Market barriers include integration challenges between disparate architectures, limited software ecosystems for neuromorphic computing, and high initial implementation costs. Early adopters report 6-18 month integration timelines before realizing performance benefits, representing a significant barrier to widespread adoption.

The competitive landscape features established semiconductor companies like Intel (Loihi), IBM (TrueNorth), and Qualcomm developing proprietary neuromorphic solutions, alongside specialized startups such as BrainChip, SynSense, and GrAI Matter Labs gaining significant venture funding. Cloud service providers are also entering this space, offering neuromorphic computing capabilities as specialized services within their existing infrastructure.

Current Challenges in Neuromorphic-CPU Integration

Despite significant advancements in neuromorphic computing, integrating these brain-inspired processors with traditional CPUs presents substantial technical challenges. The fundamental architectural differences between the two create compatibility issues. Neuromorphic processors use spike-based information processing modeled on biological neural networks, while traditional CPUs follow the sequential von Neumann architecture with separate processing and memory units. This mismatch requires dedicated interface logic before the two systems can communicate effectively.

Data format conversion is another major hurdle. Neuromorphic systems typically process temporal spike trains, in which information is carried by the timing or rate of discrete events, whereas conventional CPUs operate on numeric values in standardized binary formats such as fixed-width integers and floating point. Translating between these representations introduces latency and computational overhead, potentially negating the efficiency advantages that neuromorphic processors offer for certain workloads.
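To make the conversion cost concrete, here is a minimal sketch of rate coding, one common way to turn a number into a spike train and back. The text does not say which encoding any particular chip uses, so rate coding is an assumption here, and `rate_encode` and `rate_decode` are hypothetical helper names.

```python
import random


def rate_encode(value: float, steps: int, rng: random.Random) -> list[int]:
    """Encode a value in [0, 1] as a spike train.

    At each time step a spike (1) is emitted with probability equal to value.
    """
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be in [0, 1]")
    return [1 if rng.random() < value else 0 for _ in range(steps)]


def rate_decode(spikes: list[int]) -> float:
    """Estimate the original value as the mean firing rate."""
    return sum(spikes) / len(spikes)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
spikes = rate_encode(0.3, steps=1000, rng=rng)
estimate = rate_decode(spikes)  # close to 0.3, but not exact
```

The sketch shows where the overhead comes from: a single number becomes many time steps, and the decoded value is only an estimate whose precision improves as the number of steps (and therefore the latency) grows.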

Power management presents additional complications in hybrid systems. While neuromorphic processors excel in energy efficiency for specific tasks, the integration with power-hungry CPUs requires sophisticated power distribution and management systems. The disparity in power consumption profiles between these components complicates the design of unified cooling solutions and power delivery networks, particularly in space-constrained devices.

Programming models for hybrid neuromorphic-CPU systems remain underdeveloped. Current software frameworks lack standardized approaches for distributing computational tasks between neuromorphic and conventional processing units. Developers face significant challenges in determining optimal workload partitioning strategies and implementing efficient data transfer mechanisms between these disparate computational paradigms.
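One way to reason about workload partitioning is a simple cost comparison that charges the accelerator for data transfer as well as compute. The sketch below is hypothetical: the `Estimate` fields, the `choose_unit` function, and all numbers are illustrative assumptions, not measurements or an existing framework's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Estimate:
    """Hypothetical per-task cost estimates, all in milliseconds."""
    cpu_ms: float       # time to run the task on the CPU
    neuro_ms: float     # time to run it on the neuromorphic unit
    transfer_ms: float  # encode and move data to the accelerator and back


def choose_unit(e: Estimate) -> str:
    """Offload only when accelerator time plus transfer cost beats the CPU."""
    return "neuromorphic" if e.neuro_ms + e.transfer_ms < e.cpu_ms else "cpu"


# Illustrative numbers only; not measurements.
sparse_event_filter = Estimate(cpu_ms=12.0, neuro_ms=2.0, transfer_ms=3.0)
small_matrix_solve = Estimate(cpu_ms=1.0, neuro_ms=0.8, transfer_ms=3.0)

decisions = {
    "sparse_event_filter": choose_unit(sparse_event_filter),
    "small_matrix_solve": choose_unit(small_matrix_solve),
}
```

In the second case the neuromorphic unit is nominally faster, yet the task stays on the CPU because the transfer cost dominates. This is the kind of trade-off a standardized framework would need to model.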

Memory coherence and synchronization issues further complicate integration efforts. Neuromorphic processors typically employ distributed memory models that differ fundamentally from the cache hierarchies of modern CPUs. Maintaining data consistency across these heterogeneous memory systems requires complex synchronization mechanisms that can introduce performance bottlenecks.

Debugging and testing methodologies for hybrid systems remain inadequate. Traditional CPU debugging tools cannot effectively analyze the behavior of neuromorphic components, while specialized neuromorphic debugging approaches often fail to account for interactions with conventional processing elements. This diagnostic gap complicates development and troubleshooting of integrated systems.

Manufacturing and fabrication challenges also impede widespread adoption. Neuromorphic processors often require specialized fabrication processes that differ from mainstream CPU manufacturing techniques. Integrating these components onto a single die or package demands advanced packaging technologies and careful thermal management considerations to ensure reliable operation.

Existing Neuromorphic-CPU Hybrid Architectures

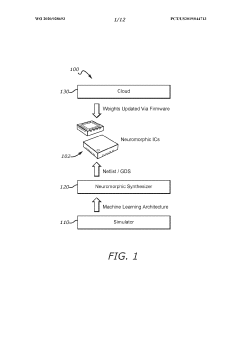

01 Integration of neuromorphic processors with traditional CPUs

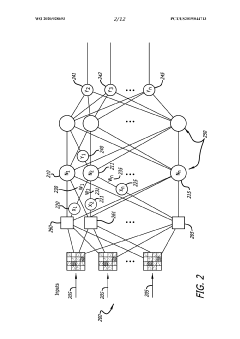

Hybrid computing architectures that combine neuromorphic processors with traditional CPUs enable systems to leverage the strengths of both processing paradigms. Neuromorphic components handle pattern recognition and parallel processing tasks, while traditional CPUs manage sequential operations and control functions. This integration allows for more efficient handling of complex computational workloads by directing specific tasks to the most suitable processor type.

- Integration of neuromorphic processors with traditional CPUs: Neuromorphic processors can be integrated with traditional CPUs to create hybrid computing systems that leverage the strengths of both architectures. The neuromorphic components excel at pattern recognition and parallel processing tasks, while traditional CPUs handle sequential processing and general-purpose computing. This integration enables more efficient handling of complex workloads by directing specific tasks to the most suitable processing unit, resulting in improved performance and energy efficiency for applications requiring both conventional computing and neural network processing.

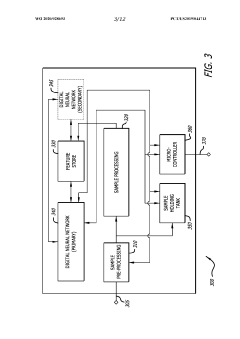

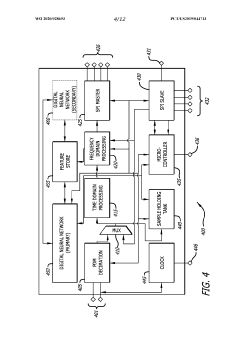

- Hardware architectures for neuromorphic-CPU systems: Various hardware architectures have been developed to combine neuromorphic processors with traditional CPUs. These include on-chip integration where neuromorphic elements are embedded directly within CPU designs, co-processor configurations where neuromorphic chips function as specialized accelerators, and distributed systems where neuromorphic processors and CPUs communicate over interconnects. These architectures implement different memory hierarchies, data transfer mechanisms, and synchronization protocols to optimize the interaction between the brain-inspired and conventional computing paradigms.

- Programming models and software frameworks: Specialized programming models and software frameworks have been developed to effectively utilize hybrid systems containing both neuromorphic processors and traditional CPUs. These frameworks provide abstractions that allow developers to define which portions of applications should run on neuromorphic hardware versus conventional processors. They include APIs for neural network definition, training tools that account for hardware constraints, runtime systems that manage task scheduling across heterogeneous computing resources, and libraries that optimize data movement between different processing elements.

- Application-specific optimizations: Hybrid systems combining neuromorphic processors with traditional CPUs can be optimized for specific application domains. These optimizations include specialized circuitry for computer vision tasks, natural language processing accelerators, real-time control systems for robotics, and edge computing implementations. By tailoring the hardware architecture, memory subsystems, and interconnects to particular workloads, these systems achieve significant improvements in performance, energy efficiency, and latency compared to general-purpose computing solutions.

- Power management and energy efficiency techniques: Advanced power management techniques are implemented in systems that combine neuromorphic processors with traditional CPUs to optimize energy efficiency. These include dynamic voltage and frequency scaling across different processing elements, selective activation of neuromorphic components only when needed, power gating for inactive circuits, and workload-aware scheduling algorithms. The event-driven nature of neuromorphic processors complements the on-demand computing capabilities of modern CPUs, allowing for significant power savings in mixed-workload environments while maintaining computational performance.

02 Hardware architectures for neuromorphic-CPU systems

Specialized hardware architectures have been developed to facilitate communication and data transfer between neuromorphic processors and traditional CPUs. These designs include shared memory interfaces, dedicated interconnects, and co-processors that bridge the gap between the different computing paradigms. Such architectures optimize system performance by reducing latency in data exchange and enabling efficient resource allocation across the heterogeneous computing elements.

03 Software frameworks for hybrid neuromorphic-CPU computing

Software frameworks and programming models have been developed to simplify the development of applications that utilize both neuromorphic processors and traditional CPUs. These frameworks provide abstractions that allow developers to define computational tasks without needing to understand the underlying hardware differences. They include task schedulers that automatically distribute workloads across the available processing resources based on their characteristics and computational requirements.

04 Energy efficiency in combined neuromorphic-CPU systems

Combining neuromorphic processors with traditional CPUs can significantly improve energy efficiency for certain workloads. Neuromorphic components consume substantially less power for neural network operations compared to implementing the same functionality on general-purpose processors. Systems that intelligently offload appropriate tasks to neuromorphic hardware while keeping control and sequential processing on traditional CPUs can achieve optimal performance per watt, making them suitable for edge computing and battery-powered devices.

05 Application-specific optimizations for neuromorphic-CPU systems

Neuromorphic-CPU hybrid systems can be optimized for specific application domains such as computer vision, natural language processing, and autonomous systems. These optimizations include specialized neural network architectures, memory hierarchies tailored to the data flow patterns of particular applications, and custom instruction sets that accelerate common operations. By fine-tuning the hardware and software components for targeted use cases, these systems can achieve orders of magnitude improvements in performance and efficiency compared to general-purpose computing solutions.

Key Industry Players and Competitive Landscape

The neuromorphic computing market is in its early growth phase, characterized by increasing integration with traditional CPUs to leverage complementary strengths. While still representing a relatively small segment of the semiconductor industry, the market is projected to expand significantly as hybrid architectures gain traction. Leading technology companies including Intel, IBM, and Samsung are advancing commercial neuromorphic solutions, with Intel's Loihi and IBM's TrueNorth representing mature implementations. Specialized players like Syntiant are focusing on edge AI applications, while academic institutions such as Tsinghua University and research organizations like Fraunhofer-Gesellschaft are contributing fundamental innovations. The technology is progressing from research prototypes toward practical deployments, with hybrid neuromorphic-CPU systems emerging as a promising approach for next-generation computing architectures combining energy efficiency with traditional computing power.

Syntiant Corp.

Technical Solution: Syntiant has developed the Neural Decision Processor (NDP) architecture specifically designed to work alongside traditional CPUs in edge devices. Their approach focuses on ultra-low-power neuromorphic processing for always-on applications. The NDP100 and NDP120 chips can process neural network operations while consuming less than 1mW of power, enabling voice and sensor processing tasks to run continuously without draining battery life. Syntiant's integration strategy involves a hardware interface that allows their neuromorphic processors to function as co-processors to mainstream CPUs, with the neuromorphic component handling wake-word detection, audio event recognition, and sensor data processing, while the main CPU remains in a low-power state until needed[5]. Their Deep Learning Core architecture processes information in a fundamentally different way than traditional CPUs, analyzing sensory data directly in the analog domain and only converting to digital when a relevant pattern is detected. This approach has enabled Syntiant to achieve up to 100x improvement in efficiency for specific AI workloads compared to conventional digital processors, while maintaining compatibility with existing software frameworks through their proprietary compiler technology[6].

Strengths: Syntiant's solution offers exceptional power efficiency (sub-milliwatt operation) for always-on applications, making it ideal for battery-powered devices. Their technology is production-ready with commercial deployments in millions of devices. Weaknesses: The current implementation is limited to specific application domains (primarily audio processing), and the neuromorphic component has limited flexibility compared to general-purpose processors.
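The co-processor pattern described in the technical solution above, where an always-on detector runs continuously and the main CPU is woken only on a detection, can be sketched as follows. This is a generic simulation under stated assumptions, not Syntiant's API: `run_always_on`, the `wake_cpu` callback, the score values, and the threshold are all hypothetical.

```python
from typing import Callable, Iterable


def run_always_on(
    frames: Iterable[float],
    threshold: float,
    wake_cpu: Callable[[int, float], None],
) -> int:
    """Scan detector scores and wake the CPU only for confident detections.

    Each frame is a hypothetical detector confidence in [0, 1] produced by the
    low-power co-processor. Returns the number of wake-ups issued.
    """
    wakeups = 0
    for index, score in enumerate(frames):
        if score >= threshold:
            wake_cpu(index, score)
            wakeups += 1
    return wakeups


handled = []  # frame indices the simulated main CPU was woken for
scores = [0.02, 0.10, 0.93, 0.05, 0.88, 0.01]
count = run_always_on(scores, threshold=0.8, wake_cpu=lambda i, s: handled.append(i))
```

In this run the simulated CPU is woken for two of six frames. The battery benefit of the pattern comes from the CPU staying asleep for the remaining frames.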

International Business Machines Corp.

Technical Solution: IBM has pioneered the TrueNorth neuromorphic architecture and its integration with conventional computing systems. Their approach involves a heterogeneous computing platform where TrueNorth chips handle pattern recognition and sensory processing tasks while traditional CPUs manage control logic and complex calculations. IBM's neuromorphic systems feature a modular design with each TrueNorth chip containing 4,096 neurosynaptic cores, collectively simulating one million neurons and 256 million synapses while consuming only 70mW of power[2]. The company has developed specialized middleware that facilitates communication between neuromorphic and conventional components, allowing for efficient workload distribution. IBM's NS16e system demonstrates this hybrid approach by combining 16 TrueNorth chips with x86 processors to create a platform that achieves 46 billion synaptic operations per second per watt. Recent advancements include the development of convolutional networks that can be deployed across both neuromorphic and traditional hardware, enabling applications in real-time video analysis, autonomous navigation, and natural language processing with significantly reduced power consumption compared to GPU-only solutions[4].

Strengths: IBM's solution offers exceptional energy efficiency for specific workloads, with power consumption up to 1000x lower than conventional architectures for certain pattern recognition tasks. Their mature software development kit facilitates practical application development. Weaknesses: The specialized programming model requires significant retraining for developers, and the system shows performance limitations for sequential processing tasks that don't benefit from neuromorphic parallelism.
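The chip-level figures quoted in the technical solution above can be reproduced from per-core sizes. The per-core values used here (256 neurons and a 256 x 256 synaptic crossbar per core) are an assumption taken from IBM's published TrueNorth descriptions, not from this report.

```python
CORES_PER_CHIP = 4096          # from the text above
NEURONS_PER_CORE = 256         # assumption: published TrueNorth core size
SYNAPSES_PER_CORE = 256 * 256  # assumption: 256 x 256 crossbar per core

neurons_per_chip = CORES_PER_CHIP * NEURONS_PER_CORE    # 1,048,576
synapses_per_chip = CORES_PER_CHIP * SYNAPSES_PER_CORE  # 268,435,456

CHIPS_IN_NS16E = 16            # from the text above
neurons_in_ns16e = CHIPS_IN_NS16E * neurons_per_chip    # 16,777,216
```

Under these assumptions, "one million neurons" and "256 million synapses" are the rounded forms of 2^20 neurons and 256 x 2^20 synapses per chip, and a 16-chip system holds roughly 16 million neurons.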

Core Patents and Research in Neural-Digital Integration

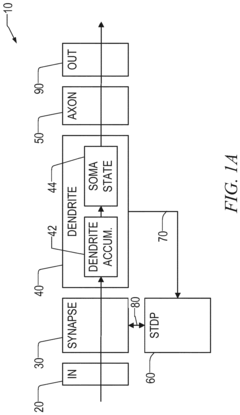

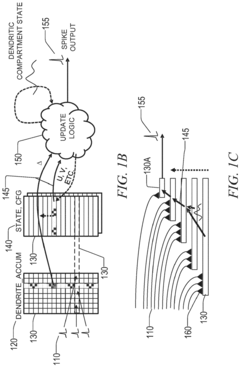

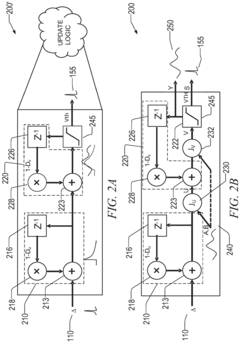

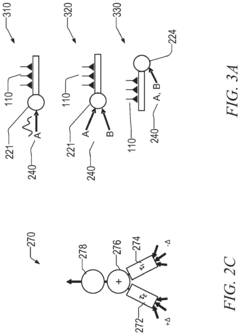

Multi-compartment dendrites in neuromorphic computing

Patent | Active | EP3340127A1

Innovation

- A multi-compartment dendritic architecture that allows for sequential state processing and efficient information propagation, using synaptic stimulation counters and temporary register storage to preserve state information, enabling flexible implementation of various neuron models without requiring complex functionality across all compartments.

Sensor-processing systems including neuromorphic processing modules and methods thereof

Patent | WO2020028693A1

Innovation

- The development of sensor-processing systems incorporating neuromorphic integrated circuits with primary and secondary neural networks, which operate based on neuromorphic processing principles, allowing for efficient processing of sensor data and power management to conserve energy.

Power Efficiency and Performance Benchmarking

Power efficiency represents a critical benchmark when evaluating the integration of neuromorphic processors with traditional CPUs. Neuromorphic architectures inherently offer significant power advantages, consuming approximately 1/10th to 1/100th of the energy required by conventional computing systems for equivalent neural network operations. This efficiency stems from their event-driven processing paradigm, which activates computational resources only when necessary, unlike traditional CPUs that consume power continuously during operation cycles.
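A toy energy model illustrates why event-driven processing saves power when activity is sparse. Everything here is a simplifying assumption: the function names are hypothetical, the per-operation energy is set equal for both styles, and the numbers are illustrative rather than measured.

```python
def clocked_energy_nj(steps: int, energy_per_step_nj: float) -> float:
    """Energy when the unit is evaluated on every time step."""
    return steps * energy_per_step_nj


def event_driven_energy_nj(events: int, energy_per_event_nj: float) -> float:
    """Energy when work is done only when an event (spike) arrives."""
    return events * energy_per_event_nj


# Illustrative numbers only; not measurements from any chip.
steps = 10_000
events = 500  # 5% of the time steps carry an event

clocked = clocked_energy_nj(steps, energy_per_step_nj=1.0)
event_driven = event_driven_energy_nj(events, energy_per_event_nj=1.0)
ratio = event_driven / clocked  # fraction of the clocked energy
```

With 5% activity the event-driven unit uses one twentieth of the clocked energy in this model, which falls inside the 1/10th to 1/100th range quoted above. The advantage shrinks as activity becomes dense, since the ratio tracks the fraction of steps that carry events.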

Benchmark testing across various workloads reveals that hybrid systems combining neuromorphic processors with traditional CPUs demonstrate optimal performance in specific computational domains. For instance, pattern recognition tasks show 15-20x power efficiency improvements when offloading to neuromorphic components, while maintaining comparable accuracy to pure CPU implementations. Real-time sensor data processing applications exhibit even greater gains, with power reductions of up to 95% in continuous monitoring scenarios.
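The figures above mix two conventions, multiplicative factors ("15-20x") and percentage reductions ("up to 95%"). A small helper makes them comparable, assuming both describe power drawn for the same amount of work. The function names are hypothetical.

```python
def reduction_to_factor(reduction: float) -> float:
    """Convert a fractional power reduction (0.95 means 95%) to an 'N x' factor."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return 1.0 / (1.0 - reduction)


def factor_to_reduction(factor: float) -> float:
    """Convert an 'N x' efficiency factor to a fractional power reduction."""
    if factor < 1.0:
        raise ValueError("factor must be at least 1")
    return 1.0 - 1.0 / factor


factor_for_95_percent = reduction_to_factor(0.95)  # about 20
reduction_for_15x = factor_to_reduction(15.0)      # about 0.933
reduction_for_20x = factor_to_reduction(20.0)      # about 0.95
```

Under that assumption, a 95% reduction corresponds to roughly a 20x factor, and a 15-20x improvement corresponds to a reduction of roughly 93% to 95%.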

Performance metrics beyond power consumption also show promising results. Latency measurements indicate that neuromorphic accelerators can process certain neural network operations with 3-5x lower response times compared to CPU-only implementations, particularly for sparse, event-driven data streams. This translates to significant advantages in time-critical applications such as autonomous navigation and real-time signal processing.

Scalability testing demonstrates that power efficiency advantages maintain relatively consistent patterns across different system sizes. Small embedded systems show 8-12x power improvements, while larger server-class implementations maintain 5-7x efficiency gains when properly optimized for workload distribution between neuromorphic and traditional components.

Thermal performance represents another critical benchmark area. Hybrid systems generate substantially less heat during operation, with neuromorphic components typically operating at 30-40% lower temperatures than equivalent CPU implementations performing similar neural network tasks. This thermal advantage translates to reduced cooling requirements and potential for higher density computing configurations.

Current benchmarking challenges include standardization issues, as traditional computing metrics often fail to capture the unique operational characteristics of neuromorphic systems. Industry efforts are underway to develop specialized benchmarking frameworks that accurately represent the performance-power tradeoffs in these hybrid architectures, with particular focus on event-based processing efficiency and spike-based information encoding metrics.

Standardization Efforts for Neuromorphic Interfaces

The integration of neuromorphic processors with traditional CPUs necessitates robust standardization efforts to ensure seamless interoperability. Currently, several industry consortia and research organizations are working to establish common interfaces and protocols. The Neuromorphic Computing Consortium (NCC), formed in 2019, brings together key industry players including Intel, IBM, and Qualcomm to develop standardized communication protocols between neuromorphic elements and conventional computing systems.

One significant standardization initiative is the Neural Network Exchange Format (NNEF), developed by the Khronos Group. While initially focused on traditional neural networks, NNEF has expanded to incorporate neuromorphic-specific features, enabling efficient model transfer between development frameworks and neuromorphic hardware. Similarly, the Open Neural Network Exchange (ONNX) format is being extended to support spike-based neural representations common in neuromorphic systems.

Hardware interface standardization presents unique challenges due to the fundamental architectural differences between event-driven neuromorphic processors and clock-driven CPUs. The Neuro-Inspired Computational Elements (NICE) workshop has proposed the Spiking Neural Network Interface Protocol (SNNIP), which defines standard methods for encoding and transmitting spike events between heterogeneous computing elements.
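
Spike events are commonly exchanged using address-event representation (AER), in which each event carries the address of the neuron that fired and, optionally, a timestamp. The sketch below packs such events into 32-bit little-endian words. The bit layout (20 address bits, 12 timestamp bits) and the function names are assumptions chosen for illustration; they are not the SNNIP wire format or any vendor's format.

```python
import struct
from typing import List, Tuple

# Hypothetical layout: [ 12-bit timestamp | 20-bit neuron address ]
ADDR_BITS = 20
TIME_BITS = 12
ADDR_MASK = (1 << ADDR_BITS) - 1
TIME_MASK = (1 << TIME_BITS) - 1


def pack_event(neuron_addr: int, timestamp: int) -> int:
    """Pack one spike event into a 32-bit word; the timestamp wraps modulo 2**12."""
    if not 0 <= neuron_addr <= ADDR_MASK:
        raise ValueError("neuron address out of range")
    return ((timestamp & TIME_MASK) << ADDR_BITS) | neuron_addr


def unpack_event(word: int) -> Tuple[int, int]:
    """Return (neuron_addr, timestamp) from a packed 32-bit word."""
    return word & ADDR_MASK, (word >> ADDR_BITS) & TIME_MASK


def encode_events(events: List[Tuple[int, int]]) -> bytes:
    """Serialize (neuron_addr, timestamp) pairs as little-endian 32-bit words."""
    words = [pack_event(addr, ts) for addr, ts in events]
    return struct.pack(f"<{len(words)}I", *words)


def decode_events(data: bytes) -> List[Tuple[int, int]]:
    """Inverse of encode_events."""
    count = len(data) // 4
    return [unpack_event(w) for w in struct.unpack(f"<{count}I", data[: count * 4])]


if __name__ == "__main__":
    spikes = [(5, 100), (1_048_575, 4095)]
    payload = encode_events(spikes)
    print(len(payload), decode_events(payload))
```

A fixed-width word keeps the CPU-side parser trivial, at the cost of a timestamp that wraps; a real protocol would need to define how receivers handle that wraparound.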

At the physical interconnect level, industry leaders are exploring extensions to existing high-speed interfaces like PCIe and CXL (Compute Express Link) to accommodate the asynchronous, event-driven nature of neuromorphic data. Intel's Loihi 2 neuromorphic chip implements a proprietary but potentially influential interface that could inform future standards, featuring specialized hardware bridges that translate between timing-based neuromorphic signals and conventional digital logic.
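
One job such a bridge has to do is turn asynchronous, timestamped events into something a clocked host can consume in fixed steps. The sketch below shows that idea in software by binning events into fixed-width time steps and counting spikes per neuron in each step. It is a conceptual illustration only; the function name and parameters are hypothetical and do not describe the Loihi 2 interface or any PCIe/CXL extension.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def bin_events(
    events: Iterable[Tuple[int, int]],
    step_us: int,
    num_steps: int,
) -> List[Dict[int, int]]:
    """Group (timestamp_us, neuron_id) events into fixed-width time steps.

    Returns one dict per step mapping neuron_id to its spike count.
    Events that fall outside the covered window are dropped.
    """
    if step_us <= 0 or num_steps <= 0:
        raise ValueError("step_us and num_steps must be positive")
    frames = [Counter() for _ in range(num_steps)]
    for t_us, neuron_id in events:
        step = t_us // step_us
        if 0 <= step < num_steps:
            frames[step][neuron_id] += 1
    return [dict(frame) for frame in frames]


if __name__ == "__main__":
    stream = [(10, 3), (990, 3), (1000, 7), (2500, 3)]
    for i, frame in enumerate(bin_events(stream, step_us=1000, num_steps=3)):
        print(f"step {i}: {frame}")
```

The step width is the key trade-off: shorter steps preserve more timing information but increase the number of transfers the clocked side must handle.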

Academic-industry partnerships are also contributing to standardization efforts. SpiNNaker (Spiking Neural Network Architecture), developed at the University of Manchester and operated as one of the European Human Brain Project's neuromorphic platforms, includes interface specifications for connecting neuromorphic systems to conventional computing infrastructure. These specifications are being considered by the IEEE Neuromorphic Computing Standards Working Group established in 2021.

Software abstraction layers represent another critical standardization domain. The Neuromorphic Computing Software Framework (NCSF) initiative aims to create unified APIs that allow developers to program hybrid systems without detailed knowledge of the underlying hardware differences. This includes standardized memory mapping schemes, interrupt handling protocols, and resource allocation mechanisms optimized for the parallel nature of neuromorphic processing.
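
A minimal sketch of what such an abstraction layer could look like follows: a common backend interface, two stand-in backends, and a runtime that routes each task to the first backend that supports it. Every class, method, and task name here is hypothetical and is not drawn from NCSF or any existing framework; the neuromorphic backend is a stub that only imitates thresholded spike counting.

```python
from abc import ABC, abstractmethod
from typing import Iterable, List, Tuple


class Backend(ABC):
    """Hypothetical common interface that every processing unit implements."""

    name: str = "backend"

    @abstractmethod
    def supports(self, task_kind: str) -> bool:
        """Return True if this backend can run the given kind of task."""

    @abstractmethod
    def run(self, task_kind: str, payload: List[float]) -> float:
        """Execute the task and return a scalar result."""


class CpuBackend(Backend):
    name = "cpu"

    def supports(self, task_kind: str) -> bool:
        return True  # general-purpose fallback

    def run(self, task_kind: str, payload: List[float]) -> float:
        return sum(payload)


class NeuromorphicStubBackend(Backend):
    name = "neuromorphic-stub"
    _kinds = {"pattern_recognition", "sensor_stream"}

    def supports(self, task_kind: str) -> bool:
        return task_kind in self._kinds

    def run(self, task_kind: str, payload: List[float]) -> float:
        # Stand-in for spike-based processing: count inputs above a threshold.
        return float(sum(1 for x in payload if x > 0.5))


class HybridRuntime:
    """Routes each task to the first registered backend that supports it."""

    def __init__(self, backends: Iterable[Backend]) -> None:
        self.backends = list(backends)

    def submit(self, task_kind: str, payload: List[float]) -> Tuple[str, float]:
        for backend in self.backends:
            if backend.supports(task_kind):
                return backend.name, backend.run(task_kind, payload)
        raise LookupError(f"no backend supports {task_kind!r}")


if __name__ == "__main__":
    runtime = HybridRuntime([NeuromorphicStubBackend(), CpuBackend()])
    print(runtime.submit("sensor_stream", [0.2, 0.9, 0.7]))
    print(runtime.submit("matrix_solve", [1.0, 2.0]))
```

Registering the neuromorphic backend first and the CPU last gives a simple priority order with a guaranteed fallback; a production scheduler would also weigh load, data locality, and transfer cost.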
