Design of Leaky Integrate-and-Fire (LIF) Neuron Models in CMOS

SEP 2, 2025

LIF Neuron Models Background and Objectives

Leaky Integrate-and-Fire (LIF) neuron models represent a fundamental building block in neuromorphic computing, offering a simplified yet effective representation of biological neuron behavior. The evolution of these models dates back to the early 20th century when Louis Lapicque first proposed the integrate-and-fire concept in 1907. Since then, LIF models have undergone significant refinements to better approximate biological neural dynamics while maintaining computational efficiency.
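For reference, LIF dynamics are conventionally written as below. The symbols are introduced here for illustration: $C_m$ is the membrane capacitance, $g_L$ the leak conductance, $E_L$ the resting potential, $I_{\mathrm{in}}$ the input current, $V_{\mathrm{th}}$ the firing threshold, and $V_{\mathrm{reset}}$ the reset potential.

```latex
C_m \frac{dV(t)}{dt} = -g_L \bigl(V(t) - E_L\bigr) + I_{\mathrm{in}}(t),
\qquad \tau_m = \frac{C_m}{g_L}

V(t) \ge V_{\mathrm{th}} \;\Rightarrow\; \text{emit a spike and set } V \leftarrow V_{\mathrm{reset}}
```

In a CMOS circuit, $C_m$ is typically an on-chip capacitor, the leak term is a transistor-based current path, and the threshold test is a comparator.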

The technological trajectory of LIF neurons has been closely tied to advances in semiconductor technology. Early hardware implementations in the 1980s and 1990s were limited by available CMOS technology, resulting in large circuit footprints and high power consumption. The past two decades have witnessed remarkable progress in miniaturization and energy efficiency, driven by scaling trends in CMOS technology nodes from 180nm down to current 5nm processes.

Recent developments have focused on enhancing the biological plausibility of LIF models while maintaining their implementation feasibility in CMOS technology. Key evolutionary trends include the incorporation of adaptive thresholds, synaptic plasticity mechanisms, and stochastic behavior to better mimic biological neural networks. These advancements have significantly improved the computational capabilities of neuromorphic systems based on LIF neurons.

The primary technical objectives for LIF neuron design in CMOS technology center around four critical parameters: energy efficiency, silicon area, biological fidelity, and operational reliability. Modern neuromorphic systems require neurons that consume sub-picojoule energy per spike while occupying minimal silicon area to enable large-scale integration. Simultaneously, these neurons must faithfully reproduce key biological behaviors such as spike-frequency adaptation, refractory periods, and various forms of plasticity.

Another crucial objective is achieving robust operation across manufacturing variations and environmental conditions. As CMOS technology scales down, process variations become increasingly significant, necessitating design techniques that ensure consistent neural behavior despite fabrication inconsistencies. Temperature compensation mechanisms are also essential for maintaining stable operation across different environmental conditions.

Looking forward, the field is moving toward multi-compartment LIF models that better capture the spatial dynamics of biological neurons. These advanced models aim to implement dendritic computation capabilities, which are thought to contribute to certain cognitive functions. Additionally, there is growing interest in LIF neurons that operate at ultra-low supply voltages, with transistors biased in the subthreshold region, to further reduce power consumption. This is a critical requirement for edge AI applications and implantable neural interfaces.

The convergence of traditional CMOS design with emerging technologies such as memristive devices presents exciting opportunities for hybrid LIF implementations that could overcome current limitations in synaptic density and learning capabilities. These technological objectives align with the broader goal of creating more efficient, scalable, and biologically plausible neuromorphic computing systems.

Market Applications for CMOS-based Neuromorphic Hardware

The neuromorphic computing market is experiencing significant growth, driven by the increasing demand for AI applications that require energy-efficient processing capabilities. CMOS-based neuromorphic hardware implementing LIF neuron models is finding applications across diverse sectors due to its ability to mimic brain-like processing while maintaining compatibility with existing semiconductor manufacturing infrastructure.

In the healthcare sector, neuromorphic chips are being applied to medical imaging analysis. They enable real-time processing of complex diagnostic data with significantly lower power consumption than traditional computing architectures. These systems are particularly valuable for portable medical devices and implantable neural interfaces, where energy efficiency is paramount.

Autonomous vehicles represent another substantial market opportunity, with neuromorphic processors handling sensor fusion tasks and real-time decision-making processes. The event-driven nature of LIF neurons allows for efficient processing of visual data streams from multiple cameras and sensors, reducing latency in critical safety applications while consuming less power than conventional GPU-based solutions.

Edge computing applications are rapidly adopting neuromorphic hardware to enable AI capabilities in resource-constrained environments. Smart home devices, wearable technology, and IoT sensors benefit from the ability to perform complex pattern recognition tasks locally without constant cloud connectivity, enhancing privacy and reducing bandwidth requirements.

Industrial automation systems are leveraging neuromorphic processors for anomaly detection and predictive maintenance applications. The temporal processing capabilities of LIF neuron implementations allow for effective analysis of time-series data from manufacturing equipment, identifying potential failures before they occur and optimizing production processes.

The telecommunications industry is exploring neuromorphic computing for network optimization and security applications. These systems can analyze network traffic patterns in real-time, identifying potential security threats or performance bottlenecks with greater efficiency than traditional computing approaches.

Scientific research instruments represent a specialized but growing market segment, with neuromorphic hardware enabling real-time data processing for applications such as particle physics experiments, genomic sequencing, and climate modeling. The parallel processing capabilities of neuromorphic systems allow researchers to analyze complex datasets more efficiently.

Military and defense applications are driving significant investment in neuromorphic computing, particularly for autonomous drones, signal intelligence, and battlefield awareness systems. The low power consumption and high processing efficiency make these systems ideal for deployment in remote or hostile environments where energy resources are limited.

Current Challenges in CMOS LIF Neuron Implementation

The implementation of Leaky Integrate-and-Fire (LIF) neuron models in CMOS technology faces several significant challenges that limit their widespread adoption in neuromorphic computing systems. One of the primary obstacles is power consumption, as traditional CMOS implementations of LIF neurons typically require substantial energy, particularly when operating at biologically relevant time scales. This energy inefficiency becomes especially problematic in large-scale neural networks where thousands or millions of neurons must operate simultaneously.

Scaling presents another formidable challenge. As neuromorphic systems grow in complexity, the silicon area required for each neuron becomes a critical constraint. Current CMOS LIF designs often occupy considerable chip area, limiting the neuron density achievable in practical implementations. This spatial inefficiency directly impacts the scalability of neuromorphic systems and their ability to model complex neural networks.

Parameter variability in CMOS manufacturing processes introduces significant complications for LIF neuron implementation. Process variations can cause inconsistent behavior across supposedly identical neurons on the same chip, resulting in unpredictable network dynamics. While biological neural systems demonstrate remarkable robustness to such variations, engineered systems struggle to maintain consistent performance under similar conditions.

The accurate modeling of biological time constants represents another substantial challenge. Biological neurons operate on millisecond timescales, which are orders of magnitude slower than typical CMOS circuit operations. Designers must employ specialized techniques such as subthreshold operation or capacitive time-constant multiplication to bridge this temporal gap, often at the cost of increased area or reduced precision.
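A quick sizing calculation shows why biological time constants are hard to reach on chip. With a linear leak, $\tau_m = C_m / g_L = RC$. For a subthreshold leak in the style of a differential-pair integrator (DPI) neuron, the time constant is often approximated as $\tau = C U_T / (\kappa I_\tau)$. The sketch below assumes example values for the capacitances, the slope factor κ = 0.7, and a 20 ms target; none of these numbers come from this article.

```python
# Illustrative sizing sketch: what leak is needed for a biological time constant?
# All numeric values are assumptions chosen for illustration.

U_T = 0.0259   # thermal voltage kT/q at roughly 300 K, in volts
KAPPA = 0.7    # assumed subthreshold slope factor (process dependent)


def required_leak_resistance(tau_s: float, c_farads: float) -> float:
    """Linear RC leak: tau = R * C, so R = tau / C (ohms)."""
    return tau_s / c_farads


def required_dpi_bias_current(tau_s: float, c_farads: float) -> float:
    """DPI-style subthreshold leak: tau = C * U_T / (kappa * I_tau), solved for I_tau (amps)."""
    return c_farads * U_T / (KAPPA * tau_s)


if __name__ == "__main__":
    tau = 20e-3  # 20 ms, a typical biological membrane time constant
    for c in (100e-15, 1e-12):  # 100 fF and 1 pF on-chip capacitors
        r = required_leak_resistance(tau, c)
        i_tau = required_dpi_bias_current(tau, c)
        print(f"C = {c * 1e15:6.0f} fF -> R = {r / 1e9:7.1f} GOhm, I_tau = {i_tau * 1e15:6.1f} fA")
```

Either route leads to tens or hundreds of gigaohms, or bias currents in the femtoamp-to-picoamp range. This is why designers turn to subthreshold transistors or time-constant multiplication rather than on-chip resistors.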

Noise management remains a persistent issue in CMOS LIF implementations. Thermal noise, flicker noise, and other noise sources can significantly impact the behavior of analog circuits operating at low current levels, which are often necessary for energy-efficient operation. While biological neurons leverage noise constructively, engineered systems must carefully manage noise to maintain computational integrity.
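One noise floor that scales directly with neuron size is the thermal (kT/C) noise on the membrane capacitor, whose RMS voltage is $\sqrt{kT/C}$. The sketch below evaluates it for a few capacitor sizes. The 300 K temperature and the capacitances are assumed example values.

```python
import math

BOLTZMANN = 1.380649e-23  # J/K (exact SI value)


def ktc_noise_vrms(c_farads: float, temp_k: float = 300.0) -> float:
    """RMS thermal noise voltage on a capacitor: sqrt(kT/C)."""
    return math.sqrt(BOLTZMANN * temp_k / c_farads)


if __name__ == "__main__":
    for c in (10e-15, 100e-15, 1e-12):
        print(f"C = {c * 1e15:6.0f} fF -> kT/C noise = {ktc_noise_vrms(c) * 1e6:6.1f} uV rms")
```

Shrinking the capacitor by 100x raises the RMS noise by 10x. This is one reason why area reduction and noise margins pull against each other in low-current designs.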

The trade-off between biological fidelity and implementation efficiency creates a fundamental tension in CMOS LIF design. More biologically accurate models typically require more complex circuitry, consuming greater power and silicon area. Conversely, simplified models may achieve better hardware efficiency but potentially sacrifice computational capabilities that emerge from more detailed biological mechanisms.

Integration with digital processing systems presents additional challenges, particularly regarding the interface between the analog domain of neuron operation and the digital domain of conventional computing. Efficient analog-to-digital and digital-to-analog conversion becomes critical for hybrid neuromorphic systems, adding complexity to the overall design.

Current CMOS LIF Neuron Implementation Approaches

01 CMOS Implementation of LIF Neuron Models

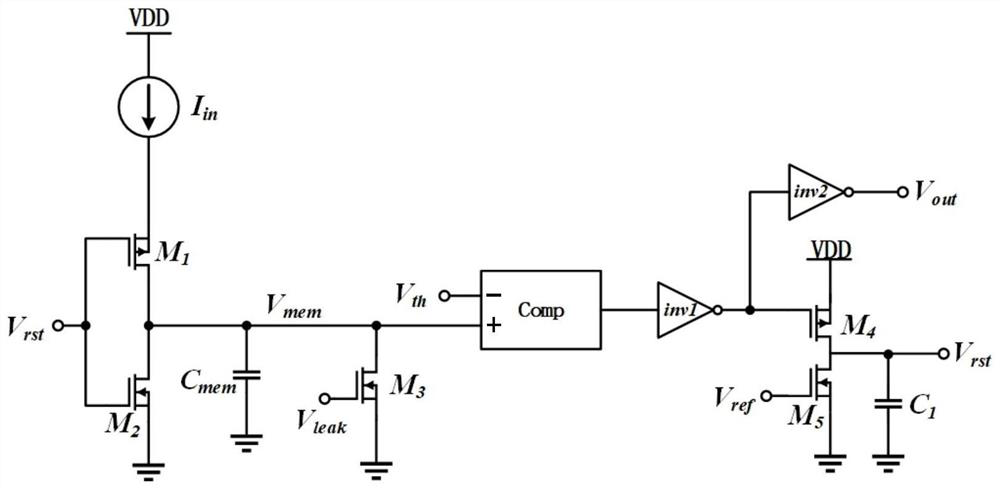

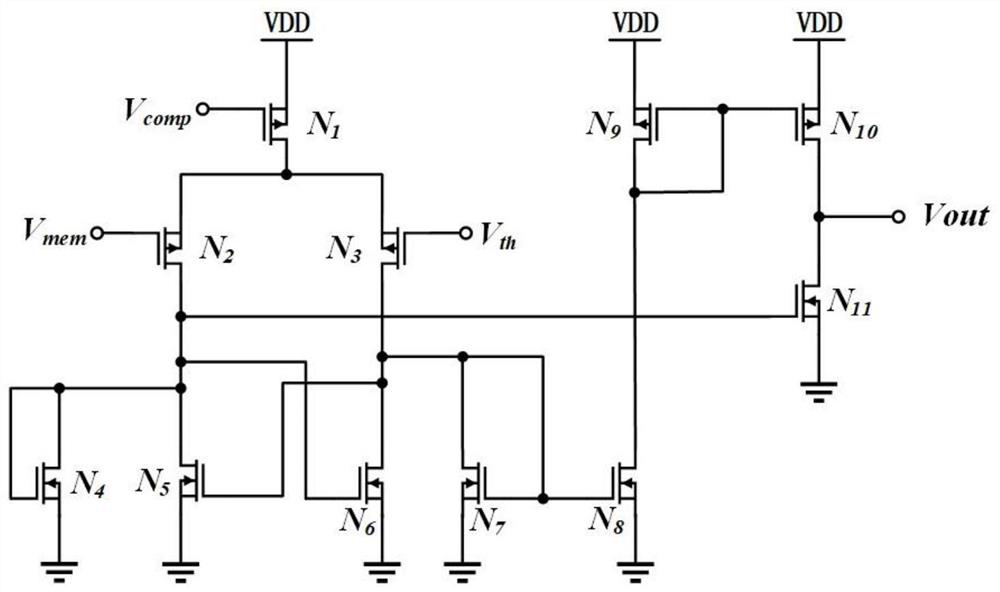

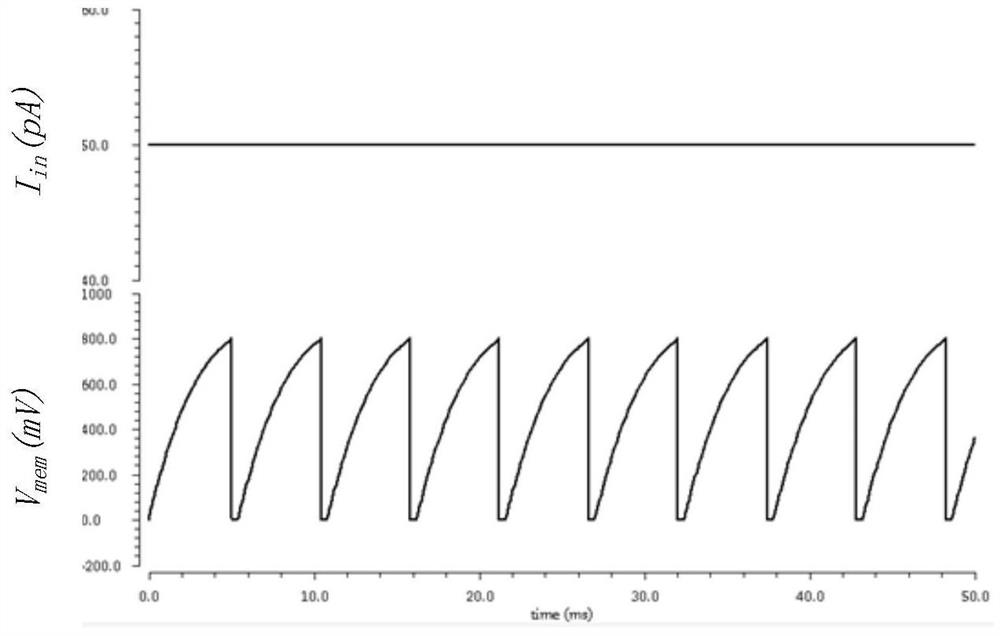

CMOS technology is used to implement Leaky Integrate-and-Fire neuron models in hardware. These implementations typically include circuits for membrane potential integration, leakage mechanisms, and threshold-based firing. CMOS-based LIF neurons can efficiently mimic biological neuron behavior while consuming low power, making them suitable for neuromorphic computing applications.

- CMOS Implementation of LIF Neuron Models: Various CMOS circuit designs have been developed to implement Leaky Integrate-and-Fire neuron models in hardware. These implementations typically include capacitors for membrane potential integration, leakage circuits to model the decay of membrane potential, and comparator circuits for threshold detection. The CMOS-based LIF neurons can efficiently mimic the biological neuron's behavior while maintaining low power consumption and compact design, making them suitable for large-scale neuromorphic systems.

- Energy-Efficient LIF Neuron Designs: Energy efficiency is a critical aspect of CMOS-based LIF neuron implementations. Various techniques have been developed to reduce power consumption, including subthreshold operation, dynamic threshold adjustment, and event-driven processing. These energy-efficient designs enable the implementation of large-scale neuromorphic systems with thousands or millions of neurons while maintaining reasonable power budgets, making them suitable for edge computing applications and battery-powered devices.

- Spike Timing and Learning Mechanisms in LIF Neurons: CMOS implementations of LIF neurons incorporate various spike timing and learning mechanisms to enable adaptive behavior. These include spike-timing-dependent plasticity (STDP), homeostatic plasticity, and intrinsic plasticity mechanisms. The timing circuits are designed to accurately model the temporal dynamics of biological neurons, allowing for precise spike generation and processing. These learning mechanisms enable the neuromorphic systems to adapt to changing inputs and learn patterns from data.

- Scalable Neuromorphic Architectures with LIF Neurons: Scalable neuromorphic architectures have been developed using CMOS-based LIF neurons as building blocks. These architectures include crossbar arrays, hierarchical networks, and modular designs that can be scaled to implement large neural networks. The scalability is achieved through efficient interconnect designs, address-event representation protocols, and hierarchical routing schemes. These architectures enable the implementation of complex neural networks for applications such as pattern recognition, classification, and control.

- Application-Specific LIF Neuron Optimizations: CMOS-based LIF neurons have been optimized for specific applications such as image processing, speech recognition, and sensor data processing. These optimizations include specialized membrane potential dynamics, adaptive thresholds, and application-specific connectivity patterns. By tailoring the LIF neuron designs to specific applications, improved performance in terms of accuracy, speed, and energy efficiency can be achieved compared to general-purpose designs.
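Of the learning mechanisms listed above, pair-based STDP is the simplest to state. A synapse is potentiated when the presynaptic spike precedes the postsynaptic spike and depressed otherwise, with an exponentially decaying dependence on the timing difference. The sketch below uses the common exponential-window formulation. The amplitudes, time constants, and weight bounds are hypothetical parameters, and a CMOS STDP circuit would approximate this curve with analog traces or digital counters rather than by evaluating exponentials.

```python
import math


def stdp_delta_w(t_pre: float, t_post: float,
                 a_plus: float = 0.01, a_minus: float = 0.012,
                 tau_plus: float = 20e-3, tau_minus: float = 20e-3) -> float:
    """Pair-based STDP weight change for one pre/post spike pair (times in seconds).

    dt = t_post - t_pre > 0 (pre before post) -> potentiation,
    dt < 0 (post before pre) -> depression, dt == 0 -> no change here.
    """
    dt = t_post - t_pre
    if dt > 0:
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(w: float, pre_spikes, post_spikes,
               w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Accumulate all pre/post pairings (all-to-all) and clip the weight to [w_min, w_max]."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            w += stdp_delta_w(t_pre, t_post)
    return min(max(w, w_min), w_max)


if __name__ == "__main__":
    # Pre fires 5 ms before post: the weight should increase.
    print(apply_stdp(0.5, pre_spikes=[0.010], post_spikes=[0.015]))
    # Post fires 5 ms before pre: the weight should decrease.
    print(apply_stdp(0.5, pre_spikes=[0.015], post_spikes=[0.010]))
```

All-to-all pairing is only one convention. Nearest-neighbor pairing is another, and which one a given circuit approximates depends on how its spike traces are reset.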

02 Energy-Efficient LIF Neuron Designs

Energy efficiency is a critical aspect of LIF neuron implementations in CMOS. Various circuit techniques are employed to reduce power consumption while maintaining computational capabilities. These include subthreshold operation, dynamic threshold adjustment, and optimized leakage current control. Such energy-efficient designs enable the development of large-scale neuromorphic systems with thousands of neurons on a single chip.

03 Spike Timing and Communication Protocols

LIF neuron models in CMOS incorporate specific mechanisms for spike timing and communication between neurons. These designs implement various encoding schemes for neural information, including rate coding and temporal coding. The communication protocols enable efficient signal transmission between neurons in neuromorphic networks, supporting complex pattern recognition and learning tasks.

04 Adaptive Learning and Plasticity in LIF Neurons

CMOS implementations of LIF neurons often include circuits for adaptive learning and synaptic plasticity. These features allow the neurons to modify their connection strengths based on input patterns, implementing learning rules such as spike-timing-dependent plasticity (STDP). The adaptive capabilities enable neuromorphic systems to perform unsupervised learning and adapt to changing input conditions.

05 Integration with Sensing and Processing Systems

LIF neuron models in CMOS are integrated with various sensing and processing systems to create complete neuromorphic solutions. These integrated systems combine the LIF neurons with sensor interfaces, memory elements, and control circuits. Applications include image recognition, speech processing, and other pattern recognition tasks that benefit from the parallel processing capabilities of neuromorphic architectures.

Leading Companies and Research Institutions in Neuromorphic Engineering

The field of CMOS LIF neuron design is currently in a growth phase, with market interest driven by neuromorphic computing applications. The market is expanding rapidly and is estimated at several billion dollars as companies integrate these designs into AI hardware. Technological maturity varies across players. IBM leads with advanced neuromorphic architectures, while Huawei, TSMC, and Qualcomm are investing significantly in hardware implementations. Academic institutions such as Xidian University, Zhejiang University, and IIT Bombay contribute fundamental research, and Samsung and DB HITEK are developing specialized CMOS processes for neural circuits. The ecosystem is both collaborative and competitive, with established semiconductor companies and research institutions working alongside one another, and commercialization is accelerating as technical challenges in power efficiency and integration are addressed.

International Business Machines Corp.

Technical Solution: IBM has pioneered large-scale LIF neuron implementations in CMOS technology through its TrueNorth neuromorphic architecture. Unlike analog designs, TrueNorth's neurons are fully digital. Each neuron integrates incoming spikes into a digital membrane-potential register and applies a configurable leak, rather than charging a membrane capacitor. The chip is fabricated in a 28nm process and achieves an energy efficiency of approximately 26 pJ per synaptic event. Leak values and other neuron parameters are programmable per neuron, so a range of neural behaviors can be modeled. The TrueNorth chip contains over 1 million digital LIF neurons and 256 million synapses, organized as 4,096 neurosynaptic cores in a modular, scalable architecture that supports complex neural network implementations. The design emphasizes deterministic operation and precise control over neural parameters, supporting reliable neuromorphic computing applications in pattern recognition, classification, and real-time signal processing.

Strengths: Exceptional energy efficiency with proven scalability to millions of neurons; mature fabrication process with demonstrated reliability; comprehensive programming framework for neural network deployment. Weaknesses: Digital implementation may lack some biological fidelity compared to analog approaches; relatively high implementation complexity requiring specialized design expertise.

Huawei Technologies Co., Ltd.

Technical Solution: Huawei has developed innovative LIF neuron CMOS implementations focusing on mobile and edge computing applications. Their approach utilizes a hybrid analog-digital design that optimizes both power efficiency and computational precision. Huawei's LIF neurons feature adaptive leak rates that dynamically adjust based on input patterns, enabling more efficient information processing. Their implementation includes on-chip learning capabilities through spike-timing-dependent plasticity (STDP) mechanisms integrated directly with the neuron circuits. Huawei has demonstrated these designs in 16nm FinFET technology, achieving sub-picojoule energy consumption per spike while maintaining high computational accuracy. The architecture incorporates specialized memory structures co-located with neuron arrays to minimize data movement, addressing the von Neumann bottleneck that limits conventional computing approaches. Huawei's neuromorphic chips target applications in speech recognition, computer vision, and other AI tasks requiring real-time processing with limited power budgets.

Strengths: Highly optimized for mobile/edge deployment with exceptional power efficiency; innovative hybrid design balancing precision and energy consumption; integration with existing AI frameworks. Weaknesses: Less mature ecosystem compared to some competitors; potential challenges in scaling to very large network implementations.

Key Patents and Innovations in LIF Neuron Circuit Design

Spiking neural network neuron circuit based on LIF model

Patent (Active): CN112465134A

Innovation

- A spiking neural network neuron circuit based on the LIF model, composed of a membrane potential accumulation circuit, a leakage circuit, a pulse generation circuit, a refractory period circuit, and a reset circuit. The design uses MOS transistors operating in the subthreshold region together with a hysteresis comparator, and the frequency at which the neuron circuit fires pulses is controlled by adjusting the bias voltage.
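The functional blocks listed in this patent (accumulation, leakage, pulse generation, refractory period, reset) map onto a simple discrete-time behavioral model. The sketch below is a generic illustration under assumed parameter values, not the patented circuit. It integrates the LIF equation with forward Euler, uses a plain threshold instead of a hysteresis comparator, and substitutes a constant input current for the bias-controlled drive.

```python
from dataclasses import dataclass


@dataclass
class LIFParams:
    # All values are illustrative assumptions, not taken from CN112465134A.
    c_mem: float = 1e-12      # membrane capacitance (F)
    g_leak: float = 50e-12    # leak conductance (S) -> tau = c_mem / g_leak = 20 ms
    v_rest: float = 0.0       # resting potential (V)
    v_th: float = 0.5         # firing threshold (V)
    v_reset: float = 0.0      # reset potential (V)
    t_ref: float = 2e-3       # refractory period (s)


def simulate_lif(i_in: float, p: LIFParams, t_stop: float = 1.0, dt: float = 1e-5):
    """Forward-Euler LIF simulation with a constant input current; returns spike times (s)."""
    v = p.v_rest
    refractory_until = -1.0
    spikes = []
    n_steps = int(round(t_stop / dt))
    for k in range(n_steps):
        t = k * dt
        if t < refractory_until:
            continue  # refractory period: membrane held at the reset value
        # Accumulation (input current) and leakage (toward v_rest) on the membrane capacitor.
        v += dt * (-p.g_leak * (v - p.v_rest) + i_in) / p.c_mem
        if v >= p.v_th:            # pulse generation when the threshold is crossed
            spikes.append(t)
            v = p.v_reset          # reset
            refractory_until = t + p.t_ref
    return spikes


if __name__ == "__main__":
    params = LIFParams()
    # The rheobase here is g_leak * v_th = 25 pA; below it the neuron never fires.
    for i_in in (20e-12, 40e-12, 80e-12):
        rate = len(simulate_lif(i_in, params)) / 1.0  # spikes in a 1 s run
        print(f"I_in = {i_in * 1e12:4.0f} pA -> {rate:5.1f} spikes/s")
```

Raising the drive current (set through the bias voltage in the patented circuit) shortens the time needed to reach threshold and so raises the firing rate. The refractory period caps the maximum rate below 1/t_ref.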

Power Consumption and Scaling Considerations

Power consumption represents a critical factor in the design and implementation of LIF neuron models in CMOS technology. As neuromorphic computing systems scale to incorporate thousands or millions of neurons, energy efficiency becomes paramount. Current CMOS implementations of LIF neurons typically consume power in the range of 10-100 nW per neuron, depending on the specific design choices and fabrication technology.

The static power consumption in LIF neuron circuits stems primarily from transistor leakage currents and, in analog designs biased in the subthreshold region, from continuously flowing bias currents. Dynamic power consumption occurs during neuron firing events and membrane potential updates. Circuit techniques such as power gating, clock gating, and adaptive biasing have demonstrated significant reductions in power consumption, with some recent designs achieving sub-nanowatt operation per neuron.
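The split between static and dynamic power can be made concrete with a simple average-power model, P_avg = P_static + E_spike × f_rate, scaled to an array of N neurons. The figures below are assumed example values chosen to fall inside the 10-100 nW per neuron range quoted above; they are not measurements.

```python
def neuron_avg_power(p_static_w: float, e_spike_j: float, rate_hz: float) -> float:
    """Average power of one neuron: static (leakage/bias) plus energy per spike times firing rate."""
    return p_static_w + e_spike_j * rate_hz


def array_power(n_neurons: int, p_static_w: float, e_spike_j: float, rate_hz: float) -> float:
    """Total power for n identical neurons, ignoring routing, synapse, and I/O overhead."""
    return n_neurons * neuron_avg_power(p_static_w, e_spike_j, rate_hz)


if __name__ == "__main__":
    # Hypothetical design point: 10 nW static, 1 pJ per spike, 10 Hz mean firing rate.
    p1 = neuron_avg_power(10e-9, 1e-12, 10.0)
    print(f"per neuron: {p1 * 1e9:.2f} nW")
    print(f"1M neurons: {array_power(1_000_000, 10e-9, 1e-12, 10.0) * 1e3:.2f} mW")
```

At these sparse firing rates, the static term dominates. This is why leakage-reduction techniques such as power gating and adaptive biasing matter as much as lowering energy per spike.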

Scaling considerations present both challenges and opportunities for LIF neuron implementations. As CMOS technology nodes continue to shrink below 10nm, transistor characteristics change substantially, affecting the behavior of analog components critical to LIF neuron operation. Reduced supply voltages in advanced nodes limit the dynamic range available for representing membrane potentials, potentially compromising computational precision.

The area efficiency of LIF neurons improves with technology scaling, enabling higher neuron densities. Current implementations typically require 100-1000 μm² per neuron in mature CMOS processes, while advanced nodes can potentially reduce this footprint by an order of magnitude. However, increased integration density exacerbates thermal management challenges, as concentrated heat generation can affect circuit behavior and reliability.
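Converting footprint to density is simple arithmetic, since 1 mm² equals 10⁶ μm². The sketch below applies it to the 100-1000 μm² range above and to a hypothetical 10x shrink. It counts neuron area only and ignores synapses, routing, and peripheral circuits, which often take up much of the real die area.

```python
UM2_PER_MM2 = 1_000_000  # 1 mm^2 = 1e6 um^2


def neurons_per_mm2(area_um2_per_neuron: float) -> float:
    """Upper-bound neuron density from neuron footprint alone."""
    return UM2_PER_MM2 / area_um2_per_neuron


if __name__ == "__main__":
    for area in (1000.0, 100.0, 10.0):  # last value: hypothetical 10x shrink in an advanced node
        print(f"{area:6.0f} um^2/neuron -> {neurons_per_mm2(area):9,.0f} neurons/mm^2")
```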

Process variations become increasingly significant at smaller technology nodes, introducing inconsistencies in neuron behavior across large arrays. Techniques such as calibration circuits, adaptive biasing, and digital trimming have been developed to mitigate these variations, though they introduce additional complexity and overhead.
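Digital trimming can be illustrated with a small Monte Carlo model. Each neuron's threshold is offset by a random mismatch, and a signed n-bit trim DAC subtracts the closest available correction. The Gaussian mismatch model, the 20 mV sigma, the 4-bit trim, and the 5 mV step are all assumptions for illustration.

```python
import random
import statistics


def sample_thresholds(n: int, nominal: float, sigma: float, seed: int = 0):
    """Thresholds with Gaussian mismatch (an assumed, simplified variation model)."""
    rng = random.Random(seed)
    return [rng.gauss(nominal, sigma) for _ in range(n)]


def trim(value: float, nominal: float, bits: int, step: float) -> float:
    """Apply a signed n-bit trim DAC: subtract the code*step closest to the error, within range."""
    max_code = 2 ** (bits - 1) - 1
    min_code = -(2 ** (bits - 1))
    code = round((value - nominal) / step)
    code = max(min_code, min(max_code, code))  # saturate at the DAC range
    return value - code * step


if __name__ == "__main__":
    nominal, sigma = 0.5, 0.020  # 500 mV threshold, 20 mV mismatch
    raw = sample_thresholds(10_000, nominal, sigma)
    trimmed = [trim(v, nominal, bits=4, step=0.005) for v in raw]
    print(f"sigma before trim: {statistics.pstdev(raw) * 1e3:.1f} mV")
    print(f"sigma after trim:  {statistics.pstdev(trimmed) * 1e3:.1f} mV")
```

The residual spread comes from the trim step (quantization) and from outliers beyond the DAC range. More bits or finer steps reduce it, at the cost of the extra area and calibration effort noted above.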

The trade-off between digital and analog implementations becomes more nuanced with scaling. While digital implementations offer better noise immunity and programmability, they typically consume more power and area than their analog counterparts. Hybrid approaches that combine digital control with analog computation show promise for balancing these considerations, particularly as technology scaling continues to favor digital circuits.
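On the digital side of this trade-off, a LIF neuron replaces the capacitor with an integer register and the leak with a subtraction on each time step. The sketch below is a generic fixed-point formulation in the spirit of digital neuromorphic cores. The bit width, leak, threshold, and input values are hypothetical, and this is not any vendor's exact neuron equation.

```python
def digital_lif_step(v: int, weighted_input: int, leak: int, threshold: int,
                     v_reset: int = 0, v_min: int = 0, bits: int = 16):
    """One update of an integer LIF neuron; returns (new_v, spiked).

    The leak is a constant subtracted each tick (a linear leak), and the register
    is clamped to [v_min, 2**(bits - 1) - 1] instead of being allowed to overflow.
    """
    v_max = 2 ** (bits - 1) - 1
    v = v + weighted_input - leak
    v = max(v_min, min(v_max, v))  # saturate instead of overflowing
    if v >= threshold:
        return v_reset, True
    return v, False


if __name__ == "__main__":
    v, spikes = 0, []
    inputs = [30, 30, 0, 30, 30, 30, 0, 0, 30, 30]  # synaptic weight sum per tick
    for tick, x in enumerate(inputs):
        v, fired = digital_lif_step(v, x, leak=5, threshold=80)
        if fired:
            spikes.append(tick)
    print("spike ticks:", spikes)
```

Every operation is an integer add, compare, or clamp, so the behavior is bit-exact and immune to analog noise. The cost is a register, an adder, and a comparator per neuron, or shared logic when neurons are time-multiplexed.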

Benchmarking and Performance Metrics for LIF Neuron Designs

Establishing effective benchmarking and performance metrics for LIF neuron designs is crucial for evaluating and comparing different implementations. The primary metrics for CMOS-based LIF neurons include energy efficiency. It is measured as energy per spike, typically reported in picojoules or femtojoules per spike, and directly determines the overall power consumption of neuromorphic systems containing thousands to millions of neurons.
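Energy per spike is usually derived by dividing a power measurement by the spike rate. The result depends on whether static power is included. The following is a minimal sketch using assumed measurement values; the helper name is hypothetical.

```python
def energy_per_spike_j(avg_power_w: float, spike_rate_hz: float, idle_power_w: float = 0.0) -> float:
    """Energy per spike from measured average power and output spike rate.

    If idle_power_w is given, static (non-spiking) power is subtracted first,
    giving a dynamic-only figure; otherwise all power is attributed to spikes.
    """
    if spike_rate_hz <= 0:
        raise ValueError("spike rate must be positive")
    return (avg_power_w - idle_power_w) / spike_rate_hz


# Assumed measurement: 12 nW average at 10 kHz, 2 nW when not spiking
total_based = energy_per_spike_j(12e-9, 10e3)
dynamic_only = energy_per_spike_j(12e-9, 10e3, idle_power_w=2e-9)
print(f"total-power basis:  {total_based * 1e15:.0f} fJ/spike")
print(f"dynamic-only basis: {dynamic_only * 1e15:.0f} fJ/spike")
```

The two conventions differ by 20% in this example. Energy-per-spike figures are only comparable when the measurement convention is stated.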

Area efficiency, measured in square micrometers per neuron, represents another critical metric as it determines the scalability of neuromorphic systems. Smaller neuron footprints enable higher integration density, allowing more complex neural networks to be implemented on a single chip.

Temporal precision, quantified by jitter in spike timing (typically in nanoseconds or microseconds), is essential for applications requiring precise temporal coding. This metric becomes particularly important in sensory processing systems where timing information carries significant meaning.
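One common way to quantify jitter, assumed here, is the standard deviation of spike time across repeated presentations of an identical input. Other definitions, such as peak-to-peak spread, are also used. The trial data and helper name below are hypothetical.

```python
import statistics


def spike_jitter_s(spike_times_s):
    """Timing jitter as the sample standard deviation of spike times
    recorded over repeated presentations of the same stimulus."""
    if len(spike_times_s) < 2:
        raise ValueError("need at least two trials")
    return statistics.stdev(spike_times_s)


# Hypothetical first-spike times (s) from five repeated trials
trials = [1.002e-3, 0.998e-3, 1.001e-3, 0.999e-3, 1.000e-3]
print(f"jitter: {spike_jitter_s(trials) * 1e6:.2f} us (1 sigma)")
```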

Dynamic range, measured as the ratio between the maximum and minimum firing rates a neuron can produce, indicates its ability to function across varying input conditions. A higher dynamic range allows neurons to respond appropriately to both weak and strong stimuli, mimicking biological neurons more effectively.
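Consider an ideal LIF neuron with membrane time constant τ = RC, reset to 0 V, refractory period t_ref and constant input current I. Its steady-state firing rate has a closed form: f = 1 / (t_ref + τ ln(RI / (RI - V_th))) for RI > V_th, and zero otherwise. The sketch evaluates this at both ends of an assumed input range to obtain the rate ratio. All component values are illustrative, and the ideal model ignores supply-voltage limits on the membrane.

```python
import math


def lif_rate_hz(i_in, r=1e9, c=10e-12, v_th=0.2, t_ref=1e-3):
    """Steady-state firing rate (Hz) of an ideal LIF neuron under constant input current.

    Assumes tau = r * c, reset to 0 V after each spike, and a fixed refractory period.
    """
    tau = r * c
    drive = i_in * r  # asymptotic membrane voltage if there were no threshold
    if drive <= v_th:
        return 0.0    # below rheobase: the membrane never reaches threshold
    return 1.0 / (t_ref + tau * math.log(drive / (drive - v_th)))


# Assumed input range: just above rheobase (0.2 nA) up to 2 nA
f_lo = lif_rate_hz(0.21e-9)
f_hi = lif_rate_hz(2e-9)
print(f"f_min = {f_lo:.1f} Hz, f_max = {f_hi:.1f} Hz, ratio = {f_hi / f_lo:.1f}")
```

The rate approaches 1/t_ref for large inputs, so the refractory period caps the upper end of the range. Leakage sets the rheobase (V_th/R) at the lower end.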

Noise immunity, often expressed as signal-to-noise ratio (SNR), determines the robustness of neuron operation in noisy environments. This is particularly relevant for low-power implementations where signal levels may approach thermal noise floors.
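The standard kT/C expression, v_n,rms = sqrt(kT/C), estimates the thermal noise floor on a membrane capacitor. The sketch compares two assumed capacitor sizes against an assumed 50 mV rms signal. It shows how shrinking the capacitor to save area raises the noise floor. The helper names are hypothetical.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23


def ktc_noise_vrms(c_farads: float, temp_k: float = 300.0) -> float:
    """RMS thermal noise voltage on a capacitor (kT/C noise)."""
    return math.sqrt(BOLTZMANN_J_PER_K * temp_k / c_farads)


def snr_db(signal_vrms: float, noise_vrms: float) -> float:
    """Voltage signal-to-noise ratio in decibels."""
    return 20.0 * math.log10(signal_vrms / noise_vrms)


SIGNAL_V = 0.05  # assumed 50 mV rms membrane signal
for c in (100e-15, 10e-15):
    vn = ktc_noise_vrms(c)
    print(f"C = {c * 1e15:5.0f} fF: noise = {vn * 1e3:.3f} mV rms, SNR = {snr_db(SIGNAL_V, vn):.1f} dB")
```

Each 10x reduction in capacitance raises the rms noise by sqrt(10), about 3.2x, and costs 10 dB of SNR. Area savings and noise immunity therefore pull in opposite directions.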

Configurability metrics assess the neuron's adaptability to different computational requirements, including the range of adjustable time constants, threshold voltages, and leakage parameters. More configurable designs offer greater flexibility but often at the cost of increased area and complexity.

Process variation tolerance measures how consistently neurons perform: from neuron to neuron on the same die, across different fabrication batches, and under varying operating conditions. This metric is crucial for large-scale neuromorphic systems, where uniformity of neuron behavior affects overall system performance.
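One simple way to express this tolerance, assumed here, is the coefficient of variation of firing rates measured across an array for the same input. The coefficient of variation is the standard deviation divided by the mean. The rates below are hypothetical.

```python
import statistics


def rate_cv(rates_hz):
    """Coefficient of variation of firing rates across neurons (lower = more uniform)."""
    mean = statistics.fmean(rates_hz)
    return statistics.pstdev(rates_hz) / mean


# Hypothetical rates (Hz) from five neurons driven by the same input
rates = [98.0, 102.0, 100.0, 95.0, 105.0]
print(f"CV = {rate_cv(rates):.1%}")
```

Repeating the measurement at different supply voltages and temperatures, and on dies from different batches, extends the same metric to batch-to-batch and operating-condition variation.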

Standardized benchmarking tasks have emerged to facilitate fair comparisons, including pattern recognition accuracy, spike latency in response to step inputs, and power-delay product measurements. The neuromorphic community has begun adopting these standardized tests to enable meaningful cross-comparison between different implementation approaches.
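Spike latency in response to a step input can be measured in simulation the same way as on a bench: apply the step at t = 0 and record the first threshold crossing. The sketch integrates an ideal LIF membrane with forward Euler. It checks the result against the closed-form latency τ ln(RI / (RI - V_th)). Component values and the time step are assumptions, and `first_spike_latency_s` is a hypothetical helper.

```python
import math


def first_spike_latency_s(i_step, r=1e9, c=10e-12, v_th=0.2, dt=1e-6, t_max=0.1):
    """Forward-Euler LIF membrane driven by a current step applied at t = 0.

    Returns the time of the first threshold crossing, or None if there is none before t_max.
    """
    tau = r * c
    v = 0.0
    for n in range(1, int(t_max / dt) + 1):
        v += dt / tau * (-v + r * i_step)  # tau dV/dt = -V + R*I
        if v >= v_th:
            return n * dt
    return None


i_step = 0.5e-9  # assumed 0.5 nA step
tau = 1e9 * 10e-12
analytic = tau * math.log((i_step * 1e9) / (i_step * 1e9 - 0.2))
simulated = first_spike_latency_s(i_step)
print(f"simulated {simulated * 1e3:.3f} ms vs analytic {analytic * 1e3:.3f} ms")
```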

Recent efforts have focused on developing figure-of-merit metrics that combine multiple performance aspects, such as energy-delay product and area-energy efficiency, providing more comprehensive evaluation tools for neuromorphic designers seeking optimal trade-offs for specific application domains.
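Composite figures of merit can rank the same designs differently than any single metric does. The sketch compares two hypothetical designs, with all numbers invented for illustration. It ranks them by energy per spike, energy-delay product and an area-energy product, the last used here as one assumed way to express area-energy efficiency.

```python
from dataclasses import dataclass


@dataclass
class NeuronDesign:
    name: str
    energy_per_spike_j: float
    latency_s: float
    area_um2: float

    @property
    def edp(self) -> float:
        """Energy-delay product (J*s); lower is better."""
        return self.energy_per_spike_j * self.latency_s

    @property
    def area_energy(self) -> float:
        """Area-energy product (um^2 * J); lower is better."""
        return self.area_um2 * self.energy_per_spike_j


# Hypothetical designs for illustration only
designs = [
    NeuronDesign("A", 2e-12, 10e-6, 400.0),
    NeuronDesign("B", 0.5e-12, 100e-6, 900.0),
]
for metric in ("energy_per_spike_j", "edp", "area_energy"):
    best = min(designs, key=lambda d: getattr(d, metric))
    print(f"best by {metric}: {best.name}")
```

Design B wins on energy alone and on area-energy, while design A wins on energy-delay product. The appropriate figure of merit therefore depends on whether the target application is latency-constrained or area-constrained.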
