How Can Artificial Intelligence Accelerate ELM Design?

SEP 4, 2025 · 9 MIN READ

AI in ELM Design: Background and Objectives

Extreme ultraviolet (EUV) lithography has emerged as a transformative technology in semiconductor manufacturing, enabling the continued scaling of integrated circuits according to Moore's Law. The development of EUV lithography began in the 1980s, but it wasn't until the late 2010s that it became commercially viable for high-volume manufacturing. This technological evolution has been driven by the semiconductor industry's relentless pursuit of smaller feature sizes and higher transistor densities.

Artificial Intelligence (AI) represents a paradigm shift in how we approach complex engineering challenges in EUV lithography. The intersection of AI and EUV lithography marks a significant frontier in semiconductor manufacturing technology. Historically, EUV lithography development has relied on empirical methods and iterative experimentation, resulting in lengthy development cycles and substantial costs.

The primary objective of integrating AI into EUV lithography machine (ELM) design is to accelerate the development process while enhancing performance and reliability. Specifically, AI aims to optimize critical components such as the light source, optical systems, and mask design. By leveraging machine learning algorithms, engineers can predict system behavior, identify potential failure modes, and optimize design parameters more efficiently than traditional methods allow.

Current technological trends indicate a growing convergence between computational methods and physical engineering in lithography. The complexity of EUV systems, with their numerous interdependent variables and non-linear behaviors, makes them ideal candidates for AI-assisted design approaches. Machine learning models can process vast amounts of simulation and experimental data to identify patterns and relationships that might elude human engineers.

The evolution of AI capabilities in recent years, particularly in areas such as deep learning, reinforcement learning, and physics-informed neural networks, has created new possibilities for application in ELM design. These advanced algorithms can now handle the high-dimensional optimization problems inherent in lithography system development.

Looking forward, the integration of AI into ELM design is expected to follow a trajectory of increasing sophistication and autonomy. Initial applications focus on parameter optimization and failure prediction, while future developments may enable more comprehensive system-level design optimization and even autonomous design generation.

The technical goals for AI in ELM design include reducing development time by at least 30%, improving system performance metrics such as throughput and resolution, enhancing reliability through better prediction of component lifetimes, and ultimately reducing the cost of ownership for these complex systems. These objectives align with the semiconductor industry's broader goals of maintaining technological progress while managing the escalating costs of advanced node development.

Market Demand Analysis for AI-Enhanced ELM Solutions

The market for AI-enhanced Extreme Learning Machine (ELM) solutions is experiencing significant growth, driven by increasing demands for faster, more efficient machine learning implementations across multiple industries. Current market research indicates that the global AI in machine learning market is projected to grow at a compound annual growth rate of 39% through 2025, with ELM-specific applications representing an emerging segment within this broader category.

Healthcare organizations are demonstrating particularly strong demand for AI-enhanced ELM solutions, seeking to leverage these technologies for rapid diagnostic analysis, patient data processing, and treatment optimization. The ability of ELM to process complex biomedical datasets with minimal computational resources makes it especially valuable in resource-constrained healthcare environments.

Financial services represent another major market segment, where institutions are implementing AI-enhanced ELM solutions for fraud detection, risk assessment, and algorithmic trading. The speed advantages of ELM, when further accelerated by AI optimization techniques, enable near-real-time analysis of market conditions and transaction patterns, providing competitive advantages in high-frequency trading environments.

Manufacturing and industrial automation sectors are increasingly adopting AI-enhanced ELM solutions for quality control, predictive maintenance, and process optimization. Market surveys indicate that 67% of manufacturing executives consider AI-accelerated machine learning critical to their digital transformation strategies, with ELM implementations gaining traction due to their efficiency advantages.

The telecommunications sector presents substantial growth opportunities, as network operators seek AI-enhanced ELM solutions for network optimization, predictive maintenance, and customer experience management. The ability to process massive datasets from network operations in near-real-time represents a compelling value proposition in this segment.

Market analysis reveals a growing preference for cloud-based deployment models for AI-enhanced ELM solutions, with organizations seeking flexibility and scalability without significant upfront infrastructure investments. This trend is particularly pronounced among small and medium enterprises that lack extensive in-house AI expertise but recognize the competitive necessity of implementing advanced machine learning capabilities.

Geographically, North America currently leads in adoption of AI-enhanced ELM solutions, followed by Europe and Asia-Pacific. However, the Asia-Pacific region is expected to demonstrate the highest growth rate over the next five years, driven by rapid digital transformation initiatives across China, Japan, South Korea, and emerging economies in Southeast Asia.

Current AI-ELM Integration Challenges

Despite the promising potential of AI in accelerating ELM (Extreme Learning Machine) design, several significant challenges currently impede the seamless integration of these technologies. The complexity of neural network architectures presents a fundamental obstacle, as determining optimal ELM structures with appropriate hidden layer configurations remains largely empirical. AI systems struggle to autonomously identify the ideal number of hidden neurons and activation functions without extensive human guidance.

Data quality and quantity issues further complicate AI-ELM integration. ELM performance heavily depends on training data characteristics, yet many real-world applications face limitations in data availability, especially in specialized domains. AI systems require substantial high-quality data to effectively optimize ELM parameters, creating a circular dependency problem where AI needs data to improve ELMs, but improved ELMs are needed to generate better training data.

Computational resource constraints represent another significant barrier. While ELMs typically train faster than iteratively trained neural networks, optimizing their design through AI approaches often demands intensive computational power, particularly when implementing evolutionary algorithms or reinforcement learning for architecture search. This creates a paradoxical situation where the pursuit of computational efficiency through ELMs is hampered by the computational demands of AI optimization processes.

Interpretability challenges also plague current AI-ELM systems. The "black box" nature of both AI optimization algorithms and ELM models makes it difficult to understand decision-making processes, limiting trust and adoption in critical applications like healthcare and finance where explainability is essential for regulatory compliance and stakeholder confidence.

Integration with existing systems poses practical implementation difficulties. Many organizations have established machine learning pipelines and infrastructure that may not readily accommodate hybrid AI-ELM approaches. The technical debt associated with refactoring existing systems creates resistance to adoption, even when theoretical benefits are clear.

Hyperparameter optimization remains particularly challenging. While ELMs reduce training complexity by eliminating iterative weight adjustments between input and hidden layers, they introduce sensitivity to initialization parameters. Current AI approaches struggle to efficiently navigate this high-dimensional hyperparameter space without exhaustive search methods that negate ELM's speed advantages.
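To make the trade-off above concrete, the following is a minimal NumPy sketch of a basic ELM and a small sweep over the hidden-layer size. It is an illustration under stated assumptions, not a reference implementation: the function names (`train_elm`, `predict_elm`, `sweep_hidden_sizes`), the `tanh` activation, the ridge term `reg`, and the toy sine dataset are all hypothetical choices made for this example.

```python
import numpy as np

def train_elm(X, y, n_hidden, rng, reg=1e-3):
    """Fit an ELM: random fixed hidden layer, closed-form ridge output weights."""
    W = rng.normal(size=(X.shape[1], n_hidden))  # random input weights, never trained
    b = rng.normal(size=n_hidden)                # random hidden biases, never trained
    H = np.tanh(X @ W + b)                       # hidden-layer activations
    # Output weights solve (H^T H + reg * I) beta = H^T y in a single step.
    beta = np.linalg.solve(H.T @ H + reg * np.eye(n_hidden), H.T @ y)
    return W, b, beta

def predict_elm(model, X):
    W, b, beta = model
    return np.tanh(X @ W + b) @ beta

def sweep_hidden_sizes(X_tr, y_tr, X_val, y_val, sizes, seed=0):
    """Return {n_hidden: validation MSE} for each candidate hidden-layer size."""
    results = {}
    for n in sizes:
        rng = np.random.default_rng(seed)  # same seed for every size (assumption)
        model = train_elm(X_tr, y_tr, n, rng)
        err = predict_elm(model, X_val) - y_val
        results[n] = float(np.mean(err ** 2))
    return results

# Toy regression problem (assumed for illustration): noisy sine curve.
data_rng = np.random.default_rng(42)
X = data_rng.uniform(-3, 3, size=(400, 1))
y = np.sin(X[:, 0]) + 0.05 * data_rng.normal(size=400)

scores = sweep_hidden_sizes(X[:300], y[:300], X[300:], y[300:], sizes=[2, 10, 50])
best_size = min(scores, key=scores.get)
print(scores, best_size)
```

Each candidate costs only one linear solve, which is why even a plain sweep can be affordable for small models. Because the hidden weights are random, the scores also vary with `seed`, which is the initialization sensitivity noted above; the cost grows once the search also covers activation functions, regularization strengths, and multiple seeds.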

Finally, domain adaptation presents ongoing difficulties. AI systems that successfully optimize ELMs for one application domain often fail to transfer that knowledge effectively to new domains, limiting the generalizability of AI-accelerated ELM design approaches and requiring domain-specific customization that undermines the promise of automated design.

Leading Organizations in AI-Powered ELM Development

The AI-accelerated ELM (Extreme Learning Machine) design landscape is currently in a growth phase, with academic institutions leading research and development. The market is expanding as AI integration enhances ELM's capabilities in pattern recognition and prediction tasks. From a technological maturity perspective, universities dominate the competitive landscape, with Nanjing University of Aeronautics & Astronautics, Beihang University, and Northwestern Polytechnical University demonstrating significant expertise in aerospace applications. Nanyang Technological University and UCL Business represent international players advancing commercial applications. While educational institutions predominate, companies like Microchip Technology and Huawei Cloud are beginning to incorporate AI-enhanced ELM into industrial solutions, indicating the technology's transition from academic research to practical implementation across multiple sectors.

Shenzhen Huawei Cloud Computing Technology Co., Ltd.

Technical Solution: Huawei has developed a comprehensive AI framework for ELM design acceleration that integrates cloud computing resources with specialized hardware. Their solution employs distributed training techniques across their Ascend AI processors, which are specifically optimized for neural network operations. Huawei's approach includes automated neural architecture search (NAS) to optimize ELM structures, reducing the manual effort in architecture design. Their ModelArts platform provides end-to-end capabilities for ELM development, from data preparation to model deployment, with specialized APIs for ELM implementation. The system incorporates knowledge distillation techniques to create compact yet powerful ELM models that maintain accuracy while reducing computational requirements.

Strengths: Seamless integration with cloud infrastructure provides scalable computing resources; proprietary Ascend AI chips offer optimized performance for ELM operations; comprehensive ecosystem from development to deployment. Weaknesses: Potential vendor lock-in; higher costs compared to open-source alternatives; limited customization for specialized ELM variants.

Beihang University

Technical Solution: Beihang University has developed an innovative AI acceleration framework for ELM design that focuses on hardware-software co-optimization. Their approach leverages specialized tensor processing units designed specifically for the unique computational patterns of ELM algorithms. The university's solution includes a comprehensive toolkit that automates the mapping of ELM models to heterogeneous computing platforms, optimizing performance based on available hardware resources. Their framework incorporates advanced quantization techniques that reduce the precision requirements of ELM computations without significant accuracy loss, enabling deployment on resource-constrained devices. Beihang's system also features a novel incremental learning mechanism that allows ELM models to adapt to new data without complete retraining, significantly reducing computational overhead for evolving datasets. Additionally, they've developed specialized memory management techniques that optimize data movement during ELM training and inference.

Strengths: Strong focus on hardware-software co-design provides optimized performance; specialized techniques for resource-constrained environments enable edge deployment; innovative incremental learning approaches reduce retraining costs. Weaknesses: Potentially limited commercial support infrastructure; may require significant expertise to fully leverage; possibly less integration with mainstream AI frameworks.
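The incremental learning idea mentioned above can be illustrated with a generic online sequential ELM, in which the output weights are updated chunk by chunk with a recursive least-squares step instead of being refit from scratch. This is a textbook-style sketch and not Beihang University's actual mechanism, which the source does not describe; the class name `OnlineELM`, the `tanh` activation, the ridge term `reg`, and the toy data are assumptions.

```python
import numpy as np

class OnlineELM:
    """Online sequential ELM sketch: output weights updated one chunk at a time."""

    def __init__(self, n_inputs, n_hidden, n_outputs, reg=1e-2, seed=0):
        rng = np.random.default_rng(seed)
        self.W = rng.normal(size=(n_inputs, n_hidden))  # fixed random input weights
        self.b = rng.normal(size=n_hidden)              # fixed random biases
        self.P = np.eye(n_hidden) / reg                 # running inverse of (H^T H + reg * I)
        self.beta = np.zeros((n_hidden, n_outputs))     # output weights

    def _hidden(self, X):
        return np.tanh(X @ self.W + self.b)

    def partial_fit(self, X, T):
        """Fold a new chunk (X, T) into the model without revisiting old data."""
        H = self._hidden(X)
        # Woodbury update: P <- P - P H^T (I + H P H^T)^{-1} H P
        S = np.eye(H.shape[0]) + H @ self.P @ H.T
        self.P = self.P - self.P @ H.T @ np.linalg.solve(S, H @ self.P)
        # Correct the output weights using the residual on the new chunk.
        self.beta = self.beta + self.P @ H.T @ (T - H @ self.beta)
        return self

    def predict(self, X):
        return self._hidden(X) @ self.beta

# Toy stream (assumed for illustration): 200 samples arriving in chunks of 50.
rng = np.random.default_rng(1)
X = rng.uniform(-1, 1, size=(200, 2))
T = (np.sin(3 * X[:, 0]) + X[:, 1]).reshape(-1, 1)

model = OnlineELM(n_inputs=2, n_hidden=20, n_outputs=1)
for start in range(0, 200, 50):
    model.partial_fit(X[start:start + 50], T[start:start + 50])

# Batch ridge solution on all data at once, for comparison.
H_all = model._hidden(X)
beta_batch = np.linalg.solve(H_all.T @ H_all + 1e-2 * np.eye(20), H_all.T @ T)
print(np.max(np.abs(model.beta - beta_batch)))
```

In exact arithmetic the chunked updates reproduce the batch ridge solution, so adapting to new data costs a solve sized by the chunk rather than a full retrain.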

Key AI Algorithms and Frameworks for ELM

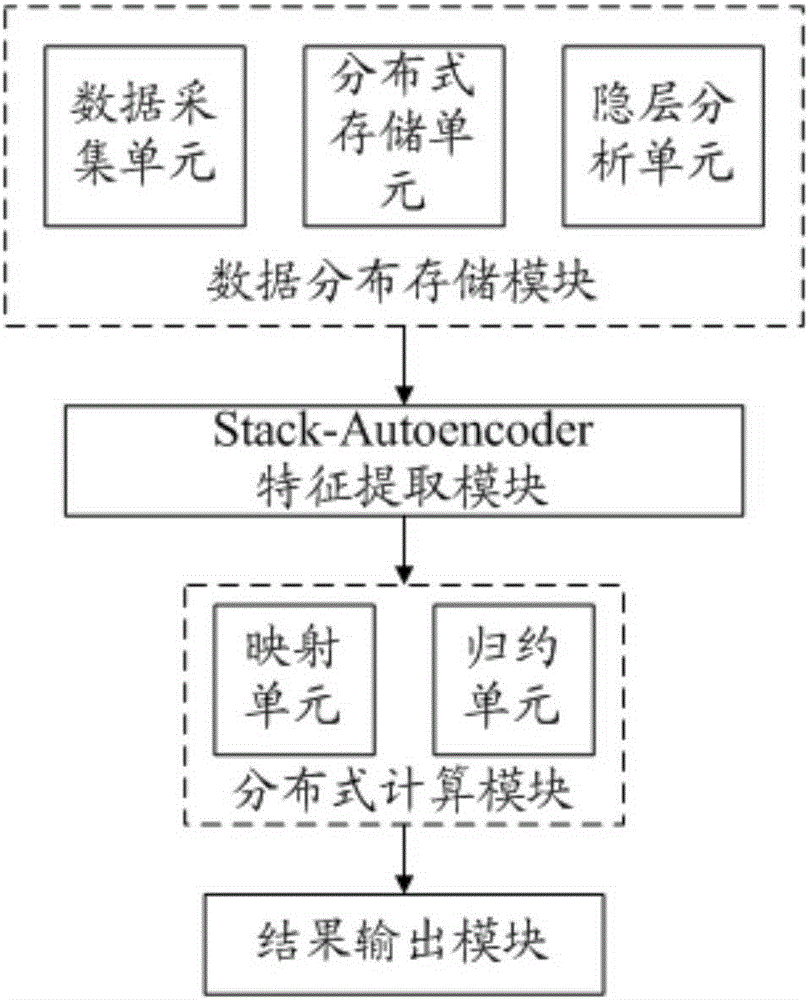

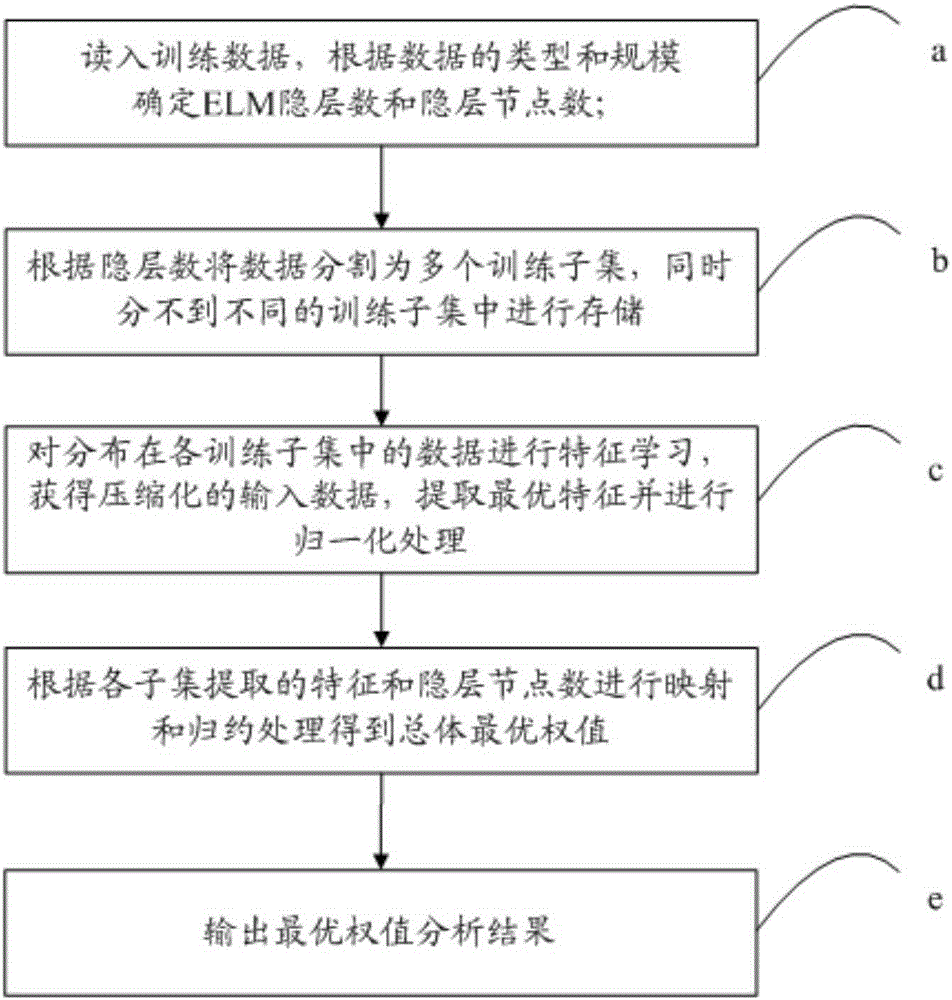

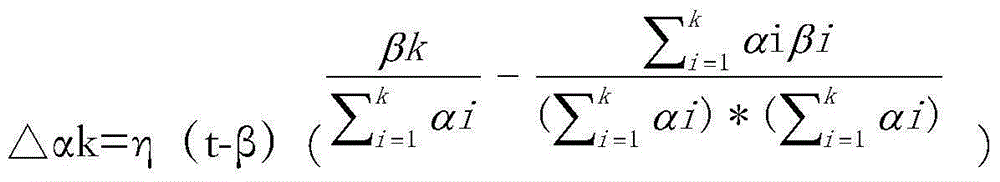

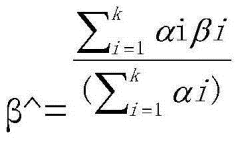

Distributed extreme learning machine optimization integrated framework system and method

Patent · Active · CN105184368A

Innovation

- A distributed extreme learning machine optimization integration framework is proposed, comprising a distributed data storage module, a Stack-Autoencoder feature extraction module, and a distributed computing module. The data is divided into multiple subsets for parallel computation through the Map-Reduce framework, and the optimal weights are obtained from the number of hidden-layer nodes and the extracted features, which addresses both pattern-classification accuracy and computational efficiency on large data sets.
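The Map-Reduce partitioning described in the patent summary can be sketched in a few lines, because the ELM output weights depend on the data only through two sums that can be computed per subset and then added. The sketch below is not the patented method: it omits the Stack-Autoencoder stage, uses Python's built-in `reduce` in place of a real Map-Reduce cluster, and all function names, the `tanh` activation, and the ridge term `reg` are assumptions.

```python
from functools import reduce
import numpy as np

def make_hidden_layer(n_inputs, n_hidden, seed=0):
    """Random hidden layer shared by every worker (assumed to be broadcast once)."""
    rng = np.random.default_rng(seed)
    return rng.normal(size=(n_inputs, n_hidden)), rng.normal(size=n_hidden)

def map_partition(part, W, b):
    """Map step: each data subset yields its local H^T H and H^T T."""
    X, T = part
    H = np.tanh(X @ W + b)
    return H.T @ H, H.T @ T

def reduce_stats(a, c):
    """Reduce step: the sufficient statistics simply add up."""
    return a[0] + c[0], a[1] + c[1]

def fit_distributed(partitions, W, b, reg=1e-2):
    HtH, HtT = reduce(reduce_stats, (map_partition(p, W, b) for p in partitions))
    # One small solve on the driver, sized by n_hidden rather than by the data.
    return np.linalg.solve(HtH + reg * np.eye(W.shape[1]), HtT)

# Toy data (assumed for illustration), split into four subsets.
rng = np.random.default_rng(7)
X = rng.uniform(-1, 1, size=(600, 3))
T = (X[:, 0] * X[:, 1] + np.cos(2 * X[:, 2])).reshape(-1, 1)
partitions = [(X[i:i + 150], T[i:i + 150]) for i in range(0, 600, 150)]

W, b = make_hidden_layer(n_inputs=3, n_hidden=20)
beta_dist = fit_distributed(partitions, W, b)

# Single-machine solution on the full data, for comparison.
H_full = np.tanh(X @ W + b)
beta_full = np.linalg.solve(H_full.T @ H_full + 1e-2 * np.eye(20), H_full.T @ T)
print(np.max(np.abs(beta_dist - beta_full)))
```

Each worker only ships an `n_hidden × n_hidden` matrix and an `n_hidden × n_outputs` matrix to the reducer, so communication cost is independent of the number of samples in a subset.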

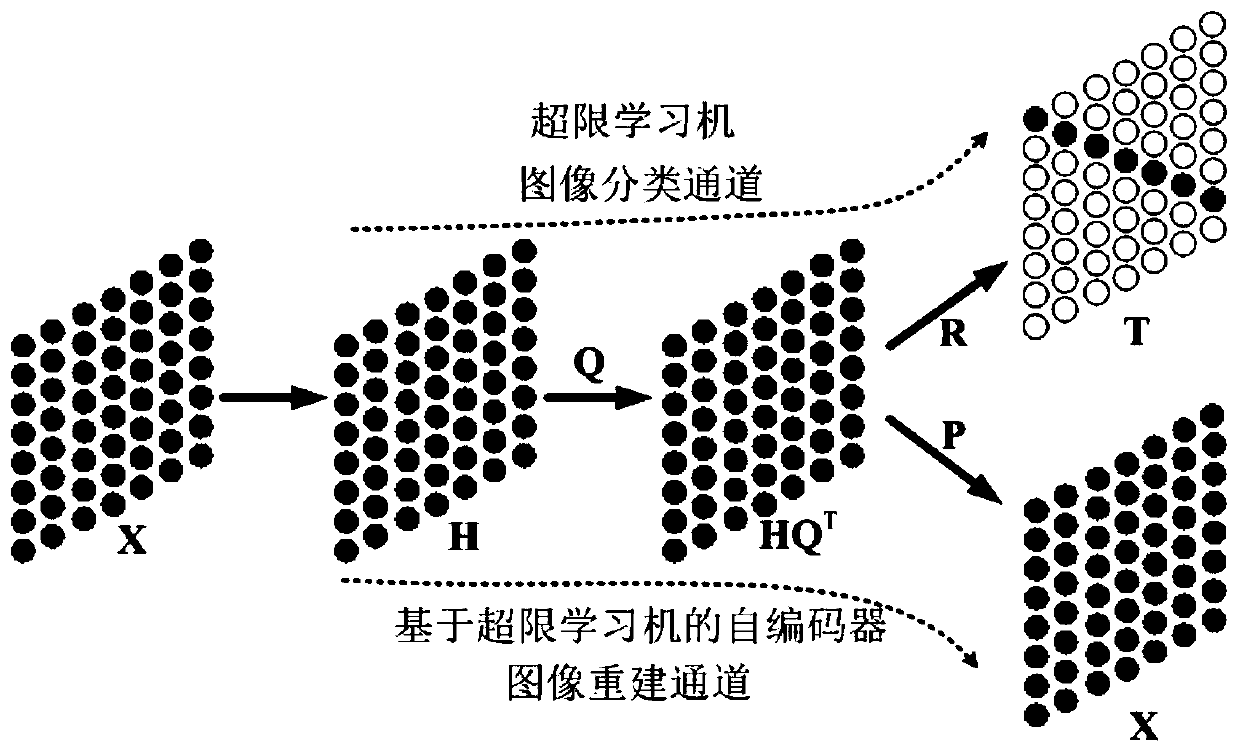

Image classification and reconstruction method based on extreme implicit feature learning model

Patent · Active · CN109934295A

Innovation

- Adopts an extreme latent feature learning model that combines the extreme learning machine with an autoencoder, introduces the alternating direction method to optimize the model parameters, and establishes image classification and reconstruction channels. The latent feature space is used to better reveal the underlying relationships among data features, reducing information loss and improving image classification accuracy and data reconstruction capability.

Computational Resources and Infrastructure Requirements

The implementation of AI-driven ELM (Extreme Learning Machine) design requires substantial computational resources and specialized infrastructure to handle the complex algorithms and large datasets involved. High-performance computing (HPC) clusters with multi-core processors and significant RAM capacity are essential for training sophisticated AI models that can effectively optimize ELM architectures. These systems typically require at least 32-64 GB of RAM and either CPUs with 8-16 or more cores or GPUs to process the parallel computations efficiently.

GPU acceleration has become particularly critical in this domain, with NVIDIA's Tesla and AMD's Instinct series offering the computational power necessary for complex matrix operations central to ELM optimization. Cloud-based solutions from providers like AWS, Google Cloud, and Microsoft Azure present scalable alternatives, allowing researchers to access GPU instances with up to 16-32 GB of VRAM without significant upfront hardware investments.

Storage infrastructure requirements are equally demanding, as AI-accelerated ELM design generates extensive intermediate results and requires access to large training datasets. High-speed SSD storage with capacities of 1-5 TB is recommended for local development, while distributed file systems like Hadoop HDFS or cloud storage solutions become necessary for production-scale implementations.

Network infrastructure considerations cannot be overlooked, particularly in distributed computing environments where multiple nodes collaborate on ELM optimization tasks. Low-latency, high-bandwidth connections (minimum 10 Gbps) are essential to prevent bottlenecks during data transfer between computational nodes.

Specialized software frameworks form another critical component of the infrastructure stack. TensorFlow, PyTorch, and MXNet provide the foundation for AI model development, while libraries like scikit-learn and specialized ELM implementations enable efficient algorithm execution. Container technologies such as Docker and Kubernetes facilitate deployment across heterogeneous computing environments, ensuring consistency and reproducibility.

Energy consumption represents a significant challenge in scaling AI-accelerated ELM design. Current estimates suggest that training complex AI models can consume between 100 and 300 kWh of electricity, necessitating efficient cooling systems and potentially renewable energy sources for sustainable operation. As the complexity of ELM applications increases, these infrastructure requirements will continue to evolve, likely demanding even more specialized hardware accelerators and optimized software stacks.

Ethical and Responsible AI Implementation in ELM

As artificial intelligence becomes increasingly integrated into ELM (Extreme Learning Machine) design processes, establishing ethical frameworks and responsible implementation practices becomes paramount. The deployment of AI in ELM contexts raises significant ethical considerations that must be addressed proactively rather than reactively. Organizations must develop comprehensive ethical guidelines that specifically address potential biases in training data, ensuring that AI-accelerated ELM systems do not perpetuate or amplify existing societal prejudices.

Transparency in AI decision-making processes represents another critical dimension of ethical implementation. ELM systems accelerated by AI should maintain interpretable operations, allowing stakeholders to understand how conclusions are reached. This transparency facilitates accountability and builds trust among users and affected communities. Documentation of algorithmic processes and decision pathways should be maintained and made accessible to relevant parties.

Data privacy and security considerations must be embedded throughout the AI-ELM development lifecycle. Organizations should implement robust data governance frameworks that protect sensitive information while still enabling the benefits of AI acceleration. This includes implementing appropriate anonymization techniques, securing data transmission channels, and establishing clear data retention policies that respect individual privacy rights.

Responsible AI implementation in ELM design also necessitates ongoing monitoring and evaluation systems. Regular audits should assess both technical performance and ethical compliance, with mechanisms in place to address any identified issues promptly. These evaluation frameworks should incorporate diverse perspectives, including input from ethicists, domain experts, and representatives from potentially affected communities.

The principle of human oversight remains essential even as automation increases. AI systems accelerating ELM design should augment human capabilities rather than replace human judgment entirely, particularly in high-stakes applications. Clear protocols should define when and how human intervention occurs within predominantly automated processes.

Cross-disciplinary collaboration between technical experts, ethicists, legal specialists, and domain experts represents best practice in ethical AI implementation. This collaborative approach ensures that technical innovations in AI-accelerated ELM design are balanced with ethical considerations and societal implications. Industry-wide standards and self-regulation initiatives can further promote responsible innovation while preventing harmful applications.

Finally, organizations must commit to continuous learning and adaptation of their ethical frameworks as AI technologies evolve. What constitutes responsible implementation today may change as capabilities advance, requiring flexible approaches that can evolve alongside technological developments in the AI-ELM intersection.
