How does neuromorphic hardware handle temporal data processing?

SEP 2, 2025 | 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

Neuromorphic Computing Background and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. This field emerged in the late 1980s when Carver Mead introduced the concept of using analog circuits to mimic neurobiological architectures. Since then, neuromorphic computing has evolved significantly, transitioning from theoretical frameworks to practical hardware implementations capable of processing temporal data in ways that conventional von Neumann architectures cannot efficiently achieve.

The evolution of neuromorphic systems has been driven by the fundamental limitations of traditional computing architectures when dealing with temporal data processing tasks such as speech recognition, video analysis, and real-time sensor data interpretation. Traditional computing systems process information sequentially and separate memory from processing units, creating bottlenecks when handling time-dependent data streams that require parallel processing and temporal integration.

Neuromorphic hardware specifically addresses these challenges through its unique architectural principles: massive parallelism, co-located memory and processing, event-driven computation, and inherent temporal dynamics. These features enable efficient processing of temporal data by allowing information to be processed in a time-dependent manner similar to biological neural networks.

The technical objectives of neuromorphic computing in temporal data processing include achieving ultra-low power consumption while maintaining high computational efficiency, implementing adaptive learning mechanisms that can evolve with temporal patterns, and developing scalable architectures capable of handling complex temporal relationships across multiple timescales simultaneously.

Current research trends in this field focus on developing more sophisticated spiking neural networks (SNNs) that can better capture temporal dependencies, creating more efficient neuromorphic hardware platforms with improved temporal resolution, and designing novel learning algorithms specifically optimized for temporal pattern recognition and prediction.

Major technological milestones include the development of dedicated neuromorphic chips such as IBM's TrueNorth, Intel's Loihi, and BrainChip's Akida, all of which incorporate mechanisms for processing time-varying signals. These platforms take various approaches to temporal data handling, including rate coding, time-to-first-spike encoding, and other temporal coding schemes that preserve timing relationships between events.
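To make the difference between rate coding and time-to-first-spike encoding concrete, here is a minimal Python sketch that turns a single normalized value into a spike train over a fixed number of discrete time steps. The function names, the [0, 1] input range, and the linear value-to-latency mapping are illustrative assumptions, not the encoders used by TrueNorth, Loihi, or Akida.

```python
import random
from typing import List

def rate_encode(value: float, n_steps: int, rng: random.Random) -> List[int]:
    """Rate coding: at every time step, spike with probability equal to `value`.

    The information is carried by how many spikes occur, not by when they occur.
    """
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be normalized to [0, 1]")
    return [1 if rng.random() < value else 0 for _ in range(n_steps)]

def ttfs_encode(value: float, n_steps: int) -> List[int]:
    """Time-to-first-spike coding: one spike whose latency shrinks as `value` grows.

    A value of 1.0 spikes at step 0; a value of 0.0 never spikes.
    """
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be normalized to [0, 1]")
    train = [0] * n_steps
    if value > 0.0:
        # Linear mapping from value to spike latency (an illustrative choice).
        train[round((1.0 - value) * (n_steps - 1))] = 1
    return train

rng = random.Random(0)
print(sum(rate_encode(0.8, 100, rng)))  # roughly 80 spikes
print(ttfs_encode(0.9, 10).index(1))    # early spike for a large value
print(ttfs_encode(0.2, 10).index(1))    # late spike for a small value
```

Rate coding needs many time steps (and many spikes) to represent a value precisely, whereas time-to-first-spike coding uses a single spike per value, which is one reason latency codes are attractive for energy-constrained hardware.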

The ultimate goal of neuromorphic computing in temporal data processing is to create systems that can perceive, learn from, and respond to time-varying stimuli with the efficiency, adaptability, and robustness observed in biological systems, while overcoming the power and performance limitations of conventional computing architectures when dealing with real-world temporal data streams.

Market Analysis for Temporal Data Processing Solutions

The temporal data processing market is experiencing significant growth driven by the increasing volume of time-series data generated across various industries. Current market estimates value the global temporal data processing solutions market at approximately $5.7 billion, with projections indicating a compound annual growth rate of 21.3% through 2028. This growth is primarily fueled by applications in autonomous vehicles, industrial automation, financial services, and healthcare monitoring systems.

Traditional computing architectures face substantial challenges when processing temporal data, creating a market gap that neuromorphic hardware solutions are positioned to address. The demand for real-time processing of continuous data streams has grown rapidly, with industries requiring systems capable of handling temporal dependencies efficiently while maintaining low power consumption profiles.

Financial services represent one of the largest market segments, where high-frequency trading algorithms and fraud detection systems rely heavily on temporal pattern recognition. Healthcare applications follow closely, with continuous patient monitoring systems generating vast amounts of time-series data requiring immediate analysis. The industrial IoT sector demonstrates the fastest growth rate at 24.7%, as manufacturers implement predictive maintenance solutions based on temporal sensor data.

Market research indicates that energy efficiency is becoming a critical decision factor for enterprises deploying temporal data processing solutions. Neuromorphic hardware offers significant advantages in this area, with benchmark tests showing power consumption reductions of 85-95% compared to GPU-based solutions for equivalent temporal processing tasks. This efficiency translates to substantial operational cost savings, particularly for edge computing deployments.

Regional analysis shows North America currently leading the market with 42% share, followed by Europe (27%) and Asia-Pacific (23%). However, the Asia-Pacific region is expected to demonstrate the highest growth rate over the next five years due to increasing investments in AI infrastructure across China, Japan, and South Korea.

Customer adoption patterns reveal a transition from cloud-based temporal processing to hybrid architectures incorporating edge computing elements. This shift is driven by latency requirements and data privacy concerns, creating new market opportunities for neuromorphic hardware solutions optimized for distributed deployment models.

The competitive landscape remains fragmented, with specialized neuromorphic hardware providers competing against established semiconductor companies expanding into this space. Market consolidation is anticipated as technology matures, with strategic acquisitions already occurring as larger players seek to incorporate neuromorphic capabilities into their existing product portfolios.

Current Neuromorphic Hardware Limitations and Challenges

Despite significant advancements in neuromorphic computing, current hardware implementations face substantial limitations when processing temporal data. Neuromorphic chips typically depend on conventional von Neumann hosts for configuration and data movement, and those hosts handle the parallel, event-driven nature of neuromorphic workloads poorly, creating bottlenecks in temporal data processing. This architectural mismatch results in inefficiencies when implementing spiking neural networks (SNNs), which inherently operate on temporal information.

Power consumption remains a critical challenge for neuromorphic hardware. While these systems aim to mimic the brain's energy efficiency, current implementations still consume orders of magnitude more power per operation than biological neural systems. This limitation becomes particularly evident when processing continuous temporal data streams that require persistent operation.

Scaling neuromorphic systems presents another significant hurdle. As the number of artificial neurons and synapses increases to handle complex temporal patterns, interconnect density becomes problematic. Current fabrication technologies struggle to match the brain's connectivity density, limiting the complexity of temporal patterns that can be effectively processed.

Memory bandwidth constraints further impede temporal data processing in neuromorphic systems. The frequent updates required for temporal learning algorithms create memory access bottlenecks, especially when implementing plasticity mechanisms like spike-timing-dependent plasticity (STDP) that rely on precise timing relationships.
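The memory-access pressure comes from the fact that every STDP weight update needs the recent spike history of both neurons a synapse connects. A minimal pair-based STDP sketch in Python, assuming exponential learning windows and illustrative parameter values (`a_plus`, `a_minus`, `tau_plus`, `tau_minus` are not taken from any specific chip), shows the dependency:

```python
import math
from typing import Sequence

def stdp_delta_w(pre_times: Sequence[float], post_times: Sequence[float],
                 a_plus: float = 0.01, a_minus: float = 0.012,
                 tau_plus: float = 20.0, tau_minus: float = 20.0) -> float:
    """All-to-all pair-based STDP weight change for one synapse (spike times in ms).

    Every presynaptic spike is compared with every postsynaptic spike, so the
    hardware must keep (or re-read) both spike histories for each update.
    """
    dw = 0.0
    for t_pre in pre_times:
        for t_post in post_times:
            dt = t_post - t_pre
            if dt > 0:      # pre fired before post: potentiation
                dw += a_plus * math.exp(-dt / tau_plus)
            elif dt < 0:    # post fired before pre: depression
                dw -= a_minus * math.exp(dt / tau_minus)
    return dw

def apply_stdp(w: float, dw: float, w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Apply the update and clip the weight to its allowed range."""
    return min(w_max, max(w_min, w + dw))

print(stdp_delta_w([10.0], [15.0]))  # positive: causal pairing strengthens the synapse
print(stdp_delta_w([15.0], [10.0]))  # negative: anti-causal pairing weakens it
```

Hardware implementations usually avoid the nested loop by keeping a decaying trace per neuron and updating it on each spike, which trades exact pair sums for a constant amount of local state per neuron.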

Current neuromorphic hardware also faces challenges in temporal precision and resolution. Many implementations use simplified neuron models with limited temporal dynamics, reducing their ability to capture subtle temporal features in data. This limitation is particularly problematic for applications requiring fine-grained temporal discrimination, such as audio processing or precise motion detection.
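Temporal resolution limits can be illustrated with a minimal sketch: hardware that advances in fixed time steps effectively assigns every spike to a step, so two spikes closer together than one step can become indistinguishable. The helper names and the integer-microsecond time base are assumptions chosen to avoid floating-point rounding, not any particular chip's timing model.

```python
from typing import List

def quantize_spike_times(times_us: List[int], step_us: int) -> List[int]:
    """Map spike times (integer microseconds) onto discrete time-step indices."""
    if step_us <= 0:
        raise ValueError("step_us must be positive")
    return [t // step_us for t in times_us]

def distinguishable(t_a_us: int, t_b_us: int, step_us: int) -> bool:
    """True if two spikes land in different time steps at this resolution."""
    a, b = quantize_spike_times([t_a_us, t_b_us], step_us)
    return a != b

# Two spikes 0.4 ms apart: lost at 1 ms resolution, preserved at 0.1 ms.
print(distinguishable(300, 700, 1000))  # False
print(distinguishable(300, 700, 100))   # True
```

Shrinking the step preserves finer timing, but it multiplies the number of steps the hardware must process per second of input.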

Standardization issues compound these technical challenges. The neuromorphic computing field lacks unified programming models, benchmarks, and interfaces for temporal data processing. This fragmentation complicates development across different hardware platforms and hinders adoption in mainstream applications.

The gap between simulation and physical implementation represents another significant challenge. Algorithms that perform well in software simulations often face implementation difficulties in hardware due to physical constraints, particularly when precise timing is critical for temporal processing tasks.

Finally, current neuromorphic hardware faces integration challenges with conventional computing systems. Efficiently interfacing spike-based temporal processing with traditional data formats and processing pipelines remains difficult, limiting the practical deployment of neuromorphic solutions in existing computational ecosystems.
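A common bridge between spike-based hardware and conventional pipelines is the address-event representation (AER), in which each spike is transmitted as a (time, neuron address) pair. The sketch below converts dense binary spike frames into such an event list and decodes events back into per-neuron spike counts that a conventional pipeline can consume. The tuple layout and function names are illustrative assumptions; real devices define their own packet formats.

```python
from typing import List, Tuple

Event = Tuple[int, int]  # (time step, neuron address)

def frames_to_events(frames: List[List[int]]) -> List[Event]:
    """Dense binary frames (time x neurons) -> sparse list of spike events."""
    return [(t, addr)
            for t, frame in enumerate(frames)
            for addr, spiked in enumerate(frame) if spiked]

def events_to_frames(events: List[Event], n_steps: int, n_neurons: int) -> List[List[int]]:
    """Sparse events -> dense binary frames (the inverse of frames_to_events)."""
    frames = [[0] * n_neurons for _ in range(n_steps)]
    for t, addr in events:
        frames[t][addr] = 1
    return frames

def events_to_counts(events: List[Event], n_neurons: int) -> List[int]:
    """Collapse the time axis into a per-neuron spike-count vector (a rate readout)."""
    counts = [0] * n_neurons
    for _, addr in events:
        counts[addr] += 1
    return counts

frames = [[0, 1, 0],
          [1, 1, 0],
          [0, 0, 0]]
events = frames_to_events(frames)
print(events)                       # [(0, 1), (1, 0), (1, 1)]
print(events_to_counts(events, 3))  # [1, 2, 0]
```

The count readout discards spike timing, which is exactly the information temporal processing depends on; that loss is one concrete form of the integration problem.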

Contemporary Approaches to Temporal Data in Neuromorphic Systems

01 Neuromorphic hardware architectures for temporal processing

Neuromorphic hardware architectures designed specifically for temporal data processing implement brain-inspired circuits that can efficiently process time-varying signals. These architectures often incorporate spiking neural networks (SNNs) that naturally handle temporal information through spike timing. The hardware designs include specialized memory structures and processing elements that maintain temporal relationships in data streams, enabling efficient processing of sequential information with reduced power consumption compared to traditional computing approaches.

- Neuromorphic hardware architectures for temporal data processing: Neuromorphic hardware architectures specifically designed for processing temporal data sequences. These architectures mimic the brain's ability to process time-dependent information through specialized circuits and components that can handle sequential data patterns. Such hardware implementations often include timing-dependent processing elements that can recognize patterns across time, making them suitable for applications like speech recognition, video processing, and time-series analysis.

- Spiking neural networks for temporal information processing: Implementation of spiking neural networks (SNNs) in hardware for processing temporal data. SNNs use discrete spikes or pulses to transmit information, similar to biological neurons, making them inherently suitable for processing time-varying signals. These networks encode temporal information in the timing of spikes, allowing for efficient processing of sequential data with reduced power consumption compared to traditional neural networks.

- Memristor-based temporal processing systems: Neuromorphic hardware systems utilizing memristors for temporal data processing. Memristors are non-volatile memory elements whose resistance changes based on the history of applied voltage, making them ideal for implementing temporal processing functions. These systems can efficiently store and process temporal patterns by leveraging the inherent memory capabilities of memristive devices, enabling on-chip learning and adaptation to time-varying inputs.

- Energy-efficient temporal data processing techniques: Energy optimization techniques for neuromorphic hardware processing temporal data. These approaches focus on reducing power consumption while maintaining processing capabilities for time-series data. Techniques include event-driven processing, where computation occurs only when necessary, sparse coding of temporal information, and specialized low-power circuit designs that can efficiently handle sequential data patterns while minimizing energy usage.

- Reconfigurable neuromorphic architectures for adaptive temporal processing: Reconfigurable neuromorphic hardware systems that can adapt to different temporal processing tasks. These architectures allow dynamic modification of their processing elements and connectivity to handle various types of time-dependent data. The reconfigurability enables the hardware to optimize its structure for specific temporal processing requirements, supporting applications ranging from real-time signal processing to complex pattern recognition in time-varying data.
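The point that SNNs carry information in spike timing can be made concrete with a leaky integrate-and-fire (LIF) neuron, a standard simplified spiking neuron model. In the sketch below, the same two input pulses either trigger a spike or fade away depending only on how far apart they arrive. The forward-Euler discretization and the unitless parameter values are illustrative assumptions; neuromorphic chips implement their own (often fixed-point) neuron models.

```python
from typing import List

def simulate_lif(inputs: List[float], tau_m: float = 20.0, dt: float = 1.0,
                 v_rest: float = 0.0, v_thresh: float = 1.0,
                 v_reset: float = 0.0) -> List[int]:
    """Discrete-time LIF neuron; returns the time-step indices at which it spikes.

    Each step the membrane potential leaks toward v_rest (time constant tau_m)
    and then integrates that step's input; crossing v_thresh emits a spike
    and resets the potential.
    """
    v = v_rest
    spikes = []
    for t, x in enumerate(inputs):
        v += (dt / tau_m) * (v_rest - v) + x  # leak, then integrate input
        if v >= v_thresh:
            spikes.append(t)
            v = v_reset
    return spikes

close = [0.6, 0.6] + [0.0] * 10     # two pulses one step apart
far = [0.6] + [0.0] * 29 + [0.6]    # same pulses, 30 steps apart
print(simulate_lif(close))  # [1]: the second pulse arrives before the first leaks away
print(simulate_lif(far))    # []: the first pulse has mostly decayed
```

This sensitivity to input timing, set by the membrane time constant `tau_m`, lets a spiking neuron integrate evidence over a short window without an explicit buffer.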

02 Spike-based temporal data encoding and processing

Spike-based approaches for encoding and processing temporal data convert continuous time-series information into discrete spike events. These methods leverage the temporal precision of spike timing to represent data efficiently. Neuromorphic hardware implementations use various spike encoding schemes such as temporal coding, rate coding, or phase coding to transform temporal data into spike patterns that can be processed by spiking neural networks. This approach enables efficient processing of time-series data while maintaining temporal relationships between events.

03 Reconfigurable neuromorphic systems for adaptive temporal processing

Reconfigurable neuromorphic systems provide flexibility in processing different types of temporal data by allowing dynamic adjustment of network parameters and connectivity. These systems can adapt to various temporal processing tasks through on-chip learning mechanisms or programmable elements. The hardware supports runtime reconfiguration to optimize performance for specific temporal data characteristics, enabling applications ranging from speech recognition to time-series prediction with the same underlying architecture.

04 Memory-centric neuromorphic designs for temporal sequence learning

Memory-centric neuromorphic designs integrate specialized memory structures directly with processing elements to efficiently handle temporal sequences. These architectures implement various forms of short-term and long-term memory to capture temporal dependencies in data. By closely coupling memory and computation, these systems reduce the energy costs associated with data movement while enabling efficient implementation of temporal learning algorithms such as STDP (Spike-Timing-Dependent Plasticity) and reservoir computing approaches that require maintaining state information over time.

05 Event-driven processing for real-time temporal applications

Event-driven processing systems operate asynchronously, responding only when input data changes significantly. This approach is particularly suited for temporal data processing in neuromorphic hardware as it matches the sparse, event-based nature of biological neural systems. The hardware implements asynchronous circuits that activate only when relevant temporal events occur, dramatically reducing power consumption compared to clock-driven systems. These designs enable real-time processing of temporal data streams in applications such as motion detection, audio processing, and sensor fusion with high energy efficiency.
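Event-driven input is often produced by send-on-delta (level-crossing) encoding: instead of sampling on every clock tick, the encoder emits an UP or DOWN event only when the signal has moved by a fixed threshold since the last event. Dynamic vision sensor pixels apply the same principle to changes in log intensity. The Python sketch below is a minimal illustration; the function names and the (index, polarity) event format are assumptions.

```python
from typing import List, Tuple

def delta_encode(samples: List[float], threshold: float) -> List[Tuple[int, int]]:
    """Send-on-delta encoding: emit (sample_index, +1 or -1) each time the signal
    moves at least `threshold` away from the current reference level."""
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    if not samples:
        return []
    events = []
    ref = samples[0]
    for i, x in enumerate(samples[1:], start=1):
        while x - ref >= threshold:  # signal rose: one UP event per threshold crossed
            ref += threshold
            events.append((i, +1))
        while ref - x >= threshold:  # signal fell: one DOWN event per threshold crossed
            ref -= threshold
            events.append((i, -1))
    return events

def delta_decode(events: List[Tuple[int, int]], start: float,
                 threshold: float, n_samples: int) -> List[float]:
    """Rebuild a staircase approximation of the signal from the event stream."""
    steps = [0] * n_samples
    for i, polarity in events:
        steps[i] += polarity
    level, out = start, []
    for s in steps:
        level += s * threshold
        out.append(level)
    return out

signal = [0, 0, 0, 1, 3, 3, 2]
ev = delta_encode(signal, 1)
print(ev)                                           # [(3, 1), (4, 1), (4, 1), (6, -1)]
print(delta_decode(ev, signal[0], 1, len(signal)))  # [0, 0, 0, 1, 3, 3, 2]
```

A flat signal produces no events at all, so downstream event-driven hardware does no work until something changes; the threshold trades reconstruction accuracy against event rate.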

Leading Neuromorphic Hardware Developers and Ecosystem

Neuromorphic hardware for temporal data processing is evolving rapidly in a competitive landscape characterized by early market development and significant growth potential. The market is projected to expand substantially as applications in edge computing, real-time signal processing, and AI acceleration gain traction. While still in early commercialization stages, the technology is advancing toward maturity with key players driving innovation. Companies like IBM, Intel, and Samsung are leveraging their semiconductor expertise to develop comprehensive neuromorphic solutions, while specialized firms such as Syntiant and Innatera focus on energy-efficient edge implementations. Academic institutions including Tsinghua University and KAIST are contributing fundamental research, creating a dynamic ecosystem where industry-academia partnerships are accelerating commercialization of brain-inspired computing architectures optimized for temporal processing.

Syntiant Corp.

Technical Solution: Syntiant has developed the Neural Decision Processor (NDP) architecture that, while not strictly neuromorphic in the traditional sense, incorporates neuromorphic principles specifically optimized for temporal sequence processing in audio and sensor applications. Their hardware implements a specialized neural network architecture that maintains temporal context through time-domain signal processing combined with deep learning techniques. For temporal data processing, Syntiant's chips utilize an always-on, low-power design that can continuously process time-series data while consuming minimal energy. The architecture employs weight-stationary processing with dedicated memory for temporal pattern storage, allowing efficient recognition of time-dependent patterns such as wake words and audio events. Syntiant has demonstrated their technology in commercial products for keyword spotting and audio event detection, achieving power consumption in the sub-milliwatt range while maintaining high accuracy for temporal pattern recognition tasks. Their approach allows for processing temporal data directly at the edge without requiring cloud connectivity.

Strengths: Extremely low power consumption (measured in microwatts for always-on applications); commercially deployed solution with proven market applications; optimized specifically for temporal audio processing. Weaknesses: Less flexible than general-purpose neuromorphic architectures; primarily focused on specific application domains rather than general temporal computing; relies more on traditional neural network principles than pure neuromorphic approaches.

International Business Machines Corp.

Technical Solution: IBM's TrueNorth neuromorphic chip implements a spiking neural network architecture suited to temporal data processing. The system uses an array of neurosynaptic cores (containing neurons, synapses, and axons) that process information asynchronously and in parallel, similar to biological neural systems. TrueNorth does not support on-chip learning: networks are trained offline and their synaptic weights and neuron parameters are then programmed onto the chip, so rather than adapting through mechanisms such as spike-timing-dependent plasticity (STDP), it processes temporal data through the dynamics of its spiking neurons. This enables efficient processing of time-series data such as audio, video, and sensor streams. IBM has demonstrated TrueNorth's capabilities in real-time speech recognition and motion detection applications, achieving up to 10,000 times better energy efficiency compared to conventional processors while maintaining temporal precision in the millisecond range.

Strengths: Extremely low power consumption (about 70 mW) while delivering significant computational capability; highly scalable architecture allowing for systems with millions of neurons; hardware-level support for event-driven spiking computation. Weaknesses: Programming complexity requires specialized knowledge; low-precision, integer-valued synaptic weights rather than floating-point arithmetic; no on-chip learning, so adapting to new temporal patterns requires offline retraining; challenges in adapting conventional algorithms to the spiking neural network paradigm.

Key Innovations in Spike-Based Temporal Processing

Spatio-temporal spiking neural networks in neuromorphic hardware systems

Patent (Active): US20180075345A1

Innovation

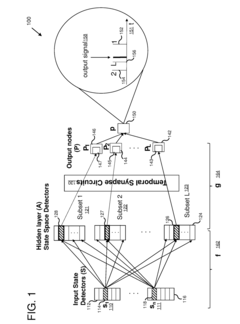

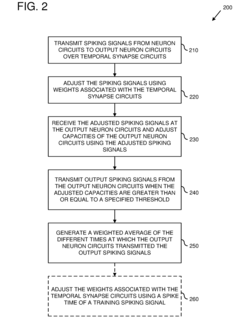

- Implements temporal and spatio-temporal SNNs with neuron circuits and temporal synapse circuits that encode information as spiking signals, allowing dynamic weight adjustment, faster learning, and energy-efficient operation.

Hardware implementation of a temporal memory system

Patent (Active): EP3273390A3

Innovation

- A hardware implementation of a temporal memory system built from an array of memory cells, each storing a scalar value that represents the likelihood of temporal coincidence between input frames. Addressing units apply input representations as row and column addresses to identify matching patterns, a FIFO queue handles transitions and predictions, and the array uses non-volatile memory cells such as RRAM for efficient storage and processing.
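As a rough software analogue of this patent's idea, and not its actual circuit, the sketch below keeps a square array in which the cell at (row, column) accumulates evidence that input pattern `row` was followed by pattern `column`, uses pattern indices directly as row and column addresses, and keeps a small FIFO of recent frames. Counts stand in for the stored scalar likelihoods, a Python list stands in for the RRAM array, and the class and method names are hypothetical.

```python
from collections import deque
from typing import List, Optional

class TransitionMemory:
    """Hypothetical toy model of a temporal memory array.

    cells[r][c] accumulates evidence that pattern r was followed by pattern c;
    pattern indices serve directly as row and column addresses.
    """

    def __init__(self, n_patterns: int, fifo_depth: int = 4) -> None:
        self.cells = [[0.0] * n_patterns for _ in range(n_patterns)]
        self.fifo = deque(maxlen=fifo_depth)  # most recent input frames

    def observe(self, pattern: int) -> None:
        """Record a new frame and strengthen the (previous -> current) transition."""
        if self.fifo:
            self.cells[self.fifo[-1]][pattern] += 1.0
        self.fifo.append(pattern)

    def likelihoods(self, pattern: int) -> List[float]:
        """Normalize one row into next-pattern likelihoods."""
        row = self.cells[pattern]
        total = sum(row)
        return [c / total for c in row] if total else [0.0] * len(row)

    def predict(self) -> Optional[int]:
        """Most likely next pattern after the latest frame, or None if unseen."""
        if not self.fifo:
            return None
        row = self.cells[self.fifo[-1]]
        best = max(range(len(row)), key=row.__getitem__)
        return best if row[best] > 0 else None

tm = TransitionMemory(n_patterns=3)
for p in [0, 1, 2, 0, 1, 2, 0]:
    tm.observe(p)
print(tm.predict())       # 1: pattern 0 has always been followed by pattern 1
print(tm.likelihoods(2))  # [1.0, 0.0, 0.0]
```

In hardware, the row and column lookup would be a single addressed access into the memory array rather than a Python list index, which is where the efficiency claimed for in-memory temporal storage would come from.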

Energy Efficiency Benchmarks in Neuromorphic Hardware

Neuromorphic hardware systems have demonstrated remarkable energy efficiency advantages compared to traditional computing architectures when processing temporal data. Recent benchmarks show that neuromorphic chips like Intel's Loihi and IBM's TrueNorth consume only milliwatts of power while performing complex temporal pattern recognition tasks that would require orders of magnitude more energy on conventional processors. This efficiency stems from their event-driven computation model, which activates circuits only when necessary, rather than continuously as in clock-driven systems.
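The event-driven argument can be checked with simple arithmetic: a clocked implementation evaluates every synapse at every time step, while an event-driven one only does work when an input spike arrives. The sketch below counts synaptic operations for both cases on randomly generated sparse spike trains. Operation counts are only a proxy for energy (they ignore static power, memory access, and routing), and the 5% activity level and layer sizes are arbitrary assumptions.

```python
import random
from typing import List

def clock_driven_ops(n_steps: int, n_inputs: int, n_outputs: int) -> int:
    """Every synapse is evaluated on every time step, spike or not."""
    return n_steps * n_inputs * n_outputs

def event_driven_ops(spike_trains: List[List[int]], n_outputs: int) -> int:
    """Work happens only per input spike, which updates that input's n_outputs synapses."""
    return sum(sum(train) for train in spike_trains) * n_outputs

rng = random.Random(42)
n_steps, n_inputs, n_outputs, activity = 1000, 100, 10, 0.05
trains = [[1 if rng.random() < activity else 0 for _ in range(n_steps)]
          for _ in range(n_inputs)]

dense = clock_driven_ops(n_steps, n_inputs, n_outputs)
sparse = event_driven_ops(trains, n_outputs)
print(dense, sparse, dense / sparse)  # the ratio is roughly 1 / activity
```

The savings scale with sparsity: if inputs are active on most time steps, the two counts converge and the extra bookkeeping of event handling can erase the advantage.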

Quantitative measurements across standardized workloads reveal that neuromorphic systems achieve 100-1000x improvements in energy efficiency for temporal data processing compared to GPUs and CPUs. For instance, when implementing spiking neural networks (SNNs) for speech recognition tasks, Loihi demonstrates power consumption of approximately 100 mW while achieving comparable accuracy to GPU implementations consuming 50-100 W, although a power ratio only becomes an energy-efficiency ratio once latency and throughput are taken into account. These efficiency gains become even more pronounced in always-on applications requiring continuous temporal data analysis.

The energy advantages are particularly evident in three key temporal processing domains: audio processing, time-series analysis, and dynamic vision. In audio processing benchmarks, neuromorphic hardware demonstrates 200-500x energy reduction for keyword spotting tasks. For time-series prediction workloads common in IoT applications, efficiency improvements of 50-300x have been documented. Dynamic vision sensing applications show the most dramatic gains, with up to 1000x energy reduction for motion detection and tracking tasks.

Benchmark methodologies have evolved to evaluate these systems more appropriately, moving beyond traditional operations-per-second metrics to measures such as energy per inference and energy per synaptic operation (or per spike). Event-based datasets such as N-MNIST and CIFAR10-DVS are widely used to compare SNN implementations on spike-encoded inputs, although both were recorded by presenting static images to an event camera, so their temporal structure is limited.
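Energy per inference, rather than power alone, is what makes such comparisons meaningful: a chip that draws little power but runs slowly, or cannot batch inputs, can still spend comparable energy per result. The sketch below computes energy per inference and energy per synaptic operation; the numbers in the usage lines are hypothetical placeholders chosen only to show the arithmetic, not measurements of Loihi, TrueNorth, or any GPU.

```python
def energy_per_inference_j(avg_power_w: float, elapsed_s: float,
                           inferences: int = 1) -> float:
    """Average power x elapsed wall-clock time, divided by inferences completed."""
    if inferences <= 0:
        raise ValueError("inferences must be positive")
    return avg_power_w * elapsed_s / inferences

def energy_per_synaptic_op_j(total_energy_j: float, synaptic_ops: int) -> float:
    """Total energy divided by the number of synaptic operations performed."""
    if synaptic_ops <= 0:
        raise ValueError("synaptic_ops must be positive")
    return total_energy_j / synaptic_ops

# Hypothetical numbers for illustration only.
low_power = energy_per_inference_j(0.1, 0.010)      # 0.1 W, 10 ms, one input
batched = energy_per_inference_j(50.0, 0.020, 256)  # 50 W, 20 ms, batch of 256
print(low_power, batched, batched / low_power)
```

With these made-up figures, a 500x difference in power becomes roughly a 3.9x difference in energy per inference, which is why benchmarks that report only watts can overstate the advantage.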

However, challenges remain in establishing fair comparison metrics between fundamentally different computing paradigms. Current benchmarks often fail to account for the full system-level energy costs, including memory access and data movement. Additionally, the lack of standardized programming models and development tools makes cross-platform benchmarking difficult, as implementation efficiency heavily depends on algorithm-to-hardware mapping expertise.

Future energy efficiency benchmarking efforts are focusing on real-world temporal processing workloads rather than synthetic tests. This includes continuous sensory processing scenarios that better represent the intended application domains of neuromorphic systems. As these benchmarks mature, they will provide clearer guidance for hardware designers optimizing next-generation neuromorphic architectures specifically for temporal data processing tasks.

Neuromorphic Integration with Traditional Computing Architectures

The integration of neuromorphic hardware with traditional computing architectures represents a critical frontier in advancing temporal data processing capabilities. Neuromorphic systems excel at processing time-dependent information through their inherent spike-based computation mechanisms, yet they must coexist with conventional von Neumann architectures that dominate today's computing landscape.
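Spike-based computation typically relies on neuron models whose internal state evolves over time. A minimal sketch of one widely used model, the discrete-time leaky integrate-and-fire (LIF) neuron, shows how such a unit integrates input and fires only when accumulated input crosses a threshold. The leak factor, threshold, and reset-to-zero rule are illustrative assumptions; chips such as Loihi implement their own neuron variants.

```python
from typing import List

def lif_neuron(inputs: List[float], leak: float = 0.9, threshold: float = 1.0) -> List[int]:
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    At each step the membrane potential decays by `leak`, adds the input,
    and, if it reaches `threshold`, emits a spike (1) and resets to zero.
    """
    v = 0.0
    spikes = []
    for x in inputs:
        v = leak * v + x      # leaky integration: older input fades over time
        if v >= threshold:
            spikes.append(1)
            v = 0.0           # reset after firing
        else:
            spikes.append(0)
    return spikes

# Same total input (1.2) in both cases; only its timing differs.
burst = [0.4, 0.4, 0.4, 0.0, 0.0, 0.0]
spread = [0.4, 0.0, 0.0, 0.4, 0.0, 0.0, 0.4]
print(lif_neuron(burst))   # -> [0, 0, 1, 0, 0, 0]
print(lif_neuron(spread))  # -> [0, 0, 0, 0, 0, 0, 0]
```

Because the potential leaks between inputs, the neuron responds to when input arrives, not just how much arrives, which is the time-dependence the paragraph refers to.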

Current integration approaches typically follow three primary models: co-processor configurations, heterogeneous computing platforms, and hybrid system architectures. In co-processor arrangements, neuromorphic hardware functions as a specialized accelerator for temporal pattern recognition and time-series analysis, while the traditional CPU handles general-purpose computing tasks. This division of labor leverages the strengths of both paradigms.

Heterogeneous computing platforms incorporate neuromorphic elements alongside GPUs, FPGAs, and conventional processors within a unified framework. Intel's Loihi research chip exemplifies this approach, featuring interfaces that enable communication with traditional x86 processors. Such integration requires sophisticated middleware and programming models to efficiently manage workload distribution across these fundamentally different computing resources.
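Workload distribution across such heterogeneous resources can be pictured as an assignment problem. The sketch below uses a simple greedy rule: each task goes to the device with the lowest estimated energy among those that support it. The device names, task names, and cost figures are hypothetical placeholders, not measurements, and real middleware would also weigh latency, data-transfer cost, and device capacity.

```python
from typing import Dict

# Hypothetical per-task energy estimates (millijoules) for each device.
# A missing entry means that device cannot run the task.
COST_MJ: Dict[str, Dict[str, float]] = {
    "keyword_spotting": {"cpu": 40.0, "gpu": 25.0, "neuromorphic": 0.2},
    "database_query":   {"cpu": 5.0},
    "image_batch_cnn":  {"cpu": 900.0, "gpu": 60.0},
    "sensor_anomaly":   {"cpu": 12.0, "neuromorphic": 0.1},
}

def partition(costs: Dict[str, Dict[str, float]]) -> Dict[str, str]:
    """Greedily map each task to the cheapest device that supports it."""
    return {task: min(options, key=options.get) for task, options in costs.items()}

for task, device in partition(COST_MJ).items():
    print(f"{task:18} -> {device}")
```

With these placeholder costs, the temporal tasks land on the neuromorphic device, general-purpose work stays on the CPU, and the batched CNN goes to the GPU, mirroring the division of labor described above.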

Data transfer between neuromorphic and traditional components presents significant challenges due to their fundamentally different information encoding schemes. Neuromorphic systems operate on spike trains and temporal dynamics, while conventional architectures process discrete digital values. Interface layers must therefore perform complex translations between these representations, often introducing latency and computational overhead.
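One common way to translate between the two representations is rate coding: a digital value in [0, 1] becomes a spike train whose per-step firing probability equals that value, and the value is recovered by counting spikes. The sketch assumes Bernoulli rate coding (a discrete-time approximation of Poisson spiking), which is only one of several schemes; latency and delta coding are others. The `rate_encode` and `rate_decode` functions are illustrative, not any platform's interface.

```python
import random
from typing import List

def rate_encode(value: float, steps: int, rng: random.Random) -> List[int]:
    """Encode a value in [0, 1] as a spike train of length `steps`.

    At each time step a spike (1) is emitted with probability `value`.
    """
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be in [0, 1]")
    return [1 if rng.random() < value else 0 for _ in range(steps)]

def rate_decode(spikes: List[int]) -> float:
    """Estimate the original value as the observed firing rate."""
    return sum(spikes) / len(spikes)

rng = random.Random(42)  # fixed seed so the example is reproducible
for steps in (10, 100, 1000):
    train = rate_encode(0.3, steps, rng)
    print(steps, "steps ->", rate_decode(train))
```

The overhead the paragraph mentions shows up directly: a value that fits in one machine word on a CPU occupies many time steps as spikes, and the decoded estimate is only approximate. Its standard error shrinks roughly as 1/sqrt(steps), so accuracy is bought with latency.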

Memory hierarchies present another integration challenge. Neuromorphic systems typically feature distributed memory closely coupled with processing elements, contrasting sharply with the centralized memory architecture of von Neumann systems. Emerging solutions include specialized memory interfaces and buffer systems that facilitate efficient data exchange while preserving temporal information integrity.
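A buffer between the two domains has to carry each spike's timing, not just its identity. The minimal sketch below assumes spikes arrive as (timestamp, neuron id) events in time order and that the conventional side consumes them in fixed time windows; the `SpikeEventBuffer` class and its methods are hypothetical, meant only to show why timestamps travel with the data.

```python
from collections import deque
from typing import Deque, List, Optional, Tuple

Event = Tuple[int, int]  # (timestamp in microseconds, neuron id)

class SpikeEventBuffer:
    """FIFO buffer that keeps spike timestamps so temporal order survives the handoff."""

    def __init__(self) -> None:
        self._events: Deque[Event] = deque()
        self._last_ts: Optional[int] = None  # latest timestamp ever pushed

    def push(self, timestamp_us: int, neuron_id: int) -> None:
        # Reject out-of-order events, even if earlier ones were already drained.
        if self._last_ts is not None and timestamp_us < self._last_ts:
            raise ValueError("events must arrive in timestamp order")
        self._events.append((timestamp_us, neuron_id))
        self._last_ts = timestamp_us

    def drain_window(self, window_end_us: int) -> List[Event]:
        """Remove and return all buffered events with timestamp < window_end_us."""
        out: List[Event] = []
        while self._events and self._events[0][0] < window_end_us:
            out.append(self._events.popleft())
        return out

buf = SpikeEventBuffer()
for t, n in [(5, 2), (120, 7), (980, 2), (1500, 3)]:
    buf.push(t, n)
print(buf.drain_window(1000))  # -> [(5, 2), (120, 7), (980, 2)]
print(buf.drain_window(2000))  # -> [(1500, 3)]
```

Draining by time window rather than by count lets the conventional side work in fixed-size batches while still knowing exactly when each spike occurred within the batch.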

Programming frameworks for integrated systems are evolving rapidly. Notable examples include the programming environment for IBM's TrueNorth Neurosynaptic System and the Nengo neural simulator, which provide abstraction layers that allow developers to target both neuromorphic and traditional components through unified programming models. These frameworks aim to handle much of the complexity of workload partitioning and data format conversion transparently.

Looking forward, the development of standardized interfaces and communication protocols specifically designed for neuromorphic-traditional integration represents a crucial research direction. Such standards would significantly reduce implementation complexity and accelerate adoption across diverse application domains requiring sophisticated temporal data processing.
