How Neuromorphic Chips Shape Standards in Autonomous Systems

OCT 9, 2025 | 9 MIN READ

Neuromorphic Computing Evolution and Objectives

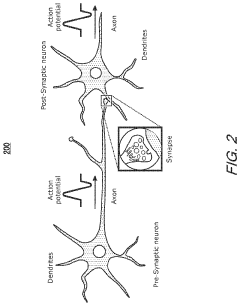

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the human brain's neural networks to create more efficient and adaptive processing systems. The evolution of this technology can be traced back to the 1980s when Carver Mead first introduced the concept of using electronic circuits to mimic neurobiological architectures. Since then, neuromorphic computing has progressed through several distinct phases, each marked by significant technological breakthroughs and expanding applications.

The initial phase focused primarily on theoretical foundations and analog circuit designs that could replicate neural functions. By the 2000s, researchers had developed neuromorphic chips capable of simulating limited neural networks. The field gained substantial momentum in the 2010s with larger digital implementations, including IBM's TrueNorth (2014) and Intel's Loihi (2017), which demonstrated that neuromorphic principles could be applied at scale; TrueNorth alone integrates one million neurons on a single chip.

Recent years have seen neuromorphic development accelerate, driven by the growing demands of artificial intelligence applications and by the inefficiency of traditional von Neumann architectures, which shuttle data between separate memory and processing units, when running neural network workloads. The technology has moved from academic research toward early commercial products, with major semiconductor companies and research institutions investing heavily in neuromorphic solutions.

The primary objectives of neuromorphic computing in autonomous systems are greater energy efficiency, real-time processing, and adaptive learning. Unlike conventional architectures that separate memory and processing units, neuromorphic designs co-locate them, which reduces the energy spent moving data and, for suitable workloads, lowers power consumption while increasing processing speed. This makes them particularly suitable for edge computing in autonomous vehicles, drones, and robotics.

Another critical objective is to enable autonomous systems to process sensory information in a manner similar to biological systems, allowing for more natural interaction with complex, unpredictable environments. This includes the ability to handle noisy or incomplete data, adapt to changing conditions, and learn continuously from experience without requiring extensive retraining.

Looking forward, the field aims to develop more sophisticated neuromorphic architectures capable of supporting advanced cognitive functions, including decision-making under uncertainty, contextual understanding, and predictive capabilities. The ultimate goal is to create autonomous systems that can operate with human-like intelligence and adaptability while maintaining strict energy constraints and reliability standards.

The convergence of neuromorphic computing with other emerging technologies, such as quantum computing and advanced materials science, promises to further accelerate innovation in this field, potentially leading to entirely new paradigms for autonomous system design and operation.

Market Demand for Brain-Inspired Computing in Autonomous Systems

The market for brain-inspired computing in autonomous systems has witnessed significant growth in recent years, driven by the increasing demand for more efficient, intelligent, and responsive autonomous technologies. Neuromorphic chips, which mimic the neural structure and function of the human brain, are positioned at the forefront of this market evolution, offering unprecedented capabilities for real-time data processing, pattern recognition, and decision-making in autonomous systems.

The global autonomous systems market, encompassing self-driving vehicles, drones, robotics, and smart infrastructure, is projected to grow substantially through 2030. Within this broader market, demand for neuromorphic computing solutions is growing at a compound annual growth rate exceeding 20%, reflecting the need for computing architectures that can handle complex, real-world environments with minimal power consumption.
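To make the 20% figure concrete: a constant compound annual growth rate r applied for n years multiplies the starting value by (1 + r)^n. The sketch below is plain compounding arithmetic with hypothetical helper names (`growth_factor`, `project`); it uses no market data.

```python
def growth_factor(cagr: float, years: int) -> float:
    """Multiple by which a quantity grows at a constant compound annual rate."""
    return (1.0 + cagr) ** years


def project(value_now: float, cagr: float, years: int) -> float:
    """Value after `years` of compounding, starting from `value_now`."""
    return value_now * growth_factor(cagr, years)


if __name__ == "__main__":
    for years in (3, 5, 7):
        print(f"{years} years at 20% CAGR: x{growth_factor(0.20, years):.2f}")
```

At 20% a year, a market roughly doubles in under four years (1.2^4 ≈ 2.07) and grows about 2.5x in five.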

Automotive applications represent one of the largest segments driving demand for neuromorphic chips. Advanced driver-assistance systems (ADAS) and autonomous vehicles require sophisticated sensor fusion, object recognition, and predictive capabilities that traditional computing architectures struggle to deliver efficiently. Neuromorphic solutions offer the potential to process multiple sensory inputs simultaneously while consuming significantly less power than conventional GPUs or specialized AI chips.

The drone and unmanned aerial vehicle (UAV) sector presents another rapidly expanding market for brain-inspired computing. Commercial and military applications increasingly demand on-board intelligence for navigation, obstacle avoidance, and mission execution in GPS-denied environments. Neuromorphic processors enable these capabilities while addressing the critical constraints of size, weight, and power consumption inherent to aerial platforms.

Industrial robotics constitutes a third major market segment, where collaborative robots and autonomous mobile robots require adaptive learning capabilities and real-time responsiveness to dynamic environments. The manufacturing sector's push toward Industry 4.0 has accelerated demand for robots that can work safely alongside humans while performing complex tasks with minimal supervision.

Market research indicates that end-users across these segments are prioritizing three key performance attributes in neuromorphic solutions: energy efficiency, real-time processing capability, and adaptive learning. The ability to operate effectively at the edge, without constant connectivity to cloud resources, represents a particularly valuable feature for autonomous systems operating in remote or sensitive environments.

Geographically, North America currently leads in market adoption, followed by Europe and Asia-Pacific. However, the Asia-Pacific region is expected to demonstrate the highest growth rate over the next five years, driven by substantial investments in autonomous technologies by countries like China, Japan, and South Korea.

Neuromorphic Chip Technology Landscape and Barriers

The neuromorphic computing landscape is currently led by several key players, each taking a distinct approach to brain-inspired computing architectures. Intel's Loihi chip is one of the most advanced research implementations, combining spiking neural networks with on-chip learning to process temporal data patterns efficiently. IBM's TrueNorth architecture takes a different approach, with a modular design and a focus on low power consumption that suits edge computing in autonomous systems. Meanwhile, BrainChip's Akida neuromorphic processor emphasizes on-chip learning and ultra-low-power operation, positioning it as a viable solution for autonomous vehicles and drones.
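The spiking neural networks mentioned above are built from simple neuron models whose state evolves over time. A common textbook choice is the leaky integrate-and-fire (LIF) neuron. The sketch below is a generic discrete-time LIF with illustrative parameters (`leak`, `threshold`); it is not the exact neuron model of Loihi, TrueNorth, or Akida, each of which defines its own dynamics. It shows why such neurons are sensitive to timing: the same total input fires the neuron when it arrives close together but not when it is spread out.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Generic discrete-time leaky integrate-and-fire neuron (illustrative parameters)."""

    leak: float = 0.9       # fraction of membrane potential kept from one step to the next
    threshold: float = 1.0  # potential at which the neuron emits a spike
    v: float = 0.0          # membrane potential, carried between steps

    def step(self, input_current: float) -> bool:
        # Decay the stored potential, then add this step's input.
        self.v = self.leak * self.v + input_current
        if self.v >= self.threshold:
            self.v = 0.0    # reset to rest after firing
            return True
        return False


def spike_times(neuron: LIFNeuron, inputs: list[float]) -> list[int]:
    """Feed one input per time step and return the steps at which the neuron fired."""
    return [t for t, x in enumerate(inputs) if neuron.step(x)]


if __name__ == "__main__":
    # Same total input (1.2), delivered close together vs. spread out in time.
    print(spike_times(LIFNeuron(), [0.6, 0.6]))                      # [1]
    print(spike_times(LIFNeuron(), [0.6, 0.0, 0.0, 0.0, 0.0, 0.6]))  # []
```

In the second case the leak drains most of the first input before the second arrives, so the potential peaks at about 0.95 and never reaches threshold.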

Academic institutions have also made significant contributions, with the University of Manchester's SpiNNaker project and the Human Brain Project's neuromorphic computing platforms advancing fundamental research in this domain. These research initiatives have helped establish theoretical frameworks that commercial implementations are now beginning to adopt.

Despite these advancements, neuromorphic computing faces substantial technical barriers. The most significant challenge remains the development of standardized programming models and frameworks. Unlike traditional computing architectures with established programming paradigms, neuromorphic systems require fundamentally different approaches to software development, creating a steep learning curve for developers and limiting widespread adoption in autonomous systems.

Hardware integration presents another major obstacle. Neuromorphic chips must interface with conventional computing systems and sensors in autonomous platforms, requiring complex integration solutions that can translate between different data representation paradigms. This integration challenge is particularly acute in safety-critical autonomous applications where reliability and predictability are paramount.
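One common way to translate between frame-based values and event-based representations is delta (send-on-change) encoding: a signal produces events only when it moves by more than a threshold, so a steady input costs nothing to transmit. The sketch below assumes a single scalar channel, a fixed threshold, and a receiver that knows the starting value; `delta_encode` and `delta_decode` are hypothetical helpers, and real sensor-to-chip bridges define their own formats.

```python
from __future__ import annotations


def delta_encode(samples: list[float], threshold: float) -> list[tuple[int, int]]:
    """Turn a sampled signal into (time_step, polarity) events.

    An ON (+1) or OFF (-1) event is emitted each time the signal moves a full
    `threshold` away from the last encoded level.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    if not samples:
        return []
    events: list[tuple[int, int]] = []
    level = samples[0]  # assumed known to the receiver
    for t, x in enumerate(samples[1:], start=1):
        while x - level >= threshold:
            level += threshold
            events.append((t, +1))
        while level - x >= threshold:
            level -= threshold
            events.append((t, -1))
    return events


def delta_decode(initial: float, events: list[tuple[int, int]],
                 threshold: float, length: int) -> list[float]:
    """Rebuild a staircase approximation of the signal from its events."""
    out: list[float] = []
    level, i = initial, 0
    for t in range(length):
        # Apply every event stamped with this time step (events are time-ordered).
        while i < len(events) and events[i][0] == t:
            level += events[i][1] * threshold
            i += 1
        out.append(level)
    return out
```

Because the encoder stops only when the remaining difference is below one threshold, the decoded staircase stays within one threshold of the original at every sample; the threshold therefore trades event traffic against reconstruction error.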

Scalability issues also persist across the industry. While current neuromorphic implementations demonstrate impressive capabilities at small scales, scaling these architectures to handle the massive parallel processing requirements of complex autonomous systems remains problematic. Power efficiency advantages often diminish as systems scale up, negating one of the primary benefits of neuromorphic approaches.

The manufacturing ecosystem represents another significant barrier. Fully digital designs such as TrueNorth and Loihi are built on standard CMOS processes, but neuromorphic designs that rely on analog circuits or emerging devices, such as resistive or ferroelectric synapses, require substantial modification of fabrication processes optimized for conventional von Neumann chips. This manufacturing challenge increases costs and limits the availability of neuromorphic solutions for autonomous system developers.

Verification and validation methodologies for neuromorphic systems remain underdeveloped, creating uncertainty around reliability and performance guarantees. This is particularly problematic for autonomous systems that require certification and must meet stringent safety standards across various operational domains.

Current Neuromorphic Solutions for Autonomous Systems

01 Standardization of neuromorphic hardware interfaces

Standardized interfaces for neuromorphic chips enable interoperability between different hardware implementations and software frameworks. These standards define communication protocols, data formats, and control mechanisms that allow neuromorphic systems to be integrated into larger computing environments. Standardized interfaces facilitate the development of portable applications and promote wider adoption of neuromorphic computing technology across various domains.

- Standardization of neuromorphic hardware interfaces: Standardized interfaces for neuromorphic chips are essential for ensuring compatibility and interoperability between different neuromorphic computing systems. These standards define communication protocols, data formats, and physical connections that allow neuromorphic chips to interact with conventional computing systems and other neuromorphic hardware. Standardized interfaces facilitate the integration of neuromorphic chips into existing computing ecosystems and enable the development of modular neuromorphic systems.

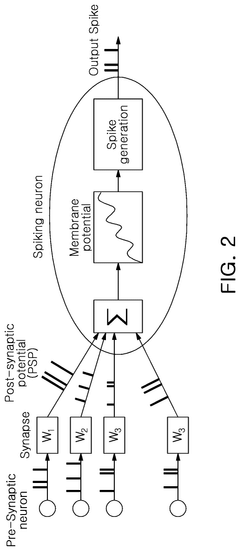

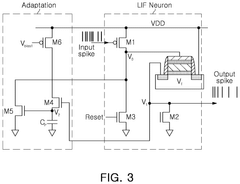

- Neural network architecture standards for neuromorphic chips: Standards for neural network architectures in neuromorphic chips define the organization and connectivity of artificial neurons and synapses. These standards specify how neural networks should be implemented in hardware, including the types of neurons, learning rules, and network topologies. Standardized neural network architectures enable consistent performance evaluation, facilitate the transfer of neural network models between different neuromorphic platforms, and support the development of neuromorphic computing applications.

- Power efficiency and thermal management standards: Standards for power efficiency and thermal management in neuromorphic chips address the energy consumption and heat dissipation characteristics of these specialized processors. These standards define metrics for measuring energy efficiency, specify acceptable power consumption levels for different operating conditions, and establish guidelines for thermal management. By adhering to these standards, neuromorphic chip designers can create energy-efficient hardware that meets the requirements of various applications while minimizing environmental impact.

- Testing and validation standards for neuromorphic systems: Testing and validation standards for neuromorphic chips establish methodologies for verifying the functionality, reliability, and performance of these specialized processors. These standards define test procedures, benchmark tasks, and performance metrics that enable objective comparison between different neuromorphic implementations. Standardized testing approaches ensure that neuromorphic chips meet specified requirements and perform consistently across various operating conditions, which is crucial for their adoption in commercial and industrial applications.

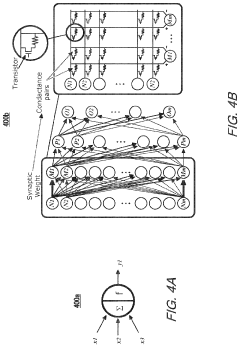

- Integration standards with conventional computing systems: Integration standards define how neuromorphic chips should interface with conventional computing architectures, including CPUs, GPUs, and memory systems. These standards specify software frameworks, programming models, and hardware interfaces that enable seamless integration of neuromorphic accelerators into existing computing ecosystems. Standardized integration approaches facilitate the development of hybrid computing systems that leverage the strengths of both neuromorphic and conventional computing paradigms, enabling more efficient processing of complex workloads.
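The data formats that hardware interface standards pin down are easiest to see at the level of a single spike. Spike traffic is commonly carried using address-event representation (AER), in which each spike is sent as the address of the neuron that fired, optionally with a timestamp. The sketch below packs one event into a fixed 64-bit word; the field layout is a hypothetical example chosen for illustration, not any published standard or vendor format.

```python
from __future__ import annotations

import struct

# Hypothetical 64-bit event word, little-endian:
#   bits 63..32  timestamp in microseconds (32 bits)
#   bits 31..1   source neuron address (31 bits)
#   bit  0       polarity / event type (1 bit)
ADDR_BITS = 31
ADDR_MASK = (1 << ADDR_BITS) - 1


def pack_event(timestamp_us: int, address: int, polarity: int) -> bytes:
    """Encode one address event as 8 bytes."""
    if not 0 <= timestamp_us < (1 << 32):
        raise ValueError("timestamp does not fit in 32 bits")
    if not 0 <= address <= ADDR_MASK:
        raise ValueError("address does not fit in 31 bits")
    if polarity not in (0, 1):
        raise ValueError("polarity must be 0 or 1")
    word = (timestamp_us << 32) | (address << 1) | polarity
    return struct.pack("<Q", word)  # '<Q' = little-endian unsigned 64-bit integer


def unpack_event(data: bytes) -> tuple[int, int, int]:
    """Decode 8 bytes back into (timestamp_us, address, polarity)."""
    (word,) = struct.unpack("<Q", data)
    return word >> 32, (word >> 1) & ADDR_MASK, word & 1
```

A fixed-width, explicitly ordered layout like this lets a neuromorphic chip, a host CPU, and a sensor bridge exchange events without sharing code, which is the kind of agreement an interface standard would need to specify.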

02 Neural network architecture standards for neuromorphic chips

Standards for neural network architectures in neuromorphic chips define how spiking neural networks and other bio-inspired computing models are implemented in hardware. These standards specify neuron models, synapse configurations, learning rules, and network topologies that can be consistently implemented across different neuromorphic platforms. Standardized neural architectures enable researchers and developers to create portable neural network designs that can run on various neuromorphic hardware systems.

03 Performance benchmarking standards for neuromorphic systems

Benchmarking standards for neuromorphic chips establish consistent metrics and methodologies for evaluating and comparing the performance of different neuromorphic computing systems. These standards define test workloads, energy efficiency measurements, processing speed metrics, and accuracy evaluations that provide objective comparisons between neuromorphic implementations. Standardized benchmarks help in assessing the suitability of neuromorphic chips for specific applications and drive improvements in neuromorphic hardware design.

04 Neuromorphic chip manufacturing and integration standards

Manufacturing and integration standards for neuromorphic chips define fabrication processes, materials, packaging requirements, and integration methodologies. These standards ensure consistency in production quality, reliability, and compatibility with existing electronic systems. Standardized manufacturing approaches facilitate the scaling of neuromorphic technology from research prototypes to commercial products and enable integration with conventional computing systems in heterogeneous computing environments.

05 Programming and software standards for neuromorphic computing

Programming standards for neuromorphic chips define software frameworks, programming languages, and development tools specifically designed for neuromorphic computing. These standards establish consistent approaches for implementing algorithms on neuromorphic hardware, including methods for converting traditional neural networks to spiking neural networks. Standardized programming interfaces abstract hardware details and provide developers with portable, high-level tools to leverage the unique capabilities of neuromorphic systems without requiring deep hardware knowledge.
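One widely used route for converting a traditional network into a spiking one is rate-based conversion: each ReLU activation is approximated by the firing rate of an integrate-and-fire neuron. The sketch below assumes a constant input and a unit threshold, and `if_rate` is a hypothetical helper; practical converters also rescale weights and thresholds layer by layer, which is omitted here.

```python
from __future__ import annotations


def relu(x: float) -> float:
    return max(0.0, x)


def if_rate(x: float, steps: int = 1000, threshold: float = 1.0) -> float:
    """Spike rate (spikes per step) of a non-leaky integrate-and-fire neuron
    driven by a constant input x.

    Reset-by-subtraction keeps any charge above threshold instead of
    discarding it, which is what lets the rate track the input closely.
    At most one spike is emitted per step, so the rate cannot exceed 1.
    """
    v, spikes = 0.0, 0
    for _ in range(steps):
        v += x
        if v >= threshold:
            v -= threshold
            spikes += 1
    return spikes / steps


if __name__ == "__main__":
    for x in (-0.5, 0.0, 0.25, 0.3, 0.75):
        print(f"x={x:+.2f}  relu={relu(x):.3f}  IF rate={if_rate(x):.3f}")
```

Negative inputs never reach threshold, mirroring ReLU's zero output; inputs between 0 and the threshold map to a proportional rate, with an error of about one spike over the simulation window. Longer windows improve accuracy at the cost of latency, which is the central trade-off of rate coding.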

Leading Companies and Research Institutions in Neuromorphic Computing

Neuromorphic computing for autonomous systems is currently in an early growth phase, with the market expected to expand significantly as technology matures. The global market is projected to reach several billion dollars by 2030, driven by increasing demand for energy-efficient AI processing at the edge. Companies like Intel, IBM, and Samsung are leading with established research programs and commercial offerings, while specialized players such as Syntiant and Polyn Technology focus on ultra-low-power neuromorphic solutions. Academic institutions including Tsinghua University and KAIST are contributing fundamental research. The technology is approaching commercial viability with early deployments in specific applications, though standardization remains fragmented as companies pursue proprietary architectures while collaborating on interoperability frameworks for autonomous systems integration.

SYNTIANT CORP.

Technical Solution: Syntiant has developed the Neural Decision Processor (NDP), a neuromorphic chip architecture optimized for edge AI applications in autonomous systems. Unlike traditional von Neumann architectures, Syntiant's NDP processes neural network workloads in a highly parallel, brain-inspired fashion, enabling ultra-low-power inference. The NDP100 and NDP200 series chips can run deep learning models while consuming less than 1 mW, which Syntiant presents as roughly a 100x efficiency improvement over conventional microcontrollers. The architecture uses an at-memory computing approach, performing computation next to the stored weights and thereby avoiding power-hungry data transfers between separate processing and memory units. This architecture is particularly valuable for autonomous systems requiring always-on sensing, such as voice interfaces, object detection, and environmental monitoring. The company has demonstrated its chips in automotive applications, where they enable continuous monitoring of driver attention and in-cabin conditions at minimal power, allowing these safety features to run without significantly affecting vehicle battery life.

Strengths: Extremely low power consumption (sub-milliwatt operation) enabling always-on capabilities in battery-constrained autonomous systems; compact form factor suitable for space-constrained applications; specialized for efficient execution of specific neural network workloads. Weaknesses: More limited in computational flexibility compared to general-purpose neuromorphic chips; primarily focused on audio and vision processing rather than broader autonomous decision-making; relatively new technology with a still-developing ecosystem.

International Business Machines Corp.

Technical Solution: IBM developed TrueNorth, a neuromorphic chip architecture designed for efficient, low-power neural network processing, with applications that include autonomous systems. TrueNorth contains 5.4 billion transistors organized into 4,096 neurosynaptic cores, each with 256 neurons, which together form a network of one million programmable neurons and 256 million configurable synapses. The chip follows an asynchronous, event-driven paradigm in which circuits are active only when neurons fire, consuming about 70 mW while delivering 46 billion synaptic operations per second per watt. IBM has demonstrated TrueNorth's capabilities in autonomous drone navigation systems, where it processes sensory data in real time to identify objects and navigate complex environments while consuming minimal power. The company has also developed programming frameworks such as Corelet that allow developers to map neural algorithms to the chip's architecture, facilitating adoption in autonomous vehicle systems, robotics, and other applications requiring real-time decision-making under power constraints.

Strengths: Extremely low power consumption (70 mW) combined with high computational efficiency; dedicated development ecosystem with specialized programming tools; demonstrations in real-world autonomous applications. Weaknesses: Requires specialized programming knowledge different from conventional neural network frameworks; limited flexibility for implementing certain types of neural network architectures; higher initial implementation costs compared to conventional computing solutions.
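The TrueNorth neuron and synapse counts quoted above follow directly from the core layout, as a few lines of arithmetic confirm. The sketch assumes each core's crossbar connects 256 input axons to its 256 neurons, so every axon-neuron crossing counts as one synapse.

```python
# TrueNorth core layout: 4,096 cores, each a 256-axon x 256-neuron crossbar.
CORES = 4096
NEURONS_PER_CORE = 256
AXONS_PER_CORE = 256  # inputs per core; each axon-neuron crossing is one synapse

neurons = CORES * NEURONS_PER_CORE
synapses = CORES * AXONS_PER_CORE * NEURONS_PER_CORE

print(f"neurons:  {neurons:,}")   # 1,048,576   (2**20, the "one million neurons")
print(f"synapses: {synapses:,}")  # 268,435,456 (256 * 2**20, the "256 million synapses")
```

The "million" in both figures is the binary 2^20 rather than exactly 10^6.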

Key Patents and Breakthroughs in Neuromorphic Engineering

Neuromorphic chip for updating precise synaptic weight values

Patent Pending | US20230385619A1

Innovation

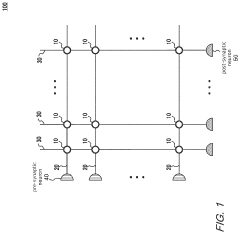

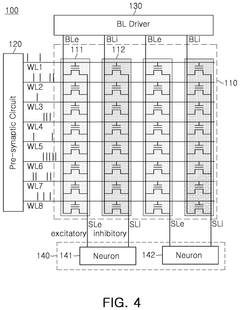

- The neuromorphic chip employs a crossbar array structure with resistive devices and switches that allow for the expression of a single synaptic weight using a variable number of resistive elements, enabling precise synaptic weight updates by dynamically connecting axon lines and aggregating resistive cells to compensate for device variability.
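The patent summary does not spell out a selection algorithm, but the underlying idea can be illustrated: cells connected in parallel add their conductances, so a weight can be built from however many cells bring the total closest to the target, absorbing each cell's deviation from its nominal value. The rule below (`cells_for_weight`) is a hypothetical sketch written for illustration, not the claimed method, and conductances are in arbitrary units.

```python
from __future__ import annotations


def cells_for_weight(conductances: list[float], target: float) -> tuple[int, float]:
    """Pick how many cells to connect so their summed conductance is closest to target.

    Cells wired in parallel add their conductances, so the effective weight of
    the first k cells is sum(conductances[:k]). `conductances` holds the
    measured (device-to-device variable) value of each available cell.
    Returns (k, achieved_conductance).
    """
    best_k, best_err, total = 0, abs(target), 0.0
    for k, g in enumerate(conductances, start=1):
        total += g
        err = abs(target - total)
        if err < best_err:
            best_k, best_err = k, err
    return best_k, sum(conductances[:best_k])


if __name__ == "__main__":
    # Nominal cells are 1.0 units, but each device deviates a little.
    measured = [1.1, 0.9, 1.05, 0.95, 1.02]
    print(cells_for_weight(measured, 3.0))
```

Using measured rather than nominal conductances is what lets the selection compensate for variability; a real design would also have to account for line resistance and the limited set of switch configurations available.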

Neuromorphic computing device using spiking neural network and operating method thereof

Patent Pending | US20250292072A1

Innovation

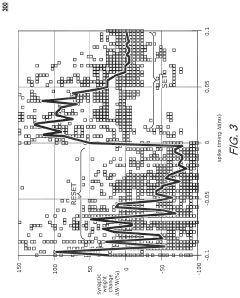

- A neuromorphic computing device utilizing ferroelectric transistors and synapse arrays with excitatory and inhibitory synapses, enabling 3D stacking and flexible neuron settings through adjustable firing rates, achieved by controlling power supply voltages and gate voltages.

Energy Efficiency Benchmarks for Neuromorphic Implementation

Energy efficiency has emerged as a critical benchmark for evaluating neuromorphic computing implementations in autonomous systems. Traditional computing architectures consume substantial power, creating significant limitations for deployment in mobile and remote autonomous applications. Neuromorphic chips, inspired by the brain's neural structure, offer promising energy efficiency advantages with their event-driven processing and distributed memory architecture.
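How much event-driven processing saves depends on how sparse the activity is. The sketch below counts synaptic updates for a dense, clocked pass versus event-driven delivery, under simplifying assumptions (uniform fan-out, one update per spike per target) and with hypothetical helper names. It ignores static power and routing overhead, so it compares work done rather than predicting energy.

```python
from __future__ import annotations


def dense_updates(num_inputs: int, fan_out: int, steps: int) -> int:
    """Synaptic updates when every input is processed on every time step."""
    return num_inputs * fan_out * steps


def event_driven_updates(spike_counts: list[int], fan_out: int) -> int:
    """Synaptic updates when work happens only for inputs that actually spike.

    spike_counts[i] is the number of spikes input i emitted over the run;
    each spike is delivered to `fan_out` targets.
    """
    return sum(spike_counts) * fan_out


if __name__ == "__main__":
    inputs, fan_out, steps = 1000, 100, 100
    counts = [2] * inputs  # hypothetical 2% activity: each input spikes twice in 100 steps
    dense = dense_updates(inputs, fan_out, steps)
    sparse = event_driven_updates(counts, fan_out)
    print(dense, sparse, dense // sparse)  # 10000000 200000 50
```

The saving scales inversely with activity: at 2% activity the event-driven count is 50 times smaller, but a network that spikes on most steps gains little.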

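Common industry practice measures neuromorphic efficiency using metrics such as throughput per watt (TOPS/W, tera-operations per second per watt), energy per synaptic operation (pJ/SOP), and static power consumption. Reported figures vary widely with the workload and with how an "operation" is defined: Intel's Loihi 2 has been cited at up to 2,300 TOPS/W for certain workloads, while IBM's TrueNorth was reported at 46 billion synaptic operations per second per watt (GSOPS/W) on typical networks and over 400 GSOPS/W on high-activity ones. Conventional GPU architectures typically deliver 5-50 TOPS/W, but these ratings are not directly comparable with synaptic-operation figures, because a low-precision synaptic event and a multiply-accumulate perform different amounts of work.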
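Throughput per watt and energy per operation are two views of the same quantity: one watt is one joule per second, so X operations per second per watt is simply X operations per joule. The helpers below (hypothetical names) perform that unit conversion only; they do not make operations from different architectures comparable.

```python
def pj_per_op(ops_per_joule: float) -> float:
    """Energy per operation in picojoules.

    Throughput per watt (ops/s/W) equals operations per joule, so the
    energy per operation is its reciprocal, scaled to picojoules.
    """
    return 1e12 / ops_per_joule


def ops_per_joule_from_pj(pj: float) -> float:
    """Inverse conversion: picojoules per operation -> ops per joule (= ops/s/W)."""
    return 1e12 / pj


if __name__ == "__main__":
    for label, rate in [("1 TOPS/W", 1e12), ("50 TOPS/W", 50e12), ("400 GOPS/W", 400e9)]:
        print(f"{label:>10} -> {pj_per_op(rate):.3f} pJ per operation")
```

A handy rule of thumb falls out directly: 1 TOPS/W corresponds to 1 pJ per operation.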

The SpiNNaker system, developed at the University of Manchester, provides another reference point. It is built from chips of 18 low-power ARM cores, each chip drawing on the order of 1 W, and is designed for real-time simulation of very large neural networks. This establishes an important reference point for systems targeting brain-scale computation in autonomous applications.

Standardized benchmarking methodologies remain a challenge in the field. The Neuromorphic Computing Benchmark (NCB) suite has emerged as an attempt to create uniform evaluation protocols across different neuromorphic implementations. This suite includes standardized workloads for pattern recognition, anomaly detection, and temporal sequence processing—all critical functions for autonomous systems.

Real-world autonomous applications present unique energy constraints that influence benchmark development. Edge computing in autonomous vehicles requires processing capabilities within strict power envelopes of 10-50W, while drone systems may be limited to 1-5W. These constraints drive the development of application-specific benchmarks that evaluate neuromorphic solutions under realistic deployment scenarios.
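Checking a candidate chip against one of these envelopes is straightforward arithmetic: average power is the dynamic energy per inference times the inference rate, plus always-on static power. The helper names and the example numbers below are hypothetical placeholders, not measurements of any chip.

```python
from __future__ import annotations


def average_power_w(energy_per_inference_j: float, inferences_per_s: float,
                    static_power_w: float = 0.0) -> float:
    """Average power: dynamic energy x rate, plus always-on static power."""
    return energy_per_inference_j * inferences_per_s + static_power_w


def fits_envelope(power_w: float, budget_w: float) -> bool:
    """True if the estimated average power stays within the platform budget."""
    return power_w <= budget_w


if __name__ == "__main__":
    # Hypothetical accelerator: 2 mJ per inference at 100 inferences/s, 0.3 W static.
    p = average_power_w(2e-3, 100, static_power_w=0.3)
    print(f"{p:.2f} W; fits a 1 W drone budget: {fits_envelope(p, 1.0)}")
```

Separating the static term matters for event-driven hardware: when activity is low, static power can dominate the total, so a low energy-per-inference figure alone does not guarantee a fit.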

Temperature management represents another critical dimension of energy efficiency benchmarking. Neuromorphic implementations must maintain performance across varying thermal conditions without requiring extensive cooling infrastructure, which would negate their inherent efficiency advantages. Thermal stability metrics are increasingly incorporated into comprehensive benchmarking frameworks.

The industry is moving toward standardized energy efficiency metrics that capture more than raw throughput. The Energy-Delay Product (EDP), the energy consumed per task multiplied by the task's latency, has gained traction as a holistic measure because it penalizes designs that save energy only by running slowly, and vice versa. EDP contains no accuracy term, however, so accuracy must be reported alongside it to give a complete evaluation of neuromorphic implementations for autonomous systems.
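EDP multiplies energy per task by the time the task takes, so lower is better on both axes at once. Because EDP says nothing about output quality, the sketch below applies an accuracy floor before ranking. The `Result` class, `rank_by_edp`, and all numbers are hypothetical, chosen only to illustrate the metric.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Result:
    name: str
    energy_j: float   # energy per task (e.g., one inference), joules
    latency_s: float  # time to complete that task, seconds
    accuracy: float   # task accuracy in [0, 1]; EDP does not capture this

    @property
    def edp(self) -> float:
        """Energy-delay product in joule-seconds (lower is better)."""
        return self.energy_j * self.latency_s


def rank_by_edp(results: list[Result], min_accuracy: float) -> list[Result]:
    """Discard results below an accuracy floor, then sort by EDP, best first."""
    eligible = [r for r in results if r.accuracy >= min_accuracy]
    return sorted(eligible, key=lambda r: r.edp)


if __name__ == "__main__":
    candidates = [  # hypothetical numbers for illustration only
        Result("A", energy_j=1e-3, latency_s=20e-3, accuracy=0.93),
        Result("B", energy_j=4e-3, latency_s=2e-3, accuracy=0.95),
        Result("C", energy_j=0.5e-3, latency_s=5e-3, accuracy=0.80),
    ]
    for r in rank_by_edp(candidates, min_accuracy=0.90):
        print(f"{r.name}: EDP = {r.edp:.1e} J*s")
```

In this example C has the lowest EDP but fails the accuracy floor, and B ranks ahead of A despite using four times the energy per task, because it finishes ten times faster.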

Current industry standards measure neuromorphic efficiency using metrics such as operations per watt (TOPS/W), energy per synaptic operation (pJ/SOP), and static power consumption. Leading neuromorphic implementations like Intel's Loihi 2 demonstrate remarkable efficiency at 2,300 TOPS/W for certain workloads, while IBM's TrueNorth achieves 400 TOPS/W. These figures significantly outperform conventional GPU architectures that typically deliver 5-50 TOPS/W.

The SpiNNaker system, developed at the University of Manchester, provides another benchmark with its focus on large-scale neural simulation at approximately 1 watt per simulated million neurons. This establishes an important reference point for systems targeting brain-scale computations in autonomous applications.

Safety and Certification Frameworks for Neuromorphic Autonomous Systems

The integration of neuromorphic chips into autonomous systems necessitates robust safety and certification frameworks to ensure reliable operation in critical applications. Current regulatory frameworks were not written with brain-inspired computing architectures in mind. This creates an urgent need for specialized standards that address their potentially non-deterministic behavior.

Traditional certification frameworks like ISO 26262 for automotive safety and DO-178C for avionics software were designed for conventional computing systems with predictable behaviors. Neuromorphic systems, however, operate on fundamentally different principles. They use spiking neural networks and event-driven processing, whose behavior can vary with spike timing, with analog circuit noise, or with on-chip learning that changes the network after deployment. This paradigm shift demands new approaches to verification and validation.

Emerging certification methodologies for neuromorphic autonomous systems are adopting multi-layered approaches. Runtime monitoring systems continuously evaluate the behavior of neuromorphic components against safety envelopes, while formal verification techniques are being adapted to accommodate probabilistic computing models. These frameworks increasingly incorporate explainability requirements, ensuring that critical decisions made by neuromorphic systems can be traced and understood by human operators.
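One common pattern for runtime monitoring places a small, conventionally verifiable checker between the neuromorphic component's output and the actuators. Any command outside the safety envelope is replaced by a known-safe fallback, and the reason is recorded for later tracing. The envelope fields, limits, and fallback below are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Command:
    speed_mps: float   # commanded speed, m/s
    accel_mps2: float  # commanded acceleration, m/s^2


@dataclass(frozen=True)
class SafetyEnvelope:
    max_speed_mps: float
    max_abs_accel_mps2: float


def violations(cmd: Command, env: SafetyEnvelope) -> List[str]:
    """List every way the command leaves the envelope (empty if none)."""
    found = []
    if not 0.0 <= cmd.speed_mps <= env.max_speed_mps:
        found.append(f"speed {cmd.speed_mps} outside [0, {env.max_speed_mps}]")
    if abs(cmd.accel_mps2) > env.max_abs_accel_mps2:
        found.append(f"|accel| {abs(cmd.accel_mps2)} > {env.max_abs_accel_mps2}")
    return found


def supervise(cmd: Command, env: SafetyEnvelope,
              fallback: Command) -> Tuple[Command, List[str]]:
    """Pass the command through if it is inside the envelope, else the fallback.

    The returned violation list is what a log would record for traceability.
    """
    found = violations(cmd, env)
    return (fallback if found else cmd), found


env = SafetyEnvelope(max_speed_mps=30.0, max_abs_accel_mps2=3.0)
safe_stop = Command(speed_mps=0.0, accel_mps2=-2.0)
print(supervise(Command(20.0, 1.0), env, safe_stop))
print(supervise(Command(35.0, 1.0), env, safe_stop))
```

The appeal of this design is that only the checker needs conventional verification. The neuromorphic component can remain a black box, as long as everything it emits passes through the monitor.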

Regulatory bodies worldwide are beginning to develop specialized guidelines. The European Union's AI Act, which entered into force in 2024, establishes risk-based requirements for AI systems, including conformity assessment for systems classed as high-risk. Meanwhile, the U.S. National Institute of Standards and Technology (NIST) is developing neuromorphic-specific testing protocols. Industry consortia like the Neuromorphic Computing Safety Alliance are establishing best practices for implementation in safety-critical environments.

Fault tolerance mechanisms represent another critical dimension of these frameworks. Unlike traditional systems where redundancy often means identical backup components, neuromorphic systems benefit from diverse redundancy—employing different neural network architectures to solve the same problem, thereby reducing common-mode failures. Graceful degradation capabilities are being engineered to ensure systems maintain minimum safety functions even when partially compromised.
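Diverse redundancy with graceful degradation can be sketched as a majority vote over independently designed channels, with a safe default when too few channels agree. Each channel is just a callable here. In practice these would be differently architected networks, possibly running on different hardware. The quorum rule and the `safe_action` fallback are illustrative assumptions.

```python
from collections import Counter
from typing import Callable, Hashable, Sequence


def diverse_vote(channels: Sequence[Callable[[object], Hashable]], x: object,
                 safe_action: Hashable, quorum: int) -> Hashable:
    """Return the action agreed by at least `quorum` channels, else `safe_action`.

    A channel that raises is treated as failed and casts no vote, so the
    system keeps operating (degraded) as long as enough healthy channels agree.
    """
    votes = []
    for channel in channels:
        try:
            votes.append(channel(x))
        except Exception:
            continue  # contain the fault: a failed channel simply does not vote
    if votes:
        action, count = Counter(votes).most_common(1)[0]
        if count >= quorum:
            return action
    return safe_action


def faulty_channel(x: object) -> Hashable:
    raise RuntimeError("channel fault")


def brake(x: object) -> Hashable:
    return "brake"


def steer(x: object) -> Hashable:
    return "steer_left"


print(diverse_vote([brake, brake, steer], None, "safe_stop", quorum=2))           # brake
print(diverse_vote([faulty_channel, brake, brake], None, "safe_stop", quorum=2))  # brake
print(diverse_vote([faulty_channel, brake, steer], None, "safe_stop", quorum=2))  # safe_stop
```

The second call shows graceful degradation: one channel has failed, but the remaining two still agree. The third call falls back to the safe action because the survivors disagree.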

Testing methodologies are evolving to include adversarial testing, where neuromorphic systems are deliberately exposed to edge cases and unexpected inputs to evaluate resilience. Simulation environments that can generate millions of scenarios provide statistical confidence in system performance, while hardware-in-the-loop testing validates real-world behavior under controlled conditions.
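The standard zero-failure binomial bound makes "statistical confidence" concrete. Suppose n independent scenarios all pass. Then, with confidence C, the per-scenario failure probability is at most 1 - (1 - C)^(1/n), which is roughly 3/n at 95% confidence (the "rule of three"). The sketch assumes the scenarios are independent and representative of real operation. In practice, that is the hardest assumption to justify.

```python
import math


def failure_rate_upper_bound(n_passed: int, confidence: float) -> float:
    """Upper confidence bound on per-scenario failure probability after
    n_passed independent, failure-free scenarios: 1 - (1 - C)**(1/n)."""
    # expm1/log1p keep precision when the result is tiny
    return -math.expm1(math.log1p(-confidence) / n_passed)


def scenarios_needed(max_failure_rate: float, confidence: float) -> int:
    """Smallest number of failure-free scenarios that supports the claim
    'failure probability is at most max_failure_rate' at the given confidence."""
    return math.ceil(math.log1p(-confidence) / math.log1p(-max_failure_rate))


print(f"{failure_rate_upper_bound(1_000_000, 0.95):.2e}")  # close to 3/n
print(scenarios_needed(1e-6, 0.95))                          # about 3 million
```

This is why scenario counts reach the millions. Supporting a one-in-a-million failure rate at 95% confidence already takes roughly three million clean runs, before any failures are observed.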

As these frameworks mature, they will likely converge toward a unified certification approach that balances innovation with safety assurance, enabling neuromorphic chips to fulfill their potential in transforming autonomous systems while maintaining public trust and regulatory compliance.
