Neuromorphic Chips in IoT Network Infrastructure

OCT 9, 2025 · 9 MIN READ

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the human brain's neural networks to create more efficient and adaptive processing systems. This approach has evolved significantly since the 1980s when Carver Mead first introduced the concept, aiming to mimic the brain's parallel processing capabilities and energy efficiency. The evolution of neuromorphic computing has been marked by progressive advancements in hardware design, neural network algorithms, and integration capabilities.

The early development phase focused primarily on theoretical frameworks and basic circuit designs that could emulate neural functions. By the 2010s, research programs had produced more sophisticated neuromorphic systems, such as IBM's TrueNorth and the neuromorphic computing platforms of the European Human Brain Project (SpiNNaker and BrainScaleS). These initiatives demonstrated that chips could process sensory data with significantly lower power consumption than traditional computing architectures.

Recent years have witnessed accelerated progress in neuromorphic chip development, driven by breakthroughs in materials science, nanotechnology, and artificial intelligence algorithms. The integration of memristors, phase-change memory, and other novel components has enabled more accurate simulation of synaptic behaviors and neural plasticity. These advancements have pushed neuromorphic computing beyond academic research into practical applications, particularly in edge computing scenarios.

In the context of IoT network infrastructure, neuromorphic computing aims to address several critical challenges. The primary objective is to enable real-time processing of massive sensor data streams while maintaining minimal power consumption—a crucial requirement for distributed IoT networks. By processing information in a brain-like manner, these chips can potentially reduce the bandwidth needed for cloud communication by performing complex pattern recognition and decision-making tasks locally.

Another key objective is to enhance adaptability and learning capabilities within IoT systems. Traditional computing architectures require explicit programming for each scenario, whereas neuromorphic systems can learn from environmental inputs and adapt their processing accordingly. This capability is particularly valuable for IoT deployments in dynamic environments where conditions frequently change.

The long-term vision for neuromorphic chips in IoT infrastructure encompasses creating self-organizing networks that can autonomously optimize data flows, predict maintenance needs, and identify anomalies without constant human oversight. This evolution aims to transform IoT from a collection of connected devices into truly intelligent systems capable of collective decision-making and autonomous operation, while maintaining strict energy constraints essential for widespread deployment.

IoT Market Demand for Brain-Inspired Computing

The Internet of Things (IoT) market is undergoing a shift in computational requirements that is creating substantial demand for neuromorphic computing solutions. Industry projections put the global IoT market at $1.5 trillion by 2027, with over 75 billion connected devices generating unprecedented volumes of data. Traditional computing architectures are increasingly strained by this data deluge at the network edge, creating a technology gap that neuromorphic chips are well positioned to help fill.

Energy efficiency represents the primary market driver for brain-inspired computing in IoT infrastructure. Conventional processors consume substantial power when handling continuous sensor data streams, whereas neuromorphic chips have been reported to cut power consumption by 100-1000x on suitable workloads. This efficiency translates into longer battery life for remote IoT sensors and lower operational costs for large-scale deployments.
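
As a rough illustration of how a compute-power saving carries over to battery life, the sketch below estimates lifetime as usable battery energy divided by average power draw. All figures are hypothetical assumptions chosen for the arithmetic (a 2,400 mWh battery, a 1 mW baseline for sensing, radio, and leakage, and a 10 mW compute load cut by 100x); they are not measurements of any real chip.

```python
# Hypothetical figures chosen for the arithmetic; not measurements of any real chip.
BATTERY_MWH = 2400.0   # usable battery energy in milliwatt-hours (assumed)
BASELINE_MW = 1.0      # average sensing + radio + leakage power in mW (assumed)


def lifetime_days(compute_mw: float,
                  baseline_mw: float = BASELINE_MW,
                  battery_mwh: float = BATTERY_MWH) -> float:
    """Estimate battery lifetime in days as usable energy / average power."""
    total_mw = compute_mw + baseline_mw
    return battery_mwh / total_mw / 24.0


conventional = lifetime_days(compute_mw=10.0)        # assumed always-on conventional compute load
neuromorphic = lifetime_days(compute_mw=10.0 / 100)  # same task at 100x lower compute power

print(f"conventional: {conventional:.1f} days")
print(f"neuromorphic: {neuromorphic:.1f} days")
print(f"lifetime gain: {neuromorphic / conventional:.1f}x")
```

With these assumed numbers, the 100x compute saving yields roughly a 10x longer lifetime (about 9.1 versus 90.9 days), because once compute power falls, the fixed 1 mW baseline dominates the energy budget.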

Real-time processing capabilities constitute another significant market demand. IoT applications in industrial automation, autonomous vehicles, and smart infrastructure require low-latency decision-making without depending on cloud connectivity. Neuromorphic architectures excel at parallel processing and can analyze complex sensor data patterns with millisecond latencies, enabling time-sensitive applications that were impractical with traditional computing approaches.

The security and privacy landscape further amplifies market interest in neuromorphic solutions. With regulatory frameworks like GDPR and CCPA imposing stricter data protection requirements, edge-based processing that minimizes data transmission represents a compelling compliance strategy. Neuromorphic chips enable sophisticated local analytics while reducing vulnerable data transfers across networks.

Market research indicates particular demand concentration in industrial IoT, smart cities, and healthcare sectors. Industrial manufacturers seek neuromorphic solutions for predictive maintenance and quality control, with 67% of surveyed companies expressing interest in edge AI capabilities. Smart city initiatives prioritize neuromorphic computing for traffic management, environmental monitoring, and public safety applications requiring continuous sensor analysis with minimal infrastructure footprint.

Healthcare represents perhaps the most promising vertical market, with neuromorphic chips enabling wearable devices that can continuously monitor patient vital signs and detect anomalies without constant cloud connectivity. The market for AI-enhanced medical devices is projected to grow at 28% CAGR through 2028, with neuromorphic computing positioned as a key enabling technology.

Despite clear market demand signals, adoption barriers persist around standardization, integration with existing systems, and developer ecosystem maturity. Industry consortia are actively addressing these challenges through initiatives focused on common interfaces and programming frameworks to accelerate market penetration of neuromorphic solutions in IoT infrastructure.

Current Neuromorphic Technology Landscape and Barriers

The neuromorphic computing landscape is growing quickly, with major technology companies and research institutions investing heavily in the field. IBM's TrueNorth and Intel's Loihi are among the best-known neuromorphic research chips, demonstrating pattern recognition (and, in Loihi's case, on-chip learning) with significantly lower power consumption than traditional computing architectures. These chips employ spiking neural networks (SNNs) that more closely mimic biological neural systems, enabling efficient processing of the temporal data streams common in IoT environments.
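
To make the spiking model concrete, the sketch below simulates a generic leaky integrate-and-fire (LIF) neuron, a common SNN building block: the membrane potential leaks toward rest, integrates input current, and emits a spike and resets when it crosses a threshold. The parameter values and the simple Euler integration are illustrative assumptions; this is not the neuron model of TrueNorth, Loihi, or any other specific chip.

```python
from typing import List, Sequence


def simulate_lif(input_current: Sequence[float],
                 tau_ms: float = 20.0,
                 dt_ms: float = 1.0,
                 v_rest: float = 0.0,
                 v_threshold: float = 1.0,
                 v_reset: float = 0.0) -> List[int]:
    """Return the time steps at which a leaky integrate-and-fire neuron spikes.

    Uses forward-Euler integration of dv/dt = (-(v - v_rest) + I(t)) / tau.
    All parameters are dimensionless illustrative values.
    """
    v = v_rest
    spike_times = []
    for t, i_t in enumerate(input_current):
        # Leak toward rest while integrating the input current.
        v += dt_ms * (-(v - v_rest) + i_t) / tau_ms
        if v >= v_threshold:
            spike_times.append(t)  # emit a spike...
            v = v_reset            # ...and reset the membrane potential
    return spike_times


spikes_strong = simulate_lif([1.5] * 100)  # input drives v above threshold
spikes_weak = simulate_lif([0.5] * 100)    # v settles at 0.5, below threshold
print(spikes_strong)
print(spikes_weak)
```

Information is carried by spike timing and rate: a constant input of 1.5 makes the neuron fire periodically (every 22 steps with these parameters), while an input of 0.5 settles below threshold and produces no spikes, so downstream work happens only when there is activity.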

Despite these advancements, neuromorphic technology faces substantial barriers to widespread adoption in IoT network infrastructure. The primary challenge remains the scalability of neuromorphic systems, as current chips typically contain limited numbers of artificial neurons and synapses compared to biological brains. This constraint restricts their application in complex IoT networks that require processing massive amounts of heterogeneous data simultaneously.

Power efficiency, while improved over traditional architectures, still presents challenges for edge deployment in IoT networks. Power and thermal demands grow as neuromorphic systems are scaled up to many chips, limiting their utility in resource-constrained IoT environments where devices often run on battery power for extended periods.

The programming paradigm for neuromorphic systems represents another significant barrier. Traditional software development approaches do not translate directly to neuromorphic architectures, requiring specialized knowledge of neural network principles and spike-based computing. The lack of standardized programming frameworks and development tools impedes broader adoption among IoT developers and system integrators.
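
One example of this shift in programming model is input encoding: a conventional program passes a number directly, whereas an SNN usually needs that number expressed as spikes over time. The sketch below shows a simple deterministic rate code; the function name and the accumulator scheme are illustrative assumptions, and many SNN toolchains use stochastic (Poisson-style) rate coding or latency coding instead.

```python
from typing import List


def rate_encode(value: float, steps: int) -> List[int]:
    """Deterministically encode a value in [0, 1] as a spike train of length `steps`.

    An accumulator adds `value` every time step and emits a spike (1) whenever it
    reaches 1.0, so the spike count is proportional to the input magnitude.
    """
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be in [0, 1]")
    accumulator = 0.0
    train = []
    for _ in range(steps):
        accumulator += value
        if accumulator >= 1.0:
            train.append(1)
            accumulator -= 1.0  # keep the remainder so the average rate stays exact
        else:
            train.append(0)
    return train


print(rate_encode(0.25, 8))
```

Here `rate_encode(0.25, 8)` produces two spikes in eight steps, so the value is recovered as a spike rate rather than read from a register.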

Manufacturing complexity and cost present additional obstacles. Current fabrication processes for neuromorphic chips involve sophisticated techniques that have not yet reached economies of scale. This results in higher unit costs compared to conventional microprocessors, making widespread deployment in cost-sensitive IoT applications economically challenging.

Interoperability issues also hinder integration with existing IoT infrastructure. Most current IoT systems are built around conventional computing architectures, creating compatibility challenges when introducing neuromorphic components. The absence of standardized interfaces and protocols specifically designed for neuromorphic-IoT integration complicates system design and implementation.

Geographically, neuromorphic research and development remain concentrated in North America, Western Europe, and parts of East Asia, creating potential disparities in access to this technology. This concentration may limit global adoption and innovation, particularly in emerging markets where IoT applications could benefit significantly from neuromorphic computing's efficiency advantages.

Existing Neuromorphic Solutions for IoT Networks

01 Neuromorphic architecture design and implementation

Neuromorphic chips are designed to mimic the structure and functionality of the human brain, with architectures that incorporate neural networks, synaptic connections, and spike-based processing. These designs enable efficient parallel processing and learning capabilities similar to biological neural systems. The implementation includes specialized hardware components that can process information in ways similar to neurons and synapses, allowing for more efficient handling of complex cognitive tasks and pattern recognition.

- Neuromorphic architecture design and implementation: Neuromorphic chips are designed to mimic the structure and functionality of the human brain, using specialized architectures that integrate processing and memory. These designs incorporate neural networks with spiking neurons and synapses that can process information in parallel, enabling efficient pattern recognition and learning capabilities. The architecture typically includes arrays of artificial neurons connected by synaptic elements that can adapt their strength based on input patterns, similar to biological neural systems.

- Materials and fabrication techniques for neuromorphic devices: Advanced materials and fabrication methods are crucial for developing efficient neuromorphic chips. These include memristive materials, phase-change materials, and other novel semiconductors that can mimic synaptic behavior. Fabrication techniques involve integrating these materials with conventional CMOS processes to create hybrid systems that combine the benefits of traditional computing with brain-inspired architectures. These approaches enable the creation of devices with low power consumption, high density, and the ability to perform in-memory computing.

- Learning algorithms and training methods for neuromorphic systems: Specialized learning algorithms are developed for neuromorphic chips to enable efficient training and adaptation. These include spike-timing-dependent plasticity (STDP), reinforcement learning, and supervised learning approaches adapted for spiking neural networks. The algorithms are designed to work with the unique characteristics of neuromorphic hardware, such as event-driven processing and local learning rules. These methods allow neuromorphic systems to learn from data streams in real-time and adapt to changing environments without requiring extensive retraining.

- Energy efficiency and power optimization in neuromorphic computing: Neuromorphic chips are designed with a focus on energy efficiency, aiming to achieve brain-like computational capabilities while consuming minimal power. Techniques include sparse coding, event-driven processing, and low-power circuit designs that activate only when necessary. Power optimization strategies involve distributing computation across the chip to minimize data movement, implementing local memory structures, and utilizing asynchronous signaling. These approaches enable neuromorphic systems to perform complex cognitive tasks with orders of magnitude less energy than conventional computing architectures.

- Applications and integration of neuromorphic chips in various systems: Neuromorphic chips are being integrated into various applications including computer vision, speech recognition, autonomous vehicles, robotics, and IoT devices. These chips excel at tasks requiring pattern recognition, sensory processing, and decision-making in dynamic environments. Integration approaches include hybrid systems that combine neuromorphic processors with traditional computing elements, edge computing implementations that bring intelligence closer to sensors, and specialized interfaces that connect neuromorphic hardware with conventional digital systems. These integrations enable new capabilities in real-time processing of complex sensory data with minimal power requirements.
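
The spike-timing-dependent plasticity (STDP) rule named in the list above can be sketched in a few lines. This is the textbook pair-based form with exponential timing windows, using assumed parameter values; hardware implementations differ in detail (trace-based approximations, limited weight precision). The key property it illustrates is locality: the update for a synapse depends only on the spike times of the two neurons it connects.

```python
import math
from typing import Sequence


def stdp_delta(t_pre_ms: float, t_post_ms: float,
               a_plus: float = 0.01, a_minus: float = 0.012,
               tau_plus_ms: float = 20.0, tau_minus_ms: float = 20.0) -> float:
    """Pair-based STDP weight change for one presynaptic/postsynaptic spike pair."""
    dt = t_post_ms - t_pre_ms
    if dt > 0:   # pre fired before post (causal): potentiate
        return a_plus * math.exp(-dt / tau_plus_ms)
    if dt < 0:   # post fired before pre (anti-causal): depress
        return -a_minus * math.exp(dt / tau_minus_ms)
    return 0.0   # simultaneous spikes: no change in this sketch


def apply_stdp(weight: float, pre_spikes_ms: Sequence[float], post_spikes_ms: Sequence[float],
               w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Sum all pre/post pair updates (all-to-all pairing) and clip to [w_min, w_max]."""
    for t_pre in pre_spikes_ms:
        for t_post in post_spikes_ms:
            weight += stdp_delta(t_pre, t_post)
    return min(max(weight, w_min), w_max)


w_causal = apply_stdp(0.5, pre_spikes_ms=[10.0], post_spikes_ms=[15.0])
w_anticausal = apply_stdp(0.5, pre_spikes_ms=[15.0], post_spikes_ms=[10.0])
print(f"pre before post: {w_causal:.4f}")
print(f"post before pre: {w_anticausal:.4f}")
```

Causal pairings (input spike shortly before output spike) strengthen a synapse, while anti-causal pairings weaken it, which lets a network adapt to streaming input without a separate training pass.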

02 Memory and processing integration in neuromorphic systems

Neuromorphic chips integrate memory and processing units to overcome the traditional von Neumann bottleneck. This integration allows for more efficient data handling by reducing the physical distance between memory storage and computation elements. These systems often incorporate resistive memory technologies, such as memristors, which can simultaneously store information and perform computations, enabling more energy-efficient and faster processing for artificial intelligence applications.

03 Energy efficiency and power optimization techniques

Neuromorphic chips are designed with a focus on energy efficiency, employing various techniques to minimize power consumption while maintaining computational performance. These include low-power circuit designs, spike-based computation that activates only when necessary, and specialized signal processing methods that reduce energy requirements. The power optimization approaches enable these chips to perform complex AI tasks with significantly lower energy consumption compared to traditional computing architectures.

04 Learning and adaptation mechanisms in neuromorphic hardware

Neuromorphic chips incorporate various learning mechanisms that allow them to adapt and improve performance over time. These include spike-timing-dependent plasticity (STDP), reinforcement learning algorithms, and other biologically-inspired learning rules implemented directly in hardware. The ability to learn and adapt enables these chips to process complex patterns, recognize objects, and make decisions based on experience, similar to biological neural systems but with the speed and precision advantages of electronic systems.

05 Applications and integration with other technologies

Neuromorphic chips are being applied across various domains including computer vision, natural language processing, autonomous systems, and edge computing. These chips can be integrated with sensors, cameras, and other input devices to create complete cognitive systems capable of real-time processing and decision-making. The integration enables applications in robotics, healthcare monitoring, smart infrastructure, and other fields where energy-efficient, real-time processing of complex sensory data is required.

Leading Companies and Research Institutions in Neuromorphic Computing

Neuromorphic chips in IoT network infrastructure are emerging as a transformative technology, currently in the early growth phase with significant market potential. The global market is expanding rapidly, driven by increasing demand for edge computing solutions that mimic human brain functionality. Leading players include IBM, which has pioneered neuromorphic research with its TrueNorth architecture, alongside specialized innovators like Syntiant and Polyn Technology focusing on ultra-low-power AI processing for edge devices. Intel is advancing its Loihi platform, while academic institutions such as Tsinghua University, Zhejiang University, and KAIST are contributing significant research. The technology is approaching commercial viability with companies like Hewlett Packard Enterprise and Alibaba exploring applications in IoT infrastructure, though widespread adoption remains several years away.

International Business Machines Corp.

Technical Solution: IBM's TrueNorth neuromorphic chip architecture represents a significant advance relevant to IoT network infrastructure. The chip features one million programmable spiking neurons and 256 million synapses organized into 4,096 neurosynaptic cores, consuming about 70 mW in typical operation with an efficiency of 46 billion synaptic operations per second per watt. Its event-driven processing activates circuits only when spikes arrive, dramatically reducing power consumption compared to traditional computing architectures, which suits always-on IoT edge workloads. TrueNorth does not perform on-chip learning: networks are trained offline and deployed with fixed parameters, so adapting an edge device to changing network conditions requires retraining and redeploying the model rather than learning locally. IBM also developed the Corelet programming paradigm to simplify the development of neuromorphic applications, allowing complex neural network models to be composed and mapped directly onto the chip for edge deployment.
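
The headline TrueNorth figures follow from its published core layout: each neurosynaptic core pairs 256 input axons with 256 neurons through a 256 x 256 synaptic crossbar. The chip totals can be checked with simple arithmetic; the "million" and "256 million" figures are binary round numbers (2^20 and 2^28).

```python
# TrueNorth core layout as published by IBM.
CORES = 4096
NEURONS_PER_CORE = 256
AXONS_PER_CORE = 256  # inputs per core; each axon-neuron crossing is one synapse

neurons = CORES * NEURONS_PER_CORE
synapses = CORES * AXONS_PER_CORE * NEURONS_PER_CORE

print(f"neurons:  {neurons:,}")   # 1,048,576 = 2**20
print(f"synapses: {synapses:,}")  # 268,435,456 = 2**28
```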

Strengths: Extremely low power consumption makes it ideal for battery-powered IoT devices; event-driven architecture provides real-time responsiveness for critical IoT applications; high neural density enables complex processing at the edge. Weaknesses: Programming complexity requires specialized expertise; limited commercial deployment compared to traditional processors; integration challenges with existing IoT ecosystems.

Polyn Technology Ltd.

Technical Solution: Polyn Technology has developed Neuromorphic Analog Signal Processing (NASP) technology specifically designed for IoT network infrastructure. Their approach combines analog neuromorphic circuits with digital interfaces to create ultra-low power solutions for sensor data processing. Polyn's NASP chips implement always-on pattern recognition directly in hardware, enabling continuous monitoring of sensor inputs while consuming microwatts of power. The technology features in-memory computing architecture that eliminates the traditional separation between processing and memory, reducing data movement and associated energy costs. For IoT networks, Polyn's chips provide local intelligence that can filter and pre-process sensor data before transmission, significantly reducing bandwidth requirements and extending battery life of connected devices. Their neuromorphic processors can be trained offline and then deployed with fixed weights, making them suitable for predictable IoT applications like condition monitoring, anomaly detection, and simple classification tasks at the extreme edge of networks.
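
In-memory computing of this kind is commonly explained with an idealized resistive crossbar: weights are stored as conductances at the cross-points, input voltages drive the rows, and each column wire sums the resulting currents, so the multiply-accumulate happens where the weights live. The sketch below models that ideal behavior under stated assumptions (no wire resistance, noise, or device nonlinearity, and made-up conductance values); it is a generic illustration, not a model of Polyn's NASP circuits.

```python
from typing import List, Sequence


def crossbar_currents(conductances_s: Sequence[Sequence[float]],
                      row_voltages_v: Sequence[float]) -> List[float]:
    """Ideal resistive-crossbar read-out: column current I[j] = sum_i G[i][j] * V[i].

    Each cross-point stores a weight as a conductance (Ohm's law: I = G * V) and
    each column wire sums its currents (Kirchhoff's current law), so the
    multiply-accumulate happens where the weights are stored.
    """
    n_rows = len(row_voltages_v)
    if len(conductances_s) != n_rows:
        raise ValueError("need one conductance row per input voltage")
    n_cols = len(conductances_s[0])
    return [sum(conductances_s[i][j] * row_voltages_v[i] for i in range(n_rows))
            for j in range(n_cols)]


G = [[1e-6, 2e-6],   # conductances in siemens (illustrative values)
     [3e-6, 4e-6]]
V = [0.2, 0.1]       # row input voltages in volts
currents = crossbar_currents(G, V)
print([f"{i * 1e6:.2f} uA" for i in currents])
```

Column 0 receives 0.5 µA and column 1 receives 0.8 µA, the same result as a matrix-vector product, obtained without moving the weights to a separate processor.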

Strengths: Ultra-low power consumption (microwatts range) enables deployment in energy-harvesting IoT nodes; analog processing provides efficient pattern recognition for sensor data; compact form factor suitable for space-constrained IoT devices. Weaknesses: Limited flexibility compared to programmable solutions; fixed-weight implementation restricts adaptation to changing conditions; relatively new technology with limited ecosystem support.

Key Neuromorphic Architectures and Algorithms Analysis

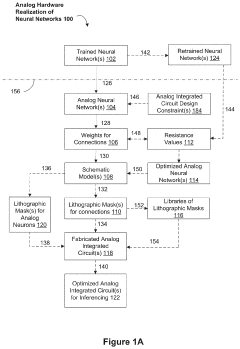

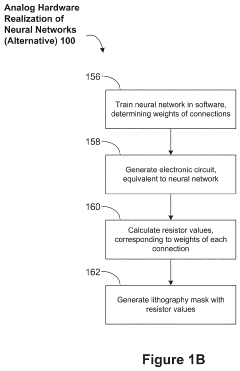

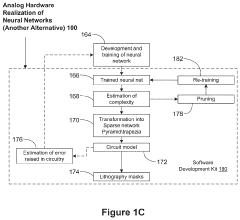

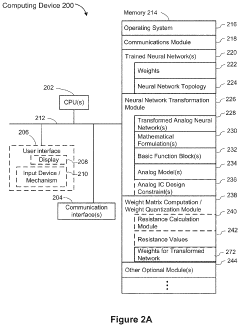

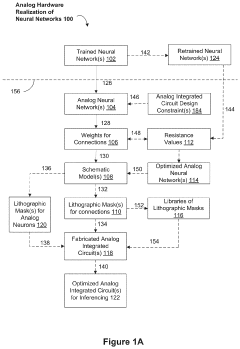

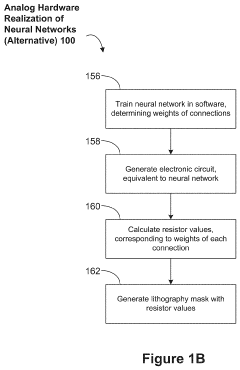

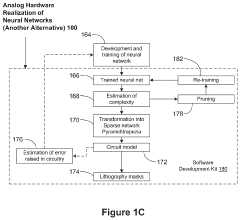

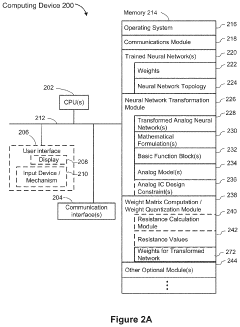

Neuromorphic Analog Signal Processor for Predictive Maintenance of Machines

Patent Pending: US20230081715A1

Innovation

- Analog neuromorphic circuits that model trained neural networks, using operational amplifiers and resistors to create hardware implementations that are more power-efficient, scalable, and less sensitive to noise and temperature changes, allowing for mass production and reduced manufacturing costs.

Systems and Methods for Human Activity Recognition Using Analog Neuromorphic Computing Hardware

Patent Pending: US20220280072A1

Innovation

- Analog neuromorphic circuits that model trained neural networks by transforming their topologies into equivalent analog networks of operational amplifiers and resistors. The approach aims at improved performance per watt, reduced power consumption, cost-effective mass production, and efficient retraining, and the circuits can distinguish multiple human activities to provide personalized solutions.
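
The idea of turning trained weights into op-amp and resistor circuits can be illustrated with a single ideal inverting summing amplifier, whose output is V_out = -R_f * sum(V_i / R_i); a weight w_i is therefore realized as an input resistor R_i = R_f / w_i. The sketch assumes ideal op-amps, positive weights only, and illustrative component values (a 100 kOhm feedback resistor and 1.8 V rails); the circuits in the patents above may be structured differently, for example with extra stages to handle negative weights and activation functions.

```python
from typing import List, Sequence

R_FEEDBACK_OHM = 100e3  # feedback resistor R_f (assumed value)
V_RAIL = 1.8            # output saturates at +/- this supply voltage (assumed)


def weights_to_resistors(weights: Sequence[float], r_f: float = R_FEEDBACK_OHM) -> List[float]:
    """Map positive weights to input resistors: w_i = R_f / R_i, so R_i = R_f / w_i."""
    if any(w <= 0 for w in weights):
        raise ValueError("this sketch handles positive weights only")
    return [r_f / w for w in weights]


def summing_amp_output(inputs_v: Sequence[float], resistors_ohm: Sequence[float],
                       r_f: float = R_FEEDBACK_OHM, v_rail: float = V_RAIL) -> float:
    """Ideal inverting summing amplifier, V_out = -R_f * sum(V_i / R_i), clipped to the rails."""
    v_out = -r_f * sum(v / r for v, r in zip(inputs_v, resistors_ohm))
    return max(-v_rail, min(v_rail, v_out))


resistors = weights_to_resistors([0.5, 2.0])        # 200 kOhm and 50 kOhm
v_out = summing_amp_output([0.4, 0.1], resistors)   # -(0.5*0.4 + 2.0*0.1) = -0.4 V
print(resistors, round(v_out, 6))
```

Clipping to the supply rails acts as a crude saturating nonlinearity; a practical design would add explicit activation stages.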

Energy Efficiency Benchmarks and Optimization Strategies

Energy efficiency represents a critical benchmark for evaluating neuromorphic chips in IoT network infrastructure. Current neuromorphic implementations show significant advantages over traditional computing architectures, with leading solutions reported to reduce power consumption by 100-1000x compared to conventional GPUs and CPUs on suitable neural network workloads. IBM's TrueNorth chip, for instance, operates at approximately 70 milliwatts while processing sensory data, a fraction of the power required by traditional processors performing similar tasks.

Benchmark methodologies for neuromorphic chips typically measure performance-per-watt metrics across various workloads, including pattern recognition, anomaly detection, and real-time sensor data processing. DARPA's SyNAPSE program, which funded TrueNorth's development, set energy-efficiency targets that shaped early comparisons, but no single standardized testing protocol has yet been adopted across neuromorphic implementations, which makes cross-platform comparisons difficult.

Optimization strategies for enhancing energy efficiency in neuromorphic IoT deployments operate at multiple levels. At the hardware level, techniques such as dynamic voltage and frequency scaling (DVFS) allow chips to adjust power consumption based on computational demands. Implementation of power gating mechanisms enables selective deactivation of unused neural circuits, significantly reducing static power consumption during periods of low activity.
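
The benefit of DVFS follows from the standard CMOS dynamic-power relation P = alpha * C * V^2 * f (switching activity, switched capacitance, supply voltage, clock frequency): because voltage enters squared, lowering voltage and frequency together cuts power faster than it cuts speed. The operating points below are assumed for illustration only. The formula covers switching power, not the static leakage that power gating targets, and it applies mainly to clocked logic; largely asynchronous neuromorphic designs save energy through event-driven activity instead.

```python
def dynamic_power_w(activity: float, capacitance_f: float, voltage_v: float, freq_hz: float) -> float:
    """CMOS dynamic (switching) power in watts: P = alpha * C * V^2 * f."""
    return activity * capacitance_f * voltage_v ** 2 * freq_hz


# Hypothetical operating points, not figures for any real neuromorphic chip.
nominal = dynamic_power_w(activity=0.1, capacitance_f=1e-9, voltage_v=1.0, freq_hz=100e6)
scaled = dynamic_power_w(activity=0.1, capacitance_f=1e-9, voltage_v=0.8, freq_hz=80e6)

print(f"nominal: {nominal * 1e3:.2f} mW")
print(f"scaled:  {scaled * 1e3:.2f} mW ({scaled / nominal:.1%} of nominal at 80% clock)")
```

Running at 80% of the nominal voltage and clock uses about 51% of the dynamic power in this example (0.8^3 = 0.512), in exchange for a 20% lower throughput.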

Event-driven processing represents another fundamental optimization strategy, wherein computation occurs only when input data changes substantially. This approach contrasts sharply with the constant clock-driven operation of traditional computing and can reportedly reduce power consumption by up to 90% in sensor-heavy IoT environments, depending on how often the inputs actually change. Intel's Loihi chip exemplifies this approach, activating neural elements only when needed to process incoming sensory data.
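
A simple way to see event-driven processing is send-on-delta sampling: a reading is passed on for computation only when it differs from the last reported value by more than a threshold. The sketch below is a generic illustration with an assumed threshold and made-up temperature readings, not the mechanism of Loihi or any particular chip; the achievable saving depends entirely on how often the signal changes.

```python
from typing import List, Optional, Sequence, Tuple


def send_on_delta(samples: Sequence[float], threshold: float) -> List[Tuple[int, float]]:
    """Emit (index, value) events only when the signal moves more than `threshold`
    away from the last value that produced an event. The first sample always fires."""
    events: List[Tuple[int, float]] = []
    last: Optional[float] = None
    for i, x in enumerate(samples):
        if last is None or abs(x - last) > threshold:
            events.append((i, x))
            last = x
    return events


readings = [20.0, 20.1, 20.05, 20.2, 23.0, 23.1, 22.9, 20.0, 20.0, 20.1]  # made-up temperatures
events = send_on_delta(readings, threshold=0.5)
print(events)
print(f"processed {len(events)} of {len(readings)} samples")
```

Here only 3 of the 10 samples (indices 0, 4, and 7) trigger processing, whereas a clock-driven loop would process all 10.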

Network-level optimizations include distributed computing architectures that position neuromorphic processing closer to data sources, minimizing energy-intensive data transmission. Edge-deployed neuromorphic systems can perform preliminary data filtering and feature extraction, transmitting only relevant information to central nodes and reducing overall network energy requirements by 40-60% in typical IoT deployments.
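
Edge pre-filtering of this kind can be sketched as window summarization: instead of forwarding every raw reading, a node sends a (min, mean, max) summary plus any samples outside an expected band. The band limits, window contents, and 4-byte value encoding below are hypothetical, and the payload saving shown is not the same as the network-energy saving quoted above, since protocol headers and radio wake-up costs also matter.

```python
from statistics import mean
from typing import List, Sequence, Tuple

BYTES_PER_VALUE = 4  # assumed 32-bit encoding per value on the wire (hypothetical)


def summarize_window(window: Sequence[float], low: float, high: float
                     ) -> Tuple[Tuple[float, float, float], List[Tuple[int, float]]]:
    """Reduce a window of raw readings to (min, mean, max) plus out-of-band samples."""
    outliers = [(i, x) for i, x in enumerate(window) if not low <= x <= high]
    return (min(window), mean(window), max(window)), outliers


def payload_bytes(summary: Tuple[float, ...], outliers: List[Tuple[int, float]]) -> int:
    """Bytes to send: the summary values plus an (index, value) pair per outlier."""
    return BYTES_PER_VALUE * (len(summary) + 2 * len(outliers))


window = [50.0] * 58 + [95.0, 51.0]   # 60 readings with one out-of-band spike
summary, outliers = summarize_window(window, low=40.0, high=80.0)
raw = BYTES_PER_VALUE * len(window)
sent = payload_bytes(summary, outliers)
print(f"outliers: {outliers}")
print(f"raw {raw} B -> sent {sent} B ({1 - sent / raw:.0%} smaller payload)")
```

The anomalous reading still reaches the central node, while routine readings travel only as a summary.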

Emerging research focuses on algorithm-hardware co-design, where neural network architectures are specifically optimized for neuromorphic implementation. Techniques such as weight pruning, quantization, and sparse activation patterns further reduce computational requirements while maintaining inference accuracy. Recent implementations have demonstrated that these approaches can yield an additional 30-50% improvement in energy efficiency without significant performance degradation.
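
Two of these techniques, magnitude pruning and quantization, can be sketched in a few lines. The sketch uses textbook unstructured magnitude pruning and symmetric uniform 8-bit quantization on made-up weights; whether such reductions become energy savings on a given neuromorphic chip depends on whether its hardware exploits sparsity and low-precision weights.

```python
from typing import List, Sequence, Tuple


def magnitude_prune(weights: Sequence[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitudes."""
    n_prune = int(len(weights) * sparsity)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in by_magnitude[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_symmetric(weights: Sequence[float], bits: int = 8) -> Tuple[List[int], float]:
    """Symmetric uniform quantization to signed integers; returns (integers, scale)."""
    q_max = 2 ** (bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / q_max if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]  # made-up weights
pruned = magnitude_prune(weights, sparsity=0.5)
q, scale = quantize_symmetric(pruned, bits=8)
print(pruned)
print(q, f"scale={scale:.6f}")
```

Half the weights become zero, and the rest are stored as 8-bit integers plus one shared scale; dequantizing (q * scale) reproduces each weight to within half a quantization step.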

Benchmark methodologies for neuromorphic chips typically measure performance-per-watt metrics across various workloads, including pattern recognition, anomaly detection, and real-time sensor data processing. The SyNAPSE program has established standardized testing protocols that evaluate energy efficiency under variable network conditions and processing demands, providing a framework for comparative analysis across different neuromorphic implementations.

Optimization strategies for enhancing energy efficiency in neuromorphic IoT deployments operate at multiple levels. At the hardware level, techniques such as dynamic voltage and frequency scaling (DVFS) allow chips to adjust power consumption based on computational demands. Implementation of power gating mechanisms enables selective deactivation of unused neural circuits, significantly reducing static power consumption during periods of low activity.

Security Implications of Neuromorphic Computing in IoT

The integration of neuromorphic computing into IoT infrastructure introduces significant security considerations that must be addressed as the technology becomes more prevalent. Because neuromorphic chips mimic the brain's neural networks, they process information differently from conventional computing systems, which creates both distinctive security advantages and new challenges in IoT environments.

Neuromorphic architectures offer inherent security benefits through their distributed processing nature. Unlike centralized systems where a single breach can compromise the entire network, neuromorphic systems distribute computation across numerous simple processing units. This architectural approach naturally compartmentalizes security risks, potentially limiting the impact of individual breaches within IoT networks.

However, the novel architecture also presents new attack vectors. Adversarial attacks, which subtly alter input data so that a neural network produces incorrect outputs while the changes remain imperceptible to human observers, pose a significant threat. In IoT contexts, such attacks could compromise critical infrastructure by manipulating sensor data processing or decision-making algorithms without triggering conventional security alerts.
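
The Fast Gradient Sign Method (FGSM) is a standard example of such an input perturbation. The sketch below applies it to a hypothetical logistic-regression anomaly detector in plain Python. Spiking networks are not directly differentiable and are usually attacked through surrogate gradients, so this illustrates the attack class rather than an attack on neuromorphic hardware; the weights, inputs, and epsilon are assumptions.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w: List[float], b: float, x: List[float]) -> float:
    """Probability that input x is an anomaly (class 1)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(g: float) -> int:
    return (g > 0) - (g < 0)


def fgsm(w: List[float], b: float, x: List[float], y: int, eps: float) -> List[float]:
    """FGSM for binary cross-entropy, where d(loss)/dx = (p - y) * w.

    Each feature moves by eps (or not at all if its gradient is zero) in the
    direction that increases the loss for the true label y, so no feature
    changes by more than eps.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


if __name__ == "__main__":
    w, b = [1.0, -1.0, 1.0, -1.0], 0.0  # hypothetical trained detector
    x = [0.1, 0.0, 0.1, 0.0]            # true label: anomaly (1)
    x_adv = fgsm(w, b, x, y=1, eps=0.1)
    print(f"clean p(anomaly):       {predict_proba(w, b, x):.3f}")
    print(f"adversarial p(anomaly): {predict_proba(w, b, x_adv):.3f}")
```

With these numbers the detector's score falls from above 0.5 to below 0.5, so a genuine anomaly is classified as normal even though no feature changed by more than 0.1.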

Energy efficiency, although a primary advantage of neuromorphic computing, also has security implications for IoT deployments. Low power consumption enables deployment in environments that were previously inaccessible, but it may also limit the computational resources available for robust security protocols. This constraint calls for lightweight security measures optimized specifically for neuromorphic hardware.

Data privacy concerns are amplified in neuromorphic IoT systems that continuously process sensory information, often from sensitive environments such as homes, healthcare facilities, and industrial sites. Brain-inspired learning mechanisms may inadvertently memorize private information in their synaptic weights, which attackers could then attempt to extract through side-channel attacks.

Authentication mechanisms are another critical security consideration. Neuromorphic chips show promise for hardware-intrinsic security functions: their device-specific physical characteristics could serve as physically unclonable functions (PUFs), providing strong device authentication within IoT networks without requiring extensive computational resources.
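
A toy challenge-response flow illustrates how PUF-based authentication avoids storing a secret key on the device. In the sketch below, each simulated device has fixed random per-cell offsets standing in for manufacturing variation, each response bit compares two noisy cell readings, and the verifier accepts a response within a Hamming-distance threshold. Everything here (class names, cell counts, noise level, threshold) is a hypothetical simplification; practical PUF schemes add error correction such as fuzzy extractors and must resist machine-learning modeling attacks.

```python
import random
from typing import List, Tuple

Challenge = List[Tuple[int, int]]  # pairs of cell indices to compare


class SimulatedPUF:
    """Toy PUF: fixed per-cell offsets model manufacturing variation,
    and every read adds small Gaussian measurement noise."""

    def __init__(self, n_cells: int, seed: int, noise: float = 0.03):
        variation = random.Random(seed)
        self.offsets = [variation.gauss(0.0, 1.0) for _ in range(n_cells)]
        self.noise = noise
        self._read_rng = random.Random(seed + 1)

    def respond(self, challenge: Challenge) -> List[int]:
        bits = []
        for a, b in challenge:
            va = self.offsets[a] + self._read_rng.gauss(0.0, self.noise)
            vb = self.offsets[b] + self._read_rng.gauss(0.0, self.noise)
            bits.append(1 if va > vb else 0)
        return bits


def random_challenge(rng: random.Random, n_cells: int, n_bits: int) -> Challenge:
    return [tuple(rng.sample(range(n_cells), 2)) for _ in range(n_bits)]


def hamming(a: List[int], b: List[int]) -> int:
    return sum(x != y for x, y in zip(a, b))


class Verifier:
    """Stores challenge-response pairs (CRPs) recorded at enrollment."""

    def __init__(self) -> None:
        self.crps: List[Tuple[Challenge, List[int]]] = []

    def enroll(self, puf: SimulatedPUF, challenges: List[Challenge]) -> None:
        self.crps = [(c, puf.respond(c)) for c in challenges]

    def authenticate(self, device: SimulatedPUF, max_errors: int) -> bool:
        if not self.crps:
            raise RuntimeError("no unused challenge-response pairs left")
        challenge, expected = self.crps.pop()  # each CRP is used once to resist replay
        return hamming(device.respond(challenge), expected) <= max_errors


if __name__ == "__main__":
    rng = random.Random(7)
    challenges = [random_challenge(rng, 256, 128) for _ in range(2)]
    genuine, impostor = SimulatedPUF(256, seed=42), SimulatedPUF(256, seed=99)
    verifier = Verifier()
    verifier.enroll(genuine, challenges)
    print("genuine accepted:", verifier.authenticate(genuine, max_errors=16))
    print("impostor accepted:", verifier.authenticate(impostor, max_errors=16))
```

The threshold trades off false rejections caused by read noise on the genuine device against false acceptances of other devices, whose responses agree with the enrolled ones only about half the time.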

The dynamic learning capabilities of neuromorphic systems introduce additional security complexities. As these systems adapt to their environments through continuous learning, their behavior becomes less predictable from a security perspective. This adaptability necessitates new approaches to security verification and validation that can account for evolving system behaviors while maintaining security assurances throughout the system lifecycle.
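
One possible form of such lifecycle assurance is a runtime guard that accepts an on-device learning update only if it stays within a bounded distance of a verified baseline and still passes a fixed set of canary checks. This is an illustrative assumption rather than an established standard; the guard class, thresholds, and toy linear model are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Weights = List[float]
Model = Callable[[Weights, Sequence[float]], int]
Canary = Tuple[Sequence[float], int]  # (input, expected output)


def relative_drift(baseline: Weights, candidate: Weights) -> float:
    """L2 distance from the verified baseline, relative to its norm."""
    diff = math.sqrt(sum((c - b) ** 2 for b, c in zip(baseline, candidate)))
    norm = math.sqrt(sum(b ** 2 for b in baseline)) or 1.0
    return diff / norm


def canary_accuracy(model: Model, weights: Weights, canaries: List[Canary]) -> float:
    return sum(model(weights, x) == y for x, y in canaries) / len(canaries)


class UpdateGuard:
    def __init__(self, model: Model, baseline: Weights, canaries: List[Canary],
                 max_drift: float, min_accuracy: float) -> None:
        self.model = model
        self.baseline = list(baseline)  # verified at deployment, never overwritten
        self.canaries = canaries
        self.max_drift = max_drift
        self.min_accuracy = min_accuracy
        self.active = list(baseline)

    def propose(self, candidate: Weights) -> bool:
        """Adopt the candidate weights only if both checks pass."""
        accepted = (
            relative_drift(self.baseline, candidate) <= self.max_drift
            and canary_accuracy(self.model, candidate, self.canaries) >= self.min_accuracy
        )
        if accepted:
            self.active = list(candidate)
        return accepted


def linear_threshold(weights: Weights, x: Sequence[float]) -> int:
    """Toy stand-in for the deployed model."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) > 0 else 0


if __name__ == "__main__":
    guard = UpdateGuard(linear_threshold, [1.0, -1.0],
                        canaries=[([1.0, 0.0], 1), ([0.0, 1.0], 0)],
                        max_drift=0.2, min_accuracy=1.0)
    print(guard.propose([1.05, -0.95]))  # small adaptation
    print(guard.propose([-1.0, 1.0]))    # large jump, e.g. a poisoned update
    print(guard.active)
```

Measuring drift against the fixed baseline rather than the last accepted weights prevents a series of individually small updates from creeping arbitrarily far; the cost is that legitimate long-term adaptation eventually requires re-verification and a new baseline.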
