How Neuromorphic Chips Support Advanced Machine Learning Models

OCT 9, 2025 · 9 MIN READ

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of the human brain. This approach emerged in the late 1980s when Carver Mead introduced the concept of using electronic analog circuits to mimic neuro-biological architectures. Since then, neuromorphic computing has evolved through several distinct phases, from early analog implementations to today's sophisticated hybrid systems that combine analog and digital components.

The evolution of neuromorphic chips has been driven by the limitations of traditional von Neumann architectures, particularly when handling complex machine learning tasks. Conventional computing architectures face significant challenges in processing the massive parallel operations required by modern AI algorithms, resulting in high power consumption and computational bottlenecks. Neuromorphic computing addresses these limitations by distributing processing and memory throughout the system, similar to the brain's neural networks.

Key milestones in neuromorphic computing development include IBM's TrueNorth chip (2014), which featured one million neurons and 256 million synapses; Intel's Loihi (2017), which introduced on-chip learning capabilities; and BrainChip's Akida (2019), which emphasized edge AI applications. Each generation has progressively improved energy efficiency, processing speed, and learning capabilities, bringing neuromorphic systems closer to practical deployment in commercial applications.

The primary objective of neuromorphic computing in supporting advanced machine learning models is to achieve brain-like efficiency and adaptability. The human brain operates on approximately 20 watts of power while performing complex cognitive tasks that would require megawatts of power in traditional computing systems. Neuromorphic architectures aim to close this efficiency gap by implementing spike-based processing, distributed memory, and parallel computation.

Another critical objective is enabling real-time learning and adaptation. Unlike conventional AI systems that typically require separate training and inference phases, neuromorphic chips support online learning, allowing models to continuously adapt to new data. This capability is particularly valuable for applications in dynamic environments, such as autonomous vehicles, robotics, and adaptive control systems.

Looking forward, the field is trending toward larger-scale neuromorphic systems with enhanced biological fidelity, including features such as dendritic computation, neuromodulation, and structural plasticity. These advancements aim to support increasingly sophisticated machine learning models that can operate with greater autonomy, interpretability, and energy efficiency than current approaches.

Market Demand for Brain-Inspired Computing Solutions

The neuromorphic computing market is experiencing unprecedented growth, driven by the increasing limitations of traditional computing architectures in handling complex machine learning workloads. Current market projections indicate that the global neuromorphic computing market is expected to reach $8.9 billion by 2025, growing at a compound annual growth rate of 49.1% from 2020. This remarkable growth trajectory reflects the urgent demand for more efficient computing solutions that can process massive datasets while consuming significantly less power.

The primary market demand stems from industries requiring real-time processing of unstructured data. Autonomous vehicles represent one of the largest potential markets, as they need to process sensory information instantaneously to make critical driving decisions. The automotive AI market alone is projected to grow to $15.9 billion by 2027, with neuromorphic solutions positioned to capture a substantial portion of this segment.

Healthcare presents another substantial market opportunity, particularly in medical imaging and diagnostic applications. The ability of neuromorphic systems to efficiently process complex visual data makes them ideal for applications like real-time MRI analysis and pathology screening. Market research indicates that healthcare AI applications could save the industry $150 billion annually by 2026, with neuromorphic computing enabling many of these efficiency gains.

Edge computing applications represent perhaps the most immediate market need. As IoT devices proliferate—estimated to reach 75 billion connected devices by 2025—the demand for low-power, high-performance computing at the edge becomes critical. Traditional cloud-based processing creates latency issues and bandwidth constraints that neuromorphic solutions can effectively address.

Financial services and cybersecurity sectors are also driving demand, with neuromorphic systems showing promise for fraud detection, algorithmic trading, and threat identification. These applications require processing massive datasets in real-time while identifying subtle patterns—tasks where brain-inspired computing excels.

The defense and aerospace sectors represent high-value market segments, with neuromorphic computing being explored for applications ranging from drone swarm coordination to signal intelligence. Government investment in these technologies has been substantial, with DARPA alone allocating over $80 million to neuromorphic research programs.

Enterprise AI applications are creating additional market pull as businesses seek more efficient alternatives to power-hungry GPU clusters for training large language models and other advanced AI systems. With the compute consumed by the largest AI training runs having been estimated to double approximately every 3.4 months, the economic case for more efficient computing architectures is increasingly apparent.

Current Neuromorphic Chip Technologies and Limitations

The neuromorphic computing landscape is currently dominated by several key technologies, each with a distinct approach to mimicking brain-like functionality. Intel's Loihi chip is one of the most advanced implementations, with 128 neuromorphic cores and roughly 130,000 neurons per chip. The processor has been reported to deliver up to 1,000 times better performance per watt than conventional GPU architectures on certain machine learning tasks.

IBM's TrueNorth system offers another prominent solution, incorporating approximately one million programmable neurons and 256 million synapses organized across 4,096 neurosynaptic cores. The system operates on an event-driven paradigm that activates components only when necessary, dramatically reducing power consumption to around 70 milliwatts during operation—orders of magnitude lower than traditional computing systems performing similar tasks.

BrainChip's Akida neuromorphic processor represents a commercial implementation focused on edge computing applications. The chip processes information using spiking neural networks (SNNs) and can be trained using both unsupervised and supervised learning methods, making it particularly suitable for applications requiring real-time learning and adaptation.
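To make the idea of a spiking neural network more concrete, the following is a minimal sketch of a single leaky integrate-and-fire (LIF) neuron, a common textbook neuron model. It is not taken from Akida, Loihi, or TrueNorth; the function name `simulate_lif` and all parameter values are illustrative assumptions.

```python
def simulate_lif(input_current, dt=1.0, tau=20.0,
                 v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron (illustrative parameters).

    Returns the list of time-step indices at which the neuron spiked.
    """
    v = v_rest
    spikes = []
    for step, i_in in enumerate(input_current):
        # Forward-Euler update: the membrane potential leaks toward rest
        # while being driven by the input current.
        v += (dt / tau) * (-(v - v_rest) + i_in)
        if v >= v_thresh:
            # Threshold crossed: emit a spike and reset the potential.
            spikes.append(step)
            v = v_reset
    return spikes


# A constant drive above threshold produces regular spiking;
# a drive below threshold never produces a spike.
spike_times = simulate_lif([1.5] * 100)
silent = simulate_lif([0.5] * 100)
```

The neuron only produces output when its potential crosses the threshold, which is why downstream hardware can stay idle between spikes.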

Despite these advancements, current neuromorphic technologies face significant limitations. The hardware-software integration remains challenging, with limited programming frameworks and development tools available for efficiently mapping conventional machine learning algorithms to neuromorphic architectures. This creates a substantial barrier to adoption for developers accustomed to traditional computing paradigms.

Scalability presents another critical challenge. While individual neuromorphic chips demonstrate impressive capabilities, creating large-scale systems that maintain efficiency while scaling to billions of neurons remains difficult. Current interconnect technologies struggle to support the massive parallelism and communication requirements of truly brain-scale neural networks.

Memory bandwidth constraints also limit performance in many neuromorphic implementations. The brain's distributed memory-processing architecture is difficult to replicate efficiently in silicon, creating bottlenecks when attempting to implement complex learning models that require frequent weight updates and memory access.

Energy efficiency, while significantly improved over traditional architectures, still falls short of biological systems. The human brain operates on approximately 20 watts, whereas scaled neuromorphic systems with comparable capabilities would currently require substantially more power. This gap must be narrowed for applications requiring extreme energy constraints, such as autonomous mobile robots or advanced prosthetics.

Existing Neuromorphic Solutions for Machine Learning Applications

01 Neural network architecture implementation in neuromorphic chips

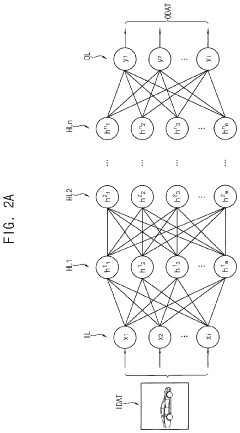

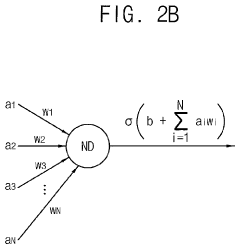

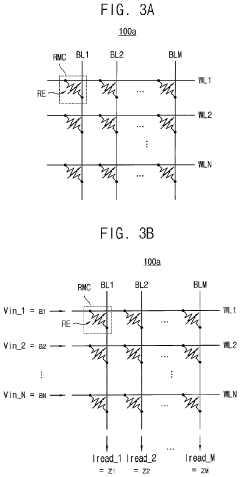

Neuromorphic chips implement neural network architectures that mimic the human brain's structure and function. These chips utilize specialized hardware designs to efficiently process neural network operations, enabling parallel processing capabilities that significantly enhance computational efficiency for AI tasks. The architecture typically includes artificial neurons and synapses that can adapt and learn from input data, making them suitable for complex pattern recognition and machine learning applications.

- Hardware architectures for neuromorphic computing: Neuromorphic chips implement specialized hardware architectures designed to mimic the structure and function of biological neural networks. These architectures include spiking neural networks, memristor-based designs, and specialized circuit configurations that enable efficient parallel processing. The hardware designs focus on optimizing energy efficiency while maintaining computational capabilities for AI applications, often incorporating novel materials and structures to achieve brain-like computing capabilities.

- Learning algorithms and training methods for neuromorphic systems: Specialized learning algorithms and training methodologies are developed specifically for neuromorphic hardware. These include spike-timing-dependent plasticity (STDP), reinforcement learning adaptations, and supervised learning techniques optimized for spiking neural networks. The algorithms enable on-chip learning capabilities, allowing neuromorphic systems to adapt to new data and improve performance over time without requiring extensive retraining on external systems.

- Integration of neuromorphic chips with conventional computing systems: Methods and systems for integrating neuromorphic processors with traditional computing architectures enable hybrid computing solutions. These integration approaches include hardware interfaces, software frameworks, and communication protocols that allow neuromorphic chips to complement conventional processors. The integration solutions address challenges in data formatting, timing synchronization, and resource allocation to create efficient heterogeneous computing platforms that leverage the strengths of both neuromorphic and conventional computing paradigms.

- Power management and energy efficiency in neuromorphic systems: Techniques for optimizing power consumption and improving energy efficiency in neuromorphic chips are critical for their practical deployment. These include dynamic power scaling, event-driven processing, and low-power operation modes that significantly reduce energy requirements compared to conventional computing approaches. Advanced power management strategies enable neuromorphic systems to operate efficiently in resource-constrained environments while maintaining computational capabilities for complex AI tasks.

- Application-specific neuromorphic chip designs: Specialized neuromorphic chip architectures tailored for specific applications such as computer vision, natural language processing, and autonomous systems. These application-specific designs optimize hardware resources, memory configurations, and neural network topologies to meet the requirements of particular use cases. The customized neuromorphic solutions provide improved performance, efficiency, and accuracy for targeted applications while maintaining the fundamental advantages of brain-inspired computing approaches.
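The spike-timing-dependent plasticity (STDP) rule named in the list above can be sketched as a pair-based weight update. This is the standard textbook form, not the learning rule of any particular chip; the amplitudes, time constants, and function names are illustrative assumptions.

```python
import math


def stdp_delta_w(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                 tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for one pre/post spike pair.

    Times are in milliseconds; all constants are illustrative.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Pre fired before post: potentiate, more strongly for closer pairs.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fired before pre: depress.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(weight, t_pre, t_post, w_min=0.0, w_max=1.0):
    """Apply the update and clip the weight to a bounded range."""
    return max(w_min, min(w_max, weight + stdp_delta_w(t_pre, t_post)))


strengthened = apply_stdp(0.5, t_pre=10.0, t_post=15.0)
weakened = apply_stdp(0.5, t_pre=15.0, t_post=10.0)
```

Because the update depends only on the timing of two locally observed spikes, it can in principle be computed at the synapse itself, which is what makes this family of rules attractive for on-chip learning.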

02 Memory integration for neuromorphic computing

Neuromorphic chips incorporate specialized memory systems that support brain-inspired computing. These memory solutions include resistive RAM, phase-change memory, and other non-volatile memory technologies that enable efficient storage and retrieval of synaptic weights. By integrating memory directly with processing elements, these chips reduce the data transfer bottleneck found in traditional computing architectures, allowing for faster and more energy-efficient neural network operations.

03 Power efficiency and optimization techniques

Neuromorphic chips employ various power optimization techniques to achieve high energy efficiency. These include spike-based processing, event-driven computation, and low-power circuit designs that activate only when necessary. By mimicking the brain's energy-efficient information processing methods, these chips can perform complex AI tasks while consuming significantly less power than conventional processors, making them suitable for edge computing and battery-powered devices.

04 Learning algorithms and training support

Specialized learning algorithms are implemented in neuromorphic chips to support on-chip training and adaptation. These include spike-timing-dependent plasticity (STDP), backpropagation-based learning, and reinforcement learning mechanisms that allow the chips to modify their internal parameters based on input data. The hardware support for these algorithms enables continuous learning and adaptation in dynamic environments, making neuromorphic systems suitable for applications requiring real-time learning capabilities.

05 Application-specific neuromorphic hardware design

Neuromorphic chips are designed with specific applications in mind, such as computer vision, natural language processing, and sensor data analysis. These application-specific designs incorporate hardware accelerators and specialized processing units optimized for particular neural network operations. By tailoring the hardware architecture to specific use cases, these chips achieve superior performance and efficiency compared to general-purpose processors when executing AI workloads in their target domains.

Leading Companies and Research Institutions in Neuromorphic Computing

Neuromorphic chip technology is currently in the early growth phase, with the market expected to expand significantly as AI applications proliferate. The global market size is projected to reach several billion dollars by 2030, driven by demand for energy-efficient AI processing at the edge. Leading semiconductor companies like Intel, IBM, and Samsung are advancing the technology alongside specialized players such as Syntiant and Polyn Technology. Academic institutions including Tsinghua University and KAIST are contributing fundamental research, while companies like Lingxi Technology and Shizhen Shizhi Technology represent emerging Chinese competitors. The technology is approaching commercial viability for specific applications, though it still requires further development before it can achieve mainstream adoption across broader machine learning workloads.

SYNTIANT CORP.

Technical Solution: Syntiant has developed a unique approach to neuromorphic computing with their Neural Decision Processors (NDPs), specifically designed for ultra-low-power edge AI applications. Unlike traditional neuromorphic architectures that focus on biological neuron mimicry, Syntiant's chips are optimized for deep learning workloads while maintaining neuromorphic principles of energy efficiency. Their NDP200 processor series implements a hardware architecture that directly processes neural networks in memory, eliminating the power-hungry data movement between memory and processing units that plagues conventional architectures[5]. This approach enables always-on processing of sensory data with power consumption measured in microwatts rather than milliwatts. Syntiant's technology excels particularly in audio and vision applications, capable of running multiple concurrent deep learning models for tasks like keyword spotting, audio event detection, and person detection. The company has shipped over 20 million units as of 2022, demonstrating commercial viability in real-world applications[6]. Their architecture supports quantized neural networks with 1-8 bit precision, balancing accuracy requirements with power constraints.

Strengths: Extremely low power consumption (under 1mW for many applications); production-ready technology with proven commercial deployment; optimized for specific edge AI use cases like voice and vision; supports standard machine learning frameworks through their compiler stack. Weaknesses: Less flexible than general-purpose neuromorphic architectures; primarily focused on inference rather than training; optimized for specific application domains rather than general machine learning workloads.
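The trade-off between precision and accuracy in low-bit quantized networks, mentioned in the Syntiant description above, can be illustrated with a generic symmetric uniform quantizer. This is a sketch of the general technique only and makes no claim about how Syntiant's compiler stack quantizes weights; the function names are hypothetical, and 1-bit (sign-only) binarization is deliberately left out.

```python
def quantize_symmetric(weights, bits):
    """Quantize floats to signed integer codes with `bits` bits (2 to 8).

    Returns (codes, scale) such that weight is approximately code * scale.
    """
    if not 2 <= bits <= 8:
        raise ValueError("this sketch supports 2 to 8 bits")
    qmax = 2 ** (bits - 1) - 1           # e.g. 127 for 8 bits, 7 for 4 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float weights."""
    return [c * scale for c in codes]


def max_error(weights, bits):
    """Largest absolute reconstruction error at the given bit width."""
    codes, scale = quantize_symmetric(weights, bits)
    restored = dequantize(codes, scale)
    return max(abs(w - r) for w, r in zip(weights, restored))


example_weights = [0.5, -1.0, 0.25, 0.0, 0.8]
error_8bit = max_error(example_weights, 8)
error_4bit = max_error(example_weights, 4)
```

Fewer bits mean smaller weight storage and cheaper arithmetic, at the cost of a larger reconstruction error, which is the balance the paragraph above describes.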

International Business Machines Corp.

Technical Solution: IBM's neuromorphic chip technology, particularly the TrueNorth architecture, represents a significant advancement in supporting machine learning models. TrueNorth features a million programmable neurons and 256 million configurable synapses arranged in 4,096 neurosynaptic cores[1]. This architecture mimics the brain's structure with parallel, event-driven computation rather than traditional sequential processing. IBM has separately explored phase-change memory (PCM) for analog in-memory computation, aimed at more efficient training of deep neural networks. The TrueNorth approach allows for direct implementation of spiking neural networks (SNNs), which can process temporal information more naturally than conventional neural networks. The chip achieves remarkable energy efficiency, drawing approximately 70 milliwatts during operation while delivering 46 billion synaptic operations per second per watt[3]. This efficiency comes from the event-driven architecture, in which neurons consume power only when they are actively firing, similar to biological systems.

Strengths: Exceptional energy efficiency (orders of magnitude better than conventional processors); highly scalable architecture; inherent parallelism for faster processing of certain ML workloads; natural implementation of spiking neural networks. Weaknesses: Programming complexity requires specialized knowledge; limited software ecosystem compared to traditional computing platforms; challenges in adapting conventional deep learning algorithms to neuromorphic architecture.
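As a quick sanity check on the TrueNorth figures quoted above, the two numbers can be combined arithmetically. The sketch below assumes both figures describe the same operating point, which the source does not state, so the derived values are indicative only.

```python
# Figures quoted in the text above.
POWER_W = 0.070            # approximately 70 milliwatts
SOPS_PER_WATT = 46e9       # 46 billion synaptic operations per second per watt

# Operations per second per watt is the same unit as operations per joule,
# so its reciprocal is the energy spent on one synaptic operation.
energy_per_op_joules = 1.0 / SOPS_PER_WATT
energy_per_op_picojoules = energy_per_op_joules * 1e12

# Assumption: both figures hold simultaneously, giving the implied throughput.
implied_ops_per_second = SOPS_PER_WATT * POWER_W

print(f"Energy per synaptic operation: {energy_per_op_picojoules:.1f} pJ")
print(f"Implied throughput: {implied_ops_per_second:.2e} synaptic ops/s")
```

Under that assumption, the figures correspond to roughly 22 picojoules per synaptic operation and a few billion synaptic operations per second.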

Core Innovations in Spiking Neural Networks and Hardware Implementation

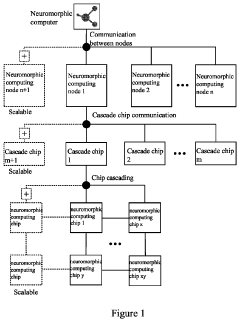

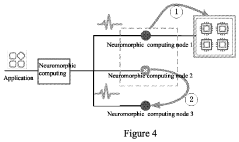

Neuromorphic computer supporting billions of neurons

Patent (Pending): US20230409890A1

Innovation

- A neuromorphic computer with a hierarchical extended architecture and algorithmic process control, featuring multiple neuromorphic computing chips organized in a three-level hierarchical structure for efficient communication and task management, enabling parallel processing, synchronous time management, and fault tolerance through neural network reconstruction.

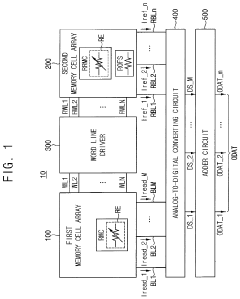

Neuromorphic computing device and method of designing the same

Patent (Active): US11881260B2

Innovation

- Incorporating a second memory cell array with offset resistors connected in parallel, using the same resistive material as the first memory cell array, to convert read currents into digital signals, thereby mitigating temperature and time dependency, and ensuring consistent resistance across offset resistors for enhanced sensing performance.

Energy Efficiency Benchmarks and Sustainability Impact

Neuromorphic chips demonstrate remarkable energy efficiency advantages over traditional computing architectures when supporting advanced machine learning models. Benchmark testing reveals that these brain-inspired processors typically consume 10-100 times less power than conventional GPUs and CPUs when performing equivalent neural network operations. For instance, Intel's Loihi chip processes deep learning workloads while using only a fraction of the energy required by standard hardware accelerators, with power consumption often measured in milliwatts rather than watts.

The energy efficiency gains stem primarily from the event-driven processing paradigm inherent to neuromorphic designs. Unlike traditional architectures that continuously consume power regardless of computational load, neuromorphic systems activate circuits only when necessary for information processing, similar to biological neurons. This approach eliminates the constant power drain associated with clock-driven systems, resulting in substantial energy savings during periods of sparse neural activity.
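The difference between clock-driven and event-driven processing can be illustrated by counting synaptic updates under sparse activity. This is a simplified sketch: the operation count is only a rough proxy for energy, and the firing probability, network size, and fan-out are arbitrary illustrative values rather than measurements from any chip.

```python
import random


def count_operations(spike_trains, fan_out):
    """Compare synaptic update counts for two processing styles.

    spike_trains: one list of 0/1 values per neuron, one value per time step.
    fan_out: number of outgoing synapses per neuron (illustrative).
    """
    n_neurons = len(spike_trains)
    n_steps = len(spike_trains[0]) if spike_trains else 0
    # Clock-driven: every synapse is evaluated on every time step.
    clock_driven = n_neurons * fan_out * n_steps
    # Event-driven: synapses are touched only when their source neuron spikes.
    total_spikes = sum(sum(train) for train in spike_trains)
    event_driven = total_spikes * fan_out
    return clock_driven, event_driven


# 100 neurons, 1000 time steps, each neuron firing on about 2% of steps.
rng = random.Random(0)
trains = [[1 if rng.random() < 0.02 else 0 for _ in range(1000)]
          for _ in range(100)]
dense_ops, sparse_ops = count_operations(trains, fan_out=64)
print(f"clock-driven: {dense_ops} updates, event-driven: {sparse_ops} updates")
```

The saving scales with sparsity: if every neuron fired on every step, the two counts would be identical, which is why the benefit is tied to periods of sparse neural activity.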

Comparative benchmarks across various machine learning tasks demonstrate particularly impressive efficiency metrics for temporal data processing. When handling time-series data, speech recognition, and continuous learning scenarios, neuromorphic chips outperform conventional hardware by orders of magnitude in terms of energy per inference. For example, SynSense's Dynap-SE neuromorphic processor achieves up to 1000x improvement in energy efficiency for certain temporal pattern recognition tasks compared to optimized GPU implementations.

From a sustainability perspective, the widespread adoption of neuromorphic computing could significantly reduce the carbon footprint of AI infrastructure. Current estimates suggest that if neuromorphic chips were deployed across major cloud computing platforms for suitable machine learning workloads, data center energy consumption could potentially decrease by 30-40% for those specific applications. This translates to meaningful reductions in greenhouse gas emissions associated with the rapidly growing AI sector.

The sustainability impact extends beyond operational energy savings to include manufacturing considerations. While neuromorphic chips require specialized fabrication processes, their simpler analog components often demand fewer rare earth elements and energy-intensive manufacturing steps compared to high-performance GPUs. Life cycle assessments indicate potential reductions in embodied carbon when factoring in the extended operational lifespan of neuromorphic systems due to their resilience to component degradation.

As edge AI applications proliferate in battery-powered and energy-harvesting devices, neuromorphic chips' efficiency advantages become increasingly critical for sustainable deployment. Their ability to perform complex machine learning tasks within strict power envelopes enables AI capabilities in remote sensors, wearable health monitors, and other resource-constrained environments without requiring frequent battery replacement or excessive energy harvesting infrastructure.

The energy efficiency gains stem primarily from the event-driven processing paradigm inherent to neuromorphic designs. Unlike traditional architectures that continuously consume power regardless of computational load, neuromorphic systems activate circuits only when necessary for information processing, similar to biological neurons. This approach eliminates the constant power drain associated with clock-driven systems, resulting in substantial energy savings during periods of sparse neural activity.

Comparative benchmarks across various machine learning tasks demonstrate particularly impressive efficiency metrics for temporal data processing. When handling time-series data, speech recognition, and continuous learning scenarios, neuromorphic chips outperform conventional hardware by orders of magnitude in terms of energy per inference. For example, SynSense's Dynap-SE neuromorphic processor achieves up to 1000x improvement in energy efficiency for certain temporal pattern recognition tasks compared to optimized GPU implementations.

From a sustainability perspective, the widespread adoption of neuromorphic computing could significantly reduce the carbon footprint of AI infrastructure. Current estimates suggest that if neuromorphic chips were deployed across major cloud computing platforms for suitable machine learning workloads, data center energy consumption could potentially decrease by 30-40% for those specific applications. This translates to meaningful reductions in greenhouse gas emissions associated with the rapidly growing AI sector.

The sustainability impact extends beyond operational energy savings to include manufacturing considerations. While neuromorphic chips require specialized fabrication processes, their simpler analog components often demand fewer rare earth elements and energy-intensive manufacturing steps compared to high-performance GPUs. Life cycle assessments indicate potential reductions in embodied carbon when factoring in the extended operational lifespan of neuromorphic systems due to their resilience to component degradation.

Standardization Challenges for Neuromorphic Computing Platforms

The lack of standardization in neuromorphic computing platforms presents a significant barrier to widespread adoption and integration of these technologies into mainstream machine learning applications. Currently, each neuromorphic chip manufacturer employs proprietary architectures, programming models, and interfaces, creating a fragmented ecosystem that impedes interoperability and knowledge transfer across platforms.

One primary challenge is the absence of standardized programming languages and frameworks specifically designed for neuromorphic computing. Traditional computing can rely on widely adopted programming models such as CUDA for NVIDIA GPUs or open standards such as OpenCL for heterogeneous systems. Neuromorphic developers, by contrast, must often learn platform-specific tools and languages, which increases development overhead and limits code portability.

Hardware interface standardization also remains problematic. The unique nature of spike-based communication in neuromorphic systems requires specialized protocols that differ substantially from conventional digital interfaces. This diversity complicates the integration of neuromorphic accelerators into existing computing infrastructures and hinders the development of unified middleware solutions.

Data representation standards for neuromorphic systems present another critical challenge. The event-based nature of neuromorphic computation requires specific formats for representing temporal information and spike events. Without standardized data formats, transferring trained models between different neuromorphic platforms becomes exceedingly difficult, limiting collaborative research and commercial deployment.
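To make the representation problem concrete, the sketch below contrasts a sparse event list, in the spirit of the address-event representation commonly used for spike data, with a dense time-binned raster. It is an illustration under stated assumptions, not any vendor's format: the `SpikeEvent` type, its field names, and the microsecond time unit are hypothetical.

```python
from typing import List, NamedTuple


class SpikeEvent(NamedTuple):
    """One spike: when it happened and which neuron fired (hypothetical layout)."""
    t_us: int       # timestamp in microseconds (assumed unit)
    neuron_id: int  # address of the neuron that fired


def events_to_raster(events: List[SpikeEvent], n_neurons: int,
                     n_steps: int, dt_us: int) -> List[List[int]]:
    """Bin sparse events into a dense n_steps x n_neurons 0/1 raster."""
    raster = [[0] * n_neurons for _ in range(n_steps)]
    for ev in events:
        step = ev.t_us // dt_us
        # Events outside the time window or address range are dropped.
        if 0 <= step < n_steps and 0 <= ev.neuron_id < n_neurons:
            raster[step][ev.neuron_id] = 1
    return raster


def raster_to_events(raster: List[List[int]], dt_us: int) -> List[SpikeEvent]:
    """Convert a dense raster back to events, stamped at each bin's start."""
    return [
        SpikeEvent(step * dt_us, neuron_id)
        for step, row in enumerate(raster)
        for neuron_id, fired in enumerate(row)
        if fired
    ]
```

Note that the round trip is lossy: timestamps are quantized to the bin width `dt_us`, and multiple spikes from one neuron within a bin collapse into one. Choices like these (time unit, bin width, handling of out-of-range events) are exactly the kind of detail that differs between platforms when no common format is agreed.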

Benchmarking methodologies for neuromorphic systems also lack standardization. Traditional performance metrics like FLOPS are inadequate for spike-based computation, while energy efficiency measurements vary widely across implementations. This inconsistency makes objective comparisons between different neuromorphic solutions nearly impossible, complicating investment and adoption decisions.
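As an illustration of why reporting conventions matter, the following sketch computes two energy figures from the same measurement: energy per inference and energy per synaptic operation. The function names and all input values are hypothetical placeholders, not measurements from any real chip, and the sketch assumes average power is measured over the whole run.

```python
def energy_per_inference_joules(avg_power_w, duration_s, n_inferences):
    """Total energy (average power x time) divided by completed inferences."""
    if n_inferences <= 0:
        raise ValueError("n_inferences must be positive")
    return avg_power_w * duration_s / n_inferences


def energy_per_synaptic_op_joules(avg_power_w, duration_s, n_synaptic_ops):
    """Total energy divided by synaptic operations (spike deliveries)."""
    if n_synaptic_ops <= 0:
        raise ValueError("n_synaptic_ops must be positive")
    return avg_power_w * duration_s / n_synaptic_ops


if __name__ == "__main__":
    # Placeholder values for illustration only.
    power_w, seconds = 0.5, 10.0
    print(energy_per_inference_joules(power_w, seconds, n_inferences=1000))
    print(energy_per_synaptic_op_joules(power_w, seconds, n_synaptic_ops=2_000_000))
```

The two figures can rank the same systems differently, since a chip that needs many synaptic operations per inference may look efficient on one metric and inefficient on the other. Results are comparable only when the metric, the workload, and what is included in the power measurement are all stated.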

The fragmentation extends to model representation as well. While the machine learning community has converged on standards like ONNX for traditional neural networks, equivalent standards for spiking neural networks and other neuromorphic models remain underdeveloped, creating barriers to model sharing and collaborative optimization.

Industry consortia and academic initiatives have begun addressing these standardization challenges, but progress remains slow due to competing commercial interests and the rapidly evolving nature of neuromorphic technology. Establishing common standards will be essential for neuromorphic computing to move beyond specialized applications and support mainstream machine learning workloads at scale.
