Why Neuromorphic Chips Are Integral to Emerging AI Technologies

OCT 9, 2025 · 9 MIN READ

Neuromorphic Computing Background and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of the human brain. This approach emerged in the late 1980s when Carver Mead first proposed the concept of using electronic analog circuits to mimic neuro-biological architectures. Since then, the field has evolved significantly, transitioning from theoretical frameworks to practical implementations that promise to revolutionize artificial intelligence systems.

The evolution of neuromorphic computing has been driven by the limitations of traditional von Neumann architectures, particularly when handling complex AI workloads. Conventional computing systems separate memory and processing units, creating a bottleneck that impedes performance and energy efficiency when processing neural network operations. Neuromorphic chips address this fundamental constraint by integrating memory and computation, similar to how neurons and synapses function in biological brains.

Recent technological advancements have accelerated the development trajectory of neuromorphic computing. The miniaturization of transistors, improvements in materials science, and innovations in circuit design have collectively enabled the creation of more sophisticated neuromorphic systems. Notable milestones include IBM's TrueNorth chip, Intel's Loihi, and BrainChip's Akida, each representing significant steps toward brain-inspired computing at scale.

The primary objective of neuromorphic computing is to enable more efficient processing of AI workloads while dramatically reducing power consumption. Unlike traditional processors that require substantial energy for complex AI operations, neuromorphic chips aim to perform similar tasks with orders of magnitude less power. This efficiency stems from their event-driven processing nature, where computation occurs only when needed, similar to biological neural systems.

Beyond energy efficiency, neuromorphic computing targets capabilities that remain challenging for conventional AI systems. These include real-time learning, adaptation to new environments, and robust operation in the face of hardware failures. The ultimate goal is to create computing systems that can perceive, learn, and make decisions with the flexibility and resilience characteristic of biological intelligence, but with the speed and precision of electronic systems.

Looking forward, the field is trending toward greater integration with other emerging technologies, including quantum computing, advanced materials, and novel memory technologies like memristors. These convergences promise to further enhance the capabilities of neuromorphic systems, potentially enabling entirely new applications in robotics, autonomous vehicles, healthcare, and beyond.

Market Analysis for Brain-Inspired Computing Solutions

The neuromorphic computing market is experiencing significant growth, driven by the increasing demand for AI applications that require efficient processing of complex neural networks. Current market estimates value the global neuromorphic computing sector at approximately $3.2 billion in 2023, with projections indicating a compound annual growth rate (CAGR) of 24.7% through 2030, potentially reaching $15.8 billion by the end of the decade.
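As a quick sanity check on the growth figures above, the compounding arithmetic can be sketched in a few lines. The function names are illustrative, and the seven-year horizon (2023 to 2030) is an assumption about how the quoted CAGR is applied, since the text does not state the base year convention.

```python
def project_value(start: float, cagr: float, years: int) -> float:
    """Compound a starting value at a fixed annual growth rate."""
    return start * (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate that takes `start` to `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Figures quoted in the text: $3.2B in 2023, 24.7% CAGR, horizon 2030.
    years = 2030 - 2023  # assumed number of compounding periods
    print(f"projected 2030 value: ${project_value(3.2, 0.247, years):.1f}B")
    print(f"CAGR implied by $15.8B: {implied_cagr(3.2, 15.8, years):.1%}")
```

Under that assumption, compounding 24.7% for seven years gives roughly $15.0 billion, slightly below the quoted $15.8 billion, which would correspond to a rate of about 25.6%. The gap is small and may simply reflect rounding or a different base year in the underlying estimate.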

The demand for brain-inspired computing solutions stems primarily from five key sectors. First, autonomous vehicles require real-time processing capabilities with minimal power consumption for object recognition and decision-making systems. This segment alone is expected to generate $2.1 billion in neuromorphic computing demand by 2027.

Second, the healthcare industry is increasingly adopting neuromorphic solutions for medical imaging analysis, patient monitoring systems, and drug discovery processes. The medical applications market for neuromorphic chips is growing at 29.3% annually, outpacing the overall market average.

Third, industrial automation and robotics represent a substantial market opportunity, with manufacturers seeking energy-efficient solutions for machine vision, predictive maintenance, and adaptive control systems. This sector currently accounts for approximately 18% of the total neuromorphic computing market.

Fourth, edge computing applications are driving demand for low-power neuromorphic solutions that can process sensory data locally without constant cloud connectivity. Market analysis indicates that edge AI implementations using neuromorphic architectures can reduce power consumption by up to 95% compared to traditional computing approaches.

Finally, the consumer electronics segment is beginning to incorporate neuromorphic elements in smartphones, wearables, and smart home devices to enable advanced AI features while preserving battery life. This represents the fastest-growing application segment with a 31.2% CAGR.

Geographically, North America currently leads the market with approximately 42% share, followed by Europe (28%) and Asia-Pacific (24%). However, the Asia-Pacific region is expected to demonstrate the highest growth rate over the next five years due to significant investments in AI infrastructure by countries like China, Japan, and South Korea.

The market is characterized by a combination of established semiconductor companies pivoting toward neuromorphic designs and specialized startups focused exclusively on brain-inspired architectures. Venture capital funding for neuromorphic computing startups has increased by 87% over the past three years, indicating strong investor confidence in the technology's commercial potential.

Current Neuromorphic Technology Landscape and Barriers

The neuromorphic computing landscape has evolved significantly over the past decade, with major technological advancements from both academic institutions and industry leaders. Currently, several prominent neuromorphic chip architectures dominate the field, including IBM's TrueNorth, Intel's Loihi, BrainChip's Akida, and SynSense's Dynap-SE. These chips employ different approaches to emulate neural processing, with varying degrees of biological fidelity and computational efficiency.

IBM's TrueNorth represents one of the earliest large-scale neuromorphic architectures, featuring 1 million digital neurons and 256 million synapses organized across 4,096 neurosynaptic cores. Intel's Loihi, now in its second generation, has advanced the field with a 128-core design supporting up to 1 million neurons per chip and on-chip learning capabilities. BrainChip's Akida focuses on edge AI applications with its event-based processing paradigm, while SynSense specializes in ultra-low power neuromorphic vision systems.

Despite these advancements, significant technical barriers persist in neuromorphic computing. The foremost challenge involves scaling these architectures while maintaining energy efficiency. Current fabrication techniques struggle to integrate the dense, complex connectivity patterns found in biological neural networks without prohibitive energy costs or performance degradation. Most existing neuromorphic systems still fall well short of the efficiency of the human brain, which runs on a power budget of approximately 20 watts.

Memory-computation integration presents another substantial hurdle. Traditional von Neumann architectures separate memory and processing units, creating bottlenecks that neuromorphic designs aim to overcome. However, implementing truly co-located memory and computation remains challenging with current materials and manufacturing processes. Novel memory technologies like memristors, phase-change memory, and spintronic devices show promise but face reliability and scalability issues.
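To make the idea of co-located memory and computation concrete, the sketch below models an idealized resistive crossbar: weights are stored as device conductances, input voltages are applied to the rows, and the column currents form a matrix-vector product without moving the weights. This is a textbook idealization rather than a model of any particular chip; it ignores wire resistance, device variability, and read noise, and the function name and values are hypothetical.

```python
from typing import List


def crossbar_currents(conductances: List[List[float]],
                      voltages: List[float]) -> List[float]:
    """Column currents of an ideal crossbar.

    conductances[i][j] is the conductance (siemens) of the device joining
    input row i to output column j; voltages[i] is the voltage on row i.
    Ohm's law gives each device current, and Kirchhoff's current law sums
    them along each column: I_j = sum_i G[i][j] * V[i].
    """
    if len(conductances) != len(voltages):
        raise ValueError("one voltage per crossbar row is required")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for row, v in zip(conductances, voltages):
        for j, g in enumerate(row):
            currents[j] += g * v  # the stored weight is used where it lives
    return currents


if __name__ == "__main__":
    # Hypothetical 3x2 array of conductances (siemens) and row voltages (volts).
    G = [[1e-6, 2e-6],
         [3e-6, 4e-6],
         [5e-6, 6e-6]]
    V = [0.1, 0.2, 0.3]
    print(crossbar_currents(G, V))
```

In a physical array the summation happens in the analog domain in a single step, which is where the potential energy saving comes from; the loop here only mirrors the arithmetic.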

Programming paradigms for neuromorphic systems constitute a significant barrier to widespread adoption. Unlike conventional computing platforms with established programming frameworks, neuromorphic chips require specialized approaches for algorithm development and deployment. The lack of standardized programming models and development tools limits accessibility for software developers and AI researchers without specialized neuromorphic expertise.

Interoperability with existing AI ecosystems represents another critical challenge. Most current AI frameworks and applications are designed for traditional computing architectures, creating integration difficulties for neuromorphic systems. Bridging this gap requires substantial adaptation of existing AI workflows or development of entirely new methodologies tailored to neuromorphic processing paradigms.

Lastly, manufacturing scalability remains problematic. Current neuromorphic chips typically employ specialized fabrication processes that are difficult to scale to high-volume production. This manufacturing complexity contributes to higher costs and limited availability, restricting broader commercial adoption despite the technology's theoretical advantages for emerging AI applications.

Contemporary Neuromorphic Chip Implementations

01 Neuromorphic architecture design and implementation

Neuromorphic chips are designed to mimic the structure and functionality of the human brain, using specialized hardware architectures that enable efficient processing of neural network operations. These designs incorporate parallel processing elements, synaptic connections, and memory structures that closely resemble biological neural systems. The architecture typically includes arrays of artificial neurons interconnected through configurable synapses, allowing for efficient implementation of brain-inspired computing paradigms that can handle complex cognitive tasks with lower power consumption compared to traditional computing architectures.

- Neuromorphic architecture design and implementation: Neuromorphic chips are designed to mimic the structure and functionality of the human brain, incorporating neural networks and synaptic connections in hardware form. These architectures typically feature massively parallel processing capabilities, low power consumption, and the ability to perform complex cognitive tasks. The implementation involves specialized circuit designs that can simulate neurons and synapses, enabling efficient processing of neural network algorithms directly in hardware.

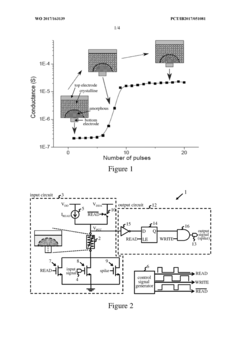

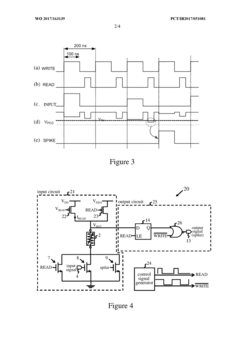

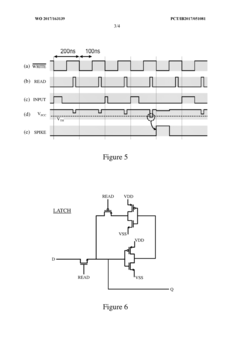

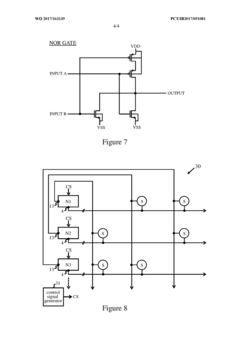

- Memristor-based neuromorphic computing: Memristors are used as key components in neuromorphic chips to simulate synaptic behavior. These non-volatile memory devices can change their resistance based on the history of applied voltage and current, making them ideal for implementing synaptic weights in neural networks. Memristor-based neuromorphic systems offer advantages in terms of energy efficiency, density, and the ability to perform both memory and computing functions in the same device, enabling more brain-like processing capabilities.

- Spiking neural networks for neuromorphic computing: Spiking neural networks (SNNs) represent a biologically inspired approach to information processing in neuromorphic chips. Unlike traditional artificial neural networks, SNNs communicate through discrete events called spikes, similar to biological neurons. This event-driven processing enables more efficient computation and lower power consumption. Implementation of SNNs in hardware allows for real-time processing of sensory data and complex pattern recognition tasks with significantly reduced energy requirements.

- Applications of neuromorphic chips in AI and machine learning: Neuromorphic chips are increasingly being applied to artificial intelligence and machine learning tasks, particularly in edge computing scenarios where power efficiency is crucial. These applications include computer vision, speech recognition, natural language processing, and autonomous systems. The brain-inspired architecture of neuromorphic chips makes them particularly well-suited for pattern recognition, anomaly detection, and adaptive learning in real-world environments where traditional computing approaches may be limited by power constraints or processing speed.

- Integration of neuromorphic chips with conventional computing systems: Integrating neuromorphic chips with conventional computing architectures creates hybrid systems that leverage the strengths of both approaches. These hybrid systems can accelerate specific AI workloads while maintaining compatibility with existing software ecosystems. The integration typically involves specialized interfaces, data conversion mechanisms, and software frameworks that enable seamless communication between neuromorphic components and traditional processors. This approach allows for gradual adoption of neuromorphic computing in existing applications while maximizing overall system performance and energy efficiency.
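The spiking behavior described in the list above can be illustrated with a discrete-time leaky integrate-and-fire (LIF) neuron, a common simplified model in the SNN literature. This is a minimal sketch with hypothetical parameter values, not the neuron model of any specific chip.

```python
from typing import List


def simulate_lif(input_current: List[float],
                 threshold: float = 1.0,
                 leak: float = 0.9,
                 v_reset: float = 0.0) -> List[int]:
    """Discrete-time leaky integrate-and-fire neuron.

    At each step the membrane potential decays by the factor `leak`,
    adds the incoming current, and emits a spike (1) when it reaches
    `threshold`, after which it is reset to `v_reset`.
    """
    v = v_reset
    spikes = []
    for current in input_current:
        v = leak * v + current
        if v >= threshold:
            spikes.append(1)
            v = v_reset
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    # A constant sub-threshold input accumulates until the neuron fires.
    print(simulate_lif([0.5] * 6))
    # With no input there is nothing to integrate and no spikes are emitted.
    print(simulate_lif([0.0] * 6))
```

The output is a sparse train of 0s and 1s rather than a dense activation value, which is what allows downstream circuits to stay idle between spikes.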

02 Memristor-based neuromorphic computing

Memristors are used as key components in neuromorphic chips to emulate synaptic behavior in artificial neural networks. These non-volatile memory devices can store and process information simultaneously, similar to biological synapses. Memristor-based neuromorphic systems offer advantages in terms of energy efficiency, density, and the ability to implement spike-timing-dependent plasticity (STDP) learning rules. The variable resistance states of memristors enable the representation of synaptic weights in hardware, facilitating on-chip learning and adaptation capabilities that are essential for neuromorphic computing applications.

03 Spiking neural networks implementation

Spiking neural networks (SNNs) represent a biologically plausible approach to neural computation in neuromorphic chips. Unlike conventional artificial neural networks, SNNs process information through discrete spikes or events, similar to biological neurons. This event-driven processing paradigm offers significant energy efficiency advantages as computation occurs only when necessary. Neuromorphic chips implementing SNNs incorporate specialized circuits for spike generation, propagation, and processing, enabling efficient execution of neuromorphic algorithms for pattern recognition, classification, and other cognitive tasks with reduced power consumption.

04 On-chip learning and adaptation mechanisms

Neuromorphic chips incorporate on-chip learning capabilities that allow the system to adapt and improve performance over time without external training. These mechanisms implement various learning rules such as spike-timing-dependent plasticity (STDP), reinforcement learning, and unsupervised learning directly in hardware. The integration of learning circuits enables continuous adaptation to changing inputs and environments, making these chips suitable for applications requiring real-time learning and adaptation. This approach reduces the need for offline training and enables more autonomous operation in edge computing scenarios.

05 Applications and integration of neuromorphic chips

Neuromorphic chips are being integrated into various applications including computer vision, speech recognition, autonomous systems, and edge computing devices. These chips excel in scenarios requiring real-time processing of sensory data with strict power constraints. The integration of neuromorphic processors with conventional computing systems creates hybrid architectures that leverage the strengths of both paradigms. Applications include smart sensors, robotics, augmented reality devices, and IoT systems where energy efficiency and real-time processing of unstructured data are critical requirements. The event-driven nature of neuromorphic computing makes these chips particularly suitable for processing data from neuromorphic sensors.
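The spike-timing-dependent plasticity (STDP) rule named among the on-chip learning mechanisms in this section can be sketched as a pair-based update, one of the standard textbook formulations. The amplitudes, time constants, and weight bounds below are hypothetical, and real hardware typically uses quantized or approximate variants.

```python
import math


def stdp_delta(dt_ms: float,
               a_plus: float = 0.01, a_minus: float = 0.012,
               tau_plus: float = 20.0, tau_minus: float = 20.0) -> float:
    """Pair-based STDP weight change for dt_ms = t_post - t_pre.

    A presynaptic spike shortly before a postsynaptic one (dt_ms > 0)
    strengthens the synapse; the reverse order (dt_ms < 0) weakens it.
    The effect decays exponentially with the size of the timing gap.
    """
    if dt_ms > 0:
        return a_plus * math.exp(-dt_ms / tau_plus)
    if dt_ms < 0:
        return -a_minus * math.exp(dt_ms / tau_minus)
    return 0.0


def apply_stdp(weight: float, dt_ms: float,
               w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Apply one STDP update and clamp the weight to [w_min, w_max]."""
    return min(w_max, max(w_min, weight + stdp_delta(dt_ms)))


if __name__ == "__main__":
    w = 0.5
    for dt in (5.0, -5.0, 50.0):
        print(f"dt={dt:+.0f} ms -> new weight {apply_stdp(w, dt):.4f}")
```

Because the update depends only on the timing of spikes at the two ends of one synapse, it can in principle be computed locally, which is why rules of this kind are attractive for learning directly in hardware.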

Leading Companies and Research Institutions in Neuromorphic AI

The neuromorphic chip market is in a growth phase, characterized by increasing adoption in AI applications due to their energy efficiency and brain-like processing capabilities. The market is projected to expand significantly as AI technologies mature, with an estimated value reaching several billion dollars by 2030. Technologically, the field shows varying maturity levels across players. IBM leads with established neuromorphic architectures like TrueNorth, while specialized companies such as Syntiant and Polyn Technology are advancing application-specific implementations. Academic-industry collaborations involving institutions like KAIST, Tsinghua University, and Zhejiang University are accelerating innovation. Samsung and SK Hynix bring semiconductor manufacturing expertise, while Alibaba and Huawei are integrating neuromorphic computing into their AI ecosystems. This diverse competitive landscape indicates a technology approaching commercial viability with multiple specialized applications emerging.

International Business Machines Corp.

Technical Solution: IBM has developed TrueNorth, a groundbreaking neuromorphic chip architecture that mimics the brain's neural structure with 1 million programmable neurons and 256 million synapses. The chip operates on an event-driven, parallel, and fault-tolerant design that consumes only 70mW of power while delivering the equivalent of 46 billion synaptic operations per second per watt. IBM's neuromorphic technology implements spiking neural networks (SNNs) that process information asynchronously, similar to biological neurons. This approach enables real-time sensory processing capabilities while maintaining extremely low power consumption. IBM has further advanced this technology with their second-generation neuromorphic chip design that incorporates phase-change memory (PCM) elements to enable on-chip learning capabilities, allowing the system to adapt and learn from its environment dynamically.

Strengths: Exceptional energy efficiency (1000x more efficient than conventional chips), scalable architecture, and mature development ecosystem. Weaknesses: Requires specialized programming paradigms different from traditional computing, and the technology still faces challenges in broad commercial deployment beyond research applications.

SYNTIANT CORP

Technical Solution: Syntiant has developed the Neural Decision Processor (NDP), a specialized neuromorphic chip architecture optimized for edge AI applications, particularly in always-on voice and sensor processing. Their NDP100 and NDP120 chips implement a unique approach to neuromorphic computing that focuses on ultra-low power consumption while maintaining high accuracy for specific AI tasks. The technology uses a non-von Neumann architecture that processes information in a highly parallel manner, similar to the human brain. Syntiant's chips can run deep learning algorithms while consuming less than 1mW of power, enabling battery-powered devices to perform continuous AI processing without significant battery drain. The company's technology has been deployed in millions of devices, demonstrating commercial viability in consumer electronics, particularly for keyword spotting and wake word detection applications.

Strengths: Extremely low power consumption (100-1000x more efficient than traditional processors for specific tasks), optimized for edge deployment, and proven commercial implementation. Weaknesses: More specialized for audio processing applications rather than general-purpose neuromorphic computing, and limited flexibility compared to some research-oriented neuromorphic platforms.

Key Patents and Breakthroughs in Brain-Inspired Computing

Artificial neuron apparatus

Patent WO2017163139A1

Innovation

- An artificial neuron apparatus utilizing a resistive memory cell with configurable input and output circuits, allowing for alternating read and write phases controlled by simple periodic control signals, enabling progressive change in cell resistance and efficient neuron operation in neural networks, with compact integration for high-density implementation.

Synapse device, manufacturing method thereof, and neuromorphic device including synapse device

Patent Pending US20240136445A1

Innovation

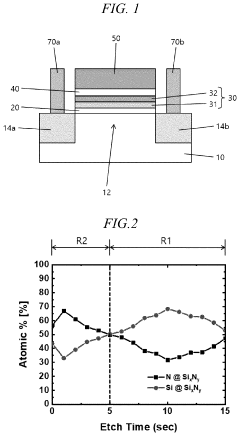

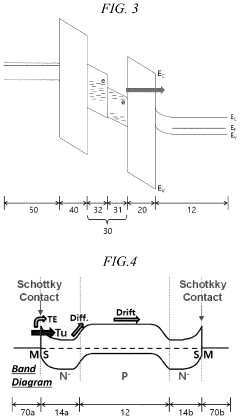

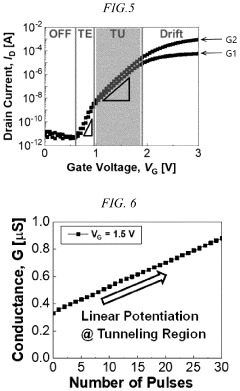

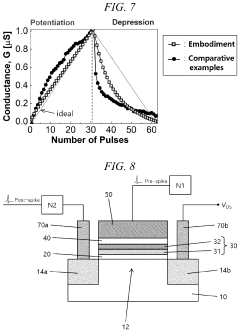

- A synapse device with a multilayer charge trap layer structure, comprising a shallower first silicon nitride layer and a deeper second silicon nitride layer, forming Schottky junctions, enhances synaptic weight update speed and linearity, and is compatible with CMOS technology.

Energy Efficiency Comparison with Traditional Computing Paradigms

Neuromorphic chips demonstrate remarkable energy efficiency advantages over traditional computing architectures when processing AI workloads. While conventional von Neumann architectures separate memory and processing units, necessitating constant data movement that consumes significant power, neuromorphic designs co-locate memory and computation similar to biological neural systems. This fundamental architectural difference results in dramatic energy savings, with neuromorphic systems typically consuming 100-1000x less power than traditional GPUs and CPUs for equivalent neural network tasks.

The energy efficiency gap becomes particularly pronounced in always-on applications and edge computing scenarios. For instance, Intel's Loihi neuromorphic research chip can perform certain machine learning tasks using just milliwatts of power, compared to several watts required by conventional processors. This translates to potentially extending battery life from hours to days or even weeks in portable AI devices.
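The battery-life claim above follows from simple division of stored energy by average power draw. The sketch below uses hypothetical numbers (a 10 Wh battery, a 2 W conventional processor, a 20 mW neuromorphic workload) and deliberately ignores everything else in the device that consumes power, so it shows only the shape of the argument.

```python
def battery_life_hours(capacity_wh: float, average_power_w: float) -> float:
    """Runtime in hours for a battery of `capacity_wh` watt-hours
    drained at a constant `average_power_w` watts."""
    if average_power_w <= 0:
        raise ValueError("average power must be positive")
    return capacity_wh / average_power_w


if __name__ == "__main__":
    capacity_wh = 10.0  # hypothetical battery capacity
    for label, power_w in (("conventional, 2 W", 2.0),
                           ("neuromorphic, 20 mW", 0.02)):
        hours = battery_life_hours(capacity_wh, power_w)
        print(f"{label}: {hours:.0f} h ({hours / 24:.1f} days)")
```

With these assumed values the runtime moves from about 5 hours to about 500 hours, roughly three weeks. In a real product the display, radio, and sensors would usually dominate once the processor draw falls this low.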

Quantitative benchmarks reveal that neuromorphic chips excel particularly in sparse, event-driven processing scenarios. When handling temporal data streams with irregular patterns—such as sensor inputs in autonomous vehicles or IoT devices—traditional computing paradigms waste energy processing null or redundant information. Neuromorphic systems, with their event-based processing capability, activate computational resources only when meaningful changes occur in the input data.
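One simple way to picture event-based processing of a sensor stream is send-on-delta encoding, where a sample is forwarded only when it differs enough from the last forwarded value. The sketch below is an illustration of that idea with a hypothetical threshold and signal; it is not taken from any vendor's toolchain.

```python
from typing import List, Tuple


def to_events(samples: List[float],
              threshold: float) -> List[Tuple[int, float]]:
    """Emit (index, value) events only when the signal has moved by at
    least `threshold` since the last emitted value."""
    events = []
    last = None
    for i, x in enumerate(samples):
        if last is None or abs(x - last) >= threshold:
            events.append((i, x))
            last = x
    return events


if __name__ == "__main__":
    # A mostly static signal with two meaningful changes.
    signal = [0.0, 0.0, 0.01, 0.5, 0.5, 0.52, 1.0, 1.0]
    events = to_events(signal, threshold=0.1)
    print(events)
    print(f"{len(events)} events for {len(signal)} samples")
```

Downstream computation would then run once per event rather than once per sample, so the work scales with how often the input changes rather than with the sampling rate.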

The efficiency advantage extends beyond raw power consumption to computational density metrics. Measured in terms of operations per watt, neuromorphic architectures can achieve 10-100x improvement in energy efficiency for specific neural network workloads compared to even the most optimized traditional hardware accelerators. This efficiency stems from their ability to perform massively parallel, low-precision computations that closely match the requirements of many modern AI algorithms.

Temperature management represents another critical advantage. Traditional high-performance computing systems require elaborate cooling infrastructure that can consume as much energy as the computation itself. Neuromorphic chips operate at significantly lower temperatures due to their reduced power consumption, minimizing or eliminating cooling requirements and further enhancing their overall energy efficiency profile.

Looking forward, as process technologies advance and neuromorphic architectural innovations continue, the energy efficiency gap is expected to widen further. This trajectory positions neuromorphic computing as a potentially transformative technology for sustainable AI deployment, particularly as AI systems become more pervasive in energy-constrained environments and applications requiring continuous operation.

The energy efficiency gap becomes particularly pronounced in always-on applications and edge computing scenarios. For instance, Intel's Loihi neuromorphic research chip can perform certain machine learning tasks using just milliwatts of power, compared to several watts required by conventional processors. This translates to potentially extending battery life from hours to days or even weeks in portable AI devices.

Quantitative benchmarks reveal that neuromorphic chips excel particularly in sparse, event-driven processing scenarios. When handling temporal data streams with irregular patterns—such as sensor inputs in autonomous vehicles or IoT devices—traditional computing paradigms waste energy processing null or redundant information. Neuromorphic systems, with their event-based processing capability, activate computational resources only when meaningful changes occur in the input data.

The efficiency advantage extends beyond raw power consumption to computational density metrics. Measured in terms of operations per watt, neuromorphic architectures can achieve 10-100x improvement in energy efficiency for specific neural network workloads compared to even the most optimized traditional hardware accelerators. This efficiency stems from their ability to perform massively parallel, low-precision computations that closely match the requirements of many modern AI algorithms.

Thermal management is another significant advantage. Traditional high-performance computing systems require elaborate cooling infrastructure that can consume as much energy as the computation itself. Because neuromorphic chips draw far less power, they dissipate far less heat, which reduces or in some cases eliminates active cooling requirements and further improves their overall energy efficiency profile.

Looking forward, as process technologies advance and neuromorphic architectural innovations continue, the energy efficiency gap is expected to widen further. This trajectory positions neuromorphic computing as a potentially transformative technology for sustainable AI deployment, particularly as AI systems become more pervasive in energy-constrained environments and applications requiring continuous operation.

Integration Challenges with Existing AI Frameworks

The integration of neuromorphic chips with existing AI frameworks presents significant technical challenges that must be addressed for widespread adoption. Mainstream frameworks such as TensorFlow and PyTorch, together with the CUDA platform they commonly run on, have been optimized for conventional von Neumann architectures and GPU acceleration, creating fundamental compatibility issues with neuromorphic hardware. These frameworks rely on computational models built around dense matrix operations and floating-point arithmetic, which diverge substantially from the spike-based processing paradigm of neuromorphic systems.
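To make the contrast with matrix arithmetic concrete, the sketch below simulates a single leaky integrate-and-fire (LIF) neuron, a common simplified model in spiking systems. It is a plain-Python illustration under assumed parameters (time constant, threshold, reset value, and input current are all hypothetical), not the neuron model of any specific chip.

```python
from typing import List, Sequence


def lif_run(input_current: Sequence[float], tau: float = 10.0,
            v_threshold: float = 1.0, v_reset: float = 0.0,
            dt: float = 1.0) -> List[int]:
    """Simulate one leaky integrate-and-fire neuron with forward Euler steps.

    Returns one 0/1 value per time step: 1 where the neuron fired.
    """
    v = v_reset
    spikes: List[int] = []
    for i_t in input_current:
        # Membrane potential leaks toward zero and integrates the input.
        v += (dt / tau) * (i_t - v)
        if v >= v_threshold:
            spikes.append(1)   # the output is an event, not a real number
            v = v_reset        # state is reset after firing
        else:
            spikes.append(0)
    return spikes


strong_input = lif_run([1.5] * 50)  # drives the neuron above threshold
weak_input = lif_run([0.5] * 50)    # settles below threshold, never fires
```

Unlike a layer in a conventional framework, the neuron carries state from one time step to the next and communicates through binary events spread over time, which is why a network of such units does not map directly onto a single floating-point matrix multiplication.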

Development environments for neuromorphic computing remain fragmented and immature compared to the robust ecosystems supporting traditional AI. This fragmentation creates substantial barriers for developers attempting to migrate existing AI applications to neuromorphic platforms. The lack of standardized programming interfaces specifically designed for event-based processing further complicates integration efforts, requiring specialized knowledge that most AI practitioners do not possess.

Data representation presents another critical challenge. Conventional AI systems typically process data in batch-oriented, synchronous formats, while neuromorphic systems excel with asynchronous, event-driven inputs. This fundamental mismatch necessitates complex data transformation layers that can introduce latency and efficiency losses, potentially negating the inherent advantages of neuromorphic hardware.
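One simple form such a transformation layer can take is rate coding, where each value in a dense frame becomes the firing probability of an input channel over a window of time steps. The sketch below is a minimal illustration under that assumption; the function name, the frame values, and the window length are hypothetical, and real systems may use other encodings.

```python
import random
from typing import List, Sequence


def rate_encode(intensities: Sequence[float], num_steps: int,
                seed: int = 0) -> List[List[int]]:
    """Turn one dense frame into `num_steps` binary spike vectors.

    Each value p in [0, 1] is treated as the per-step firing probability
    of its own input channel (Bernoulli rate coding).
    """
    if any(p < 0.0 or p > 1.0 for p in intensities):
        raise ValueError("intensities must lie in [0, 1]")
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    return [[1 if rng.random() < p else 0 for p in intensities]
            for _ in range(num_steps)]


frame = [0.0, 0.25, 1.0]  # hypothetical normalised pixel values
spike_train = rate_encode(frame, num_steps=100)

# Observed firing rate per channel approximates the original intensity.
firing_rates = [sum(step[i] for step in spike_train) / len(spike_train)
                for i in range(len(frame))]
```

The cost of the conversion is visible in the shape of the result: a single frame becomes 100 time steps of spikes, and a shorter window recovers the intensities less accurately. That trade-off is one source of the latency and efficiency losses described above.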

The training methodologies for neuromorphic systems differ significantly from the backpropagation-based approaches dominant in deep learning. While software frameworks such as Nengo and hardware platforms such as SpiNNaker have emerged to support neuromorphic programming, they often require different algorithmic approaches and model architectures. As a result, pre-trained models from conventional frameworks generally cannot be transferred directly to neuromorphic platforms without substantial modification or complete redesign.
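Part of the difference is that a spiking neuron's output is a step function of its membrane potential, whose derivative is zero almost everywhere, so gradients cannot flow through it unchanged. One widely used workaround, which the text above does not itself describe, is to keep the step in the forward pass and substitute a smooth "surrogate" derivative in the backward pass. The sketch below illustrates that idea with a fast-sigmoid-style surrogate; the function names and the slope value are illustrative assumptions.

```python
def spike(v: float, threshold: float = 1.0) -> float:
    """Forward pass: the neuron either fires (1.0) or does not (0.0)."""
    return 1.0 if v >= threshold else 0.0


def spike_grad_exact(v: float, threshold: float = 1.0) -> float:
    """True derivative of the step away from the threshold: always zero,
    so no learning signal would pass through the neuron."""
    return 0.0


def spike_grad_surrogate(v: float, threshold: float = 1.0,
                         slope: float = 5.0) -> float:
    """Surrogate derivative used in place of the exact one during training.

    Peaks at the threshold and decays smoothly on either side.
    """
    return 1.0 / (1.0 + slope * abs(v - threshold)) ** 2


# Membrane potentials below, at, and above the firing threshold.
potentials = [0.2, 0.8, 1.0, 1.2]
exact = [spike_grad_exact(v) for v in potentials]
surrogate = [spike_grad_surrogate(v) for v in potentials]
```

The exact gradient is zero at every point, while the surrogate is largest for potentials near the threshold, which gives a training procedure something to follow. Models trained this way still differ in structure from conventional networks, so the transfer problem described above remains.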

Performance benchmarking and optimization tools for neuromorphic systems remain underdeveloped compared to those available for GPUs and TPUs. This deficiency makes it difficult to accurately compare performance metrics and optimize neuromorphic implementations against existing solutions, creating uncertainty for organizations considering adoption.

Hybrid computing approaches that combine conventional and neuromorphic processing elements show promise but introduce additional complexity in workload distribution, memory management, and synchronization between different computational paradigms. These challenges are compounded by the limited availability of middleware solutions capable of efficiently orchestrating heterogeneous computing resources that include neuromorphic components.
