Understanding the Interplay of Neuromorphic Chips with AI Systems

OCT 9, 2025 · 9 MIN READ

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of the human brain. This approach emerged in the late 1980s when Carver Mead introduced the concept of using electronic analog circuits to mimic neuro-biological architectures. Since then, the field has evolved through several distinct phases, moving from theoretical frameworks to practical implementations that are increasingly relevant in today's AI-dominated technological landscape.

The evolution of neuromorphic computing can be traced through four major phases. The foundational phase (1980s-1990s) established the theoretical underpinnings and basic circuit designs. The experimental phase (2000s) saw the development of small-scale neuromorphic systems and proof-of-concept demonstrations. The scaling phase (2010s) brought forth larger, more complex systems with improved capabilities, exemplified by IBM's TrueNorth and Intel's Loihi chips. Currently, we are in the integration phase, where neuromorphic systems are being incorporated into practical applications and commercial products.

A key driver behind neuromorphic computing development has been the inherent limitations of traditional von Neumann architectures when applied to AI workloads. Conventional computing systems separate memory and processing units, creating a bottleneck that becomes particularly problematic for neural network operations. Neuromorphic designs address this by integrating memory and computation, similar to how neurons and synapses function in biological systems.

The primary objectives of neuromorphic computing research center on three interconnected goals. The first is energy efficiency that dramatically surpasses conventional computing systems for AI applications; neuromorphic chips are reported to consume orders of magnitude less power than GPUs on comparable neural network tasks. The second is real-time processing of sensory data with minimal latency, which is crucial for applications like autonomous vehicles and advanced robotics. The third is online learning that allows systems to adapt to new information without complete retraining.

Recent technological advancements have accelerated progress in this field, particularly improvements in materials science, fabrication techniques, and neural network algorithms. The development of memristors and other novel components has enabled more efficient implementation of synaptic functions. Meanwhile, spiking neural networks (SNNs) have emerged as a computational model particularly well-suited to neuromorphic hardware, offering potential advantages in both efficiency and biological plausibility.
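
To make the idea of memristors implementing synaptic functions more concrete, the following minimal sketch models an idealized memristor crossbar. Each stored conductance acts as a synaptic weight, and applying row voltages yields column currents that equal a matrix-vector product (Ohm's law plus Kirchhoff's current law). The function name `crossbar_mvm` and all numeric values are illustrative assumptions, and the model ignores wire resistance, device noise, and nonlinearity.

```python
# Idealized memristor crossbar: illustrative only, not a device-accurate model.

def crossbar_mvm(conductances, voltages):
    """Return column currents I_j = sum_i G[i][j] * V[i]."""
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("one input voltage is required per crossbar row")
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law per device; currents on a shared column wire add up.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


# Hypothetical 3x2 array of conductances in siemens (assumed values).
G = [
    [1e-6, 2e-6],
    [3e-6, 0.0],
    [0.0, 4e-6],
]
V = [0.5, 1.0, 0.25]  # read voltages in volts (assumed values)

column_currents = crossbar_mvm(G, V)
print(column_currents)  # column currents in amperes
```

Because the weights stay in place and the multiply-accumulate happens in the array itself, no weight data has to travel between a separate memory and processor, which is the property the paragraph above attributes to memristive synapses.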

Looking forward, the trajectory of neuromorphic computing aims toward creating systems that not only mimic the brain's architecture but also approach its remarkable efficiency and adaptability. This vision includes developing chips capable of supporting increasingly sophisticated AI applications while maintaining minimal energy consumption, ultimately enabling edge AI capabilities that current technologies cannot support.

AI Market Demand for Brain-Inspired Computing

The global AI market is experiencing unprecedented growth, with neuromorphic computing emerging as a critical frontier technology. Market research indicates that the AI hardware market, which includes neuromorphic chips, is projected to reach $87.68 billion by 2025, growing at a CAGR of 37.5%. This substantial growth is driven by increasing demands for energy-efficient AI solutions that can process complex neural networks while minimizing power consumption.

Brain-inspired computing addresses several critical market needs that conventional computing architectures struggle to fulfill. Primary among these is the demand for edge AI applications, where processing must occur locally on devices with limited power resources. The IoT ecosystem, expected to connect over 75 billion devices by 2025, requires neuromorphic solutions that can perform sophisticated AI tasks with minimal energy consumption.

Healthcare represents another significant market driver, with neuromorphic systems showing promise for real-time patient monitoring, medical imaging analysis, and brain-computer interfaces. The healthcare AI market segment alone is anticipated to grow at 41.7% annually, reaching $45.2 billion by 2026, with neuromorphic computing positioned to capture a substantial portion of this growth.

Autonomous vehicles and advanced driver-assistance systems constitute another major market opportunity. These applications require real-time processing of sensor data with strict power and latency constraints—precisely the conditions where neuromorphic chips excel. Industry analysts predict that by 2030, approximately 70% of new vehicles will incorporate some level of autonomous capability, creating sustained demand for brain-inspired computing solutions.

The financial services industry has also demonstrated increasing interest in neuromorphic systems for fraud detection, algorithmic trading, and risk assessment. These applications benefit from the pattern recognition capabilities and energy efficiency of brain-inspired architectures.

Military and defense applications represent a specialized but lucrative market segment, with neuromorphic computing being explored for autonomous drones, threat detection systems, and battlefield analytics. Government investments in this sector have been substantial, with the U.S. Department of Defense allocating over $2 billion to AI research initiatives that include neuromorphic computing.

Consumer electronics manufacturers are increasingly integrating AI capabilities into smartphones, wearables, and smart home devices. This market segment values the low power consumption and real-time processing capabilities of neuromorphic systems, particularly for applications like voice recognition, image processing, and predictive user interfaces.

Neuromorphic Chip Technology Status and Barriers

Neuromorphic computing technology has reached a critical development stage globally, with significant advancements in both academic research and commercial applications. Current neuromorphic chips show promising energy efficiency, with reported power reductions of up to 100-1000x relative to traditional von Neumann architectures on well-suited neural network tasks. Leading implementations such as IBM's TrueNorth, Intel's Loihi, and BrainChip's Akida have demonstrated the viability of spike-based computing paradigms in real-world applications.

Despite these achievements, several substantial technical barriers impede widespread adoption. The most significant challenge remains the hardware-software co-design problem, where existing AI frameworks and algorithms are optimized for traditional computing architectures rather than event-driven, spike-based neuromorphic systems. This creates a significant translation gap between conventional deep learning models and neuromorphic implementations, limiting practical deployment scenarios.

Scalability presents another major hurdle, as current neuromorphic chips typically contain thousands to millions of neurons, whereas biological systems operate with billions of neurons. Manufacturing processes for these specialized architectures often require custom fabrication techniques that have not yet reached the economies of scale enjoyed by conventional semiconductor technologies, resulting in higher production costs and limited availability.

The lack of standardized benchmarking methodologies specifically designed for neuromorphic systems makes objective performance comparison difficult across different implementations. Traditional AI benchmarks fail to capture the unique advantages of neuromorphic computing, particularly in temporal processing and energy efficiency metrics, creating challenges in demonstrating value propositions to potential adopters.

From a geographical perspective, neuromorphic research exhibits distinct regional characteristics. North America leads in commercial development with companies like Intel and IBM driving innovation, while Europe maintains strength in theoretical foundations through initiatives like the Human Brain Project. The Asia-Pacific region, particularly China and Japan, has recently accelerated investments in neuromorphic technologies as part of broader AI sovereignty initiatives.

Integration challenges with existing computing infrastructure represent another significant barrier. Current data centers and edge computing platforms are not designed to incorporate neuromorphic accelerators efficiently, requiring substantial modifications to hardware interfaces, data pipelines, and system software. This integration complexity slows adoption in production environments where reliability and compatibility with existing systems are paramount.

Current Neuromorphic-AI Integration Approaches

01 Neuromorphic architecture design and implementation

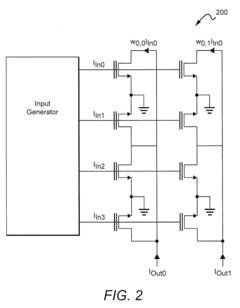

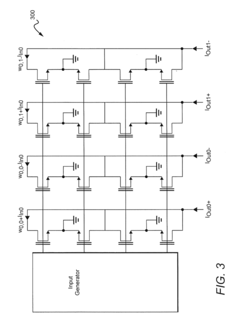

Neuromorphic chips are designed to mimic the structure and functionality of the human brain, using specialized architectures that integrate memory and processing. These designs typically incorporate neural networks, synaptic connections, and spike-based processing to achieve brain-like computation. The architecture focuses on parallel processing, energy efficiency, and the ability to learn from data, making these chips suitable for artificial intelligence applications that require cognitive capabilities similar to human reasoning.

- Neuromorphic architecture design and implementation: Neuromorphic chips are designed to mimic the structure and functionality of the human brain, using specialized architectures that integrate processing and memory. These designs typically incorporate neural networks, synaptic connections, and spike-based processing to achieve brain-like computation. The architecture often includes parallel processing elements, distributed memory, and event-driven operation to enable efficient pattern recognition and learning capabilities while consuming significantly less power than traditional computing architectures.

- Memristor-based neuromorphic computing: Memristors are used as key components in neuromorphic chips to emulate synaptic behavior. These devices can store and process information simultaneously, making them ideal for implementing neural networks in hardware. Memristor-based neuromorphic systems offer advantages in terms of power efficiency, density, and the ability to perform in-memory computing. They enable the implementation of learning algorithms directly in hardware and can maintain their state without power, allowing for persistent memory in neuromorphic applications.

- Spiking neural networks implementation: Spiking neural networks (SNNs) represent a biologically inspired approach to neuromorphic computing where information is processed using discrete spikes similar to biological neurons. These implementations focus on timing-dependent processing and event-driven computation, which can significantly reduce power consumption compared to traditional artificial neural networks. SNN-based neuromorphic chips excel at processing temporal data and can be trained using various learning rules including spike-timing-dependent plasticity (STDP), making them suitable for real-time sensory processing and pattern recognition tasks.

- On-chip learning and adaptation mechanisms: Neuromorphic chips incorporate on-chip learning capabilities that allow them to adapt to new data without requiring external training. These systems implement various learning algorithms directly in hardware, enabling continuous learning and adaptation in real-time applications. The on-chip learning mechanisms often utilize local learning rules that modify synaptic weights based on neural activity patterns, allowing for unsupervised and reinforcement learning directly on the device. This capability is crucial for applications requiring adaptation to changing environments or personalized learning.

- Integration with sensors and edge computing applications: Neuromorphic chips are increasingly being integrated with sensors for edge computing applications, enabling efficient processing of sensory data directly at the source. These integrated systems can perform complex tasks such as image recognition, speech processing, and anomaly detection with minimal power consumption. The close coupling of sensors with neuromorphic processing elements reduces latency and bandwidth requirements by extracting meaningful information before data transmission. This approach is particularly valuable for IoT devices, autonomous systems, and other applications where power efficiency and real-time processing are critical.
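
The spiking-network item in the list above can be illustrated with a leaky integrate-and-fire (LIF) neuron, a common textbook spiking model. This is a minimal sketch under assumed parameters (`tau`, `v_thresh`, and the input values are hypothetical), not the neuron model of any specific chip.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0, v_rest=0.0,
                 v_thresh=1.0, v_reset=0.0):
    """Simulate a leaky integrate-and-fire neuron with forward Euler steps.

    Returns (spike_steps, membrane_trace).
    """
    v = v_rest
    spike_steps = []
    trace = []
    for step, i_in in enumerate(input_current):
        # The membrane potential leaks toward rest and is driven by the input.
        v += (dt / tau) * (-(v - v_rest) + i_in)
        if v >= v_thresh:
            # Threshold crossing: emit a discrete spike and reset.
            spike_steps.append(step)
            v = v_reset
        trace.append(v)
    return spike_steps, trace


# Assumed inputs: a weak drive that never reaches threshold, and a strong one.
weak_spikes, _ = simulate_lif([0.5] * 50)
strong_spikes, _ = simulate_lif([1.5] * 50)
print("weak input spikes:", weak_spikes)
print("strong input spikes:", strong_spikes)
```

The neuron produces output only when its potential crosses the threshold, so a quiet input generates no events at all. That sparsity is what event-driven hardware exploits.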

02 Memristor-based neuromorphic computing

Memristors are used in neuromorphic chips to emulate synaptic behavior, providing a way to store and process information simultaneously. These devices can change their resistance based on the history of current flowing through them, similar to how synapses in the brain adjust their strength. Memristor-based neuromorphic systems offer advantages in terms of power efficiency, density, and the ability to implement learning algorithms directly in hardware, making them promising for next-generation AI applications.

03 Spiking neural networks implementation

Spiking neural networks (SNNs) are a key component of neuromorphic computing, mimicking the communication method of biological neurons through discrete spikes rather than continuous signals. Neuromorphic chips implementing SNNs can process temporal information more efficiently and naturally than traditional neural networks. These implementations often include specialized circuits for spike generation, propagation, and learning mechanisms such as spike-timing-dependent plasticity, enabling more brain-like computation with lower power consumption.

04 On-chip learning and adaptation mechanisms

Neuromorphic chips incorporate on-chip learning capabilities that allow them to adapt to new data without requiring external training. These mechanisms typically implement various forms of synaptic plasticity, enabling the chips to learn from experience similar to biological systems. On-chip learning reduces the need for constant communication with external systems, making these chips more autonomous and energy-efficient for edge computing applications where adaptability to changing environments is crucial.

05 Applications and integration of neuromorphic chips

Neuromorphic chips are being integrated into various applications including computer vision, natural language processing, robotics, and IoT devices. These chips excel in scenarios requiring real-time processing of sensory data, pattern recognition, and decision-making under uncertainty. The integration often involves specialized interfaces to connect with conventional computing systems or sensors, allowing neuromorphic processing to complement traditional computing approaches. This hybrid approach enables more efficient AI systems that combine the strengths of both neuromorphic and conventional computing paradigms.
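
One common form such a sensor interface can take is delta (send-on-change) encoding, which turns a regularly sampled signal into sparse events. The sketch below is a hypothetical illustration; the function name `delta_encode`, the threshold, and the sample readings are assumptions rather than any vendor's interface.

```python
def delta_encode(samples, threshold):
    """Convert sampled values into (index, polarity) events.

    An event is emitted only when the signal has moved by at least
    `threshold` since the last emitted event.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    if not samples:
        return []
    events = []
    reference = samples[0]
    for index, value in enumerate(samples[1:], start=1):
        change = value - reference
        if abs(change) >= threshold:
            events.append((index, 1 if change > 0 else -1))
            reference = value  # track the level at the last event
    return events


# Assumed temperature-like readings: mostly flat, with two real changes.
readings = [20.0, 20.1, 20.1, 20.2, 21.0, 21.1, 21.0, 19.8, 19.9]
events = delta_encode(readings, threshold=0.5)
print(events)
print(f"{len(events)} events from {len(readings)} samples")
```

Only the changes reach the neuromorphic processor, so a mostly static input produces little traffic and little downstream computation.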

Leading Neuromorphic Computing Industry Players

The neuromorphic chip market is currently in a growth phase, characterized by increasing integration with AI systems. The market size is expanding rapidly, projected to reach significant value as these chips offer energy-efficient alternatives to traditional computing architectures for AI applications. Regarding technical maturity, industry leaders like IBM, Intel, and Samsung have made substantial progress in developing commercial neuromorphic solutions, while companies such as Syntiant and Hercules Microelectronics are focusing on specialized applications. Academic institutions including Tsinghua University, KAIST, and Fudan University are contributing fundamental research. Alibaba, Huawei, and Microsoft are exploring neuromorphic computing to enhance their AI infrastructure, indicating growing commercial interest. The technology remains in transition from research to widespread commercial deployment, with varying degrees of maturity across different implementations.

International Business Machines Corp.

Technical Solution: IBM's neuromorphic chip technology, particularly TrueNorth and subsequent developments, represents a significant advancement in brain-inspired computing architectures. Their neuromorphic systems utilize a non-von Neumann architecture with distributed memory and processing units that mimic neural networks in biological brains. IBM's TrueNorth chip contains 1 million digital neurons and 256 million synapses organized into 4,096 neurosynaptic cores[1]. The company has further evolved this technology with more recent developments like analog AI hardware that leverages phase-change memory (PCM) for implementing neural network weights directly in hardware. IBM's neuromorphic systems excel at pattern recognition tasks while consuming minimal power - approximately 70mW during real-time operation, representing orders of magnitude improvement in energy efficiency compared to traditional computing architectures[2]. IBM has also developed programming frameworks and simulation environments specifically designed for neuromorphic computing, enabling researchers to develop applications that leverage the unique capabilities of these brain-inspired chips.

Strengths: Exceptional energy efficiency (orders of magnitude better than conventional architectures); highly scalable architecture; excellent for pattern recognition and sensory processing tasks; mature development ecosystem. Weaknesses: Limited applicability for certain types of computational problems; programming paradigm differs significantly from conventional computing, creating adoption barriers; digital implementations may lack the true parallelism of biological neural systems.

SYNTIANT CORP

Technical Solution: Syntiant has developed a specialized neuromorphic architecture focused specifically on audio and speech processing applications. Their Neural Decision Processors (NDPs) are designed to run deep learning algorithms for always-on applications with extremely low power consumption. Syntiant's chips implement a dataflow architecture in which data flows through the neural network with minimal transfers between memory and compute, dramatically reducing power consumption compared to traditional compute architectures. Their NDP120 chip can perform deep neural network processing while consuming less than 1mW of power[3], enabling always-on voice and sensor applications in battery-powered devices. The architecture directly implements neural networks in silicon, with memory and processing co-located. Syntiant's technology is particularly optimized for keyword spotting, speaker identification, and other audio event detection tasks, with the ability to run multiple concurrent neural networks for different sensing modalities. Their chips include built-in digital signal processing capabilities specifically tuned for audio preprocessing before neural network execution.

Strengths: Ultra-low power consumption (sub-milliwatt operation); highly optimized for specific audio/speech recognition tasks; small form factor suitable for integration into compact devices; production-ready solution with commercial deployment. Weaknesses: Limited application scope beyond audio processing; less flexible than general-purpose neuromorphic architectures; proprietary development environment may limit third-party innovation; relatively smaller scale neural networks compared to other neuromorphic solutions.

Breakthrough Patents in Brain-Inspired Computing

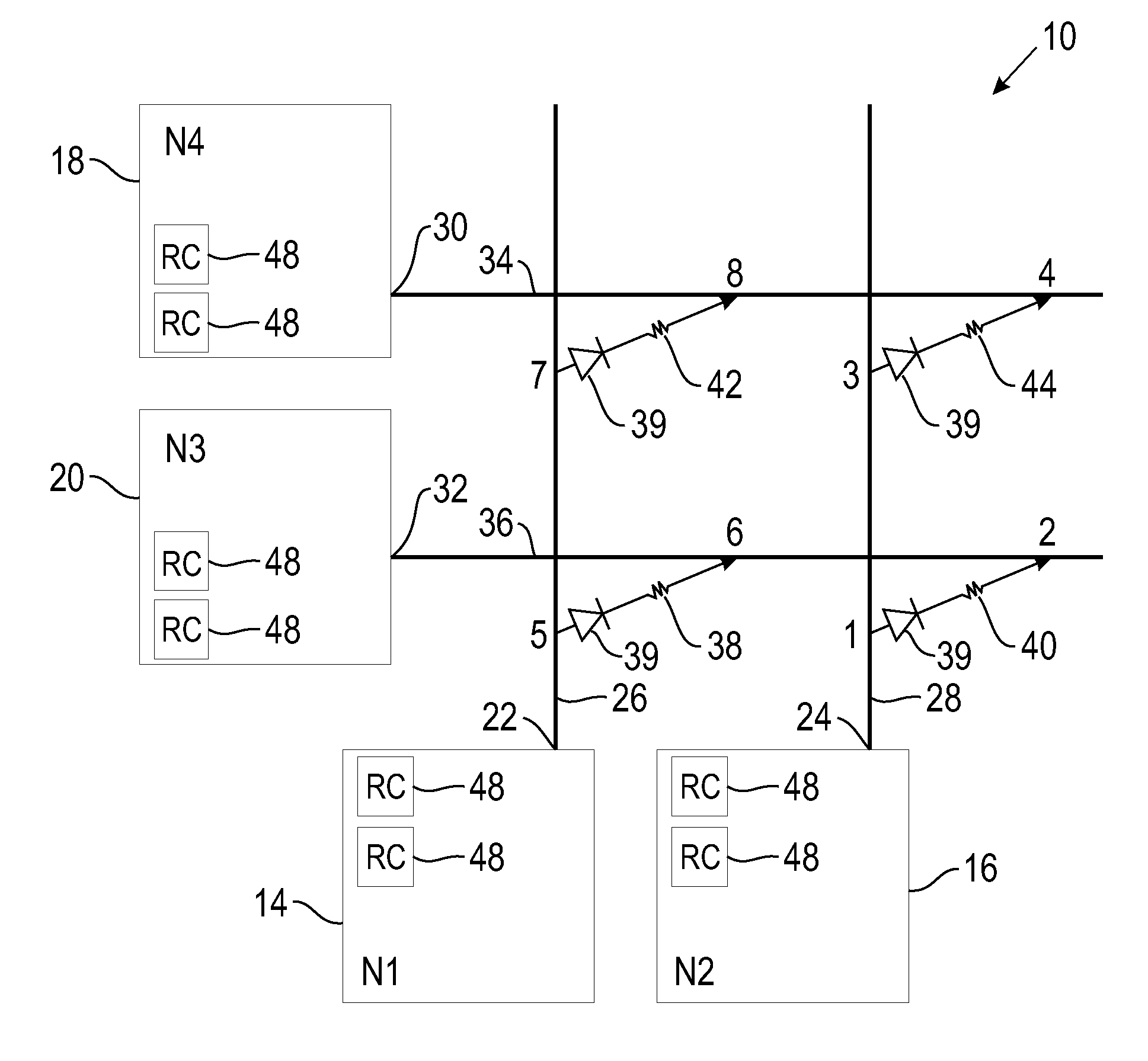

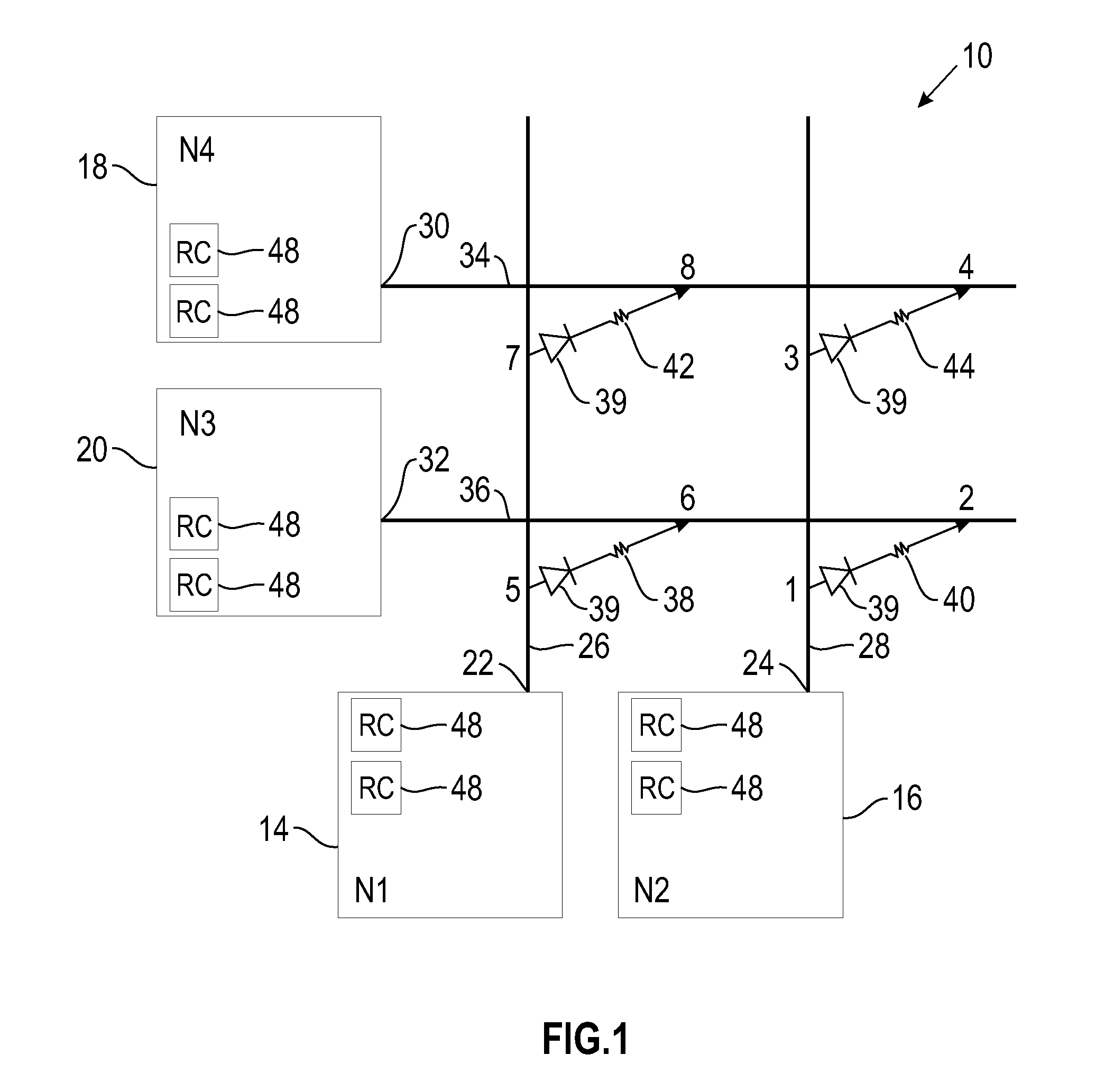

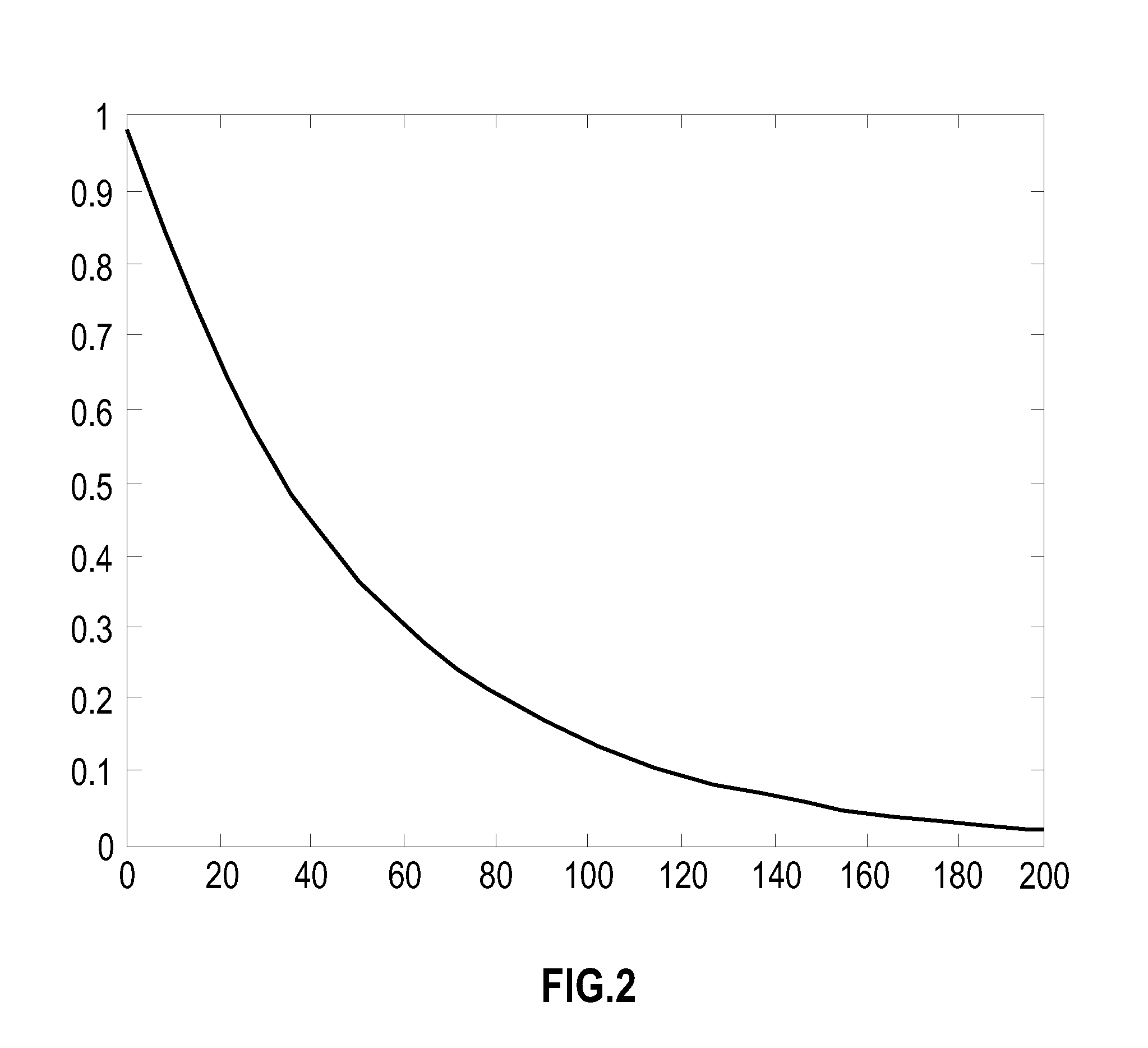

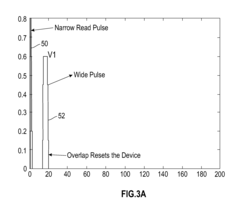

Producing spike-timing dependent plasticity in an ultra-dense synapse cross-bar array

Patent · Active · US20110153533A1

Innovation

- The implementation of a method and system that utilize alert and gate pulses to modulate the conductance of variable state resistors, where the timing of neuron spikes determines whether to increase or decrease synaptic conductance, achieved through RC circuits and pulse combinations in an ultra-dense cross-bar array.
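
The timing dependence described here, where spike order decides whether conductance rises or falls, can be sketched with the standard pair-based exponential STDP rule. This is the textbook formulation, not the patent's alert/gate pulse circuit, and the amplitudes, time constants, and conductance bounds are assumed values.

```python
import math


def stdp_delta(t_pre, t_post, a_plus=0.01, a_minus=0.012,
               tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:
        # Pre fires before post: potentiation, decaying with the gap.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fires before pre: depression, decaying with the gap.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(conductance, t_pre, t_post, g_min=0.0, g_max=1.0):
    """Update a normalized conductance and clamp it to the device range."""
    updated = conductance + stdp_delta(t_pre, t_post)
    return min(g_max, max(g_min, updated))


g = 0.5
g_after_causal = apply_stdp(g, t_pre=10.0, t_post=15.0)   # pre then post
g_after_acausal = apply_stdp(g, t_pre=15.0, t_post=10.0)  # post then pre
print(g_after_causal, g_after_acausal)
```

The clamp stands in for the limited conductance range of a physical variable-resistance device. A real cross-bar would apply the update through pulse shapes rather than arithmetic.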

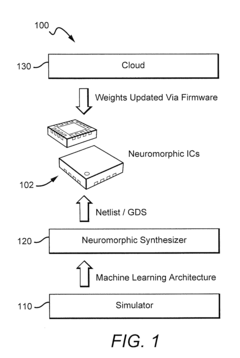

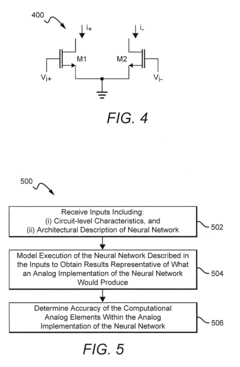

Systems And Methods For Determining Circuit-Level Effects On Classifier Accuracy

Patent · Active · US20190065962A1

Innovation

- The development of neuromorphic chips that simulate 'silicon' neurons, processing information in parallel with bursts of electric current at non-uniform intervals, and the use of systems and methods to model the effects of circuit-level characteristics on neural networks, such as thermal noise and weight inaccuracies, to optimize their performance.
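
A simple way to see how circuit-level weight inaccuracies can affect a classifier is a Monte Carlo experiment: perturb the stored weights with Gaussian noise and measure the average accuracy. The sketch below is a hypothetical toy (a two-input linear classifier on synthetic data with assumed noise levels) and does not reproduce the patent's modeling method.

```python
import random


def predict(weights, bias, x):
    """Linear threshold classifier: 1 if w.x + b > 0, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def accuracy(weights, bias, data):
    return sum(predict(weights, bias, x) == y for x, y in data) / len(data)


def accuracy_under_weight_noise(weights, bias, data, sigma, trials=200, seed=0):
    """Average accuracy when each weight gets additive Gaussian error."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        noisy = [w + rng.gauss(0.0, sigma) for w in weights]
        total += accuracy(noisy, bias, data)
    return total / trials


# Synthetic data (assumed): label is 1 when the first feature exceeds the second.
data_rng = random.Random(1)
data = []
for _ in range(200):
    x = (data_rng.uniform(-1, 1), data_rng.uniform(-1, 1))
    data.append((x, 1 if x[0] > x[1] else 0))

ideal_weights, ideal_bias = [1.0, -1.0], 0.0
results = {
    sigma: accuracy_under_weight_noise(ideal_weights, ideal_bias, data, sigma)
    for sigma in (0.0, 0.1, 0.5)
}
for sigma, acc in results.items():
    print(f"sigma={sigma}: mean accuracy={acc:.3f}")
```

Sweeping `sigma` gives a rough tolerance curve, which is the kind of information a designer needs when deciding how precise analog weights must be.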

Energy Efficiency Benchmarks and Comparisons

Energy efficiency represents a critical benchmark for evaluating neuromorphic computing systems, especially when compared to traditional von Neumann architectures and other AI acceleration hardware. Neuromorphic chips demonstrate significant advantages in power consumption metrics, with leading designs achieving energy efficiencies in the picojoule per synaptic operation range—orders of magnitude better than conventional GPU-based deep learning systems.

Comparative analyses of IBM's TrueNorth, Intel's Loihi, and BrainChip's Akida reveal substantial variations in energy efficiency profiles. TrueNorth operates at approximately 70 milliwatts while simulating one million neurons, which corresponds to roughly 70 pJ per synaptic event at an implied rate of about one billion synaptic events per second (70 mW / 70 pJ). Intel's Loihi is cited at a comparable 100 pJ per synaptic operation while offering greater architectural flexibility. BrainChip's Akida pushes boundaries further with claims of efficiency approaching 20 pJ per operation in specific workloads.

When benchmarked against traditional AI hardware, the contrast becomes more pronounced. Standard GPUs typically consume 1-10 nanojoules per operation, roughly 10 to 500 times the 20-100 pJ figures cited above, although a GPU operation and a synaptic event are not strictly equivalent units of work. This efficiency gap widens further when considering always-on applications and edge computing scenarios where power constraints are paramount.
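
The arithmetic behind these comparisons can be checked directly. The sketch below uses only the per-operation figures quoted in this section and treats a GPU "operation" and a synaptic "event" as comparable units, which is a simplifying assumption. The dictionary and variable names are illustrative.

```python
PJ = 1e-12  # joules per picojoule
NJ = 1e-9   # joules per nanojoule

# Energy per synaptic operation as quoted in the text above.
neuromorphic_energy = {
    "TrueNorth": 70 * PJ,
    "Loihi": 100 * PJ,
    "Akida": 20 * PJ,
}
gpu_energy_range = (1 * NJ, 10 * NJ)  # quoted GPU range per operation

# Ratio of GPU energy to neuromorphic energy at both ends of the GPU range.
ratios = {
    name: (gpu_energy_range[0] / energy, gpu_energy_range[1] / energy)
    for name, energy in neuromorphic_energy.items()
}
for name, (low, high) in ratios.items():
    print(f"{name}: GPU uses about {low:.0f}x to {high:.0f}x more energy per operation")

# Event rate implied by 70 mW at 70 pJ per event (power = energy x rate).
implied_events_per_second = 0.070 / neuromorphic_energy["TrueNorth"]
print(f"Implied TrueNorth event rate: {implied_events_per_second:.2e} events/s")
```

The spread in the resulting ratios shows how strongly any "N times more efficient" headline depends on which chip and which end of the GPU range are compared.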

Standardized benchmarking methodologies remain an evolving challenge in the field. The SNN-specific nature of neuromorphic computation makes direct comparisons with conventional deep learning metrics problematic. Event-based datasets such as N-MNIST and DVS-CIFAR10 provide specialized material for neuromorphic evaluation, but industry-wide accepted benchmarking protocols are still developing.

Real-world deployment scenarios demonstrate the practical implications of these efficiency advantages. In autonomous sensor applications, neuromorphic systems can operate continuously for months on battery power where conventional solutions would require frequent recharging. Edge AI implementations using neuromorphic hardware have demonstrated 20-50x improvements in energy efficiency for specific pattern recognition and anomaly detection workloads.

The energy efficiency advantage stems from the event-driven, sparse computation model inherent to neuromorphic architectures. Unlike traditional systems that continuously consume power regardless of computational load, neuromorphic chips activate circuits only when necessary, mirroring the brain's energy-efficient information processing paradigm. This fundamental architectural difference suggests the efficiency gap will likely persist even as manufacturing processes advance.
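
The effect of event-driven, sparse computation can be shown by counting operations. In this minimal sketch, a dense layer touches every weight at every step, while an event-driven layer only accumulates the weight rows of inputs that actually spiked. The weights and spike pattern are assumed toy values, and operation counts serve only as a rough proxy for energy.

```python
def dense_layer(weights, spikes):
    """Multiply-accumulate over every input, whether or not it spiked."""
    n_outputs = len(weights[0])
    totals = [0.0] * n_outputs
    ops = 0
    for i, s in enumerate(spikes):
        for j in range(n_outputs):
            totals[j] += weights[i][j] * s
            ops += 1
    return totals, ops


def event_driven_layer(weights, spikes):
    """Accumulate weights only for inputs that emitted a spike."""
    n_outputs = len(weights[0])
    totals = [0.0] * n_outputs
    ops = 0
    for i, s in enumerate(spikes):
        if not s:
            continue  # silent inputs cost nothing
        for j in range(n_outputs):
            totals[j] += weights[i][j]
            ops += 1
    return totals, ops


weights = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
spikes = [0, 1, 0, 1]  # half of the inputs are silent in this step

dense_out, dense_ops = dense_layer(weights, spikes)
event_out, event_ops = event_driven_layer(weights, spikes)
print(dense_out, dense_ops)
print(event_out, event_ops)
```

Both functions return the same result, but the event-driven version does work in proportion to the number of spikes, so savings grow as activity becomes sparser.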

Comparative analyses between IBM's TrueNorth, Intel's Loihi, and BrainChip's Akida reveal substantial variations in energy efficiency profiles. TrueNorth operates at approximately 70 milliwatts while simulating one million neurons, translating to roughly 70 pJ per synaptic event. Intel's Loihi demonstrates comparable efficiency at 100 pJ per synaptic operation while offering greater architectural flexibility. BrainChip's Akida pushes boundaries further with claims of efficiency approaching 20 pJ per operation in specific workloads.

When benchmarked against traditional AI hardware, the contrast becomes more pronounced. Standard GPUs typically consume 1-10 nanojoules per operation—at least 10-100 times more energy than neuromorphic solutions for equivalent computational tasks. This efficiency gap widens further when considering always-on applications and edge computing scenarios where power constraints are paramount.

Hardware-Software Co-design Strategies

The effective integration of neuromorphic chips with AI systems requires sophisticated hardware-software co-design strategies that bridge the gap between novel neuromorphic architectures and existing AI frameworks. Traditional development approaches that separate hardware and software design phases are inadequate for neuromorphic computing, where the unique processing paradigm demands simultaneous consideration of both domains.

Co-design methodologies for neuromorphic systems typically begin with algorithm-hardware mapping analysis, identifying which neural network operations can be efficiently executed on neuromorphic hardware and which require modification. This process involves transforming conventional deep learning algorithms into spike-based representations compatible with neuromorphic architectures while preserving computational accuracy and efficiency.
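One common way to picture this transformation is rate coding, where the firing rate of an integrate-and-fire neuron stands in for a ReLU activation. The sketch below is a simplified illustration under stated assumptions (constant input current, threshold of 1.0, reset by subtraction), not the conversion procedure of any particular toolchain. The function names are hypothetical.

```python
def relu(x):
    """Conventional activation used as the reference value."""
    return max(0.0, x)


def if_neuron_rate(input_current, timesteps=1000, threshold=1.0):
    """Firing rate of an integrate-and-fire neuron under constant input.

    The neuron emits at most one spike per timestep, so the rate
    saturates at 1.0 for inputs at or above the threshold.
    """
    membrane = 0.0
    spikes = 0
    for _ in range(timesteps):
        membrane += input_current
        if membrane >= threshold:
            spikes += 1
            # Reset by subtraction keeps the residual charge, which
            # reduces the approximation error of the rate code.
            membrane -= threshold
    return spikes / timesteps


if __name__ == "__main__":
    for x in (-0.5, 0.1, 0.3, 0.8):
        print(f"x={x:+.1f}  relu={relu(x):.3f}  spike rate={if_neuron_rate(x):.3f}")
```

For inputs between 0 and the threshold, the spike rate tracks the ReLU output, and the approximation tightens as the number of timesteps grows. That is the accuracy-versus-latency trade-off the mapping analysis has to weigh.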

Specialized programming models and abstractions form another critical component of co-design strategies. Frameworks such as IBM's Corelet programming environment for TrueNorth, Intel's NxSDK and Lava framework for Loihi, and SpiNNaker's PyNN-based software stack provide domain-specific languages and programming interfaces that abstract the complexities of neuromorphic hardware while exposing essential features for optimization. These tools enable developers to express neural computations in ways that can be efficiently mapped to the underlying hardware.

Runtime systems and middleware play a pivotal role in hardware-software co-design by managing resource allocation, scheduling, and communication between neuromorphic components and conventional computing elements. Adaptive runtime systems can dynamically adjust network parameters based on input characteristics, power constraints, or accuracy requirements, maximizing the benefits of neuromorphic acceleration while maintaining system reliability.
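As one illustration of an adaptive policy, a runtime can stop accumulating output spikes as soon as one class leads by a set margin, so easy inputs use fewer timesteps than hard ones. This is a hypothetical sketch, not the behavior of any named runtime: the function `run_until_confident`, the margin rule, and the step cap are all assumptions made for illustration.

```python
def run_until_confident(spike_stream, margin, max_steps):
    """Accumulate per-class output spikes and exit early when confident.

    spike_stream yields one list of 0/1 spikes per timestep, one entry
    per output class. Returns (winning class index, timesteps used).
    """
    counts = None
    steps = 0
    for step_spikes in spike_stream:
        if counts is None:
            counts = [0] * len(step_spikes)
        for i, spike in enumerate(step_spikes):
            counts[i] += spike
        steps += 1
        ranked = sorted(counts, reverse=True)
        lead = ranked[0] - ranked[1] if len(ranked) > 1 else ranked[0]
        # Stop when the leader is far enough ahead or the budget runs out.
        if lead >= margin or steps >= max_steps:
            break
    if counts is None:
        raise ValueError("spike_stream produced no timesteps")
    return counts.index(max(counts)), steps


if __name__ == "__main__":
    stream = [[1, 0], [1, 0], [1, 0], [0, 1]]
    winner, used = run_until_confident(stream, margin=2, max_steps=10)
    print(f"class {winner} after {used} timesteps")
```

Raising the margin trades energy and latency for confidence, while lowering the step cap enforces a hard power or latency budget.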

Simulation environments and digital twins have emerged as valuable co-design tools, allowing developers to model neuromorphic system behavior before physical implementation. These environments enable rapid prototyping and performance prediction, reducing development cycles and facilitating hardware-software optimization iterations without requiring physical chip modifications.
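At its simplest, such a simulation steps a neuron model in software and derives a rough energy estimate from the spike count. The following sketch assumes a discrete-time leaky integrate-and-fire neuron with a reset to zero; the leak factor, threshold, fan-out, and energy-per-event value are illustrative parameters, not measurements from any chip.

```python
def simulate_lif(input_currents, leak=0.9, threshold=1.0):
    """Simulate one leaky integrate-and-fire neuron.

    At each timestep the membrane potential decays by the leak factor,
    integrates the input, and emits a spike (then resets to zero) when
    it reaches the threshold. Returns the list of 0/1 output spikes.
    """
    membrane = 0.0
    spikes = []
    for current in input_currents:
        membrane = leak * membrane + current
        if membrane >= threshold:
            spikes.append(1)
            membrane = 0.0
        else:
            spikes.append(0)
    return spikes


def estimate_energy_joules(spikes, fan_out, joules_per_event):
    """Rough estimate: each spike costs one synaptic event per target."""
    return sum(spikes) * fan_out * joules_per_event


if __name__ == "__main__":
    currents = [0.6, 0.6, 0.0, 0.3, 0.9, 0.0]
    out = simulate_lif(currents)
    # 20 pJ per event is an assumed value for illustration only.
    energy = estimate_energy_joules(out, fan_out=100, joules_per_event=20e-12)
    print(f"spikes: {out}")
    print(f"estimated energy: {energy:.2e} J")
```

Production simulators model many neurons, synaptic delays, and routing, but the same loop of updating state, emitting events, and tallying cost underlies performance prediction before hardware is available.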

Cross-layer optimization techniques represent the most advanced co-design strategies, simultaneously considering algorithm structure, mapping strategies, hardware configuration, and energy constraints. These approaches often employ automated design space exploration tools that can identify optimal configurations across the hardware-software boundary, balancing performance, energy efficiency, and accuracy requirements.
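A basic form of design space exploration enumerates candidate configurations and keeps only those that are not beaten on every objective, known as the Pareto front. The sketch below is a toy example: the cost model in `toy_model` is invented for illustration and is not measured data, and the two knobs (inference timesteps and weight precision) are assumed design parameters.

```python
from itertools import product


def toy_model(timesteps, weight_bits):
    """Hypothetical cost model in arbitrary units (not measured data).

    Energy grows with timesteps and precision; error shrinks with both.
    """
    energy = timesteps * weight_bits
    error = 1.0 / timesteps + 1.0 / (2 ** weight_bits)
    return {"energy": energy, "error": error}


def dominates(a, b, keys):
    """True if a is no worse than b on every key and better on one."""
    no_worse = all(a[k] <= b[k] for k in keys)
    better = any(a[k] < b[k] for k in keys)
    return no_worse and better


def pareto_front(candidates, keys=("energy", "error")):
    """Keep only candidates that no other candidate dominates."""
    return [
        c for c in candidates
        if not any(dominates(other, c, keys) for other in candidates)
    ]


def explore(timestep_options, bit_options):
    """Evaluate every combination and return the Pareto-optimal ones."""
    candidates = []
    for timesteps, bits in product(timestep_options, bit_options):
        metrics = toy_model(timesteps, bits)
        candidates.append({"timesteps": timesteps, "weight_bits": bits, **metrics})
    return pareto_front(candidates)


if __name__ == "__main__":
    for config in explore([4, 8, 16, 32], [2, 4, 8]):
        print(config)
```

Exhaustive enumeration only works for small spaces. Automated tools typically replace it with heuristic or learned search, but the selection criterion remains the same balance of energy, accuracy, and performance.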

The future of hardware-software co-design for neuromorphic AI systems points toward increased automation through machine learning-based design tools that can predict performance outcomes and suggest optimizations across the entire system stack. As neuromorphic computing continues to mature, these co-design methodologies will become increasingly sophisticated, enabling more seamless integration between biologically inspired hardware and advanced AI applications.
