Analyze DDR5 Bus Speed in High-Frequency Applications

SEP 17, 2025 · 9 MIN READ

DDR5 Evolution and Performance Targets

DDR5 memory technology represents a significant evolution in the DRAM landscape. It builds on the foundations of its predecessors while introducing substantial architectural improvements. Each DDR generation has focused on increasing data rates, reducing power consumption, and improving overall system performance. DDR4's fastest JEDEC speed grade is 3200 MT/s. DDR5 launched at 4800 MT/s and has a roadmap toward 8400 MT/s and beyond.

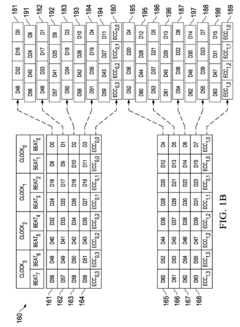

The primary performance target for DDR5 in high-frequency applications is to roughly double bandwidth compared to DDR4 while also improving power efficiency. Several architectural changes support this goal:

- Each DIMM is split into two independent 32-bit sub-channels instead of one 64-bit channel.
- The burst length doubles from 8 to 16, so a single burst on a 32-bit sub-channel still delivers a 64-byte cache line.
- The prefetch grows accordingly, from 8n to 16n.
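
The sketch below makes the bandwidth arithmetic concrete. It computes peak theoretical bandwidth (transfer rate times data-bus width) for DDR4-3200 and several DDR5 speed grades. It also checks that a DDR5 BL16 burst on a 32-bit sub-channel delivers the same 64 bytes as a DDR4 BL8 burst on a 64-bit channel. It counts data bits only (no ECC bits) and ignores refresh, bank conflicts and bus turnarounds, so sustained bandwidth in practice will be lower.

```python
def peak_bandwidth_gbs(mt_per_s: int, data_bits: int) -> float:
    """Peak bandwidth in GB/s (10^9 bytes/s): transfers per second x bytes per transfer."""
    return mt_per_s * 1e6 * (data_bits / 8) / 1e9


def burst_bytes(burst_length: int, data_bits: int) -> int:
    """Bytes delivered by one burst on a channel that is `data_bits` wide."""
    return burst_length * data_bits // 8


# DDR4: one 64-bit channel per DIMM, BL8.
ddr4_3200 = peak_bandwidth_gbs(3200, 64)

# DDR5: two independent 32-bit sub-channels per DIMM, BL16 (ECC bits excluded).
ddr5 = {rate: 2 * peak_bandwidth_gbs(rate, 32) for rate in (4800, 5600, 6400, 8400)}

print(f"DDR4-3200: {ddr4_3200:.1f} GB/s per DIMM, {burst_bytes(8, 64)} B per burst")
for rate, bw in ddr5.items():
    print(f"DDR5-{rate}: {bw:.1f} GB/s per DIMM, {burst_bytes(16, 32)} B per sub-channel burst")
```

At the same headline rate multiple, DDR5-6400 reaches exactly twice the peak bandwidth of DDR4-3200. The cache-line-sized access granularity is unchanged.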

Signal integrity at higher frequencies is a critical challenge, which DDR5 addresses through improved equalization and on-die termination. DDR5 DRAMs add Decision Feedback Equalization (DFE) to their receivers. Host PHYs commonly pair this with transmit-side Feed-Forward Equalization (FFE) to maintain signal quality at increasingly demanding bus speeds. These advancements are essential as systems push toward the limits of electrical signaling on conventional PCB materials.
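
The sketch below is a rough illustration of how decision feedback equalization works. It passes random ±1 symbols through a hypothetical channel whose pulse response has three post-cursor ISI taps. It then compares a plain slicer with a DFE that subtracts ISI predicted from its own earlier decisions. Several assumptions keep the example simple:

- The channel values are illustrative.
- The model is noise-free.
- The feedback taps match the channel exactly.

A real DDR5 receiver adapts its taps during training and must cope with noise and error propagation.

```python
import random

# Hypothetical pulse response: main cursor plus three post-cursor ISI taps.
# Illustrative values only, not measured from any real DDR5 channel.
CHANNEL = [1.0, 0.6, 0.4, 0.2]


def channel_output(symbols, taps=CHANNEL):
    """Convolve +/-1 symbols with the pulse response (noise-free)."""
    return [
        sum(h * symbols[n - k] for k, h in enumerate(taps) if n - k >= 0)
        for n in range(len(symbols))
    ]


def slice_plain(samples):
    """Threshold each sample at 0 with no equalization."""
    return [1 if y >= 0 else -1 for y in samples]


def slice_dfe(samples, feedback):
    """Subtract ISI predicted from past decisions, then threshold."""
    decisions = []
    for n, y in enumerate(samples):
        isi = sum(c * decisions[n - k] for k, c in enumerate(feedback, start=1) if n - k >= 0)
        decisions.append(1 if y - isi >= 0 else -1)
    return decisions


def count_errors(sent, received):
    return sum(a != b for a, b in zip(sent, received))


rng = random.Random(5)
tx = [rng.choice((-1, 1)) for _ in range(2000)]
rx = channel_output(tx)

plain_errors = count_errors(tx, slice_plain(rx))
dfe_errors = count_errors(tx, slice_dfe(rx, CHANNEL[1:]))  # taps matched to the channel
print("errors without equalization:", plain_errors)
print("errors with 3-tap DFE:", dfe_errors)
```

The plain slicer fails whenever the accumulated post-cursor ISI outweighs the main cursor, for example +1 preceded by three -1 symbols. The DFE cancels that ISI using decisions it has already made.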

Power management has evolved significantly with DDR5, moving voltage regulation from the motherboard to the memory module itself. This shift to on-module power management enables more precise voltage control, reduced noise, and improved signal integrity at higher frequencies. The operating voltage has been reduced from DDR4's 1.2V to DDR5's 1.1V, contributing to overall system efficiency despite the increased performance envelope.

Reliability features have been substantially enhanced in DDR5 with the introduction of on-die ECC (Error Correction Code), which operates independently of system-level ECC. On-die ECC corrects single-bit errors within the DRAM array. This addresses the higher cell-level error rates that come with increasing density and shrinking process geometries. It does not protect data in transit on the bus, so at maximum bus speeds link errors must still be caught by mechanisms such as write CRC and system-level ECC.
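
On-die ECC is a single-error-correcting code. The toy Hamming(7,4) sketch below shows the principle:

- On write, parity bits are computed from the data bits.
- On read, a nonzero syndrome points to the flipped bit, which is then corrected.

This is illustrative only. As we understand it, DDR5 on-die ECC applies a comparable single-error-correcting code to 128-bit data words with 8 check bits, but the exact code is defined by JEDEC and the DRAM vendor.

```python
from itertools import product


def hamming74_encode(d):
    """Encode 4 data bits as a 7-bit codeword ordered [p1, p2, d1, p4, d2, d3, d4]."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(c):
    """Return (data_bits, syndrome); a nonzero syndrome is the 1-based position of the bad bit."""
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 | (s2 << 1) | (s4 << 2)
    if syndrome:
        c[syndrome - 1] ^= 1  # correct the single-bit error in place
    return [c[2], c[4], c[5], c[6]], syndrome


# Exhaustive check: every 4-bit word survives any single-bit flip in its codeword.
for data in product((0, 1), repeat=4):
    code = hamming74_encode(list(data))
    for pos in range(7):
        corrupted = code.copy()
        corrupted[pos] ^= 1
        fixed, syn = hamming74_decode(corrupted)
        assert fixed == list(data) and syn == pos + 1
print("all single-bit errors corrected")
```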

The industry has established clear performance milestones for DDR5 adoption, with initial implementations targeting 4800-5600 MT/s, mid-generation improvements reaching 6400 MT/s, and late-generation optimizations pushing toward 8400 MT/s. These targets are aligned with the increasing demands of data-intensive applications in AI, machine learning, and high-performance computing, where memory bandwidth often represents a critical bottleneck.

Looking forward, the DDR5 specification leaves headroom for continued evolution. The original JEDEC roadmap targeted data rates up to 8400 MT/s, and later revisions of the standard have extended the defined speed bins to 8800 MT/s. This forward-looking approach should help DDR5 remain relevant throughout its lifecycle as next-generation computing platforms demand ever more performance.

Market Demand for High-Frequency Memory Solutions

The global demand for high-frequency memory solutions has grown rapidly in recent years, driven primarily by the rapid advancement of data-intensive applications. The DDR5 memory standard offers significantly higher bus speeds than DDR4. It has emerged as a critical component in meeting these escalating performance requirements across multiple sectors.

Data centers represent the largest market segment for high-frequency DDR5 memory solutions, with the global data center market projected to reach $517 billion by 2025. The proliferation of cloud computing services, big data analytics, and artificial intelligence applications has created unprecedented demand for memory bandwidth. Enterprise customers are increasingly willing to invest in premium memory solutions that can reduce latency and improve overall system throughput.

The high-performance computing (HPC) sector presents another substantial market opportunity. As scientific research, weather modeling, and pharmaceutical development become more computationally intensive, the need for memory systems capable of handling massive parallel processing has grown dramatically. Market research indicates that organizations are prioritizing memory bandwidth improvements as a key factor in their next-generation HPC infrastructure investments.

Consumer electronics, particularly gaming systems and content creation workstations, constitute a rapidly expanding market segment. The gaming industry, valued at over $300 billion globally, continues to push hardware boundaries with increasingly realistic graphics and complex simulations that benefit directly from higher memory bus speeds. Professional content creators working with 8K video editing and 3D rendering similarly require the enhanced data transfer capabilities that DDR5 provides.

Telecommunications infrastructure, especially with the ongoing global 5G rollout, represents another significant market driver. Network equipment manufacturers are integrating high-frequency memory solutions to handle the increased data processing requirements of next-generation wireless networks. Industry analysts predict that telecom infrastructure spending on advanced memory solutions will grow at a compound annual rate of 24% through 2026.

Automotive applications, particularly advanced driver-assistance systems (ADAS) and autonomous driving platforms, are emerging as a promising growth sector for high-frequency memory. These systems process enormous amounts of sensor data in real-time, creating demand for memory solutions that can support high-bandwidth, low-latency operations in challenging environmental conditions.

Market research consistently shows that customers across these segments are willing to pay premium prices for memory solutions that deliver measurable performance improvements. This low price sensitivity creates significant revenue opportunities for manufacturers who can successfully develop and commercialize DDR5 technologies that push the boundaries of bus speed capabilities.

DDR5 Bus Speed Limitations and Technical Challenges

DDR5 memory technology represents a significant advancement in high-frequency data transfer capabilities, yet faces substantial limitations when operating at extreme bus speeds. Current DDR5 implementations can theoretically reach up to 8400 MT/s, with roadmaps extending to 10,000+ MT/s in future iterations. However, as frequencies increase, signal integrity becomes increasingly compromised due to physical constraints inherent in high-speed circuit design.

The primary technical challenge stems from transmission line effects that become pronounced at higher frequencies. When signal wavelengths approach the physical dimensions of the bus traces, impedance mismatches create reflections and standing waves that degrade signal quality. This manifests as increased jitter, reduced voltage margins, and ultimately higher bit error rates that can compromise system stability.
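
A quick way to see why trace dimensions matter at DDR5 speeds is to compare trace lengths with the signal wavelength. The sketch below computes the unit interval and the Nyquist-frequency wavelength for DDR5-6400. It assumes an effective dielectric constant of 4.0 (roughly FR-4-like) and uses the common λ/10 rule of thumb for when a trace should be treated as a transmission line. Both the dielectric constant and the rule of thumb are assumptions for illustration.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def unit_interval_ps(mt_per_s: float) -> float:
    """Duration of one bit (unit interval) in picoseconds."""
    return 1e12 / (mt_per_s * 1e6)


def wavelength_mm(freq_hz: float, er_eff: float) -> float:
    """Wavelength in the dielectric, in millimetres."""
    velocity = C / math.sqrt(er_eff)
    return velocity / freq_hz * 1e3


rate = 6400                  # MT/s
er_eff = 4.0                 # assumed effective dielectric constant
nyquist_hz = rate * 1e6 / 2  # a 1010... pattern completes one cycle every two bits
ui = unit_interval_ps(rate)
lam = wavelength_mm(nyquist_hz, er_eff)
critical_len = lam / 10      # rough heuristic, not a hard limit

print(f"UI = {ui:.2f} ps, Nyquist = {nyquist_hz / 1e9:.1f} GHz")
print(f"wavelength = {lam:.1f} mm, lambda/10 = {critical_len:.1f} mm")
```

Under these assumptions, traces longer than a few millimetres already behave as transmission lines at DDR5-6400. This is far shorter than typical DIMM routing.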

Power integrity presents another significant hurdle. Higher frequencies necessitate faster switching times, resulting in larger di/dt transients that stress power delivery networks. The resulting voltage fluctuations further compromise signal integrity through power supply noise coupling. Additionally, higher operating frequencies increase dynamic power consumption according to the CV²f relationship, creating thermal management challenges in densely packed memory subsystems.
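
A per-pin comparison illustrates the CV²f relationship. The sketch assumes the same hypothetical switched capacitance (2 pF) and activity factor (0.5) for both generations. It compares DDR4-3200 at 1.2 V with DDR5-6400 at 1.1 V, using I/O clocks of 1.6 and 3.2 GHz. Under these assumptions, the lower voltage does not offset the doubled frequency: switching power per pin rises by about 1.68×.

```python
def dynamic_power_w(alpha: float, cap_f: float, vdd: float, freq_hz: float) -> float:
    """Switching power P = alpha * C * V^2 * f, in watts."""
    return alpha * cap_f * vdd ** 2 * freq_hz


ALPHA = 0.5    # assumed activity factor
CAP_F = 2e-12  # assumed switched capacitance per pin (2 pF)

p_ddr4 = dynamic_power_w(ALPHA, CAP_F, 1.2, 1.6e9)  # DDR4-3200: 1.6 GHz I/O clock
p_ddr5 = dynamic_power_w(ALPHA, CAP_F, 1.1, 3.2e9)  # DDR5-6400: 3.2 GHz I/O clock
ratio = p_ddr5 / p_ddr4

print(f"DDR4-3200: {p_ddr4 * 1e3:.2f} mW/pin, DDR5-6400: {p_ddr5 * 1e3:.2f} mW/pin, ratio {ratio:.2f}x")
```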

Crosstalk between adjacent signal lines intensifies at higher frequencies, as capacitive and inductive coupling effects strengthen with increased slew rates. This interference becomes particularly problematic in the dense routing environments typical of modern memory subsystems, where trace spacing is already minimized to accommodate packaging constraints.
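
Capacitive crosstalk scales with edge rate: the current injected into a victim trace is roughly i = Cm·dV/dt. The sketch below assumes a 0.1 pF mutual capacitance and a 0.5 V swing. It shows that halving the edge time doubles the coupled current. Inductive coupling (v = Lm·di/dt) scales with edge rate in the same way.

```python
def coupled_current_ma(mutual_cap_f: float, swing_v: float, edge_time_s: float) -> float:
    """Peak capacitively coupled current i = Cm * dV/dt in mA, assuming a linear edge."""
    return mutual_cap_f * (swing_v / edge_time_s) * 1e3


CM_F = 0.1e-12  # assumed mutual capacitance between adjacent traces (0.1 pF)
SWING_V = 0.5   # assumed aggressor signal swing

for edge_ps in (50, 25):
    i_ma = coupled_current_ma(CM_F, SWING_V, edge_ps * 1e-12)
    print(f"{edge_ps} ps edge -> {i_ma:.2f} mA coupled into the victim trace")
```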

The physical layer design faces significant challenges with increasing data rates. Maintaining signal integrity requires precise control of trace lengths, impedances, and termination schemes. At DDR5's highest speeds, even minor variations in PCB manufacturing tolerances can introduce enough skew to consume the remaining timing margin. The industry has responded with advanced equalization techniques, including decision feedback equalization (DFE) and feed-forward equalization (FFE), but these add complexity and power consumption.
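
The sketch below puts PCB tolerances in perspective. It converts a trace-length mismatch into skew and expresses that skew as a fraction of the unit interval. It assumes a propagation delay derived from an effective dielectric constant of 4.0 (about 6.7 ps/mm) and a hypothetical 2 mm mismatch. Actual delays depend on the stack-up and routing.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def delay_ps_per_mm(er_eff: float) -> float:
    """Propagation delay in ps/mm for a given effective dielectric constant."""
    return math.sqrt(er_eff) / C * 1e9  # s/m converted to ps/mm


def skew_fraction_of_ui(mismatch_mm: float, er_eff: float, mt_per_s: float) -> float:
    """Skew caused by a length mismatch, as a fraction of one unit interval."""
    skew = mismatch_mm * delay_ps_per_mm(er_eff)
    ui = 1e12 / (mt_per_s * 1e6)
    return skew / ui


MISMATCH_MM = 2.0  # hypothetical DQ-to-DQS length mismatch
ER_EFF = 4.0       # assumed effective dielectric constant

skew_ps = MISMATCH_MM * delay_ps_per_mm(ER_EFF)
for rate in (4800, 6400, 8400):
    frac = skew_fraction_of_ui(MISMATCH_MM, ER_EFF, rate)
    print(f"DDR5-{rate}: {skew_ps:.1f} ps skew = {frac:.1%} of the UI")
```

The absolute skew stays the same while the UI shrinks, so the same mismatch consumes a growing share of the timing budget at each higher speed grade.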

Clock distribution networks face particular strain at high frequencies. Maintaining acceptable clock skew across multiple memory channels becomes increasingly difficult as frequencies rise, necessitating sophisticated clock distribution architectures and potentially limiting maximum achievable bus speeds in multi-rank configurations.

From a system architecture perspective, memory controllers must implement increasingly complex training algorithms to calibrate signal paths at initialization. These algorithms consume boot time and system resources, which limits their practicality in time-sensitive applications. Training complexity grows with bus speed, as more parameters must be calibrated across more lanes to tighter tolerances. This may ultimately set practical upper limits for reliable operation.
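
Much of this training follows the same basic steps:

1. Sweep a delay setting across its range.
2. Test a known pattern at each step.
3. Center the sampling point in the widest passing window.

The sketch below shows that idea for a single lane. `hypothetical_pattern_test` stands in for the write/read/compare step a real controller would perform, and the tap count and passing window are invented. Repeating such sweeps for every lane, rank and parameter is what drives training time up.

```python
from typing import Callable, Optional, Tuple


def find_center(passes: Callable[[int], bool], num_taps: int) -> Optional[Tuple[int, int, int]]:
    """Sweep delay taps and return (start, end, center) of the widest passing window."""
    best = None
    start = None
    for tap in range(num_taps + 1):
        ok = tap < num_taps and passes(tap)  # extra iteration closes a window at the last tap
        if ok and start is None:
            start = tap
        elif not ok and start is not None:
            end = tap - 1
            if best is None or (end - start) > (best[1] - best[0]):
                best = (start, end)
            start = None
    if best is None:
        return None  # no passing setting: training failed for this lane
    return best[0], best[1], (best[0] + best[1]) // 2


def hypothetical_pattern_test(tap: int) -> bool:
    """Stand-in for 'write a pattern, read it back, compare' at one delay tap."""
    return 20 <= tap <= 44


print(find_center(hypothetical_pattern_test, 64))
```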

Current DDR5 Bus Speed Enhancement Techniques

01 DDR5 Memory Bus Speed Enhancements

DDR5 memory introduces significant improvements in bus speed compared to previous generations. These enhancements include higher data transfer rates, improved signal integrity, and an optimized bus architecture that allows faster communication between memory modules and processors. The technology implements advanced clock synchronization methods and enhanced data strobe techniques to achieve higher bandwidth while maintaining stability at increased speeds.

- Memory Controller Architectures for DDR5: Specialized memory controller architectures are designed to handle the increased bus speeds of DDR5 memory. These controllers incorporate advanced buffering techniques, improved command scheduling, and optimized timing parameters to efficiently manage high-speed data transfers. The architecture includes dedicated circuits for handling the increased bandwidth and reduced latency requirements of DDR5 memory systems.

- Error Detection and Correction for High-Speed Memory: As memory bus speeds increase in DDR5 systems, error detection and correction mechanisms become more critical. Advanced error correction code (ECC) implementations, cyclic redundancy checks, and parity verification systems are integrated to maintain data integrity at higher speeds. These mechanisms help identify and correct transmission errors that may occur due to the increased signaling rates and tighter timing constraints.

- Power Management in High-Speed Memory Buses: DDR5 memory implements sophisticated power management techniques to handle the increased power demands associated with higher bus speeds. These include dynamic voltage scaling, adaptive power states, and improved thermal management. The power delivery network is optimized to provide stable voltage levels during high-speed data transfers while minimizing power consumption during idle periods.

- Bus Interface and Signal Integrity Solutions: Specialized bus interface designs address the signal integrity challenges of high-speed DDR5 memory operations. These solutions include advanced termination schemes, impedance matching techniques, and improved physical layer designs. The interfaces incorporate equalization circuits, enhanced I/O buffers, and optimized trace routing to maintain signal quality at increased frequencies, reducing interference and ensuring reliable data transmission.
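
Cyclic redundancy checks catch transmission errors that on-die ECC cannot. The sketch below implements a bitwise CRC-8 with polynomial x⁸ + x² + x + 1 (0x07) and wraps it in a simple detect-and-retry loop. As we understand it, this is the polynomial used for DDR4/DDR5 write CRC. The bit ordering and data mapping here are generic, not the JEDEC-defined per-lane mapping. `make_flaky_channel` is a hypothetical bus model that corrupts one bit on its first transfers.

```python
def crc8(data: bytes, poly: int = 0x07, init: int = 0x00) -> int:
    """Bitwise CRC-8, MSB first, no reflection, no final XOR."""
    crc = init
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 0x80:
                crc = ((crc << 1) ^ poly) & 0xFF
            else:
                crc = (crc << 1) & 0xFF
    return crc


def make_flaky_channel(bad_attempts: int):
    """Hypothetical bus model: flips one bit on the first `bad_attempts` transfers."""
    attempts = 0

    def transfer(burst: bytes, crc: int):
        nonlocal attempts
        attempts += 1
        if attempts <= bad_attempts:
            corrupted = bytearray(burst)
            corrupted[0] ^= 0x01  # single-bit error on the bus
            return bytes(corrupted), crc
        return burst, crc

    return transfer


def write_with_retry(burst: bytes, transfer, max_attempts: int = 3) -> int:
    """Send data plus CRC; the receiver recomputes the CRC and the sender retries on mismatch.
    Returns the attempt number that succeeded."""
    crc = crc8(burst)
    for attempt in range(1, max_attempts + 1):
        received, received_crc = transfer(burst, crc)
        if crc8(received) == received_crc:
            return attempt
    raise RuntimeError("CRC error persisted after retries")


print(hex(crc8(b"123456789")))
print(write_with_retry(bytes(range(8)), make_flaky_channel(1)))
```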

02 Memory Bus Architecture and Interface Design

The architecture of DDR5 memory buses incorporates innovative interface designs that support higher speeds. This includes improvements in the physical layer, signal routing, and termination techniques. The bus architecture features enhanced parallel processing capabilities, reduced latency mechanisms, and optimized channel configurations that collectively contribute to increased data throughput and more efficient memory operations.

03 Memory Controller Optimization for High-Speed Operation

Memory controllers for DDR5 systems are specifically designed to handle increased bus speeds through advanced timing control, improved command scheduling, and sophisticated power management. These controllers implement adaptive algorithms that optimize data transfer rates based on system conditions, manage thermal constraints during high-speed operations, and coordinate multiple memory channels to maximize bandwidth utilization while maintaining data integrity.

04 Error Detection and Correction at Higher Bus Speeds

As memory bus speeds increase in DDR5 systems, advanced error detection and correction mechanisms become essential. These systems implement sophisticated ECC (Error-Correcting Code) algorithms, on-die error checking, and real-time monitoring to maintain data integrity at higher frequencies. The error handling protocols include improved CRC (Cyclic Redundancy Check) implementations and adaptive retry mechanisms that ensure reliable data transmission despite the increased potential for signal interference at higher speeds.

05 Power Management for High-Speed Memory Bus Operation

DDR5 memory incorporates advanced power management techniques to support higher bus speeds while maintaining energy efficiency. These include dynamic voltage scaling, frequency-dependent power adjustments, and intelligent power distribution across memory channels. The technology implements fine-grained power control mechanisms that optimize energy consumption based on workload demands, allowing the memory subsystem to operate at maximum speed when required while conserving power during periods of lower activity.

Key Memory Manufacturers and Semiconductor Industry Players

DDR5 bus speed in high-frequency applications is evolving rapidly in a maturing market estimated at $9.5 billion, with projected growth to $15 billion by 2026. Technology maturity varies across players:

- Established manufacturers such as Samsung, SK hynix and Micron lead production capabilities.
- Rambus drives innovation in interface technologies.
- AMD, Intel and Qualcomm are integrating advanced DDR5 memory controllers into their processors, pushing speeds beyond 6400 MT/s.
- Chinese players such as ChangXin Memory (DRAM) and Hygon (processors) are emerging but lag in cutting-edge implementations.

Competition centers on a race for higher bandwidth, lower latency and improved power efficiency, with server and high-performance computing applications driving adoption.

Rambus, Inc.

Technical Solution: Rambus has developed advanced DDR5 memory interface solutions that focus on signal integrity at high frequencies. Their DDR5 PHY and memory controller IP supports data rates up to 8400 MT/s while maintaining signal integrity across challenging PCB environments. Rambus implements adaptive equalization techniques that dynamically adjust to changing signal conditions, compensating for channel loss at high frequencies. Their DDR5 memory controllers incorporate sophisticated timing control mechanisms with precision delay-locked loops (DLLs) that maintain synchronization even at extreme bus speeds. Rambus also supplies DDR5 module power management ICs (PMICs). These implement the on-DIMM voltage regulation defined by the DDR5 standard, allowing more stable power delivery during high-frequency operations.

Strengths: Industry-leading signal integrity expertise; proprietary equalization technology that extends reliable operation at higher frequencies; extensive IP portfolio in high-speed memory interfaces. Weaknesses: Solutions often come at premium pricing; implementation complexity may require specialized design expertise; higher power consumption compared to some competing approaches.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has pioneered DDR5 memory solutions with advanced high-frequency bus architectures. Their technology implements a sophisticated multi-channel architecture that supports data rates up to 7200 MT/s with improved signal integrity. Samsung's DDR5 modules feature on-die ECC (Error Correction Code), which improves data reliability by detecting and correcting single-bit errors within the DRAM array without CPU intervention. Their design incorporates advanced decision feedback equalization (DFE) circuits that adaptively compensate for channel losses at high frequencies. Samsung has also developed proprietary substrate materials with lower dielectric loss, reducing signal attenuation at high frequencies. Their DDR5 implementation includes integrated voltage regulators on each DIMM, allowing more precise power delivery and reduced noise during high-speed operations. This is critical for maintaining signal integrity as bus speeds increase.

Strengths: Vertical integration from memory die manufacturing to module design; industry-leading process technology enabling higher frequencies; extensive testing and validation capabilities. Weaknesses: Premium pricing for cutting-edge solutions; potentially higher power consumption at maximum frequencies; proprietary optimizations may limit compatibility with some platforms.

Critical Patents and Research in DDR5 Signal Integrity

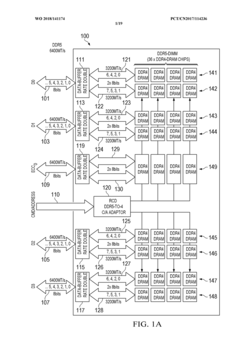

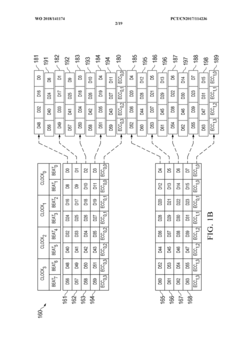

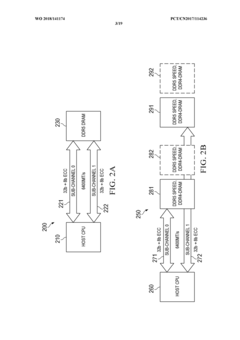

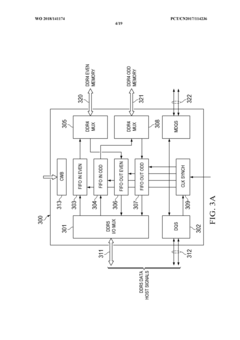

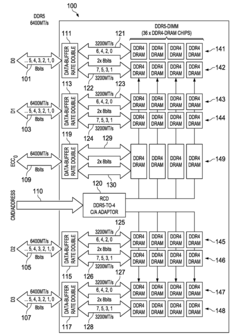

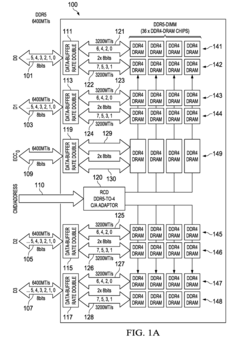

Systems and methods for utilizing DDR4-dram chips in hybrid DDR5-dimms and for cascading DDR5-dimms

Patent WO2018141174A1

Innovation

- Hybrid DDR5 DIMM design that incorporates DDR4 SDRAM chips while maintaining compatibility with DDR5 sub-channels, enabling cost-effective memory solutions.

- Dual sub-channel architecture that allows a 2DPC (two DIMMs per channel) configuration at 4400 MT/s or slower, overcoming traditional loading limitations of high-speed memory buses.

- Cascading DDR5 DIMMs implementation that leverages existing DDR4 technology while providing a migration path to full DDR5 performance capabilities.

Systems and methods for utilizing DDR4-dram chips in hybrid DDR5-dimms and for cascading DDR5-dimms

Patent (Active) US20180225235A1

Innovation

- Hybrid DDR5 DIMMs built from DDR4 SDRAM chips. Data is split into DDR4 byte-channels running at half the speed of the DDR5 sub-channels, and a register clock driver adapts DDR5 commands and addresses for the DDR4 SDRAM chips. Cascading DDR5 DIMMs and using DDR4 mode for low-cost chips allows increased capacity and speed.

Thermal Management Considerations for High-Speed DDR5

The thermal challenges of DDR5 memory modules operating at high frequencies cannot be overstated. As bus speeds increase beyond 6400 MT/s, power consumption rises significantly. Some high-performance DDR5 modules consume up to 25-30W under load, nearly double their DDR4 counterparts. This elevated power consumption translates directly into increased heat generation, creating thermal management challenges that can compromise system stability and reliability.

Temperature control becomes critical as DDR5 modules approach roughly 85°C, the upper end of their normal operating range. Above this point, the DRAM must be refreshed twice as often. Platforms may also throttle memory traffic based on the module's temperature sensor, eroding the speed advantages of high-frequency operation. Industry testing has demonstrated that poorly cooled DDR5 modules can lose 10-15% of their performance when sustained workloads push temperatures beyond optimal ranges.
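
Refresh overhead is one concrete mechanism behind high-temperature slowdowns. While a rank is refreshing it cannot serve requests, and above roughly 85°C the refresh interval is halved. The sketch below estimates the fraction of time spent refreshing as tRFC / tREFI. It assumes tRFC = 295 ns for a 16 Gb DDR5 die and tREFI = 3.9 µs in the normal range; check the device datasheet for actual values. This is a simple upper-bound estimate, not a performance model.

```python
def refresh_overhead(trfc_ns: float, trefi_ns: float) -> float:
    """Fraction of time a rank is blocked by all-bank refresh: tRFC / tREFI."""
    return trfc_ns / trefi_ns


TRFC_NS = 295.0                     # assumed refresh cycle time for a 16 Gb die
TREFI_NORMAL_NS = 3900.0            # assumed refresh interval at or below ~85 °C
TREFI_HOT_NS = TREFI_NORMAL_NS / 2  # interval halves in the extended temperature range

normal = refresh_overhead(TRFC_NS, TREFI_NORMAL_NS)
hot = refresh_overhead(TRFC_NS, TREFI_HOT_NS)
print(f"<=85 °C: {normal:.1%} of time refreshing; >85 °C: {hot:.1%}")
```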

Advanced cooling solutions have emerged to address these thermal concerns. Passive cooling approaches include redesigned heat spreaders with increased surface area and improved thermal interface materials that reduce thermal resistance by up to 30% compared to standard solutions. Some manufacturers have implemented vapor chamber technology within their heat spreaders, offering 20-25% better heat dissipation than traditional aluminum designs.

Active cooling solutions are gaining prominence in high-performance computing environments. These include dedicated memory cooling fans that can reduce DRAM temperatures by 15-20°C under load, and liquid cooling solutions that extend to memory modules. The latter represents a significant shift in thermal management philosophy, acknowledging that modern DDR5 modules generate heat loads comparable to some processing components.

System-level thermal design considerations have also evolved to accommodate DDR5's thermal profile. Motherboard manufacturers are implementing enhanced PCB designs with additional copper layers to improve heat spreading. Case manufacturers are increasingly incorporating memory-specific airflow channels to ensure adequate cooling for high-frequency DDR5 installations.

The relationship between temperature and signal integrity presents another critical consideration. Research indicates that for every 10°C increase in operating temperature, signal integrity metrics can degrade by 5-8%, potentially limiting maximum achievable bus speeds. This creates a complex interdependency where thermal management directly impacts the attainable performance of high-frequency DDR5 implementations.

Future thermal management approaches will likely incorporate more sophisticated monitoring and dynamic thermal regulation. Emerging technologies include embedded temperature sensors with microsecond response times and adaptive cooling systems that modulate cooling intensity based on real-time thermal data, optimizing the balance between performance, power consumption, and thermal constraints.
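
An adaptive cooling policy can be as simple as mapping module temperature to fan duty cycle. The sketch below is a hypothetical linear fan curve. The 60°C and 85°C breakpoints and the 30% minimum duty are illustrative assumptions. A production controller would add hysteresis and smoothing so the fan does not hunt around a breakpoint.

```python
def fan_duty(temp_c: float, t_low: float = 60.0, t_high: float = 85.0,
             min_duty: float = 30.0, max_duty: float = 100.0) -> float:
    """Map a module temperature reading (°C) to a fan duty cycle in percent."""
    if temp_c <= t_low:
        return min_duty  # baseline airflow below the lower breakpoint
    if temp_c >= t_high:
        return max_duty  # full airflow at or above the upper breakpoint
    frac = (temp_c - t_low) / (t_high - t_low)
    return min_duty + frac * (max_duty - min_duty)  # linear ramp in between


# Hypothetical readings from a module's on-board temperature sensor.
for reading in (45.0, 72.5, 90.0):
    print(f"{reading:.1f} °C -> {fan_duty(reading):.0f}% fan duty")
```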

Temperature control becomes critical as DDR5 modules approach their thermal throttling thresholds, typically around 85°C. Without adequate thermal management, modules will automatically reduce performance to prevent damage, negating the speed advantages of high-frequency operation. Industry testing has demonstrated that poorly cooled DDR5 modules can experience performance degradation of 10-15% when sustained workloads push temperatures beyond optimal ranges.

Advanced cooling solutions have emerged to address these thermal concerns. Passive cooling approaches include redesigned heat spreaders with increased surface area and improved thermal interface materials that reduce thermal resistance by up to 30% compared to standard solutions. Some manufacturers have implemented vapor chamber technology within their heat spreaders, offering 20-25% better heat dissipation than traditional aluminum designs.

Active cooling solutions are gaining prominence in high-performance computing environments. These include dedicated memory cooling fans that can reduce DRAM temperatures by 15-20°C under load, and liquid cooling solutions that extend to memory modules. The latter represents a significant shift in thermal management philosophy, acknowledging that modern DDR5 modules generate heat loads comparable to some processing components.

System-level thermal design considerations have also evolved to accommodate DDR5's thermal profile. Motherboard manufacturers are implementing enhanced PCB designs with additional copper layers to improve heat spreading. Case manufacturers are increasingly incorporating memory-specific airflow channels to ensure adequate cooling for high-frequency DDR5 installations.

Temperature also affects signal integrity. Reported figures suggest that each 10°C rise in operating temperature can degrade signal integrity metrics by 5-8%, which can limit the maximum achievable bus speed. Thermal management therefore determines not only the reliability but also the attainable performance of high-frequency DDR5 implementations.
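The interdependency can be made concrete with a simple linear model that applies the 5-8% per 10°C degradation figure to a normalized signal margin. The linear form and the 80% minimum-margin requirement are assumptions for illustration, not measured characteristics of any particular channel.

```python
def remaining_margin(delta_t_c: float, loss_per_10c: float) -> float:
    """Normalized margin left after a temperature rise (assumed linear model)."""
    return max(0.0, 1.0 - loss_per_10c * delta_t_c / 10.0)

def max_temp_rise_c(required_margin: float, loss_per_10c: float) -> float:
    """Largest temperature rise that keeps the margin at or above required_margin."""
    return (1.0 - required_margin) / loss_per_10c * 10.0

# Hypothetical requirement: keep at least 80% of the margin at the reference temperature.
for rate in (0.05, 0.08):
    print(f"{rate:.0%} per 10 C -> headroom {max_temp_rise_c(0.80, rate):.0f} C")
```

With these assumptions, the upper end of the reported range leaves only about 25°C of headroom above the reference temperature, versus about 40°C at the lower end.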

Power Efficiency and Voltage Requirements Analysis

DDR5 memory technology introduces significant improvements in power efficiency compared to its predecessors, which is crucial for high-frequency applications where power consumption and thermal management are critical concerns. The operating voltage has been reduced from DDR4's 1.2V to 1.1V, an approximately 8% reduction in supply voltage. Because dynamic switching power scales with the square of the voltage, this change alone lowers dynamic power by roughly 16% at the same frequency and load, before considering other efficiency improvements.
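Going from DDR4's 1.2 V to DDR5's 1.1 V is an 8.3% voltage reduction, but its effect on dynamic power follows from the usual relation P = α·C·V²·f. Holding the activity factor α, switched capacitance C, and frequency f constant (an idealization, since DDR5 also runs faster than DDR4), the power ratio reduces to (V_new / V_old)²:

```python
def dynamic_power_ratio(v_new: float, v_old: float) -> float:
    """P = alpha * C * V^2 * f; with alpha, C and f fixed, only V^2 changes."""
    return (v_new / v_old) ** 2

V_DDR4, V_DDR5 = 1.2, 1.1
ratio = dynamic_power_ratio(V_DDR5, V_DDR4)
print(f"voltage reduction:       {1 - V_DDR5 / V_DDR4:.1%}")  # 8.3%
print(f"dynamic power reduction: {1 - ratio:.1%}")            # 16.0%
```

Static and leakage power do not follow the V² relation, so real-world savings depend on how the workload splits between them.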

This voltage reduction is accompanied by a fundamental architectural change: power management has moved from the motherboard to the DIMM itself. DDR5 modules incorporate a Power Management Integrated Circuit (PMIC) that regulates voltages directly on the memory module. The motherboard now supplies a higher bulk voltage (5 V on client modules, 12 V on server RDIMMs), and the PMIC converts it locally. Delivering power at a higher voltage means less current on the motherboard traces, which reduces resistive losses and voltage droop before the point of regulation and allows more precise control of the voltages the DRAM actually sees.
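The benefit of delivering power at a higher voltage can be seen from the I²R loss in the supply path. The sketch compares sending the load at 1.1 V with sending it at 12 V (the bulk input used on server RDIMMs) and regulating on the DIMM; the 10 W load and 2 mΩ path resistance are hypothetical, and the PMIC's own conversion loss is ignored.

```python
def path_loss_w(load_w: float, delivery_v: float, path_resistance_ohm: float) -> float:
    """Resistive loss in the delivery path: I = P / V, loss = I^2 * R."""
    current_a = load_w / delivery_v
    return current_a ** 2 * path_resistance_ohm

LOAD_W, R_PATH = 10.0, 0.002  # hypothetical module load and trace/connector resistance

loss_low = path_loss_w(LOAD_W, 1.1, R_PATH)    # regulated on the motherboard, sent at 1.1 V
loss_high = path_loss_w(LOAD_W, 12.0, R_PATH)  # sent at 12 V, regulated by the on-DIMM PMIC
print(f"1.1 V delivery: {loss_low * 1000:.1f} mW, 12 V delivery: {loss_high * 1000:.2f} mW")
print(f"loss ratio: {loss_low / loss_high:.0f}x")
```

Because path loss scales with the square of the current, delivering the same load at roughly 11 times the voltage cuts the path loss by roughly 119 times.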

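DDR5 also adds on-die ECC (Error Correction Code), which corrects single-bit errors inside each DRAM device, using 8 check bits per 128 data bits, before data leaves the chip. Its main purpose is to preserve array reliability as cell geometries shrink, and its contribution to power efficiency is indirect: correcting array errors internally reduces how often system-level error handling has to intervene. On-die ECC does not protect data in transit on the bus and does not replace system-level (side-band) ECC, so signal integrity problems at high bus speeds must still be handled by other means, such as write CRC and receiver equalization.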
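To illustrate what single-error correction over a 128-bit word involves, the sketch below implements a textbook Hamming SEC code, which needs 8 check bits for 128 data bits because 2^8 ≥ 128 + 8 + 1. It is not the actual code used inside DDR5 devices; it only demonstrates the principle that a nonzero syndrome identifies the flipped bit.

```python
def hamming_encode(data_bits: list[int]) -> list[int]:
    """Textbook Hamming SEC encoder: parity bits sit at power-of-two positions (1-indexed)."""
    m = len(data_bits)
    r = 0
    while (1 << r) < m + r + 1:  # smallest r with 2^r >= m + r + 1
        r += 1
    n = m + r
    code = [0] * (n + 1)  # index 0 unused so positions match the 1-indexed math
    data_iter = iter(data_bits)
    for pos in range(1, n + 1):
        if pos & (pos - 1):  # not a power of two -> data position
            code[pos] = next(data_iter)
    for i in range(r):
        p = 1 << i
        # Even parity over every position whose index has bit i set.
        code[p] = sum(code[pos] for pos in range(1, n + 1) if pos & p and pos != p) % 2
    return code[1:]


def hamming_decode(code_bits: list[int]) -> tuple[list[int], int]:
    """Return (corrected data bits, syndrome); a nonzero syndrome is the flipped position."""
    n = len(code_bits)
    code = [0] + list(code_bits)
    syndrome = 0
    for pos in range(1, n + 1):
        if code[pos]:
            syndrome ^= pos
    if 0 < syndrome <= n:
        code[syndrome] ^= 1  # correct the single erroneous bit
    data = [code[pos] for pos in range(1, n + 1) if pos & (pos - 1)]
    return data, syndrome


word = [1, 0] * 64                # 128 data bits
stored = hamming_encode(word)     # 136 bits: 128 data + 8 check
stored[17] ^= 1                   # simulate a single-bit upset in the array
recovered, syndrome = hamming_decode(stored)
print(len(stored), recovered == word, syndrome)
```

A plain SEC code like this cannot reliably handle two flipped bits, which is one reason on-die ECC complements, rather than replaces, system-level ECC.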

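At high frequencies, DDR5 retains the pseudo-open-drain (POD) I/O signaling introduced with DDR4: data lines are terminated to VDDQ rather than to a mid-rail termination voltage, as in DDR3's SSTL interface. Because DC termination current flows only while a line is driven low, termination power falls as more lines are held high, and the lower 1.1V VDDQ reduces it further, while on-die termination preserves signal integrity at 4800 MT/s and beyond.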
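In the pseudo-open-drain (POD) signaling that DDR5 carries over from DDR4, data lines terminate to VDDQ, so DC termination current flows only while a line is driven low and termination power depends on the data pattern. The sketch estimates average per-line termination power for a bit stream; the 34 Ω driver and 40 Ω termination values are hypothetical examples, and switching (AC) power is ignored.

```python
def pod_termination_power_w(bits, vddq=1.1, r_on_ohm=34.0, r_tt_ohm=40.0):
    """Average DC termination power of one POD line over a bit stream.

    Driving high: driver and termination both sit at VDDQ, so no DC current flows.
    Driving low: current flows VDDQ -> R_tt -> R_on -> ground, drawing VDDQ^2 / (R_on + R_tt).
    """
    if not bits:
        raise ValueError("bits must be non-empty")
    p_low = vddq ** 2 / (r_on_ohm + r_tt_ohm)
    low_fraction = sum(1 for b in bits if b == 0) / len(bits)
    return p_low * low_fraction

for pattern in ([1] * 8, [1, 0] * 4, [0] * 8):
    print(pattern, f"{pod_termination_power_w(pattern) * 1000:.1f} mW")
```

With the resistances held fixed, the per-low-bit power scales with VDDQ², so the move from 1.2 V to 1.1 V trims it by the same factor as dynamic power.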

Improved power efficiency also eases thermal constraints. At a given data rate, DDR5's lower operating voltage produces less heat, which helps maintain stability and limits cooling requirements in dense computing environments. This headroom can allow more compact system designs, although higher data rates and module capacities offset part of the gain.

For server and data center applications, where DDR5 roadmaps extend to 8400 MT/s, the improved power efficiency can translate into significant operational cost savings. Some analyses estimate that large-scale deployments could reduce power consumption by 15-20% compared with equivalent DDR4 systems operating at their maximum frequencies.
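To see how a percentage reduction becomes an operating-cost figure, the sketch converts an assumed memory power draw and reduction into annual energy and cost. The fleet size, per-server memory power, and electricity price are hypothetical placeholders; the 15-20% range is the estimate quoted above, the load is assumed constant, and cooling overhead (PUE) is ignored.

```python
HOURS_PER_YEAR = 8760

def annual_savings(servers: int, memory_power_w: float, reduction: float,
                   usd_per_kwh: float) -> tuple[float, float]:
    """Return (kWh saved per year, USD saved per year) for a constant load."""
    saved_kw = servers * memory_power_w * reduction / 1000.0
    saved_kwh = saved_kw * HOURS_PER_YEAR
    return saved_kwh, saved_kwh * usd_per_kwh

# Hypothetical fleet: 1,000 servers, 100 W of memory power each, $0.10 per kWh.
for reduction in (0.15, 0.20):
    kwh, usd = annual_savings(1000, 100.0, reduction, 0.10)
    print(f"{reduction:.0%}: {kwh:,.0f} kWh/year, ${usd:,.0f}/year")
```

Including the facility's cooling overhead would scale these figures up by roughly the PUE factor.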

However, the transition to higher bus speeds does present challenges for voltage stability. As frequencies increase, maintaining clean power delivery becomes more difficult, requiring sophisticated decoupling networks and careful PCB design. The voltage tolerance margins also tighten at higher frequencies, necessitating more precise voltage regulation to prevent data corruption or system instability.
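One common way to size a decoupling network is the target-impedance rule of thumb: the power distribution network should stay below Z_target = V × (allowed ripple fraction) / ΔI across the frequency range of interest. The ripple budgets and 5 A transient below are hypothetical, chosen only to show how a tighter tolerance lowers the impedance the network must achieve.

```python
def target_impedance_ohm(rail_v: float, ripple_fraction: float, step_current_a: float) -> float:
    """Z_target = allowed ripple voltage / worst-case load current step."""
    return rail_v * ripple_fraction / step_current_a

RAIL_V, STEP_A = 1.1, 5.0  # hypothetical rail voltage and transient current step
for ripple in (0.03, 0.02):
    z = target_impedance_ohm(RAIL_V, ripple, STEP_A)
    print(f"+/-{ripple:.0%} ripple -> Z_target = {z * 1000:.2f} mOhm")
```

Tightening the ripple budget from 3% to 2% lowers the target by a third, which in practice means more or better-placed decoupling capacitance and lower-inductance power planes.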
