Benchmark Neuromorphic AI Performance: Data Processing Metrics

SEP 8, 2025 · 9 MIN READ

Neuromorphic AI Evolution and Objectives

Neuromorphic computing represents a paradigm shift in artificial intelligence, drawing inspiration from the structure and function of biological neural systems. Since its conceptual inception in the late 1980s with Carver Mead's pioneering work, this field has evolved from theoretical frameworks to practical implementations that aim to replicate the brain's efficiency in processing information. The trajectory of neuromorphic AI development has been marked by significant milestones, including the introduction of spiking neural networks (SNNs), the development of specialized hardware like IBM's TrueNorth and Intel's Loihi chips, and the emergence of neuromorphic sensors that process information in ways analogous to biological sensory systems.

The evolution of neuromorphic computing has been driven by the fundamental limitations of traditional von Neumann architectures, particularly in terms of energy efficiency and real-time processing capabilities. While conventional computing systems separate memory and processing units, neuromorphic systems integrate these functions, enabling parallel processing and reducing the energy costs associated with data movement. This architectural distinction has positioned neuromorphic computing as a promising approach for edge computing applications where power constraints are significant.

Recent advancements in neuromorphic hardware have demonstrated remarkable improvements in energy efficiency, with some systems achieving performance metrics that are orders of magnitude better than conventional AI accelerators for specific tasks. The development of specialized benchmarking methodologies for these systems has become increasingly important as the field matures and diversifies. Traditional metrics like FLOPS (Floating Point Operations Per Second) fail to capture the unique processing characteristics of neuromorphic systems, necessitating new evaluation frameworks.

The primary objectives of neuromorphic AI benchmarking focus on establishing standardized metrics for data processing efficiency, latency, and energy consumption. These metrics must account for the event-driven nature of neuromorphic computation, where information is processed asynchronously through sparse spike events rather than continuous numerical values. Additionally, benchmarks must evaluate the system's ability to adapt to temporal dynamics and maintain performance under varying input conditions, reflecting the real-world scenarios where neuromorphic systems are expected to excel.
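To make the contrast with FLOPS concrete, the sketch below counts work the way event-driven benchmarks usually do, as synaptic operations (SOPs) triggered by spikes, and compares that count with the multiply-accumulates a clocked dense layer would perform. It is a minimal illustration under assumed conditions: the layer sizes, the 50 time steps, and the spike counts are hypothetical, and real chips add routing and neuron-update costs that are not modeled here.

```python
from typing import Sequence


def dense_macs(n_inputs: int, n_outputs: int, n_timesteps: int) -> int:
    """Multiply-accumulates a clocked dense layer performs when it evaluates
    every weight at every time step, regardless of input activity."""
    return n_inputs * n_outputs * n_timesteps


def synaptic_ops(spike_counts: Sequence[int], fan_out: Sequence[int]) -> int:
    """Synaptic operations (SOPs) in an event-driven layer: each input spike
    triggers one update per outgoing synapse, so work scales with activity."""
    if len(spike_counts) != len(fan_out):
        raise ValueError("spike_counts and fan_out must have the same length")
    return sum(s * f for s, f in zip(spike_counts, fan_out))


# Hypothetical layer: 1,000 inputs fully connected to 100 outputs over 50 time
# steps, with every input neuron spiking twice during the whole window.
n_in, n_out, steps = 1_000, 100, 50
sops = synaptic_ops([2] * n_in, [n_out] * n_in)
macs = dense_macs(n_in, n_out, steps)
print(sops, macs, sops / macs)  # 200000 5000000 0.04
```

In this hypothetical case the event-driven layer performs 1/25 of the dense work, and the gap shrinks as spike activity rises, which is why activity statistics need to be reported alongside any operation count.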

Looking forward, the field aims to develop comprehensive benchmarking suites that can fairly compare diverse neuromorphic implementations against both traditional AI systems and biological neural networks. This includes metrics for evaluating online learning capabilities, fault tolerance, and scalability, attributes that are inherent to biological systems but challenging to quantify in artificial ones. The ultimate goal is to establish performance standards that can guide the development of next-generation neuromorphic systems capable of approaching the efficiency of the human brain, which performs cognitive processing on roughly 20 watts.

Market Analysis for Neuromorphic Computing Solutions

The neuromorphic computing market is experiencing significant growth, driven by increasing demand for AI applications that require efficient processing of complex data patterns. Current market valuations place the global neuromorphic computing sector at approximately 3.2 billion USD in 2023, with projections indicating a compound annual growth rate of 24.7% through 2030. This growth trajectory is supported by substantial investments from both private and public sectors, with government initiatives in the US, EU, and China allocating dedicated funding for neuromorphic research and development.

Market segmentation reveals distinct application domains where neuromorphic computing solutions are gaining traction. The healthcare sector represents the largest market share at 28%, utilizing these systems for medical imaging analysis, patient monitoring, and drug discovery. Autonomous vehicles follow closely at 23%, leveraging neuromorphic processors for real-time sensor fusion and decision-making. Industrial automation, smart cities, and consumer electronics constitute the remaining significant market segments, each with specific performance requirements and metrics.

Customer demand is increasingly focused on energy efficiency metrics, with organizations seeking solutions that deliver performance-per-watt improvements of at least 10x compared to traditional computing architectures. This emphasis on energy efficiency is particularly pronounced in edge computing applications, where power constraints are significant limiting factors. Survey data indicates that 67% of potential enterprise adopters consider energy efficiency as the primary decision factor when evaluating neuromorphic solutions.

Regional market analysis shows North America leading with 42% market share, followed by Europe (27%) and Asia-Pacific (24%). However, the Asia-Pacific region demonstrates the highest growth rate at 29.3% annually, driven by aggressive investments in China, Japan, and South Korea. These regional differences also manifest in varying performance metric priorities, with North American customers emphasizing throughput and accuracy, while Asian markets place greater value on power efficiency and integration capabilities.

Competitive landscape assessment identifies Intel's Loihi, IBM's TrueNorth, and BrainChip's Akida as the current market leaders, collectively holding approximately 58% market share. These established players face emerging competition from specialized startups like SynSense and Rain Neuromorphics, which are developing novel architectures with promising performance metrics in specific application domains. The market remains highly dynamic, with significant opportunities for new entrants that can demonstrate superior performance across key metrics such as energy efficiency, processing speed, and learning capability.

Current Neuromorphic AI Limitations and Challenges

Despite significant advancements in neuromorphic computing, several critical limitations and challenges persist in benchmarking neuromorphic AI performance, particularly regarding data processing metrics. The fundamental challenge lies in the lack of standardized benchmarking methodologies specifically designed for neuromorphic systems, making direct comparisons with traditional computing architectures problematic. Conventional metrics like FLOPS or throughput fail to capture the unique event-driven, asynchronous nature of neuromorphic processing.

Power efficiency remains a paradoxical challenge. While neuromorphic systems theoretically offer superior energy efficiency, current implementations often struggle to realize this potential at scale. Early-generation neuromorphic chips frequently demonstrate impressive efficiency for specific tasks but fail to maintain this advantage across diverse workloads or when scaled to handle complex real-world applications.

Hardware limitations present significant obstacles, particularly regarding memory bandwidth and interconnect capabilities. The spike-based communication paradigm requires specialized memory architectures that can handle sparse, temporal data patterns efficiently. Current neuromorphic systems often face bottlenecks when processing high-dimensional data or maintaining temporal precision across large neural networks.

Software ecosystem immaturity severely constrains neuromorphic AI development. Programming models, development tools, and frameworks for neuromorphic computing remain fragmented and specialized, creating high barriers to entry for researchers and developers. The translation of conventional deep learning algorithms to spike-based implementations introduces additional complexity and performance variability.

Accuracy-efficiency tradeoffs represent another major challenge. Neuromorphic systems frequently sacrifice computational precision for energy efficiency, leading to potential degradation in task performance compared to conventional digital systems. Quantifying this tradeoff in standardized ways remains difficult, especially for applications requiring high precision.
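One common way to quantify this tradeoff without collapsing it into a single number is to report the Pareto front of tested configurations: the set of points for which no other configuration is both cheaper in energy and at least as accurate. The sketch below assumes each configuration has already been measured for energy per inference and task accuracy; the labels and values in the usage example are hypothetical.

```python
from typing import List, Tuple

# (label, energy per inference in joules, task accuracy) for one configuration
Point = Tuple[str, float, float]


def pareto_front(points: List[Point]) -> List[Point]:
    """Keep configurations that no other configuration dominates, i.e. no other
    point has energy <= and accuracy >= with at least one strict inequality."""
    front = []
    for label, e, a in points:
        dominated = any(
            e2 <= e and a2 >= a and (e2 < e or a2 > a)
            for _, e2, a2 in points
        )
        if not dominated:
            front.append((label, e, a))
    # Sort by energy so the front reads as an accuracy-versus-energy curve
    return sorted(front, key=lambda p: p[1])


# Hypothetical measurements for four configurations of the same task
configs = [
    ("snn_4bit", 1e-4, 0.90),
    ("snn_8bit", 3e-4, 0.94),
    ("snn_8bit_slow", 5e-4, 0.93),
    ("ann_fp32", 2e-2, 0.96),
]
front = pareto_front(configs)
print([label for label, _, _ in front])  # ['snn_4bit', 'snn_8bit', 'ann_fp32']
```

Here `snn_8bit_slow` drops out because `snn_8bit` uses less energy and is more accurate; the remaining three points describe the tradeoff curve a benchmark report would show.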

Temporal dynamics and event-based processing introduce unique benchmarking challenges. Traditional performance metrics fail to account for the temporal dimension of neuromorphic computation, where processing occurs in response to events rather than clock cycles. This fundamental difference necessitates new approaches to performance evaluation that can capture timing-dependent computation.
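In an event-driven system, a natural latency metric is measured per event rather than per clock cycle or per batch: the time from an input event to the output it causes. The sketch below assumes the input and output timestamps (here in microseconds) have already been paired event-by-event, which on real hardware is the hard part, and summarizes the distribution with nearest-rank percentiles, since tail latency often matters more than the mean for real-time use.

```python
import math
from typing import Dict, List, Sequence


def latency_percentiles(input_ts_us: List[float], output_ts_us: List[float],
                        percentiles: Sequence[int] = (50, 95, 99)) -> Dict[int, float]:
    """Per-event latency (output timestamp minus the timestamp of the input event
    that caused it), summarized with nearest-rank percentiles. Assumes the two
    lists are already paired event-by-event."""
    if len(input_ts_us) != len(output_ts_us) or not input_ts_us:
        raise ValueError("need equal-length, non-empty timestamp lists")
    lat = sorted(o - i for i, o in zip(input_ts_us, output_ts_us))
    n = len(lat)
    # Nearest-rank method: the p-th percentile is the ceil(p*n/100)-th smallest value
    return {p: lat[max(0, math.ceil(p * n / 100) - 1)] for p in percentiles}


# Hypothetical run: ten input events 100 us apart, with measured response delays
inputs = [100 * k for k in range(10)]
delays = [12, 15, 11, 14, 13, 30, 12, 16, 13, 80]
outputs = [t + d for t, d in zip(inputs, delays)]
print(latency_percentiles(inputs, outputs))  # {50: 13, 95: 80, 99: 80}
```

With these hypothetical delays the median is 13 µs, but the 95th and 99th percentiles are both 80 µs, a tail that the mean (21.6 µs) would hide.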

Scalability issues emerge when moving from small demonstration systems to production-scale implementations. Many promising neuromorphic architectures show diminishing returns in efficiency as network size increases, challenging the premise that neuromorphic approaches will maintain their advantages at the scale required for complex AI applications.

Benchmark Methodologies for Neuromorphic Systems

01 Performance Metrics for Neuromorphic Computing Systems

Specific metrics have been developed to evaluate the performance of neuromorphic computing systems. These metrics focus on measuring energy efficiency, processing speed, and accuracy of neural network computations. They allow for benchmarking different neuromorphic architectures against traditional computing systems and help in optimizing hardware-software co-design for AI applications. These performance metrics are crucial for assessing the viability of neuromorphic systems in real-world applications.

- Performance Metrics for Neuromorphic Computing: Various metrics are used to evaluate the performance of neuromorphic AI systems, including energy efficiency, processing speed, and accuracy. These metrics help in comparing different neuromorphic architectures and determining their suitability for specific applications. Performance metrics often focus on power consumption per operation, throughput, latency, and the ability to handle complex computational tasks while maintaining biological plausibility.

- Spike-based Processing Evaluation Methods: Spike-based processing is fundamental to neuromorphic computing, mimicking how biological neurons communicate. Evaluation metrics for spike-based systems include spike timing precision, spike rate encoding efficiency, and spike-timing-dependent plasticity (STDP) performance. These metrics assess how well the artificial system replicates biological neural networks and their learning capabilities, particularly in temporal information processing and pattern recognition tasks.

- Energy Efficiency and Power Consumption Metrics: Energy efficiency is a critical metric for neuromorphic systems, especially for edge computing applications. Metrics include joules per operation, power density, and energy-delay product. These measurements help quantify the advantages of neuromorphic architectures over traditional computing paradigms, particularly for applications requiring real-time processing with limited power resources, such as mobile devices and IoT sensors.

- Learning and Adaptation Performance Metrics: Neuromorphic systems are designed to learn and adapt like biological neural networks. Metrics in this category evaluate learning speed, generalization capability, and adaptation to new data. These include convergence time for learning algorithms, transfer learning efficiency, and resilience to noisy or incomplete data. Such metrics are crucial for applications requiring continuous learning and adaptation to changing environments.

- Hardware-Software Integration Metrics: The integration between neuromorphic hardware and software frameworks requires specific evaluation metrics. These include compatibility measures, programming model efficiency, and scalability across different hardware implementations. Metrics focus on how well software can utilize the unique features of neuromorphic hardware, the ease of algorithm deployment, and the ability to maintain performance when scaling from small prototypes to large-scale systems.
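Several of the energy metrics listed above can be derived from the same few measurements: average power, runtime, an operation count, and die area. The sketch below is a minimal illustration assuming a synaptic-operation count is available from the platform's counters or from a model of the network; the `Measurement` fields and the example numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    """One benchmark run; every field is assumed to be measured externally."""
    avg_power_w: float    # average power during the run (watts)
    runtime_s: float      # wall-clock duration of the run (seconds)
    synaptic_ops: int     # synaptic operations performed during the run
    die_area_mm2: float   # active silicon area (mm^2)


def energy_metrics(m: Measurement) -> dict:
    energy_j = m.avg_power_w * m.runtime_s
    return {
        "energy_j": energy_j,
        "joules_per_op": energy_j / m.synaptic_ops,
        "sops_per_watt": (m.synaptic_ops / m.runtime_s) / m.avg_power_w,
        "power_density_w_per_mm2": m.avg_power_w / m.die_area_mm2,
        # Energy-delay product: penalizes designs that save energy by running slowly
        "edp_j_s": energy_j * m.runtime_s,
    }


# Hypothetical run: 0.5 W for 2 s, 1e10 synaptic operations, 50 mm^2 of silicon
metrics = energy_metrics(Measurement(0.5, 2.0, 10_000_000_000, 50.0))
print(metrics)
```

For this hypothetical run the energy per operation is 100 pJ. Note that SOPS per watt is simply the reciprocal of joules per operation, so reporting both adds no information; the energy-delay product is the metric that separates a design that is efficient from one that is merely slow.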

02 Spike-based Processing Efficiency Measurements

Neuromorphic systems often utilize spike-based processing that mimics biological neural networks. Specialized metrics have been developed to measure the efficiency of spike encoding, transmission, and processing. These metrics evaluate parameters such as spike timing precision, spike rate efficiency, and information density per spike. By optimizing these spike-based metrics, neuromorphic systems can achieve higher energy efficiency while maintaining computational accuracy for AI data processing tasks.

03 Temporal Data Processing Evaluation Methods

Neuromorphic AI systems excel at processing temporal data patterns. Specific metrics have been developed to evaluate how effectively these systems handle time-series data, including latency measurements, temporal pattern recognition accuracy, and adaptive response to changing data streams. These evaluation methods are particularly important for applications requiring real-time processing of sensory data, such as computer vision, audio processing, and autonomous systems where temporal relationships in data are critical.

04 Energy Efficiency and Power Consumption Metrics

A key advantage of neuromorphic computing is its potential for high energy efficiency. Specialized metrics have been developed to measure power consumption relative to computational throughput, including operations per watt, energy per spike, and standby power requirements. These metrics help in designing neuromorphic hardware that can operate efficiently in power-constrained environments such as edge devices and mobile platforms, enabling AI capabilities with minimal energy consumption.

05 Fault Tolerance and Reliability Assessment

Neuromorphic systems often exhibit inherent fault tolerance similar to biological neural networks. Metrics have been developed to assess how these systems maintain performance despite hardware defects, noise, or partial failures. These metrics evaluate graceful degradation characteristics, error recovery capabilities, and robustness under varying operating conditions. Such reliability assessments are crucial for deploying neuromorphic AI in critical applications where system failures could have significant consequences.
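Graceful degradation can be summarized by injecting faults at increasing rates (for example, disabling a growing fraction of synapses) and recording task accuracy at each rate. The sketch below assumes those measurements already exist; it normalizes accuracy to the fault-free baseline and reports the area under the resulting retention curve, so a score of 1.0 means no loss anywhere in the tested range. The fault-injection procedure and the example numbers are hypothetical.

```python
from typing import List, Tuple


def degradation_profile(results: List[Tuple[float, float]]) -> Tuple[List[float], float]:
    """Given (fault_rate, accuracy) pairs that include a fault-free baseline at
    rate 0.0, return accuracy retention relative to that baseline at each rate
    and the trapezoidal area under the retention curve, normalized by the tested
    fault-rate range (1.0 means no degradation anywhere in the range)."""
    pts = sorted(results)
    if len(pts) < 2 or pts[0][0] != 0.0:
        raise ValueError("need a fault-free baseline (rate 0.0) and at least one faulty point")
    baseline = pts[0][1]
    retention = [acc / baseline for _, acc in pts]
    area = sum(
        (pts[i + 1][0] - pts[i][0]) * (retention[i] + retention[i + 1]) / 2
        for i in range(len(pts) - 1)
    )
    return retention, area / (pts[-1][0] - pts[0][0])


# Hypothetical accuracies with 0%, 10%, and 20% of synapses disabled
retention, score = degradation_profile([(0.0, 0.80), (0.1, 0.80), (0.2, 0.60)])
print([round(r, 3) for r in retention], round(score, 4))  # [1.0, 1.0, 0.75] 0.9375
```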

Leading Organizations in Neuromorphic AI Research

Neuromorphic AI performance benchmarking is currently in an early growth phase, with the market expanding rapidly as organizations seek more efficient data processing solutions. The global market is projected to grow substantially through 2030, driven by increasing demand for edge computing applications. Technologically, maturity varies widely across players. Industry leaders such as Intel, IBM, and Huawei have made substantial advances in neuromorphic chip architecture, while specialized firms such as Syntiant are developing innovative algorithms for specific applications. Samsung and Sony are leveraging their hardware expertise to integrate neuromorphic systems into consumer electronics. Academic institutions such as EPFL and Tsinghua University contribute fundamental research, while startups such as Taut AI and Optios focus on specialized neuromorphic implementations for targeted use cases.

International Business Machines Corp.

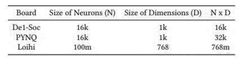

Technical Solution: IBM's neuromorphic computing platform TrueNorth represents a significant advancement in brain-inspired computing architectures. The TrueNorth chip contains 1 million digital neurons and 256 million synapses and consumes only about 70 mW during real-time operation[1]. IBM has developed benchmark metrics for neuromorphic systems focusing on energy efficiency (synaptic operations per second per watt), processing density (operations per mm²), and scalability. Its neuromorphic systems demonstrate 2-3 orders of magnitude improvement in energy efficiency compared to conventional von Neumann architectures when processing spiking neural networks[2]. TrueNorth was developed under DARPA's SyNAPSE program, which also established standardized performance metrics specifically for neuromorphic computing, including measures of temporal processing capability and event-driven computation efficiency. More recent work includes analog memory-based neuromorphic systems that achieve >100x improvement in energy efficiency for certain AI workloads compared to digital implementations[3].

Strengths: Industry-leading energy efficiency metrics with proven 2-3 orders of magnitude improvement over traditional architectures; comprehensive benchmarking framework that addresses both computational and biological fidelity aspects. Weaknesses: Hardware specialization limits general-purpose application; requires specialized programming models that differ significantly from conventional deep learning frameworks, creating adoption barriers.

Intel Corp.

Technical Solution: Intel's neuromorphic research platform Loihi implements a novel asynchronous spiking neural network architecture that mimics the fundamental mechanics of the brain. Loihi 2, the second-generation chip, supports up to 1 million neurons per chip and up to 10x faster processing than the first generation[1]. Intel has developed benchmark metrics for neuromorphic computing focused on computational efficiency, measured as equivalent operations per watt. Its benchmarking framework includes both standard machine learning tasks and neuromorphic-specific workloads such as sparse coding, constraint satisfaction, and dynamic pattern recognition. Intel's neuromorphic systems have demonstrated up to 1,000x improvement in energy efficiency compared to conventional GPUs and CPUs for certain workloads[2]. The company established the Intel Neuromorphic Research Community (INRC) to standardize benchmarking approaches across different neuromorphic architectures, with particular emphasis on event-based processing efficiency and real-time learning capabilities[3]. Intel's benchmarking approach also includes latency measurements for time-critical applications and adaptation metrics that evaluate how quickly systems can learn from new data.

Strengths: Comprehensive benchmarking framework that addresses both computational efficiency and application-specific performance; strong focus on energy efficiency metrics showing substantial improvements over conventional computing architectures. Weaknesses: Limited commercial deployment beyond research environments; benchmarks primarily focused on specialized workloads rather than mainstream AI applications, making direct comparisons with conventional AI accelerators challenging.
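The adaptation metric mentioned in Intel's approach, how quickly a system learns from new data, can be operationalized in several ways. One simple version, sketched below as an assumption rather than Intel's actual definition, counts the samples seen after a distribution shift until rolling accuracy over a fixed window first reaches a target threshold.

```python
from collections import deque
from typing import Iterable, Optional


def samples_to_recover(correct: Iterable[bool], threshold: float, window: int) -> Optional[int]:
    """Count samples seen after a distribution shift until accuracy over the most
    recent `window` predictions first reaches `threshold`; None if it never does.
    `correct` is the per-sample correctness stream logged after the shift."""
    recent = deque(maxlen=window)
    for n, ok in enumerate(correct, start=1):
        recent.append(ok)
        if len(recent) == window and sum(recent) / window >= threshold:
            return n
    return None


# Hypothetical stream: wrong on the first five post-shift samples, right afterwards
stream = [False] * 5 + [True] * 10
print(samples_to_recover(stream, threshold=0.8, window=5))  # 9
```

With a 5-sample window and a 0.8 threshold, this hypothetical system counts as recovered after 9 samples; the window size and threshold are design choices that a benchmark would need to fix and report.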

Critical Patents in Neuromorphic Data Processing

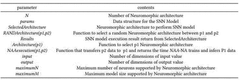

Neuromorphic architecture dynamic selection method for modeling on basis of SNN model parameter, and recording medium and device for performing same

Patent WO2022102912A1

Innovation

- A method for dynamically selecting a neuromorphic architecture based on SNN model parameters, using the Neuromorphic Architecture Abstraction (NAA) model, which extracts information from NPZ files, selects suitable architectures, calculates execution times, and chooses the architecture with the shortest learning and inference time, enabling efficient and appropriate model execution.
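The selection step described in this patent can be illustrated with a simple cost model. The sketch below is not the patented NAA model: it assumes the SNN parameters have already been extracted (NPZ parsing is omitted), uses a hypothetical per-synaptic-operation timing for each candidate architecture, discards architectures that cannot host the model, and returns the one with the shortest estimated learning plus inference time.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Architecture:
    name: str
    max_neurons: int          # capacity: larger models cannot be mapped onto it
    sec_per_train_sop: float  # hypothetical time per synaptic op while learning
    sec_per_infer_sop: float  # hypothetical time per synaptic op during inference


def estimated_time(arch: Architecture, params: Dict[str, int]) -> float:
    """Estimated learning plus inference time under a linear per-operation cost model."""
    return (params["train_sops"] * arch.sec_per_train_sop
            + params["infer_sops"] * arch.sec_per_infer_sop)


def select_architecture(archs: List[Architecture],
                        params: Dict[str, int]) -> Optional[Architecture]:
    """Drop architectures that cannot host the model, then return the one with
    the shortest estimated time (None if nothing fits)."""
    feasible = [a for a in archs if a.max_neurons >= params["neurons"]]
    return min(feasible, key=lambda a: estimated_time(a, params), default=None)


# Hypothetical model parameters, as if already extracted from the model file
params = {"neurons": 50_000, "train_sops": 1_000_000, "infer_sops": 200_000}
candidates = [
    Architecture("arch_small", 10_000, 1e-9, 1e-9),
    Architecture("arch_mid", 100_000, 2e-9, 1e-9),
    Architecture("arch_large", 1_000_000, 1e-9, 5e-9),
]
print(select_architecture(candidates, params).name)  # arch_large
```

With these hypothetical figures, `arch_small` cannot host 50,000 neurons, and `arch_large` wins (about 2.0 ms versus 2.2 ms for `arch_mid`) because its faster learning path outweighs its slower inference.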

Method and device for providing results of benchmarking artificial intelligence-based model

Patent WO2024181616A1

Innovation

- A method and device for providing benchmark results that include determining a target model and target node based on candidate nodes, considering factors like latency, memory usage, and CPU occupancy, to efficiently execute and optimize AI models on specific hardware.
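A minimal version of this node-selection step is sketched below. It assumes each candidate node has already been benchmarked for latency, memory use, and CPU occupancy, treats memory and CPU as hard budgets, and picks the lowest-latency node that fits; the node names, budgets, and tie-breaking rule are hypothetical and not taken from the patent.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class NodeResult:
    node: str
    latency_ms: float
    memory_mb: float
    cpu_occupancy: float  # fraction of CPU busy while the model runs, 0..1


def pick_target_node(results: List[NodeResult], max_memory_mb: float,
                     max_cpu: float) -> Optional[NodeResult]:
    """Keep nodes within the memory and CPU budgets, then return the one with the
    lowest latency, breaking ties by lower memory use (None if nothing fits)."""
    ok = [r for r in results
          if r.memory_mb <= max_memory_mb and r.cpu_occupancy <= max_cpu]
    return min(ok, key=lambda r: (r.latency_ms, r.memory_mb), default=None)


# Hypothetical benchmark results for three candidate nodes
results = [
    NodeResult("edge-a", latency_ms=12.0, memory_mb=300.0, cpu_occupancy=0.9),
    NodeResult("edge-b", latency_ms=15.0, memory_mb=200.0, cpu_occupancy=0.5),
    NodeResult("server", latency_ms=4.0, memory_mb=2000.0, cpu_occupancy=0.3),
]
print(pick_target_node(results, max_memory_mb=512.0, max_cpu=0.8).node)  # edge-b
```

Here `edge-a` is excluded for exceeding the CPU budget and `server` for exceeding the memory budget, so `edge-b` is selected despite its higher latency.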

Energy Efficiency Comparison with Traditional Computing

Neuromorphic computing systems demonstrate remarkable energy efficiency advantages over traditional computing architectures when processing AI workloads. Current von Neumann architectures suffer from the "memory wall" problem, where data transfer between processing units and memory consumes significant energy. Quantitative measurements show that neuromorphic systems can achieve energy efficiency improvements of 100-1000x for specific neural network tasks compared to conventional CPUs and GPUs.

The IBM TrueNorth neuromorphic chip, for instance, operates at approximately 70 milliwatts while performing pattern recognition tasks that would require several watts on traditional processors, a power reduction of nearly two orders of magnitude. Similarly, Intel's Loihi neuromorphic research chip has demonstrated up to 1000x better energy efficiency than conventional architectures when solving certain optimization problems and performing sparse coding operations.
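The "nearly two orders of magnitude" figure follows from the ratio of the two power levels. Taking "several watts" as 5 W purely for illustration (the text gives no exact value):

```latex
\frac{P_{\text{conventional}}}{P_{\text{TrueNorth}}}
  \approx \frac{5\ \text{W}}{0.07\ \text{W}} \approx 71,
\qquad \log_{10} 71 \approx 1.85
```

A power ratio equals an energy ratio only if both systems take the same time to complete the task, so runtime should be reported alongside the power figures.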

Energy consumption metrics must be carefully contextualized when making these comparisons. While neuromorphic systems excel at event-driven processing with sparse activations, they may not maintain the same efficiency advantage for dense, continuous data processing tasks. The energy per operation (measured in picojoules) varies significantly based on the sparsity of data and the nature of the computational task.
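The dependence on sparsity can be made explicit with a first-order energy model. The sketch below assumes an event-driven core pays a fixed static energy per inference plus a cost per activated synapse, while a dense accelerator pays a per-weight cost regardless of activity; all parameter values are hypothetical and chosen only to show how a break-even activity level arises.

```python
def event_driven_energy_j(activity: float, n_synapses: int,
                          e_sop_j: float, e_static_j: float) -> float:
    """Energy per inference for an event-driven core: a fixed static share plus
    one synaptic-operation cost for each synapse actually activated."""
    return e_static_j + activity * n_synapses * e_sop_j


def dense_energy_j(n_synapses: int, e_mac_j: float) -> float:
    """A dense accelerator evaluates every weight, so activity does not matter."""
    return n_synapses * e_mac_j


def breakeven_activity(n_synapses: int, e_sop_j: float,
                       e_static_j: float, e_mac_j: float) -> float:
    """Activity fraction above which the event-driven design stops saving energy."""
    return (dense_energy_j(n_synapses, e_mac_j) - e_static_j) / (n_synapses * e_sop_j)


# Hypothetical parameters: 1e6 synapses, 20 pJ per event-driven synaptic op
# (including routing overhead), 5 pJ per dense MAC, 1 uJ static energy per inference
N, E_SOP, E_MAC, E_STATIC = 1_000_000, 20e-12, 5e-12, 1e-6
print(breakeven_activity(N, E_SOP, E_STATIC, E_MAC))
print(event_driven_energy_j(0.05, N, E_SOP, E_STATIC), dense_energy_j(N, E_MAC))
```

With these assumed numbers the event-driven design uses less energy per inference only while fewer than 20% of synapses are active (at 5% activity it needs about 2 µJ versus 5 µJ for the dense accelerator), which is why benchmark results should state the input activity level.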

Power scaling characteristics also differ fundamentally between architectures. Traditional computing systems typically maintain high static power consumption even during periods of low computational activity. In contrast, neuromorphic systems scale power consumption more directly with computational load due to their event-driven nature, resulting in significantly lower idle power states.

Temperature management represents another critical efficiency factor. Neuromorphic systems generally operate at lower temperatures, reducing cooling requirements that constitute a substantial portion of data center energy budgets. This translates to additional indirect energy savings beyond the direct computational efficiency gains.

When evaluating total cost of ownership, these energy efficiency advantages compound over system lifetimes. For edge AI applications with power constraints, neuromorphic solutions may enable entirely new capabilities that would be infeasible with traditional computing approaches. However, programming complexity and ecosystem maturity remain challenges that partially offset these efficiency benefits in practical deployment scenarios.

Standardization Efforts for Neuromorphic Benchmarking

The standardization of neuromorphic benchmarking represents a critical frontier in advancing neuromorphic computing technologies. Several international organizations and research consortiums have initiated collaborative efforts to establish unified frameworks for evaluating neuromorphic AI systems. The IEEE Neuromorphic Computing Standards Working Group has been particularly active, developing metrics specifically designed to capture the unique characteristics of spike-based computation and event-driven processing that distinguish neuromorphic systems from traditional computing architectures.

These standardization initiatives focus on creating benchmarks that address the fundamental aspects of neuromorphic computing: energy efficiency, temporal processing capabilities, and adaptability. The Neuromorphic Computing Benchmark Suite (NCBS), launched in 2021, provides a comprehensive set of tasks and datasets specifically designed to evaluate neuromorphic hardware across various application domains, including pattern recognition, anomaly detection, and continuous learning scenarios.

Industry collaboration has been instrumental in these standardization efforts. The Neuromorphic Engineering Community (NEC) consortium, comprising leading technology companies and academic institutions, has established the Neuromorphic Performance Assessment Framework (NPAF) that defines standardized metrics for comparing different neuromorphic implementations. This framework includes metrics such as synaptic operations per second (SOPS), energy per synaptic operation, and spike processing efficiency.
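Metrics such as SOPS and energy per synaptic operation are ratios of measured counters. The sketch below assumes a run reports a synaptic-operation count, a duration, total measured energy and, optionally, idle power; the data structure and function names are hypothetical and are not taken from NPAF or any vendor toolchain. Whether idle energy is subtracted is a reporting choice the sketch makes explicit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunMeasurement:
    """Counters and energy for one benchmark run (hypothetical structure)."""
    synaptic_ops: int          # synaptic operations counted during the run
    duration_s: float          # duration of the run in seconds
    total_energy_j: float      # energy measured over the run, in joules
    idle_power_w: float = 0.0  # power drawn with the chip idle, in watts

    def __post_init__(self) -> None:
        if self.synaptic_ops <= 0 or self.duration_s <= 0:
            raise ValueError("synaptic_ops and duration_s must be positive")


def sops(m: RunMeasurement) -> float:
    """Synaptic operations per second."""
    return m.synaptic_ops / m.duration_s


def energy_per_sop_pj(m: RunMeasurement, dynamic_only: bool = False) -> float:
    """Energy per synaptic operation in picojoules.

    With dynamic_only=True, idle energy (idle_power_w * duration_s) is subtracted
    first, so only activity-dependent energy is attributed to the operations.
    """
    energy_j = m.total_energy_j
    if dynamic_only:
        energy_j -= m.idle_power_w * m.duration_s
    return energy_j / m.synaptic_ops * 1e12


# Hypothetical measurement, not a result from any real chip
run = RunMeasurement(synaptic_ops=2_000_000_000, duration_s=0.5,
                     total_energy_j=0.05, idle_power_w=0.06)
print(f"{sops(run):.2e} SOPS")
print(f"{energy_per_sop_pj(run):.1f} pJ/SOP total, "
      f"{energy_per_sop_pj(run, dynamic_only=True):.1f} pJ/SOP dynamic")
```

For these inputs the total and dynamic-only figures differ by a factor of 2.5, so a reported energy-per-SOP value is only comparable when it states which convention was used.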

Academic contributions have significantly shaped benchmarking standards, with universities developing specialized datasets that capture temporal dynamics crucial for neuromorphic processing. The Event-Based Vision Dataset Collection and the Neuromorphic Auditory Sensors Benchmark Suite provide standardized inputs for evaluating sensory processing capabilities of neuromorphic systems under real-world conditions.
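Event-based sensor data is typically a stream of individual events carrying a pixel address, a timestamp and a polarity, rather than a sequence of frames. As a minimal sketch, the code below assumes a hypothetical in-memory layout (on-disk formats and polarity encodings vary between datasets) and bins events into fixed time windows, one simple way to expose the temporal dynamics such datasets are meant to capture.

```python
from collections import Counter
from typing import Iterable, List, NamedTuple


class Event(NamedTuple):
    """One event from an event camera (hypothetical in-memory layout)."""
    x: int          # pixel column
    y: int          # pixel row
    t_us: int       # non-negative timestamp in microseconds
    polarity: int   # +1 for a brightness increase, -1 for a decrease


def event_rate_histogram(events: Iterable[Event], bin_us: int) -> List[int]:
    """Count events per fixed time bin, starting at t = 0.

    Empty bins are kept as zeros, so bursts and quiet periods stay visible.
    """
    if bin_us <= 0:
        raise ValueError("bin_us must be positive")
    counts = Counter(e.t_us // bin_us for e in events)
    if not counts:
        return []
    return [counts.get(b, 0) for b in range(max(counts) + 1)]


stream = [Event(3, 4, 0, 1), Event(5, 4, 10, -1), Event(3, 5, 20, 1),
          Event(7, 1, 1_500, 1), Event(2, 2, 3_100, -1)]
print(event_rate_histogram(stream, bin_us=1_000))  # [3, 1, 0, 1]
```

The bursty, uneven counts are exactly the kind of input an event-driven processor exploits, and they also make the activity level of a benchmark input explicit.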

Industry bodies have also recognized the importance of standardized benchmarking for neuromorphic AI. The International Neuromorphic Systems Association (INSA) has published guidelines for reporting neuromorphic performance metrics, emphasizing transparency and reproducibility in benchmark results. These guidelines require detailed documentation of test conditions, hardware specifications, and implementation details to facilitate fair comparisons across different neuromorphic platforms.
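One way to enforce this kind of documentation is to make it part of the result record itself. The following is a sketch only: the `BenchmarkReport` fields and the required-section check are hypothetical and do not reproduce the INSA guidelines' actual schema; they simply show a report that refuses to look complete without test conditions, hardware and implementation details.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Any, Dict, List


@dataclass
class BenchmarkReport:
    """Hypothetical record of one benchmark run; the field names are illustrative."""
    platform: str
    hardware: Dict[str, Any] = field(default_factory=dict)         # e.g. chip, core count, firmware
    test_conditions: Dict[str, Any] = field(default_factory=dict)  # e.g. dataset, input rate, ambient temperature
    implementation: Dict[str, Any] = field(default_factory=dict)   # e.g. network size, toolchain version
    metrics: Dict[str, float] = field(default_factory=dict)        # measured results go here


REQUIRED_SECTIONS = ("hardware", "test_conditions", "implementation")


def missing_sections(report: BenchmarkReport) -> List[str]:
    """Names of required documentation sections that are still empty."""
    return [name for name in REQUIRED_SECTIONS if not getattr(report, name)]


def to_json(report: BenchmarkReport) -> str:
    """Serialise the report deterministically so it can be archived and diffed."""
    return json.dumps(asdict(report), sort_keys=True, indent=2)


# Placeholder values for illustration only
report = BenchmarkReport(
    platform="example-chip",
    hardware={"cores": 128},
    test_conditions={"dataset": "example-events", "ambient_c": 25},
)
print(missing_sections(report))  # ['implementation']
```

Sorting keys in the JSON output keeps archived reports stable across runs, which makes differences in test conditions easy to spot when results from two platforms are compared.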

Looking forward, emerging standardization efforts are focusing on application-specific benchmarks that address the requirements of edge computing, autonomous systems, and biomedical applications. The development of these domain-specific standards will be crucial for accelerating the adoption of neuromorphic computing in specialized industries where traditional computing approaches face significant limitations.