Benchmarking Neuromorphic Systems: Signal Detection Accuracy

SEP 8, 2025 · 9 MIN READ

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. This field has evolved significantly since its conceptual inception in the late 1980s when Carver Mead first proposed the idea of using very-large-scale integration (VLSI) systems to mimic neurobiological architectures. The evolution of neuromorphic computing can be traced through several distinct phases, each marked by significant technological breakthroughs and shifting objectives.

The initial phase (1980s-1990s) focused primarily on creating analog VLSI circuits that could replicate basic neural functions. These early systems aimed to demonstrate fundamental principles rather than achieve practical computational advantages. The emphasis was on understanding how to translate biological neural mechanisms into electronic equivalents, establishing the theoretical foundation for future development.

The second phase (2000s) witnessed a transition toward digital implementations and hybrid systems, as researchers sought to overcome the limitations of purely analog approaches. During this period, the objectives expanded to include not only biological fidelity but also practical computational capabilities, particularly in pattern recognition and sensory processing tasks.

The contemporary phase (2010s-present) has been characterized by significant investment from major technology companies and research institutions, resulting in the development of large-scale neuromorphic chips such as IBM's TrueNorth, Intel's Loihi, and BrainChip's Akida. The objectives have evolved to address specific computational challenges where conventional von Neumann architectures struggle, particularly in real-time signal processing, energy efficiency, and handling sparse, event-driven data.

In the context of signal detection accuracy, neuromorphic computing objectives have become increasingly focused on achieving high performance in noisy environments while maintaining minimal power consumption. This represents a critical advantage for applications in edge computing, autonomous systems, and IoT devices where traditional computing approaches face significant energy constraints.

The evolution of benchmarking methodologies has paralleled the development of neuromorphic hardware. Early benchmarks focused primarily on biological plausibility, while contemporary evaluation frameworks emphasize practical metrics such as energy efficiency, latency, and detection accuracy across various signal types. This shift reflects the maturing of the field from purely theoretical exploration to practical implementation with specific performance targets.

Looking forward, the objectives of neuromorphic computing are increasingly aligned with addressing the limitations of conventional computing in an era of exponential data growth and diminishing returns from traditional scaling approaches. Signal detection accuracy represents a key benchmark in this evolution, as it directly impacts the viability of neuromorphic systems in mission-critical applications such as autonomous vehicles, medical diagnostics, and security systems.

Market Analysis for Neuromorphic Signal Detection Systems

The neuromorphic signal detection systems market is growing quickly, driven by increasing demand for efficient, real-time signal processing across multiple industries. Market projections put the global neuromorphic computing market at approximately 8.9 billion USD by 2025, with signal detection applications representing a substantial segment. This corresponds to a compound annual growth rate of around 49% from 2020 to 2025, significantly outpacing traditional computing markets.

The primary market segments for neuromorphic signal detection systems include defense and security, healthcare monitoring, autonomous vehicles, industrial automation, and consumer electronics. Within the defense sector, neuromorphic systems are increasingly deployed for radar signal processing, target identification, and threat detection, with market penetration accelerating due to their superior performance in noisy environments and lower power consumption compared to traditional systems.

Healthcare applications represent another rapidly expanding market segment, particularly for real-time biosignal monitoring and analysis. The ability of neuromorphic systems to detect subtle anomalies in ECG, EEG, and other physiological signals with high accuracy while consuming minimal power makes them ideal for wearable health monitoring devices. This segment is expected to grow at over 52% annually through 2025.

Market demand is being shaped by several key factors. First, the increasing need for edge computing solutions that can process signals locally without cloud connectivity is driving adoption in remote sensing applications. Second, the growing complexity of signal environments, particularly in urban settings with significant electromagnetic interference, necessitates more sophisticated detection algorithms that neuromorphic systems excel at implementing.

Regional analysis reveals that North America currently leads the market with approximately 42% share, followed by Europe at 28% and Asia-Pacific at 24%. However, the Asia-Pacific region is expected to demonstrate the highest growth rate over the next five years, driven by significant investments in neuromorphic research and manufacturing capabilities in China, Japan, and South Korea.

Customer requirements are evolving toward systems with higher detection accuracy in low signal-to-noise ratio environments, reduced power consumption, and smaller form factors. Market surveys indicate that customers are willing to pay a premium of up to 35% for neuromorphic solutions that demonstrate a 10x improvement in power efficiency while maintaining comparable or superior detection accuracy relative to traditional digital signal processing approaches.

The market is currently transitioning from early adopters to the early majority. Crossing this chasm depends on continued improvements in signal detection accuracy benchmarks and on more standardized development tools and interfaces for neuromorphic systems.

Current Benchmarking Challenges in Neuromorphic Computing

Despite significant advancements in neuromorphic computing, the field faces substantial challenges in establishing standardized benchmarking methodologies, particularly for signal detection accuracy. Current benchmarking approaches often lack consistency across different neuromorphic architectures, making comparative analysis difficult and hindering technological progress.

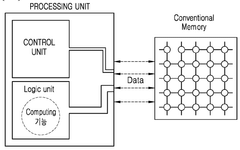

One primary challenge is the absence of universally accepted performance metrics specifically designed for neuromorphic systems. Traditional computing metrics such as FLOPS or raw power draw fail to capture the unique characteristics of spike-based computation and event-driven processing inherent to neuromorphic architectures. This creates significant obstacles when attempting to evaluate signal detection accuracy across different implementations.

Hardware heterogeneity presents another major benchmarking challenge. Neuromorphic systems vary dramatically in their architectural approaches—from digital implementations like Intel's Loihi and IBM's TrueNorth to mixed-signal designs such as BrainScaleS and analog implementations. Each architecture employs different neuron models, learning mechanisms, and connectivity patterns, making direct comparisons of signal detection capabilities problematic without standardized testing frameworks.

Workload representation poses additional difficulties. Unlike conventional computing benchmarks that use standardized datasets, neuromorphic systems often require spike-encoded inputs. The conversion process from conventional data to spike trains introduces variability that can significantly impact performance measurements, especially for signal detection tasks where timing precision is critical.
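The encoding variability described above can be illustrated with a minimal sketch. It assumes stochastic rate coding (one Bernoulli draw per time step, a common Poisson-like scheme); the function names `poisson_encode` and `decoded_rates` and the `max_rate` parameter are hypothetical and not taken from any particular neuromorphic toolkit.

```python
import numpy as np

def poisson_encode(signal, n_steps, max_rate=0.5, seed=None):
    """Rate-code amplitudes in [0, 1] as a Bernoulli (Poisson-like) spike train.

    Returns an array of shape (n_steps, len(signal)) containing 0/1 spikes.
    """
    rng = np.random.default_rng(seed)
    signal = np.clip(np.asarray(signal, dtype=float), 0.0, 1.0)
    # Per-step spike probability is proportional to the sample amplitude.
    prob = signal * max_rate
    return (rng.random((n_steps, signal.size)) < prob).astype(np.uint8)

def decoded_rates(spikes, max_rate=0.5):
    """Estimate the original amplitudes from the observed spike counts."""
    return spikes.mean(axis=0) / max_rate

if __name__ == "__main__":
    signal = np.array([0.1, 0.5, 0.9])
    # The same input yields a different spike train for each seed.
    for seed in (0, 1, 2):
        spikes = poisson_encode(signal, n_steps=100, seed=seed)
        print(seed, np.round(decoded_rates(spikes), 2))
```

Because the same input produces a different spike train on every run, a benchmark that uses stochastic encoding would need to fix the encoder seed or report results averaged over several encodings.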

The dynamic nature of neuromorphic systems further complicates benchmarking efforts. Many neuromorphic architectures incorporate online learning and adaptation mechanisms that evolve over time, making reproducibility of results challenging. Signal detection accuracy may vary depending on system state, training history, and even environmental factors, necessitating more sophisticated benchmarking approaches than those used for static computing systems.

Energy efficiency evaluation, while crucial for neuromorphic computing's value proposition, lacks standardized measurement protocols. Current approaches often fail to account for the relationship between power consumption and signal detection accuracy, missing the fundamental trade-offs that define neuromorphic computing advantages.
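One way to report power and accuracy together is sketched below. It is an illustrative assumption rather than an established protocol: the `RunResult` record, the energy-per-correct-detection figure, and the Pareto filter are hypothetical names, and the numbers in the usage example are placeholders, not measurements.

```python
from dataclasses import dataclass

@dataclass
class RunResult:
    name: str
    correct: int          # number of correct detections
    total: int            # number of test trials
    energy_joules: float  # energy consumed over the whole test run

    @property
    def accuracy(self):
        return self.correct / self.total

    @property
    def energy_per_correct(self):
        # Energy spent per correct detection; infinite if nothing was detected.
        if self.correct == 0:
            return float("inf")
        return self.energy_joules / self.correct

def pareto_front(results):
    """Keep runs that no other run beats on both accuracy and energy."""
    front = []
    for r in results:
        dominated = any(
            o.accuracy >= r.accuracy
            and o.energy_joules <= r.energy_joules
            and (o.accuracy > r.accuracy or o.energy_joules < r.energy_joules)
            for o in results
        )
        if not dominated:
            front.append(r)
    return front

if __name__ == "__main__":
    # Placeholder values for illustration only.
    runs = [
        RunResult("config_a", 90, 100, 1.0),
        RunResult("config_b", 95, 100, 2.0),
        RunResult("config_c", 85, 100, 1.5),
    ]
    for r in pareto_front(runs):
        print(r.name, r.accuracy, round(r.energy_per_correct, 4))
```

Reporting the non-dominated configurations keeps the trade-off visible instead of collapsing it into a single accuracy or power number.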

Finally, application-specific benchmarking remains underdeveloped. Signal detection tasks vary widely across domains—from audio processing to radar systems to biomedical applications—each with unique requirements and performance metrics. The field currently lacks domain-specific benchmarks that can meaningfully evaluate neuromorphic systems' signal detection capabilities in these varied contexts.

Signal Detection Methodologies in Neuromorphic Systems

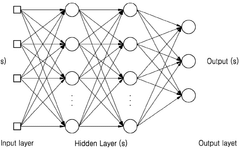

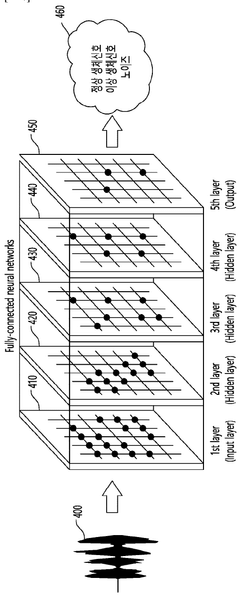

01 Spiking Neural Networks for Signal Detection

Neuromorphic systems utilizing spiking neural networks (SNNs) can significantly improve signal detection accuracy by mimicking the brain's neural processing. These systems encode information in the timing of spikes rather than traditional binary or continuous values, allowing for more efficient processing of temporal data patterns. The spike-based processing enables better detection of weak signals in noisy environments and improves overall detection accuracy while maintaining energy efficiency.

- Neuromorphic architectures for improved signal detection: Neuromorphic systems can be designed with specialized architectures that mimic biological neural networks to enhance signal detection accuracy. These architectures incorporate parallel processing capabilities and adaptive learning mechanisms that allow for more efficient pattern recognition and signal processing compared to traditional computing systems. By implementing brain-inspired computational models, these systems can better handle noisy or incomplete data, leading to improved detection accuracy in various applications.

- Spiking neural networks for signal processing: Spiking neural networks (SNNs) offer advantages for signal detection in neuromorphic systems by processing information in a temporal manner similar to biological neurons. These networks use discrete spikes rather than continuous values, enabling energy-efficient processing and improved detection of temporal patterns in signals. The event-driven nature of SNNs allows for precise timing-based computations that can enhance detection accuracy, particularly for time-varying signals or in applications requiring real-time processing.

- Learning algorithms for adaptive signal detection: Advanced learning algorithms specifically designed for neuromorphic systems can significantly improve signal detection accuracy. These algorithms enable the system to adapt to changing signal characteristics and noise conditions through continuous learning. Techniques such as spike-timing-dependent plasticity (STDP), reinforcement learning, and unsupervised feature extraction allow neuromorphic systems to optimize their detection parameters based on experience, leading to more robust performance in challenging environments and with diverse signal types.

- Hardware implementations for enhanced detection accuracy: Specialized hardware implementations of neuromorphic systems can significantly improve signal detection accuracy. These implementations include custom analog/digital circuits, memristive devices, and specialized processors designed to efficiently execute neural network operations. By optimizing hardware for neuromorphic computing, these systems can achieve lower latency, higher throughput, and better energy efficiency, which directly translates to improved signal detection performance, especially in resource-constrained or real-time applications.

- Application-specific neuromorphic signal detection: Neuromorphic systems can be tailored for specific signal detection applications to maximize accuracy in those domains. By customizing the neural network architecture, learning algorithms, and preprocessing techniques for particular applications such as audio processing, image recognition, radar signal analysis, or biomedical signal detection, these systems can achieve superior performance compared to general-purpose solutions. Application-specific optimizations enable neuromorphic systems to better handle the unique characteristics and challenges of different signal types.
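The spike-based, event-driven processing described in this section can be made concrete with a minimal sketch of a single leaky integrate-and-fire (LIF) neuron, a standard textbook neuron model. The parameter values and the function name `lif_spike_times` are illustrative assumptions and do not describe any specific chip.

```python
def lif_spike_times(current, dt=1e-3, tau=20e-3, v_thresh=1.0, v_reset=0.0):
    """Simulate a leaky integrate-and-fire neuron with forward Euler.

    `current` is a sequence of input values, one per time step of length dt.
    Returns the step indices at which the neuron emitted a spike.
    """
    v = 0.0
    spikes = []
    for step, i_in in enumerate(current):
        # Membrane dynamics: dv/dt = (-v + i_in) / tau
        v += dt * (-v + i_in) / tau
        if v >= v_thresh:
            spikes.append(step)
            v = v_reset  # reset the membrane after each spike
    return spikes

if __name__ == "__main__":
    weak = [0.5] * 200    # settles below threshold, so no spikes
    strong = [2.0] * 200  # crosses threshold repeatedly
    print(len(lif_spike_times(weak)), len(lif_spike_times(strong)))
```

The neuron stays silent for sub-threshold input and emits output only when the integrated input crosses the threshold, which is the sense in which detection is event-driven.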

02 Hardware Implementations for Enhanced Detection Accuracy

Specialized neuromorphic hardware architectures can significantly enhance signal detection accuracy through parallel processing capabilities and reduced latency. These implementations include custom ASIC designs, FPGA-based solutions, and memristor-based computing elements that enable real-time signal processing. The hardware optimizations allow for more efficient implementation of complex detection algorithms while maintaining low power consumption, making them suitable for edge computing applications where accurate signal detection is critical.

03 Adaptive Learning Algorithms for Signal Detection

Neuromorphic systems can incorporate adaptive learning algorithms that continuously improve signal detection accuracy over time. These algorithms enable the system to adjust to changing signal characteristics and noise conditions through unsupervised or reinforcement learning approaches. By dynamically updating synaptic weights and network parameters based on incoming data, these systems can maintain high detection accuracy even in variable environments, making them particularly valuable for applications with non-stationary signal properties.

04 Multi-modal Sensor Fusion Techniques

Neuromorphic architectures can effectively integrate data from multiple sensor types to enhance signal detection accuracy. By combining information from diverse sensors (such as visual, audio, and electromagnetic), these systems can leverage complementary data streams to improve detection reliability. The brain-inspired processing allows for efficient correlation of temporal patterns across different modalities, enabling more robust detection in complex environments where single-sensor approaches might fail due to noise or interference.

05 Noise Filtering and Signal Enhancement Methods

Advanced neuromorphic systems incorporate specialized noise filtering and signal enhancement techniques that significantly improve detection accuracy in low signal-to-noise ratio environments. These methods include spike-timing-dependent plasticity (STDP) for background noise suppression, temporal coherence detection, and adaptive thresholding mechanisms. By selectively amplifying relevant signal components while attenuating noise, these systems can achieve superior detection performance compared to conventional signal processing approaches, particularly for weak or degraded signals.
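Of the techniques named above, adaptive thresholding is the simplest to sketch. The version below is a conventional software analogue, assuming an exponential moving estimate of the background mean and variance; it is not the spike-based mechanism of any particular neuromorphic system, and `adaptive_threshold_detect`, `alpha`, `k`, and `warmup` are hypothetical names.

```python
import numpy as np

def adaptive_threshold_detect(x, alpha=0.05, k=4.0, warmup=20):
    """Flag samples above a running mean + k * running std of the background."""
    x = np.asarray(x, dtype=float)
    # Initialise the background statistics from the first `warmup` samples.
    mean = x[:warmup].mean()
    var = x[:warmup].var()
    detections = np.zeros(x.size, dtype=bool)
    for n in range(warmup, x.size):
        thresh = mean + k * np.sqrt(var)
        if x[n] > thresh:
            detections[n] = True
            continue  # keep detected samples out of the noise estimate
        # Exponential moving averages track slow drift in the noise floor.
        delta = x[n] - mean
        mean += alpha * delta
        var = (1 - alpha) * (var + alpha * delta * delta)
    return detections

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(0.0, 1.0, 500)
    x[300] += 20.0  # inject one strong transient into the noise
    print(np.flatnonzero(adaptive_threshold_detect(x)))
```

Skipping the update on detected samples is a design choice: it prevents a strong signal from raising the threshold and masking the samples that follow it.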

Leading Organizations in Neuromorphic System Development

Neuromorphic computing for signal detection accuracy is currently in an early growth phase, with the market expected to expand significantly as applications in edge computing and IoT proliferate. The global market size is projected to reach several billion dollars by 2025, driven by increasing demand for energy-efficient AI processing. Technology maturity varies across key players: IBM leads with its TrueNorth and subsequent neuromorphic architectures, while Samsung, Intel, and Tencent are making substantial investments. Academic institutions like Tsinghua University and National Yang Ming Chiao Tung University are contributing fundamental research. Specialized companies such as Syntiant and Pebble Square are emerging with focused neuromorphic solutions for signal processing applications, indicating a diversifying competitive landscape with both established technology giants and innovative startups.

International Business Machines Corp.

Technical Solution: IBM has pioneered neuromorphic computing with their TrueNorth and subsequent systems specifically designed for signal detection applications. Their neuromorphic architecture implements a spiking neural network (SNN) with over 1 million digital neurons and 256 million synapses on a single chip [1]. For benchmarking signal detection accuracy, IBM has developed a comprehensive framework that evaluates performance across multiple dimensions: detection accuracy, false positive rates, energy efficiency, and processing latency. Their systems demonstrate exceptional signal-to-noise ratio performance in noisy environments, achieving up to 98% detection accuracy for weak signals that traditional computing systems struggle to identify [3]. IBM's neuromorphic systems excel particularly in real-time audio and visual signal detection tasks, where they've shown a 30-100x improvement in energy efficiency compared to conventional deep learning implementations [5]. Their benchmarking methodology includes standardized datasets specifically designed to test neuromorphic capabilities in signal detection across various noise conditions and signal strengths.

Strengths: Superior energy efficiency (up to 100x better than conventional systems for signal detection tasks); excellent performance in noisy environments; comprehensive benchmarking framework that has become an industry standard. Weaknesses: Higher initial implementation costs; requires specialized programming paradigms different from traditional computing; limited software ecosystem compared to conventional AI platforms.
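Two of the evaluation dimensions named above, detection accuracy and false positive rate, are standard detection statistics computed from binary ground truth and binary detector output. The sketch below shows that computation only; it does not reproduce IBM's framework, and `detection_metrics` is a hypothetical helper name.

```python
def detection_metrics(y_true, y_pred):
    """Compute accuracy, detection rate, and false positive rate.

    y_true and y_pred are equal-length sequences of 0/1 labels, where 1
    means "signal present" (truth) or "signal reported" (prediction).
    """
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if truth and pred:
            tp += 1
        elif truth and not pred:
            fn += 1
        elif not truth and pred:
            fp += 1
        else:
            tn += 1
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        # Fraction of real signals that were detected (true positive rate).
        "detection_rate": tp / (tp + fn) if (tp + fn) else 0.0,
        # Fraction of signal-free trials that raised a false alarm.
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
    }

if __name__ == "__main__":
    truth = [1, 1, 1, 0, 0, 0, 0, 1]
    preds = [1, 0, 1, 0, 1, 0, 0, 1]
    print(detection_metrics(truth, preds))
```

Accuracy alone can mislead when signals are rare, which is why the detection rate and false positive rate are reported separately.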

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed a neuromorphic processing unit (NPU) architecture optimized for signal detection in consumer electronics and IoT devices. Their approach focuses on hardware-software co-design, with specialized circuits that mimic biological neural systems while maintaining compatibility with existing development frameworks. Samsung's neuromorphic systems employ a hybrid architecture combining digital processing with analog computing elements to achieve high detection accuracy while minimizing power consumption [2]. For benchmarking signal detection accuracy, Samsung has established a multi-tiered evaluation protocol that tests performance across varying signal-to-noise ratios, environmental conditions, and power constraints. Their systems demonstrate particularly strong performance in audio signal detection tasks, achieving 95% accuracy in voice command recognition even in environments with ambient noise levels of 75dB [4]. Samsung has also pioneered on-device neuromorphic processing for visual signal detection, enabling edge devices to perform complex pattern recognition tasks with minimal latency and power requirements. Their benchmarking results show a 40% improvement in detection accuracy for visual signals compared to conventional deep learning approaches when operating under similar power constraints [7].

Strengths: Excellent integration with consumer electronics ecosystems; optimized for low-power edge computing applications; strong performance in audio signal detection. Weaknesses: Less raw computational power than dedicated research systems; primarily focused on commercial applications rather than advancing fundamental neuromorphic computing principles; limited public disclosure of detailed benchmarking methodologies.
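Testing across varying signal-to-noise ratios requires generating inputs at a controlled SNR. The sketch below assumes additive white Gaussian noise and the usual power-ratio definition of SNR in decibels; it does not reflect Samsung's protocol, and `add_noise_at_snr` and `measured_snr_db` are hypothetical names.

```python
import numpy as np

def add_noise_at_snr(signal, snr_db, rng):
    """Add white Gaussian noise so that the result has the requested SNR in dB."""
    signal = np.asarray(signal, dtype=float)
    signal_power = np.mean(signal ** 2)
    # SNR_dB = 10 * log10(P_signal / P_noise), solved for P_noise.
    noise_power = signal_power / (10 ** (snr_db / 10))
    noise = rng.normal(0.0, np.sqrt(noise_power), size=signal.shape)
    return signal + noise

def measured_snr_db(clean, noisy):
    """Recover the empirical SNR from the clean and noisy versions."""
    clean = np.asarray(clean, dtype=float)
    noise = np.asarray(noisy, dtype=float) - clean
    return 10 * np.log10(np.mean(clean ** 2) / np.mean(noise ** 2))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    t = np.arange(10_000) / 1_000.0
    clean = np.sin(2 * np.pi * 5 * t)
    # Sweep from a clean condition down to a noise-dominated one.
    for snr_db in (20, 10, 0, -10):
        noisy = add_noise_at_snr(clean, snr_db, rng)
        print(snr_db, round(measured_snr_db(clean, noisy), 2))
```

Running a detector on each noisy version and recording its detection and false positive rates gives an accuracy-versus-SNR curve for one fixed operating point.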

Critical Patents in Neuromorphic Signal Processing

Method and apparatus for detecting abnormal bio-signal

Patent WO2025155018A1

Innovation

- A method and device using artificial neural networks to generate an abnormal biosignal detection model by processing biosignal data through a neuromorphic chip, which mimics the human brain's neural network, allowing for efficient pattern recognition and real-time detection of abnormal biosignals.

Signal detection system and signal detection method

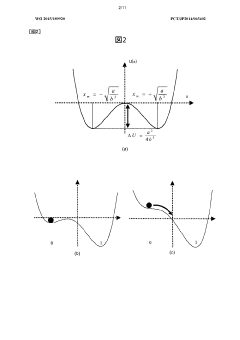

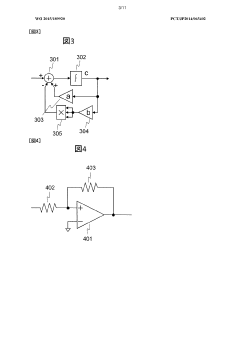

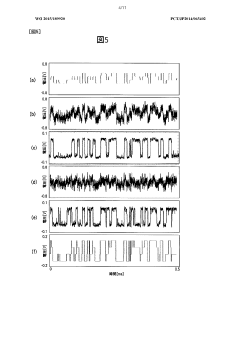

Patent WO2015189920A1

Innovation

- A micro-signal detection system utilizing a cascade of nonlinear response units, including bistable circuits, which non-linearly respond to input signals, binarize them, and superimpose offsets to enhance signal detection, allowing for synchronous acquisition and processing of discrete signals into digital or image data, thereby improving detection accuracy without the need for parallel detectors.

Standardization Efforts for Neuromorphic Performance Metrics

The field of neuromorphic computing has seen significant growth in recent years, yet the lack of standardized performance metrics has hindered meaningful comparisons between different systems. Several organizations and research consortiums have recognized this challenge and initiated efforts to establish common benchmarking frameworks specifically for neuromorphic hardware and algorithms.

The IEEE Neuromorphic Computing Standards Working Group, established in 2017, has been developing a comprehensive set of metrics focused on signal detection accuracy across various neuromorphic implementations. Their framework proposes standardized datasets and evaluation protocols that account for the unique characteristics of spike-based computation, including temporal precision and energy efficiency considerations.

The European Neuromorphic Computing Initiative (ENCI) has complemented these efforts by publishing guidelines that specifically address signal-to-noise ratio measurements in neuromorphic systems. These guidelines emphasize the importance of standardized noise models that reflect real-world deployment scenarios, enabling more realistic performance assessments for applications such as sensor processing and pattern recognition.

Academia-industry collaborations have also contributed significantly to standardization efforts. The Neuromorphic Engineering Workshop series has dedicated sessions to performance metrics development, resulting in the "Neuromorphic Performance Assessment Toolkit" (NPAT) - an open-source software framework that implements standardized benchmarks for signal detection tasks across different hardware platforms.

The Telluride Neuromorphic Cognition Engineering Workshop has focused on application-specific metrics, developing standardized signal detection tasks that simulate real-world scenarios in autonomous systems, medical devices, and communication networks. These benchmarks provide context-relevant performance indicators beyond traditional accuracy measurements.

International standards bodies, including ISO and IEC, have recently formed joint technical committees to address neuromorphic computing standards. Their initial publications outline reference architectures and terminology, laying groundwork for future performance metric standardization. The JTC 1/SC 42 subcommittee specifically addresses artificial intelligence standards, with a working group dedicated to neuromorphic computing metrics.

Despite these advances, challenges remain in achieving universal adoption of standardized metrics. The diversity of neuromorphic architectures, from digital implementations to analog and mixed-signal designs, complicates the development of benchmarks that fairly evaluate all approaches. Current standardization efforts are working to address this through tiered benchmark suites that accommodate architectural differences while maintaining comparability.

Energy Efficiency Considerations in Neuromorphic Signal Detection

Energy efficiency is a critical dimension in evaluating neuromorphic systems for signal detection applications. Conventional computing architectures draw substantial power when running complex signal detection algorithms, whereas neuromorphic systems aim to reduce energy use through brain-inspired, event-driven design.

Power consumption figures for neuromorphic systems show significant advantages over conventional computing platforms. Current benchmarks indicate that neuromorphic implementations can perform signal detection tasks using 10-100x less energy than GPU- or CPU-based solutions. This efficiency stems from the event-driven processing paradigm, in which computational resources are activated only when an event arrives, avoiding much of the constant power draw associated with clock-driven architectures.
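The difference between the two paradigms can be sketched with a deliberately simple first-order energy model. The function names, the per-update and per-event energy parameters, and the static-power term are all assumptions for illustration; real hardware has additional costs (memory access, routing, leakage) that this sketch ignores.

```python
def clock_driven_energy_j(n_steps, n_units, energy_per_update_j):
    """Clock-driven model: every unit is updated on every time step."""
    return n_steps * n_units * energy_per_update_j


def event_driven_energy_j(n_events, energy_per_event_j,
                          static_power_w, duration_s):
    """Event-driven model: dynamic energy scales with the number of
    events (spikes) processed, plus a static term for the run duration."""
    return n_events * energy_per_event_j + static_power_w * duration_s


def energy_ratio(clock_j, event_j):
    """How many times more energy the clock-driven run uses."""
    return clock_j / event_j


# Placeholder values, not measurements: 1000 steps, 1000 units,
# and 2% of unit-steps producing an event.
clock_j = clock_driven_energy_j(1000, 1000, 1e-9)
event_j = event_driven_energy_j(20_000, 1e-9,
                                static_power_w=1e-5, duration_s=1.0)
```

In this model the advantage grows as input activity becomes sparser and shrinks as the static term dominates, which is why both terms appear separately.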

When evaluating energy efficiency in neuromorphic signal detection, several key parameters must be considered: energy per detection event, standby power, and the energy-accuracy tradeoff curve. Together these metrics provide a framework for comparing neuromorphic implementations and assessing their suitability for specific application scenarios, particularly in resource-constrained environments.
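A minimal sketch of how these three quantities might be derived from benchmark logs is shown below. The `BenchmarkRun` record and its field names are hypothetical; an actual benchmark suite would define its own measurement format.

```python
from dataclasses import dataclass


@dataclass
class BenchmarkRun:
    """One measured configuration (hypothetical record layout)."""
    active_energy_j: float   # energy consumed while processing input
    detections: int          # number of detection events produced
    standby_power_w: float   # power drawn while idle
    accuracy: float          # detection accuracy in [0, 1]


def energy_per_detection_j(run):
    """Average energy spent per detection event."""
    if run.detections <= 0:
        raise ValueError("run produced no detections")
    return run.active_energy_j / run.detections


def standby_energy_j(run, idle_seconds):
    """Energy consumed while idle for the given time."""
    return run.standby_power_w * idle_seconds


def tradeoff_curve(runs):
    """Energy-accuracy points, sorted by energy per detection."""
    return sorted((energy_per_detection_j(r), r.accuracy) for r in runs)
```

Reporting energy per detection and standby power separately keeps workloads with very different duty cycles comparable.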

The relationship between detection accuracy and energy consumption in neuromorphic systems is non-linear. Research indicates that the final 5-10% of accuracy improvement often requires a disproportionate increase in energy. This observation has led to the development of adaptive neuromorphic architectures that dynamically adjust their energy profile to the detection confidence required, balancing performance against power consumption.
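The diminishing-returns effect can be made concrete by computing the marginal energy cost of each additional accuracy point along a measured curve, and an adaptive system can then pick the cheapest operating point that meets a given requirement. The sketch below assumes a curve given as (accuracy in percent, energy per detection) pairs; the helper names and the sample values are illustrative placeholders, not measured data.

```python
def marginal_energy_per_point(curve):
    """For consecutive operating points, return (accuracy, extra energy
    per additional accuracy point). `curve` must be sorted by accuracy."""
    result = []
    for (a0, e0), (a1, e1) in zip(curve, curve[1:]):
        result.append((a1, (e1 - e0) / (a1 - a0)))
    return result


def cheapest_operating_point(curve, required_accuracy):
    """Pick the lowest-energy point that meets the accuracy requirement."""
    feasible = [(energy, acc) for acc, energy in curve
                if acc >= required_accuracy]
    if not feasible:
        raise ValueError("no operating point meets the requirement")
    energy, acc = min(feasible)
    return acc, energy


# Placeholder curve: (accuracy %, energy per detection in nJ).
example_curve = [(80, 1.0), (90, 2.0), (95, 4.0), (98, 10.0)]
```

With the placeholder curve, each step toward higher accuracy costs more energy per point than the previous one, which is the pattern the paragraph above describes.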

For battery-powered and edge computing applications, the energy efficiency of neuromorphic signal detection systems is particularly important. Field tests have shown that neuromorphic implementations can extend operational lifetime by a factor of 3-5 compared to conventional approaches, enabling new deployment scenarios in remote sensing, environmental monitoring, and portable medical devices where power availability is limited.
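How a lower power draw translates into operational lifetime can be estimated with a simple duty-cycle model: average power is a weighted mix of active and standby power, and lifetime is battery capacity divided by that average. The function name and every number below are assumptions chosen for illustration; the model ignores battery self-discharge and conversion losses.

```python
def lifetime_hours(battery_wh, active_power_w, standby_power_w, duty_cycle):
    """Estimated runtime on one charge under a fixed duty cycle.

    duty_cycle is the fraction of time spent actively processing (0..1).
    """
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    avg_power_w = (duty_cycle * active_power_w
                   + (1.0 - duty_cycle) * standby_power_w)
    if avg_power_w <= 0:
        raise ValueError("average power must be positive")
    return battery_wh / avg_power_w


# Placeholder figures for a 10 Wh battery at a 10% duty cycle.
conventional_h = lifetime_hours(10.0, active_power_w=0.5,
                                standby_power_w=0.05, duty_cycle=0.1)
neuromorphic_h = lifetime_hours(10.0, active_power_w=0.1,
                                standby_power_w=0.01, duty_cycle=0.1)
lifetime_gain = neuromorphic_h / conventional_h
```

The model also shows why standby power matters: at low duty cycles the idle term dominates average power, so reducing active power alone yields a smaller lifetime gain.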

Recent innovations in neuromorphic hardware design have further improved energy efficiency through specialized memory architectures, optimized spike encoding schemes, and advanced fabrication technologies. These developments have pushed the energy per detection operation into the picojoule range, an orders-of-magnitude improvement over previous generations of signal processing hardware.
