Benchmarking Power and Latency of Neuromorphic Systems.

SEP 2, 2025 · 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

Neuromorphic Computing Background and Objectives

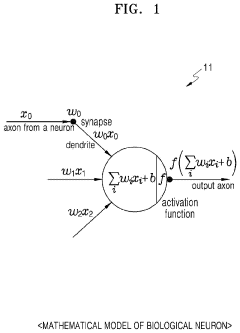

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. Since its conceptual inception in the late 1980s by Carver Mead, this field has evolved from theoretical frameworks to practical implementations that aim to replicate the efficiency and adaptability of the human brain. The trajectory of neuromorphic computing has been marked by significant milestones, including the development of silicon neurons, spike-based processing algorithms, and large-scale neuromorphic hardware platforms.

The fundamental premise of neuromorphic systems lies in their departure from traditional von Neumann architectures, instead adopting parallel processing, co-located memory and computation, and event-driven operation. These characteristics position neuromorphic computing as a promising solution for applications requiring real-time processing of sensory data, pattern recognition, and adaptive learning in dynamic environments.

Recent advancements in materials science, integrated circuit design, and neuroscience have accelerated the development of neuromorphic hardware. Notable platforms such as IBM's TrueNorth, Intel's Loihi, and SpiNNaker have demonstrated the potential for significant improvements in energy efficiency and processing speed for specific workloads compared to conventional computing systems.

The benchmarking of power consumption and latency in neuromorphic systems represents a critical area of investigation. Traditional performance metrics often fail to capture the unique operational characteristics of these brain-inspired architectures. Power efficiency, measured as operations per second per watt (equivalently, operations per joule), and processing latency, particularly for spike-based information processing, require specialized benchmarking methodologies that account for the event-driven nature of neuromorphic computation.
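To make these metrics concrete, the sketch below derives the usual efficiency figures from one measured run. It is a minimal illustration that assumes the average power, run duration, and synaptic-event count are already available from instrumentation; the function name and the example numbers are hypothetical. Because a watt is a joule per second, synaptic operations per second per watt (SOPS/W) reduces to synaptic operations per joule, and its inverse is the energy per operation.

```python
def efficiency_metrics(avg_power_w: float, duration_s: float, synaptic_events: int) -> dict:
    """Derive common efficiency figures from one measured run (hypothetical helper).

    avg_power_w: average power drawn during the run, in watts
    duration_s: wall-clock length of the run, in seconds
    synaptic_events: synaptic operations counted during the run
    """
    energy_j = avg_power_w * duration_s          # E = P * t
    sops = synaptic_events / duration_s          # synaptic operations per second
    return {
        "energy_j": energy_j,
        "sops_per_watt": sops / avg_power_w,     # same value as events per joule
        "pj_per_sop": energy_j / synaptic_events * 1e12,
    }

# Hypothetical run: 100 mW average for 2 s while processing 4 billion synaptic events.
print(efficiency_metrics(0.1, 2.0, 4_000_000_000))
```

For that hypothetical run the figures work out to 0.2 J, 2 × 10^10 SOPS/W, and 50 pJ per synaptic operation.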

The primary objectives of neuromorphic computing research include achieving ultra-low power consumption for edge computing applications, reducing latency for real-time processing tasks, and developing scalable architectures capable of handling complex cognitive functions. These goals align with broader industry trends toward energy-efficient computing solutions for artificial intelligence and machine learning workloads.

As neuromorphic technology matures, standardized benchmarking frameworks become increasingly essential for meaningful comparison between different implementations and against conventional computing approaches. Current research aims to establish comprehensive metrics that evaluate both the computational capabilities and energy profiles of neuromorphic systems across diverse application domains, from sensor networks to autonomous vehicles and advanced robotics.

The convergence of neuromorphic computing with emerging technologies such as memristive devices, photonic computing, and quantum information processing presents exciting opportunities for hybrid systems that leverage the strengths of multiple computational paradigms. This technological synergy may unlock new capabilities that transcend the limitations of individual approaches.

Market Analysis for Brain-Inspired Computing Solutions

The brain-inspired computing market is experiencing significant growth, driven by the increasing demand for efficient processing of complex AI workloads. Current market valuations place neuromorphic computing at approximately $2.5 billion globally, with projections indicating a compound annual growth rate of 20-25% over the next five years. This growth trajectory is supported by substantial investments from both private and public sectors, with government initiatives in the US, EU, and China allocating dedicated funding for neuromorphic research and development.
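Taking the quoted figures at face value, the implied five-year range follows from compound growth, V_n = V_0 (1 + r)^n. This is plain arithmetic on the $2.5 billion base and the 20-25% growth rates stated above, not an independent forecast; the function name is illustrative.

```python
def project_market(base_usd_bn: float, cagr: float, years: int) -> float:
    """Compound a base market size forward: V_n = V_0 * (1 + r) ** n."""
    return base_usd_bn * (1.0 + cagr) ** years

for rate in (0.20, 0.25):
    print(f"CAGR {rate:.0%}: ${project_market(2.5, rate, 5):.1f}B after 5 years")
```

This prints roughly $6.2B for the 20% rate and $7.6B for the 25% rate.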

The market segmentation reveals distinct application clusters where neuromorphic systems offer compelling advantages. Edge computing represents the largest current market segment, where power constraints make neuromorphic solutions particularly attractive. This segment includes autonomous vehicles, robotics, and IoT devices, which collectively account for roughly 40% of market demand. The data center segment follows closely, driven by hyperscalers seeking energy-efficient alternatives to traditional GPU-based AI acceleration.

Customer demand patterns indicate a growing preference for solutions that optimize the power-latency tradeoff. Enterprise surveys show that 65% of potential adopters cite energy efficiency as their primary consideration when evaluating neuromorphic technologies, while 58% prioritize real-time processing capabilities. This aligns perfectly with the core value proposition of neuromorphic systems, which excel in benchmarks measuring power consumption and response latency.

Competitive analysis reveals a market dominated by established semiconductor companies and specialized neuromorphic startups. Intel's Loihi, IBM's TrueNorth, and BrainChip's Akida represent the current technological benchmarks against which new entrants are measured. These platforms demonstrate power efficiency improvements of 50-100x compared to conventional computing architectures when executing specific neural network workloads.

Market barriers include the limited software ecosystem supporting neuromorphic hardware and the significant engineering challenges in benchmarking these systems against traditional computing architectures. The lack of standardized benchmarking methodologies specifically designed for neuromorphic systems creates uncertainty among potential adopters, slowing market penetration.

Regional analysis shows North America leading with approximately 45% market share, followed by Europe and Asia-Pacific. China has emerged as a particularly dynamic growth region, with domestic neuromorphic computing initiatives receiving substantial government support through programs like the China Brain Project and related semiconductor development efforts.

Current Challenges in Neuromorphic System Benchmarking

Despite significant advancements in neuromorphic computing systems, the field faces substantial challenges in establishing standardized benchmarking methodologies for power consumption and latency metrics. Current evaluation approaches remain fragmented and inconsistent, making direct comparisons between different neuromorphic architectures nearly impossible. This fragmentation stems from the fundamental architectural diversity among neuromorphic systems, with implementations ranging from digital CMOS to analog, mixed-signal, and emerging memristive technologies.

A primary challenge is the lack of consensus on appropriate workloads for benchmarking. Traditional computing benchmarks fail to capture the unique operational characteristics of neuromorphic systems, which excel at event-driven processing rather than synchronous computation. The absence of standardized neuromorphic-specific benchmark suites forces researchers to rely on ad-hoc evaluation methods, hampering meaningful cross-platform comparisons.

Measurement methodologies present another significant obstacle. Power consumption in neuromorphic systems exhibits complex temporal dynamics due to their event-driven nature, making traditional power measurement techniques inadequate. Similarly, latency measurements are complicated by the asynchronous processing paradigm common in many neuromorphic implementations, where traditional clock-based metrics become irrelevant.
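One way to address both measurement problems is to work from timestamps rather than clock cycles. The sketch below assumes a power trace sampled at known times (for example from an external power monitor) and logged timestamps for input and output events. It integrates the trace with the trapezoidal rule, optionally subtracting a separately measured idle baseline, and pairs each input event with the first output event at or after it. That pairing rule is a simplifying assumption; a real benchmark would match each output to the input that caused it.

```python
from bisect import bisect_left

def trace_energy(times_s, power_w, idle_power_w=0.0):
    """Integrate a sampled power trace (trapezoidal rule), in joules.

    Subtracting idle_power_w leaves the activity-driven (dynamic) energy,
    which is what varies with spike traffic on event-driven hardware.
    """
    energy = 0.0
    for i in range(1, len(times_s)):
        dt = times_s[i] - times_s[i - 1]
        p_avg = 0.5 * (power_w[i] + power_w[i - 1]) - idle_power_w
        energy += p_avg * dt
    return energy

def event_latencies(input_times_s, output_times_s):
    """Latency from each input event to the first output event at or after it.

    Timestamps replace clock-cycle counts, so the same measure applies to
    asynchronous and clocked designs. Inputs with no later output are skipped.
    """
    outs = sorted(output_times_s)
    latencies = []
    for t in input_times_s:
        k = bisect_left(outs, t)
        if k < len(outs):
            latencies.append(outs[k] - t)
    return latencies
```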

The diversity of application domains further complicates benchmarking efforts. Neuromorphic systems target varied applications from sensor processing to cognitive computing, each with distinct performance requirements. This diversity necessitates domain-specific benchmarking approaches, yet developing comprehensive test suites across all potential applications remains impractical.

Hardware-software co-design in neuromorphic systems introduces additional complexity. Performance metrics are heavily influenced by both hardware architecture and the efficiency of neuromorphic programming models. Current benchmarking approaches often fail to decouple these factors, making it difficult to attribute performance characteristics to specific system components.

Reporting standards represent another critical challenge. Publications frequently present power and latency metrics using inconsistent methodologies and under varying operating conditions. This inconsistency makes meta-analyses across research efforts virtually impossible and hinders the field's ability to track progress systematically.
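One practical remedy is to publish every result together with the conditions it was measured under. The record below is a hypothetical minimal schema: its field names and values are illustrative and are not drawn from any published standard. Separating idle from average power, stating what the power figure covers, and giving tail as well as median latency are the kinds of details whose absence makes results hard to compare.

```python
from dataclasses import dataclass, asdict

@dataclass(frozen=True)
class BenchmarkReport:
    """Hypothetical minimal record for one power/latency result."""
    platform: str
    workload: str
    supply_voltage_v: float
    temperature_c: float
    power_scope: str          # e.g. "chip core only" vs "full board"
    idle_power_w: float
    avg_power_w: float
    latency_p50_ms: float
    latency_p99_ms: float
    accuracy: float

    def dynamic_power_w(self) -> float:
        """Activity-driven share of power, reported separately from idle draw."""
        return self.avg_power_w - self.idle_power_w

report = BenchmarkReport(
    platform="example-chip",          # hypothetical values throughout
    workload="keyword spotting",
    supply_voltage_v=0.8,
    temperature_c=25.0,
    power_scope="chip core only",
    idle_power_w=0.005,
    avg_power_w=0.020,
    latency_p50_ms=1.2,
    latency_p99_ms=3.4,
    accuracy=0.91,
)
print(asdict(report))
print(f"dynamic power: {report.dynamic_power_w() * 1e3:.1f} mW")
```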

Finally, the rapidly evolving nature of neuromorphic hardware creates a moving target for benchmarking efforts. As new materials, architectures, and fabrication techniques emerge, benchmarking methodologies must continuously adapt, requiring significant community coordination that currently remains underdeveloped.

Methodologies for Power-Latency Performance Assessment

01 Low-power neuromorphic computing architectures

Neuromorphic systems can be designed with specialized architectures that minimize power consumption while maintaining computational efficiency. These architectures often combine power-gating, clock optimization, dynamic voltage scaling, and specialized memory structures to reduce energy use during both active processing and idle states. Some designs use event-driven processing that activates circuits only when necessary, significantly reducing standby power compared to traditional computing systems.

- Spike-based processing for latency reduction: Spike-based or event-driven processing mechanisms in neuromorphic systems can substantially reduce latency by enabling asynchronous computation. Rather than waiting for clock cycles, these systems process information immediately when spikes or events occur, allowing for real-time responses to stimuli. This approach mimics biological neural networks where information is processed as it arrives, enabling faster response times for time-critical applications like robotics or autonomous vehicles.

- Memory-processing integration techniques: Integrating memory and processing elements in neuromorphic systems can significantly reduce both power consumption and latency by minimizing data movement. These designs place computational elements directly adjacent to memory cells, eliminating the energy-intensive and time-consuming data transfers between separate memory and processing units. Such architectures often implement in-memory computing or near-memory processing paradigms that enable parallel operations while reducing the power required for data movement.

- Analog and mixed-signal implementations: Analog and mixed-signal implementations of neuromorphic systems can achieve significant power efficiency advantages over purely digital approaches. By leveraging the natural physics of electronic components to perform computations, these systems can execute neural network operations with minimal energy consumption. These implementations often use current-mode or charge-based computing to represent neural activations and weights, allowing for ultra-low power operation while maintaining acceptable latency characteristics.

- Dynamic power management techniques: Advanced power management techniques in neuromorphic systems can adjust power consumption based on computational demand. These approaches include adaptive voltage scaling, dynamic frequency adjustment, and selective activation of neural network components. By continuously monitoring system performance and adjusting power parameters in real time, these techniques manage the trade-off between processing speed and energy efficiency, lowering average power consumption while keeping response latency within the limits each workload requires.
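The memory-processing integration and analog current-mode ideas above meet in the resistive crossbar, where stored conductances act as weights. The function below models an idealized crossbar read under stated assumptions: it ignores wire resistance, sneak currents, device variation, and the analog-to-digital conversion a real array needs, so it shows the principle rather than any particular device. The function name and values are illustrative.

```python
def crossbar_mvm(voltages, conductances):
    """Ideal resistive-crossbar read: column current I_j = sum_i V_i * G[i][j].

    voltages: row input voltages in volts, one per word line
    conductances: G[i][j] in siemens, the device at row i, column j
    Each product is Ohm's law at one device; the sum is Kirchhoff's current
    law on the shared column wire, so the multiply-accumulate happens where
    the weights are stored instead of after moving them to a processor.
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for v, row in zip(voltages, conductances):
        for j, g in enumerate(row):
            currents[j] += v * g
    return currents

# Two rows, two columns; conductances in siemens (illustrative values).
print(crossbar_mvm([1.0, 0.5], [[1e-6, 2e-6], [4e-6, 0.0]]))
```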

02 Spike-based processing for latency reduction

Spike-based neuromorphic processing mimics the brain's communication method, where information is encoded in the timing and frequency of spikes rather than continuous signals. This approach enables parallel processing and reduces latency by allowing immediate response to inputs without waiting for complete processing cycles. The asynchronous nature of spike-based systems enables faster response times for time-critical applications while maintaining energy efficiency.

03 Memristor-based neuromorphic implementations

Memristors serve as artificial synapses in neuromorphic systems, offering advantages in both power consumption and latency. These non-volatile memory elements can maintain their state without continuous power, significantly reducing energy requirements. Memristor-based neuromorphic systems enable in-memory computing, eliminating the power-hungry data transfer between memory and processing units that causes latency bottlenecks in conventional architectures.

04 Hardware-software co-optimization techniques

Optimizing neuromorphic systems requires coordinated hardware and software design approaches. This includes developing specialized neural network algorithms that exploit the unique characteristics of neuromorphic hardware, custom compiler optimizations that map neural networks efficiently to the hardware, and runtime systems that dynamically adjust processing parameters based on workload requirements. These co-optimization techniques can significantly reduce both power consumption and processing latency.

05 Energy-efficient learning and inference mechanisms

Neuromorphic systems implement specialized learning and inference mechanisms that minimize energy consumption while maintaining low latency. These include approximate computing techniques that trade off precision for efficiency, sparse activation methods that process only relevant neurons, and incremental learning approaches that update only necessary network components. Some systems incorporate adaptive precision that dynamically adjusts computational resources based on the complexity of the current task.
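The sparse-activation idea can be illustrated with an event-driven update of a fully connected layer. In this minimal sketch, which assumes dense per-synapse weights and a list of the input neurons that spiked in the current timestep, work is done only for active inputs, so the operation count scales with spike activity rather than with layer size. The function name is hypothetical.

```python
def event_driven_layer(spiking_inputs, weights, n_out):
    """Propagate one timestep of input spikes through a fully connected layer.

    spiking_inputs: indices of input neurons that spiked this timestep
    weights: weights[i][j] is the synapse from input i to output j
    Only rows belonging to inputs that spiked are touched.
    Returns (input current per output neuron, synaptic operations performed).
    """
    currents = [0.0] * n_out
    ops = 0
    for i in spiking_inputs:
        for j in range(n_out):
            currents[j] += weights[i][j]
            ops += 1
    return currents, ops

weights = [[1, 2], [3, 4], [5, 6], [7, 8]]
print(event_driven_layer([0, 2], weights, n_out=2))
```

With 1,000 inputs, 100 outputs, and 2% of inputs spiking, the same loop performs 20 × 100 = 2,000 synaptic operations instead of the 100,000 a dense pass would need; on event-driven hardware, energy tends to follow that operation count, though idle power is not reduced by sparsity.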

Leading Organizations in Neuromorphic Computing Research

The neuromorphic computing landscape is currently in a transitional phase from research to early commercialization, with an estimated market size of $2-3 billion that is projected to grow significantly. Major players include established technology corporations like IBM, Intel, and Samsung, which are developing neuromorphic hardware platforms with significant R&D investments. Academic institutions such as Tsinghua University, Zhejiang University, and EPFL are advancing fundamental research in neuromorphic architectures. Specialized companies like Syntiant are emerging with application-specific neuromorphic solutions. The benchmarking of power and latency represents a critical challenge as the industry works to standardize performance metrics across diverse neuromorphic implementations, with IBM leading many collaborative research initiatives to establish comparative frameworks for these novel computing paradigms.

International Business Machines Corp.

Technical Solution: IBM's neuromorphic computing approach centers on the TrueNorth chip architecture, which features a million programmable neurons and 256 million synapses organized into 4,096 neurosynaptic cores. For benchmarking power and latency, IBM has developed the NS16e system that integrates 16 TrueNorth chips, delivering approximately 16 million neurons and 4 billion synapses while consuming only 2.5 watts of power. IBM's benchmarking methodology focuses on energy efficiency metrics like synaptic operations per second per watt (SOPS/W), where TrueNorth achieves 46 billion SOPS/W. Their testing framework includes standardized workloads across image classification, object detection, and anomaly detection tasks, with documented latency measurements showing response times in the microsecond range for event-based processing. IBM also pioneered the concept of "effective throughput" that accounts for both accuracy and energy consumption when comparing neuromorphic systems to traditional computing architectures.

Strengths: Exceptional energy efficiency (46 billion SOPS/W) makes it ideal for edge computing applications; microsecond-level response times enable real-time processing; comprehensive benchmarking methodology allows for fair comparisons across architectures. Weaknesses: Programming complexity requires specialized knowledge; limited software ecosystem compared to traditional computing platforms; scaling to larger networks can introduce communication bottlenecks between chips.
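The quoted TrueNorth figures can be turned into per-operation and per-chip numbers with simple arithmetic. This sketch uses only the values stated above; the per-chip figure is a system-level average rather than a chip-only measurement, since the quoted 2.5 W covers the whole NS16e system.

```python
TRUENORTH_SOPS_PER_W = 46e9    # quoted TrueNorth efficiency
NS16E_POWER_W = 2.5            # quoted NS16e system power
NS16E_CHIPS = 16

# 1 W = 1 J/s, so SOPS/W counts synaptic events per joule; invert for energy per event.
pj_per_sop = 1.0 / TRUENORTH_SOPS_PER_W * 1e12
print(f"{pj_per_sop:.1f} pJ per synaptic operation")                 # ~21.7
print(f"{NS16E_POWER_W / NS16E_CHIPS * 1e3:.0f} mW per chip (system average)")  # ~156
```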

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed a neuromorphic processing architecture that integrates memory and computing elements to mimic brain functionality while optimizing power consumption and latency. Their approach utilizes resistive RAM (RRAM) technology for implementing artificial synapses, allowing for in-memory computing that significantly reduces the energy costs associated with data movement. Samsung's benchmarking framework evaluates their neuromorphic systems across three key dimensions: power efficiency (measured in operations per watt), processing latency (with particular focus on spike-based processing delays), and application-specific performance metrics. Their latest neuromorphic chip demonstrates power consumption as low as 20 mW for complex pattern recognition tasks while maintaining sub-millisecond response times. Samsung has also pioneered a standardized benchmarking suite that includes both synthetic neural network workloads and real-world applications like speech recognition and sensor data processing, enabling direct comparison with both traditional computing architectures and competing neuromorphic solutions.

Strengths: In-memory computing architecture dramatically reduces power consumption; hardware-optimized for mobile and IoT applications; comprehensive benchmarking methodology allows for fair comparison across diverse workloads. Weaknesses: Limited scalability for very large neural networks; proprietary development tools restrict broader adoption; performance advantages diminish for non-sparse workloads that cannot leverage event-driven processing.
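Power and latency can be combined into energy per inference, E = P × t. Using Samsung's quoted 20 mW and treating the sub-millisecond response time as a 1 ms upper bound gives a rough ceiling. The sketch assumes the chip draws the quoted power for the entire response window; the function name is illustrative.

```python
def energy_per_inference_uj(power_w: float, latency_s: float) -> float:
    """Energy for one inference, E = P * t, returned in microjoules.

    Assumes power_w is drawn for the whole latency_s window. Because the
    latency used here is an upper bound, the result is an upper bound too.
    """
    return power_w * latency_s * 1e6

# 20 mW for at most 1 ms
print(energy_per_inference_uj(0.020, 1e-3))
```

This gives about 20 µJ per inference as a ceiling under those assumptions.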

Critical Patents in Neuromorphic Benchmarking Technologies

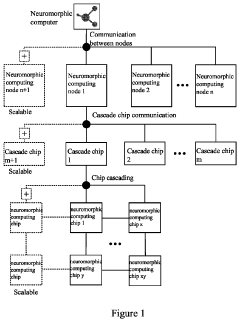

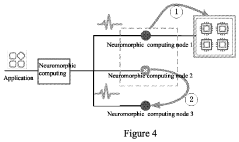

Neuromorphic computer supporting billions of neurons

Patent US20230409890A1 (Pending)

Innovation

- A neuromorphic computer with a hierarchical extended architecture and algorithmic process control, featuring multiple neuromorphic computing chips organized in a three-level hierarchical structure for efficient communication and task management, enabling parallel processing, synchronous time management, and fault tolerance through neural network reconstruction.

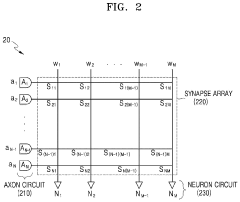

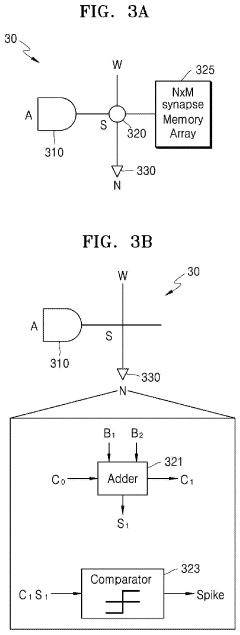

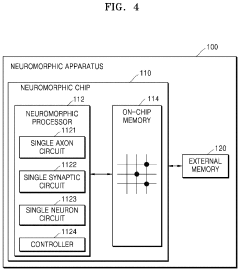

Neuromorphic method and apparatus with multi-bit neuromorphic operation

Patent US11868870B2 (Active)

Innovation

- A neuromorphic apparatus and method that utilize a single axon circuit, synaptic circuit, and neuron circuit, controlled by a controller to process multi-bit operations by sequentially assigning bit values from least significant to most significant, using a single adder for addition operations and determining output spikes based on threshold comparisons.
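The bit-serial idea in the second patent can be illustrated in software. The sketch below is not the patented circuit: it assumes unsigned multi-bit synaptic weights and binary input spikes, processes one weight bit position at a time from least to most significant, accumulates each bit-plane's contribution into a single running sum (standing in for the single hardware adder), and fires if the final potential reaches a threshold. All names are hypothetical.

```python
def bit_serial_neuron(spikes, weights, n_bits, threshold):
    """Hypothetical bit-serial integrate-and-fire step.

    spikes: 0/1 input spike per axon for this timestep
    weights: unsigned integer weight per synapse, at most n_bits wide
    n_bits: weight precision in bits
    threshold: firing threshold for the membrane potential
    Returns (membrane potential, whether the neuron spikes).
    """
    potential = 0
    for b in range(n_bits):                # least significant bit first
        plane = 0
        for s, w in zip(spikes, weights):
            if s:                          # only active axons contribute
                plane += (w >> b) & 1      # bit b of this synapse's weight
        potential += plane << b            # one accumulation per bit position
    return potential, potential >= threshold

print(bit_serial_neuron([1, 0, 1], [3, 5, 6], n_bits=3, threshold=8))  # (9, True)
```

The result matches a direct weighted sum (3 + 6 = 9); the bit-serial form trades extra passes for narrower arithmetic per step, which is the hardware motivation for reusing one adder.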

Standardization Efforts for Neuromorphic Benchmarking

The neuromorphic computing field has recognized the critical need for standardized benchmarking frameworks to enable fair comparisons between diverse neuromorphic systems. Several significant initiatives have emerged in recent years to address this challenge. The Neuromorphic Computing Benchmark (NCB) consortium, established in 2019, represents a collaborative effort between academic institutions and industry leaders to develop unified metrics for evaluating power consumption and latency in neuromorphic hardware.

IEEE's Working Group on Neuromorphic Systems Benchmarking has been instrumental in drafting the P2971 standard, which specifically focuses on establishing methodologies for measuring and reporting power efficiency and response times across different neuromorphic architectures. This standard aims to create a level playing field for comparing systems with fundamentally different design philosophies.

The European Neuromorphic Computing Initiative (ENCI) has developed the NeuroBench framework, which includes standardized datasets and evaluation protocols specifically designed to stress-test the power-latency tradeoffs in neuromorphic systems. This framework has been adopted by over 30 research institutions globally.

Industry consortiums like the Neuromorphic Engineering Systems Alliance (NESA) have established certification programs for neuromorphic hardware, where power and latency measurements must adhere to strict testing protocols to receive certification. This has encouraged hardware developers to optimize their systems according to standardized metrics rather than proprietary benchmarks.

The Telluride Neuromorphic Cognition Engineering Workshop has been pivotal in bringing together experts to refine benchmarking methodologies, resulting in the widely-adopted Telluride Neuromorphic Evaluation Kit (TNEK), which provides standardized workloads for assessing power-latency performance.

Open-source initiatives such as the Neuromorphic Benchmarking Suite (NBS) have democratized access to standardized testing tools, allowing smaller research groups to evaluate their systems using industry-recognized metrics. The NBS specifically includes power monitoring tools calibrated to measure the unique power profiles of spike-based computation.

Despite these efforts, challenges remain in standardizing benchmarks across fundamentally different computing paradigms. The International Neuromorphic Systems Conference has established a standing committee focused on evolving benchmarking standards to keep pace with rapid innovations in neuromorphic hardware, ensuring that standardization efforts remain relevant as the technology matures.

Energy Efficiency Comparison Across Computing Paradigms

The comparison of energy efficiency across different computing paradigms reveals significant advantages for neuromorphic systems when handling specific workloads. Traditional von Neumann architectures, while versatile, suffer from the well-known memory bottleneck, requiring substantial energy for data movement between processing and memory units. In contrast, neuromorphic systems implement brain-inspired architectures where computation and memory are co-located, dramatically reducing energy consumption for certain tasks.
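The data-movement argument can be made concrete with a toy energy model in which each operation pays for its arithmetic and for fetching its operands. The per-access energies below are illustrative placeholders. They show only the shape of the argument and are not measurements of any particular chip. The function name is hypothetical.

```python
def op_energy_pj(compute_pj, fetch_pj, fetches_per_op):
    """Toy model: energy of one operation = arithmetic + operand movement.

    Returns (total energy in pJ, fraction of that energy spent moving data).
    """
    movement_pj = fetch_pj * fetches_per_op
    total_pj = compute_pj + movement_pj
    return total_pj, movement_pj / total_pj


if __name__ == "__main__":
    # Placeholder values: operands fetched from distant memory vs. stored
    # next to the compute element, with identical arithmetic cost.
    far = op_energy_pj(compute_pj=1.0, fetch_pj=100.0, fetches_per_op=2)
    near = op_energy_pj(compute_pj=1.0, fetch_pj=1.0, fetches_per_op=2)
    print(f"separate memory: {far[0]:.0f} pJ/op, {far[1]:.1%} spent on movement")
    print(f"co-located memory: {near[0]:.0f} pJ/op, {near[1]:.1%} spent on movement")
```

Under these assumptions, the arithmetic cost is identical in both cases. The difference comes entirely from where the operands live, which is why co-locating memory and computation matters.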

Reported benchmarks indicate that neuromorphic hardware can achieve energy efficiencies of 1-100 picojoules per synaptic operation, compared with 10,000-100,000 picojoules for equivalent neural network operations on conventional CPUs. This corresponds to an improvement of 2-5 orders of magnitude in energy efficiency for neural processing tasks. The SpiNNaker neuromorphic system, for instance, draws approximately 1 watt when simulating modest-sized spiking neural networks, while equivalent simulations on conventional hardware might require 50-100 watts.
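The 2-5 orders-of-magnitude figure follows from pairing the ends of the two quoted ranges. The smallest gain compares the most expensive neuromorphic operation with the cheapest CPU operation, and the largest gain does the opposite. The following check uses only the numbers quoted in the paragraph. The helper name is hypothetical.

```python
import math


def improvement_range(neuro_pj, cpu_pj):
    """Smallest and largest improvement factor between two (low, high) energy ranges."""
    smallest = cpu_pj[0] / neuro_pj[1]  # cheapest CPU op vs. costliest neuromorphic op
    largest = cpu_pj[1] / neuro_pj[0]   # costliest CPU op vs. cheapest neuromorphic op
    return smallest, largest


if __name__ == "__main__":
    lo, hi = improvement_range(neuro_pj=(1, 100), cpu_pj=(10_000, 100_000))
    print(lo, hi)                                              # 100.0 100000.0
    print(round(math.log10(lo), 6), round(math.log10(hi), 6))  # 2.0 5.0
```

The same reasoning applied to the SpiNNaker example gives a 50-100x power gap, or roughly 1.7 to 2 orders of magnitude, for that particular comparison.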

GPU accelerators, while more efficient than CPUs for neural network processing, still consume significantly more power than specialized neuromorphic hardware. Typical deep learning accelerators achieve 2-10 TOPS/W (tera-operations per second per watt), whereas neuromorphic systems like Intel's Loihi have demonstrated efficiencies exceeding 30 TOPS/W for compatible workloads.
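Efficiency quoted in TOPS/W converts directly into energy per operation: 1 TOPS/W is 10^12 operations per joule, or 1 pJ per operation. The conversion below applies that identity to the figures in the paragraph. These "operations" are not necessarily the same unit as the synaptic operations in the preceding per-operation comparison, because a dense multiply-accumulate and a spike-triggered synaptic update may be counted differently. Results in the two units should not be compared directly without knowing how each figure counts an operation. The function name is hypothetical.

```python
def tops_per_watt_to_pj_per_op(tops_per_w):
    """Convert an efficiency in TOPS/W to energy per operation in picojoules.

    1 TOPS/W = 1e12 ops/s per J/s = 1e12 ops/J, i.e. 1 pJ per op.
    """
    ops_per_joule = tops_per_w * 1e12
    # 1e12 pJ per joule divided by operations per joule gives pJ per operation.
    return 1e12 / ops_per_joule


if __name__ == "__main__":
    figures = [("accelerator, low end", 2), ("accelerator, high end", 10), ("Loihi (reported)", 30)]
    for label, eff in figures:
        print(f"{label}: {eff} TOPS/W = {tops_per_watt_to_pj_per_op(eff):.3f} pJ/op")
```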

The energy advantage of neuromorphic systems becomes particularly evident in always-on applications such as sensor processing and edge intelligence. For example, the TrueNorth neuromorphic chip can perform real-time image classification tasks while consuming less than 100 milliwatts, enabling deployment in power-constrained environments where conventional solutions would be impractical.
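For always-on deployment, the useful translation is from average power to daily energy and battery life. The sketch below assumes the 100 mW upper bound quoted for TrueNorth. It also uses a hypothetical 10 Wh battery and a hypothetical 5 W figure standing in for a conventional always-on processor; neither value comes from the text. The calculation ignores sensors, radios, and power-conversion losses.

```python
def daily_energy_wh(avg_power_w):
    """Energy drawn over 24 hours of continuous operation, in watt-hours."""
    return avg_power_w * 24.0


def battery_life_h(battery_wh, avg_power_w):
    """Hours of continuous operation a battery supports at a given average power."""
    return battery_wh / avg_power_w


if __name__ == "__main__":
    BATTERY_WH = 10.0  # hypothetical battery capacity
    for label, watts in [("neuromorphic, 100 mW bound", 0.1), ("hypothetical 5 W baseline", 5.0)]:
        print(f"{label}: {daily_energy_wh(watts):.1f} Wh/day, "
              f"{battery_life_h(BATTERY_WH, watts):.0f} h on {BATTERY_WH:.0f} Wh")
```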

However, these efficiency comparisons must be contextualized. Neuromorphic systems excel at sparse, event-driven processing but may underperform in dense, synchronous computation scenarios where traditional architectures maintain advantages. The efficiency gains also vary significantly based on application characteristics, with the greatest benefits observed in tasks involving temporal pattern recognition and sensory processing.
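The sparse-versus-dense distinction can be captured in a first-order model. Event-driven hardware pays per synaptic event, so its energy scales with input activity, while a dense accelerator evaluates every synapse at every timestep. Setting the two costs equal gives a break-even activity level. The per-operation energies below are hypothetical. The model deliberately ignores static (leakage) power and spike-routing overhead, and both would shift the break-even point in practice.

```python
def event_driven_energy_pj(n_synapses, activity, e_synop_pj):
    """Per-timestep energy when only active inputs trigger synaptic operations."""
    return n_synapses * activity * e_synop_pj


def dense_energy_pj(n_synapses, e_mac_pj):
    """Per-timestep energy when every synapse is evaluated regardless of activity."""
    return n_synapses * e_mac_pj


def break_even_activity(e_synop_pj, e_mac_pj):
    """Fraction of active inputs above which dense evaluation becomes cheaper."""
    return e_mac_pj / e_synop_pj


if __name__ == "__main__":
    # Hypothetical costs: one synaptic event costs 10x one dense MAC.
    E_SYNOP, E_MAC, N = 10.0, 1.0, 1_000_000
    print(f"break-even activity: {break_even_activity(E_SYNOP, E_MAC):.0%}")
    for activity in (0.02, 0.05, 0.50):
        sparse = event_driven_energy_pj(N, activity, E_SYNOP)
        dense = dense_energy_pj(N, E_MAC)
        winner = "event-driven" if sparse < dense else "dense"
        print(f"activity {activity:.0%}: {sparse:.0f} pJ vs {dense:.0f} pJ -> {winner}")
```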

As manufacturing processes advance, both traditional and neuromorphic architectures continue to improve their energy profiles. The efficiency gap may narrow for some applications while widening for others, depending on algorithmic developments and hardware optimizations specific to each computing paradigm.
